Cache in multiprocessor systems. Cache coherency. MESI Protocol

Hello, Habr!

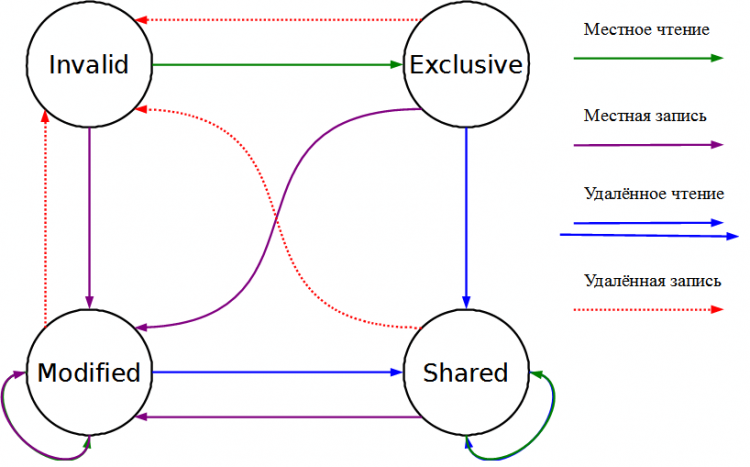

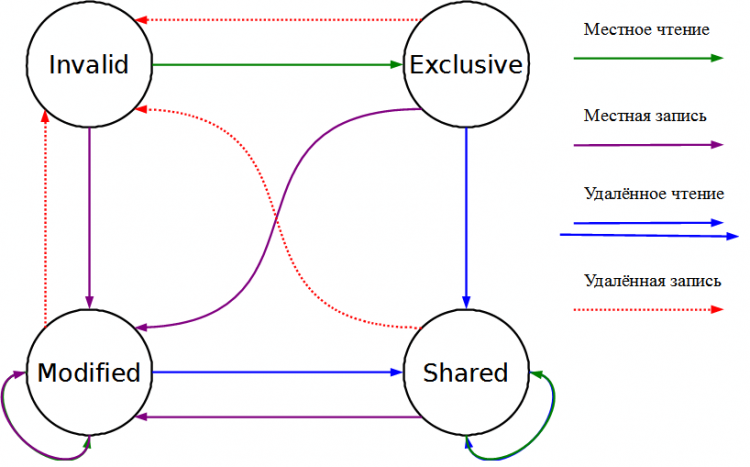

At one time, this topic seemed very interesting to me, so I decided to share my modest knowledge with you. This article does not claim to be a detailed description, but rather a brief overview.

Introduction

It's no secret that in modern computers several independent processors (cores, hardware threads) can access memory at the same time. Each of them has its own private caches, which hold copies of the lines it needs, and some of those copies are modified locally. The question arises: what happens if several processors need the same line at the same time? It is not hard to see that, for the system to work correctly, all processors must be given a single, consistent view of memory.

Special coherence protocols were invented to ensure this. Cache coherence is the property of caches that guarantees the integrity of data held in the local caches of a shared-memory system. Each cache line has flags that describe how its state relates to the state of the line with the same address in the caches of the other processors in the system.

When the state of a line changes, the other caches must somehow be informed, for example by broadcast messages delivered over the internal interconnect of the multiprocessor system.
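To make the broadcast idea concrete, here is a minimal sketch in Python. It is only a toy model, not a description of real hardware: the `Cache` and `Bus` classes, their method names, and the write-through policy are all assumptions chosen to keep the example short.

```python
class Bus:
    """Hypothetical shared interconnect: holds main memory and attached caches."""

    def __init__(self):
        self.memory = {}   # address -> value
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def broadcast_invalidate(self, addr, sender):
        # Deliver the invalidation to every cache except the one that wrote.
        for cache in self.caches:
            if cache is not sender:
                cache.snoop_invalidate(addr)


class Cache:
    """Toy private cache: address -> [value, valid flag]."""

    def __init__(self, name, bus):
        self.name = name
        self.lines = {}
        self.bus = bus
        bus.attach(self)

    def read(self, addr):
        line = self.lines.get(addr)
        if line is None or not line[1]:
            # Miss (absent or invalidated): fetch from memory, keep a copy.
            line = [self.bus.memory.get(addr, 0), True]
            self.lines[addr] = line
        return line[0]

    def write(self, addr, value):
        # Tell every other cache that its copy of this line is now stale.
        self.bus.broadcast_invalidate(addr, sender=self)
        self.lines[addr] = [value, True]
        # Assumption: write-through to memory, purely to keep the toy simple.
        self.bus.memory[addr] = value

    def snoop_invalidate(self, addr):
        if addr in self.lines:
            self.lines[addr][1] = False


bus = Bus()
a, b = Cache("A", bus), Cache("B", bus)
bus.memory[0x40] = 1
a.read(0x40)
b.read(0x40)          # both caches now hold the line
a.write(0x40, 7)      # B's copy is invalidated by the broadcast
```

After the write, the next `b.read(0x40)` misses and picks up the new value, which is the behaviour a coherence protocol has to guarantee.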

Many coherence protocols have been invented. They differ in their algorithms and number of states and, as a result, in speed and scalability. Most modern coherence protocols are variations of the MESI protocol [1], so that is the one we will consider.

MESI

In this scheme, each cache line can be in one of four states:

- Modified (M). Only one cache can hold a line marked with this flag. The state means that the line has been changed but the changes have not yet reached memory. The owner of such a line can read and write it freely without consulting the other caches.

- Exclusive (E). A line marked with this flag, just like an M line, can be present in only one cache. Its data is identical to the data in RAM. It can be read and written without external requests, since it is stored in only one cache. After a write, such a line must be marked as Modified.

- Shared (S). The line may be cached by several devices at once. A write to such a line always goes out on the shared bus, which causes all lines with this address in other caches to be marked invalid. In the description followed here the contents of main memory are updated as well; in the usual write-back formulation of MESI the writer's copy instead becomes Modified and memory is updated later. Reading from such a line requires no external requests.

- Invalid (I). Such a line is considered invalid, and an attempt to read it results in a cache miss. A line is marked Invalid if it is empty or contains outdated information.

MESI protocol transition diagram. An access is local if it was initiated by the processor that owns this cache, and remote if it was initiated by any other processor.
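The transitions can also be written down as a small function. The sketch below is one common textbook reading of MESI with write-back caches, not a model of any particular processor. The names `State`, `Event`, and `next_state`, and the `others_have_copy` parameter, are assumptions introduced for illustration.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


class Event(Enum):
    LOCAL_READ = 1     # read issued by the processor that owns this cache
    LOCAL_WRITE = 2    # write issued by the processor that owns this cache
    REMOTE_READ = 3    # read of the same address seen from another processor
    REMOTE_WRITE = 4   # write to the same address seen from another processor


def next_state(state, event, others_have_copy=False):
    """Return the new state of one cache line after one event."""
    if event is Event.LOCAL_READ:
        if state is State.I:
            # Miss: the line is loaded as Shared if somebody else caches it,
            # otherwise as Exclusive.
            return State.S if others_have_copy else State.E
        return state                      # M, E, S: read hit, no change
    if event is Event.LOCAL_WRITE:
        # From S or I the other copies are invalidated first; the local
        # copy ends up Modified in every case.
        return State.M
    if event is Event.REMOTE_READ:
        if state in (State.M, State.E):
            return State.S                # M is written back before sharing
        return state                      # S stays S, I stays I
    if event is Event.REMOTE_WRITE:
        return State.I                    # our copy is now stale
    raise ValueError(f"unknown event: {event!r}")
```

For example, `next_state(State.E, Event.LOCAL_WRITE)` yields `State.M` without any bus traffic, while the same write starting from `State.S` also ends in `State.M` but only after the other copies have been invalidated.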

I also want to consider one of the optimizations of the MESI protocol.

MOESI

In this protocol the set of state flags is extended with one more state, Owned (O), which combines the Modified and Shared states. It avoids the need to write a changed line back to memory, thereby reducing memory traffic. A cache line in this state contains the most recent data. The state is similar to Shared in that other processors may also hold this most recent data, which is newer than the copy in RAM, in their caches. Unlike Shared, however, it means that the data in memory is out of date. Only one cache can hold a line with a given address in this state, and that cache, not memory, answers all read requests for the address.
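The difference from MESI shows up when another processor reads a line that is Modified locally. The following sketch compares the two protocols for that one event. The function name `on_remote_read`, the tuple it returns, and the choice of memory as the data source for clean lines are assumptions made for illustration; real implementations vary.

```python
def on_remote_read(state, protocol="MOESI"):
    """Handle a remote read of a line we hold in `state`.

    Returns (new_state, data_source, memory_written), where data_source is
    "cache" if this cache supplies the data and "memory" otherwise.
    """
    if state == "M":
        if protocol == "MOESI":
            # Keep the dirty data, share it, and skip the write-back.
            return ("O", "cache", False)
        # Plain MESI: the line must be written back before it is shared.
        return ("S", "cache", True)
    if state == "O":
        # The owner keeps answering reads; memory stays out of date.
        return ("O", "cache", False)
    if state in ("E", "S"):
        # Memory is up to date; assumed here to supply the data itself.
        return ("S", "memory", False)
    if state == "I":
        return ("I", "memory", False)
    raise ValueError(f"unknown state: {state!r}")


for proto in ("MESI", "MOESI"):
    print(proto, on_remote_read("M", proto))
```

The only line that differs between the two protocols is the handling of `"M"`: MOESI trades a memory write for the obligation to keep serving the line from the owning cache.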

That is all I wanted to talk about today. I hope this article will be of interest to someone.

Additional information on this subject can be found in the sources indicated below.

Literature

[1] Review of existing protocols, with transition diagrams

[2] G.S. Rechistov, E.A. Yulyugin, A.A. Ivanov, P.L. Shishpor, N.N. Shchelkunov "Fundamentals of computer software modeling" URL

[3] Ulrich Drepper "What Every Programmer Should Know About Memory" URL