Detection and localization of arbitrary text on images obtained using cameras of mobile phones

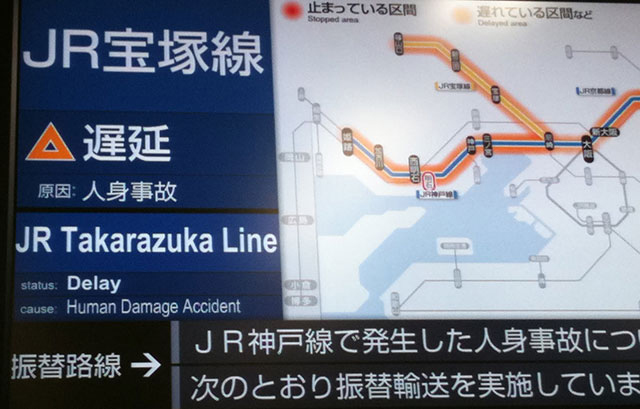

I sometimes travel to different countries, and the language barrier, quite often, becomes a serious obstacle for me. And if in countries where the languages of the German group are used, I can somehow find my way around, but in countries such as China, Israel and the Arab countries without an accompanying person, the journey turns into a mysterious quest. It is impossible to understand the local bus / train / train schedule; street names in small towns are very rarely in English. And the problem with choosing what to eat from a menu in an incomprehensible language is generally akin to walking through a minefield.

Since I am a developer for iOS, I thought, why not write such an application: you point the camera at the sign / schedule / menu and then you get a translation into Russian.

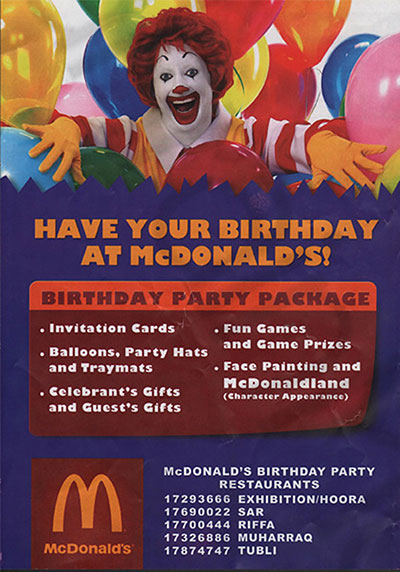

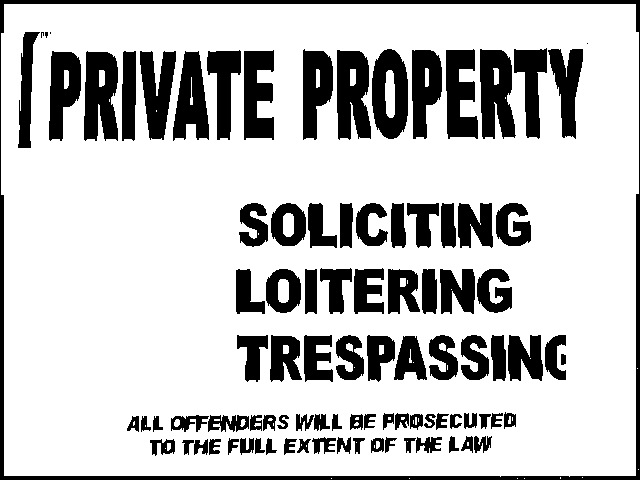

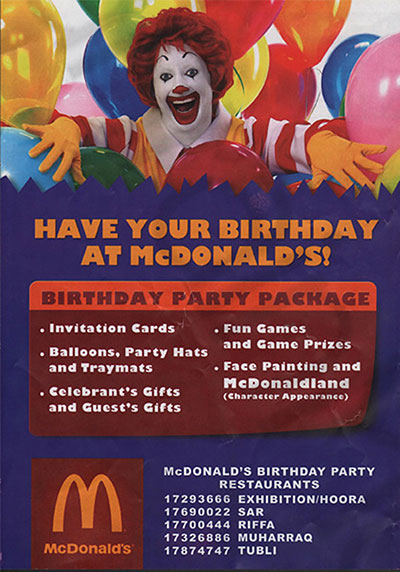

A brief search on the App Store showed that there is only one or two similar applications, and the Russian language is not among the supported ones. So, the path is open and, you can try, write such an application. It is worth mentioning that the conversation is not about those applications that photograph black text on a white sheet of paper and then digitize and translate it. Such applications are really a wagon and a small cart. This is an application that can highlight text on a natural image, for example, on a photograph of a bus, you need to select the text on the route plate and translate it so that the user can understand where this bus is going. Or a question with a menu that is relevant to me, I really want to know what you order to eat.

The main task in the application is the detection and localization of the text, and then its selection and binarization for "feeding" in OCR, for example tesseract. And while the algorithms for detecting text in scanned documents have long been known and have reached 99% accuracy, the detection of text of arbitrary size in photographs is still an actual area of research. The task will be all the more interesting, I thought, and set about studying algorithms.

Naturally, a universal algorithm for finding any text on any image does not exist, usually different algorithms are used for different tasks, plus heuristic methods. To begin with, we formalize the task: for our purposes it is necessary to find text that is quite contrasted with the surrounding background, located horizontally, the angle of inclination does not exceed 20 degrees, and it can be written in a font of different sizes and colors.

After reviewing the algorithms, Ibegan to reinvent the wheel took up the implementation. I decided to write everything myself, without using opencv, for a deeper immersion in the subject. The basis was taken by the so-called edge based method. And that’s what I ended up with.

At the beginning we get the image from the phone’s camera in BGRA format.

We translate it into grayscale and build a Gaussian pyramid of images. At each level of the pyramid, we will find the text of a certain dimension. At the lowest level, we detect fonts with a height of approximately from k to 2 * k-1 pixels, then from 2 * k to 4 * k-1, and so on. Actually, it was necessary to use 4 images in the pyramid, but we remember that we have at our disposal only an iPhone, not a quad-core i7, so we will limit ourselves to 3 images.

We apply the Sobel operator to select vertical boundaries. And we filter the result by simply deleting too short segments to cut off the noise.

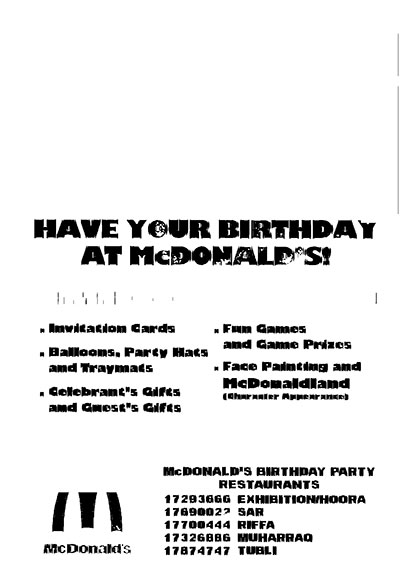

To the vertical boundaries highlighted by the Sobel operator, we apply the morphological closure operation. Horizontal width of the font, and vertical by 5. The result is filtered again. We skip only what fits into the height of the font we are looking for from k to 2 * k-1, and with a length of at least 3 characters. We get this result.

We perform the same operations with the next level of the pyramid.

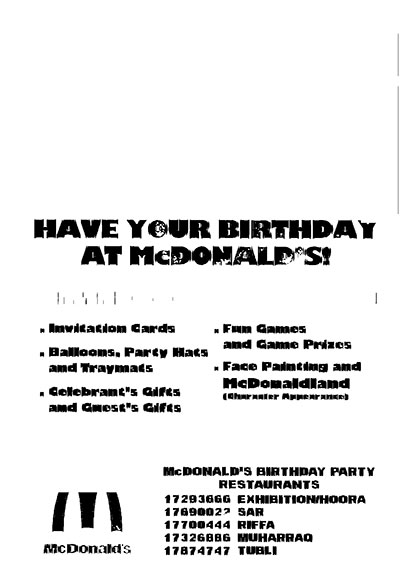

Then we combine all the results into one. Then in the selected area we do adaptive binarization and we get such an image. It is already quite suitable for further recognition in OCR. It can be seen that the largest font was not determined, due to the fact that another image in the Gaussian pyramid is missing.

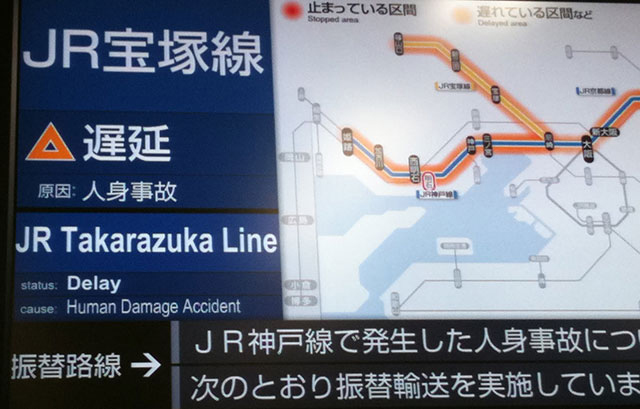

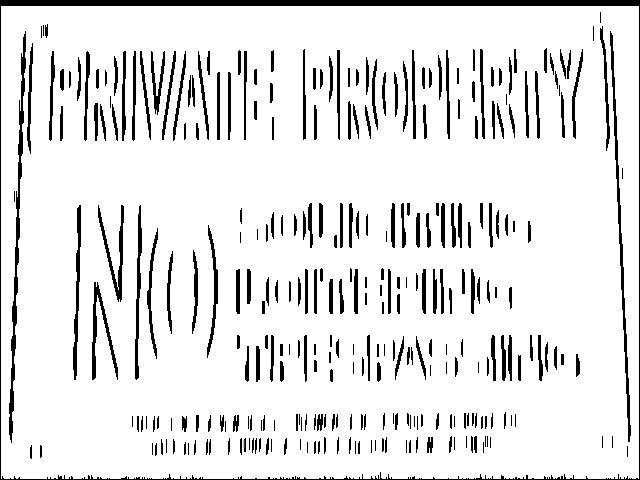

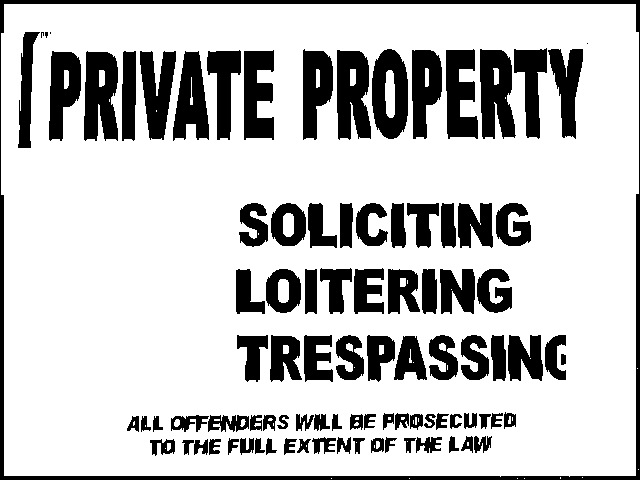

Below are examples of the operation of the algorithm on more complex images; it can be seen that some refinement is still required.

640x480 image processing time on iPhone 5, about 0.3 sec.

PS I will answer questions in comments, write about the grammatical errors in PM.

Since I am a developer for iOS, I thought, why not write such an application: you point the camera at the sign / schedule / menu and then you get a translation into Russian.

A brief search on the App Store showed that there is only one or two similar applications, and the Russian language is not among the supported ones. So, the path is open and, you can try, write such an application. It is worth mentioning that the conversation is not about those applications that photograph black text on a white sheet of paper and then digitize and translate it. Such applications are really a wagon and a small cart. This is an application that can highlight text on a natural image, for example, on a photograph of a bus, you need to select the text on the route plate and translate it so that the user can understand where this bus is going. Or a question with a menu that is relevant to me, I really want to know what you order to eat.

The main task in the application is the detection and localization of the text, and then its selection and binarization for "feeding" in OCR, for example tesseract. And while the algorithms for detecting text in scanned documents have long been known and have reached 99% accuracy, the detection of text of arbitrary size in photographs is still an actual area of research. The task will be all the more interesting, I thought, and set about studying algorithms.

Naturally, a universal algorithm for finding any text on any image does not exist, usually different algorithms are used for different tasks, plus heuristic methods. To begin with, we formalize the task: for our purposes it is necessary to find text that is quite contrasted with the surrounding background, located horizontally, the angle of inclination does not exceed 20 degrees, and it can be written in a font of different sizes and colors.

After reviewing the algorithms, I

At the beginning we get the image from the phone’s camera in BGRA format.

We translate it into grayscale and build a Gaussian pyramid of images. At each level of the pyramid, we will find the text of a certain dimension. At the lowest level, we detect fonts with a height of approximately from k to 2 * k-1 pixels, then from 2 * k to 4 * k-1, and so on. Actually, it was necessary to use 4 images in the pyramid, but we remember that we have at our disposal only an iPhone, not a quad-core i7, so we will limit ourselves to 3 images.

We apply the Sobel operator to select vertical boundaries. And we filter the result by simply deleting too short segments to cut off the noise.

To the vertical boundaries highlighted by the Sobel operator, we apply the morphological closure operation. Horizontal width of the font, and vertical by 5. The result is filtered again. We skip only what fits into the height of the font we are looking for from k to 2 * k-1, and with a length of at least 3 characters. We get this result.

We perform the same operations with the next level of the pyramid.

Then we combine all the results into one. Then in the selected area we do adaptive binarization and we get such an image. It is already quite suitable for further recognition in OCR. It can be seen that the largest font was not determined, due to the fact that another image in the Gaussian pyramid is missing.

Below are examples of the operation of the algorithm on more complex images; it can be seen that some refinement is still required.

640x480 image processing time on iPhone 5, about 0.3 sec.

PS I will answer questions in comments, write about the grammatical errors in PM.