Transition to Percona XtraDB Cluster. One possible configuration

So, I began to implement Percona XtraDB Cluster in my organization - to transfer databases from a regular MySQL server to a cluster architecture.

In the cluster we need to keep:

In other words, the database of almost all of our projects, from those that run on MySQL, should now live in a cluster.

We keep most of the projects remotely in the DC, and therefore the cluster will be located there.

The task of spreading the cluster geographically into different data centers is not worth it.

To build a cluster, 3 servers of the same configuration are used: HP DL160 G6, 2X Xeon E5620, 24 GB RAM, 4x SAS 300GB in hardware RAID 10. A good branded hardware that I have been using for a long time and which has not let me down yet.

- synchronous true multi-master replication (Galera)

- the possibility of commercial support from Percona

- fork MySQL with an impressive list of optimizations

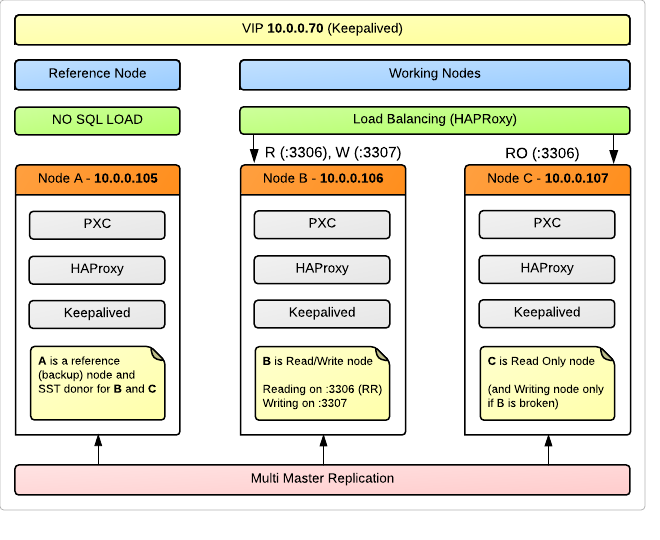

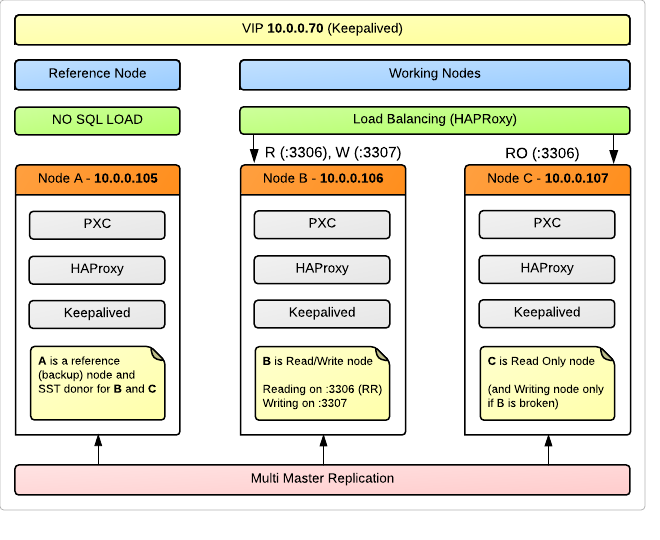

There are 3 nodes in the cluster, for each physical server described above (OS Ubuntu 12.04).

It uses a transparent connection to a single virtual IP address shared between all 3 servers using keepalived . To balance the load on the nodes, HAProxy is used , of course, installed on each server, so that in case of failure of one of them, the load continues to be balanced by the other due to VIP. We intentionally decided to use the same glands for LB and VIP as for the cluster.

the Node AIt is used as a Reference (Backup) Node, which our applications will not load requests. At the same time, she will be a full member of the cluster, and participate in replication. This is due to the fact that in the event of a cluster failure or data integrity violation, we will have a node that almost certainly contains the most consistent data that the applications simply could not destroy due to lack of access. It may seem like a waste of resources, but for us 99% data reliability is still more important than 24/7 availability. This is the node we will use for SST - State Snapshot Transfer - automatic dumping of a dump to a new node connected to the cluster or raised after a failure. In addition, Node A- An excellent candidate for the server, from where we will remove the standard periodic backups.

Schematically, all this can be represented like this:

Node B and Node C are workhorses that hold the load, but only one of them takes care of the recording operations. This is the recommendation of many experts, and below I will dwell on this issue in detail.

Requests on port 3306 , HAProxy spreads on Round Robin between nodes B and the C .

What comes to 3307 , proxied only to the Node Bed and . At the same time, if Node B suddenly crashes, requests will switch to the one specified as backup Node C.

To implement our idea (write to only one of the nodes), applications must be written so that read requests go through a connection with 10.0.0.70 opin306 ( 10.0.0.70 is our VIP), and write requests were sent to 10.0.0.70:07307 .

In our case, this will require some work on creating a new connection in the PHP application config, and replacing the name of the DBHandler variable with another value. In general, it is not so difficult for those applications that we wrote. For third-party projects, whose bases will also be in the cluster, we simply specify the default port 3307. These projects create a small load, and the loss of distributed readability is not so critical.

HAProxy config ( /etc/haproxy/haproxy.cfg ):

In order for HAProxy to determine whether the cluster node is alive, the clustercheck utility is used (included in the percona-xtradb-cluster package), which displays information about the state of the node (Synced / Not Synced) as an HTTP response. The xinetd service should be configured on each node:

/etc/xinetd.d/mysqlchk

/ etc / services

HAProxy raises a web server and provides a script for viewing statistics, which is very convenient for visual monitoring of cluster status.

The URL looks like this:

Well considered the issue of setting up a cluster with balancing through HAProxy here: www.mysqlperformanceblog.com/2012/06/20/percona-xtradb-cluster-reference-architecture-with-haproxy

/etc/keepalived/keepalived.conf

There is already an article on Habr about installing and testing PXC: habrahabr.ru/post/152969 , where both issues are discussed in detail, so I omit the installation. But I will describe the configuration.

First of all, do not forget to synchronize time on all nodes. I missed this point, and for a long time I could not understand why the SST hangs tightly on me - it started, hung in the processes, but in fact nothing happened.

my.cnf on Node A (in my config it is node105 ):

Next up are just the different options:

Node B (node106)

Node C (node107)

In the last two configs, we explicitly tell the server where to look for the first node in the cluster (which knows where all members of the group live), and what data should be taken from it, and not from the other available, for synchronization.

It is on this configuration that I stopped now, and am going to gradually transfer projects to the cluster. I plan to continue writing about my experience further.

I’ll outline here the questions to which I did not immediately find the answer, but the answer to which is especially important for understanding the technology and the correct work with the cluster.

Percona XtraDB Cluster

XtraDB Cluster on mysqlperfomanceblog.com

Percona Community Forums

Percona Discussion Google group

Galera Wiki

Briefly about the task and input data

In the cluster we need to keep:

- DB of several websites with users

- DB with statistics of these users

- Database for ticket systems, project management systems and other trifles

In other words, the database of almost all of our projects, from those that run on MySQL, should now live in a cluster.

We keep most of the projects remotely in the DC, and therefore the cluster will be located there.

The task of spreading the cluster geographically into different data centers is not worth it.

To build a cluster, 3 servers of the same configuration are used: HP DL160 G6, 2X Xeon E5620, 24 GB RAM, 4x SAS 300GB in hardware RAID 10. A good branded hardware that I have been using for a long time and which has not let me down yet.

Why Percona?

- synchronous true multi-master replication (Galera)

- the possibility of commercial support from Percona

- fork MySQL with an impressive list of optimizations

Cluster diagram

There are 3 nodes in the cluster, for each physical server described above (OS Ubuntu 12.04).

It uses a transparent connection to a single virtual IP address shared between all 3 servers using keepalived . To balance the load on the nodes, HAProxy is used , of course, installed on each server, so that in case of failure of one of them, the load continues to be balanced by the other due to VIP. We intentionally decided to use the same glands for LB and VIP as for the cluster.

the Node AIt is used as a Reference (Backup) Node, which our applications will not load requests. At the same time, she will be a full member of the cluster, and participate in replication. This is due to the fact that in the event of a cluster failure or data integrity violation, we will have a node that almost certainly contains the most consistent data that the applications simply could not destroy due to lack of access. It may seem like a waste of resources, but for us 99% data reliability is still more important than 24/7 availability. This is the node we will use for SST - State Snapshot Transfer - automatic dumping of a dump to a new node connected to the cluster or raised after a failure. In addition, Node A- An excellent candidate for the server, from where we will remove the standard periodic backups.

Schematically, all this can be represented like this:

Node B and Node C are workhorses that hold the load, but only one of them takes care of the recording operations. This is the recommendation of many experts, and below I will dwell on this issue in detail.

HAProxy and balancing details

Requests on port 3306 , HAProxy spreads on Round Robin between nodes B and the C .

What comes to 3307 , proxied only to the Node Bed and . At the same time, if Node B suddenly crashes, requests will switch to the one specified as backup Node C.

To implement our idea (write to only one of the nodes), applications must be written so that read requests go through a connection with 10.0.0.70 opin306 ( 10.0.0.70 is our VIP), and write requests were sent to 10.0.0.70:07307 .

In our case, this will require some work on creating a new connection in the PHP application config, and replacing the name of the DBHandler variable with another value. In general, it is not so difficult for those applications that we wrote. For third-party projects, whose bases will also be in the cluster, we simply specify the default port 3307. These projects create a small load, and the loss of distributed readability is not so critical.

HAProxy config ( /etc/haproxy/haproxy.cfg ):

global

log 127.0.0.1 local0

log 127.0.0.1 local1 notice

maxconn 4096

chroot /usr/share/haproxy

daemon

defaults

log global

mode http

option tcplog

option dontlognull

retries 3

option redispatch

maxconn 2000

contimeout 5000

clitimeout 50000

srvtimeout 50000

frontend pxc-front

bind 10.0.0.70:3306

mode tcp

default_backend pxc-back

frontend stats-front

bind *:81

mode http

default_backend stats-back

frontend pxc-onenode-front

bind 10.0.0.70:3307

mode tcp

default_backend pxc-onenode-back

backend pxc-back

mode tcp

balance leastconn

option httpchk

server c1 10.0.0.106:33061 check port 9200 inter 12000 rise 3 fall 3

server c2 10.0.0.107:33061 check port 9200 inter 12000 rise 3 fall 3

backend stats-back

mode http

balance roundrobin

stats uri /haproxy/stats

stats auth haproxy:password

backend pxc-onenode-back

mode tcp

balance leastconn

option httpchk

server c1 10.0.0.106:33061 check port 9200 inter 12000 rise 3 fall 3

server c2 10.0.0.107:33061 check port 9200 inter 12000 rise 3 fall 3 backup

backend pxc-referencenode-back

mode tcp

balance leastconn

option httpchk

server c0 10.0.0.105:33061 check port 9200 inter 12000 rise 3 fall 3

In order for HAProxy to determine whether the cluster node is alive, the clustercheck utility is used (included in the percona-xtradb-cluster package), which displays information about the state of the node (Synced / Not Synced) as an HTTP response. The xinetd service should be configured on each node:

/etc/xinetd.d/mysqlchk

service mysqlchk

{

disable = no

flags = REUSE

socket_type = stream

port = 9200

wait = no

user = nobody

server = /usr/bin/clustercheck

log_on_failure += USERID

only_from = 0.0.0.0/0

per_source = UNLIMITED

}

/ etc / services

...

# Local services

mysqlchk 9200/tcp # mysqlchk

HAProxy raises a web server and provides a script for viewing statistics, which is very convenient for visual monitoring of cluster status.

The URL looks like this:

http: // VIP: 81 / haproxy / statsThe port, as well as the login and password for Basic authorization are specified in the config.

Well considered the issue of setting up a cluster with balancing through HAProxy here: www.mysqlperformanceblog.com/2012/06/20/percona-xtradb-cluster-reference-architecture-with-haproxy

Keepalived and VIP

$ echo "net.ipv4.ip_nonlocal_bind=1" >> /etc/sysctl.conf && sysctl -p

/etc/keepalived/keepalived.conf

vrrp_script chk_haproxy { # Requires keepalived-1.1.13

script "killall -0 haproxy" # cheaper than pidof

interval 2 # check every 2 seconds

weight 2 # add 2 points of prio if OK

}

vrrp_instance VI_1 {

interface eth0

state MASTER # SLAVE on backup

virtual_router_id 51

priority 101 # 101 on master, 100 and 99 on backup

virtual_ipaddress {

10.0.0.70

}

track_script {

chk_haproxy

}

}

Node configuration

There is already an article on Habr about installing and testing PXC: habrahabr.ru/post/152969 , where both issues are discussed in detail, so I omit the installation. But I will describe the configuration.

First of all, do not forget to synchronize time on all nodes. I missed this point, and for a long time I could not understand why the SST hangs tightly on me - it started, hung in the processes, but in fact nothing happened.

my.cnf on Node A (in my config it is node105 ):

[mysqld_safe]

wsrep_urls=gcomm://10.0.0.106:4567,gcomm://10.0.0.107:4567

# wsrep_urls=gcomm://10.0.0.106:4567,gcomm://10.0.0.107:4567,gcomm://

# закомментированый параметр - тот, что нужно использовать

# при инициализации кластера, т.е. первом запуске первой ноды

# это важный момент, и он будет рассмотрен ниже

[mysqld]

port=33061

bind-address=10.0.0.105

datadir=/var/lib/mysql

skip-name-resolve

log_error=/var/log/mysql/error.log

binlog_format=ROW

wsrep_provider=/usr/lib/libgalera_smm.so

wsrep_slave_threads=16

wsrep_cluster_name=cluster0

wsrep_node_name=node105

wsrep_sst_method=xtrabackup

wsrep_sst_auth=backup:password

innodb_locks_unsafe_for_binlog=1

innodb_autoinc_lock_mode=2

innodb_buffer_pool_size=8G

innodb_log_file_size=128M

innodb_log_buffer_size=4M

innodb-file-per-table

Next up are just the different options:

Node B (node106)

[mysqld_safe]

wsrep_urls=gcomm://10.0.0.105:4567

[mysqld]

bind-address=10.0.0.106

wsrep_node_name=node106

wsrep_sst_donor=node105

Node C (node107)

[mysqld_safe]

wsrep_urls=gcomm://10.0.0.105:4567

[mysqld]

bind-address=10.0.0.107

wsrep_node_name=node107

wsrep_sst_donor=node105

In the last two configs, we explicitly tell the server where to look for the first node in the cluster (which knows where all members of the group live), and what data should be taken from it, and not from the other available, for synchronization.

It is on this configuration that I stopped now, and am going to gradually transfer projects to the cluster. I plan to continue writing about my experience further.

Problematic issues

I’ll outline here the questions to which I did not immediately find the answer, but the answer to which is especially important for understanding the technology and the correct work with the cluster.

Why is it recommended to write on one node from all available in the cluster? After all, it would seem that this contradicts the idea of multi-master replication.

When I first saw this recommendation, I was very upset. I imagined multi-master in such a way that you can write to any node without any worries, and the changes are guaranteed to be applied synchronously on all nodes. But the harsh realities of life are such that cluster-wide deadlocks are possible with this approach. Particularly high probability in the case of a parallel change of the same data in long transactions. Because I am not yet an expert in this matter, I can not explain this process on my fingers. But there is a good article where this problem is covered in more detail: Percona XtraDB Cluster: Multi-node writing and Unexpected deadlocks

My own tests showed that with aggressive recording on all nodes they lay down one after another, leaving only the Reference Node working, i.e. in fact, we can say that the cluster stopped working. This is certainly a minus of such a configuration, because the third node could take the burden in this case, but we are sure that the data there is safe and sound, and in the worst case scenario, we can manually start it in single-server mode.

How to specify the IP addresses of the nodes existing in the cluster when connecting a new one?

There are 2 directives for this:[mysqld_safe] wsrep_urls [mysqld] wsrep_cluster_address

The first, if I understood correctly, was added by Galera relatively recently for the ability to specify multiple node addresses at once. There are no more fundamental differences.

The meaning of these directives at first caused particular confusion for me.

The fact is that many manuals advised to leave the gcomm: // value in wsrep_urls empty on the first node of the cluster .

It turned out to be wrong. The presence of gcomm: // means the initialization of a new cluster. Therefore, immediately after the start of the first node in its config, you need to delete this value. Otherwise, after restarting this node, you will get two different clusters, one of which will consist only of the first node.

For myself, I deduced the configuration order when starting and restarting the cluster (already described in more detail above)

1. Node A: start with gcomm: // B, gcomm: // C, gcomm: //

2. Node A: delete gcomm: / / at the end of line

3. Nodes B, C: starting with gcomm: // A

NB: you must specify the port number for Group Communication requests, by default it is 4567. That is, the correct entry is: gcomm: // A: 4567

Can I write to a donor node with a non-blocking xtrabackup as an SST method?

During SST, the clustercheck on the donor will issue HTTP 503, respectively, for HAProxy or another LB that uses this utility to determine the status, the donor node will be considered unavailable, as well as the node to which the transfer is made. But this behavior can be changed by editing clustercheck , which is essentially a normal bash script.

This is done as follows:

/ usr / bin / clustercheck#AVAILABLE_WHEN_DONOR=0 AVAILABLE_WHEN_DONOR=1

NB: note that this can only be done if you are sure that xtrabackup is used as the SST , and not some other method. In our case, when we use a node without a load as a donor, such editing does not make sense at all.

useful links

Percona XtraDB Cluster

XtraDB Cluster on mysqlperfomanceblog.com

Percona Community Forums

Percona Discussion Google group

Galera Wiki