A little bit about garbage collection and generations

Everyone knows that most modern systems use generations to increase the efficiency of garbage collection. But have you ever wondered how these generations work in general, and how do we gain performance gains? But let's not get ahead of ourselves and take it in order.

So, most modern Garbage Collector (GC) systems use generations to more efficiently release short-lived objects. There is a heuristic rule, which says that most of the newly created objects are used for a very short time and they can be safely removed at the earliest opportunity.

The main idea of generations is that we collect the “garbage” of the young generation much more often and much faster due to the fact that we do not analyze all objects of the managed heap (which can be very, very many), but only objects of this young generation.

Let's look at such an example. Suppose we have some object “A” that initializes its property in a deferred way:

And access to property B, and therefore the creation of this object, occurs even when the object “A” is in the second generation:

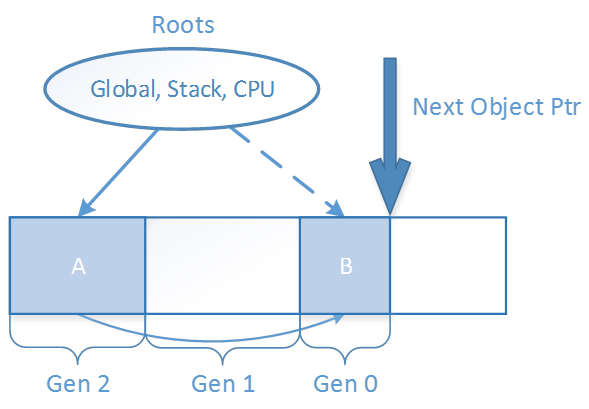

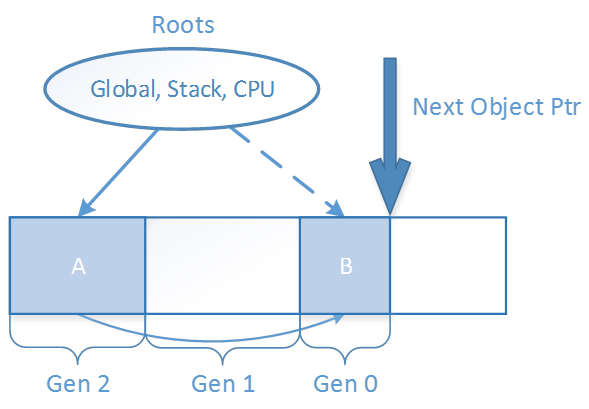

So, garbage collection of the zero generation consists in the analysis of only objects of this generation. This means that when garbage collection starts in line (3), all objects of the zero generation are marked as unreachable, including the newly created object “B”. Then all root links are analyzed to determine the reachability of objects of this generation; if the object is reachable, then it is considered alive, and all other objects that cannot be accessed from root links are considered garbage and deleted.

But in our case, object “B” is not directly reachable from root links, which means that to determine its reach the garbage collector will have to analyze the fields of all objects in all heaps of our application, otherwise the newly created objects may be “mistakenly” collected by the garbage collector which we obviously would not want. Then what then is the meaning of generations, if each time to determine the reachability of objects of zero generation you still have to analyze the entire managed heap as a whole?

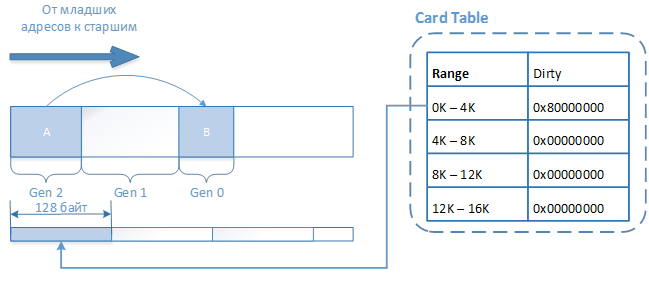

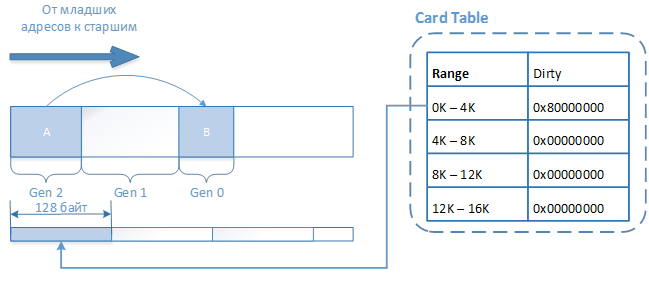

To solve this problem, we need to somehow add object “A” to the list of objects that need to be analyzed to determine the reachability of object “B”. However, instead of storing a list of all the "dirty" objects, most generations of garbage collector implementations use a special data structure called card table, which stores the address of the object that created the "young" descendant.

Card table is a simple data structure, which is a bitmask, each bit of which indicates that an object located at an address with a certain range is “dirty” and contains a link to a “young” object. At the moment, one bit of the bitmask represents a range of 128 bytes, which means that each byte of the bitmask contains information about the 1K range. This approach is a compromise between efficiency and the amount of extra memory required by the garbage collector to keep this table up to date. Thus, for a 32-bit system in which 2 GB of address space is available to the user mode, the size of the card table is 2 MB. However, since one bit in the card table marks a range of 128 bytes of address space,

To keep this data structure up to date, each time the object field is written, the JIT compiler generates a so-called “write barrier”, which is reduced to updating the card table if the address of the object being written is in the ephemeral segment, those. is a young object of the 0th or 1st generation.

Now, if we return to our case, then the object “B” will not be collected by the garbage collector, since not only the root links (which are not referenced) will be analyzed for reachability analysis, but also all objects located on the lower 128 bytes of the second generation, where our object “A” gets.

Yes, there is no particular practical benefit in the information on how the garbage collection is implemented (until you subscribe to the event of a long-lived object and forget to unsubscribe). It’s just that every time when discussing garbage collection generations are necessarily mentioned, it is very rarely discussed that without additional scrap and a well-known mother, it is simply impossible to implement efficient garbage collection.

By the way, this implementation has a small practical consequence: despite the fact that older generation objects create new objects and store them in the fields not so often (British scientists have found that no more than 1% of second generation objects do this) writing to the object field requires some additional costs for the very hack required to update the card table. This, for example, makes writing a field in a managed world a slightly more expensive operation compared to an unmanaged world (for example, with C ++).

So, most modern Garbage Collector (GC) systems use generations to more efficiently release short-lived objects. There is a heuristic rule, which says that most of the newly created objects are used for a very short time and they can be safely removed at the earliest opportunity.

The main idea of generations is that we collect the “garbage” of the young generation much more often and much faster due to the fact that we do not analyze all objects of the managed heap (which can be very, very many), but only objects of this young generation.

Let's look at such an example. Suppose we have some object “A” that initializes its property in a deferred way:

public class B

{ }

public class A

{

private Lazy _lazyB = new Lazy(

() => new B());

public B B

{

get { return _lazyB.Value; }

}

}

And access to property B, and therefore the creation of this object, occurs even when the object “A” is in the second generation:

var a = new A();

GC.Collect();

GC.Collect();

// output: A resides in Gen 2, A.B resides in Gen 0

Console.WriteLine("A resides in Gen {0}, A.B resides in Gen {1}",

GC.GetGeneration(a), GC.GetGeneration(a.B));

GC.Collect();

So, garbage collection of the zero generation consists in the analysis of only objects of this generation. This means that when garbage collection starts in line (3), all objects of the zero generation are marked as unreachable, including the newly created object “B”. Then all root links are analyzed to determine the reachability of objects of this generation; if the object is reachable, then it is considered alive, and all other objects that cannot be accessed from root links are considered garbage and deleted.

But in our case, object “B” is not directly reachable from root links, which means that to determine its reach the garbage collector will have to analyze the fields of all objects in all heaps of our application, otherwise the newly created objects may be “mistakenly” collected by the garbage collector which we obviously would not want. Then what then is the meaning of generations, if each time to determine the reachability of objects of zero generation you still have to analyze the entire managed heap as a whole?

To solve this problem, we need to somehow add object “A” to the list of objects that need to be analyzed to determine the reachability of object “B”. However, instead of storing a list of all the "dirty" objects, most generations of garbage collector implementations use a special data structure called card table, which stores the address of the object that created the "young" descendant.

Card table is a simple data structure, which is a bitmask, each bit of which indicates that an object located at an address with a certain range is “dirty” and contains a link to a “young” object. At the moment, one bit of the bitmask represents a range of 128 bytes, which means that each byte of the bitmask contains information about the 1K range. This approach is a compromise between efficiency and the amount of extra memory required by the garbage collector to keep this table up to date. Thus, for a 32-bit system in which 2 GB of address space is available to the user mode, the size of the card table is 2 MB. However, since one bit in the card table marks a range of 128 bytes of address space,

To keep this data structure up to date, each time the object field is written, the JIT compiler generates a so-called “write barrier”, which is reduced to updating the card table if the address of the object being written is in the ephemeral segment, those. is a young object of the 0th or 1st generation.

Now, if we return to our case, then the object “B” will not be collected by the garbage collector, since not only the root links (which are not referenced) will be analyzed for reachability analysis, but also all objects located on the lower 128 bytes of the second generation, where our object “A” gets.

Why do I need all this?

Yes, there is no particular practical benefit in the information on how the garbage collection is implemented (until you subscribe to the event of a long-lived object and forget to unsubscribe). It’s just that every time when discussing garbage collection generations are necessarily mentioned, it is very rarely discussed that without additional scrap and a well-known mother, it is simply impossible to implement efficient garbage collection.

By the way, this implementation has a small practical consequence: despite the fact that older generation objects create new objects and store them in the fields not so often (British scientists have found that no more than 1% of second generation objects do this) writing to the object field requires some additional costs for the very hack required to update the card table. This, for example, makes writing a field in a managed world a slightly more expensive operation compared to an unmanaged world (for example, with C ++).