Controlling homemade hardware over the air with Open Sound Control

In this article I will explain how to remotely control your homemade networked device from a phone or tablet running iOS or Android. Anyone at all familiar with the topic has probably already decided that this will be yet another web interface for an Arduino or mbed board. I hasten to interrupt that thought: it won't. The method I want to describe is quick and cheap, provides feedback out of the box, offers convenient controls, and looks better than even the most polished web interface.

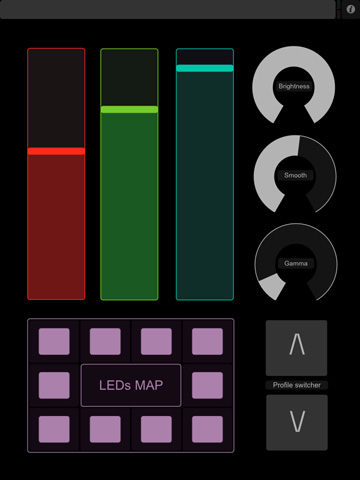

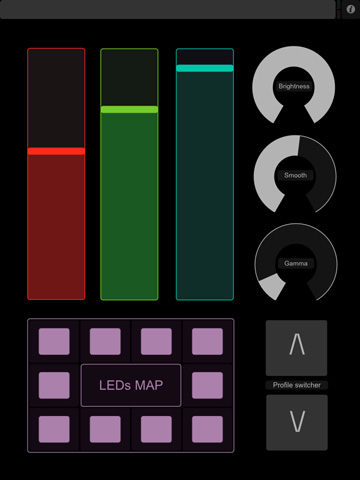

To keep this grounded in real life, I will show how we pulled off this trick four months ago with our Lightpack. Our case differs slightly from the idealized, “spherical cow in a vacuum” use of the Open Sound Control protocol (OSC from here on), but it is still a good example of how effectively OSC can be used for rapid prototyping.

Task

We had an open-source USB backlight device, Lightpack, which is controlled by cross-platform software that exposes an API for external programs and plugins. We wanted to control the device over the network from our tablets and smartphones: turn it on and off, switch settings profiles, adjust the brightness, tune the backlight of each individual channel, and even launch other plugins.

Your situation may be different and not complicated by an intermediary in the form of a full-fledged PC with some kind of API. For example, you might have an ARM/AVR device that periodically connects to the network, or simply an Arduino with an Ethernet shield that sits behind the electrical panel in your stairwell, logs power consumption 24/7, and sends the data straight to a Google Docs document.

The first solution that comes to mind is a web interface. It seems universal: you can reach it from any platform. But we did not want to keep a web server running all the time, however tiny. We also could not find any good web-interface builders with controls that suited us, we did not want to draw our own, and we realized that the result would only work properly on the desktop. On top of that, an ordinary microcontroller would not be able to handle such an interface if we later had to control a standalone device.

On the other hand, if you can reach the device over the network (in our case, commands can be sent to the API directly via Telnet), you could write a full-fledged mobile app that simply sends message packets, which the server parses to control the device. Although we put together a small Android prototype based on this approach in one evening, it still remained “expensive”, even compared with a web interface.
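
For reference, here is roughly what “sending commands via Telnet” amounts to in code: open a TCP connection, write a newline-terminated text command, and read one line back. This is a minimal sketch assuming a simple line-based request/reply protocol; the host, port and the `setstatus:on` command used below are placeholders, not a documented API, and if your server prints a greeting line on connect you would need to read and discard it first.

```python
import socket


def send_command(host: str, port: int, command: str, timeout: float = 2.0) -> str:
    """Send one newline-terminated text command and return the first reply line.

    Assumes a line-based protocol in which the server answers every command
    with a single line ending in a newline character.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall((command + "\n").encode("utf-8"))
        reply = b""
        # Keep reading until a full line has arrived or the server hangs up.
        while b"\n" not in reply:
            chunk = sock.recv(1024)
            if not chunk:
                break
            reply += chunk
    return reply.split(b"\n", 1)[0].decode("utf-8").strip()
```

A call such as `send_command("192.168.1.10", 3636, "setstatus:on")` would then return whatever single-line status the server replies with (address, port and command are illustrative).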

Time passed, and the task stayed somewhere in the back of my mind until I accidentally stumbled upon the TouchOSC mobile app, which works (almost) equally well on iOS and Android.

After watching the demo videos and screenshots, it was simply impossible to walk past without giving it a try.

Idea

In short, the TouchOSC app (App Store, Google Play) implements the OSC and MIDI protocols on your mobile device, the same interfaces that specialized synthesizers, sound editors, DJ software, recording studios and the like already work with. Moreover, TouchOSC lets you not only use predefined layouts of controls (buttons, sliders, labels, and so on) but also draw your own quickly and easily in a dedicated editor. All of this works over Wi-Fi, although a wired connection is also possible with special adapters.

A typical predefined layout looks something like this. Difficulty level: “Korean pro gamer”, which, however, does not stop you from reproducing it in the layout editor.

A quick survey showed that this app is not the only one: on iOS you can also use iOSC, OSCemote, Mrmr OSC controller or Remokon for OSC (I will not even list the Android ones; there are even more of them). The same survey showed that the protocol itself is used in a large number of programs and devices and, most importantly for us, that libraries and wrappers implementing it exist for many programming languages and platforms (for example, for Arduino and mbed). An incomplete list is available on the official website.

This gave us a chance to apply new and, at first glance, interesting tools in an unconventional way. A free day off lay ahead, so with the words “challenge accepted” timsat opened Google on one half of the monitor and Notepad++ on the other.

Protocol

Open Sound Control is a packet-based protocol for transferring data between multimedia devices (primarily electronic musical instruments/synthesizers and PCs) that is increasingly used as a replacement for MIDI. Its main feature is that it works over the network, but it has other features as well. The latest version of the specification is published by the Center for New Music and Audio Technologies (CNMAT) at UC Berkeley.
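
To make “packet-based” concrete: under the OSC 1.0 specification, a message is an address string, a type-tag string starting with a comma, and the arguments in big-endian binary form, with every string null-terminated and padded to a multiple of four bytes. The hand-rolled encoder below is a minimal sketch that handles only int32 (`i`), float32 (`f`) and string (`s`) arguments; for real work you would use one of the existing OSC libraries.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; reject explicitly
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("utf-8"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload
```

For example, `encode_message("/1/fader2", 0.5)` produces a 20-byte packet: 12 bytes of padded address, 4 bytes of type tags (`,f`) and a 4-byte float.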

As mentioned above, the typical use of the protocol today is as an interface between input devices (synthesizers, tablets, game controllers, etc.) and specialized software such as SuperCollider, Traktor, REAPER and others. OSC is supported out of the box by awesome devices such as the Lemur and the Monome.

Because it works over the network, the protocol allows data exchange in both directions, which makes feedback from the device possible. If you look closely at the screenshots, some buttons have an extra indicator that lights up once the server has actually executed the previously sent command. In other words, your tablet can reflect the real state of the device you are controlling (the target). With some practice you can even draw graphs, but that is for musicians who like to go seriously overboard.
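
Feedback is simply an OSC message sent in the opposite direction, from the server to the tablet. A minimal sketch of lighting an indicator over UDP might look like this; the `/1/led1` address is hypothetical (use whatever address your layout assigns to the indicator), and the sketch assumes the indicator turns on for a value of 1.0.

```python
import socket
import struct


def osc_float_message(address: str, value: float) -> bytes:
    """Encode a single-float OSC message: padded address, ',f' tag, big-endian float32."""
    def pad(data: bytes) -> bytes:
        data += b"\x00"
        return data + b"\x00" * (-len(data) % 4)

    return pad(address.encode("ascii")) + pad(b",f") + struct.pack(">f", value)


def send_feedback(sock: socket.socket, client: tuple, address: str, value: float) -> None:
    """Push the device's real state back to the controller, e.g. to light an LED.

    `client` is the tablet's (ip, incoming_port) pair.
    """
    sock.sendto(osc_float_message(address, value), client)
```

Sending 0.0 to the same (hypothetical) address would turn the indicator off again.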

Here is an example of the messages exchanged between the OSC server and the client:

/1/fader2 0.497120916843

/1/fader2 /z

/1/rotary1 0.579584240913

/1/rotary1 0.605171322823

/1/rotary1 /z

Each message carries the exact control address: /1/fader2 0.497120916843 means that the server received the value 0.497 from the second fader on the first tab (yes, a single layout can contain several tabs), and so on. The z-messages in this example are optional, and I will come back to them later.
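
On the receiving side, the server has to undo the encoding and then work out which control a message came from. The decoder below is a sketch that handles only `i`, `f` and `s` arguments, and `split_address` is a hypothetical helper that assumes TouchOSC-style addresses in which the first path segment is the tab number.

```python
import struct


def _read_string(data: bytes, offset: int) -> tuple:
    """Read a null-terminated, 4-byte-padded OSC string; return (text, next_offset)."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("utf-8")
    # Skip the terminator plus padding up to the next multiple of 4.
    return text, (end + 4) & ~3


def decode_message(data: bytes) -> tuple:
    """Decode an OSC message with i/f/s arguments into (address, [args])."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag}")
    return address, args


def split_address(address: str) -> tuple:
    """Split '/1/fader2' into (1, 'fader2'); tab is None for addresses like '/ping'."""
    parts = address.strip("/").split("/")
    if parts[0].isdigit():
        return int(parts[0]), "/".join(parts[1:])
    return None, "/".join(parts)
```

Note that a float32 such as 0.497120916843 comes back only approximately, since OSC floats carry about seven significant digits.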

In the Lightpack case, it was convenient to send profile names to the tablet and switch between them from there. In addition, at the start of a session the positions of all controls on the tablet are automatically synchronized with the actual settings of the target device.
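
The session-start synchronization can be reduced to building a list of (address, value) pairs from the device's current state and sending each pair to the tablet. In the sketch below, the layout of the state dictionary, the `/1/power`, `/1/brightness`, `/1/profileN` and `/1/ledN` addresses, and the assumption that label controls accept a string to display are all hypothetical.

```python
def initial_sync(state: dict, max_profiles: int = 4) -> list:
    """Return (address, value) pairs that align the tablet's controls with the device.

    All addresses are hypothetical; substitute the ones from your own layout.
    """
    messages = [
        ("/1/power", 1.0 if state["on"] else 0.0),       # toggle button
        ("/1/brightness", state["brightness"] / 100.0),  # fader range 0..1
    ]
    profiles = state["profiles"][:max_profiles]
    for i in range(max_profiles):
        # Label slots show profile names; unused slots are blanked out.
        name = profiles[i] if i < len(profiles) else ""
        messages.append((f"/1/profile{i + 1}", name))
        # The LED next to a slot is lit only for the active profile.
        active = bool(name) and name == state["profile"]
        messages.append((f"/1/led{i + 1}", 1.0 if active else 0.0))
    return messages
```

Each pair would then be encoded and sent to the tablet's incoming port, exactly like any other feedback message.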

Implementation

To work with the Lightpack API we use our own wrapper written in Python. For that reason, and because there was an OSC library for Python that suited us, we decided to write a small script that acts as a connector between the API and the network device sending OSC messages. The main requirements for such a setup:

- Client and server ports must be available (you can set your own in TouchOSC)

- The client and server must be on the same network.

That said, if you dig into tunneling on your router, you can get around the second restriction and control your hamster's water dispenser from an office on the other side of town.
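
In practice, both requirements boil down to one UDP socket on the server that listens on the port the tablet sends to, plus a reply address that combines the tablet's IP with the port it listens on. The ports 8000 and 9000 in this sketch are assumed TouchOSC defaults; match them to your own connection settings.

```python
import socket

# Assumed TouchOSC defaults; check the app's connection settings.
LISTEN_PORT = 8000  # the tablet sends control messages to this port
REPLY_PORT = 9000   # the tablet listens for feedback on this port


def open_server(host: str = "0.0.0.0", port: int = LISTEN_PORT) -> socket.socket:
    """Bind the UDP socket that receives OSC packets from the tablet."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_one(sock: socket.socket, timeout: float = 1.0):
    """Wait for one datagram; return (packet, reply_address) or None on timeout."""
    sock.settimeout(timeout)
    try:
        data, (client_ip, _source_port) = sock.recvfrom(4096)
    except socket.timeout:
        return None
    # Replies go to the tablet's IP but to its configured incoming port,
    # not to the (usually different) source port the packet came from.
    return data, (client_ip, REPLY_PORT)
```

Binding to `0.0.0.0` accepts packets on every local interface, which is what makes the “same network” setup work without further configuration.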

For those who, like me, prefer pictures, I drew a diagram of the interaction:

In our case, the connector and the API communicate over sockets (a feature of the API itself), and, as you can see in the demo, everything works quite fast. In the Arduino-and-hamster scenario, of course, the device itself sits right behind the router, with a microcontroller as its logic core running firmware that already includes libraries for networking and OSC.

In fact, all we had to implement was initializing the exchange, the feedback, and the conversion of received and transmitted values. The lion's share of the code is simple mapping: once you have drawn the layout, you have a list of control addresses (buttons, sliders, labels, etc.), each of which needs to be mapped to an API function. That's it.
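
The mapping itself can be as simple as a dictionary from control addresses to handler functions. In this sketch the addresses and the `setstatus`/`setbrightness` command strings are hypothetical, and faders are assumed to use TouchOSC's default 0..1 range.

```python
def on_power(value: float) -> str:
    """Toggle button: anything at or above 0.5 counts as 'on'."""
    return "setstatus:on" if value >= 0.5 else "setstatus:off"


def on_brightness(value: float) -> str:
    """Fader: scale the 0..1 control value to a 0..100 percentage."""
    return f"setbrightness:{round(value * 100)}"


# Hypothetical layout addresses mapped to handlers producing API commands.
HANDLERS = {
    "/1/power": on_power,
    "/1/brightness": on_brightness,
}


def dispatch(address: str, value: float):
    """Translate one incoming control message into an API command, or None if unmapped."""
    handler = HANDLERS.get(address)
    return handler(value) if handler else None
```

Adding a new control then means adding one handler and one dictionary entry, which matches the “simple mapping” described above.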

Honestly, I do not know what else to write here. From a programming point of view, the task is trivial. As usual, the source code of this “connector” is in our repository and is available to everyone.

By the way, TouchOSC implements a few handy extra features. First, you can use the /ping message exchanged between client and server to keep the connection alive even when no data is flowing. Second, it supports the so-called z-message, a special message that tells the server the user has finished touching the control (that is, the moment input is complete). We did not use this feature, but I can imagine situations in which it would be extremely useful. Third, like most similar apps, TouchOSC can send data from the mobile device's built-in accelerometer to the server. So you can use your smartphone or tablet as a clever input device for your networked DIY projects (panning a camera on servos, etc.).
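
Handling these extras mostly means routing messages by address before they reach the regular mapping. The sketch below assumes that keep-alives arrive as `/ping`, z-messages as the control address with `/z` appended and a 1/0 argument, and accelerometer data as `/accxyz` with three floats; verify these against your app's settings. `tilt_to_servo_angle` is a hypothetical example of turning tilt into a servo angle.

```python
def classify(address: str, args: list) -> tuple:
    """Route an incoming message by address; the address conventions are assumptions."""
    if address == "/ping":
        # Keep-alive: nothing to forward to the device.
        return ("keepalive", None)
    if address.endswith("/z"):
        # Assumed z-message format: '<control>/z' with 1 on touch, 0 on release.
        touching = bool(args) and args[0] >= 0.5
        return ("touch", (address[:-2], touching))
    if address == "/accxyz":
        # Assumed accelerometer format: three floats (x, y, z).
        return ("accel", tuple(args[:3]))
    return ("control", (address, args))


def tilt_to_servo_angle(x: float, limit: float = 1.0) -> int:
    """Map one accelerometer axis in [-limit, limit] to a 0..180 degree servo angle."""
    x = max(-limit, min(limit, x))  # clamp readings outside the expected range
    return round((x + limit) / (2 * limit) * 180)
```

Messages classified as “control” would then go through the regular address-to-API mapping, while “accel” readings could drive a servo via `tilt_to_servo_angle`.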

Pitfalls

Interacting with the TouchOSC app goes smoothly. Even your own layouts are loaded over the air with a single button press. The only noticeable drawback at the moment is that the Android version cannot load custom layouts. In our correspondence, the author assured me that he is “preparing the release right now”.

The Python library turned out to be poorly documented, so getting the data exchange initialized took us longer than actually programming the layout's behavior.

Overall, building such a mashup is extremely intuitive and does not take much time. That is why I would recommend OSC for rapid prototyping instead of full-fledged client-server stacks with web interfaces. Moreover, if you are going to present the prototype at an event or, say, to a customer, I simply do not know a more visual way to provoke the “wow! where do I get this on the App Store?” questions.

So, once again: if you need a quick and cheap way to visually control a homemade networked device with feedback, the OSC protocol is an interesting choice.