Diagnostics in pictures: understanding the state of a product with tables and graphs

A beautiful product idea, whether at a large company or a startup, almost inevitably runs into difficulties at the implementation stage. It often happens that work is under way, bugs are being fixed and the release is approaching, yet nobody has an overall picture of the state of the product. This happens because the creators of the software or service (especially at startups) are blinded by their own genius and do not assess the product's problems adequately. As a result, in the best case the team misses its release dates, and in the worst case a non-viable product is born, one that users contemptuously call an alpha while beaming rays of hate at its creators through the feedback form.

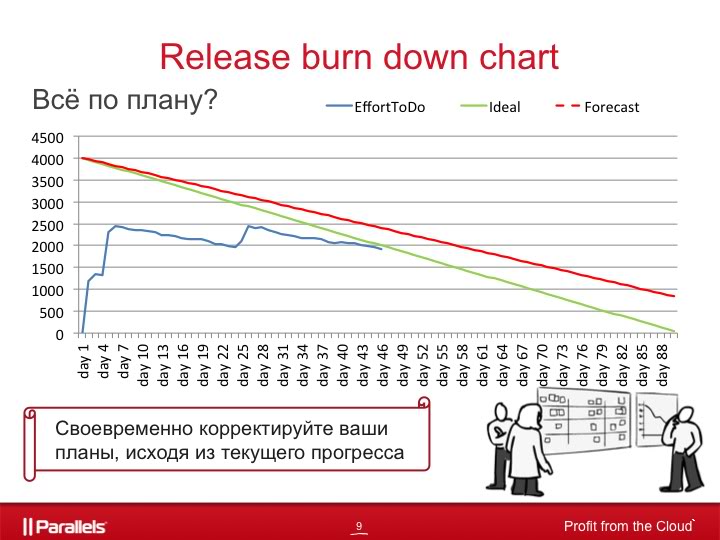

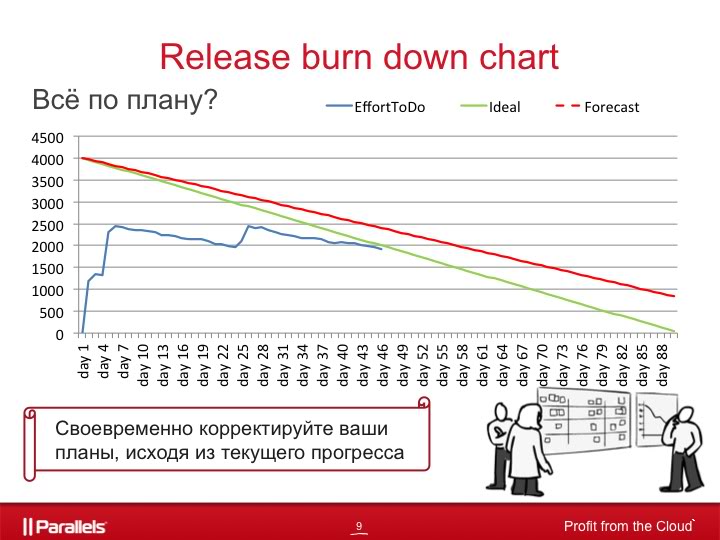

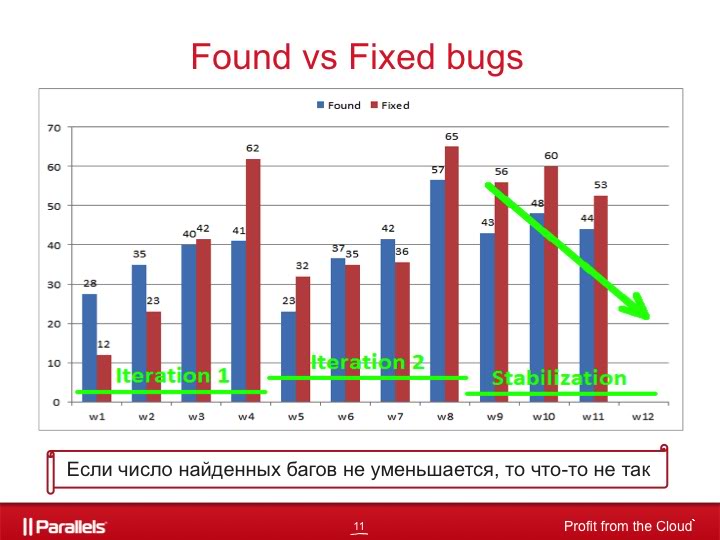

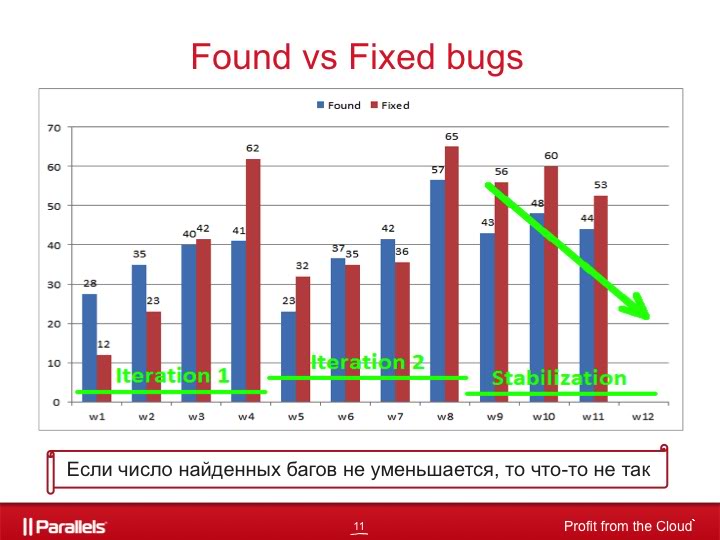

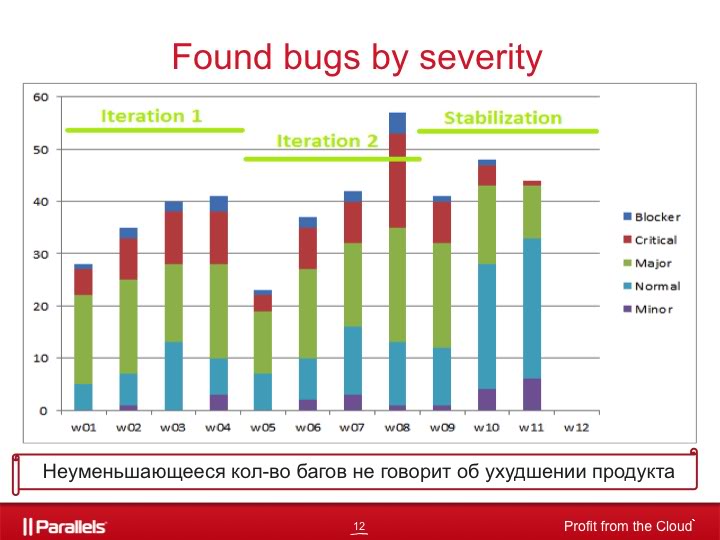

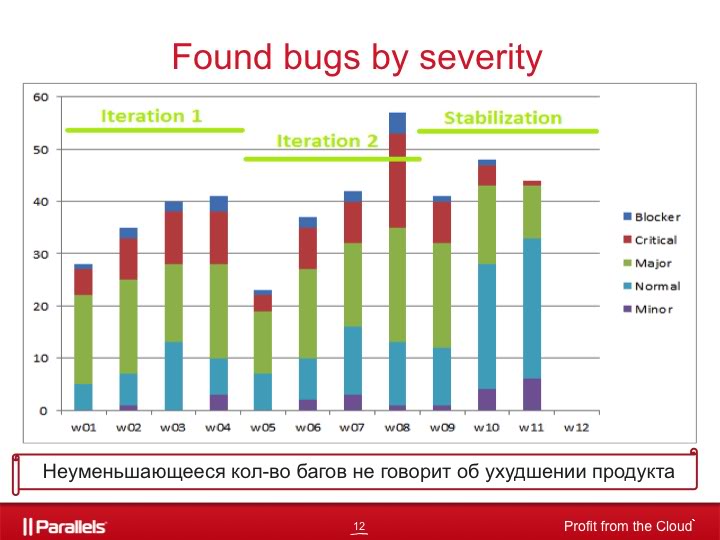

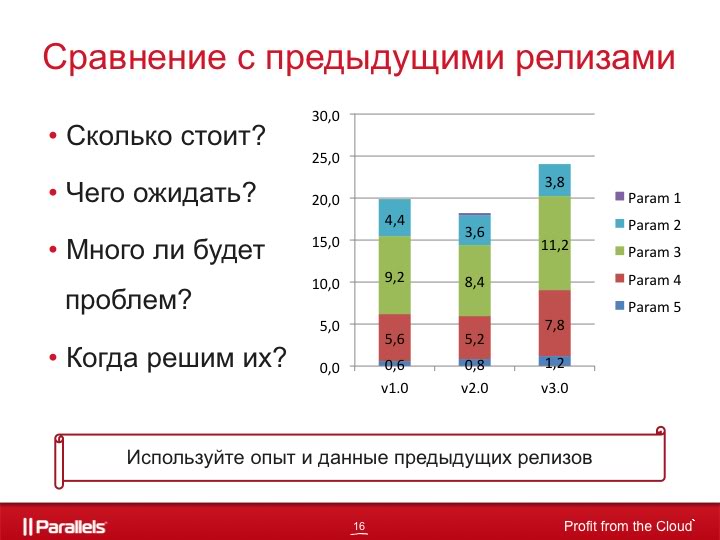

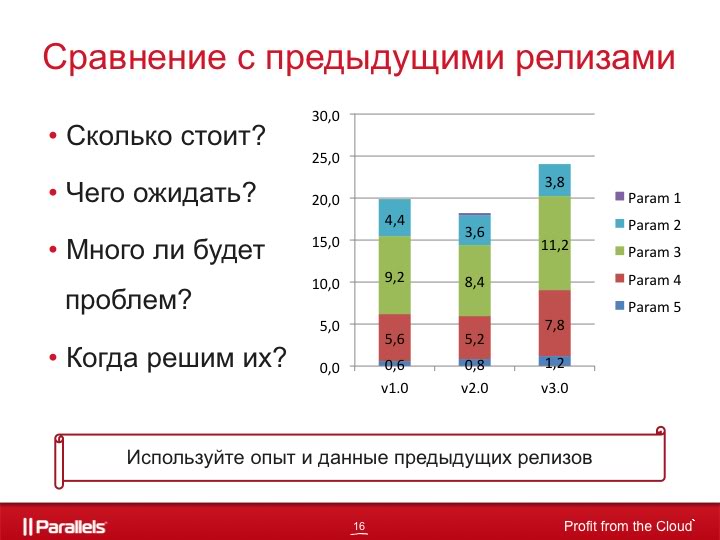

Captain Obvious hints: to prevent this, you need to be able to understand the state of your product at every stage of its development. This long article proposes a method for assessing that state in the most visual form: tables and graphs. It summarizes my own experience and that of the whole team at the Parallels Novosibirsk office over the past six years. For context: we make Parallels Plesk Panel, a hosting control panel used on roughly every second server in the world that provides web hosting services. Applying this method, we got the following results:

- the quality of our releases improved significantly (as measured by incident rate);

- releases became more predictable, and the accuracy of our forecasts and estimates increased significantly;

- we came to understand why things were going wrong and how to avoid it in the future.

The details are below, and questions are welcome in the comments. I will answer all of them.

The article is based on a talk given at the QASib conference in Omsk.

When we sign an offer from a large company or found a startup, we expect money, success and recognition from our work. At the start of the journey everything often seems beautiful and cloudless: we are geniuses who have come up with cool software or a service with a set of useful features, we have a well-coordinated team, and we have plenty of time. The road to success looks simple and trouble-free.

Realization usually comes while preparing for a release. The problems begin: the third-party team you outsourced part of the work to blows its deadlines, someone on your team unexpectedly falls ill, the planned features turn out to be much harder to implement than expected, and the chosen technical solutions no longer look like the best ones. On top of that, you are drowning in a sea of bugs, requirements from future users keep changing, and new super-important features appear that absolutely must be squeezed into this release. Against this background, people's motivation slowly melts away. These problems can swallow you and keep the release from happening at all, in which case you will never see your "bag of money". Panic is not far off.

The answers to the well-known questions “Who is to blame?” and “What is to be done?” can be found by analyzing the data that will inevitably accumulate in your hands. If you record your tasks, current progress, results, problems found and other useful information somewhere, tracking the state of the product will be easy. Keeping the project “logbook” in a paper ledger is a legitimate option, but it is better to store the data in a system that has an API or a comprehensible database structure. An API or direct database access makes the information easy to retrieve and parse.

The Parallels Plesk Panel team tracks tasks and bugs in TargetProcess. The system has a number of built-in reports that let us quickly get information about the release, current progress on it, tasks done and tasks still to do, team load and resource balancing, bugs found, the rate at which they are found, and so on. When we need more detailed or varied information, we get it directly from the TargetProcess database.

Now, on to the data and graphs.

One of the first steps toward correctly assessing the state of your product later on is to evaluate whether you will be able to do everything you have planned.

On one side you have a great team; on the other, a set of features from the customer, product owner or program manager. These are the two things to compare.

First, figure out how many man-hours (or story points, or "parrots") your team can digest. Suppose the release is estimated at three months. Each month has four weeks, each week five days, each day eight hours (for simplicity I ignore public holidays), which gives 480 hours per person. The team consists of 10 people: three are mega-productive, five are ordinary employees, and two are newcomers who have been on the project only a short time. At full productivity that would be 4800 hours; adjusting for the differences in productivity, we find that our team can digest about 4000 man-hours of work in one release.

Now let's work out what the team will have to do: testing new features, 2200 hours; verifying bug fixes, 350 hours; regression testing at the end of the release, 600 hours; automation, 500 hours; vacations, 160 hours (you can either subtract them from team capacity or account for them here, as you prefer); risks, 200 hours (this usually covers illness, new features that arrive unexpectedly marked “must”, delays caused by other teams, and so on). Total: about 4000 hours (4010 as itemized), roughly matching the team's capacity. It looks like we can do everything and ship a release of proper quality.
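
The comparison above can be sketched in a few lines of Python. This is only an illustration: the productivity percentages (120, 80, 40) are my assumption, chosen so that the result lands near the 4000 hours quoted, since the text does not state the actual coefficients. The workload figures are the ones itemized above; summed as listed they give 4010 hours.

```python
HOURS_PER_PERSON = 3 * 4 * 5 * 8  # months * weeks * days * hours = 480

# (headcount, productivity in percent); the percentages are hypothetical
TEAM = {
    "mega-productive": (3, 120),
    "ordinary": (5, 80),
    "newcomer": (2, 40),
}

# Planned work in hours, as itemized in the text
WORKLOAD = {
    "testing new features": 2200,
    "checking bugs": 350,
    "regression testing": 600,
    "automation": 500,
    "time off": 160,
    "risks": 200,
}


def team_capacity(team, hours_per_person):
    """Productivity-weighted capacity of the whole team, in hours."""
    weighted_heads = sum(count * percent for count, percent in team.values())
    return hours_per_person * weighted_heads // 100


capacity = team_capacity(TEAM, HOURS_PER_PERSON)
planned = sum(WORKLOAD.values())
print(f"capacity: {capacity} h, planned: {planned} h, slack: {capacity - planned} h")
```

With these assumed coefficients the slack is only a couple of dozen hours, so any slip in the estimates eats it immediately. Re-running the same check whenever a feature is added or re-estimated is cheap.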

Or not?

But what if our team's capacity is significantly smaller than the amount of work to be done?

There are several options:

- Keep quiet and leave everything as it is, hoping it will somehow sort itself out, or cut people's vacations, or automation, or part of the testing, which will most likely lower the quality of the product. So what? Fine! That is perfectly normal if you are working on a product you hate, or with a team you want to destroy... It is a pity that this approach is so common in the industry.

- Raise the team's capacity limit with the program and project managers, and get either the number of features in the release reduced or the release date pushed back. That sounds more or less reasonable. Or you can treat the situation as a hard and interesting challenge and take the third path.

- Come up with a way to do the same work in less time and with less effort. Ideally, you should use the last two options together.

Always make sure that the amount of work you need to do matches the amount your team can digest. Failing that, have a clear plan for getting around the problem.

Your estimates lay the foundation for the release burn down chart.

Taken literally, “burn down” means “to burn to the ground”, which fits the purpose of this chart well: to show the work done and the work remaining over time.

Now for the slides.

As an example, consider the burn down chart of a QA team for a three-month release.

The green line corresponds to ideal resource utilization, where each team member completes a fixed amount of work every day, in line with their qualifications and productivity. The blue line shows the amount of work remaining at any given moment.

The graph shows that:

- the first seven days were spent planning and estimating the release tasks;

- then, for about three weeks, work on the release proceeded (since the blue line runs parallel to the green one, the tasks were most likely done according to plan and the initial estimates were on target);

- a month after the start of the release the blue line jumps up, most likely because new features were added or effort was re-estimated;

- after that we again see work on tasks, but it is clearly not going according to plan (possible causes: a team member's illness, delays on the part of other teams, poor-quality features, and so on).

If we budgeted for this in our risks, excellent; if not, we need to decide what to do to rectify the situation.

One more line on the chart, the red dotted one, shows when the release will actually end if we keep working at the same pace as in recent days. It is recalculated every day from the actual rate at which tasks were completed over the last few days.
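
Here is a minimal sketch of how the ideal line and the forecast line could be computed. It is not how TargetProcess does it; the function names, the five-day window and the sample readings are all my assumptions for illustration.

```python
import math


def ideal_remaining(total_hours, total_days, day):
    """Ideal line: work left if the same amount is burned every working day."""
    return total_hours * (total_days - day) / total_days


def forecast_days_left(remaining_by_day, window=5):
    """Forecast line: working days still needed at the recent burn rate.

    remaining_by_day holds the remaining hours at the end of each working
    day, oldest first. Returns None when nothing was burned in the window,
    because no finish date can be projected from a flat or rising line.
    """
    recent = remaining_by_day[-(window + 1):]
    days = len(recent) - 1
    if days < 1:
        return None
    burned_per_day = (recent[0] - recent[-1]) / days
    if burned_per_day <= 0:
        return None
    return math.ceil(recent[-1] / burned_per_day)


# Hypothetical remaining-hours readings for the last six working days
remaining = [1000, 985, 960, 950, 920, 900]
print(forecast_days_left(remaining))
```

Averaging over a short window keeps the forecast responsive to recent changes in pace; a longer window smooths out one-off bad days at the cost of reacting more slowly.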

The chart looks simple, but it is very useful for understanding where your project stands, and it lets you adjust your plans in good time.

A useful life hack: in addition to the overall chart for the project, it makes sense to keep separate charts for development and for QA. If the teams are large and can be split into sub-teams, keep a chart for each of them. This can be incredibly useful for finding bottlenecks in your project. You may even find the team that is dragging the whole project down and keeping it from taking off.

We often ask ourselves: should we fix this? Will we make it in time? The number of open bugs in the release, grouped by priority, can answer these questions.

We assign each bug a priority from P0 to P4, where:

- P0 - must be fixed ASAP

- P1 - must be fixed before the end of the iteration / sprint

- P2 - must be fixed before the end of the release

- P3 - fix if there is time

- P4 - everything else, which we can definitely live without

As an example, consider the following graph.

The green line shows the number of open P0-P1 bugs. Judging by the graph, weeks 4 and 8 are the ends of iterations, by which time all P0-P1 bugs have been fixed. This is one of the criteria for closing an iteration.

The red line shows the number of open P0-P2 bugs, and the blue line shows the total number of open bugs in the release.
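
The three lines are nested buckets of the same data, which a short sketch makes explicit. The function name and the sample snapshot are hypothetical; priorities are represented as the integers 0 to 4.

```python
from collections import Counter


def open_bug_lines(open_priorities):
    """Chart values for one day: open P0-P1, open P0-P2 and all open bugs."""
    counts = Counter(open_priorities)
    p0_p1 = counts[0] + counts[1]
    p0_p2 = p0_p1 + counts[2]
    return {"p0-p1": p0_p1, "p0-p2": p0_p2, "all": sum(counts.values())}


# Hypothetical snapshot: the priorities of the bugs open today
snapshot = [0, 1, 1, 2, 2, 2, 3, 3, 4]
print(open_bug_lines(snapshot))
```

Taking such a snapshot once a day and plotting the three values over time gives the chart described here.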

So if, at the beginning or in the middle of an iteration, you see the number of P0-P1 bugs growing so fast that it will be impossible to fix them all and verify the fixes before the iteration ends, you need to act: re-prioritize bugs (that is, lower the priority of some P0-P1 bugs), move some features to the next iteration (and spend the freed-up time on bug fixing), extend the iteration until all P0-P1 bugs are fixed, add resources to the team, and so on.

Toward the end of the release, it makes sense to add one more line to the same chart. The purple line shows the number of bugs the development team is able to fix in the time remaining. That is, if the release is due at the beginning of week 19, the developers can fix 38 bugs in week 18, 79 bugs in weeks 17 and 18, 147 bugs in weeks 16 through 18, and so on.

Such a chart lets you foresee a situation in which the development team cannot fix all the bugs that must be fixed before the end of the release. That gives you time to act in advance:

- triage the P1-P2 bugs and re-prioritize them so that development focuses only on the bugs that really must be fixed and does not waste time on P3-P4 bugs or on P1-P2 pseudo-bugs;

- bring in additional resources for bug fixing;

- move the release date to a later time, and so on.
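
The purple line is a running total of weekly fix capacity, counted backwards from the release. A rough sketch: the per-week figures 38, 41 and 68 are derived from the cumulative numbers quoted above (38, 79, 147), while the function names and the count of 100 open must-fix bugs are made up for the example.

```python
from itertools import accumulate

# Bugs the developers can fix in each remaining week, keyed by week number
FIX_CAPACITY = {18: 38, 17: 41, 16: 68}


def fixable_until_release(capacity_by_week):
    """For each week, how many bugs can still be fixed from the start
    of that week until the release."""
    weeks = sorted(capacity_by_week, reverse=True)  # nearest to release first
    totals = accumulate(capacity_by_week[w] for w in weeks)
    return dict(zip(weeks, totals))


def at_risk(open_must_fix, capacity_by_week, week):
    """True if more must-fix bugs are open than can be fixed before release."""
    return open_must_fix > fixable_until_release(capacity_by_week)[week]


print(fixable_until_release(FIX_CAPACITY))
print(at_risk(100, FIX_CAPACITY, week=17))
```

With 100 must-fix bugs open, the check is still fine at the start of week 16 but fails at the start of week 17, which is the moment to start one of the actions listed above.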

The next thing worth looking at is the number of bugs found and fixed over time.

The picture shows an example release of three iterations: two for developing new features and one for stabilizing the product.

Looking at this graph during one of the early iterations lets us monitor the quality of the features currently in development. For example, if we had found 60 bugs in week 6 instead of 37, that would be a signal to find out why we discovered far more problems than usual at this stage of the iteration.

Much the same applies to bug fixing. For example, if significantly fewer bugs than usual were fixed in weeks 4 and 8, you need to understand why. Are the bugs more complicated? Or was less time allocated to bug fixing than planned?
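
Such "more than usual" or "fewer than usual" checks are easy to automate once you have a baseline from earlier iterations or releases. A minimal sketch follows; the 30% tolerance, the function name and the week 5 figures are my assumptions, and only the 60 versus 37 example comes from the text. It assumes the usual count for every week is greater than zero.

```python
def unusual_weeks(count_by_week, usual_by_week, tolerance=0.3):
    """Weeks whose bug count deviates from the usual by more than `tolerance`.

    Returns {week: relative deviation}; positive means more than usual.
    Works the same way for found bugs and for fixed bugs.
    """
    flagged = {}
    for week, count in count_by_week.items():
        usual = usual_by_week[week]
        deviation = (count - usual) / usual
        if abs(deviation) > tolerance:
            flagged[week] = round(deviation, 2)
    return flagged


found = {5: 35, 6: 60}   # week 5 is hypothetical
usual = {5: 33, 6: 37}
print(unusual_weeks(found, usual))
```

A flagged week is not a verdict, only a prompt to go and ask the questions above.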

Looking at this graph during the stabilization stage, we can see at once whether we are bringing the product to a state where it can be handed to end users. The answer seems simple: yes if the number of bugs found is falling, no if it is growing. But that is not always the case. This graph only tells us how many bugs were found, not what kind of bugs they are.

If we look at the distribution of found bugs by severity for the same release, it becomes clear that the release is stabilizing, even though the number of bugs found does not decrease as the end date approaches.

The bugs found in the last weeks are less critical in terms of severity: in the final week blockers disappeared completely, criticals almost disappeared, and the number of majors found was halved, while the number of normals and minors grew.

So a non-decreasing number of bugs found does not mean that the product is not improving.
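
To make the point concrete, here is a small sketch that breaks weekly totals down by severity. The data is invented to mirror the pattern just described (same total, milder mix); it is not our real release data, and the function name is hypothetical.

```python
from collections import Counter

SEVERITIES = ["blocker", "critical", "major", "normal", "minor"]


def severity_table(bugs):
    """bugs: iterable of (week, severity) pairs, one per bug found.

    Returns {week: {severity: count}} with every severity present.
    """
    counts = Counter(bugs)
    weeks = sorted({week for week, _ in counts})
    return {w: {s: counts[(w, s)] for s in SEVERITIES} for w in weeks}


# Invented data for the last two weeks of a release
found = (
    [(17, "blocker")] * 2 + [(17, "critical")] * 4 + [(17, "major")] * 10
    + [(17, "normal")] * 8 + [(17, "minor")] * 6
    + [(18, "critical")] * 1 + [(18, "major")] * 5
    + [(18, "normal")] * 14 + [(18, "minor")] * 11
)

table = severity_table(found)
for week, row in table.items():
    print(week, sum(row.values()), row)
```

The weekly totals are practically identical, yet the second week has no blockers and half as many majors, which is exactly what the total alone hides.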

Another valuable source of information is the distribution of bugs by the circumstances in which they were found.

For this we have a special bug field, “Found under condition”, which can take the following values:

- Customer - we missed the bug in one of the previous versions, and a customer found it and reported it to us

- Regression - functionality that worked in previous versions is broken in the current one

- Fresh Look - we started testing better and more thoroughly and found bugs from previous versions that we had missed earlier

- New TC - we found the bug in new features of the current release

- Other...

Understanding how the bugs found are distributed across these categories gives us a lot of useful information, which can be summarized in this easy-to-read table.

| What happened? | What to do? |
| --- | --- |
| We find many Regression bugs when testing new functionality built in the current iteration | Understand why building new functionality breaks existing, working functionality; talk to the developers of the corresponding features; increase the number of unit tests; plan additional regression testing. |
| We find many New TC bugs | Talk to the developers about the reasons for the low quality of the features, send the features back for rework, improve code review procedures. |
| We find many Fresh Look bugs | On the one hand, we have started testing better, looking deeper and wider; on the other hand, why didn't we find these problems earlier? If deadlines are very tight at the end of the release, such bugs can be sacrificed: the product will not get any worse, and the bugs may not bother anyone, since no users are complaining about them. |

Another useful source of information is the distribution of bugs by component. It tells us which component of the product is weak at the knees.

Combining this with the severity distribution, you can single out the components that are most problematic right now and try to understand why this is happening and how to fix it.

If the problematic components stay the same from week to week, congratulations: you have found a bottleneck in your product. And if customers constantly complain about these components as well, it makes sense to think about their quality, their architecture, the team working on them, adding resources on both the development and QA sides, increasing test coverage, and much more.
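
Spotting components that stay at the top week after week can be sketched like this. The component names and counts are hypothetical, and for brevity the sketch ranks by raw bug count only; weighting each bug by severity would be a natural refinement.

```python
from collections import Counter


def top_components(bugs, n=2):
    """bugs: iterable of component names, one entry per bug found in a week."""
    return {name for name, _ in Counter(bugs).most_common(n)}


def persistent_problem_components(bugs_by_week, n=2):
    """Components that are among the top `n` by bug count in every week."""
    tops = [top_components(bugs, n) for bugs in bugs_by_week.values()]
    return set.intersection(*tops) if tops else set()


# Hypothetical data: component of each bug found, per week
bugs_by_week = {
    1: ["backup"] * 5 + ["mail"] * 3 + ["dns"] * 1,
    2: ["backup"] * 4 + ["ui"] * 3 + ["mail"] * 2,
}
print(persistent_problem_components(bugs_by_week))
```

Here only one component makes the top two in both weeks, so that is where to look first.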

One of your main sources of information about the product is, of course, your users. If you are not working on the very first version of the product, you can take advantage of their feedback, which reaches you through support, the forum, sales, and manual or automatic reporters built into the product, among other channels.

This will allow you to:

- understand which features are in demand and which are not, what is worth developing and what is not;

- understand exactly how customers use your product and in which scenarios (very often it seems that you checked everything before the release, and afterwards bug reports arrive saying that this or that scenario does not work, because you had been walking entirely different paths);

- use risk-based testing (for example, run full testing on the most common configurations and reduced testing on all the others).

Another powerful tool is to compare the current situation with previous releases.

Since we have been using TargetProcess for several years, we have accumulated a very large volume of information. It lets us predict accurately what to expect from a release and understand how far off our estimates tend to be, how many bugs we find and how many we fix, which risks usually materialize and how to get around them, how much time certain tasks will take, and so on.

Other things worth paying attention to when analyzing the current state of a release:

- results of regular test runs,

- the difference between the initial estimates and the time actually spent,

- feature quality ratings assigned by the people responsible for testing those features,

- the number of open bugs by severity and priority,

- the number of bugs over time,

- the percentage of bugs found during the release that we fix,

- the density of bugs in the code, etc.
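
Two of these indicators, estimate accuracy and fix rate, reduce to one-line formulas. A sketch with hypothetical function names and made-up numbers:

```python
def estimate_overrun(tasks):
    """tasks: list of (estimated_hours, actual_hours) pairs.

    Returns the overall overrun as a fraction of the total estimate;
    positive means the work took longer than estimated.
    """
    estimated = sum(est for est, _ in tasks)
    actual = sum(act for _, act in tasks)
    return (actual - estimated) / estimated


def fix_rate(found, fixed):
    """Share of the bugs found during the release that were fixed, in percent."""
    return 100 * fixed / found


# Made-up tasks: (estimated hours, actual hours)
tasks = [(8, 10), (16, 20), (40, 36), (16, 22)]
print(f"overrun: {estimate_overrun(tasks):.0%}")
print(f"fix rate: {fix_rate(found=400, fixed=340):.0f}%")
```

Tracked from release to release, the overrun figure shows how much to pad the next set of estimates.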

As you can see, all these graphs and tables make up a very powerful and useful tool. It helps you plan a release correctly and accurately with most potential problems taken into account, spot a problem in advance, fix it in time and, no less important, avoid it in the future.

In particular, they served us well during the development of the recently released Parallels Plesk Panel 11.

A list of the real breakthroughs QA made in Plesk 11 should go here. That is, if we could talk about them out loud.

But! It is very important to remember that no matter how much data you collect and no matter how many tables and graphs you build, they will be useful only if you draw useful conclusions from them and change the product and processes for the better.