Interfaces of the near future

Have you noticed? Once again, a real revolution is taking place before our eyes. No, no, not the one you are thinking of: no politics before New Year! I am talking about a revolution in the field of interfaces. Although some of its signs could be observed throughout 2010, the overall picture, I think, is not obvious to everyone.

This article is a short overview of trends in interfaces, in which I will try to convince you that we will very soon find ourselves in a fantastic future.

Let's start with the commonplace and save the tastiest bits for later.

Multi touch

We have already managed to get used to this phrase. Multi-touch is already on iPhones, on the touchpads and touch screens of some laptops, and on touch tables such as Microsoft Surface and TouchTable. In short, it is a fully working, commercialized technology. Not everyone owns a multi-touch device yet, but for geeks the technology has become as commonplace as a cell phone.

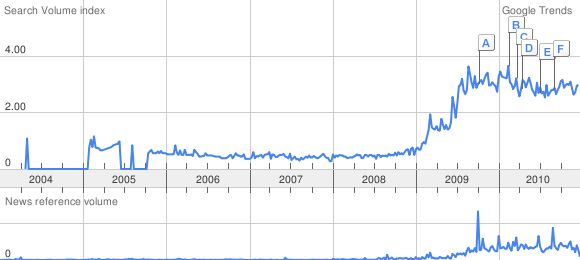

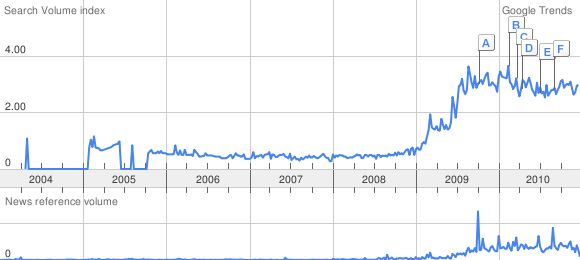

I just want to recall that the triumphal march of this interface around the world began only five years ago (although it was invented back in the eighties). Take a look at the Google Trends statistics for the query "multi touch". In fact, the revolution discussed below can (conditionally) be dated from this interface.

Stereoscopic and 3D image

Attempts to give a flat image depth are as old as the hills. But in the last two years there has been a revival in this area. Electronics manufacturers have created so many models of autostereoscopic displays (i.e., displays that do not require glasses) that they would deserve a separate large review. And yes, you can already set up a 3D cinema at home at a price within reach.

In the context of this review, 3D visualization technologies are interesting not so much on their own as in combination with the other human-machine interfaces discussed below. Remember the Apple patent?

Augmented Reality

Augmented reality is also nothing new for Habr users: posts about it appear on Habr regularly.

There is almost no doubt that augmented reality will firmly enter our everyday life, although people began to realize the potential of this technology only two years ago. It is possible that, thanks to it, virtual reality and the crude material world will very soon merge into a single whole, and the distinction between "offline" and "online" will lose its meaning. After all, a prototype of the glasses that anime fans know from the series Dennou Coil has already been created.

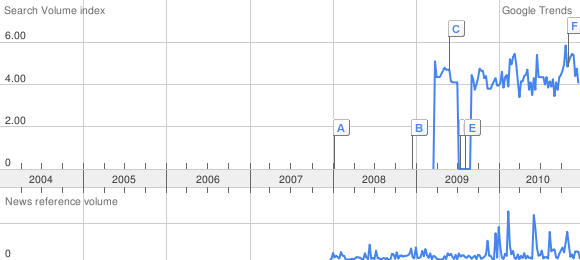

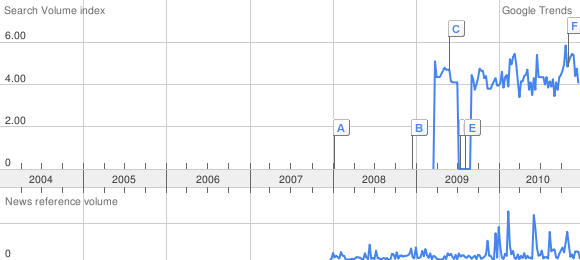

Now let's turn to Google Trends again and see that the first progress in this area began about five years ago, as in the case of multi-touch. Relatively wide popularity came to the technology even more recently, just two years ago.

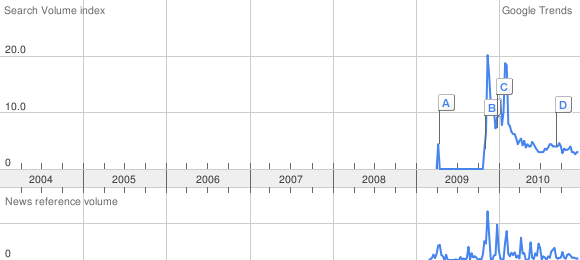

Gesture analyzers

In June 2010, Microsoft introduced Kinect to the world. Interactive systems based on motion recognition had actually appeared a little earlier, and some of them were even integrated with 3D visualization tools. But Kinect opened a new page in the history of interfaces, for two reasons. First, thanks to a large number of sensors and well-designed algorithms, its creators achieved stunning accuracy and versatility of recognition. Second, thanks to PC compatibility and the availability of an SDK, a developer community formed around the technology immediately, and the libfreenect project appeared, whose goal is to develop Kinect drivers for the most common platforms.

Besides Kinect, there is another gesture recognition project that geeks around the world are watching with bated breath. And this project is called...

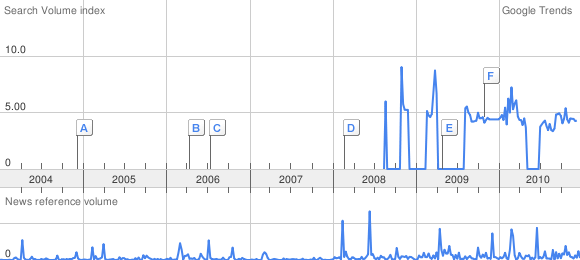

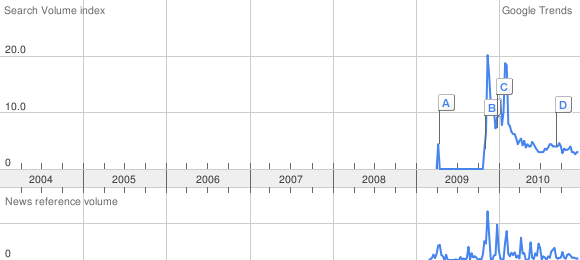

... SixthSense

SixthSense was first presented at the CHI 2009 (Computer-Human Interaction) conference in Boston, after which BBC News wrote a short article about the technology, which, however, went unnoticed by the general public. But Pranav Mistry's exciting TED talk was noticed by many, and a link to it was posted on Habr.

SixthSense combines a large number of devices at once: a mobile computer, a camera, a pocket projector, a mirror, headphones, some kind of clothespin, and funny colored markers on the fingers. And of course there is some cunning software behind it, which is where the most interesting part lies. As a result, the real world and cyberspace interact with each other in incredible ways. You can write a note by hand and stick it on your "desktop", drag a real paper document onto your computer with your finger, or even play a car racing game on an ordinary sheet of paper.

However, this was only a demonstration. Before the technology can actually be put to use, thousands or even millions of lines of code probably still have to be written. Representatives of the MIT Media Lab promised to open the SixthSense code to the developer community, but so far this has not happened. Nevertheless, there seem to be no technical obstacles to implementing this technology, and if you combine it with Kinect, the results would be downright miraculous. At the very least, you would no longer have to wear colored electrical tape on your fingers all the time. And what if you add 3D visualization on top? Mm... but here I am getting carried away, because pocket 3D projectors do not exist yet.

It's hard to believe, but SixthSense is not the most interesting thing that interface developers created for us in the first decade of the 21st century (at least from my subjective point of view). So let's move on.

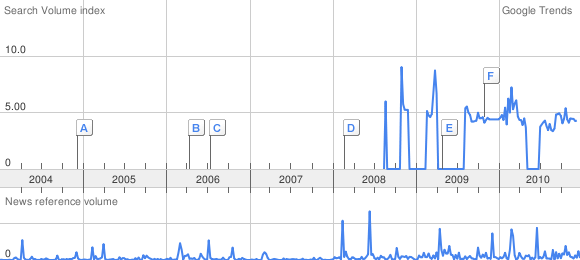

Neurocomputer interface

A neurocomputer interface, or BCI (brain-computer interface), is a system that feeds input into a computer directly, so to speak, from the brain, bypassing the user's hands and other unnecessary peripherals. Simply put, it is control by the power of thought. The most developed and affordable device of this kind, as far as I know, is the Emotiv EPOC (correct me if I am wrong).

The most recent mention of the Emotiv EPOC on Habr dates back to 2008. At the time it was noted with regret that sales of the device had been postponed to 2009. Since then, nothing has been heard about the Emotiv EPOC on Habr. Meanwhile, the device is already on sale. True, so far only in America, but when has that ever stopped Russian geeks? ;) Moreover, this thing has a developer community (albeit a rather nominal one) and six SDK editions, from a lightweight free version to a fancy Enterprise Plus for 7.5 kilobucks. Unfortunately, if you want to debug on a real device and have documentation and access to the API, you will have to pay not the $300 that a regular user pays, but $500 for the Developer Edition. Still, this is not astronomical money, and I hope a brave experimenter will turn up on Habr who will take the chance to tinker with the interface of the future and describe the feat for future generations.

The Emotiv EPOC is not the only commercially available BCI device. There are at least Gamma Sys from g-tec and the Neural Impulse Actuator from OCZ Technology.

Gamma Sys seems to be aimed more at research organizations than at ordinary users. I found no prices or links to distributors on the manufacturer's website; apparently you are expected to send a request for a technical and commercial offer, or something like that.

The Neural Impulse Actuator (NIA) looks more attractive to the end user: you can buy it for only $100, and it even has manuals in Russian. It has no SDK, so for each game you have to wait for official support from the manufacturer (and only under Windows). True, knowledgeable people have figured out that the NIA is an ordinary HID device, so you can write drivers for it yourself. On the one hand this seems to be a plus; on the other hand, as I understand it, this architecture seriously limits the capabilities of the device compared to the EPOC, which is not just a fancy mouse but a full-fledged device for measuring the activity of various parts of the brain.
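To give a feel for what "writing a driver yourself" for a HID device involves, here is a minimal Python sketch of just the decoding step: turning a raw fixed-size report into numbers. The report layout used here (a one-byte report ID followed by four little-endian signed 16-bit samples) is purely an assumption for illustration and is not the real NIA format; `REPORT_FORMAT` and `parse_report` are hypothetical names. Actually reading bytes from the device would require a HID library and is left out.

```python
import struct

# Hypothetical layout, NOT the real NIA format: a 1-byte report ID followed
# by four little-endian signed 16-bit samples (9 bytes in total).
REPORT_FORMAT = "<B4h"
REPORT_SIZE = struct.calcsize(REPORT_FORMAT)


def parse_report(raw: bytes) -> dict:
    """Decode one raw report into a report ID and a list of samples."""
    if len(raw) != REPORT_SIZE:
        raise ValueError(f"expected {REPORT_SIZE} bytes, got {len(raw)}")
    report_id, *samples = struct.unpack(REPORT_FORMAT, raw)
    return {"report_id": report_id, "samples": samples}


if __name__ == "__main__":
    # A made-up report, used only to exercise the parser.
    fake = struct.pack(REPORT_FORMAT, 1, 100, -200, 300, -400)
    print(parse_report(fake))
```

With a real device, the layout would have to be taken from its HID report descriptor or worked out by observation, and only the format string would change.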

CCC-COMBO BREAKER! And now I would like to say a few words that at first glance fall somewhat outside the overall picture. Let me remind you that the article began with the revolution in the field of interfaces, whose signs we have been observing for the last five years or so. As you can see from the above, scientists and engineers have done a great deal to improve the experience of working with digital devices. Everything I wrote earlier concerned mainly new peripheral devices or ways of working with them. Now let's look at a concept such as software usability.

Usability

This topic has also become popular recently, and I have a feeling that attention to it will only grow. By now a huge amount of software has been created, covering almost any user need. So in order to compete successfully with their peers, programs are forced to have a simple, convenient, and aesthetically pleasing user interface.

And now I will return to technology and try to draw general conclusions.

Conclusion

It seems that we already have enough physical devices for the future to arrive. What is missing is the software that would make using them simple and convenient. Of course, this is also a laborious task, probably comparable to developing an operating system (how many years has ReactOS been in the making now?). But where there is a will... Who knows, maybe among the people reading this article there is someone who will start an open-source project to integrate all of these devices with each other and create a 21st Century Computer Interface?
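As a very rough idea of where such an integration project might start, here is a minimal Python sketch of a device-independent event layer: every device adapter (multi-touch, gesture, BCI) would translate its input into one common event type, and applications would subscribe to actions rather than to devices. All names here (`InputEvent`, `InputHub`, the source and action labels) are hypothetical, and real adapters for real hardware are not shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class InputEvent:
    """A device-independent event: who sent it, what happened, extra data."""
    source: str    # hypothetical labels, e.g. "multitouch", "gesture", "bci"
    action: str    # e.g. "move", "close"
    payload: dict


class InputHub:
    """Routes events from any device adapter to handlers registered per action."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[InputEvent], None]]] = {}

    def on(self, action: str, handler: Callable[[InputEvent], None]) -> None:
        """Register a handler for the given action name."""
        self._handlers.setdefault(action, []).append(handler)

    def emit(self, event: InputEvent) -> int:
        """Deliver the event and return how many handlers received it."""
        handlers = self._handlers.get(event.action, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    hub = InputHub()
    closed = []
    hub.on("close", lambda e: closed.append((e.source, e.payload["window"])))
    # The same "close" action can come from thought or from a hand gesture.
    hub.emit(InputEvent("bci", "close", {"window": "popup"}))
    hub.emit(InputEvent("gesture", "close", {"window": "browser"}))
    print(closed)
```

The point of the design is that the application code never needs to know which device produced the action, so new devices can be added by writing only a new adapter.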

In conclusion, let's fantasize a little. Imagine: you are sitting in a chair, but there is no computer in front of you (more precisely, you do not see it). Instead, on your table (a regular table from Ikea) you see virtual objects: text documents, windows with games, three-dimensional models. You can move them around the table with your hands, or hang them in the air above it. A pop-up window appears and then closes: you dismissed it with the power of thought. You open a desk drawer with your hand, and a browser with Habr jumps out of it. The pages scroll as you read them.

And remember the guys who are making a cartoon about Gypsy? Their second (well, third) cartoon will take them six months. Compare: instead of a 3D editor, an audio editor, and editing software, they now have a single thought grabber. They don't even need to render 3D models. They just sit and imagine the cartoon in detail. Of course, this also has its own subtleties and its own production process... but productivity is still much higher.

Today such fantasies seem distant and irrelevant. But remember how you once discovered that everyone around you, yourself included, was using mobile phones and the Internet without finding anything surprising in it? That is what the future is like. It arrives unnoticed.
