Latent semantic analysis

How to find texts that are similar in meaning? What algorithms are there for searching texts of the same subject? - Questions regularly arising in various programming forums. Today I’ll talk about one of the approaches that the search giants are actively using and which sounds like a mantra for SEO aka search engine optimizers. This approach is called latent semantic analysis (LSA), aka latent semantic indexing (LSI)

Suppose you are faced with the task of writing an algorithm that can distinguish news about pop stars from news on the economy. The first thing that comes to mind is to choose words that are found exclusively in articles of each type and use them for classification. The obvious problem with this approach is how to list all the possible words and what to do if there are words from several classes in the article. Adding to the complexity are homonyms . Those. words with many meanings. For example, the word “banks” in one context can mean glass vessels, and in another context it can be financial institutions.

Latent-semantic analysis maps documents and individual words into the so-called "semantic space", in which all further comparisons are made. The following assumptions are made:

1) Documents are just a bunch of words. Word order in documents is ignored. The only important thing is how many times this or that word appears in the document.

2) The semantic meaning of a document is determined by the set of words that usually go together. For example, in stock exchanges, the words are often found: “fund”, “stock”, “dollar”

3) Each word has a unique meaning. This, of course, is a great simplification, but it is it that makes the problem solvable.

For an example, I selected some headlines from various news. They were not chosen by chance, the fact is that for random sampling a very large amount of data would be required, which would greatly complicate the further presentation. So, a few headlines have been selected.

First of all, the so-called stop symbols were excluded from these headers. These are words that are found in every text and do not carry a semantic load, these are, first of all, all conjunctions, particles, prepositions and many other words. A complete list of used stop symbols can be found in my previous article on stop symbols.

Next, a stamming operation was performed. It is not mandatory, some sources claim that good results are obtained without it. Indeed, if the set of texts is large enough, then this step can be omitted. If the texts are in English, then this step can also be ignored, due to the fact that the number of variations of a particular word form in English is significantly less than in Russian. In our case, skipping this step is not worth it. this will lead to significant degradation of the results. For Stemming I used algorithm Porter .

Then the words occurring in a single copy were excluded. This is also an optional step; it does not affect the final result, but greatly simplifies mathematical calculations. As a result, we are left with the so-called index-linked words are in bold:

1. British polyC tions knows the whereabouts of the founder of I the WikiLeaks

2. The Court is, the United States begins the process of Fr. Yves Russians, send spam

3. Ceremony uw awarded Ia Nobel th prem uu world boycott 19 countries

4. In the United K uuArestova Mr. founder of s website Wikileaks Julian Assange

5. Ukraine ignores the ceremony uw awarded Ia Nobel th prem ii

6. The Swedish court has refused to hear an appeal of the founder of I Wikileaks

7. NATO and the United States have developed plans for the defense of countries Baltic Fr. Yves Russia

8. Police Ia United K uu found founder of I the WikiLeaks , but not Arestova la

9. In Stockholm and Oslo will be held today awarded s Nobel their prem s

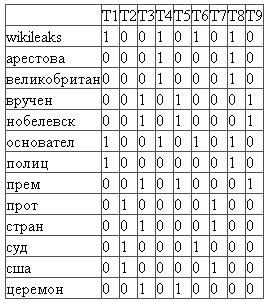

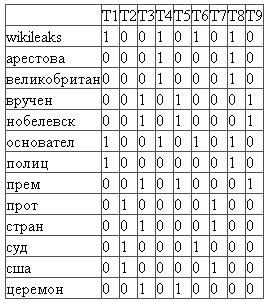

The first step is to compile a frequency matrix of indexed words. In this matrix, rows correspond to indexed words, and columns correspond to documents. Each cell of the matrix indicates how many times the word appears in the corresponding document.

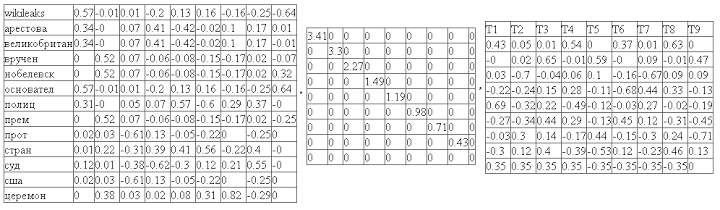

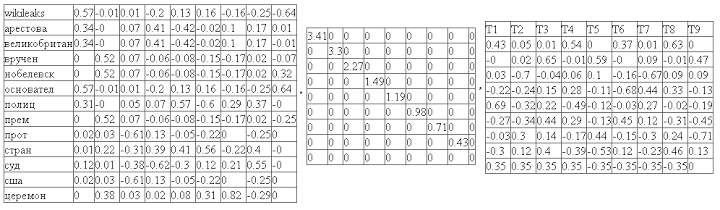

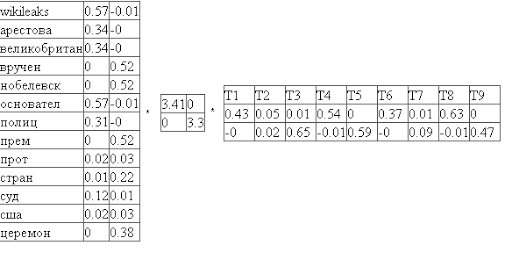

The next step is a singular decomposition of the resulting matrix. A singular decomposition is a mathematical operation that decomposes a matrix into three components. Those. we represent the original matrix M in the form:

M = U * W * V t

where U and V t are orthogonal matrices, and W is the diagonal matrix. Moreover, the diagonal elements of the matrix W are ordered in decreasing order. The diagonal elements of the matrix W are called singular numbers.

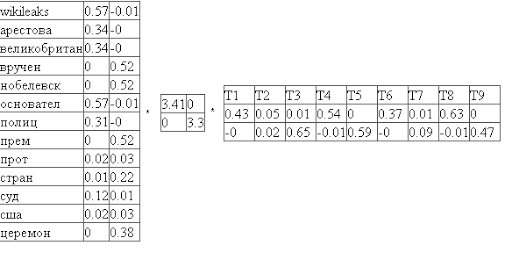

The beauty of the singular decomposition is that it highlights the key components of the matrix, allowing you to ignore the noise. According to the simple rules for the product of matrices, it can be seen that the columns and rows corresponding to smaller singular values make the least contribution to the final product. For example, we can discard the last columns of the matrix U and the last rows of the matrix V ^ t, leaving only the first 2. It is important that this ensures the optimality of the resulting product. An expansion of this kind is called a two-dimensional singular expansion:

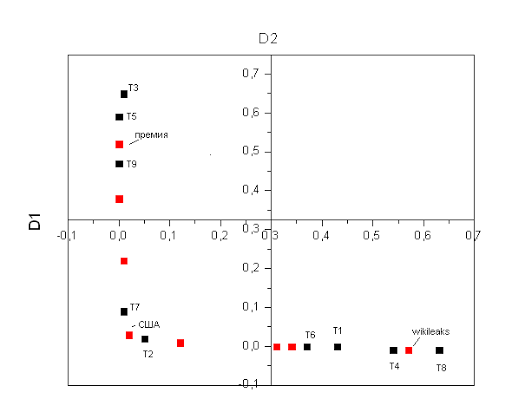

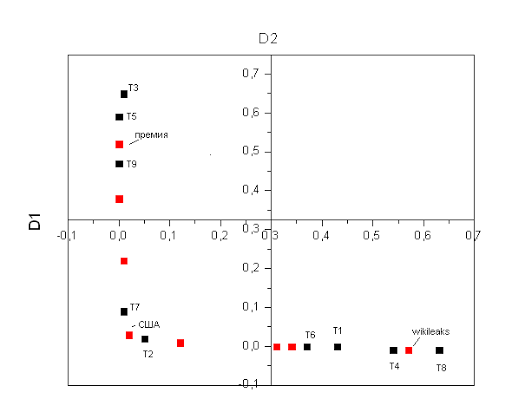

Let's now mark on the graph the points corresponding to individual texts and words, we get such an interesting picture:

This graph shows that the articles form three independent groups, the first group of articles is located next to the word "wikileaks", and indeed, if we look at the names of these articles, it becomes clear that they are related to wikileaks. Another group of articles is formed around the word "prize", and indeed they are discussing the Nobel Prize.

In practice, of course, the number of groups will be much larger, the space will not be two-dimensional but multi-dimensional, but the idea itself remains the same. We can determine the locations of words and articles in our space and use this information to, for example, determine the subject of an article.

It is easy to notice that the overwhelming number of cells in the frequency matrix of indexed words created in the first step contain zeros. The matrix is very sparse and this property can be used to improve performance and memory consumption while creating a more complex implementation.

In our case, the texts were about the same length; in real situations, the frequency matrix should be normalized. Standard way to normalize the TF-IDF matrix.

We used two-dimensional decomposition of SVD-2, in real examples, the dimension can be several hundred or more. The choice of dimension is determined by a specific task, but the general rule is this: the smaller the dimension, the less semantic groups you can find, the larger the dimension, the greater the influence of noise.

To write the article, we used the Java library for working with Jama matrices . In addition, the SVD function is implemented in well-known mathematical packages like Mathcad, there are libraries for Python and C ++.

Suppose you are faced with the task of writing an algorithm that can distinguish news about pop stars from news on the economy. The first thing that comes to mind is to choose words that are found exclusively in articles of each type and use them for classification. The obvious problem with this approach is how to list all the possible words and what to do if there are words from several classes in the article. Adding to the complexity are homonyms . Those. words with many meanings. For example, the word “banks” in one context can mean glass vessels, and in another context it can be financial institutions.

Latent-semantic analysis maps documents and individual words into the so-called "semantic space", in which all further comparisons are made. The following assumptions are made:

1) Documents are just a bunch of words. Word order in documents is ignored. The only important thing is how many times this or that word appears in the document.

2) The semantic meaning of a document is determined by the set of words that usually go together. For example, in stock exchanges, the words are often found: “fund”, “stock”, “dollar”

3) Each word has a unique meaning. This, of course, is a great simplification, but it is it that makes the problem solvable.

Example

For an example, I selected some headlines from various news. They were not chosen by chance, the fact is that for random sampling a very large amount of data would be required, which would greatly complicate the further presentation. So, a few headlines have been selected.

First of all, the so-called stop symbols were excluded from these headers. These are words that are found in every text and do not carry a semantic load, these are, first of all, all conjunctions, particles, prepositions and many other words. A complete list of used stop symbols can be found in my previous article on stop symbols.

Next, a stamming operation was performed. It is not mandatory, some sources claim that good results are obtained without it. Indeed, if the set of texts is large enough, then this step can be omitted. If the texts are in English, then this step can also be ignored, due to the fact that the number of variations of a particular word form in English is significantly less than in Russian. In our case, skipping this step is not worth it. this will lead to significant degradation of the results. For Stemming I used algorithm Porter .

Then the words occurring in a single copy were excluded. This is also an optional step; it does not affect the final result, but greatly simplifies mathematical calculations. As a result, we are left with the so-called index-linked words are in bold:

1. British polyC tions knows the whereabouts of the founder of I the WikiLeaks

2. The Court is, the United States begins the process of Fr. Yves Russians, send spam

3. Ceremony uw awarded Ia Nobel th prem uu world boycott 19 countries

4. In the United K uuArestova Mr. founder of s website Wikileaks Julian Assange

5. Ukraine ignores the ceremony uw awarded Ia Nobel th prem ii

6. The Swedish court has refused to hear an appeal of the founder of I Wikileaks

7. NATO and the United States have developed plans for the defense of countries Baltic Fr. Yves Russia

8. Police Ia United K uu found founder of I the WikiLeaks , but not Arestova la

9. In Stockholm and Oslo will be held today awarded s Nobel their prem s

Latent semantic analysis

The first step is to compile a frequency matrix of indexed words. In this matrix, rows correspond to indexed words, and columns correspond to documents. Each cell of the matrix indicates how many times the word appears in the corresponding document.

The next step is a singular decomposition of the resulting matrix. A singular decomposition is a mathematical operation that decomposes a matrix into three components. Those. we represent the original matrix M in the form:

M = U * W * V t

where U and V t are orthogonal matrices, and W is the diagonal matrix. Moreover, the diagonal elements of the matrix W are ordered in decreasing order. The diagonal elements of the matrix W are called singular numbers.

The beauty of the singular decomposition is that it highlights the key components of the matrix, allowing you to ignore the noise. According to the simple rules for the product of matrices, it can be seen that the columns and rows corresponding to smaller singular values make the least contribution to the final product. For example, we can discard the last columns of the matrix U and the last rows of the matrix V ^ t, leaving only the first 2. It is important that this ensures the optimality of the resulting product. An expansion of this kind is called a two-dimensional singular expansion:

Let's now mark on the graph the points corresponding to individual texts and words, we get such an interesting picture:

This graph shows that the articles form three independent groups, the first group of articles is located next to the word "wikileaks", and indeed, if we look at the names of these articles, it becomes clear that they are related to wikileaks. Another group of articles is formed around the word "prize", and indeed they are discussing the Nobel Prize.

In practice, of course, the number of groups will be much larger, the space will not be two-dimensional but multi-dimensional, but the idea itself remains the same. We can determine the locations of words and articles in our space and use this information to, for example, determine the subject of an article.

Algorithm Improvements

It is easy to notice that the overwhelming number of cells in the frequency matrix of indexed words created in the first step contain zeros. The matrix is very sparse and this property can be used to improve performance and memory consumption while creating a more complex implementation.

In our case, the texts were about the same length; in real situations, the frequency matrix should be normalized. Standard way to normalize the TF-IDF matrix.

We used two-dimensional decomposition of SVD-2, in real examples, the dimension can be several hundred or more. The choice of dimension is determined by a specific task, but the general rule is this: the smaller the dimension, the less semantic groups you can find, the larger the dimension, the greater the influence of noise.

Remarks

To write the article, we used the Java library for working with Jama matrices . In addition, the SVD function is implemented in well-known mathematical packages like Mathcad, there are libraries for Python and C ++.