Intel Omni-Path. Data is everywhere with us.

Mass clustering of everything and everyone requires dedicated data exchange technologies, characterized above all by low latency and high speed. The best-known and most widely used standard in this area is InfiniBand. Since its introduction in 1999, InfiniBand has grown in speed from 2 to 100 Gb/s. Intel is a member of the association that develops InfiniBand and manufactures InfiniBand equipment. This, however, does not stop the company from independently promoting another standard for high-speed switched networks: Intel Omni-Path. The name has come up several times in our blog posts, and each time readers asked for details. Now we have the opportunity to provide them.

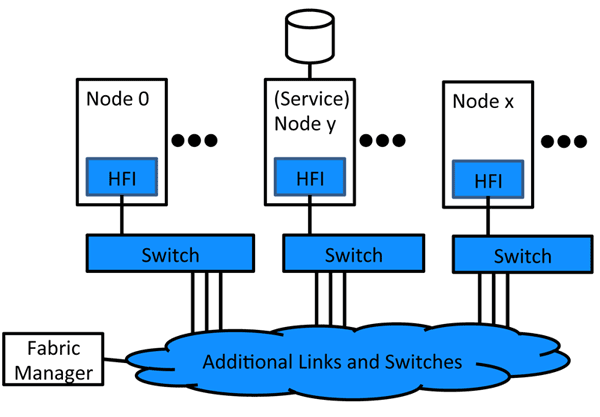

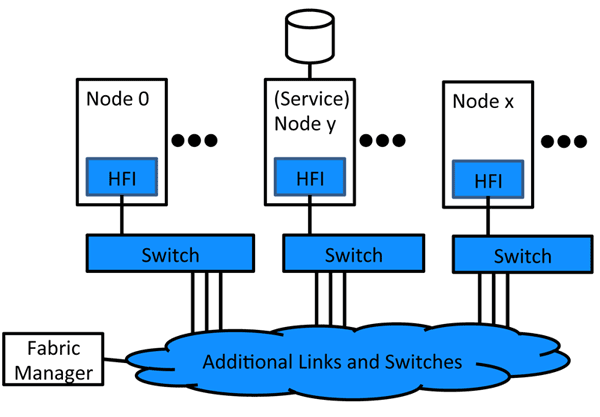

Intel Omni-Path Architecture

Intel Omni-Path has an architecture typical of this class of networks. Its infrastructure consists of network adapters (HFI, Host Fabric Interface) that connect compute and management nodes, switches that let you build the required topology and port count, and management tools for controlling and monitoring the entire fabric.

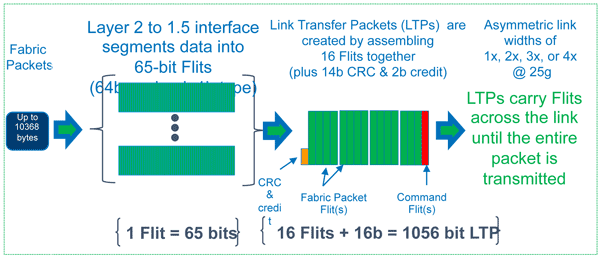

From a networking standpoint, Omni-Path maps quite closely onto the OSI reference model, with one small nuance: the protocol description introduces a "layer 1.5" (LTP, Link Transfer Protocol), which is responsible for reliable delivery of layer 2 objects, flow control, and link control. Layer 2 handles addressing, switching, and resource allocation.

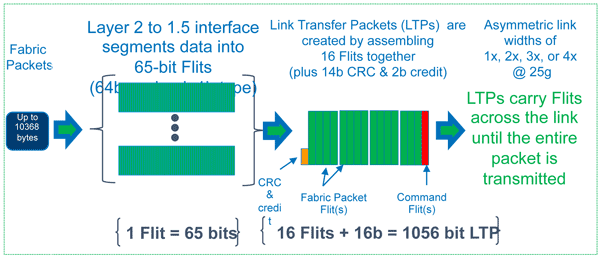

On the way down from layer 2 to layer 1.5, data is cut into 64-bit chunks, a type bit is added to each chunk, and the result is called a flit. The unit LTP operates on consists of 16 flits, 14 CRC bits, and two reporting bits. One or more of the flits can be a command flit carrying link-control protocol information. When a specific LTP (Link Transfer Packet) has to be retransmitted, the link sends a null LTP with the necessary data in its command portion. The reporting bits of four consecutive LTPs are combined into an 8-bit report delivered in a command flit.
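To make these sizes concrete, here is a minimal Python sketch that cuts a payload into 65-bit flits, packs 16 of them with a CRC and two reporting bits into one LTP, and combines the reporting bits of four LTPs into an 8-bit value. It only mirrors the bit counts given in the text: the CRC polynomial, the type-bit value used for data flits, and the bit ordering are placeholders chosen for illustration, not values taken from the Omni-Path specification.

```python
from __future__ import annotations

# Sizes taken from the description above.
FLIT_DATA_BITS = 64                 # payload bits per flit
FLIT_BITS = FLIT_DATA_BITS + 1      # plus one flit-type bit
FLITS_PER_LTP = 16
CRC_BITS = 14
REPORT_BITS = 2
LTP_BITS = FLITS_PER_LTP * FLIT_BITS + CRC_BITS + REPORT_BITS  # 1056 bits

DATA_FLIT_TYPE = 0                # assumption: which type-bit value means "data" is illustrative
HYPOTHETICAL_CRC14_POLY = 0x2805  # assumption: placeholder, NOT the polynomial Omni-Path uses


def crc14(value: int, nbits: int, poly: int = HYPOTHETICAL_CRC14_POLY) -> int:
    """Plain bitwise CRC over the low `nbits` of `value`, most significant bit first."""
    mask = (1 << CRC_BITS) - 1
    crc = 0
    for i in range(nbits - 1, -1, -1):
        feedback = ((value >> i) & 1) ^ ((crc >> (CRC_BITS - 1)) & 1)
        crc = (crc << 1) & mask
        if feedback:
            crc ^= poly
    return crc


def to_flits(payload: bytes) -> list[int]:
    """Cut the payload into 64-bit chunks (zero-padding the tail) and prepend a type bit."""
    padded = payload + b"\x00" * (-len(payload) % 8)
    return [
        (DATA_FLIT_TYPE << FLIT_DATA_BITS) | int.from_bytes(padded[off:off + 8], "big")
        for off in range(0, len(padded), 8)
    ]


def build_ltp(flits: list[int], report: int) -> int:
    """Pack 16 flits, a 14-bit CRC over them and 2 reporting bits into one integer."""
    if len(flits) != FLITS_PER_LTP:
        raise ValueError("an LTP carries exactly 16 flits")
    body = 0
    for flit in flits:
        body = (body << FLIT_BITS) | flit
    crc = crc14(body, FLITS_PER_LTP * FLIT_BITS)
    return (((body << CRC_BITS) | crc) << REPORT_BITS) | (report & 0b11)


def combine_reports(reports: list[int]) -> int:
    """Concatenate the 2-bit reporting fields of 4 consecutive LTPs into one 8-bit report.
    Assumption: the earliest LTP lands in the most significant bits."""
    if len(reports) != 4:
        raise ValueError("a report spans exactly 4 LTPs")
    value = 0
    for r in reports:
        value = (value << REPORT_BITS) | (r & 0b11)
    return value


ltp = build_ltp(to_flits(bytes(range(128))), report=0b10)  # 128 bytes -> 16 flits
print(LTP_BITS, ltp.bit_length() <= LTP_BITS)               # 1056 True
print(f"payload efficiency: {FLITS_PER_LTP * FLIT_DATA_BITS / LTP_BITS:.1%}")  # 97.0%
print(f"{combine_reports([0b01, 0b10, 0b11, 0b00]):08b}")   # 01101100
```

The arithmetic behind it: 16 × 65 + 14 + 2 = 1056 bits per LTP, of which 1024 are payload, so link-level framing costs roughly 3% of the wire.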

Working with such small units of data makes it possible to control transmission latency and minimize its variation, and it provides the basis for QoS mechanisms. Traffic is prioritized using so-called virtual lanes (Virtual Lanes). Fabric endpoints are addressed with 24-bit identifiers.
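The latency argument can be illustrated with a little arithmetic. The sketch below assumes a 100 Gb/s link and ignores encoding overhead; it compares how long a single 65-bit flit occupies the wire with a hypothetical 10 KiB bulk packet, shows how a generic weighted round-robin arbiter (our stand-in, not Omni-Path's actual virtual lane arbitration) interleaves flits from two lanes, and computes how many distinct values a 24-bit identifier allows.

```python
from __future__ import annotations

from collections import deque

LINK_RATE_BPS = 100e9  # assumption: 100 Gb/s, ignoring line-encoding and framing overhead
FLIT_BITS = 65         # 64 data bits + 1 type bit, as described above
ADDRESS_BITS = 24      # width of a fabric endpoint identifier


def serialization_ns(bits: int, rate_bps: float = LINK_RATE_BPS) -> float:
    """Time to clock `bits` onto the wire, in nanoseconds."""
    return bits / rate_bps * 1e9


def wrr_flit_schedule(queues: dict[int, deque], weights: dict[int, int]) -> list[tuple[int, str]]:
    """Weighted round robin across virtual lanes, granting one flit at a time.

    A generic textbook arbiter used for illustration; it is not Omni-Path's
    actual VL arbitration algorithm. Consumes the queues it is given.
    """
    if any(weights.get(vl, 1) < 1 for vl in queues):
        raise ValueError("every lane needs a weight of at least 1")
    order = []
    while any(queues.values()):
        for vl in sorted(queues):
            for _ in range(weights.get(vl, 1)):
                if not queues[vl]:
                    break
                order.append((vl, queues[vl].popleft()))
    return order


# With flit-level interleaving, urgent traffic waits on the order of one flit time...
print(f"{serialization_ns(FLIT_BITS):.2f} ns")      # 0.65 ns
# ...rather than for a whole (hypothetical) 10 KiB bulk packet to finish.
print(f"{serialization_ns(10 * 1024 * 8):.1f} ns")  # 819.2 ns
print(2 ** ADDRESS_BITS)                            # 16777216 distinct identifiers

lanes = {0: deque(["bulk0", "bulk1", "bulk2"]), 1: deque(["urgent0", "urgent1"])}
print(wrr_flit_schedule(lanes, weights={0: 1, 1: 2}))
# [(0, 'bulk0'), (1, 'urgent0'), (1, 'urgent1'), (0, 'bulk1'), (0, 'bulk2')]
```

Because arbitration happens per flit rather than per packet, the urgent lane gets onto the wire after a single bulk flit instead of waiting behind the entire bulk transfer.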

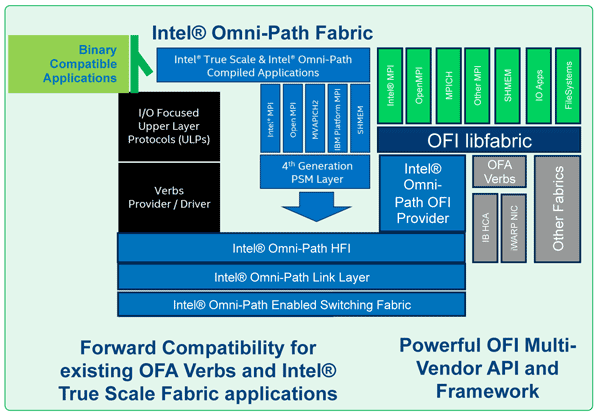

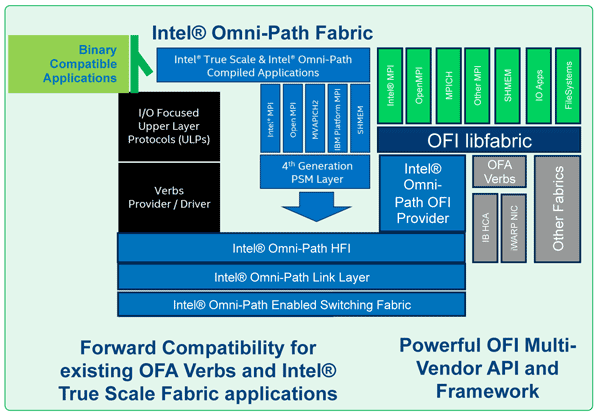

The fourth and higher layers integrate Omni-Path into the required software environment. Performance Scaled Messaging (PSM) is an API and corresponding layer 4 protocol designed for HPC (High Performance Computing) workloads. Open Fabrics Interface (OFI) is a general-purpose framework and programming interface serving a wide variety of needs and protocols. OpenFabrics Alliance verbs is an API and corresponding layer 4 protocol for implementing RDMA (Remote Direct Memory Access).

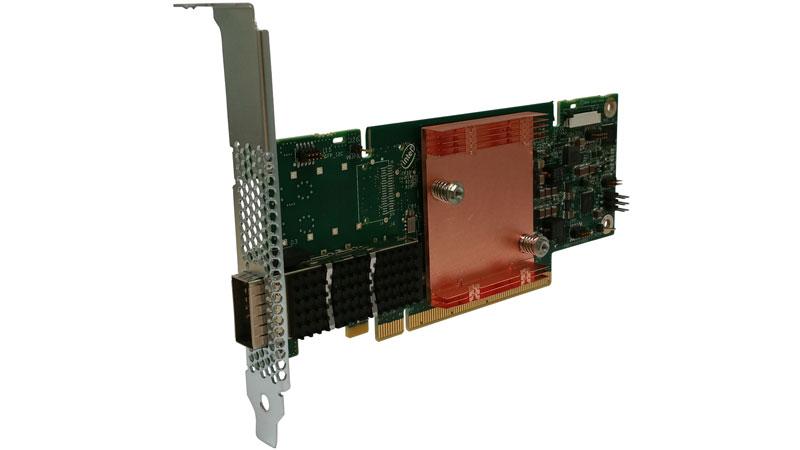

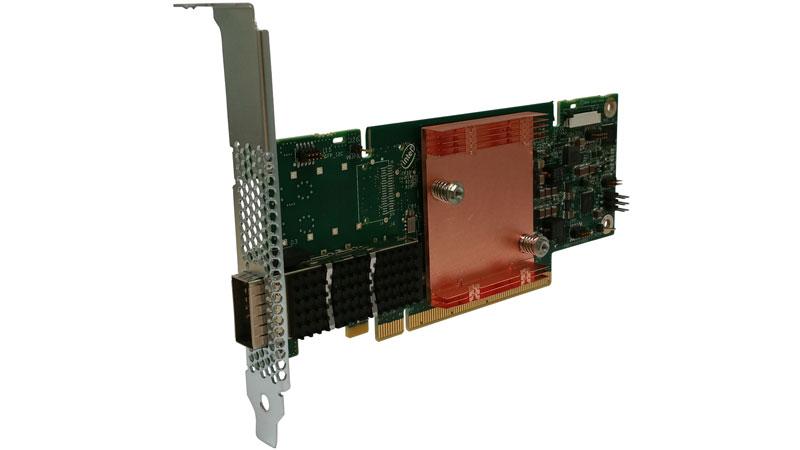

Omni-Path Equipment

Intel Omni-Path exists not only as pretty diagrams. In 2015-16, Intel released the full range of devices needed to put it into practice. First come the node adapters (HFIs). They are available in two versions: a PCI Express x8 card (maximum data rate 56 Gb/s) and an x16 card (maximum data rate 100 Gb/s). Both use a QSFP28 connector. Intel also produces QSFP cables in various lengths, both passive copper and active optical.
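Why the x8 card tops out well below 100 Gb/s follows from PCIe bandwidth. Assuming the HFIs are PCIe 3.0 devices (8 GT/s per lane, 128b/130b encoding), a quick calculation gives the per-direction ceiling of each slot width:

```python
PCIE3_GT_PER_LANE = 8.0     # PCIe 3.0 signalling rate per lane, GT/s
PCIE3_ENCODING = 128 / 130  # PCIe 3.0 128b/130b line-encoding efficiency


def pcie3_ceiling_gbps(lanes: int) -> float:
    """Per-direction bit rate after line encoding, before TLP/DLLP protocol overhead."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


for lanes, quoted_gbps in ((8, 56), (16, 100)):
    ceiling = pcie3_ceiling_gbps(lanes)
    print(f"x{lanes}: ceiling {ceiling:.1f} Gb/s, quoted {quoted_gbps} Gb/s, "
          f"fits 100G: {ceiling >= 100}")
# x8: ceiling 63.0 Gb/s, quoted 56 Gb/s, fits 100G: False
# x16: ceiling 126.0 Gb/s, quoted 100 Gb/s, fits 100G: True
```

An x8 slot cannot carry 100 Gb/s at all, while x16 leaves headroom. The gap between the roughly 63 Gb/s x8 ceiling and the quoted 56 Gb/s is plausibly PCIe packet overhead, though that is our assumption rather than a figure from Intel.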

To build the required Omni-Path network topology, Intel offers two types of switches. The Intel Omni-Path Edge Switch 100 Series comes in models with 24 and 48 100G QSFP28 ports. Judging by the published specifications, these switches deliver true full-duplex 100G to every port without oversubscription. Two modular Intel Omni-Path Director Class Switches with far more ports (192 and 768) and substantial oversubscription (utilization of no more than 25%) were also announced.
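To see what non-blocking edge switches allow in practice, here is a sketch that sizes a two-tier leaf-spine (fat-tree) fabric. It assumes the fabric is built only from identical k-port switches, with exactly one link between each leaf and each spine; the function name and the topology choice are ours for illustration, not Intel's sizing guidance.

```python
from typing import Optional


def two_tier_fat_tree(k: int, down: Optional[int] = None) -> dict:
    """Size a leaf-spine (two-tier fat-tree) fabric built from identical k-port switches.

    Each leaf uses `down` ports for hosts and the remaining `up` ports as single
    uplinks, one to each spine; each spine port serves one leaf, so there are k leaves.
    `down` defaults to k // 2, which gives a non-blocking (1:1) fabric.
    """
    if down is None:
        down = k // 2
    if not 0 < down < k:
        raise ValueError("each leaf needs at least one host port and one uplink")
    up = k - down
    return {
        "hosts": k * down,
        "leaves": k,
        "spines": up,
        "oversubscription": down / up,  # host bandwidth : uplink bandwidth per leaf
    }


print(two_tier_fat_tree(48))           # {'hosts': 1152, 'leaves': 48, 'spines': 24, 'oversubscription': 1.0}
print(two_tier_fat_tree(48, down=32))  # {'hosts': 1536, 'leaves': 48, 'spines': 16, 'oversubscription': 2.0}
print(two_tier_fat_tree(24)["hosts"])  # 288
```

With 48-port switches the non-blocking limit is k²/2 = 1152 hosts; shifting ports from uplinks to hosts adds capacity only at the cost of oversubscription, which is the same trade-off the Director Class figures reflect.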

The company plans to integrate Omni-Path controllers into Intel Xeon processors and to make extensive use of the technology in Xeon Phi. We look forward to further news in this area.