JavaScript, Node, Puppeteer: Chrome Automation and Web Scraping

The `puppeteer` library for Node.js lets you automate the Google Chrome browser.

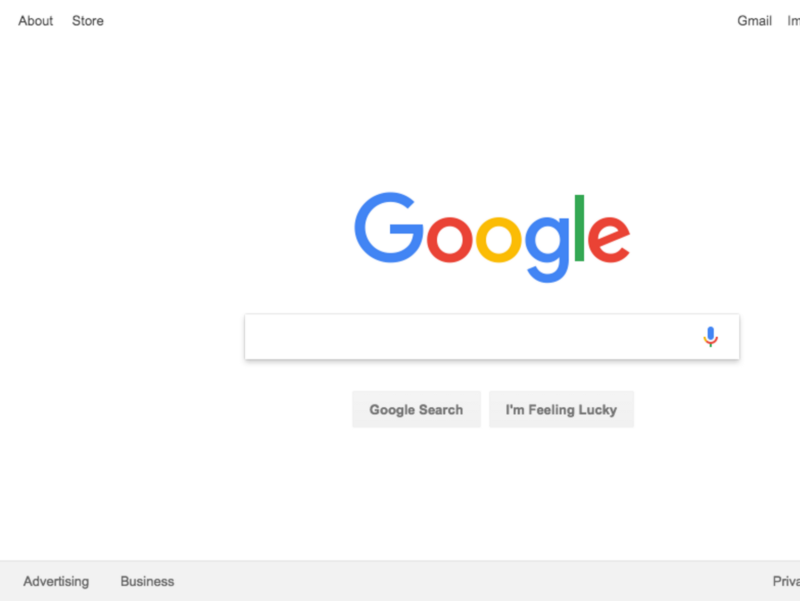

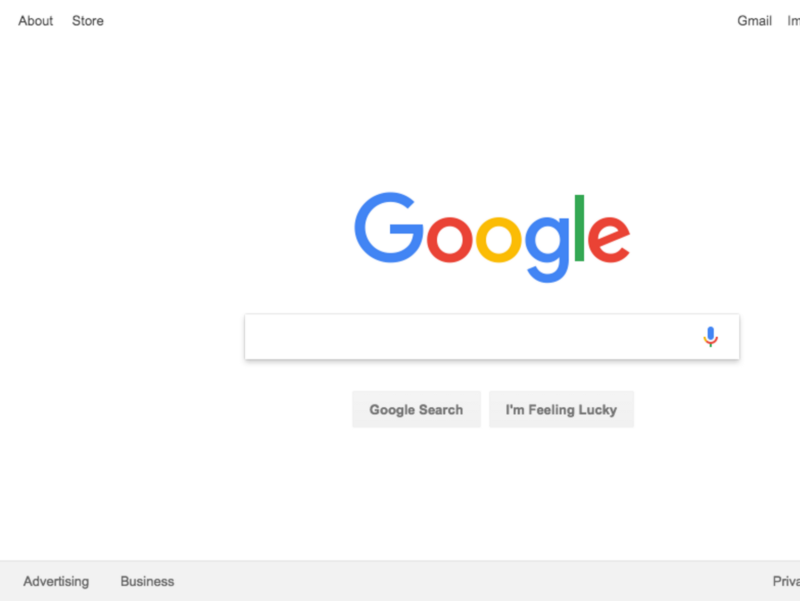

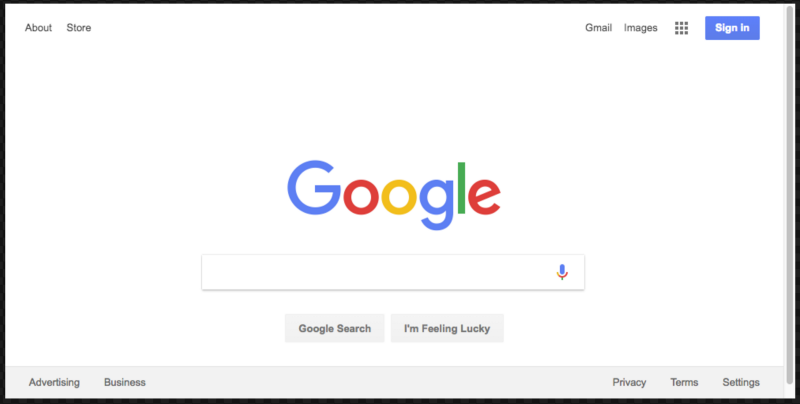

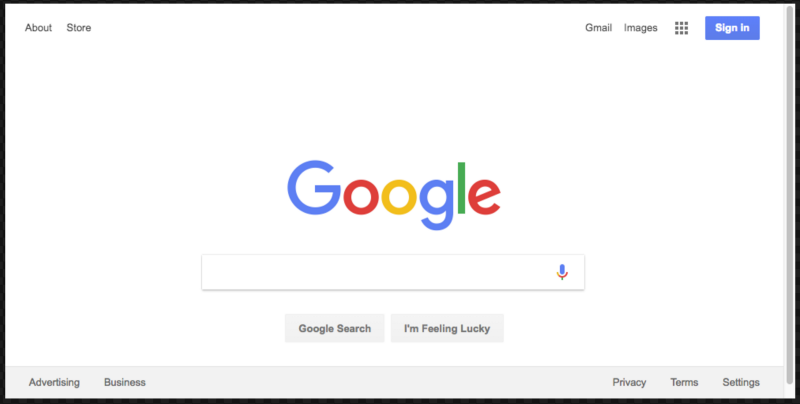

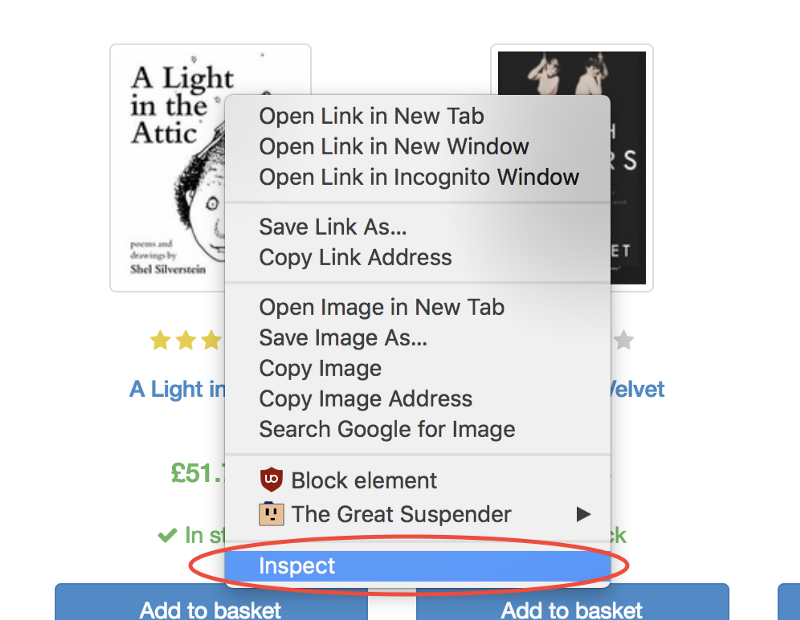

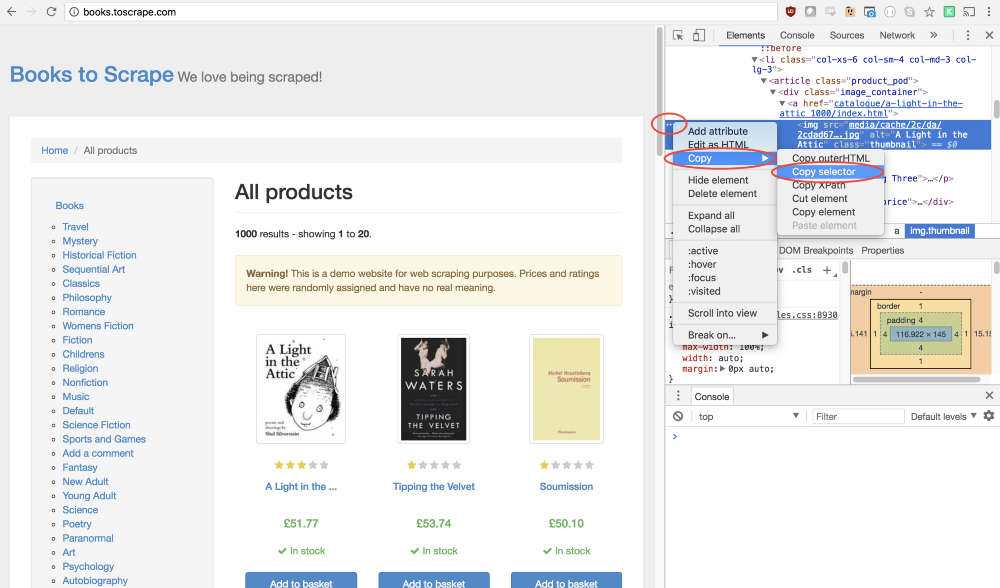

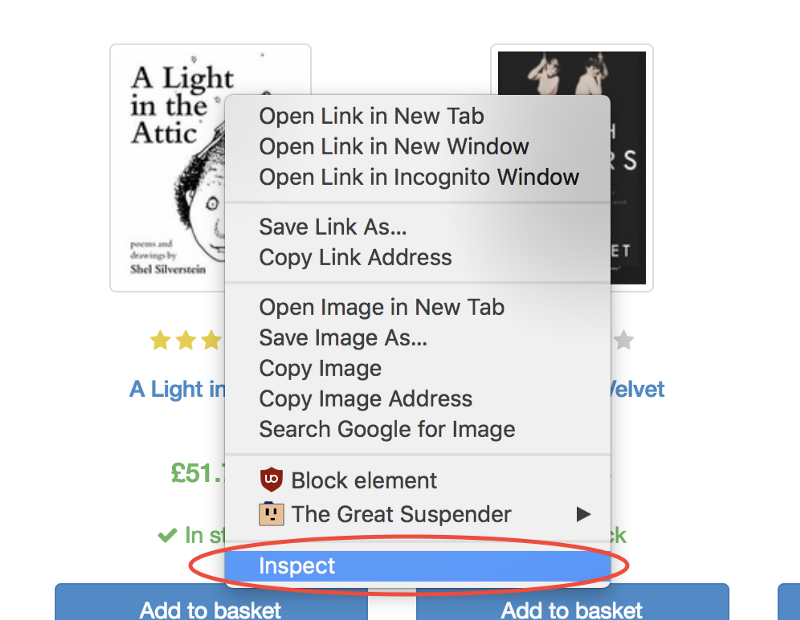

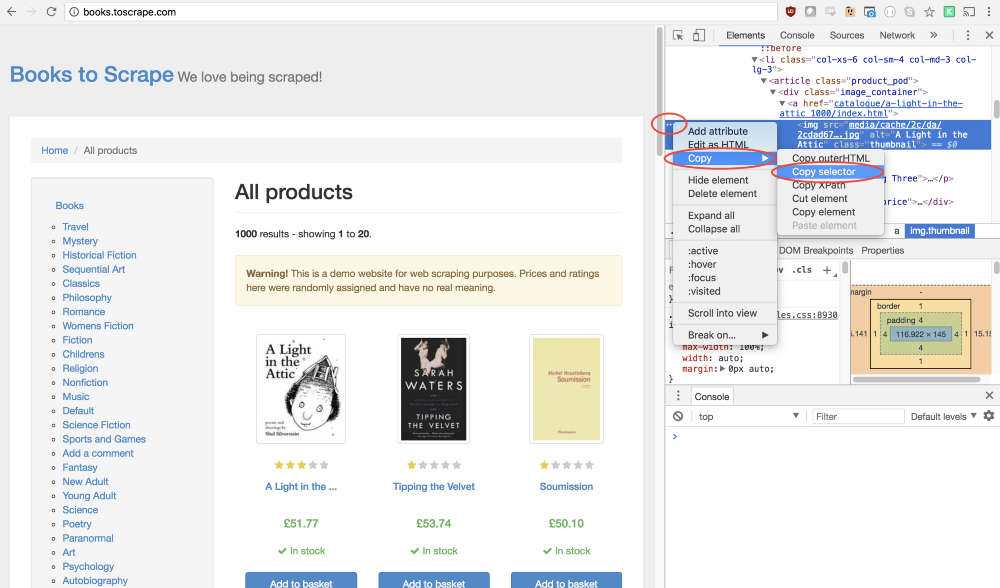

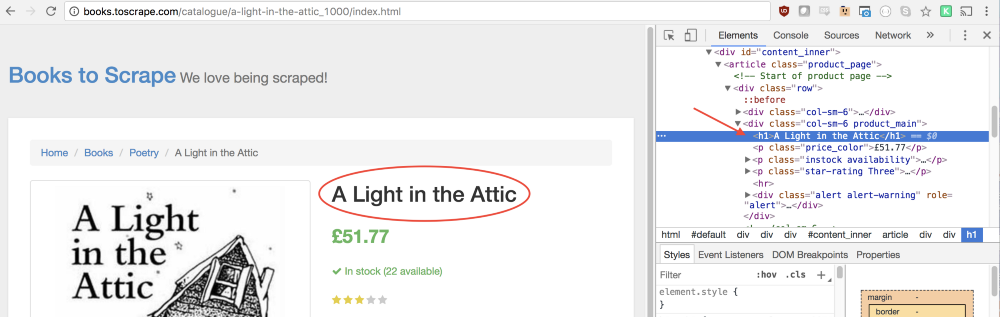

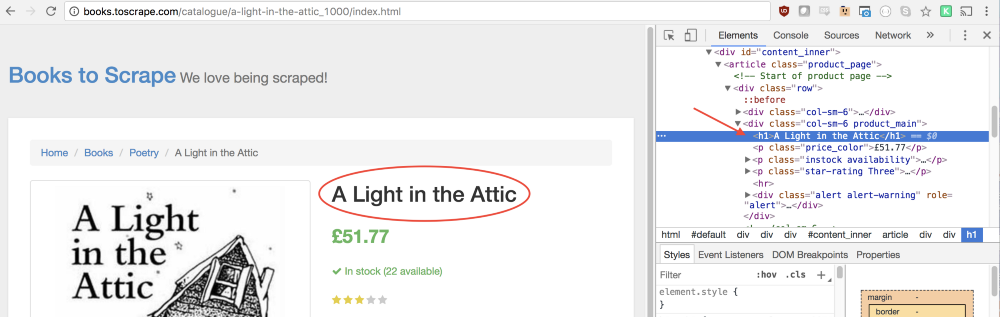

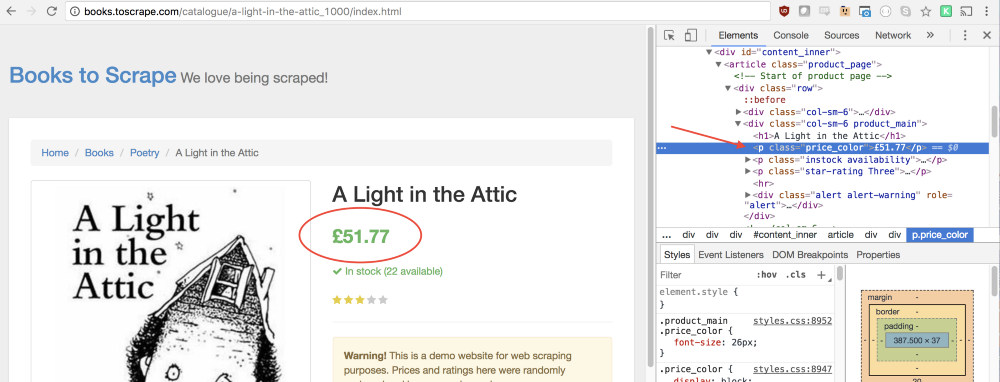

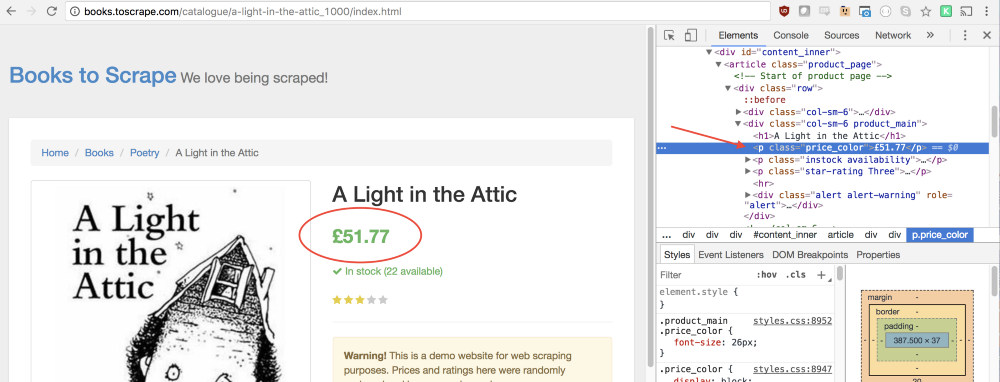

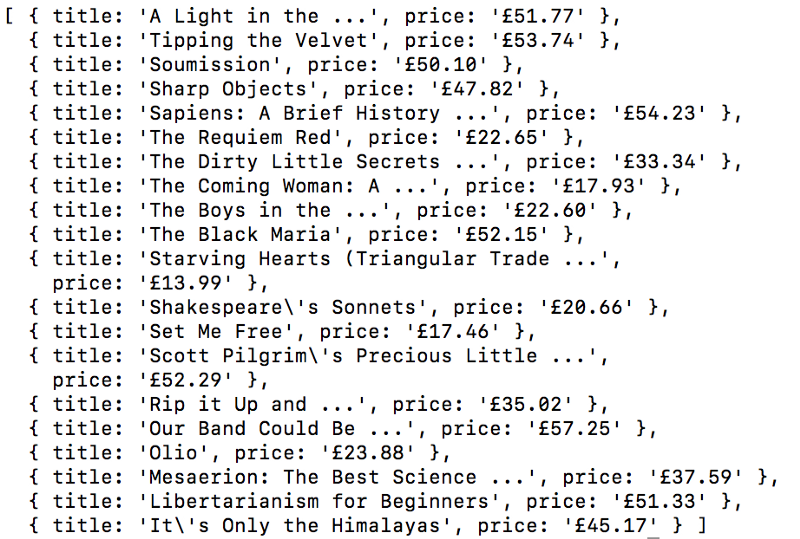

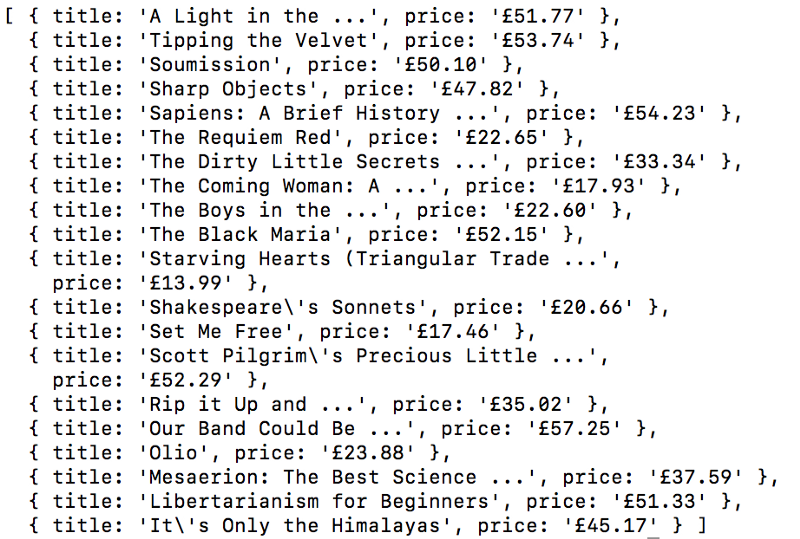

In particular, `puppeteer` makes it possible to write programs that collect data from websites automatically: so-called web scrapers, which simulate the actions of an ordinary user. In such scenarios you can use a browser without a user interface, known as “Headless Chrome.” With `puppeteer` you can also control a browser running in normal mode, which is especially useful when debugging.

Today we’ll talk about creating a web scraper based on Node.js and `puppeteer`. The author strove to make the article interesting to the widest possible audience of programmers, so both web developers who already have some experience with `puppeteer` and those meeting the idea of “Headless Chrome” for the first time should benefit from it.

Preliminary preparation

Before you begin, you will need Node 8+. You can download it from the Node.js website by selecting the current version. If you have never worked with Node before, take a training course or look for other materials; there are plenty of them on the Web.
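If you are not sure which version of Node is installed, a small check like the one below can help. This is only a sketch: `isNodeAtLeast()` is a hypothetical helper written for this illustration, and the threshold of 8 simply mirrors the requirement stated above.

```javascript
// Hypothetical helper: returns true when a Node version string
// such as "18.17.0" has a major version of at least `minMajor`.
function isNodeAtLeast(version, minMajor) {
  const major = parseInt(version.split('.')[0], 10);
  return major >= minMajor;
}

// process.versions.node holds the running version without the leading "v".
if (!isNodeAtLeast(process.versions.node, 8)) {
  console.error('Node 8 or newer is required, found ' + process.versions.node);
}
```

Running `node --version` in a terminal gives the same information without any code.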

After installing Node, create a folder for the project and install `puppeteer`. Along with it, a recent version of Chromium that is guaranteed to work with the API we need will be downloaded. You can do this with the following command:

    npm install --save puppeteer

Example 1: taking screenshots

With `puppeteer` installed, let's look at a simple example. It repeats, with minor modifications, the example from the library documentation. The code we are about to review takes a screenshot of a given web page. First, create a file named `test.js` and put the following into it:

    const puppeteer = require('puppeteer');

    async function getPic() {
      const browser = await puppeteer.launch();
      const page = await browser.newPage();
      await page.goto('https://google.com');
      await page.screenshot({path: 'google.png'});

      await browser.close();
    }

    getPic();

Let's go through this code line by line, starting with the big picture.

    const puppeteer = require('puppeteer');

This line loads the previously installed `puppeteer` library as a dependency.

    async function getPic() {
      ...
    }

This is the main function, `getPic()`. It contains the code that automates the browser.

    getPic();

This line calls `getPic()`, that is, executes it.

Note that `getPic()` is asynchronous: it is defined with the `async` keyword and uses the `async`/`await` syntax from ES2017. Because `getPic()` is an asynchronous function, calling it returns a `Promise` object, usually just called a “promise”. When a function defined with `async` finishes and returns a value, the promise is resolved; if an error is thrown, the promise is rejected.

Because the function is defined with `async`, we can use the `await` keyword inside it when calling other functions. `await` suspends execution of the function until the corresponding promise is resolved, after which the function continues. If all this is not yet clear, just read on and things will gradually fall into place.

Now let's go through the code of the `getPic()` function.
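Before going through the body of the function, the `async`/`await` behaviour described above can be tried in isolation, without a browser. The following is a minimal sketch; `delay()`, `demo()`, and `failing()` are hypothetical names made up for this illustration and are not part of `puppeteer`.

```javascript
// Stand-in for a slow browser operation:
// resolves with `value` after `ms` milliseconds.
function delay(ms, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// An async function always returns a promise.
async function demo(log) {
  log.push('before await');
  const value = await delay(10, 'done'); // demo() is suspended here
  log.push('after await: ' + value);
  return value; // resolves the promise returned by demo()
}

// An error thrown inside an async function rejects its promise.
async function failing() {
  throw new Error('something went wrong');
}

const log = [];
const promise = demo(log);
log.push('demo() returned a promise'); // runs while demo() is still waiting

promise.then(() => console.log(log));
failing().catch((err) => console.log('rejected:', err.message));
```

The logged array ends up in the order `'before await'`, `'demo() returned a promise'`, `'after await: done'`: the caller keeps running while `demo()` waits, and `demo()` resumes only once the awaited promise is resolved.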

    const browser = await puppeteer.launch();

Here we launch `puppeteer`. In practice, this means starting an instance of the Chrome browser and storing a reference to it in the newly created constant `browser`. Because this line uses `await`, execution of the main function is suspended until the corresponding promise settles, that is, until the Chrome instance has started successfully or an error has occurred.

    const page = await browser.newPage();

Here we create a new page in the browser controlled by our code. We request the operation, wait for it to complete, and store a reference to the page in the constant `page`.

    await page.goto('https://google.com');

Using the constant `page` created on the previous line, we tell the page to navigate to the specified URL, in this case https://google.com. As on the previous lines, execution pauses until the operation completes.

    await page.screenshot({path: 'google.png'});

Here we ask `puppeteer` to take a screenshot of the current page, represented by the constant `page`. The `screenshot()` method takes an object as a parameter, in which you can specify the path where the screenshot should be saved in `.png` format. Again, `await` pauses the function until the operation completes.

    await browser.close();

Finally, we close the browser, and the `getPic()` function exits.

Running the example

The code above, saved in the file `test.js`, can be run with Node as follows:

    node test.js

Here's what happens after it completes successfully:

Great! And now, to make it more fun (and to make debugging easier), we can do the same thing by launching Chrome in normal mode.

What would that mean? Try it and see for yourself. To do this, replace this line of code:

    const browser = await puppeteer.launch();

with this one:

    const browser = await puppeteer.launch({headless: false});

Save the file and run it again using Node:

    node test.js

Great, right? By passing the object `{headless: false}` as a parameter when launching the browser, we can watch the code control Google Chrome.

Before moving on, let's do one more thing. Have you noticed that the screenshot taken by the program includes only part of the page? This is because the browser window is slightly smaller than the web page. You can fix this with the following line, which changes the size of the viewport:

    await page.setViewport({width: 1000, height: 500})

Add it to the code immediately after the command that navigates to the URL. The program will then take a much better-looking screenshot:

Here is what the final version of the code will look like:

    const puppeteer = require('puppeteer');

    async function getPic() {
      const browser = await puppeteer.launch({headless: false});
      const page = await browser.newPage();
      await page.goto('https://google.com');
      await page.setViewport({width: 1000, height: 500});
      await page.screenshot({path: 'google.png'});

      await browser.close();
    }

    getPic();

Example 2: web scraping

Now that you’ve mastered the basics of Chrome automation with `puppeteer`, let’s look at a more complex example in which we collect data from web pages.

First, take a look at the documentation for `puppeteer`. You will notice that it offers a huge number of methods that let us not only simulate mouse clicks on page elements, but also fill out forms and read data from pages.

We will collect data from Books To Scrape, an imitation online bookstore created for web scraping experiments.

In the same directory as `test.js`, create a file named `scrape.js` and paste the following skeleton into it:

    const puppeteer = require('puppeteer');

    let scrape = async () => {
      // The scraping operations go here...

      // Return a value
    };

    scrape().then((value) => {
      console.log(value); // Success!
    });

Ideally, after working through the first example, you already understand how this code works. If not, that's fine.
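One thing the skeleton does not show is what happens when the promise returned by `scrape()` is rejected, for example because a page fails to load. A possible way to handle that is sketched below. The `run()` wrapper and the deliberately failing `scrape()` are hypothetical and exist only for this illustration; they are not part of the article's program.

```javascript
// Placeholder for the scraping function; it fails on purpose
// so that the error path can be seen.
const scrape = async () => {
  throw new Error('navigation failed');
};

// Hypothetical wrapper: runs a scraping function and reports
// either the value it resolved with or the error it rejected with.
const run = (task) =>
  task()
    .then((value) => {
      console.log(value);
      return { ok: true, value };
    })
    .catch((error) => {
      console.error('Scraping failed:', error.message);
      return { ok: false, error };
    });

run(scrape);
```

In the simplest case it is enough to append `.catch((error) => console.error(error))` to the `scrape().then(...)` chain.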

In this fragment we load the previously installed `puppeteer`. Next comes the `scrape()` function, to which we will add the scraping code below. This function returns a value. Finally, we call `scrape()` and work with what it returned; in this case, we simply print it to the console.

Let's check this code by adding a `return` statement to `scrape()`:

    let scrape = async () => {
      return 'test';
    };

After that, run the program with the command `node scrape.js`. The word `test` should appear in the console. We have confirmed that the code works and that the returned value reaches the console. Now we can get to web scraping.

▍Step 1: setup

First you need to create a browser instance, open a new page and go to the URL. Here's how we do it all:

    let scrape = async () => {
      const browser = await puppeteer.launch({headless: false});
      const page = await browser.newPage();
      await page.goto('http://books.toscrape.com/');
      await page.waitFor(1000);

      // Scraping code goes here

      browser.close();
      return result;
    };

Let's analyze this code.

    const browser = await puppeteer.launch({headless: false});

On this line we create a browser instance and set the `headless` parameter to `false`. This lets us watch what is happening.

    const page = await browser.newPage();

Here we create a new page in the browser.

    await page.goto('http://books.toscrape.com/');

We navigate to the address http://books.toscrape.com/.

    await page.waitFor(1000);

Here we add a delay of 1000 milliseconds to give the browser time to fully load the page; this step can usually be omitted.

    browser.close();
    return result;

Here we close the browser and return the result.
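Two details of this setup can be made more robust. A fixed delay either wastes time or is too short, and `page.waitFor()` was deprecated in later Puppeteer releases; waiting for a concrete element with `page.waitForSelector()` avoids both problems. Also, if the scraping code throws, the browser is never closed. The sketch below addresses both points. `withPage()` is a hypothetical helper written for this illustration, and the `puppeteer` object is passed in as a parameter purely so that the function can be tried without a real browser.

```javascript
// Sketch: open `url`, wait until `readySelector` appears, run `task(page)`,
// and always close the browser afterwards.
async function withPage(puppeteer, url, readySelector, task) {
  const browser = await puppeteer.launch({ headless: false });
  try {
    const page = await browser.newPage();
    await page.goto(url);
    // Wait for a specific element instead of sleeping for a fixed time.
    await page.waitForSelector(readySelector);
    return await task(page);
  } finally {
    // Runs on success and on failure, so the browser never stays open.
    await browser.close();
  }
}

// Possible usage (needs `npm install puppeteer` and network access):
// const puppeteer = require('puppeteer');
// withPage(puppeteer, 'http://books.toscrape.com/', '.product_pod',
//   (page) => page.title()).then(console.log);
```

The `.product_pod` selector in the usage comment is the class of a product card on the Books To Scrape home page; any element that appears once the page is ready would do.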

The preparation is complete; now let's get to scraping.

▍Step 2: scraping

As you have probably gathered, the Books To Scrape website has a large catalog of real books with made-up data. We are going to take the first book on the page and return its title and price. Here is the home page of the site. We will click on the first book (it is highlighted in red).

In the documentation for `puppeteer` you can find a method that simulates clicks on the page:

    page.click(selector[, options])

Here `selector` is a selector used to find the element to click. If several elements match the selector, the click goes to the first of them.

Conveniently, the Google Chrome developer tools make it easy to determine the selector of a particular element. Right-click on the image and choose the Inspect command. This opens the Elements panel, which shows the page's code with the fragment corresponding to the element highlighted. Then click the button with three dots on the left and choose Copy → Copy selector in the menu that appears.

Excellent! Now we have a selector, and everything is ready to build the `click` call and paste it into the program. Here's how it looks:

    await page.click('#default > div > div > div > div > section > div:nth-child(2) > ol > li:nth-child(1) > article > div.image_container > a > img');

Now the program simulates a click on the first product image, which opens that product's page.
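The selector copied from the developer tools is long and breaks as soon as the page layout changes, and the click itself does not wait for the new page to load. A possible alternative is sketched below. The `openFirstBook()` function and its shorter default selector are assumptions made for this illustration (the selector relies on the site's current markup); `page.waitForNavigation()` and `page.click()` are regular `puppeteer` methods.

```javascript
// Sketch: click the first product image and wait for the page it leads to.
// The default selector is an assumption about the markup of books.toscrape.com;
// page.click() clicks the first element that matches it.
async function openFirstBook(page, selector = '.product_pod .image_container img') {
  await Promise.all([
    page.waitForNavigation(), // start listening before the click happens
    page.click(selector),
  ]);
}
```

Starting `waitForNavigation()` together with the click, rather than after it, avoids missing a navigation that finishes very quickly. With this in place, a fixed delay after the click is no longer needed.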

On this new page we are interested in the name of the book and its price. They are highlighted in the figure below.

To get these values, we will use the `page.evaluate()` method. It lets you use JavaScript methods for working with the DOM, such as `querySelector()`.

First, call `page.evaluate()` and assign the value it returns to the constant `result`:

    const result = await page.evaluate(() => {
      // return something
    });

Inside this function we can select the elements we need. To work out how to describe them, we again turn to the Chrome developer tools. Right-click on the title of the book and choose the Inspect command.

In the Elements panel you can see that the title of the book is an ordinary first-level heading, `h1`. You can select this element with the following code:

    let title = document.querySelector('h1');

Since we need the text contained in this element, we use the `.innerText` property. As a result, we arrive at the following construction:

    let title = document.querySelector('h1').innerText;

The same approach helps us work out how to read the price of the book from the page.

You may notice that the line with the price has the class `price_color`. We can use this class to select the element and read the text it contains:

    let price = document.querySelector('.price_color').innerText;

Now that we have pulled the title of the book and its price from the page, we can return them from the function as an object:

    return {
      title,
      price
    }

The result is the following code:

    const result = await page.evaluate(() => {
      let title = document.querySelector('h1').innerText;
      let price = document.querySelector('.price_color').innerText;

      return {
        title,
        price
      }
    });

Here we read the title of the book and its price from the page, save them in an object, and return this object, which is then stored in `result`.

All that remains is to return the constant `result` so that its contents can be printed to the console.

    return result;

The full code for this example looks like this:

    const puppeteer = require('puppeteer');

    let scrape = async () => {
      const browser = await puppeteer.launch({headless: false});
      const page = await browser.newPage();
      await page.goto('http://books.toscrape.com/');
      await page.click('#default > div > div > div > div > section > div:nth-child(2) > ol > li:nth-child(1) > article > div.image_container > a > img');
      await page.waitFor(1000);

      const result = await page.evaluate(() => {
        let title = document.querySelector('h1').innerText;
        let price = document.querySelector('.price_color').innerText;

        return {
          title,
          price
        }
      });

      browser.close();
      return result;
    };

    scrape().then((value) => {
      console.log(value); // Success!
    });

Now you can run the program using Node:

    node scrape.js

If everything is done correctly, the title of the book and its price are printed to the console:

    { title: 'A Light in the Attic', price: '£51.77' }

That, in essence, is web scraping, and you have just taken your first steps in it.
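Note that the price comes back as a string that includes the currency sign. If you want to sort or sum prices, you will need a number. One possible way to convert it is sketched below; `parsePrice()` is a hypothetical helper written for this illustration and is not part of the article's program.

```javascript
// Hypothetical helper: turns a price label such as '£51.77' into a number.
// Returns null when the text contains no digits.
function parsePrice(text) {
  const match = text.replace(/,/g, '').match(/\d+(\.\d+)?/);
  return match ? parseFloat(match[0]) : null;
}

const book = { title: 'A Light in the Attic', price: '£51.77' };
console.log(Object.assign({}, book, { price: parsePrice(book.price) }));
// prints: { title: 'A Light in the Attic', price: 51.77 }
```

The conversion runs in Node after `page.evaluate()` has returned, so it does not need access to the page.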

Example 3: improving the program

At this point you may have some quite reasonable questions: “Why click on the link leading to the book's page if both its title and its price are shown on the home page? Why not take them straight from there? And if we can do that, why not read the titles and prices of all the books?”

The answer is that there are many approaches to web scraping! In addition, if you restrict yourself to the data shown on the home page, you may find that the book titles are shortened. Still, all these thoughts give you a great opportunity to practice.
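As for the shortened titles, one possible workaround is to read the link's `title` attribute, which on listing pages often carries the full name, and to fall back to the visible text when the attribute is missing. The sketch below is only an illustration: `fullTitle()` is a hypothetical helper, and the idea that the attribute holds the full title is an assumption about the site's markup that you should verify in the developer tools.

```javascript
// Sketch: prefer the link's `title` attribute and fall back to its
// visible (possibly shortened) text. `link` is a DOM element, or any
// object with getAttribute() and textContent.
function fullTitle(link) {
  const attr = link.getAttribute('title');
  return attr && attr.trim() ? attr.trim() : link.textContent.trim();
}
```

Because the function passed to `page.evaluate()` runs inside the browser, a helper like this has to be defined inside that function rather than at the top of the Node script.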

▍Task

Your goal is to read all the book titles and their prices from the home page and return them as an array of objects. Here is the array I got:

You can get started. Do not read further; try to do everything yourself. This problem is very similar to the one we just solved.

Did it work? If not, here is a hint.

▍Hint

The main difference between this task and the previous example is that here we need to go through the list of data. Here's how to do it:

    const result = await page.evaluate(() => {
      let data = []; // Create an empty array
      let elements = document.querySelectorAll('xxx'); // Select all the products

      // Loop over every product
        // Select the title
        // Select the price
        data.push({title, price}); // Put the data into the array

      return data; // Return the array with the data
    });

If you still cannot solve the problem, there is nothing to worry about; it is a matter of practice. Here is one possible solution.

▍Solving the problem

    const puppeteer = require('puppeteer');

    let scrape = async () => {
      const browser = await puppeteer.launch({headless: false});
      const page = await browser.newPage();
      await page.goto('http://books.toscrape.com/');

      const result = await page.evaluate(() => {
        let data = []; // Create an empty array to hold the data
        let elements = document.querySelectorAll('.product_pod'); // Select all the products

        for (const element of elements) { // Loop over every product
          let title = element.childNodes[5].innerText; // Select the title
          let price = element.childNodes[7].children[0].innerText; // Select the price

          data.push({title, price}); // Put an object with the data into the array
        }

        return data; // Return the array
      });

      browser.close();
      return result; // Return the data
    };

    scrape().then((value) => {
      console.log(value); // Success!
    });

Summary

In this article, you learned how to use the Google Chrome browser and the Puppeteer library to build a web scraping system. We looked at the structure of the code, how to control the browser programmatically, how to take screenshots, how to simulate a user's interaction with a page, and how to read and save data published on web pages. If this was your first encounter with web scraping, we hope you now have what you need to start collecting data from the Web.

Dear readers! Do you use the Puppeteer library and Google Chrome browser without a user interface?