Illusion of movement

This article is about vision, the perception of frames and refresh rates, motion blur, and television screens.

(See also the translation of another article by the same author, “Illusion of Speed”. - translator's note)

You may have heard the term frames per second (FPS), and that 60 FPS is a good benchmark for any animation. But most console games run at 30 FPS, and movies are usually shot at 24 FPS, so why should we aim for 60 FPS?

Filming of the 1950 Hollywood film “Julius Caesar” with Charlton Heston

When the first filmmakers began making films, many discoveries came not from the scientific method but from trial and error. The first cameras and projectors were cranked by hand, and film stock was very expensive - so expensive that filmmakers shot at the lowest frame rate they could get away with to save film. That threshold was usually somewhere between 16 and 24 FPS.

When sound (an audio track) was added to the physical film and played back in sync with the picture, hand-cranked playback became a problem. It turned out that people tolerate a variable frame rate for video but not for sound, where both tempo and pitch change, so filmmakers had to settle on a constant speed for both. They chose 24 FPS, and almost a hundred years later it remains the standard for cinema. (In television, the frame rate had to be adjusted slightly because of the way CRT televisions synchronize with the mains frequency.)

But if 24 FPS is barely acceptable for a movie, what is the optimal frame rate? That is a trick question, because there is no optimal frame rate.

Perception of movement is the process of deriving the speed and direction of scene elements based on visual, vestibular and proprioceptive sensations. Although the process seems simple to most observers, it has proven to be a complex problem from a computational point of view and extremely difficult to explain in terms of neural processing. - Wikipedia

The eye is not a camera. It does not perceive motion as a series of frames; it perceives a continuous stream of information rather than a set of individual pictures. So why do frames work at all?

Two important phenomena explain why we see motion when we look at rapidly changing pictures: persistence of vision and the phi phenomenon (the stroboscopic illusion of continuous movement - translator's note).

Most filmmakers think that persistence of vision is the only reason, but this is not so. Persistence of vision, which has been observed but not scientifically proven, is the phenomenon by which an afterimage seems to remain on the retina for about 40 milliseconds. This explains why we do not see dark flicker in movie theaters or (usually) on a CRT.

The phi phenomenon in action. Did you notice movement in the picture, even though nothing in it actually moves?

On the other hand, many consider the phi phenomenon to be the true reason we see motion in a sequence of individual images. It is an optical illusion of continuous movement between separate objects that are shown in quick succession. But even the phi phenomenon is disputed, and scientists have not reached a consensus.

Our brain is very good at helping to fake motion - not perfectly, but well enough. A series of still frames simulating motion creates different perceptual artifacts in the brain, depending on the frame rate. So no frame rate will ever be optimal, but we can get close to the ideal.

To get a better feel for the absolute scale of frame rate quality, I suggest looking at the overview table. But remember that the eye is a complex system and does not see individual frames, so this is not an exact science, just observations that various people have made over time.

Note: Although 60 FPS is considered a good frame rate for smooth animation, frame rate alone does not make a great picture. Contrast and sharpness can still improve beyond this value. A number of scientific studies have examined how sensitive our eyes are to changes in brightness. They showed that subjects can recognize a single white frame among thousands of black frames. If you want to dig a little deeper, here are a few resources, and more.

60vs24fps.mp4

Thanks to my friend Mark Tonsing for making this fantastic comparison.

The Hobbit was a popular movie shot at 48 FPS, double the usual frame rate, a format called HFR (high frame rate). Unfortunately, not everyone liked the new look. There were several reasons for this, the main one being the so-called “soap opera effect”.

Most people's brains are trained to perceive 24 full frames per second as a high-quality movie, while 50-60 half-frames per second (interlaced television signals) remind us of television and destroy the “film look”. A similar effect occurs if you turn on motion interpolation on your TV for 24p (progressive scan) material. Many people dislike it, even though modern algorithms are quite good at rendering smooth motion without artifacts, which are the main reason critics reject the feature.

Although HFR significantly improves the image (motion is less choppy and moving objects are less blurry), there is no easy answer to how to make viewers accept it. That requires retraining the brain. Some viewers stop noticing any problem after ten minutes of watching The Hobbit, but others cannot tolerate HFR at all.

But if 24 FPS is a barely tolerable frame rate, why have you never complained about choppy video when leaving the cinema? It turns out that film cameras have a built-in feature - or a bug, if you like - that is missing in CGI (including CSS animations!): motion blur, that is, the blurring of a moving object.

Once you have seen motion blur, its absence in video games and software becomes painfully obvious.

Motion blur, as defined on Wikipedia, is

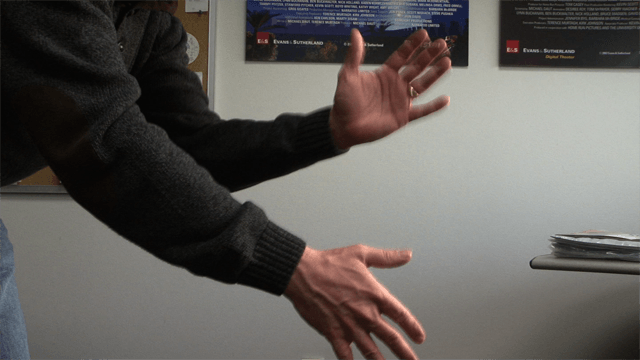

In this case, a picture is worth a thousand words.

No motion blur

With motion blur

Images from Evans & Sutherland Computer Corporation, Salt Lake City, Utah. Used with permission. All rights reserved.

Motion blur uses a trick: it depicts a lot of movement in a single frame at the expense of detail. This is why a movie at 24 FPS looks relatively acceptable compared to a video game at 24 FPS.

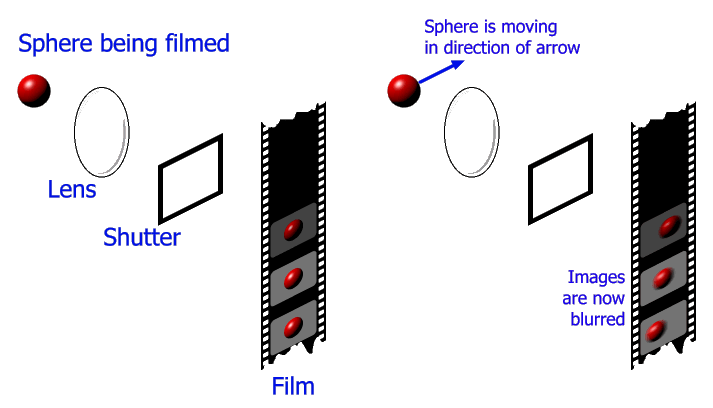

But how does motion blur arise in the first place? According to a description by E&S, which was the first to use 60 FPS on its mega-dome screens:

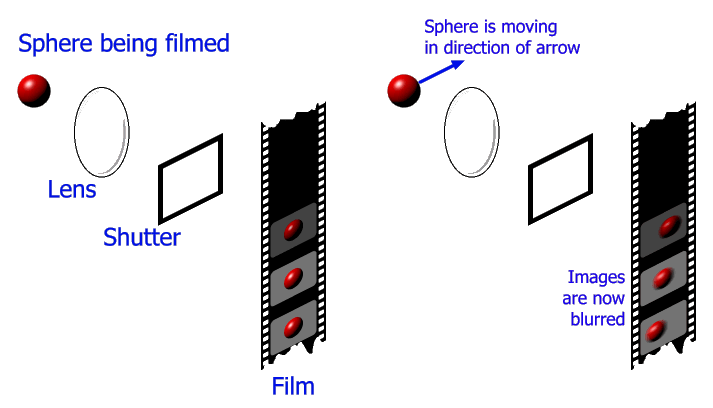

Here is a graphic that simplifies the process.

Images by Hugo Elias. Used with permission.

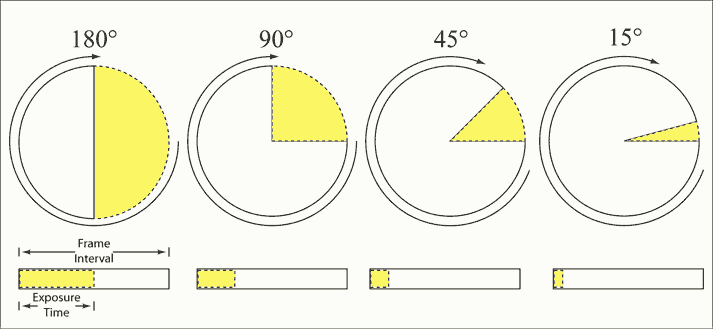

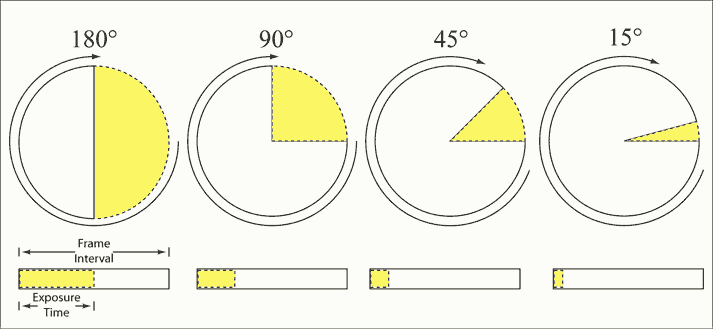

Classic movie cameras use a rotary disc shutter (a rotating disc with a cut-out sector - translator's note) to capture motion blur. As the disc rotates, it opens the shutter for a controlled period of time determined by the angle of the opening, so changing this angle changes the exposure time. With a short exposure, less motion is recorded on the film and the motion blur is weaker; with a longer exposure, more motion is recorded and the effect is stronger.

Shutter in action. Via Wikipedia
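The relationship between shutter angle and exposure time can be sketched in a few lines of Python. This is a minimal illustration that assumes an ideal disc shutter making exactly one revolution per frame; the function name `exposure_time_ms` is made up for this example.

```python
def exposure_time_ms(shutter_angle_deg: float, fps: float) -> float:
    """Exposure time per frame, in milliseconds, for an ideal rotary disc shutter.

    Assumption: the disc makes one full turn per frame, so the open sector
    (the shutter angle) is the fraction of the frame time that light reaches the film.
    """
    if not 0 < shutter_angle_deg <= 360:
        raise ValueError("shutter angle must be in (0, 360] degrees")
    frame_time_ms = 1000.0 / fps
    return (shutter_angle_deg / 360.0) * frame_time_ms


# A 180-degree shutter at 24 FPS exposes each frame for half the frame time.
print(round(exposure_time_ms(180, 24), 2))
# A narrow 45-degree shutter exposes for a much shorter time, so blur is weaker.
print(round(exposure_time_ms(45, 24), 2))
```

A wider angle means a longer exposure and more blur; a narrower angle means a shorter exposure and a crisper frame.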

If motion blur is so useful, why would filmmakers want to get rid of it? Well, adding motion blur costs you detail, and removing it costs you smoothness of motion. So when directors want to shoot a scene with a lot of detail, such as an explosion with many flying particles or a complex action scene, they often choose a short exposure (a narrow shutter angle), which reduces blur and creates a crisp, stop-motion-like look.

Motion Blur capture visualization. Via Wikipedia

So why not just add it?

Motion blur significantly improves animation in games and on websites, even at low frame rates. Unfortunately, it is too expensive to implement. To create perfect motion blur, you would need to capture four times as many frames of the moving object and then temporally filter, or average, them (here is a great explanation by Hugo Elias). If you have to render at 96 FPS to produce acceptable material at 24 FPS, you might as well just raise the frame rate, so this is often not an option for content rendered in real time. The exceptions are video games, where the trajectory of objects is known in advance so that an approximate motion blur can be calculated, declarative animation systems such as CSS Animations, and, of course, CGI films such as Pixar's.
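To make the cost concrete, here is a toy sketch of the supersampling idea in Python: a one-pixel dot moves along a one-dimensional "screen", four sharp sub-frames are rendered, and their average becomes one blurred output frame. The helper names (`render`, `blurred_frame`) and the 1-D scene are assumptions made for illustration, not the method of any particular renderer.

```python
def render(position: int, width: int) -> list[float]:
    """Render one sharp sub-frame: a single lit pixel on a 1-D 'screen'."""
    return [1.0 if x == position else 0.0 for x in range(width)]


def blurred_frame(start: int, speed: int, samples: int, width: int) -> list[float]:
    """Average `samples` sub-frames; the dot moves `speed` pixels per sub-frame."""
    subframes = [render(start + i * speed, width) for i in range(samples)]
    # Temporal filtering: each output pixel is the mean of that pixel over time.
    return [sum(column) / samples for column in zip(*subframes)]


# Four sub-frames per output frame, i.e. 96 rendered FPS for 24 displayed FPS.
frame = blurred_frame(start=2, speed=1, samples=4, width=8)
print(frame)
```

The dot is smeared over four pixels at a quarter of its brightness each, which is the detail-for-smoothness trade described above, bought with four times the rendering work.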

Note: hertz (Hz) is commonly used when talking about refresh rates, while frames per second (fps) is an established term for frame-by-frame animation. In order not to confuse them, we use Hz for the refresh rate and FPS for the frame rate.

If you have ever wondered why Blu-ray playback looks so bad on your laptop, the reason is often that the screen's refresh rate is not an integer multiple of the frame rate (DVDs, by contrast, are converted before transfer). Yes, refresh rate and frame rate are not the same thing. According to Wikipedia, “[..] the refresh rate includes re-drawing identical frames, while the frame rate measures how often the original video material will display a full frame of new data on the display.” So the frame rate is the number of distinct frames shown on the screen per second, and the refresh rate is the number of times per second the image on the screen is updated or redrawn.

Ideally, the refresh rate and the frame rate are fully synchronized, but in certain situations there are reasons to run the display at a refresh rate up to three times the frame rate, depending on the projection system used.

Film projectors

Many people think that film projectors simply scroll the film past a light source. But then we would see one continuous blurry image. Instead, a shutter is used to separate the frames from each other, just as in movie cameras. After a frame is displayed, the shutter closes and blocks the light until it opens again for the next frame, and the process repeats.

Movie projector shutter in action. From Wikipedia.

However, this is not the whole story. You would see the film this way, but the flicker caused by the screen staying dark 50% of the time would drive you crazy. These blackouts between frames would destroy the illusion. To compensate, projectors actually close the shutter two or three times during each frame.
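As a small worked example, the flicker frequency the audience sees is simply the frame rate multiplied by the number of times each frame is flashed. The function name `flicker_rate_hz` is hypothetical and the model ignores the exact shape of the shutter blades.

```python
def flicker_rate_hz(fps: float, flashes_per_frame: int) -> float:
    """Number of light/dark cycles per second seen on the cinema screen."""
    return fps * flashes_per_frame


for flashes in (1, 2, 3):
    # 24 FPS film flashed once, twice or three times per frame.
    print(flashes, flicker_rate_hz(24, flashes))
```

The frame rate of the film stays at 24 FPS in all three cases; only the rate at which the light is interrupted changes.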

Of course, this seems illogical: why would adding more flicker make it seem as if there were less? The goal is to shorten the dark periods, which have a disproportionate effect on the visual system. The flicker fusion threshold (closely related to persistence of vision) describes the effect of these blackouts. At roughly 45 Hz, blackout periods need to be shorter than about 60% of the frame time, which is why the double-shutter method is effective. Above 60 Hz, blackout periods can exceed 90% of the frame time (which displays like CRTs require). The whole concept is a bit more complicated, but in practice, here is how to avoid flicker:

Flickering CRTs

CRT monitors and televisions work by firing electrons at a fluorescent screen coated with a short-persistence phosphor. How short is the persistence? So short that you never see the full image! Instead, as the electron beam scans, the phosphor lights up and loses its brightness in less than 50 microseconds - that is 0.05 milliseconds! For comparison, a full frame on your smartphone is displayed for 16.67 ms.

Screen refresh photographed with an exposure of 1/3000 second. From Wikipedia.

So the only reason a CRT works at all is persistence of vision. Because of the long dark intervals between illuminations, CRTs often appear to flicker - especially with PAL, which runs at 50 Hz, unlike NTSC, which runs at 60 Hz, where the flicker fusion threshold already kicks in.

To complicate matters further, the eye does not perceive flicker equally across the whole screen. Peripheral vision, although it sends a blurrier image to the brain, is more sensitive to brightness and has a significantly shorter response time. That was probably very useful in ancient times for spotting wild animals leaping at you from the side, but it is a nuisance when watching a CRT up close or from an odd angle.

Blurry LCDs

Liquid crystal displays (LCDs), which are classified as sample-and-hold devices, are actually quite remarkable because they have no blackouts between frames at all. The current image stays on the screen continuously until a new image arrives.

Let me repeat: there is no refresh-induced flicker on LCDs, regardless of the refresh rate.

But now you are thinking: “Wait a minute, I was shopping for a TV recently, and every manufacturer was advertising a higher refresh rate!” And although this is mostly pure marketing, LCDs with a higher refresh rate do solve a problem - just not the one you are thinking of.

Visual blur in motion

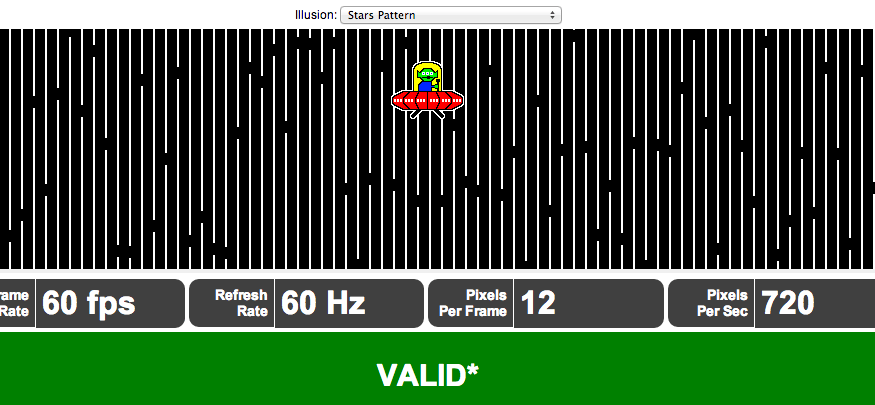

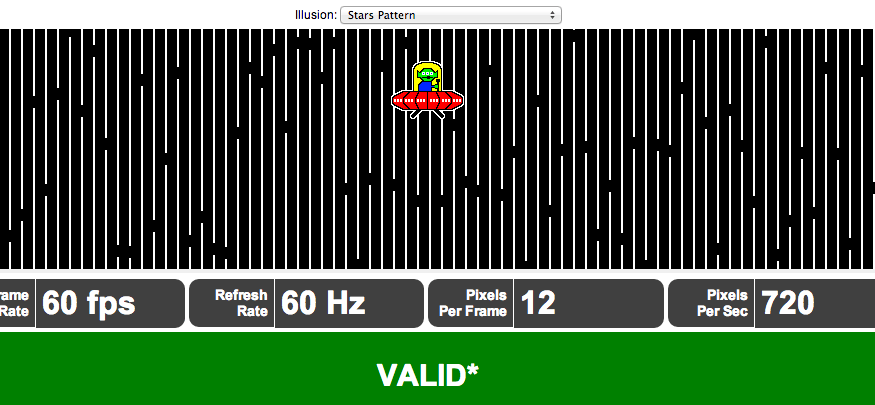

LCD manufacturers keep raising the refresh rate because of on-screen, or eye-induced, motion blur. That's right: not only can a camera record motion blur, your eyes can too! Before explaining how this happens, here are two striking demos to help you experience the effect (click on the image). In the first experiment, focus your eyes on the stationary alien at the top, and you will clearly see white lines. If you follow the moving alien with your eyes instead, the white lines magically disappear. From the Blur Busters website:

In fact, the effects of eye tracking can never be completely prevented, and it is often such a big problem in film and production that there are people whose only job is to predict what the viewer's eyes will follow in the frame and to make sure that nothing else distracts them.

In the second experiment, the Blur Busters team tries to recreate the look of a short-persistence screen on an LCD by simply inserting black frames between the displayed frames - and, surprisingly, it works.
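Why black frames help can be estimated with a simplified model: while the eye tracks a moving object, the perceived smear is roughly the object's speed multiplied by the time each frame stays lit. The sketch below is an approximation under that assumption, not a measurement; `eye_tracking_blur_px` and its `lit_fraction` parameter are names invented for this example.

```python
def eye_tracking_blur_px(speed_px_per_s: float, refresh_hz: float,
                         lit_fraction: float = 1.0) -> float:
    """Approximate width of eye-tracking blur, in pixels.

    lit_fraction is the share of each refresh during which the frame is visible:
    1.0 for a pure sample-and-hold LCD, 0.5 if every other frame is black.
    """
    persistence_s = lit_fraction / refresh_hz
    return speed_px_per_s * persistence_s


# An object moving at 960 px/s on a 60 Hz sample-and-hold display.
print(round(eye_tracking_blur_px(960, 60), 2))
# The same object when each frame is lit for only half of the refresh period.
print(round(eye_tracking_blur_px(960, 60, lit_fraction=0.5), 2))
```

Under this model, halving the time a frame is lit halves the smear, which is the effect the black-frame experiment demonstrates.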

As shown earlier, motion blur can be either a blessing or a curse: it sacrifices sharpness for smoothness, and the blur added by your eyes is always undesirable. So why is motion blur such a big problem for LCDs compared to CRTs, which have no such issue? Here is an explanation of what happens when a frame captured over a short time stays on the screen longer than expected.

The following quote is from an excellent MSDN article by Dave Marsh on temporal rate conversion. It is surprisingly accurate and relevant for an article written 15 years ago:

And his conclusion:

So that's how it works! It turns out that what we need to do is flash the image onto the retina and then let the eye, together with the brain, interpolate the motion.

No one knows for sure, but there are definitely many situations in which the brain helps construct the final image of what it is shown. Take, for example, this blind spot test: there is a blind spot where the optic nerve joins the retina. In theory, the spot should appear black, but in fact the brain fills it in with an image interpolated from the surrounding area.

As mentioned earlier, problems arise when the frame rate and the refresh rate of the screen are not synchronized, that is, when the refresh rate is not evenly divisible by the frame rate.

What happens when your game or application starts drawing a new frame while the display is in the middle of a refresh cycle? It literally tears the frame apart:

This is what happens behind the scenes. Your CPU/GPU performs the calculations needed to compose a frame and then passes it to a buffer, which has to wait for the monitor to trigger a refresh through the driver stack. The monitor then reads the frame and starts displaying it (this is where double buffering comes in, so that one image is always being displayed while another is being composed). Tearing occurs when the buffer that is currently being drawn to the screen from top to bottom is swapped for the next frame produced by the video card. As a result, the upper part of the screen comes from one frame and the lower part from another.
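Where the tear appears can be estimated with a simple model: if the display draws rows from top to bottom at a constant pace, the tear sits at the row being drawn at the moment of the buffer swap. The sketch below assumes exactly that and ignores the vertical blanking interval; `tear_row` is a hypothetical helper, not part of any graphics API.

```python
def tear_row(swap_time_ms: float, refresh_hz: float, height_px: int) -> int:
    """Row at which the image switches from the old frame to the new one.

    swap_time_ms is measured from the start of a refresh. Rows above the result
    come from the old frame, rows below from the new frame.
    """
    period_ms = 1000.0 / refresh_hz
    phase = (swap_time_ms % period_ms) / period_ms  # 0.0 = top, close to 1.0 = bottom
    return int(phase * height_px)


# A swap halfway through a 60 Hz refresh tears a 1080-row image in the middle.
print(tear_row(1000.0 / 120, 60, 1080))
```

A swap that lands exactly at the start of a refresh (phase 0) produces no visible tear, which is the case Vsync enforces.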

Note: to be precise, screen tearing can occur even when the refresh rate and the frame rate are the same! They have to match in both phase and frequency.

Screen tearing in action. From Wikipedia

This is clearly not what we need. Fortunately, there is a solution!

Screen tearing can be fixed with Vsync, short for “vertical sync”. This is a hardware or software feature that ensures no tearing occurs: your software can draw a new frame only once the previous screen refresh has completed. Vsync throttles the buffer swaps in the process described above so that the image never changes in the middle of a refresh.

Consequently, if a new frame is not ready in time for the next screen refresh, the screen simply takes the previous frame and redraws it. Unfortunately, this leads to the next problem.
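The consequence for the effective frame rate can be sketched as follows. This is a simplified model that assumes plain double-buffered Vsync and a constant render time per frame; `vsync_fps` is a made-up name for this example.

```python
import math


def vsync_fps(render_ms: float, refresh_hz: float) -> float:
    """Effective frame rate when every new frame must wait for a screen refresh."""
    period_ms = 1000.0 / refresh_hz
    # A frame that misses a refresh is held back until the next one.
    refreshes_per_frame = max(1, math.ceil(render_ms / period_ms))
    return refresh_hz / refreshes_per_frame


for render_ms in (10, 20, 40):
    print(render_ms, vsync_fps(render_ms, 60))
```

In this model a frame that takes 20 ms, only slightly longer than one 60 Hz refresh, already drops the animation to 30 FPS.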

Although our frames are no longer torn, playback is still far from smooth. This time the cause is a problem so serious that every industry has its own name for it: judder, jitter, stutter, jank, or hitching. Let's stick with the term jitter.

Jitter occurs when an animation is played back at a frame rate different from the one at which it was shot (or intended to be played). Often this means that jitter appears when the playback rate is unstable or variable rather than fixed (since most content is recorded at a fixed rate). Unfortunately, this is exactly what happens when you try to display, for example, 24 FPS content on a screen that refreshes 60 times per second. Since 60 is not evenly divisible by 24, some frames have to stay on screen for one refresh longer than others (unless you use a more advanced conversion), which spoils smooth effects such as camera pans.
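The uneven cadence is easy to compute. The sketch below counts how many screen refreshes each source frame occupies, assuming every refresh simply shows the most recent frame; `cadence` and `ceil_div` are hypothetical helper names.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, for non-negative a and positive b."""
    return -(-a // b)


def cadence(fps: int, refresh_hz: int, frames: int) -> list[int]:
    """Number of refreshes each of the first `frames` source frames stays on screen."""
    return [
        ceil_div((i + 1) * refresh_hz, fps) - ceil_div(i * refresh_hz, fps)
        for i in range(frames)
    ]


print(cadence(24, 60, 4))   # uneven: frames alternate between 3 and 2 refreshes
print(cadence(30, 60, 4))   # even: every frame is held for 2 refreshes
```

Frames held for alternating durations are what the eye picks up as jitter during a steady pan.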

In games and on websites with a lot of animation, this is even more noticeable. Many cannot sustain a constant frame rate that divides evenly into the refresh rate. Instead, the frame rate fluctuates heavily for various reasons, such as graphics layers being rendered independently, the processing of user input, and so on. It may shock you, but an animation capped at 30 FPS looks much, much better than the same animation running at a frame rate that varies between 40 and 50 FPS.

You don't have to take my word for it; see for yourself. Here is a striking demonstration of microjitter (microstutter).

“Telecine” is a method of converting film images into a video signal. Expensive professional converters, such as those used in television, perform this operation mainly through a process called motion vector steering. It can create very convincing new frames to fill the gaps. At the same time, two other methods are still widely used.

Acceleration

When converting 24 FPS to a PAL signal at 25 FPS (for example, for TV or video in the UK), it is common practice to simply speed up the original video by about 4%, from 24 to 25 FPS. So if you have ever wondered why Ghostbusters is a couple of minutes shorter in Europe, here is the answer. Although the method works surprisingly well for video, it has a terrible effect on the sound. How much worse, you may ask, can audio sound when it is sped up by about 4% without pitch correction? Almost a semitone worse.
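The arithmetic behind both claims can be checked quickly. The sketch below computes the pitch shift of uncorrected audio and the shortened running time; the 105-minute film length is just an example value chosen for illustration.

```python
import math

SOURCE_FPS = 24
PAL_FPS = 25

speedup = PAL_FPS / SOURCE_FPS                   # playback is about 4.17% faster
pitch_shift_semitones = 12 * math.log2(speedup)  # 12 semitones per octave


def pal_runtime_minutes(film_minutes: float) -> float:
    """Running time of a 24 FPS film after being sped up to 25 FPS."""
    return film_minutes / speedup


print(round(pitch_shift_semitones, 2))       # about 0.71 of a semitone higher
print(round(pal_runtime_minutes(105), 1))    # a 105-minute film runs about 100.8 minutes
```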

Take a real example of a major failure. When Warner released the extended Blu-ray collection of The Lord of the Rings in Germany, they used the already adjusted PAL version of the soundtrack for the German dub, which had previously been sped up by about 4% and then pitched down to compensate. But since Blu-ray runs at 24 FPS, they had to convert it back, so they slowed it down again. Such a double conversion was a bad idea from the start because it is lossy, but it gets worse: after slowing it down to match the Blu-ray frame rate, they forgot to undo the pitch correction on the soundtrack, so all the actors in the film suddenly sounded overly gloomy, speaking a semitone lower. Yes, this is a true story, and yes, it really upset the fans; there were a lot of tears.

The moral of the story: changing speed is not a good idea.

Pulldown

Converting film footage to NTSC, the American television standard, cannot be done with a simple speed-up, because going from 24 FPS to 29.97 FPS would mean speeding up by 24.875%. Unless you truly love chipmunks, that is not the best option.

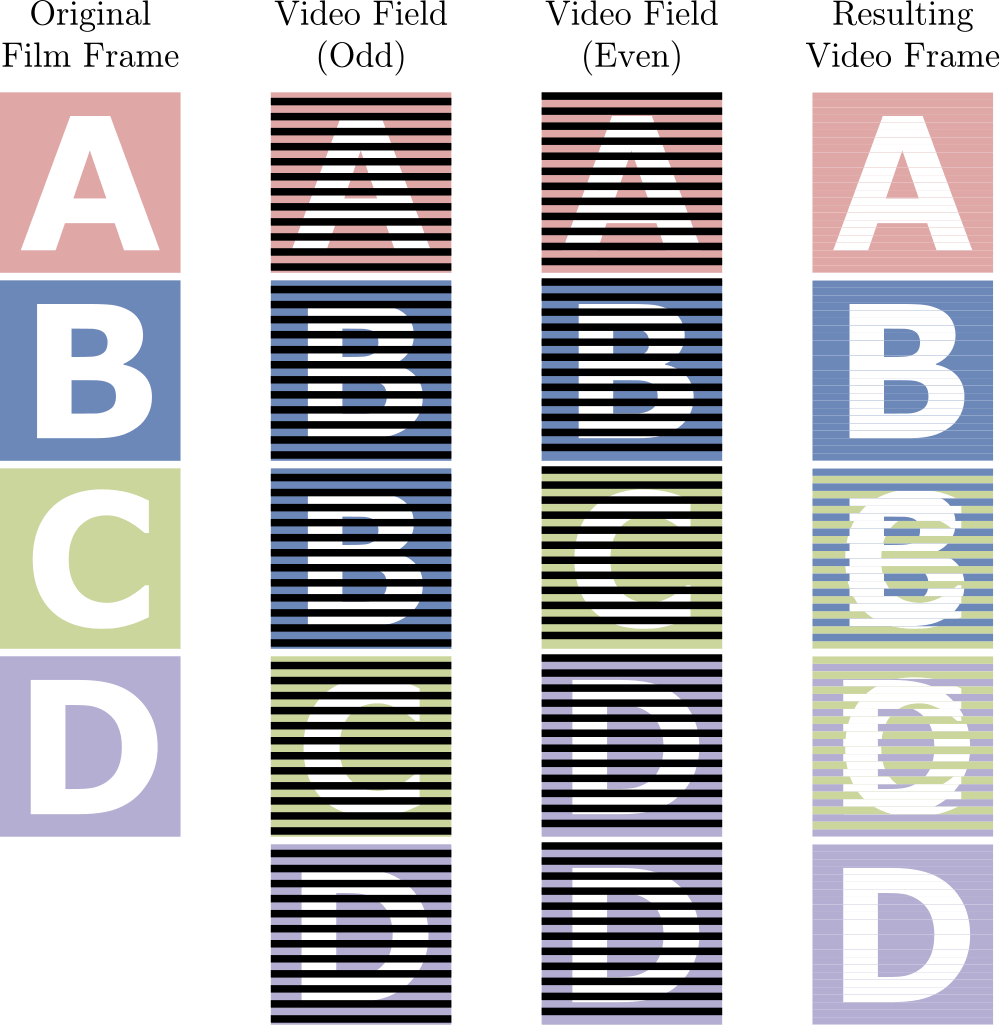

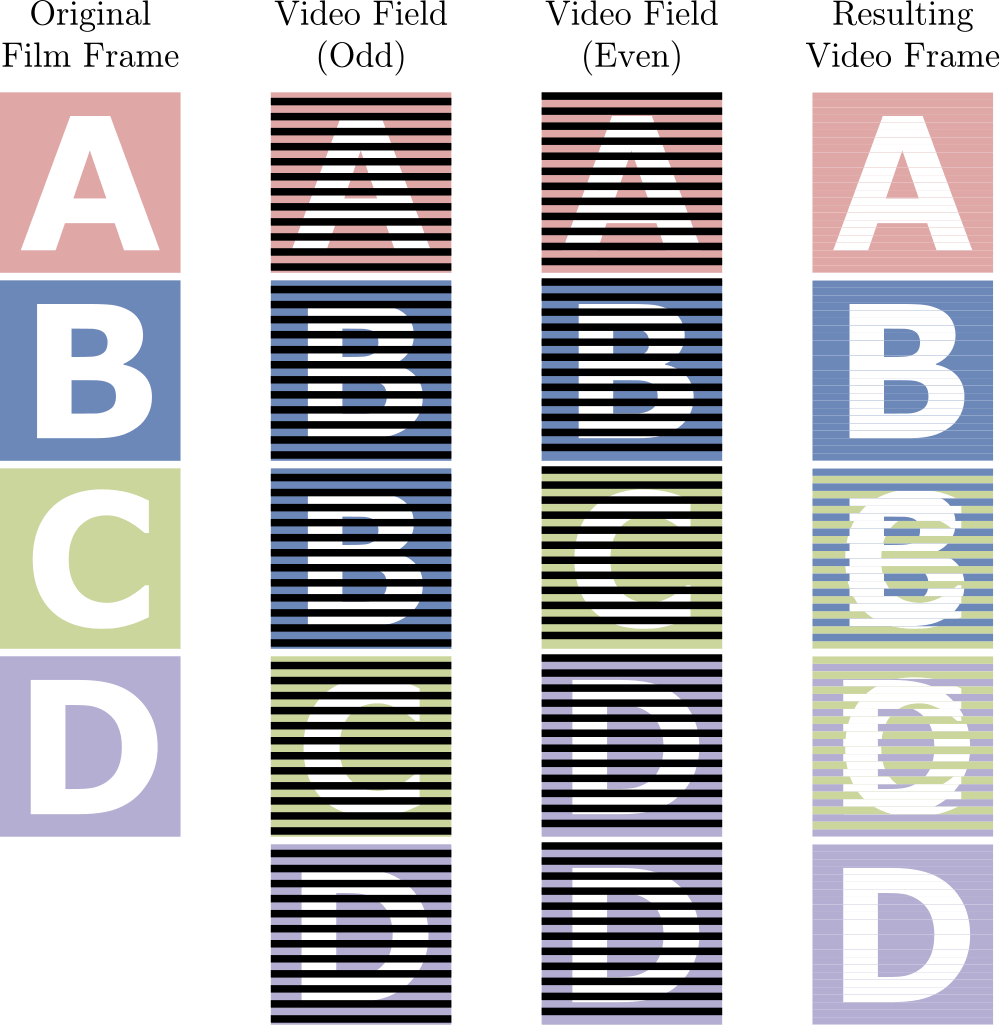

Instead, a process called 3:2 pulldown (among others) is used, which has become the most popular conversion method. It takes 4 original frames and converts them into 10 interlaced half-frames (fields), or 5 full frames. Here is an illustration of the process.

3:2 pulldown in action. From Wikipedia.

On an interlaced display (i.e., a CRT), the video fields in the middle are displayed one after another, each interlaced, so each consists of every second row of pixels. The original frame A is split into two half-frames, both of which are displayed on the screen. The next frame, B, is also split, but its odd field is displayed twice, so this frame is spread over three half-frames. In total, the 4 original full frames are spread over 10 half-frames.

This works quite well on an interlaced screen (such as a CRT TV) showing about 60 video fields per second (effectively half-frames), since the half-frames are never shown together. But such a signal looks terrible on displays that do not support half-frames and have to combine them into 30 full frames, as in the rightmost column of the illustration above. The reason is that every third and fourth frame is stitched together from two different frames of the original, producing what I call a Frankenframe. It looks especially bad during fast motion, when adjacent frames differ significantly.
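The field pattern described above can be reproduced with a short sketch. It labels fields only by their source frame and ignores which field is odd or even, so it is a simplification; `pulldown_32` is a made-up function name.

```python
def pulldown_32(frames: list[str]) -> list[tuple[str, str]]:
    """Spread film frames over fields in a 2, 3, 2, 3 pattern, then pair the fields."""
    fields: list[str] = []
    for i, frame in enumerate(frames):
        # Even-numbered frames contribute 2 fields, odd-numbered frames 3 fields.
        fields.extend([frame] * (2 if i % 2 == 0 else 3))
    # A progressive display weaves every two consecutive fields into one full frame.
    return [(fields[i], fields[i + 1]) for i in range(0, len(fields) - 1, 2)]


video_frames = pulldown_32(["A", "B", "C", "D"])
print(video_frames)
mixed = [pair for pair in video_frames if pair[0] != pair[1]]
print(mixed)  # the frames built from two different film frames
```

Of the five resulting frames, the third and fourth combine fields from two different film frames, which are the Frankenframes mentioned above.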

So pulldown looks elegant, but it is not a universal solution either. What then? Is there really no perfect option? As it turns out, there is one, and the solution is deceptively simple!

Instead of fighting a fixed refresh rate, it is of course much better to use a variable refresh rate that is always in sync with the frame rate. This is exactly what Nvidia G-Sync and AMD Freesync are for. G-Sync is a module built into monitors that lets them synchronize with the GPU's output instead of forcing the GPU to synchronize with the monitor, and Freesync achieves the same goal without a module. These are truly revolutionary technologies that eliminate the need for telecine, and all content with a variable frame rate, such as games and web animations, looks much smoother.

Unfortunately, both G-Sync and Freesync are relatively new technologies and are not yet widely available. So if you are a web developer making animations for websites or applications and cannot afford a full 60 FPS, the best thing to do is to cap the frame rate at a value that divides evenly into the refresh rate - in almost all cases, the best cap is 30 FPS.
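Which caps divide evenly can be listed with a short sketch. The function name `smooth_frame_rates` and the `minimum_fps` cut-off are assumptions made for this example.

```python
def smooth_frame_rates(refresh_hz: int, minimum_fps: int = 1) -> list[int]:
    """Frame rates at which every frame is held for the same whole number of refreshes."""
    return [
        refresh_hz // n
        for n in range(1, refresh_hz + 1)
        if refresh_hz % n == 0 and refresh_hz // n >= minimum_fps
    ]


# Candidate caps for a 60 Hz screen, ignoring anything below 15 FPS.
print(smooth_frame_rates(60, minimum_fps=15))
```

On a 60 Hz screen the first cap below the full rate is 30 FPS, which matches the recommendation above.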

So how do you strike a decent balance between all the desired effects - minimal motion blur, minimal flicker, a constant frame rate, good motion portrayal, and good compatibility with all displays - without putting too much load on the GPU and the display? Yes, extremely high frame rates can reduce motion blur, but at a high cost. The answer is clear, and after reading this article you should know it: 60 FPS.

Now that you're smarter, do your best to run all animated content at 60 frames per second.

Introduction

You may have heard the term frames per second (FPS), and that 60 FPS is a good benchmark for any animation. But most console games run at 30 FPS, and movies are usually shot at 24 FPS, so why should we aim for 60 FPS?

Frames ... per second?

Early filmmaking times

Filming of the 1950 Hollywood film “Julius Caesar” with Charlton Heston

When the first filmmakers began making films, many discoveries came not from the scientific method but from trial and error. The first cameras and projectors were cranked by hand, and film stock was very expensive - so expensive that filmmakers shot at the lowest frame rate they could get away with to save film. That threshold was usually somewhere between 16 and 24 FPS.

When sound (an audio track) was added to the physical film and played back in sync with the picture, hand-cranked playback became a problem. It turned out that people tolerate a variable frame rate for video but not for sound, where both tempo and pitch change, so filmmakers had to settle on a constant speed for both. They chose 24 FPS, and almost a hundred years later it remains the standard for cinema. (In television, the frame rate had to be adjusted slightly because of the way CRT televisions synchronize with the mains frequency.)

Frames and the human eye

But if 24 FPS is barely acceptable for a movie, what is the optimal frame rate? That is a trick question, because there is no optimal frame rate.

Perception of movement is the process of deriving the speed and direction of scene elements based on visual, vestibular and proprioceptive sensations. Although the process seems simple to most observers, it has proven to be a complex problem from a computational point of view and extremely difficult to explain in terms of neural processing. - Wikipedia

The eye is not a camera. It does not perceive motion as a series of frames; it perceives a continuous stream of information rather than a set of individual pictures. So why do frames work at all?

Two important phenomena explain why we see motion when we look at rapidly changing pictures: persistence of vision and the phi phenomenon (the stroboscopic illusion of continuous movement - translator's note).

Most filmmakers think that persistence of vision is the only reason, but this is not so. Persistence of vision, which has been observed but not scientifically proven, is the phenomenon by which an afterimage seems to remain on the retina for about 40 milliseconds. This explains why we do not see dark flicker in movie theaters or (usually) on a CRT.

The phi phenomenon in action. Did you notice movement in the picture, even though nothing in it actually moves?

On the other hand, many consider the phi phenomenon to be the true reason we see motion in a sequence of individual images. It is an optical illusion of continuous movement between separate objects that are shown in quick succession. But even the phi phenomenon is disputed, and scientists have not reached a consensus.

Our brain is very good at helping to fake motion - not perfectly, but well enough. A series of still frames simulating motion creates different perceptual artifacts in the brain, depending on the frame rate. So no frame rate will ever be optimal, but we can get close to the ideal.

Common frame rates, from bad to perfect

To get a better feel for the absolute scale of frame rate quality, I suggest looking at the overview table. But remember that the eye is a complex system and does not see individual frames, so this is not an exact science, just observations that various people have made over time.

| Frame rate | Human perception |
|---|---|
| 10-12 FPS | The absolute minimum for conveying motion. Anything lower is perceived by the eye as separate images. |
| <16 FPS | Visible stutter; for many people this frame rate causes headaches. |
| 24 FPS | The minimum tolerable frame rate for perceiving motion; cost-effective. |
| 30 FPS | Much better than 24 FPS, but not realistic. The standard for NTSC video, due to the AC mains frequency. |
| 48 FPS | Good, but not enough for true realism (although Thomas Edison thought otherwise). Also see this article. |
| 60 FPS | The sweet spot; most people will not perceive further quality improvements above 60 FPS. |
| ∞ FPS | To date, science has neither proven nor observed a theoretical limit of human perception. |

Demo: what does 24 FPS look like compared to 60 FPS?

60vs24fps.mp4

Thanks to my friend Mark Tonsing for making this fantastic comparison.

HFR: Rewiring the Brain with The Hobbit

The Hobbit was a popular movie shot at 48 FPS, double the usual frame rate, a format called HFR (high frame rate). Unfortunately, not everyone liked the new look. There were several reasons for this, the main one being the so-called “soap opera effect”.

Most people's brains are trained to perceive 24 full frames per second as a high-quality movie, while 50-60 half-frames per second (interlaced television signals) remind us of television and destroy the “film look”. A similar effect occurs if you turn on motion interpolation on your TV for 24p (progressive scan) material. Many people dislike it, even though modern algorithms are quite good at rendering smooth motion without artifacts, which are the main reason critics reject the feature.

Although HFR significantly improves the image (motion is less choppy and moving objects are less blurry), there is no easy answer to how to make viewers accept it. That requires retraining the brain. Some viewers stop noticing any problem after ten minutes of watching The Hobbit, but others cannot tolerate HFR at all.

Cameras and CGI: the story of motion blur

But if 24 FPS is a barely tolerable frame rate, why have you never complained about choppy video when leaving the cinema? It turns out that film cameras have a built-in feature - or a bug, if you like - that is missing in CGI (including CSS animations!): motion blur, that is, the blurring of a moving object.

Once you have seen motion blur, its absence in video games and software becomes painfully obvious.

Motion blur, as defined on Wikipedia, is

... the apparent streaking of fast-moving objects in a still image or a sequence of images, such as a movie or animation. It occurs when the image being recorded changes during the recording of a single frame, due to either rapid movement or long exposure.

In this case, a picture is worth a thousand words.

No motion blur

With motion blur

Images from Evans & Sutherland Computer Corporation, Salt Lake City, Utah. Used with permission. All rights reserved.

Motion blur uses a trick: it depicts a lot of movement in a single frame at the expense of detail. This is why a movie at 24 FPS looks relatively acceptable compared to a video game at 24 FPS.

But how does motion blur arise in the first place? According to a description by E&S, which was the first to use 60 FPS on its mega-dome screens:

When you shoot a film at 24 FPS, the camera sees and records only part of the motion in front of the lens, and the shutter closes after each exposure so that the film can advance to the next frame. This means that the shutter is closed for as long as it is open. With fast movement and action in front of the camera, the frame rate is not high enough to keep up, and the images are blurred in each frame (because of the exposure time).

Here is a graphic that simplifies the process.

Images by Hugo Elias. Used with permission.

Classic movie cameras use a rotary disc shutter (a rotating disc with an open sector) to capture motion blur. As the disc rotates, the shutter stays open for a controlled period of time determined by the angle of the opening, and changing this angle changes the exposure time. If the exposure time is short, less motion is recorded on the film, that is, motion blur is weaker; if the exposure time is longer, more movement is recorded and the effect is stronger.

Shutter in action. Via Wikipedia

If motion blur is such a useful thing, then why would filmmakers want to get rid of it? Well, when you add motion blur you lose detail, and when you get rid of it you lose smoothness of movement. So when directors want to shoot a scene with a lot of detail, such as an explosion with many flying particles or a complex action scene, they often choose a short exposure (a narrow shutter angle) that reduces blur and creates a crisp, stop-motion-like look.

Motion Blur capture visualization. Via Wikipedia

So why not just add it?

Motion blur significantly improves animation in games and on websites, even at low frame rates. Unfortunately, implementing it is too expensive. To create perfect motion blur, you would need to render four times as many frames of the moving object and then apply temporal filtering or anti-aliasing (here's a great explanation by Hugo Elias). If you need to render at 96 FPS to produce acceptable material at 24 FPS, you might as well just raise the frame rate, so this is often not an option for content that is rendered in real time. The exceptions are video games, where the trajectory of objects is known in advance so that an approximate motion blur can be calculated, declarative animation systems like CSS Animations and, of course, CGI movies like those from Pixar.
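To make the "four times as many frames" idea concrete, here is a minimal sketch of temporal supersampling. It assumes a hypothetical one-dimensional renderer (`render_sharp`) in which a single bright pixel moves one pixel per render tick; four sharp renders are averaged with a simple box filter into each output frame. The names, sizes and the choice of a box filter are illustrative assumptions, not taken from Hugo Elias's explanation.

```python
WIDTH = 16      # width of our toy one-dimensional "screen"
SUBFRAMES = 4   # sharp renders per output frame (96 renders/s for 24 FPS output)


def render_sharp(tick):
    """Hypothetical renderer: one bright pixel that moves 1 px per tick."""
    row = [0.0] * WIDTH
    row[tick % WIDTH] = 1.0
    return row


def render_blurred(frame_index):
    """Average SUBFRAMES sharp renders (a box filter) into one output frame."""
    acc = [0.0] * WIDTH
    for s in range(SUBFRAMES):
        sharp = render_sharp(frame_index * SUBFRAMES + s)
        for x, value in enumerate(sharp):
            acc[x] += value / SUBFRAMES
    return acc


frame0 = render_blurred(0)  # energy smeared over pixels 0..3
frame1 = render_blurred(1)  # energy smeared over pixels 4..7
print(frame0[:8])
print(frame1[:8])
```

Each output frame costs four renders, which is exactly why the approach is rarely affordable for real-time content.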

60 Hz != 60 FPS: refresh rate and why it matters

Note: hertz (Hz) is commonly used when talking about refresh rates, while frames per second (fps) is an established term for frame-by-frame animation. In order not to confuse them, we use Hz for the refresh rate and FPS for the frame rate.

If you have ever wondered why Blu-ray playback looks so poor on your laptop, the reason is often that the screen's refresh rate is not evenly divisible by the frame rate (DVDs, in contrast, are converted in advance). Yes, the refresh rate and the frame rate are not the same thing. According to Wikipedia, “[..] the refresh rate includes re-drawing identical frames, while the frame rate measures how often the original video material will display a full frame of new data on the display.” So the frame rate corresponds to the number of individual frames shown on the screen, and the refresh rate corresponds to the number of times the image on the screen is updated or redrawn.

Ideally, the refresh rate and frame rate are fully synchronized, but in certain situations there are reasons to use the refresh rate three times higher than the frame rate, depending on the projection system used.

Each display has a new problem.

Film projectors

Many people think that film projectors simply roll the film past a light source during projection. But in that case we would see a continuous blurry image. Instead, a shutter is used to separate the frames from each other, just as in movie cameras. After a frame is displayed, the shutter closes and no light passes until the shutter opens for the next frame, and the process repeats.

Movie projector shutter in action. From Wikipedia.

However, this is not the complete picture. Such a process would indeed show you the film, but the flicker caused by the screen staying dark 50% of the time would drive you crazy. These blackouts between frames would destroy the illusion. To compensate, projectors actually close the shutter two or three times on each frame.

Of course, this seems counterintuitive: why does adding more flicker make us perceive less of it? The point is to shorten each blackout period, which has a disproportionate effect on the visual system. The flicker fusion threshold (closely related to persistence of vision) describes the effect of these blackouts. At about 45 Hz, blackout periods must take up less than about 60% of the frame time, which is why the double-shutter method works for movies. Above 60 Hz, blackout periods can exceed 90% of the frame time (which displays like CRTs need). The whole concept is a bit more complicated, but in practice, here is how to avoid flicker:

- Use a different type of display, where there is no dimming between frames, that is, it constantly displays the frame on the screen.

- Apply permanent, unchanging dimming phases with a duration of less than 16 ms
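As a rough worked example of why the extra shutter closures help, the sketch below computes the flash rate and the length of each dark interval for a 24 FPS projector. It assumes that the screen stays dark 50% of the time in total (the figure given above) and that this dark time is split evenly across the flashes; the function name and the even split are illustrative assumptions.

```python
def flicker_stats(fps, flashes_per_frame, dark_fraction=0.5):
    """Return (flash rate in Hz, length of each dark interval in ms).

    Assumes the total dark time per frame is dark_fraction of the frame
    and is divided evenly between the flashes.
    """
    flash_rate = fps * flashes_per_frame
    dark_ms = 1000.0 * dark_fraction / flash_rate
    return flash_rate, dark_ms


for flashes in (1, 2, 3):
    rate, dark = flicker_stats(24, flashes)
    print(f"{flashes} flash(es) per frame: {rate} Hz, dark for {dark:.1f} ms at a time")
```

Under these assumptions, a single flash per frame leaves the screen dark for about 20.8 ms at a stretch, while two or three flashes bring each blackout down to about 10.4 ms and 6.9 ms, comfortably below the 16 ms mentioned in the list above.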

Flickering CRTs

CRT monitors and televisions work by firing electrons at a fluorescent screen coated with a low-persistence phosphor. How short is the persistence? So short that you never see the full image at once! Instead, as the electron beam scans the screen, the phosphor lights up and loses its brightness in less than 50 microseconds - that is 0.05 milliseconds! For comparison, a full frame on your smartphone is displayed for 16.67 ms.

Screen refresh captured at a shutter speed of 1/3000 second. From Wikipedia.

So the only reason CRTs work at all is persistence of vision. Because of the long dark intervals between illuminations, CRTs often appear to flicker - especially in the PAL system, which runs at 50 Hz, unlike NTSC, which runs at 60 Hz, where the flicker fusion threshold already kicks in.

To complicate matters further, the eye does not perceive flicker equally on every part of the screen. In fact, peripheral vision, although it sends a blurrier image to the brain, is more sensitive to brightness and has a significantly shorter response time. This was probably very useful in ancient times for spotting wild animals leaping at you from the side, but it is a nuisance when watching films on a CRT at close range or from an odd angle.

Blurry LCDs

Liquid crystal displays (LCDs), which are classified as sample-and-hold devices, are actually quite amazing because they have no blackouts between frames at all. The current image stays on the screen continuously until a new image arrives.

Let me repeat: there is no refresh-induced flicker on LCDs, regardless of the refresh rate.

But now you are thinking: “Wait a minute, I was recently shopping for a TV, and every manufacturer was advertising a higher refresh rate like crazy!” And although this is mostly pure marketing, LCDs with a higher refresh rate do solve a problem - just not the one you are thinking of.

Visual blur in motion

LCD manufacturers keep raising the refresh rate because of on-screen, or visual, motion blur. That's right: not only can a camera record motion blur, your eyes can too! Before explaining how this happens, here are two mind-blowing demos to help you experience the effect (click on the image). In the first experiment, focus your eyes on the stationary flying alien at the top and you will clearly see white lines. If you focus on the moving alien instead, the white lines magically disappear. From the Blur Busters website:

“Due to the movement of your eyes, the vertical lines are blurred into thicker lines each time the frame is updated, filling in the black gaps. Low-persistence displays (such as CRTs or LightBoost) eliminate this kind of motion blur, so this test looks different on such displays.”

In fact, the effect of the gaze tracking different objects can never be fully prevented, and it is often such a big problem in film and production that there are people whose only job is to predict what the viewer's eyes will track in a shot and to make sure that nothing else gets in the way.

In the second experiment, the Blur Busters team recreates the effect of a low-persistence screen on an LCD by simply inserting black frames between the displayed frames - and, surprisingly, it works.

As shown earlier, motion blur can be either a blessing or a curse: it sacrifices sharpness for smoothness, and the blur added by your eyes is always undesirable. So why is motion blur such a big problem for LCDs compared to CRTs, which have no such issues? Here is an explanation of what happens when a frame captured at a single instant stays on the screen longer than it should.

The following quote is from Dave Marsh's excellent MSDN article on temporal oversampling. It is surprisingly accurate and relevant for an article that is 15 years old:

When a pixel is addressed, it is loaded with a certain value and remains with this value of the light output until the next addressing. In terms of drawing an image, this is wrong. A specific instance of the original scene is valid only in a specific moment. After this moment, the scene objects should be moved to other places. It is incorrect to keep images of objects in fixed positions until the next sample arrives. Otherwise, it turns out that the object suddenly jumps to a completely different place.

And his conclusion:

Your gaze will try to smoothly follow the movements of the object of interest, and the display will keep it stationary for the whole frame. The result will inevitably be a blurred image of a moving object.

There you have it! It turns out that what we really need to do is flash the image onto the retina and then let the eye, together with the brain, interpolate the motion.
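A back-of-the-envelope way to put numbers on this is the rule of thumb that the smear seen by a tracking eye is roughly the object's speed multiplied by the time each frame stays lit. The sketch below is a simplified model and not taken from the quoted article: it assumes the eye tracks the object perfectly and that pixels switch instantly, and the speed of 960 pixels per second is an arbitrary example value.

```python
def perceived_blur_px(speed_px_per_s, visible_time_ms):
    """Approximate smear width for an eye that tracks a moving object.

    Simplified model: blur = speed * time the frame stays lit.
    """
    return speed_px_per_s * visible_time_ms / 1000.0


# Sample-and-hold LCD at 60 Hz: each frame is lit for the full 1/60 s.
hold_60hz = perceived_blur_px(960, 1000 / 60)

# Hypothetical low-persistence display that flashes each frame for 2 ms.
strobed = perceived_blur_px(960, 2)

print(f"sample-and-hold: {hold_60hz:.1f} px, strobed: {strobed:.2f} px")
```

In this model a 60 Hz sample-and-hold panel smears the object over about 16 pixels, while a 2 ms flash leaves about 2 pixels, which is consistent with the black-frame experiment described above.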

Aside: how much interpolation does our brain actually perform?

No one knows for sure, but there are definitely many situations where the brain helps construct the final image of what it is shown. Take, for example, this blind spot test: there is a blind spot where the optic nerve attaches to the retina. In theory, the spot should appear black, but in fact the brain fills it in with an image interpolated from the surroundings.

Frames and screen updates do not mix and do not match!

As mentioned earlier, problems arise when the frame rate and the refresh rate of the screen are not synchronized, that is, when the refresh rate is not evenly divisible by the frame rate.

Problem: Screen tearing

What happens when your game or application starts drawing a new frame to the screen while the display is in the middle of a refresh cycle? It literally tears the frame apart:

This is what happens behind the scenes. Your CPU/GPU performs the calculations needed to compose a frame and then passes it to a buffer, which has to wait for the monitor to trigger a refresh through the driver stack. The monitor then reads the frame and starts displaying it (this requires double buffering, so that one image is always being displayed while another is being composed). Tearing occurs when the buffer that is currently being drawn to the screen from top to bottom is swapped for the next frame produced by the graphics card. As a result, the upper part of your screen comes from one frame and the lower part from another.
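The mechanism can be illustrated with a toy model of scan-out. The sketch below treats a frame as a list of scanlines and lets the buffers be swapped while the display is partway down the screen; `scan_out` and its parameters are hypothetical names for illustration, not a real graphics API.

```python
def scan_out(front, back, swap_at_line=None):
    """Read a frame line by line from top to bottom.

    If swap_at_line is given, the buffers are swapped when the scan reaches
    that line, without waiting for the refresh to finish.
    """
    shown = []
    for line in range(len(front)):
        if swap_at_line is not None and line == swap_at_line:
            front, back = back, front  # mid-refresh swap causes the tear
        shown.append(front[line])
    return shown


frame_a = ["A"] * 6  # six scanlines of the old frame
frame_b = ["B"] * 6  # six scanlines of the new frame

torn = scan_out(frame_a, frame_b, swap_at_line=4)
clean = scan_out(frame_a, frame_b)
print(torn)   # top of the screen from frame A, bottom from frame B
print(clean)  # the whole screen from frame A
```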

Note: to be precise, screen tearing can occur even when the refresh rate and the frame rate are the same! They have to match in both phase and frequency.

Screen tearing in action. From Wikipedia.

This is clearly not what we need. Fortunately, there is a solution!

Solution: Vsync

Screen tearing can be fixed with Vsync, short for “vertical sync”. This is a hardware or software feature that ensures that tearing does not occur: your software can draw a new frame only once the previous screen refresh has completed. Vsync throttles the frame rate of the buffering process described above so that the image never changes in the middle of the screen.

Therefore, if a new frame is not yet ready for rendering on the next screen update, the screen will simply take the previous frame and redraw it. Unfortunately, this leads to the following problem.

New issue: jitter

Although our frames are no longer torn, playback is still far from smooth. This time the culprit is a problem so serious that every industry has its own name for it: judder, jitter, stutter, jank or hitching. Let's settle on the term jitter.

Jitter occurs when an animation is played back at a frame rate different from the one at which it was shot (or intended to be played). Often this means that jitter appears when the playback rate is unstable or variable rather than fixed (since most content is recorded at a fixed rate). Unfortunately, this is exactly what happens when you try to display, for example, 24 FPS content on a screen that refreshes 60 times per second. Since 60 is not evenly divisible by 24, every so often a frame has to stay on screen for one refresh longer than its neighbor (unless you use more advanced conversions), which ruins smooth effects such as camera pans.
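The uneven cadence is easy to see by counting how many screen refreshes each source frame occupies. The sketch below assumes a simple model in which every refresh shows the most recent source frame whose start time has already passed; the function name is made up for illustration.

```python
def refreshes_per_frame(content_fps, refresh_hz, frames):
    """Count on how many refreshes each of the first `frames` source frames is shown."""
    counts = [0] * frames
    total_refreshes = frames * refresh_hz // content_fps
    for tick in range(total_refreshes):
        # Integer arithmetic avoids rounding errors: this is the index of the
        # newest source frame that has started by the time of this refresh.
        frame = tick * content_fps // refresh_hz
        counts[frame] += 1
    return counts


print(refreshes_per_frame(24, 60, 4))  # uneven: frames alternate between 3 and 2 refreshes
print(refreshes_per_frame(30, 60, 4))  # even: every frame gets 2 refreshes
```

At 60 Hz, three refreshes last 50 ms and two last about 33 ms, so 24 FPS content alternates between these two durations instead of the intended 41.7 ms per frame, whereas 30 FPS content is shown for a steady 33 ms per frame.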

In games and on websites with a lot of animation, this is even more noticeable. Many of them cannot sustain a constant frame rate that divides evenly into the refresh rate. Instead, the frame rate fluctuates heavily for various reasons, such as graphics layers that animate independently, the processing of user input, and so on. It may shock you, but an animation capped at 30 FPS looks much, much better than the same animation running at a rate that varies between 40 and 50 FPS.

You don't have to take my word for it; see for yourself. Here is a striking demonstration of microstutter.

Fighting Jitter

When converting: telecine

Telecine is a method of converting film footage into a video signal. Expensive professional converters, such as those used in television, mostly perform this operation through a process called motion vector steering. It can create very convincing new frames to fill the gaps. Two other methods, however, are still in wide use.

Speed-up

When converting 24 FPS to a PAL signal at 25 FPS (for example, TV or video in the UK), it is common practice to simply speed up the original video from 24 to 25 FPS, which shortens the running time by 1/25. So if you have ever wondered why Ghostbusters runs a couple of minutes shorter in Europe, here is the answer. Although the method works surprisingly well for video, it has a terrible effect on the sound. How much worse can sound sped up by that much be without additional pitch correction, you may ask? Almost half a tone worse.
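The numbers behind the speed-up are easy to check. The sketch below computes the shortened running time and the resulting pitch shift in semitones (half tones) for a 24 to 25 FPS conversion without pitch correction; the 100-minute running time is an arbitrary example value, and the function name is made up for illustration.

```python
import math


def pal_speedup(runtime_min, src_fps=24, dst_fps=25):
    """Return (new running time in minutes, pitch shift in semitones)."""
    ratio = dst_fps / src_fps                # playback speed factor, 25/24
    new_runtime = runtime_min / ratio        # the film gets shorter by 1/25
    pitch_semitones = 12 * math.log2(ratio)  # 12 semitones per octave
    return new_runtime, pitch_semitones


new_runtime, pitch = pal_speedup(100)
print(f"A 100-minute film runs {new_runtime:.1f} minutes")
print(f"Uncorrected audio is {pitch:.2f} semitones sharp")
```

The pitch shift comes out at roughly 0.7 of a semitone, which matches the "almost half a tone" described above.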

Take a real-world example of a major failure. When Warner released the extended Lord of the Rings Blu-ray collection in Germany, they used the already adjusted PAL version of the soundtrack for the German dub, which had previously been sped up by 1/25 and then pitched down to correct the change. But since Blu-ray runs at 24 FPS, they had to convert it back, so they slowed the soundtrack down again. Such a double conversion is a bad idea from the start because it is lossy, but even worse, after slowing the soundtrack down to match the Blu-ray frame rate, they forgot to shift the pitch back up, so all the actors in the film suddenly sounded overly gloomy, speaking half a tone lower. Yes, this is a true story and yes, it really upset the fans; there were a lot of tears.

The moral of the story: changing speed is not a good idea.

Pulldown

Converting film footage for NTSC, the American television standard, does not work with a simple speed-up, because going from 24 FPS to 29.97 FPS would mean speeding up by 24.875%. Unless you truly love chipmunks, this is not the best option.

Instead, a process called 3:2 pulldown (among others) is used, which has become the most popular conversion method. It takes 4 original frames and converts them into 10 interlaced fields (half-frames), or 5 full frames. Here is an illustration of the process.

3:2 pulldown in action. From Wikipedia.

On an interlaced display (i.e., a CRT), the video fields in the middle column are shown one after another, each interlaced, so each consists of every second row of pixels. The original frame A is split into two fields, both of which are displayed on the screen. The next frame B is also split, but its odd field is displayed twice, so this frame is spread over three fields. In total, we get 10 fields from 4 original full frames.

This works quite well on an interlaced screen (such as a CRT TV) at about 60 fields per second, since the fields are never shown together. But such a signal looks terrible on displays that do not support fields and have to weave them into 30 full frames, as in the rightmost column of the illustration above. The reason is that the third and fourth frame of every five are stitched together from two different frames of the original, which results in what I call a Frankenframe. It looks especially terrible during fast motion, when adjacent frames differ significantly.
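The cadence described above can be reproduced in a few lines. The sketch below is a simplified model that labels frames with letters, spreads them over fields in an alternating 2, 3, 2, 3 pattern and then naively weaves consecutive field pairs into full frames, as a progressive display would. The function names are made up for illustration, and real telecine hardware and deinterlacers are considerably more sophisticated.

```python
def pulldown_fields(frames):
    """Spread film frames over interlaced fields in a 2, 3, 2, 3 cadence."""
    fields = []
    parity = 0  # 0 = top field, 1 = bottom field; alternates on every field
    for i, frame in enumerate(frames):
        repeats = 2 if i % 2 == 0 else 3
        for _ in range(repeats):
            fields.append((frame, "top" if parity == 0 else "bottom"))
            parity ^= 1
    return fields


def weave(fields):
    """Pair consecutive fields into full frames, labeled by their source frames."""
    return [fields[i][0] + fields[i + 1][0] for i in range(0, len(fields) - 1, 2)]


fields = pulldown_fields("ABCD")
woven = weave(fields)
print(len(fields))  # 10 fields from 4 film frames
print(woven)        # the third and fourth woven frames mix two source frames
```

In the output, "BC" and "CD" are the Frankenframes: each is built from fields of two different original frames.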

So pulldown looks elegant, but it is not a universal solution either. What then? Is there really no perfect option? As it turns out, there is one, and the solution is deceptively simple!

On the display: G-Sync, FreeSync and frame rate capping

Instead of struggling with a fixed refresh rate, it is of course much better to use a variable refresh rate that is always in sync with the frame rate. This is exactly what Nvidia G-Sync and AMD FreeSync are for. G-Sync is a module built into monitors that allows them to synchronize with the GPU output instead of forcing the GPU to synchronize with the monitor, and FreeSync achieves the same goal without a module. These are truly revolutionary technologies that eliminate the need for telecine and make all variable frame rate content, such as games and web animations, look much smoother.

Unfortunately, both G-Sync and FreeSync are relatively new technologies that are not yet widespread, so if you, as a web developer, build animations for websites or applications and cannot afford a full 60 FPS, the best thing to do is to cap the maximum frame rate so that it divides evenly into the refresh rate - in almost all cases, the best cap is 30 FPS.
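To see which caps divide evenly into a given refresh rate, the following sketch lists the candidates. The function name and the lower bound of 15 FPS are arbitrary choices for illustration.

```python
def smooth_frame_rates(refresh_hz, minimum_fps=15):
    """List frame rate caps that divide evenly into the refresh rate."""
    return [
        refresh_hz // n
        for n in range(1, refresh_hz + 1)
        if refresh_hz % n == 0 and refresh_hz // n >= minimum_fps
    ]


caps_60hz = smooth_frame_rates(60)
print(caps_60hz)  # each cap shows every frame for a whole number of refreshes
```

On a 60 Hz screen the first candidate below 60 is 30 FPS, which is why it is the usual fallback, while 24 FPS is not on the list.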

Conclusion and next steps

So how do we strike a decent balance between all the desired effects - minimal motion blur, minimal flicker, a constant frame rate, good motion portrayal and good compatibility with all displays - without putting too much burden on the GPU and the display? Yes, extremely high frame rates can reduce motion blur further, but at great cost. The answer is clear, and after reading this article you should know it: 60 FPS.

Now that you're smarter, do your best to run all animated content at 60 frames per second.

a) If you are a web developer

Head over to jankfree.org, where Chrome developers collect the best resources on making all of your apps and animations silky smooth. If you only have time for one article, make it Paul Lewis's excellent The Runtime Performance Checklist.

b) If you are an Android developer

Check out our “Best Practices for Performance” in the official Android Training section, where we have compiled a list of the most important factors, bottlenecks and optimization tricks for you.

c) If you work in the film industry

Record all content at 60 FPS or, even better, at 120 FPS, so that you can scale it down to 60 FPS, 30 FPS and 24 FPS if needed (unfortunately, to also support 50 FPS and 25 FPS (PAL) you would have to raise the frame rate to 600 FPS, the least common multiple of all these rates). Play all content back at 60 FPS and do not apologize for the “soap opera effect”. This revolution will take time, but it will happen.

d) For everyone else

Demand 60 FPS for any moving pictures on the screen, and if anyone asks why, point them to this article.