AWS Cost Optimization in SaaS Business

- Transfer

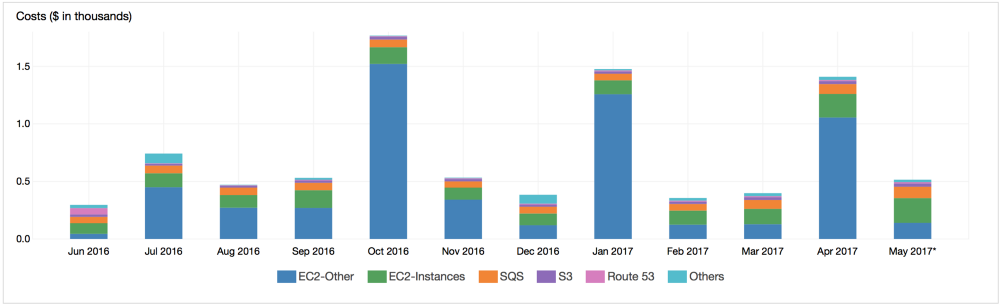

Cronitor’s AWS expenses over the past 12 months

In the first 30 days after Cronitor’s transfer to AWS in January 2015, we collected $ 535 payments and paid $ 64.47 for hosting, data transfer and domain name. Since then, we have increased the consumption of services, upgraded instances, added services. Despite AWS's reputation as an expensive pistol to shoot in the foot, our accounts remained at 12.5% of revenue. See for yourself.

Bruises and bumps from cheap AWS

It soon became clear that our idea had some promise. We realized that we needed to raise the bar from a side project to a full-fledged small business. The goal was not high availability, but only increased availability compared to the previous configuration on a single 2 GB Linode. We really only wanted to restart the database without losing incoming telemetry. Nothing special. The initial installation was quite simple:

- ELB

- SQS

- A pair of t2.small instances with web application and data collection, both on us-west-2

- One m3.medium instance, where MySQL worked and our daemon, which detected failures and sent alerts

We completed the migration in two hours with virtually no downtime and were truly pleased with ourselves. Poured beer. Congratulatory tweets set off.

The joy was short-lived.

Problem 1: ELB Fails

Our users send telemetry pings when tasks, processes and demons work for them. With NTP, today the average server has very accurate hours, and we see traffic spikes at the beginning of every second, minute, hour and day - up to a 100-fold excess of our basic traffic.

Immediately after the migration, users began to complain about periodic timeouts, so we decided to double-check the server configuration and the history of the ELB logs. With traffic jumps so far less than 100 requests per second, we abandoned the idea that the problem is in the ELB, and began to look for errors in our own configuration. In the end, we ran a test to continuously ping the service. It started a few moments before 00:00 UTC and ended moments after midnight - and saw unsuccessful requests that do not fall into the ELB logs at all. Separate instances were available, and a request queue was not created. It became clear that the connections were broken at the load balancer level, probably because our traffic jumps were too large and too short for it to warm up to a larger load.

Lesson learned:

- Cloud based solutions such as resilient load balancers are generally designed for normal, mid-sized use. Think about how you differ from the average.

Problem 2: getting to know the balance of CPU loans

The line of T2 instances with support for bursts is economically viable if the load occasionally increases, according to the official website. But I would like it to be written there: if you run your instance at a constant load of 25% CPU, then your balance of CPU credits starts to deplete, and when it ends, you essentially have the processing power of the Rasperry Pi. You will not receive any warnings about this, and your CPU% will not reflect reduced power. For the first time, having exhausted the balance of loans, we decided that the connections were broken for some other reason.

Lessons learned:

- If something is offered very cheaply, there is a good reason for this, you need to understand this

- T2 instances should be used only in autoscale group

- Just in case, just create a CloudWatch notification that will warn you in case the credit balance drops below 100

Problem 3: what is written in small print

At a re: Invent conference last year, Amazon updated its Reserved Instance offering, likely in response to more favorable terms from Google Cloud. The press release said that reserved instances would be cheaper and could be transferred between zones. This is great!

When the time came in October to close our last T2 instances, we rolled out the new M3 with these cheaper and more flexible 12-month reservations. After the appearance of several large clients in April, we decided to raise the level of instances again, now to M4.large. Six months were left from the October reservation, so I decided to sell them, as I always did before. And then I learned the bitter truth that the price for these cheaper and more flexible reservations was that ... you cannot resell them.

Lessons learned:

- If something is offered very cheaply, there is a good reason for this, you need to understand this

- Always read the terms of the offer twice. AWS billing is incredibly complex.

A Look at Real AWS Prices

Today, our infrastructure remains quite simple:

- M4.large cluster collects incoming telemetry

- M3.medium Cluster Serves Web Application and API

- Worker M4.large with our monitoring daemon and alert system

- M4.xlarge for MySQL and Redis

We continue to use a number of managed services, including SQS, S3, Route53, Lambda, and SNS.

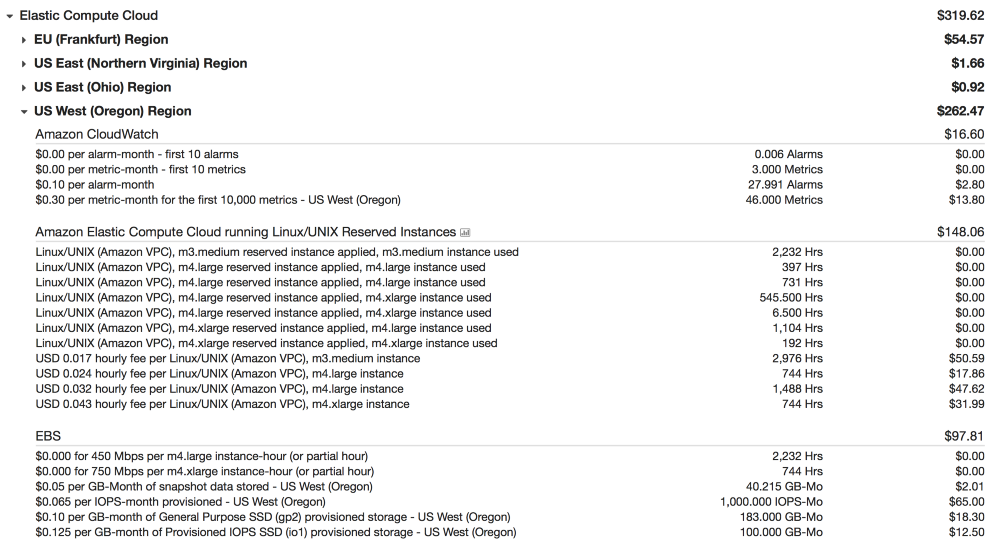

Elastic compute

We use partial advance bookings for all of our services.

You may notice that 2/3 of our monthly bills are spent on secured IOPS (input / output operations per second), as we do for instances. Unlike guaranteed IOPS in most cloud metrics, which are just a record in the contract, this is a real service that has a cost. They will also make up a substantial part of your EC2 budget for any host where disk performance matters. If you do not pay for IOPS, your tasks will queue and wait for the release of resources.

Please do not ask what “alarm-month” means.

SQS

We use SQS extensively to queue incoming telemetry pings and results from the system status check service. A few months after the migration, we made one optimization - max-batching readings. You pay for the number of requests, not the number of messages, so this reduces costs and significantly speeds up message processing.

During the migration, we were worried that SQS was the only point of failure for our data collection pipeline. To avoid risk, we launched small daemons on each host to buffer and retry SQS write in the event of a failure. Message buffering was required only once in 2.5 years, so 1) it was 100% worth running; 2) SQS has proven incredible reliability in the us-west-2 region.

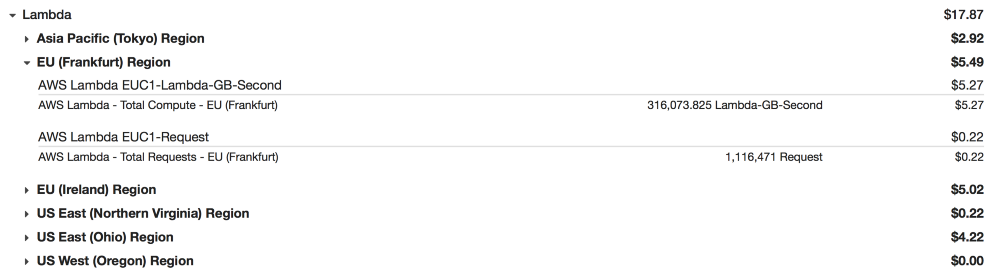

Lambda

Our Healthchecks service is built in part on Lambda Workers in the regions listed below. It should be noted that Lambda has a generous free level (Free Tier), which applies to each region separately. Currently, the free tier is touted as "indefinite . "

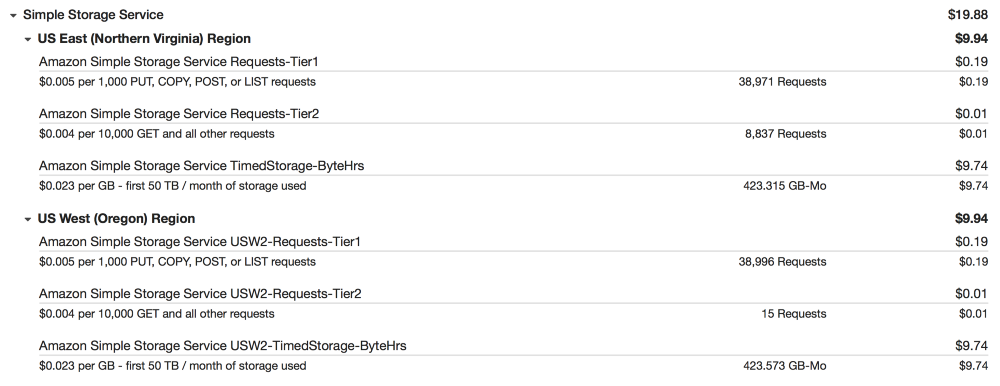

S3

We make backup copies of database snapshots and logs on S3 with replication in us-east-1 for recovery in the event of a disaster.

Professional advice for AWS is that EBS backups and snapshots are vital in the event of a disaster recovery, but truly serious disruptions in a region are usually not limited to a single service. If you cannot start the instance, then most likely you will not be able to copy your files to another region. So do it well in advance!

In conclusion

Having worked with large corporate and investment projects on AWS, I can personally guarantee that you can start a big business here. They created a fabulous set of tools and brilliant objects that are all taken together - and they take money from you for everything you touch . This is dangerous, you need to be restrained and limit your appetites, but you will be rewarded with the opportunity to grow a small business into something more, when any resources are available to you by order, as soon as needed. Sometimes it's good to stop for a second and think about how healthy it is - and then return to work again.