Internal TCP mechanisms that affect download speed: Part 1


You cannot speed up a process without a detailed understanding of how it works internally. Likewise, you cannot speed up the Internet without understanding (and properly configuring) its fundamental protocols, IP and TCP. Let's look at the protocol features that affect Internet speed.

IP (Internet Protocol) provides host-to-host routing and addressing. TCP (Transmission Control Protocol) provides the abstraction of a reliable network running over an inherently unreliable channel.

The TCP/IP protocols were proposed by Vint Cerf and Bob Kahn in a paper titled “A Protocol for Packet Network Intercommunication,” published in 1974. The original proposal, registered as RFC 675, was revised several times, and in 1981 version 4 of the TCP/IP specification was published as two separate RFCs:

- RFC 791 - Internet Protocol

- RFC 793 - Transmission Control Protocol

Since then, several improvements have been made to TCP, but its core remains the same. TCP quickly displaced other protocols, and it now underpins the main components of the Internet as we know it: websites, email, file transfer, and more.

TCP provides the necessary abstraction of network connections so that applications do not have to solve the related tasks themselves, such as retransmitting lost data, delivering data in order, and preserving data integrity. When you work with a TCP stream, you know that the bytes received will be identical to the bytes sent and that they will arrive in the same order. In other words, TCP is tuned for correct delivery rather than for speed. This creates a number of problems when it comes to optimizing site performance.

The HTTP standard does not require TCP as the transport protocol. If we wanted to, we could carry HTTP over a datagram socket (UDP, User Datagram Protocol) or over any other transport. In practice, however, all HTTP traffic is carried over TCP because of its convenience.

To optimize sites, therefore, you need to understand some of TCP's internal mechanisms. You will most likely not work with TCP sockets directly in your application, but some of your application design decisions will determine the performance of the TCP connections your application runs over.

Three-way handshake

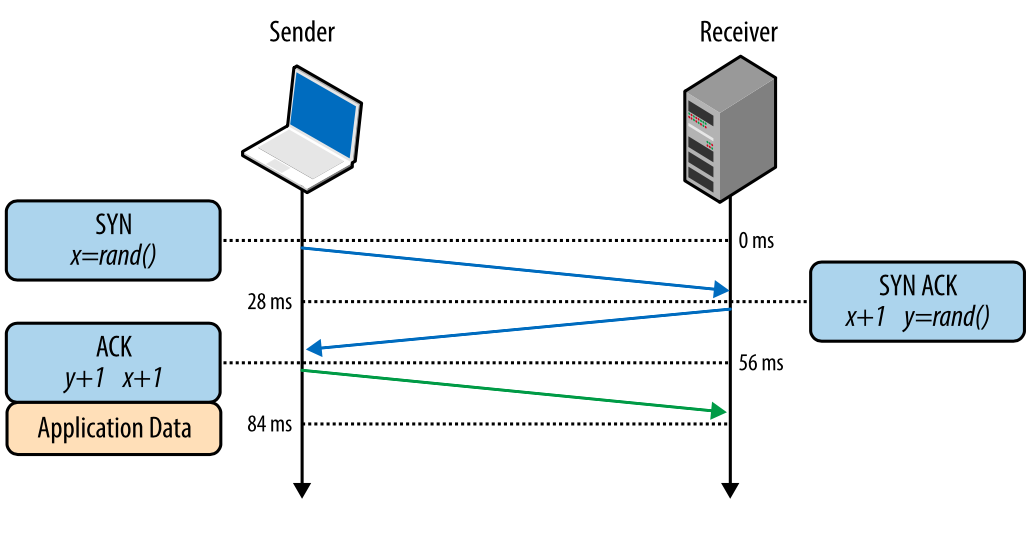

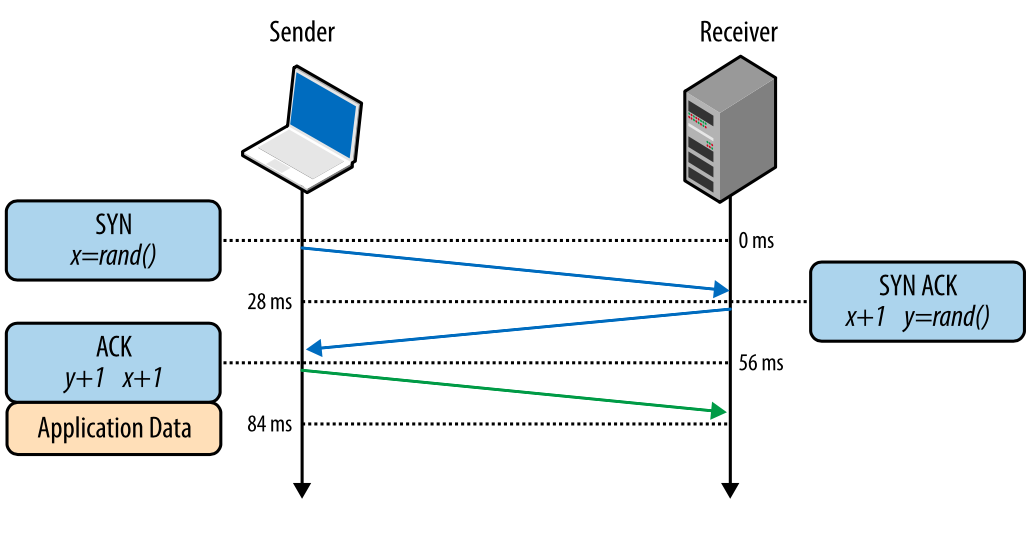

All TCP connections begin with a three-way handshake (Figure 1). Before the client and server can exchange any application data, they must agree on the initial packet sequence numbers, as well as on a number of other variables associated with the connection. For security, each side picks its sequence number at random.

SYN

The client selects a random number X and sends a SYN packet, which may also contain additional TCP flags and option values.

SYN ACK

The server selects its own random number Y, adds 1 to the value of X, adds its flags and options, and sends a response.

ACK

The client adds 1 to the values of X and Y and completes the handshake by sending an ACK packet.

Fig. 1. Three-way handshake.
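The three steps above can be sketched as a small simulation. This is only an illustration of the sequence-number arithmetic, not real packet handling: the dictionary layout and the `three_way_handshake` name are assumptions made for this example.

```python
import random

def three_way_handshake(rng=None):
    """Simulate the sequence/acknowledgment numbers of a TCP handshake."""
    if rng is None:
        rng = random.Random()
    x = rng.randrange(2**32)   # client's random initial sequence number X
    y = rng.randrange(2**32)   # server's random initial sequence number Y

    # Step 1: the client sends SYN carrying X.
    syn = {"flags": "SYN", "seq": x}
    # Step 2: the server replies with its own Y and acknowledges X + 1.
    syn_ack = {"flags": "SYN-ACK", "seq": y, "ack": (x + 1) % 2**32}
    # Step 3: the client acknowledges Y + 1; its own sequence number is now X + 1.
    ack = {"flags": "ACK", "seq": (x + 1) % 2**32, "ack": (y + 1) % 2**32}
    return syn, syn_ack, ack

if __name__ == "__main__":
    for segment in three_way_handshake():
        print(segment)
```

Sequence numbers are 32-bit values, which is why the sketch wraps the increments modulo 2^32.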

Once the handshake is complete, data exchange can begin. The client can send a data packet immediately after its ACK packet, whereas the server must wait for the ACK before it starts sending data. This process takes place for every TCP connection and poses a serious challenge for site performance, since every new connection incurs a full round trip of network delay before any application data is transferred.

For example, if the client is in New York and the server is in London, opening a new TCP connection takes 56 milliseconds: 28 milliseconds for a packet to travel in one direction and the same again for the reply to return to New York. Bandwidth plays no role here. Creating TCP connections is “expensive,” so reusing connections is an important optimization for any TCP application.
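To make the cost of repeated handshakes concrete, here is a rough back-of-the-envelope sketch. The 28 ms one-way delay comes from the example above; the workload of 20 sequential requests is a hypothetical figure chosen only for illustration.

```python
def handshake_cost_ms(rtt_ms, connections):
    """Time spent purely on handshakes: one round trip per new connection."""
    return rtt_ms * connections

ONE_WAY_MS = 28              # New York to London, from the example above
RTT_MS = 2 * ONE_WAY_MS      # 56 ms round trip

# Hypothetical workload: 20 requests to the same server, issued sequentially.
REQUESTS = 20
new_connection_each_time = handshake_cost_ms(RTT_MS, REQUESTS)
one_reused_connection = handshake_cost_ms(RTT_MS, 1)

print(new_connection_each_time)  # 1120
print(one_reused_connection)     # 56
```

Under these assumptions, opening a fresh connection for every request spends more than a second on handshakes alone, while a single reused connection pays the 56 ms only once.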

TCP Fast Open (TFO)

Loading a page can mean downloading hundreds of its components from different hosts. This may require the browser to open dozens of new TCP connections, each of which adds a delay for its handshake. Needless to say, this can slow down page loading, especially for mobile users.

TCP Fast Open (TFO) is a mechanism that reduces latency by allowing data to be sent inside the SYN packet. It has its limitations, however, in particular on the maximum size of the data inside the SYN packet. In addition, only some types of HTTP requests can use TFO, and it only works for repeat connections because it relies on a cookie.

Using TFO requires explicit support on the client, on the server, and in the application. It works with a server running Linux kernel 3.7 or later and a compatible client (Linux, iOS 9 and later, OS X 10.11 and later), and the application must also enable the corresponding socket flags.

Google engineers found that TFO can reduce the network latency of HTTP requests by 15% and speed up page loading by 10% on average, and in some cases by up to 40%.
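As an illustration of what "enabling the corresponding socket flags" might look like on the server side, here is a minimal sketch. It assumes a Linux host whose Python build exposes `socket.TCP_FASTOPEN`; the helper name `enable_tfo_listener` and the queue length of 16 are hypothetical choices for this example. The kernel setting `net.ipv4.tcp_fastopen` must also permit server-side TFO, which this sketch does not check.

```python
import socket

def enable_tfo_listener(sock, queue_len=16):
    """Try to enable TCP Fast Open on a socket that will listen.

    Returns True if the option was set, or False if this platform or
    Python build does not expose or accept TCP_FASTOPEN.
    """
    opt = getattr(socket, "TCP_FASTOPEN", None)
    if opt is None:
        return False          # constant not available on this platform
    try:
        # queue_len caps the number of pending TFO requests.
        sock.setsockopt(socket.IPPROTO_TCP, opt, queue_len)
    except OSError:
        return False          # the kernel rejected the option
    return True

if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        print("TFO enabled:", enable_tfo_listener(srv))
```

On Linux, the client side typically sends its first data with `sendto()` and the `MSG_FASTOPEN` flag instead of calling `connect()` first. That part is omitted here.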

Congestion control

In early 1984, John Nagle described a network condition that he called “congestion collapse,” which can arise on any network where the bandwidth between nodes is not uniform.

When the round-trip delay (the time a packet takes to travel there and back) exceeds the maximum retransmission interval, hosts start sending copies of the same datagrams into the network. This fills the buffers and causes packets to be dropped. As a result, hosts send each packet several times, and only after several attempts do the packets reach their destination. This is called “congestion collapse.”

Nagle showed that congestion collapse was not a problem for the ARPANET at the time, because its nodes had uniform bandwidth and the backbone (the high-speed trunk) had substantial excess capacity. On the modern Internet this is no longer the case. As early as 1986, when the number of nodes on the network exceeded 5,000, a series of congestion collapses occurred. In some cases network throughput dropped by a factor of 1,000, which made the network effectively unusable.

To deal with this problem, several mechanisms were introduced in TCP: flow control, congestion control, and congestion avoidance. They determine the rate at which data can be sent in both directions.

Flow control

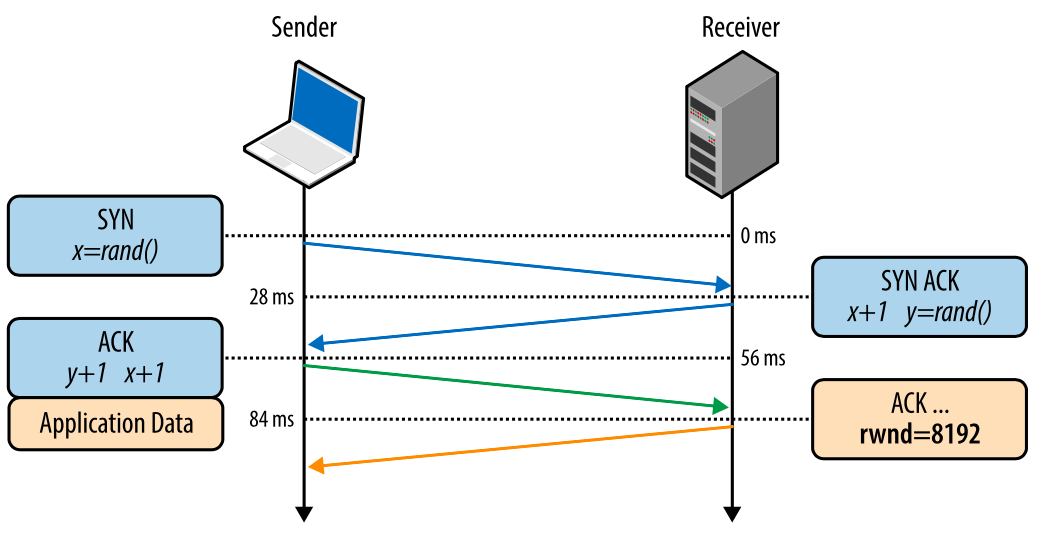

Flow control prevents the sender from sending more data than the receiver can process. To that end, each side of the TCP connection advertises the size of its available buffer space for incoming data. This parameter is the receive window (rwnd).

When a connection is established, both sides set their rwnd values based on their system defaults. Opening a typical web page means sending a large amount of data from the server to the client, so the client's receive window is the main limiting factor. However, if the client sends a lot of data to the server, for example when uploading a video, the server's receive window becomes the limiting factor.

If for some reason one side cannot keep up with the incoming data stream, it should advertise a smaller receive window. A receive window of 0 tells the sender to stop sending data until the application has cleared the receiver's buffer. This sequence repeats throughout the lifetime of every TCP connection: each ACK packet carries the latest rwnd value for each side, allowing both sides to dynamically adjust the data flow rate to the capacity and processing speed of the sender and receiver.
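The interplay between the receiver's buffer and the advertised window can be sketched with a toy simulation. It models flow control only, in discrete rounds, and ignores congestion control, packet sizes, and timing. The function name and the round-based model are assumptions made for this example.

```python
def simulate_flow_control(total_bytes, buffer_size, app_reads):
    """Simulate a sender limited only by the receiver's advertised window.

    app_reads[i] is how many bytes the receiving application drains from
    its buffer at the end of round i. Returns the rwnd advertised at the
    start of each round.
    """
    buffered = 0      # bytes waiting in the receiver's buffer
    sent = 0
    advertised = []
    for drained in app_reads:
        rwnd = buffer_size - buffered            # free space reported in the ACK
        advertised.append(rwnd)
        chunk = min(rwnd, total_bytes - sent)    # the sender never exceeds rwnd
        sent += chunk
        buffered += chunk
        buffered -= min(drained, buffered)       # the application reads some data
        if sent == total_bytes:
            break
    return advertised

# The application reads nothing in the first two rounds, so the window drops to 0.
print(simulate_flow_control(10_000, 4_000, [0, 0, 4_000, 4_000, 4_000]))
# [4000, 0, 0, 4000, 4000]
```

The window reopens only after the application drains its buffer, which is exactly when the sender is allowed to resume.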

Fig. 2. Passing the value of the receive window.

Window Scaling (RFC 1323)

The original TCP specification allocated 16 bits to the advertised receive window size. This put a hard upper limit on it, since the receive window could not exceed 2^16 - 1, or 65,535 bytes. This often turned out to be insufficient for optimal performance, especially in networks with a large bandwidth-delay product (BDP).
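A quick calculation shows why 65,535 bytes can be too little. The sketch below assumes that at most one full window can be in flight per round trip, and it reuses the 56 ms New York to London round trip from the earlier example. The 100 Mbit/s link is a hypothetical figure.

```python
def max_throughput_bps(window_bytes, rtt_seconds):
    """Upper bound on throughput when one window is in flight per round trip."""
    return window_bytes * 8 / rtt_seconds

def bdp_bytes(bandwidth_bps, rtt_seconds):
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return bandwidth_bps * rtt_seconds / 8

MAX_UNSCALED_WINDOW = 65_535   # largest value of the 16-bit window field
RTT = 0.056                    # 56 ms round trip

# Throughput ceiling with an unscaled window, in Mbit/s.
print(round(max_throughput_bps(MAX_UNSCALED_WINDOW, RTT) / 1e6, 2))

# Window needed to saturate a hypothetical 100 Mbit/s link, in bytes.
print(bdp_bytes(100e6, RTT))
```

With these numbers the unscaled window caps a single connection at roughly 9.4 Mbit/s, whereas filling the 100 Mbit/s link would need about 700 KB in flight.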

To solve this problem, RFC 1323 introduced the TCP window scaling option, which raised the maximum receive window size from 65,535 bytes to 1 gigabyte. The window scaling parameter is exchanged during the three-way handshake and specifies the number of bits by which the 16-bit receive window value in subsequent ACK packets is shifted to the left.

Today, receive window scaling is enabled by default on all major platforms. However, intermediate nodes, routers, and firewalls can rewrite or even strip this option. If your connection cannot make full use of the available bandwidth, start by checking the receive window values. On Linux, the window scaling option can be checked and set like this:
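The left shift described above is simple enough to show directly. In this sketch the `effective_window` helper is a hypothetical name. The maximum shift of 14 is the limit set by RFC 1323, which is what yields a window of about 1 gigabyte.

```python
MAX_SHIFT = 14   # largest shift count permitted by RFC 1323

def effective_window(raw_window, shift):
    """Scale the 16-bit window field by the shift agreed during the handshake."""
    if not 0 <= raw_window <= 0xFFFF:
        raise ValueError("the window field is 16 bits wide")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("the shift count must be between 0 and 14")
    return raw_window << shift

print(effective_window(65_535, 0))    # 65535
print(effective_window(65_535, 7))    # 8388480
print(effective_window(65_535, 14))   # 1073725440
```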

$> sysctl net.ipv4.tcp_window_scaling

$> sysctl -w net.ipv4.tcp_window_scaling=1

In the next part, we will look at what TCP Slow Start is, how to optimize the data transfer rate and increase the initial window, and we will also bring together all the recommendations for optimizing the TCP/IP stack.