Recovering storage data and VMFS partitions: raising an EMC iomega from the dead

Hello! More and more often I see administrators using cheap SOHO storage systems in production environments, rarely giving a thought to data availability or to the fault tolerance of the solution. Alas, few of them think about backups either...

And today I got an “interesting treatment” instance: A

wonderful copy of EMC (not even Lenovo yet) iomega storcenter px4 (which is not loaded further than 25%)

Read about the recovery details under the cat.

So let's get started.

We have two tasks:

1) To restore some data from disks

2) To restore the functionality of the storage system

First we need to understand what we are dealing with and the manufacturer’s website and the documentation with the storage specification will help us with this - PDF dock.

Based on the PDF above, you can understand that the storage is nothing more than a small server on an Intel processor and, obviously, not on Windows, some kind of Linux on board.

In the documentation itself there is no mention of any RAID controller - therefore I had to open the patient and make sure that the hardware filling does not contain surprises.

So, what did we get from the owners of the storage system and the documentation:

1) we have the storage system with disks in some kind of RAID (without understanding what raid the customer collected disks in);

2) there is no hardware RAID in the storage system - so we rely on a software solution;

3) some of Linux is used in the storage system (since the system does not boot up - see what did not work there);

4) There is an understanding of what we are looking for on disks (there are a couple of VMFS partitions that were given to VM under ESXi and a couple of file shares for general use).

Recovery plan:

1) install the OS and connect the drives;

2) we look that useful can be pulled out from the information on disks;

3) We collect Raid and try to mount the partitions;

4) mount VMFS partitions;

5) we merge all the necessary information to another storage;

6) we think what to do with EMC.

Since it failed to “revive” the storage system (if anyone knows the flashing methods and can share the utilities - welk in a comment or PM) - we connect the disks to another system:

In my case, there was an old AMD Phenom server at hand ... The most important thing was to find a motherboard where You can connect a minimum of 4 drives from the storage + 1 drive to install the OS and other utilities.

The OS was chosen by Debian 8, as it is best friends with both vmfs-tools and iscsi target (ubunta catches glitches).

SDA - disk with OS, the remaining 4 - disks from SHD

As you can see on the disks there are two sections:

20 GB - as I understand the OS of the storage itself

1.8 TB - for user data

All disks have an identical breakdown - from which we can conclude that they were the same array in RAID.

FSTYPE sections are defined as linux_raid_member so let's try to see what we can collect from them.

We collect an array:

When mounting the array, they gave us a hint - filesystem type 'LVM2_member'.

Install LVM2 and scan the disks:

As you can see, we found the volume group. It is logical to assume that the second part can be assembled in this way.

Now finds 2 LVM physical volumes

It can be seen from the result that the storage system collected VG on LVM and then crushed LV into the required sizes.

We activate the partitions and try to mount them:

As you can see, only part of the partitions was mounted. And all because on other sections - VMFS.

Alas, dancing with a tambourine could not be avoided ... VMFS did not want to mount directly (there is a suspicion that this is due to the new version of VMFS and the old vmfs-tools)

Break a bunch of forums, a solution was found.

Create a loop device:

A little bit about kpartx can be read HERE .

We try to mount the resulting mapper:

Mounted successfully! (errors can be scored since Lun ID mismatch)

Since it was not possible to merge the virtual machines from the mounted datastores ... Let's try to connect these partitions to the real ESXi and copy the virtual machines through it (it must be friends with VMFS).

We will connect our sections to ESXi using iSCSi (we will describe the process briefly):

1) Install the iscsitarget package

2) add the necessary parameters to /etc/iet/ietd.conf

3) Start the service iscsitarget start service

If everything is OK, create on ESXi Software iSCSi controller and register our server with mounted partitions in Dynamic discovery.

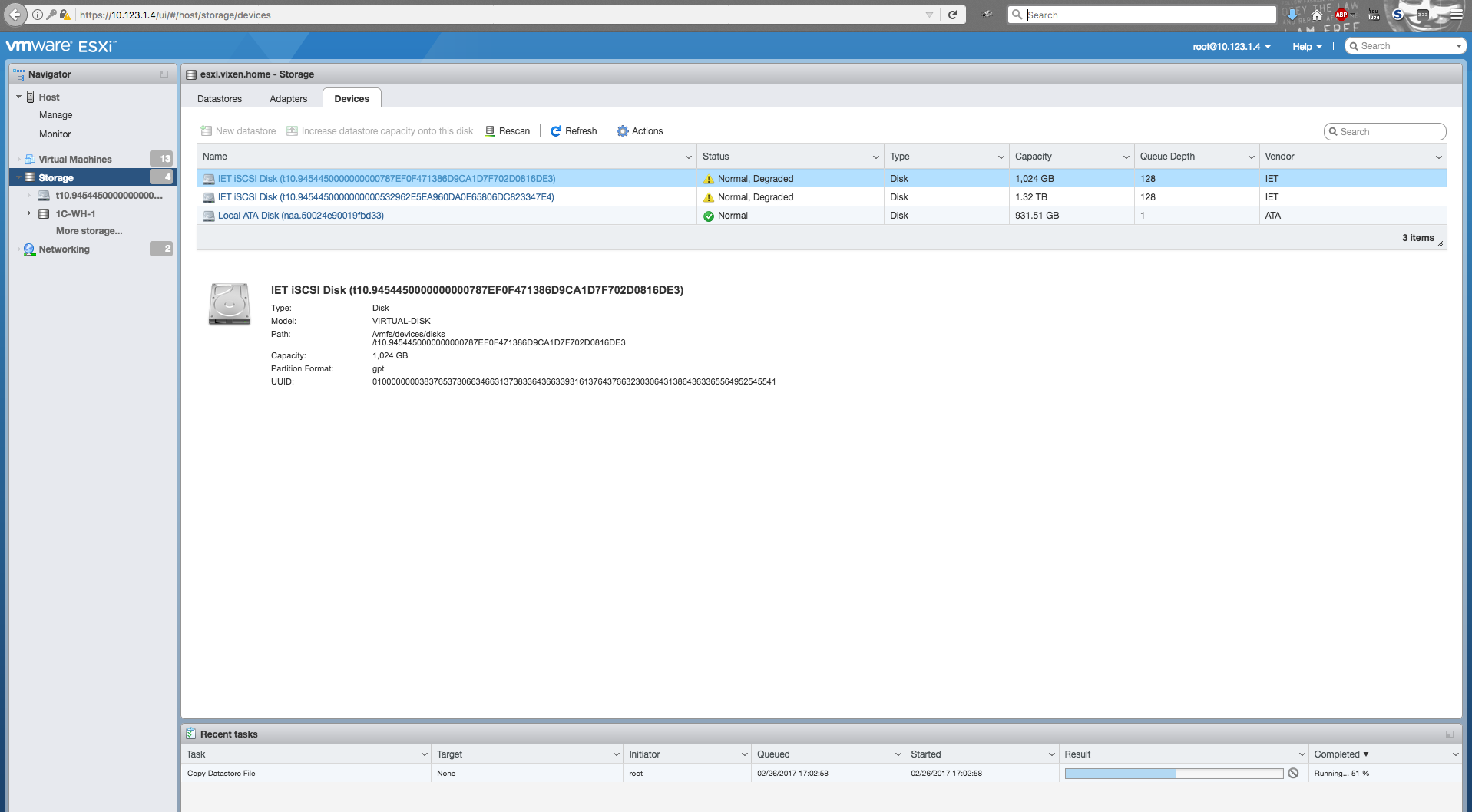

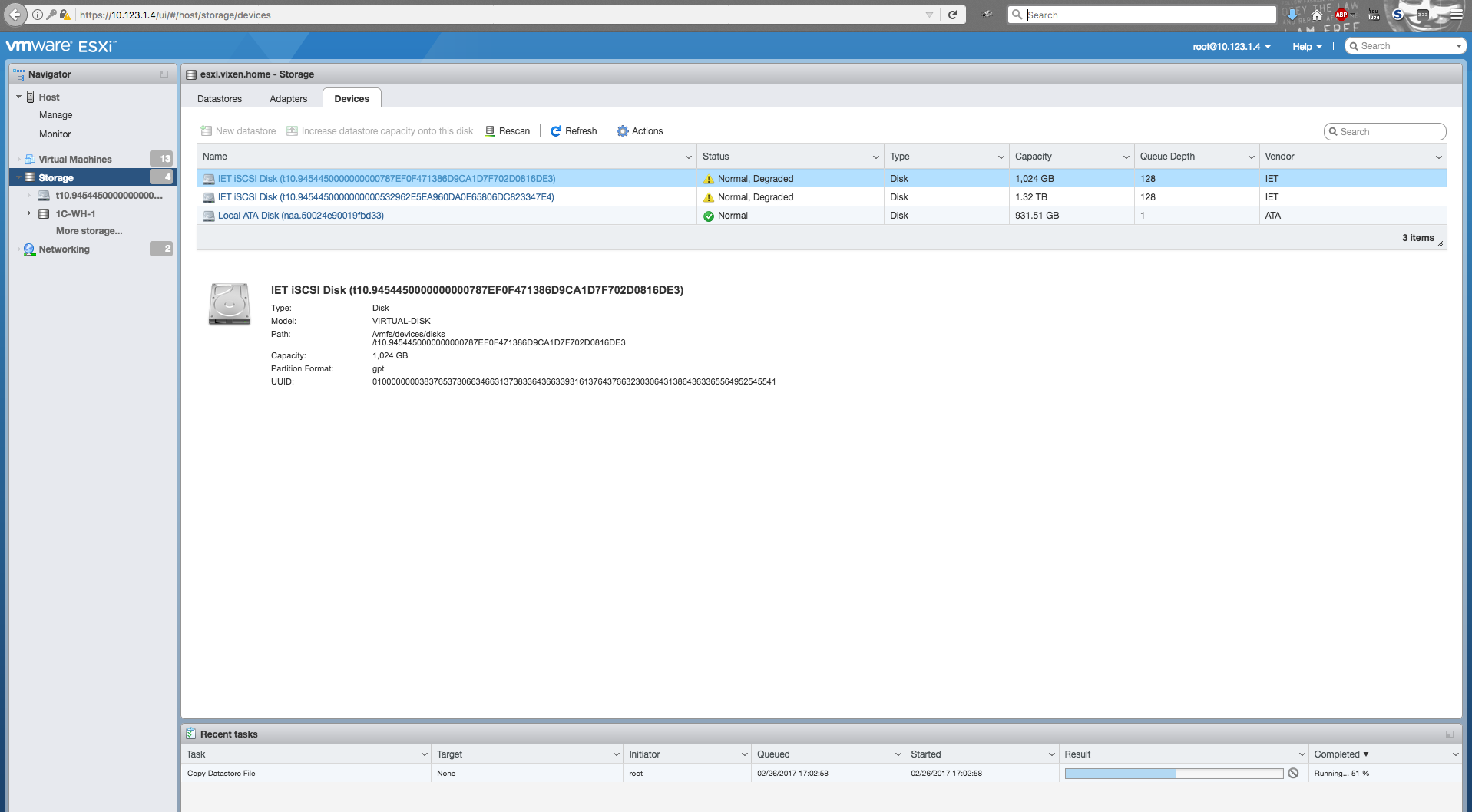

As you can see, the partitions successfully pulled up:

Since the LUN ID of the resulting partition does not match the one registered in the metadata on the partition itself, to add datastores to the host, we will use KB from VMware.

The datastores are restored and information can be easily drained from them.

PS I merge on scp by enabling access on the SSH server - this is much faster than merging a virtual machine through the web or a regular client.

How to restore the storage itself - I can’t imagine. If someone has firmware files and information on how to connect the console, I will gladly accept help.

And today an “interesting case” came in for treatment: a wonderful specimen of an EMC (not even Lenovo yet) iomega storcenter px4, which does not boot past 25%.

Read on for the recovery details.

So let's get started.

We have two tasks:

1) restore some data from the disks;

2) restore the storage system to working order.

First we need to understand what we are dealing with. The manufacturer’s website and the storage specification (PDF doc) will help us with this.

Based on the PDF, the storage is nothing more than a small server on an Intel processor, and it obviously runs not Windows but some kind of Linux.

The documentation does not mention any RAID controller, so I had to open up the patient and make sure the hardware held no surprises.

So, here is what we learned from the owners of the storage system and from the documentation:

1) we have a storage system with disks in some kind of RAID (the customer does not know which RAID level the disks were assembled into);

2) there is no hardware RAID in the storage system, so we are dealing with a software solution;

3) the storage system runs some kind of Linux (since the system does not boot, we could not see what exactly is installed there);

4) we know what we are looking for on the disks (a couple of VMFS partitions that were presented to ESXi for VMs, and a couple of file shares for general use).

Recovery plan:

1) install the OS and connect the drives;

2) see what useful information can be gathered about the disks;

3) assemble the RAID and try to mount the partitions;

4) mount the VMFS partitions;

5) copy all the necessary data to other storage;

6) decide what to do with the EMC.

Step 1

Since we failed to “revive” the storage system (if anyone knows how to reflash it and can share the utilities, you are welcome in the comments or PM), we connect the disks to another system.

In my case, there was an old AMD Phenom server at hand. The most important thing was to find a motherboard that can take at least 4 drives from the storage plus 1 drive for the OS and other utilities.

Debian 8 was chosen as the OS, since it gets along best with both vmfs-tools and the iSCSI target (Ubuntu was glitchy).

Step 2

root@mephistos-GA-880GA-UD3H:~# fdisk -l

Disk /dev/sda: 931.5 GiB, 1000204886016 bytes, 1953525168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x000c0a96

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 1920251903 1920249856 915.7G 83 Linux

/dev/sda2 1920253950 1953523711 33269762 15.9G 5 Extended

/dev/sda5 1920253952 1953523711 33269760 15.9G 82 Linux swap / Solaris

Disk /dev/sdb: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: BFAE2033-B502-4C5B-9959-82E50F8E9920

Device Start End Sectors Size Type

/dev/sdb1 72 41961848 41961777 20G Microsoft basic data

/dev/sdb2 41961856 3907029106 3865067251 1.8T Microsoft basic data

Disk /dev/sdc: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: B6515182-BF5B-4DED-AAB1-5AE489BF23B0

Device Start End Sectors Size Type

/dev/sdc1 72 41961848 41961777 20G Microsoft basic data

/dev/sdc2 41961856 3907029106 3865067251 1.8T Microsoft basic data

Disk /dev/sdd: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 378627A1-2696-4DAC-88D1-B90AFD2B1A98

Device Start End Sectors Size Type

/dev/sdd1 72 41961848 41961777 20G Microsoft basic data

/dev/sdd2 41961856 3907029106 3865067251 1.8T Microsoft basic data

Disk /dev/sde: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: BA871E29-DB67-4266-A8ED-E5A33D6C24D2

Device Start End Sectors Size Type

/dev/sde1 72 41961848 41961777 20G Microsoft basic data

/dev/sde2 41961856 3907029106 3865067251 1.8T Microsoft basic data

sda is the disk with the OS; the remaining 4 are the disks from the storage system.

As you can see, each disk has two partitions:

20 GB - as I understand it, the OS of the storage itself

1.8 TB - for user data

All disks have an identical layout, from which we can conclude that they were members of the same RAID array.

root@mephistos-GA-880GA-UD3H:~# lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

sdd

|-sdd2 linux_raid_member px4-300r-THXLON:1 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6

`-sdd1 linux_raid_member px4-300r-THXLON:0 b8b8526a-37ef-2a9b-e9e7-c249645dacb0

sdb

|-sdb2 linux_raid_member px4-300r-THXLON:1 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6

`-sdb1 linux_raid_member px4-300r-THXLON:0 b8b8526a-37ef-2a9b-e9e7-c249645dacb0

sde

|-sde2 linux_raid_member px4-300r-THXLON:1 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6

`-sde1 linux_raid_member px4-300r-THXLON:0 b8b8526a-37ef-2a9b-e9e7-c249645dacb0

sdc

|-sdc2 linux_raid_member px4-300r-THXLON:1 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6

`-sdc1 linux_raid_member px4-300r-THXLON:0 b8b8526a-37ef-2a9b-e9e7-c249645dacb0

sda

|-sda2

|-sda5 swap 451578bf-ed6d-4ee7-ba91-0c176c433ac9 [SWAP]

`-sda1 ext4 fdd535f2-4350-4227-bb5e-27e402c64f04 /
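The UUID column of the lsblk output already shows which partitions belong to which array: all the sdX1 partitions share one UUID and all the sdX2 partitions share another. A minimal sketch of grouping members that way is below; the function name `group_raid_members` is mine, and it expects input in the form printed by `lsblk -rn -o NAME,FSTYPE,UUID`.

```shell
#!/bin/sh
# Group md RAID member partitions by array UUID.
# Input on stdin: lines of "NAME FSTYPE UUID", for example from
#   lsblk -rn -o NAME,FSTYPE,UUID
# Output: one line per array, "UUID: /dev/member /dev/member ...".
group_raid_members() {
    awk '$2 == "linux_raid_member" { members[$3] = members[$3] " /dev/" $1 }
         END { for (u in members) print u ":" members[u] }' | sort
}

# Example with four of the partitions listed above
# (names and UUIDs copied from the lsblk output).
printf '%s\n' \
    'sdb1 linux_raid_member b8b8526a-37ef-2a9b-e9e7-c249645dacb0' \
    'sdb2 linux_raid_member 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6' \
    'sdc1 linux_raid_member b8b8526a-37ef-2a9b-e9e7-c249645dacb0' \
    'sdc2 linux_raid_member 27b7ba6d-6a41-dd56-ad4b-7652f461a3b6' \
  | group_raid_members
```

Each output line is a candidate member list for one `mdadm --assemble` call. Running `mdadm --examine` on a member should confirm the grouping before anything is assembled.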

Step 3

The FSTYPE of the partitions is reported as linux_raid_member, so let's see what we can assemble from them.

root@mephistos-GA-880GA-UD3H:~# apt-get install mdadm

We assemble the array:

root@mephistos-GA-880GA-UD3H:~# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

mdadm: /dev/md0 has been started with 4 drives.

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/md0

root@mephistos-GA-880GA-UD3H:~# mount /dev/md0 /mnt/md0/

mount: unknown filesystem type 'LVM2_member'

The mount attempt gave us a hint: filesystem type 'LVM2_member'.

Install LVM2 and scan the disks:

root@mephistos-GA-880GA-UD3H:~# apt-get install lvm2

root@mephistos-GA-880GA-UD3H:~# lvmdiskscan

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

/dev/ram0 [ 64.00 MiB]

/dev/md0 [ 20.01 GiB] LVM physical volume

/dev/ram1 [ 64.00 MiB]

/dev/sda1 [ 915.65 GiB]

/dev/ram2 [ 64.00 MiB]

/dev/ram3 [ 64.00 MiB]

/dev/ram4 [ 64.00 MiB]

/dev/ram5 [ 64.00 MiB]

/dev/sda5 [ 15.86 GiB]

/dev/ram6 [ 64.00 MiB]

/dev/ram7 [ 64.00 MiB]

/dev/ram8 [ 64.00 MiB]

/dev/ram9 [ 64.00 MiB]

/dev/ram10 [ 64.00 MiB]

/dev/ram11 [ 64.00 MiB]

/dev/ram12 [ 64.00 MiB]

/dev/ram13 [ 64.00 MiB]

/dev/ram14 [ 64.00 MiB]

/dev/ram15 [ 64.00 MiB]

0 disks

18 partitions

0 LVM physical volume whole disks

1 LVM physical volume

root@mephistos-GA-880GA-UD3H:~# lvdisplay

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

--- Logical volume ---

LV Path /dev/66e7945b_vg/vol1

LV Name vol1

VG Name 66e7945b_vg

LV UUID No8Pga-YZaE-7ubV-NQ05-7fMh-DEa8-p1nP4c

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 20.01 GiB

Current LE 5122

Segments 1

Allocation inherit

Read ahead sectors auto

As you can see, we found a volume group. It is logical to assume that the second set of partitions can be assembled the same way.

root@mephistos-GA-880GA-UD3H:~# mdadm --assemble /dev/md1 /dev/sdb2 /dev/sdc2 /dev/sdd2 /dev/sde2

mdadm: /dev/md1 has been started with 4 drives.

root@mephistos-GA-880GA-UD3H:~# lvmdiskscan

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

/dev/ram0 [ 64.00 MiB]

/dev/md0 [ 20.01 GiB] LVM physical volume

/dev/ram1 [ 64.00 MiB]

/dev/sda1 [ 915.65 GiB]

/dev/md1 [ 5.40 TiB] LVM physical volume

/dev/ram2 [ 64.00 MiB]

/dev/ram3 [ 64.00 MiB]

/dev/ram4 [ 64.00 MiB]

/dev/ram5 [ 64.00 MiB]

/dev/sda5 [ 15.86 GiB]

/dev/ram6 [ 64.00 MiB]

/dev/ram7 [ 64.00 MiB]

/dev/ram8 [ 64.00 MiB]

/dev/ram9 [ 64.00 MiB]

/dev/ram10 [ 64.00 MiB]

/dev/ram11 [ 64.00 MiB]

/dev/ram12 [ 64.00 MiB]

/dev/ram13 [ 64.00 MiB]

/dev/ram14 [ 64.00 MiB]

/dev/ram15 [ 64.00 MiB]

0 disks

18 partitions

0 LVM physical volume whole disks

2 LVM physical volumes

Now it finds 2 LVM physical volumes.
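The sizes of the two arrays hint at their RAID levels. This is my inference from the numbers, not something the output states: md0 is as large as a single 20 GB member, which looks like a 4-way mirror, and md1 is as large as three of the four 1.8 TB members, which is consistent with RAID 5. A rough check with the sector counts from the fdisk output, ignoring metadata overhead (the function names are mine):

```shell
#!/bin/sh
# Usable size of an md array in 512-byte sectors, ignoring metadata overhead.
#   raid1: every member holds a full copy  -> size of one member
#   raid5: one member's worth of parity    -> (members - 1) * member size
raid_usable_sectors() {
    level=$1 members=$2 member_sectors=$3
    case "$level" in
        raid1) echo "$member_sectors" ;;
        raid5) echo $(( (members - 1) * member_sectors )) ;;
        *)     echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

# Convert 512-byte sectors to whole GiB (rounded down).
sectors_to_gib() { echo $(( $1 * 512 / 1073741824 )); }

# Member sizes from fdisk: 41961777 sectors (sdX1) and 3865067251 sectors (sdX2).
sectors_to_gib "$(raid_usable_sectors raid1 4 41961777)"     # compare with md0, 20.01 GiB
sectors_to_gib "$(raid_usable_sectors raid5 4 3865067251)"   # compare with md1, 5.40 TiB
```

The second call gives 5529 GiB, roughly 5.40 TiB, which matches what lvmdiskscan reports for md1. `mdadm --detail /dev/md1` would show the actual level.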

root@mephistos-GA-880GA-UD3H:~# lvdisplay

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

--- Logical volume ---

LV Path /dev/3b9b96bf_vg/lv6231c27b

LV Name lv6231c27b

VG Name 3b9b96bf_vg

LV UUID wwRwuz-TjVh-aT6G-r5iR-7MpJ-tZ0P-wjbb6g

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 2.00 TiB

Current LE 524288

Segments 2

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/3b9b96bf_vg/lv22a5c399

LV Name lv22a5c399

VG Name 3b9b96bf_vg

LV UUID GHAUtd-qvjL-n8Fa-OuCo-sqtD-CBzg-M46Y9o

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 3.00 GiB

Current LE 768

Segments 1

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/3b9b96bf_vg/lv13ed5d5e

LV Name lv13ed5d5e

VG Name 3b9b96bf_vg

LV UUID iMQZFw-Xrmj-cTkq-E1NT-VwUa-X0E0-MpbCps

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 10.00 GiB

Current LE 2560

Segments 2

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/3b9b96bf_vg/lv7a6430c7

LV Name lv7a6430c7

VG Name 3b9b96bf_vg

LV UUID UlFd4y-huNe-Z501-EylQ-mOd6-kAGt-jmvlqa

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 1.00 TiB

Current LE 262144

Segments 1

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/3b9b96bf_vg/lv47d612ce

LV Name lv47d612ce

VG Name 3b9b96bf_vg

LV UUID pzlrpE-dikm-6Rtn-GU6O-SeEx-3QJJ-cK3cdR

LV Write Access read/write

LV Creation host, time s-mars-stor-1, 2017-02-04 16:14:49 +0200

LV Status NOT available

LV Size 1.32 TiB

Current LE 345600

Segments 2

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/66e7945b_vg/vol1

LV Name vol1

VG Name 66e7945b_vg

LV UUID No8Pga-YZaE-7ubV-NQ05-7fMh-DEa8-p1nP4c

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 20.01 GiB

Current LE 5122

Segments 1

Allocation inherit

Read ahead sectors auto

The result shows that the storage system built a VG on LVM and then carved it into LVs of the required sizes.
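The LVM numbers can be cross-checked against each other: an LV's size is its "Current LE" count multiplied by the PE size, and the LE counts of all LVs in a group should add up to the "Alloc PE" value that vgdisplay reports for that group. A small sketch with the values from this system (the helper names are mine):

```shell
#!/bin/sh
# Cross-check LVM numbers: LV size = Current LE * PE size.
PE_MIB=4   # "PE Size 4.00 MiB" as reported by vgdisplay

# Convert a logical extent count to MiB.
le_to_mib() { echo $(( $1 * PE_MIB )); }

# Sum the "Current LE" values passed as arguments.
sum_le() {
    total=0
    for le in "$@"; do total=$(( total + le )); done
    echo "$total"
}

le_to_mib 524288                        # lv6231c27b, reported as 2.00 TiB
sum_le 524288 768 2560 262144 345600    # all five LVs in 3b9b96bf_vg
```

The first call gives 2097152 MiB, which is exactly 2 TiB. The second gives 1135360, the same figure vgdisplay shows as "Alloc PE" for 3b9b96bf_vg, so no LV in that group is missing from the listing.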

root@mephistos-GA-880GA-UD3H:~# vgdisplay

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

--- Volume group ---

VG Name 3b9b96bf_vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 49

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 5

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 5.40 TiB

PE Size 4.00 MiB

Total PE 1415429

Alloc PE / Size 1135360 / 4.33 TiB

Free PE / Size 280069 / 1.07 TiB

VG UUID Qg8rb2-rQpK-zMRL-qVzm-RU5n-YNv8-qOV6yZ

--- Volume group ---

VG Name 66e7945b_vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 20.01 GiB

PE Size 4.00 MiB

Total PE 5122

Alloc PE / Size 5122 / 20.01 GiB

Free PE / Size 0 / 0

VG UUID Sy2RsX-h51a-vgKt-n1Sb-u1CA-HBUf-C9sUNT

root@mephistos-GA-880GA-UD3H:~# lvscan

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

inactive '/dev/3b9b96bf_vg/lv6231c27b' [2.00 TiB] inherit

inactive '/dev/3b9b96bf_vg/lv22a5c399' [3.00 GiB] inherit

inactive '/dev/3b9b96bf_vg/lv13ed5d5e' [10.00 GiB] inherit

inactive '/dev/3b9b96bf_vg/lv7a6430c7' [1.00 TiB] inherit

inactive '/dev/3b9b96bf_vg/lv47d612ce' [1.32 TiB] inherit

inactive '/dev/66e7945b_vg/vol1' [20.01 GiB] inherit

We activate the volumes and try to mount them:

root@mephistos-GA-880GA-UD3H:~# modprobe dm-mod

root@mephistos-GA-880GA-UD3H:~# vgchange -ay

/run/lvm/lvmetad.socket: connect failed: No such file or directory

WARNING: Failed to connect to lvmetad. Falling back to internal scanning.

5 logical volume(s) in volume group "3b9b96bf_vg" now active

1 logical volume(s) in volume group "66e7945b_vg" now active

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/1

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/2

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/3

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/4

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/5

root@mephistos-GA-880GA-UD3H:~# mkdir /mnt/6

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv6231c27b /mnt/1

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv22a5c399 /mnt/2

NTFS signature is missing.

Failed to mount '/dev/mapper/3b9b96bf_vg-lv22a5c399': Invalid argument

The device '/dev/mapper/3b9b96bf_vg-lv22a5c399' doesn't seem to have a valid NTFS.

Maybe the wrong device is used? Or the whole disk instead of a

partition (e.g. /dev/sda, not /dev/sda1)? Or the other way around?

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv13ed5d5e /mnt/3

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv7a6430c7 /mnt/4

NTFS signature is missing.

Failed to mount '/dev/mapper/3b9b96bf_vg-lv7a6430c7': Invalid argument

The device '/dev/mapper/3b9b96bf_vg-lv7a6430c7' doesn't seem to have a valid NTFS.

Maybe the wrong device is used? Or the whole disk instead of a

partition (e.g. /dev/sda, not /dev/sda1)? Or the other way around?

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv7a6430c7 /mnt/5

NTFS signature is missing.

Failed to mount '/dev/mapper/3b9b96bf_vg-lv7a6430c7': Invalid argument

The device '/dev/mapper/3b9b96bf_vg-lv7a6430c7' doesn't seem to have a valid NTFS.

Maybe the wrong device is used? Or the whole disk instead of a

partition (e.g. /dev/sda, not /dev/sda1)? Or the other way around?

root@mephistos-GA-880GA-UD3H:~# mount /dev/3b9b96bf_vg/lv47d612ce /mnt/5

NTFS signature is missing.

Failed to mount '/dev/mapper/3b9b96bf_vg-lv47d612ce': Invalid argument

The device '/dev/mapper/3b9b96bf_vg-lv47d612ce' doesn't seem to have a valid NTFS.

Maybe the wrong device is used? Or the whole disk instead of a

partition (e.g. /dev/sda, not /dev/sda1)? Or the other way around?

root@mephistos-GA-880GA-UD3H:~# mount /dev/66e7945b_vg/vol1 /mnt/6

As you can see, only some of the volumes were mounted. That is because the others contain VMFS.

Step 4

root@mephistos-GA-880GA-UD3H:/mnt/3# apt-get install vmfs-tools

Alas, it did not go without a fight: VMFS refused to mount directly (I suspect this is due to the new VMFS version and the old vmfs-tools).

root@mephistos-GA-880GA-UD3H:/mnt/3# vmfs-fuse /dev/3b9b96bf_vg/lv7a6430c7 /mnt/4

VMFS VolInfo: invalid magic number 0x00000000

VMFS: Unable to read volume information

Trying to find partitions

Unable to open device/file "/dev/3b9b96bf_vg/lv7a6430c7".

Unable to open filesystem

After digging through a bunch of forums, a solution was found.
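One way to tell whether a volume holds VMFS directly or a whole LUN image with its own partition table is to look for the VMFS volume header at the expected offset. The sketch below rests on an assumption taken from my recollection of the vmfs-tools source, not from anything in this log: the header sits 1 MiB into the VMFS partition and starts with the magic 0xc001d00d, stored little-endian. The function names are mine.

```shell
#!/bin/sh
# Print 4 bytes at a byte offset of a device or image file as lowercase hex,
# in on-disk byte order.
bytes_at() {
    dd if="$1" bs=1 skip="$2" count=4 2>/dev/null | od -An -tx1 | tr -d ' \n'
}

# ASSUMPTION (from memory of the vmfs-tools source): the volume header is
# 1 MiB into the VMFS partition and begins with magic 0xc001d00d, which is
# stored little-endian, i.e. as the bytes 0d d0 01 c0.
VMFS_VOLINFO_OFFSET=1048576
VMFS_MAGIC_BYTES=0dd001c0

# $1 = device or image, $2 = byte offset of the partition start (default 0).
# Succeeds if the magic is found at the expected place.
looks_like_vmfs() {
    [ "$(bytes_at "$1" $(( ${2:-0} + VMFS_VOLINFO_OFFSET )))" = "$VMFS_MAGIC_BYTES" ]
}

# Usage (read-only probes; device name taken from the lvscan output):
#   looks_like_vmfs /dev/mapper/3b9b96bf_vg-lv47d612ce 0        && echo "bare VMFS"
#   looks_like_vmfs /dev/mapper/3b9b96bf_vg-lv47d612ce 1048576  && echo "VMFS inside a partition at 1 MiB"
```

If the second probe is the one that succeeds, the failure above may have less to do with the vmfs-tools version than I suspected: the kpartx output further down maps a partition that starts at sector 2048 (1 MiB) inside the LV, which would explain why vmfs-fuse read 0x00000000 where it expected the magic.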

Create a loop device:

losetup -r /dev/loop0 /dev/mapper/3b9b96bf_vg-lv47d612ce

A little about kpartx can be read HERE.

root@triplesxi:~# apt-get install kpartx

root@triplesxi:~# kpartx -a -v /dev/loop0

add map loop0p1 (253:6): 0 2831153119 linear /dev/loop0 2048

We try to mount the resulting mapper device:

root@triplesxi:~# vmfs-fuse /dev/mapper/loop0p1 /mnt/vmfs/

VMFS: Warning: Lun ID mismatch on /dev/mapper/loop0p1

ioctl: Invalid argument

ioctl: Invalid argument

Mounted successfully! (The errors can be ignored; they come from the Lun ID mismatch.)
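The line printed by kpartx explains what made the difference: the LV is not a bare filesystem but a whole LUN with a partition table, and the VMFS partition starts at sector 2048 of it. A small sketch that pulls the start sector out of such a line and converts it to a byte offset (the helper names are mine):

```shell
#!/bin/sh
# Extract the start sector from "kpartx -a -v" output.
# Line format: add map <name> (<maj:min>): <table start> <length> linear <device> <start sector>
kpartx_start_sector() {
    awk '$1 == "add" && $2 == "map" { print $NF }'
}

# Convert 512-byte sectors to bytes.
sector_to_bytes() { echo $(( $1 * 512 )); }

line='add map loop0p1 (253:6): 0 2831153119 linear /dev/loop0 2048'
start=$(echo "$line" | kpartx_start_sector)
sector_to_bytes "$start"

# With the byte offset known, the same partition could presumably be exposed
# without kpartx by giving losetup an offset (not tried in this recovery):
#   losetup -r -o 1048576 /dev/loop1 /dev/mapper/3b9b96bf_vg-lv47d612ce
```

For the line above this gives 1048576 bytes, i.e. the partition begins exactly 1 MiB into the LV.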

Here we skip a series of unsuccessful attempts to copy the virtual machines themselves from the mounted datastore.

As it turned out, files a couple of hundred gigabytes in size cannot be copied:

root@triplesxi:~# cp /mnt/vmfs/tstst/tstst_1-flat.vmdk /root/

cp: error reading '/mnt/vmfs/tstst/tstst_1-flat.vmdk': Input/output error

cp: failed to extend '/root/tstst_1-flat.vmdk': Input/output error

Step 5

Since we could not copy the virtual machines off the mounted datastores, let's try to attach these volumes to a real ESXi host and copy the virtual machines through it (it certainly gets along with VMFS).

We will attach our volumes to ESXi over iSCSI (the process is described briefly):

1) install the iscsitarget package;

2) add the necessary parameters to /etc/iet/ietd.conf;

3) start the service: service iscsitarget start
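The exact parameters added to ietd.conf are not shown here, so the following is only a sketch of what a minimal target definition might look like. The IQN is made up, and exporting the whole LV (rather than the partition mapped by kpartx) is my assumption, on the grounds that ESXi expects to see the LUN together with its partition table.

```shell
#!/bin/sh
# Print an IET target stanza that exports one block device as a LUN.
# $1 = target IQN (the one used below is hypothetical)
# $2 = LUN number
# $3 = backing block device
ietd_target() {
    printf 'Target %s\n' "$1"
    printf '    Lun %s Path=%s,Type=blockio\n' "$2" "$3"
}

# Example stanza for one of the VMFS volumes found above.
ietd_target iqn.2017-02.local.recovery:lv47d612ce 0 /dev/mapper/3b9b96bf_vg-lv47d612ce

# The printed lines would be appended to /etc/iet/ietd.conf. On Debian the
# package also, as far as I remember, wants ISCSITARGET_ENABLE=true in
# /etc/default/iscsitarget before "service iscsitarget start" does anything.
```

One stanza per VMFS volume, each with its own target name, keeps the datastores easy to tell apart on the ESXi side.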

If everything is OK, create on ESXi Software iSCSi controller and register our server with mounted partitions in Dynamic discovery.

As you can see, the partitions successfully pulled up:

Since the LUN ID of the resulting volume does not match the one recorded in the metadata on the volume itself, we use the VMware KB to add the datastores to the host.
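I am assuming the KB in question is the one about VMFS volumes that ESXi detects as snapshot copies. In that case the procedure comes down to listing such volumes with `esxcfg-volume -l` and then either mounting one with its existing signature or resignaturing it. The sketch below only builds and prints the command, so it can be reviewed before being run in the ESXi shell; the function name and the label `datastore1` are placeholders of mine.

```shell
#!/bin/sh
# Build the esxcfg-volume call for a datastore that ESXi treats as a snapshot copy.
# $1 = mode: "keep" mounts persistently with the existing signature,
#            "resignature" writes a new signature to the volume
# $2 = VMFS label or UUID as printed by "esxcfg-volume -l"
snapshot_volume_cmd() {
    case "$1" in
        keep)        echo "esxcfg-volume -M $2" ;;
        resignature) echo "esxcfg-volume -r $2" ;;
        *)           echo "usage: snapshot_volume_cmd keep|resignature <label|uuid>" >&2
                     return 1 ;;
    esac
}

# Dry run: print the command instead of executing it.
snapshot_volume_cmd keep datastore1
```

For a recovery like this one, keeping the existing signature seems the safer choice, since resignaturing writes to the volume.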

The datastores are restored, and the data can easily be copied off them.

P.S. I copy over scp, with SSH access enabled on the server; this is much faster than downloading a virtual machine through the web or the regular client.

Step 6

How to restore the storage itself, I have no idea. If someone has the firmware files and information on how to connect to the console, I will gladly accept help.
