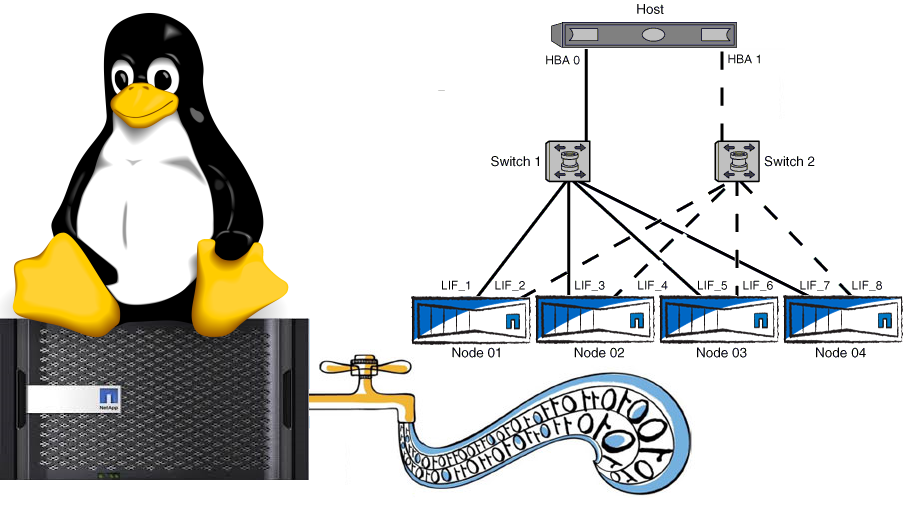

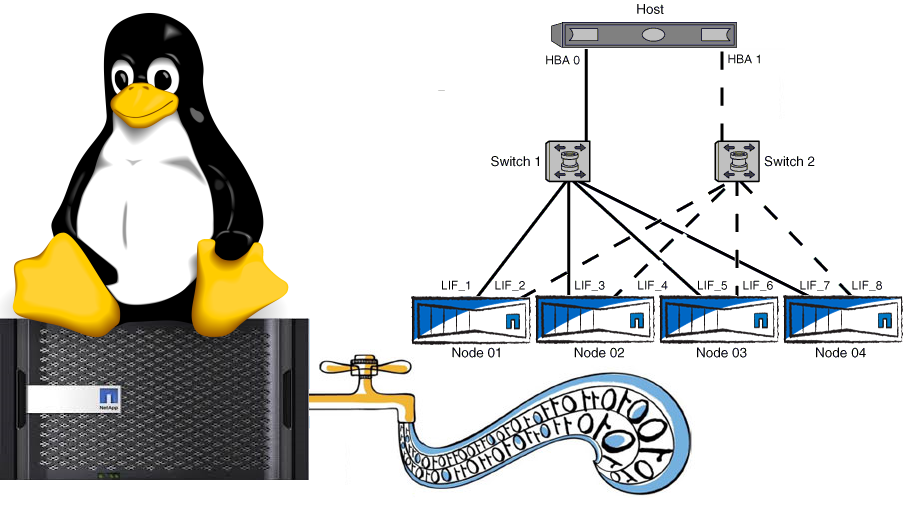

RedHat / Oracle Linux with NetApp FAS (SAN)

Continuing the topic of host optimization: in a previous article I wrote about optimizing Windows and SAN networks, and in this article I would like to look at optimizing RedHat / Oracle Linux (with and without virtualization) with NetApp FAS storage in a SAN environment.

To find and eliminate bottlenecks in such an infrastructure, you first need to identify the components in which to look for them. We divide the infrastructure into the following components:


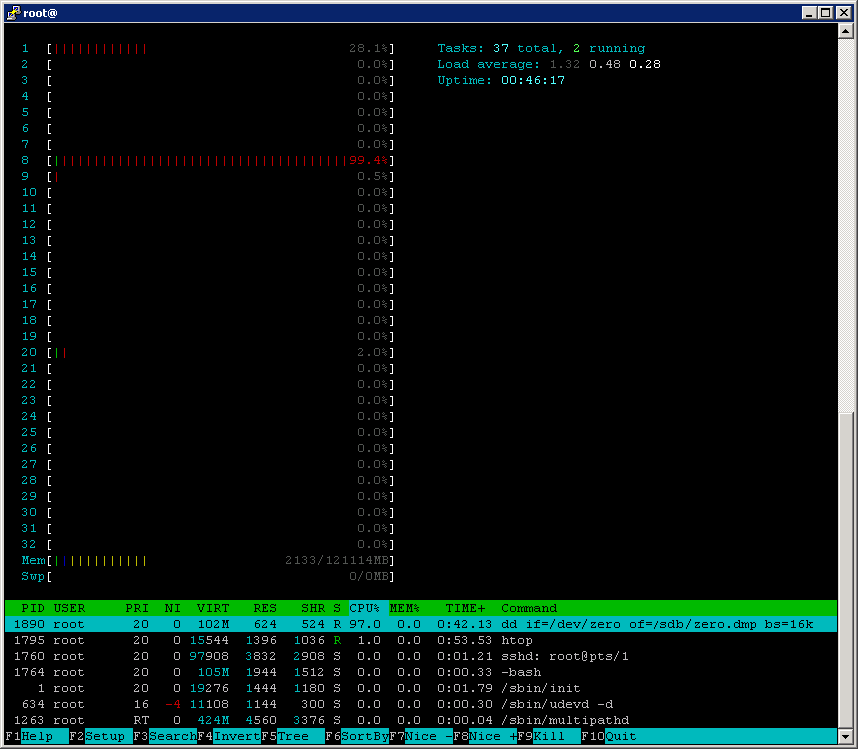

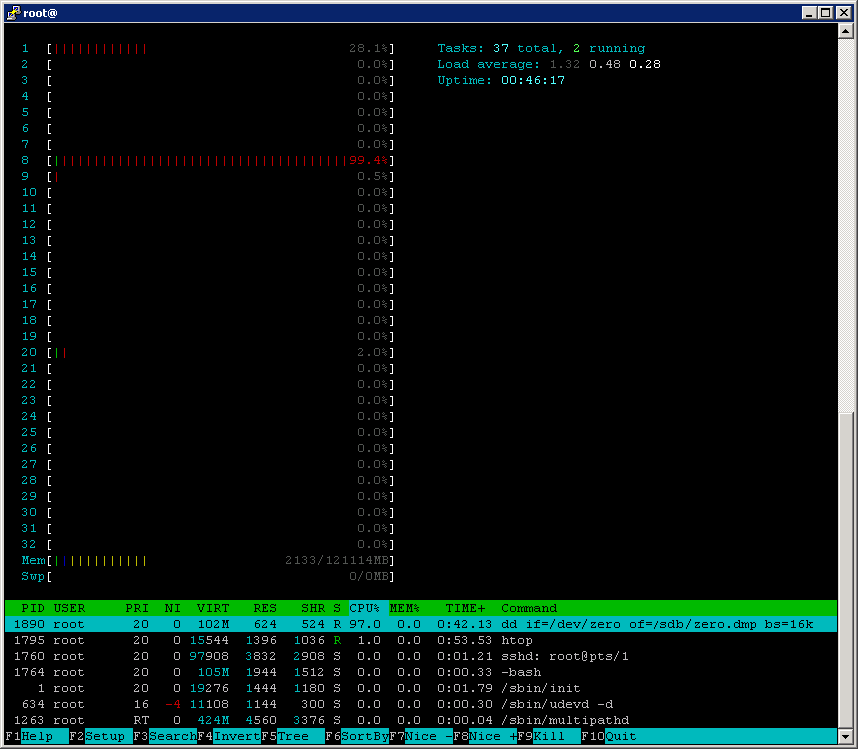


- Host settings for SAN (FC / FCoE)

- Host Ethernet network settings for IP SAN (iSCSI)

- The host itself and its OS

- Applications on the host

- Checking driver and software version compatibility

A bottleneck is usually found by sequential elimination. I suggest starting with the storage system and then moving along the chain: storage -> network (Ethernet / FC) -> host (Windows / Linux / VMware ESXi 5.x and ESXi 6.x) -> application. In this article we focus on the host.

SAN Multipathing

If you connect a LUN directly to the OS without virtualization, it is advisable to install NetApp Host Utilities. If you use virtualization with RDM or VMFS, you must configure multipathing on the hypervisor.

By default, multipathing should use the preferred paths, i.e. the paths to the LUN through the ports of the controller that owns it. "FCP Partner Path Misconfigured" messages in the storage console indicate incorrectly configured ALUA or MPIO. Do not ignore these messages: in one real case, a host multipathing driver that had gone haywire kept switching between paths non-stop, creating long queues in the I/O subsystem. For more information about SAN booting, see the related articles: Red Hat Enterprise Linux / Oracle Enterprise Linux, CentOS, SUSE Linux Enterprise.
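A quick way to check whether a Linux host with dm-multipath actually uses the preferred paths is to look at the path groups reported by multipath -ll. The sketch below is a hypothetical helper, not part of NetApp Host Utilities. It assumes the RHEL 6 style output layout and assumes that, with ALUA, the group of optimized (preferred) paths has the highest priority (typically 50, versus 10 for non-optimized paths). It prints every map whose active path group is not the highest-priority one.

```shell
# Hypothetical helper (not a NetApp tool): reads `multipath -ll` output on stdin and
# prints every map whose active path group is not the group with the highest priority.
# Assumes the RHEL 6 style layout of `multipath -ll`.
check_active_group() {
  awk '
    function report() {
      if (active < 0)
        print map ": no active path group"
      else if (active < best)
        print map ": active group prio=" active ", best available prio=" best
    }
    # Map header, e.g. "mpatha (360a98000...) dm-2 NETAPP,LUN": not indented, not a tree line
    /^[^ |`]/ && !/^size=/ { if (map != "") report(); map = $1; best = -1; active = -1; next }
    # Path group line, e.g. "|-+- policy=... prio=50 status=active"
    /prio=[0-9]+/ {
      match($0, /prio=[0-9]+/)
      p = substr($0, RSTART + 5, RLENGTH - 5) + 0
      if (p > best) best = p
      if ($0 ~ /status=active/) active = p
    }
    END { if (map != "") report() }
  '
}
```

Usage: multipath -ll | check_active_group. Empty output means every map is currently using its highest-priority path group.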

Read more about zoning recommendations for NetApp, with illustrations.

Ethernet

Jumbo frames

If you use iSCSI, it is highly recommended to enable jumbo frames on Ethernet at speeds of 1 Gb and above. Read more in the article on Ethernet with NetApp FAS. Don't forget that when testing jumbo frames with ping on Linux, the payload size must be 28 bytes smaller than the MTU, because the 20-byte IP header and the 8-byte ICMP header are not counted in the ping -s size.

```shell
ifconfig eth0 mtu 9000 up
echo MTU=9000 >> /etc/sysconfig/network-scripts/ifcfg-eth0

# ping for MTU 9000: 9000 - 28 = 8972 bytes of payload, fragmentation prohibited (-M do)
ping -M do -s 8972 [destinationIP]
```
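The 28 bytes are the IPv4 header (20 bytes without options) plus the ICMP header (8 bytes). The small sketch below computes the payload for any MTU and wraps the ping check; both function names are hypothetical helpers, and 192.0.2.10 in the usage line is only a placeholder address.

```shell
# Hypothetical helper: largest ICMP payload that fits in one IPv4 packet for a given MTU
# (MTU minus a 20-byte IPv4 header without options and an 8-byte ICMP header).
icmp_payload_for_mtu() {
  local mtu=$1
  echo $(( mtu - 20 - 8 ))
}

# Hypothetical helper: ping TARGET with "don't fragment" set (-M do), so an MTU mismatch
# anywhere on the path shows up as an error instead of the packets being fragmented.
check_jumbo_path() {
  local target=$1 mtu=${2:-9000}
  ping -M do -c 3 -s "$(icmp_payload_for_mtu "$mtu")" "$target"
}
```

Usage: check_jumbo_path 192.0.2.10 9000 sends three 8972-byte pings; for a standard 1500-byte MTU the payload would be 1472 bytes.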

Flow control

With 10 Gb links, it is advisable to disable flow control along the entire path from the server to the storage. More details.

```shell
# Disable flow control (pause frames) on the running interface
ethtool -A eth0 autoneg off
ethtool -A eth0 rx off
ethtool -A eth0 tx off

# Append the same commands to the interface configuration file
echo ethtool -A eth0 autoneg off >> /etc/sysconfig/network-scripts/ifcfg-eth0
echo ethtool -A eth0 rx off >> /etc/sysconfig/network-scripts/ifcfg-eth0
echo ethtool -A eth0 tx off >> /etc/sysconfig/network-scripts/ifcfg-eth0
```
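To confirm the result, ethtool -a eth0 shows the current pause parameters. The hypothetical helper below checks that output, assuming the usual layout in which the Autonegotiate, RX and TX lines each end with on or off; extra "RX negotiated"/"TX negotiated" lines printed by some ethtool versions are ignored.

```shell
# Hypothetical helper: reads `ethtool -a <iface>` output on stdin and succeeds (exit 0)
# only if Autonegotiate, RX and TX pause are all reported as "off".
pause_disabled() {
  awk -F: '
    /^(Autonegotiate|RX|TX):/ {
      gsub(/[ \t]/, "", $2)   # strip the padding around the value
      seen++
      if ($2 != "off") bad++
    }
    END { exit !(seen == 3 && bad == 0) }
  '
}
```

Usage: ethtool -a eth0 | pause_disabled && echo "flow control is off on eth0"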

ESXi & MTU9000

If you use ESXi, remember to choose the right virtual network adapter: E1000 for 1 Gb networks or VMXNET3 for networks faster than 1 Gb. E1000 and VMXNET3 support MTU 9000, whereas the standard "Flexible" virtual network adapter does not.

Learn more about optimizing VMware with NetApp FAS.

Converged network

Given the "universality" of 10 GbE, where FCoE, NFS, CIFS and iSCSI can run over the same physical links, combined with technologies such as vPC and LACP and the ease of maintaining Ethernet networks, Ethernet stands apart from FC as both a protocol and a switching platform, leaving room to maneuver and preserving investments when business needs change.

FC8 vs 10GBE: iSCSI, CIFS, NFS

NetApp's internal tests of its storage systems (for other storage vendors the situation may differ) show that FC 8G and 10 GbE iSCSI, CIFS and NFS deliver almost the same performance and latency for workloads typical of OLTP and server and desktop virtualization, i.e. loads with small blocks and random reads.

I suggest reading the article describing the similarities, differences and prospects of Ethernet & FC.

When the customer's infrastructure consists of two switches, configuring a SAN and an Ethernet network is about equally complex. But for many customers the SAN is not just two SAN switches where "everyone sees everyone"; the configuration usually goes much further, and in this respect Ethernet is much simpler to maintain. A typical customer SAN consists of many switches with redundant links and links to remote sites, which is by no means trivial to maintain. And if something goes wrong, you cannot simply "listen" to the traffic with Wireshark.

Modern converged switches such as the Cisco Nexus 5500 are capable of switching both Ethernet and FC traffic, allowing for greater future flexibility with a two-in-one solution.

Thin provisioning

With the "file" protocols NFS and CIFS it is very easy to benefit from thin provisioning, because space freed inside the file share is returned. With SAN, however, thin provisioning requires constant monitoring of free space, and the release of freed space (a mechanism available in modern operating systems) does not happen "inside" the LUN itself but, in effect, inside the volume containing the LUN. I recommend reading the NetApp Thin-Provisioned LUNs on RHEL 6.2 Deployment Guide.

ESXi host

Do not give all of the server's resources to guest OSes: first, leave at least 4 GB of RAM for the hypervisor; second, adding resources to a guest OS sometimes has the opposite effect, so the right amount has to be found empirically.

Learn more about ESXi host settings for NetApp FAS.

Guest OS and bare-metal Linux host

Note that in most Linux distributions, both in a virtual machine and on bare metal, the I/O scheduler is set to a value that is not suitable for FAS systems, which can lead to high CPU utilization.

Note in the output of the top command the high CPU utilization caused by the dd process, which should normally only put load on the storage system.

Host statistics collection

Linux and other Unix-like systems:

Now let's look at the state of the disk subsystem on the host side:

```
iostat -dx 2

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util
sdb 0.00 0.00 0.00 454.00 0.00 464896.00 1024.00 67.42 150.26 2.20 100.00
```

Note the high await value of 150.26 ms. These two indirect signs, high CPU utilization and high latency, suggest that the OS and the storage need to be configured to interact more optimally. It is worth checking, for example, whether multipathing, ALUA, preferred paths and HBA queues are configured properly.
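With many devices it helps to filter the iostat output rather than read it by eye. The sketch below is a hypothetical helper that finds the await column by its header name and prints devices above a threshold. The 20 ms default is an arbitrary illustrative value, not a NetApp recommendation, and newer sysstat versions that print only r_await/w_await would need the column name changed.

```shell
# Hypothetical helper: read `iostat -dx` output on stdin and print devices whose
# await (ms) exceeds THRESHOLD (default 20 ms, an arbitrary illustrative value).
high_await() {
  local threshold=${1:-20}
  awk -v limit="$threshold" '
    # Header line: remember the position of every column by name
    $1 ~ /^Device/ { for (i = 1; i <= NF; i++) col[$i] = i; next }
    # Device lines: compare the await column with the limit
    NF > 1 && ("await" in col) && $(col["await"]) + 0 > limit + 0 {
      print $1, "await=" $(col["await"]) " ms"
    }
  '
}
```

Usage: iostat -dx 2 | high_await 20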

Interpretation of iostat

More details here.

| iostat field | Meaning | Windows analog / notes |
|---|---|---|
| rrqm/s | Read requests merged per second before being queued to the device | No direct analog; keep it as a separate column for Linux hosts |
| wrqm/s | Write requests merged per second before being queued to the device | No direct analog; keep it as a separate column for Linux hosts |
| r/s | Read requests issued to the device per second | `\LogicalDisk(*)\Disk Reads/sec` |
| w/s | Write requests issued to the device per second | `\LogicalDisk(*)\Disk Writes/sec` |
| r/s + w/s | Total I/O per second | `\LogicalDisk(*)\Disk Transfers/sec` |
| rsec/s | Sectors read per second (iostat sectors are 512-byte units) | rsec/s × 512 = `\LogicalDisk(*)\Disk Read Bytes/sec` |
| wsec/s | Sectors written per second (512-byte units) | wsec/s × 512 = `\LogicalDisk(*)\Disk Write Bytes/sec` |
| avgrq-sz | Average request size, in sectors | avgrq-sz × 512 = `\LogicalDisk(*)\Avg. Disk Bytes/Transfer`, i.e. the average I/O block size; add it as a column |
| avgqu-sz | Average number of requests in the device queue | `\LogicalDisk(*)\Avg. Disk Queue Length`. iostat does not split it into reads and writes, but the read/write ratio can be derived from r/s and w/s, so for Linux the separate `Avg. Disk Read Queue Length` and `Avg. Disk Write Queue Length` counters can be skipped |
| await | Average time (ms) for I/O requests to be served, including time spent in the queue | `\LogicalDisk(*)\Avg. Disk sec/Transfer` (reported in seconds, multiply by 1000); this is the latency, add it as a column |
| svctm | Average service time (ms); deprecated in newer sysstat versions | No direct analog; useful as a separate column for Linux hosts |
| %util | Percentage of elapsed time during which I/O requests were issued to the device | Roughly 100 − `\LogicalDisk(*)\% Idle Time`. This is a device-busy metric, not CPU load; for a LUN serving many requests in parallel, 100% does not necessarily mean the storage is saturated |
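The conversions in the table can be scripted. This sketch is a hypothetical helper that assumes the older sysstat column layout shown earlier and turns each iostat device line into the Windows-style quantities. For the sdb line above it gives 454 transfers/s, 238,026,752 write bytes/s (227 MiB/s) and 524,288 bytes (512 KiB) per transfer. As a sanity check, Little's law links these columns: 454 requests/s × 0.150 s ≈ 68 requests in flight, close to the reported avgqu-sz of 67.42.

```shell
# Hypothetical helper: derive Windows-style counters from `iostat -dx` device lines
# (older sysstat layout, columns located by header name; sectors are 512 bytes).
iostat_to_windows() {
  awk '
    $1 ~ /^Device/ { for (i = 1; i <= NF; i++) col[$i] = i; next }
    NF > 1 && ("await" in col) {
      rs = $(col["r/s"]); ws = $(col["w/s"])
      printf "%s transfers/s=%.2f read_bytes/s=%.0f write_bytes/s=%.0f bytes/transfer=%.0f latency_s=%.5f\n",
        $1, rs + ws, $(col["rsec/s"]) * 512, $(col["wsec/s"]) * 512,
        $(col["avgrq-sz"]) * 512, $(col["await"]) / 1000
    }
  '
}
```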

Linux elevator

Now about the elevator (I/O scheduler) values.

By default, it is set to cfq or deadline:

```
cat /sys/block/sda/queue/scheduler
noop anticipatory deadline [cfq]
```

It is recommended to set it to noop:

```
echo noop > /sys/block/sda/queue/scheduler

# Check: print every queue parameter of the device as file:value
cd /sys/block/sda/queue
grep . *
scheduler:[noop] deadline cfq
```

To make the setting permanent, add "elevator=noop" to the kernel boot parameters in /etc/grub.conf; it then applies to all block devices. Alternatively, add an appropriate script to /etc/rc.local to set the scheduler individually for each block device.

Example script that sets the scheduler to noop for sdb.

Do not forget to change sdb to the name of your block device.

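```shell
# Drop any "exit" lines from rc.local, then append the scheduler setting followed by "exit 0"
cat /etc/rc.local | grep -iv "^exit" > /tmp/temp
echo -e "echo noop > /sys/block/sdb/queue/scheduler\nexit 0" >> /tmp/temp
cat /tmp/temp > /etc/rc.local; rm /tmp/temp
```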
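Instead of hard-coding sdb, the scheduler can be set on every disk that reports NETAPP as its SCSI vendor (visible in /sys/block/sdX/device/vendor). The function below is a hypothetical sketch that could be called from /etc/rc.local; SYSFS_ROOT is an invented override (default /sys) that exists only so the loop can be tried against a copy of the directory tree. Devices that appear later, for example after a rescan, are not covered until it runs again.

```shell
# Hypothetical helper: set an I/O scheduler (default noop) on every sdX whose
# SCSI vendor string is NETAPP. SYSFS_ROOT is an invented override, default /sys.
set_netapp_scheduler() {
  local sched=${1:-noop} root=${SYSFS_ROOT:-/sys} dev vendor
  for dev in "$root"/block/sd*; do
    [ -r "$dev/device/vendor" ] || continue
    vendor=$(tr -d ' ' < "$dev/device/vendor")   # vendor is space-padded, e.g. "NETAPP  "
    if [ "$vendor" = "NETAPP" ]; then
      echo "$sched" > "$dev/queue/scheduler"
      echo "${dev##*/}: scheduler set to $sched"
    fi
  done
}
```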

Virtual memory sysctl settings

It is worth selecting the optimal values of the OS virtual memory sysctl parameters experimentally: vm.dirty_background_ratio, vm.dirty_ratio and vm.swappiness.

Checking sysctl values

```
sysctl -a | grep dirty
vm.dirty_background_bytes = 0
vm.dirty_background_ratio = 10
vm.dirty_bytes = 0
vm.dirty_expire_centisecs = 3000
vm.dirty_ratio = 20
vm.dirty_writeback_centisecs = 500

sysctl -a | grep swappiness
vm.swappiness = 60
```

For example, for one customer running Red Hat Enterprise Linux 6 with NetApp storage with an SSD cache over an FC 8G connection, the optimal values were vm.dirty_ratio = 2 and vm.dirty_background_ratio = 1, which significantly reduced the host CPU load. Use the Linux Host Validator and Configurator utility to verify the optimal Linux host settings. When testing the SnapDrive utility for Linux (or other Unix-like OSes), use the SnapDrive Configuration Checker for Unix. For more information about choosing optimal vm.dirty* values, see the Support site here. If you run an Oracle database or virtualization, it is recommended to set vm.swappiness = 0 inside such a Linux machine. This value allows swap to be used only when physical memory really runs out, which is optimal for such workloads.

Set sysctl values

```shell
sysctl -w vm.dirty_background_ratio=1
sysctl -w vm.dirty_ratio=2
echo vm.dirty_background_ratio=1 >> /etc/sysctl.conf
echo vm.dirty_ratio=2 >> /etc/sysctl.conf

# for guest VMs or Oracle DB
sysctl -w vm.swappiness=0
echo vm.swappiness=0 >> /etc/sysctl.conf

# Reload values from /etc/sysctl.conf
sudo sysctl -p
```
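Appending with echo >> on every run leaves several copies of the same key in /etc/sysctl.conf. The hypothetical idempotent helper sketched below updates an existing key in place or appends it once; CONF is an invented override of the file path (default /etc/sysctl.conf) for trying it on a copy, and the in-place edit relies on GNU sed -i.

```shell
# Hypothetical helper: set "key = value" in a sysctl.conf-style file without duplicates.
# CONF is an invented override of the target file, default /etc/sysctl.conf.
set_sysctl_conf() {
  local key=$1 value=$2 conf=${CONF:-/etc/sysctl.conf} re
  re=${key//./[.]}            # make the dots in the key match literally in the regex
  touch "$conf"
  if grep -qE "^[[:space:]]*${re}[[:space:]]*=" "$conf"; then
    # Rewrite every existing assignment of this key in place (GNU sed -i)
    sed -i -E "s|^[[:space:]]*${re}[[:space:]]*=.*|${key} = ${value}|" "$conf"
  else
    echo "${key} = ${value}" >> "$conf"
  fi
}
```

Usage: set_sysctl_conf vm.dirty_ratio 2 && sysctl -p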

I/O queue size (I/O queue length)

The default value is usually 128; the right value has to be found manually. Increasing the queue length makes sense for random I/O, which generates many seek operations on the disk subsystem. Note that nr_requests is the block-layer request queue of the device; the queue depth of the HBA itself is set separately (see Drivers). Change it as follows:

```shell
echo 100000 > /sys/block/[DEVICE]/queue/nr_requests
```

Guest OS

In some cases VMFS performs better than RDM: in some FC 4G tests you can get 300 MB/s with VMFS and about 200 MB/s with RDM.

File system

The file system can significantly change the results of performance tests.

The FS block size must be a multiple of 4 KB. For example, if we run a synthetic load similar to OLTP, where the average I/O size is 8K, we set 8K. I would also point out that the FS itself, and its implementation for a specific OS and version, can greatly affect the overall performance picture. For example, writing database files with dd in 10 MB blocks over 100 streams to a UFS file system on a LUN presented over FC 4G from a FAS2240 with 21 + 2 SAS 600 10k disks in one aggregate showed 150 MB/s, while the same configuration with ZFS showed twice as much (approaching the theoretical maximum of the link), and the noatime parameter made no difference at all.
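A multi-stream dd write test of the kind described above can be sketched as follows. This is a hypothetical helper, not the exact command used in that test: it writes zeros from /dev/zero rather than database files, uses 1 MiB blocks, and times the run with one-second resolution. conv=fsync makes each dd flush its data before exiting, so the page cache does not inflate the result; on a real LUN you may also want oflag=direct to bypass the page cache entirely.

```shell
# Hypothetical helper: start STREAMS parallel dd writers, each writing SIZE_MB MiB of
# zeros in 1 MiB blocks to its own file under DIR, then report total size and wall time.
parallel_dd_write() {
  local dir=$1 streams=$2 size_mb=$3 i start end
  start=$(date +%s)
  for (( i = 1; i <= streams; i++ )); do
    # conv=fsync: flush the file to storage before dd exits
    dd if=/dev/zero of="$dir/ddtest.$i" bs=1M count="$size_mb" conv=fsync 2>/dev/null &
  done
  wait   # wait for all background dd processes of this shell
  end=$(date +%s)
  echo "wrote $(( streams * size_mb )) MiB in $(( end - start )) s"
}
```

Usage: parallel_dd_write /mnt/lun 100 100 (the mount point is only an example); remember to delete the ddtest.* files afterwards.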

Noatime on the host

At the file system level, you can set the noatime and nodiratime mount options, which stop file (and directory) access times from being updated; this often has a very positive effect on performance for file systems such as UFS, EXT3, etc. For one customer, mounting ext3 with noatime on Red Hat Enterprise Linux 6 greatly reduced the host CPU load.
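To make noatime permanent, the option goes into the fourth (options) field of the file system's /etc/fstab entry. The hypothetical helper below prints a modified copy of an fstab for one mount point, so the change can be reviewed before replacing the real file; awk rewrites the modified line with single spaces between fields.

```shell
# Hypothetical helper: print FSTAB (default /etc/fstab) with noatime,nodiratime added
# to the options of the entry mounted on MNT; other lines are printed unchanged.
add_noatime() {
  local mnt=$1 fstab=${2:-/etc/fstab}
  awk -v mnt="$mnt" '
    # Skip comments, match the mount point, and do not add the option twice
    $0 !~ /^[ \t]*#/ && $2 == mnt && $4 !~ /(^|,)noatime(,|$)/ {
      $4 = $4 ",noatime,nodiratime"
    }
    { print }
  ' "$fstab"
}
```

Usage: add_noatime /data > /tmp/fstab.new, review the file, and apply the option without a reboot with mount -o remount,noatime,nodiratime /data (the /data mount point is only an example).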

Loading table

For Linux machines, when creating the LUN you need to select the disk geometry (LUN type): "linux" (for machines without Xen) or "xen" if Linux LVM will be set up on this LUN from Dom0.

Misalignment

For any OS, you need to set the correct geometry when creating the LUN in the storage settings. If the FS block size is specified incorrectly, the LUN geometry is wrong, or the MBR / GPT partitioning is chosen incorrectly on the host, then during peak loads we will see "LUN misalignment" event messages in the NetApp FAS console. Sometimes these messages appear erroneously; if they appear only rarely, simply ignore them. You can verify this by running the lun stats command on the storage system.
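A common host-side cause of misalignment is an MBR partition starting at sector 63 (the old DOS default), i.e. 32,256 bytes into the LUN, which is not a multiple of 4 KiB. The sketch below checks a partition start taken from /sys/block/<disk>/<partition>/start, which the kernel reports in 512-byte sectors. The function names are hypothetical, and the check covers only the host partition offset, not the LUN type set on the storage.

```shell
# Hypothetical helper: succeed if a partition starting at START_SECTOR (512-byte units)
# begins on a 4 KiB boundary.
is_4k_aligned() {
  local start_sector=$1
  (( start_sector * 512 % 4096 == 0 ))
}

# Hypothetical wrapper for a plain SCSI disk, e.g. check_partition sdb sdb1
check_partition() {
  local disk=$1 part=$2 start
  start=$(cat "/sys/block/$disk/$part/start") || return 2
  if is_4k_aligned "$start"; then
    echo "$part starts at sector $start: aligned"
  else
    echo "$part starts at sector $start: misaligned"
  fi
}
```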

NetApp Host Utilities

Do not skip this step. The Host Utilities set the correct timeouts, HBA queue size and other settings on the host. Install Host Utilities after installing the drivers. They also display the connected LUNs and detailed information about them from the storage side. The utilities are free and can be downloaded from the NetApp support site. After installation, run the utility:

```
host_config <-setup> <-protocol fcp|iscsi|mixed> <-multipath mpxio|dmp|none> [-noalua]
```

It is located in the /opt/netapp/santools/ directory.

After that, you will most likely need to restart the host.

NetApp Linux Forum

Remember to search for more information and ask your questions at https://linux.netapp.com/forum.

Applications

Depending on your configuration, protocols, workloads and infrastructure, your applications may have their own tuning recommendations. For these, refer to the Best Practice Guides for the relevant applications in your configuration. The most common ones are:

- NetApp SnapManager for Oracle & SAN Network

- Oracle DB on Clustered ONTAP, NetApp for Oracle Database, Best Practices for Oracle Databases on NetApp Storage, SnapManager for Oracle

- Oracle VM

- DB2, DB2 and FlexClone, Deploying an IBM DB2 Multipartition Database

- MySQL

- Infrastructure for Web 2.0 / LAMP Applications powered by NetApp and MySQL

- Red Hat Enterprise Linux 6, KVM

- Lotus Domino 8.5 for Linux

- Citrix XenServer

- Deploying Red Hat Enterprise Linux OpenStack Platform 4 on NetApp Clustered Data ONTAP

- etc.

Drivers

Do not forget to install the drivers for your HBA (compiled for your kernel) before installing NetApp Host Utilities. Follow the recommendations for configuring the HBA: you often need to change the queue length and timeouts for optimal interaction with NetApp. If you use VMware ESXi or another hypervisor, remember to enable NPIV if you need to pass virtual HBAs through to virtual machines. Some NPIV-capable adapters, such as the QLogic 8100 series HBAs, can be configured with QoS.
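Before and after changing these values, it helps to see what the host currently uses. The hypothetical sketch below lists, for each SCSI disk whose vendor is NETAPP, the per-device queue depth and command timeout (in seconds) from /sys/block/sdX/device/ and the block-layer nr_requests. SYSFS_ROOT is an invented override (default /sys) used only for testing on a copy of the tree; limits set by the HBA driver itself, such as module parameters, are not shown.

```shell
# Hypothetical helper: report queue-related settings for every NETAPP sdX device.
# SYSFS_ROOT is an invented override, default /sys.
report_netapp_queues() {
  local root=${SYSFS_ROOT:-/sys} dev vendor
  for dev in "$root"/block/sd*; do
    [ -r "$dev/device/vendor" ] || continue
    vendor=$(tr -d ' ' < "$dev/device/vendor")
    [ "$vendor" = "NETAPP" ] || continue
    printf '%s queue_depth=%s timeout=%s nr_requests=%s\n' "${dev##*/}" \
      "$(cat "$dev/device/queue_depth")" "$(cat "$dev/device/timeout")" \
      "$(cat "$dev/queue/nr_requests")"
  done
}
```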

Compatibility

Make extensive use of the compatibility matrix in your practice to reduce potential problems in your data center infrastructure.

I am sure that over time I will have more to add to this article on optimizing the Linux host, so check back from time to time.

Please report errors in the text via private message.

Comments and additions, on the other hand, are welcome in the comments.