Time measurement with nanosecond precision

A couple of months ago I reached a historic moment: the standard operating system tools for measuring time were no longer enough for me. I needed to measure time with nanosecond accuracy and with nanosecond overhead.

I decided to write a library that would solve this problem. At first glance it seemed there was not much to do. But on closer examination, as always, there turned out to be many interesting problems to deal with. In this article I will talk about those problems and how they were solved.

Since many different kinds of time can be measured on a computer, let me clarify that this article is about “stopwatch time”, also known as wall-clock time, real time, or elapsed time. That is, the simple "human" time that we note at the start of a task and again at its end.

Microsecond - almost eternity

Over the past few years, developers of high-performance systems have become accustomed to the microsecond time scale. In a few microseconds you can read data from an NVMe disk. In a few microseconds you can send data over a network. Not over every network, of course, but over InfiniBand, easily.

At the same time, the microsecond itself has structure. A complete I/O stack consists of several software and hardware components, and the delays introduced by some of them lie at the sub-microsecond level.

Microsecond accuracy is no longer sufficient to measure delays of this magnitude. Accuracy is not the only thing that matters, though; so does the overhead of the measurement itself. The Linux system call clock_gettime() returns time with nanosecond precision. On the machine I have at hand (Intel® Xeon® CPU E5-2630 v2 @ 2.60GHz), this call takes about 120 ns. A very good number. In addition, clock_gettime() behaves quite predictably, which makes it possible to account for the overhead of the call and effectively measure with an accuracy on the order of tens of nanoseconds. But note the following. To measure a time interval you need two such calls: one at the beginning and one at the end. That is, you spend 240 ns. If tightly spaced intervals on the order of 1-10 µs are measured, those 240 ns amount to roughly 2.4% to 24% of each interval.

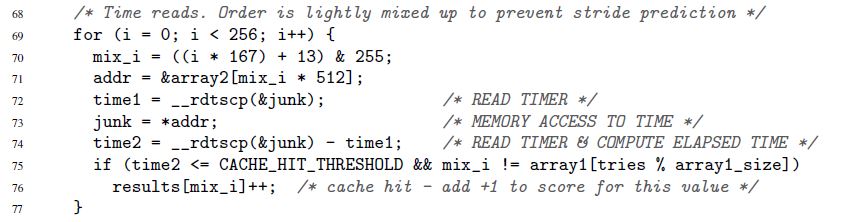
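To get a feel for the overhead discussed above on your own machine, a rough sketch like the following can help. The helper names are hypothetical and are not part of the library; the sketch assumes a POSIX system and simply averages the cost of many back-to-back clock_gettime() calls.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

/* Flatten a timespec into a single nanosecond count. */
static uint64_t timespec_to_ns(const struct timespec *ts)
{
    return (uint64_t)ts->tv_sec * 1000000000ull + (uint64_t)ts->tv_nsec;
}

/* Average cost of one clock_gettime() call, in nanoseconds,
 * estimated over `calls` back-to-back calls (hypothetical helper). */
static double clock_gettime_cost_ns(unsigned calls)
{
    struct timespec start, end, scratch;

    if (calls == 0)
        return 0.0;

    clock_gettime(CLOCK_MONOTONIC, &start);
    for (unsigned i = 0; i < calls; i++)
        clock_gettime(CLOCK_MONOTONIC, &scratch);
    clock_gettime(CLOCK_MONOTONIC, &end);

    return (double)(timespec_to_ns(&end) - timespec_to_ns(&start)) / calls;
}
```

Calling clock_gettime_cost_ns(1000000) gives a per-call average; the exact figure depends on the CPU, the kernel and the clock source, so it should be treated as an estimate only.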

I started this section with how the I/O stack has accelerated in recent years. That is a new reason to want fast and accurate time measurement, but not the only one. The need has always existed. For example, there has always been code that someone wanted to speed up by at least one processor cycle. Or here is another example, from the original paper on the sensational Spectre vulnerability:

Here, in lines 72-74, the execution time of a single memory access is measured. True, Spectre is not interested in nanoseconds. The time can be measured in "parrots", that is, in arbitrary units. We will return to parrots and seconds later.

Time-stamp counter

The key to fast and accurate time measurement is a special processor counter. Its value is usually stored in a dedicated register and is usually, but not always, accessible from user space. Different architectures call the counter differently:

- time-stamp counter on x86

- time base register on PowerPC

- interval time counter on Itanium

- etc.

Below, I will always use the name “time-stamp counter” or TSC, although I will actually mean any such counter, regardless of architecture.

Reading the TSC is usually, but again not always, possible with a single instruction. Here is an example for x86. Strictly speaking, this is not a bare assembly instruction but GNU inline assembly:

uint32_t eax, edx;

__asm__ __volatile__( "rdtsc" : "=a" (eax), "=d" (edx));

The “rdtsc” instruction places the two 32-bit halves of the TSC in the eax and edx registers (the low half in eax, the high half in edx). From them you can "glue" together a single 64-bit value.
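The gluing step can be sketched as follows. The function name read_tsc is hypothetical, and the non-x86 branch is my own assumption added only so that the sketch compiles elsewhere: it falls back to clock_gettime() and is not a real TSC read.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

/* Read the counter and return it as one 64-bit value (hypothetical helper). */
static inline uint64_t read_tsc(void)
{
#if defined(__x86_64__) || defined(__i386__)
    uint32_t eax, edx;

    /* rdtsc: low 32 bits go to eax, high 32 bits go to edx */
    __asm__ __volatile__("rdtsc" : "=a"(eax), "=d"(edx));
    return ((uint64_t)edx << 32) | eax;
#else
    /* Assumption for portability only: not a TSC, just a monotonic clock. */
    struct timespec ts;

    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000ull + (uint64_t)ts.tv_nsec;
#endif
}
```

An interval in ticks is then the difference between two such reads, for example `uint64_t t0 = read_tsc(); work(); uint64_t ticks = read_tsc() - t0;`, where work() stands for whatever is being measured.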

Once again: this instruction (and similar ones) can in most cases be executed directly from user space. No system calls. Minimal overhead.

What needs to be done now to measure time?

- Execute one such instruction at the beginning of the interval of interest. Remember the counter value

- Execute one such instruction at the end. We assume that the counter value increases between the first instruction and the second. Otherwise, what is it for? Remember the second value

- Compute the difference between the two saved values. This is our time.

It looks simple, but ...

The time measured by the described procedure is expressed in "parrots", not in seconds. But sometimes parrots are exactly what you need. There are situations where what matters is not the absolute values of time intervals but how different intervals relate to each other. The Spectre example above demonstrates exactly this situation. The duration of each individual memory access does not matter. What matters is that accesses to some addresses complete much faster than accesses to others (depending on whether the data is in the cache or in main memory).

What if you need not parrots but seconds, microseconds, nanoseconds, and so on? Two fundamentally different cases can be distinguished here:

- Nanoseconds are needed, but later. That is, it is acceptable to make all the measurements in parrots first and save them somewhere (for example, in memory) for further processing. Only after the measurements are complete are the collected parrots converted, at leisure, into seconds

- Nanoseconds are needed on the fly. For example, your measurement process has some “consumer” that you do not control and that expects time in a “human” format

The first case is simple; the second requires resourcefulness. The conversion must be as efficient as possible. If it consumes a lot of resources, it can badly distort the measurement process. We will talk about efficient conversion below. For now we have merely identified this problem and move on to another.

Time-stamp counters are not as simple as we would like. On some architectures:

- the TSC is not guaranteed to be updated at a high frequency. If the TSC is updated, say, once per microsecond, it cannot be used to resolve nanoseconds

- the frequency at which the TSC is updated may vary over time

- on different CPUs in the system, the TSC may be updated at different frequencies

- there may be a shift between the TSCs ticking on different CPUs.

Here is an example illustrating the last problem. Suppose we have a system with two CPUs: CPU1 and CPU2. Suppose that the TSC on the first CPU lags behind the TSC on the second by a number of ticks equivalent to 5 seconds. Suppose further that the system runs a thread that measures the duration of a computation it performs itself. To do this, the thread first reads the TSC, then does the computation, and then reads the TSC a second time. If the thread stays on a single CPU, whichever one, for its whole life, there is no problem. But what if the thread started on CPU1, read the first TSC value there, and then in the middle of the computation was moved by the operating system to CPU2, where it read the second TSC value? In that case the computation will appear to have taken 5 seconds longer than it actually did.

Because of the problems listed above, the TSC cannot serve as a reliable time source on some systems. However, on other systems that “suffer” from the same problems, the TSC can still be used. This is made possible by special architectural features:

- the hardware can raise a special interrupt each time the TSC update frequency changes, and it also provides a way to find out the current frequency. Alternatively, the TSC update frequency can be placed under the control of the operating system (see “Power ISA Version 2.06 Revision B, Book II, Chapter 5”)

- along with the TSC value, the hardware can also provide the ID of the CPU on which the value was read (see Intel's RDTSCP instruction, "Intel 64 and IA-32 Architectures Software Developer's Manual", Volume 2)

- on some systems, the TSC value of each CPU can be adjusted programmatically (see Intel's WRMSR instruction and the IA32_TIME_STAMP_COUNTER register, "Intel 64 and IA-32 Architectures Software Developer's Manual", Volume 3)

In general, the topic of how time counters are implemented on different architectures is fascinating and extensive. If you have the time and interest, I recommend diving in. Among other things you will find out, for example, that some systems let you check programmatically whether the TSC can serve as a reliable time source.

So, there are many architectural implementations of the TSC, each with its own peculiarities. Interestingly, though, a general trend has emerged across this whole zoo. Modern hardware and operating systems strive to ensure that:

- TSC ticks at the same frequency on every CPU in the system

- this frequency does not change in time

- there is no shift between the TSCs ticking on different CPUs

When designing my library, I decided to start from this premise rather than from the hodgepodge of hardware implementations.

Library

I did not try to rely on the hardware features of a heap of different architectures. Instead, I decided that my library would target the modern trend. Its focus is purely empirical:

- it lets you test experimentally how reliable the TSC is as a time source

- it also lets you compute experimentally the parameters needed for fast conversion of ticks into nanoseconds

- naturally, the library provides convenient interfaces for reading the TSC and for converting ticks into nanoseconds on the fly

The library code is available here. It builds and runs only on Linux.

In the code you can see the implementation details of all the methods discussed below.

TSC reliability estimates

The library provides an interface that returns two estimates:

- the maximum shift between the counters belonging to different CPUs. Only the CPUs available to the process are considered. For example, if three CPUs are available to the process and at the same moment the TSCs on these CPUs read 50, 150 and 20, then the maximum shift is 150 - 20 = 130. Naturally, the library cannot determine the real maximum shift experimentally, but it gives an estimate that bounds this shift. What to do with the estimate next, and how to use it, is up to the client code. But the idea is roughly as follows. The maximum shift is the largest amount by which a measurement made by the client code may be distorted. For instance, in our example with three CPUs, suppose the client code started measuring time on CPU3 (where the TSC was 20) and finished on CPU2 (where the TSC was 150). Then an extra 130 ticks creep into the measured interval, and never more than that. The difference between CPU1 and CPU2 would be only 100 ticks. Given an estimate of 130 ticks (in reality it will be much more conservative), the client can decide whether a distortion of this size is acceptable.

- whether TSC values read in succession on the same or on different CPUs increase. The idea is as follows. Suppose we have several CPUs whose clocks are synchronized and tick at the same frequency. Then, if you read the time on one CPU and then read it again on any of the available CPUs, the second value must be greater than the first.

Below I will call this estimate the TSC monotonicity estimate.

Now let's see how the first estimate is obtained:

- one of the CPUs available to the process is declared the "base" CPU

- all the other CPUs are then visited in turn, and for each of them the shift TSC_on_current_CPU - TSC_on_base_CPU is computed. This is done as follows:

  - a) three values are measured consecutively (one right after another): TSC_base_1, TSC_current, TSC_base_2. Here, current means that the value was measured on the current CPU, and base means that it was measured on the base CPU

  - b) the shift TSC_on_current_CPU - TSC_on_base_CPU must lie in the interval [TSC_current - TSC_base_2, TSC_current - TSC_base_1]. This assumes that the TSC ticks at the same frequency on both CPUs

  - c) steps a)-b) are repeated several times, and the intersection of all the intervals obtained in step b) is computed. The resulting interval is taken as the estimate of the shift TSC_on_current_CPU - TSC_on_base_CPU

- once the shift estimate relative to the base CPU has been obtained for every CPU, it is easy to estimate the maximum shift between all available CPUs:

  - a) the smallest interval that contains all the resulting intervals obtained in the previous step is computed

  - b) the width of this interval is taken as the estimate of the maximum shift between the TSCs ticking on different CPUs.
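The interval arithmetic behind these steps can be sketched as follows. This is an illustration only, not the library's code: the type and function names are hypothetical, and including the base CPU itself with a zero shift in the enclosing interval is my assumption.

```c
#include <stddef.h>
#include <stdint.h>

/* Closed interval [lo, hi] of possible shifts, in ticks. */
struct interval { int64_t lo, hi; };

/* Bounds on (TSC_current - TSC_base) from one triple of consecutive
 * reads: base CPU, current CPU, base CPU again. */
static struct interval shift_bounds(uint64_t base_1, uint64_t current,
                                    uint64_t base_2)
{
    struct interval r = { (int64_t)(current - base_2),
                          (int64_t)(current - base_1) };
    return r;
}

/* Narrow the estimate by intersecting two intervals for the same CPU. */
static struct interval intersect(struct interval a, struct interval b)
{
    struct interval r = { a.lo > b.lo ? a.lo : b.lo,
                          a.hi < b.hi ? a.hi : b.hi };
    return r;
}

/* Width of the smallest interval enclosing all per-CPU shift intervals.
 * Assumption: the base CPU contributes the zero shift [0, 0]. */
static int64_t max_shift_estimate(const struct interval *per_cpu, size_t n)
{
    struct interval all = { 0, 0 };

    for (size_t i = 0; i < n; i++) {
        if (per_cpu[i].lo < all.lo) all.lo = per_cpu[i].lo;
        if (per_cpu[i].hi > all.hi) all.hi = per_cpu[i].hi;
    }
    return all.hi - all.lo;
}
```

With exact shifts of +100 and -30 relative to the base CPU (the 50, 150, 20 example above, taking the CPU that reads 50 as the base), max_shift_estimate returns 130. In practice each per-CPU interval has nonzero width, so the estimate is more conservative.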

To assess monotonicity, the library implements the following algorithm:

- Suppose N CPUs are available to the process

- Measure TSC on CPU1

- Measure TSC on CPU2

- ...

- Measure TSC on CPUN

- Measure TSC on CPU1 again

- Check that the measured values increase monotonically from the first to the last.

It is important here that the first and the last values are measured on the same CPU. Here is why. Suppose we have 3 CPUs. Suppose that the TSC on CPU2 is shifted by +100 ticks relative to the TSC on CPU1, and that the TSC on CPU3 is shifted by +100 ticks relative to the TSC on CPU2. Consider the following chain of events:

- Read the TSC on CPU1. Suppose the value is 10

- 2 ticks pass

- Read the TSC on CPU2. It should be 112

- 2 ticks pass

- Read the TSC on CPU3. It should be 214

So far, the clocks look synchronized. But let's read the TSC on CPU1 again:

- 2 ticks pass

- Read the TSC on CPU1. It should be 16

Oops! Monotonicity is broken. It turns out that measuring the first and the last values on the same CPU makes it possible to detect more or less large shifts between the clocks. The next question, of course, is: “How large?” The size of the shift that can be detected depends on the time that passes between successive TSC measurements. In the example above, this is just 2 ticks, so shifts between clocks greater than 2 ticks will be detected. Generally speaking, shifts smaller than the time elapsed between successive measurements will not be detected. So the more tightly the measurements are packed in time, the better. The accuracy of both estimates depends on this. The more tightly the measurements are packed:

- the lower the maximum shift estimate

- the greater the confidence in the monotonicity estimate
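The check itself is simple. A minimal sketch, with a hypothetical function name, applied to the three-CPU example above:

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Returns true if every value is strictly greater than the previous one. */
static bool tsc_strictly_increasing(const uint64_t *vals, size_t n)
{
    for (size_t i = 1; i < n; i++) {
        if (vals[i] <= vals[i - 1])
            return false;
    }
    return true;
}
```

For the reads 10, 112, 214 taken on CPU1, CPU2 and CPU3 the check passes. Once the chain is closed with a second read on CPU1, giving 10, 112, 214, 16, it fails, which is exactly how the shift is exposed.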

In the next section we will talk about how to take tightly packed measurements. Here I will add that while computing the TSC reliability estimates, the library also performs a number of simple sanity checks, for example:

- limited verification that TSC on different CPUs is ticking at the same speed

- check that the counters do change in time, and not just show the same value

Two methods for collecting counter values

In the library, I implemented two methods for collecting TSC values:

- Switching between CPUs. In this method, all the data needed to assess TSC reliability is collected by a single thread that “jumps” from one CPU to another. Both algorithms described in the previous section are suitable for this method and are not suitable for the other one.

There is no practical use for switching between CPUs. The method was implemented just for fun. Its problem is that the time required to move a thread from one CPU to another is very long. Accordingly, a lot of time passes between successive TSC measurements, and the accuracy of the estimates is very low. For example, a typical estimate of the maximum shift between TSCs comes out at around 23,000 ticks.

Nevertheless, the method has a couple of advantages:

- it is absolutely deterministic. If you need to measure the TSC on CPU1, CPU2 and CPU3 in sequence, you just go and do it: switch to CPU1, read the TSC, switch to CPU2, read the TSC, and finally switch to CPU3 and read the TSC

- presumably, as the number of CPUs in a system grows quickly, the time needed to switch between them should grow much more slowly. So in theory there may be a system, a very large one, on which using the method would be justified. Still, this is unlikely

- Measurements ordered by CAS. In this method, data is collected in parallel by multiple threads, with one thread started on each available CPU. The measurements made by the different threads are ordered into a single sequence using the “compare-and-swap” operation. Below is a piece of code that shows how this is done.

The idea of the method is borrowed from fio, a popular tool for generating I/O load.

The reliability estimates obtained with this method already look very good. For example, the estimate of the maximum shift comes out at the level of several hundred ticks. And the monotonicity check can catch clock desynchronization to within hundreds of ticks.

However, the algorithms given in the previous section are not suitable for this method. For them it is important that the TSC values are measured in a predetermined order. The "measurements ordered by CAS" method does not allow this. Instead, a long sequence of measurements in random order is collected first, and then (different) algorithms try to find in this sequence the values read on "suitable" CPUs.

I will not present these algorithms here, so as not to abuse your attention. They can be seen in the code, which has plenty of comments. In spirit, these algorithms are the same as before. A fundamentally new element is a check of how statistically “good” the randomly collected TSC sequences are. It is also possible to set the minimum acceptable level of statistical significance for the TSC reliability estimates.

Theoretically, on VERY large systems the “measurements ordered by CAS” method can give poor results. The method requires processors to compete for access to a shared memory location. If there are a lot of processors, the contention can become very intense. As a result, it will be difficult to build a measurement sequence with good statistical properties. At the moment, however, this situation looks unlikely.

I promised some code. Here is how the measurements are arranged into a single chain with the help of CAS.

    for ( uint64_t i = 0; i < arg->probes_count; i++ )
    {
        uint64_t seq_num = 0;
        uint64_t tsc_val = 0;

        do
        {
            /* Read the current value of the shared sequence counter */
            __atomic_load( seq_counter, &seq_num, __ATOMIC_ACQUIRE);
            /* Full barrier: the TSC read must not move above the load */
            __sync_synchronize();
            tsc_val = WTMLIB_GET_TSC();
            /* Claim seq_num only if no other thread advanced the counter */
        } while ( !__atomic_compare_exchange_n( seq_counter, &seq_num, seq_num + 1, false, __ATOMIC_ACQ_REL, __ATOMIC_RELAXED));

        arg->tsc_probes[i].seq_num = seq_num;
        arg->tsc_probes[i].tsc_val = tsc_val;
    }

This code is executed on every available CPU. All threads have access to a shared variable seq_counter. Before reading the TSC, a thread reads the value of this variable and stores it in the variable seq_num. Then it reads the TSC. Then it tries to atomically increase seq_counter by one, but only if the value of the variable has not changed since it was read. If the operation succeeds, the thread has managed to “stake out” the sequence number stored in seq_num for the measured TSC value. The next sequence number that can be staked out (possibly by another thread) will be greater by one, because that number is taken from seq_counter, and every successful call to __atomic_compare_exchange_n() increases this variable by one.

__atomic with __sync ???

For the sake of pedantry, it should be noted that using built-in functions of the __atomic family together with a function from the legacy __sync family looks ugly. __sync_synchronize() is used in the code to prevent the TSC read from being reordered with the operations above it. That requires a full memory barrier. Formally, the __atomic family has no function with the corresponding properties. In fact it does, though: __atomic_signal_fence(). This function orders a thread's computations with respect to signal handlers executing in the same thread. In essence, that is a full barrier. Nevertheless, this is not stated explicitly, and I prefer code without hidden semantics. Hence __sync_synchronize(), a rock-solid full memory barrier.

Another point worth mentioning here is making sure that all measuring threads start at more or less the same time. We want the TSC values read on different CPUs to be interleaved as thoroughly as possible. We are not satisfied with a situation where, for example, one thread starts first, finishes its work, and only then do all the others start. The resulting TSC sequence would have useless properties, and no estimates could be extracted from it. The simultaneous start of all threads is important, and the library takes measures to ensure it.

Tick conversion to nanoseconds on the fly

After verifying the reliability of the TSC, the library's second big job is converting ticks to nanoseconds on the fly. I borrowed the idea of this conversion from the already mentioned fio. However, I had to make several significant improvements because, as my analysis showed, the conversion procedure in fio itself does not work well enough. Its accuracy is low.

Let's start right away with an example.

Ideally, we would like to convert ticks to nanoseconds like this:

ns_time = tsc_ticks / tsc_per_ns

We want the time spent on conversion to be minimal. Therefore, we aim to use only integer arithmetic. Let's see what that may cost us.

If tsc_per_ns = 3, simple integer division works fine in terms of accuracy: ns_time = tsc_ticks / 3.

But what if tsc_per_ns = 3.333? If this number is rounded to 3, the accuracy of the conversion will be very low. The problem can be overcome as follows: ns_time = (tsc_ticks * factor) / (3.333 * factor). If the multiplier factor is large enough, the accuracy will be good. But something will remain bad, namely the overhead of the conversion. Integer division is a very expensive operation. For example, on x86 it takes 10+ cycles. Plus, integer division operations are not always pipelined.

We rewrite our formula in the equivalent form ns_time = (tsc_ticks * factor / 3.333) / factor. The first division is not a problem: we can precompute (factor / 3.333). But the second division is still a pain. To get rid of it, let's choose factor to be a power of two. Then the second division can be replaced by a bit shift, a simple and fast operation.

How large can factor be? Unfortunately, it cannot be arbitrarily large. It is limited by the condition that the multiplication in the numerator must not overflow a 64-bit type. Yes, we want to use only native types. Again, to keep the conversion overhead to a minimum.

Now let's see how large factor can be in our particular example. Suppose we want to work with time intervals of up to one year. In a year the TSC ticks the following number of times: 3.333 * 1000000000 * 60 * 60 * 24 * 365 = 105109488000000000. Divide the maximum value of a 64-bit type by this number: 18446744073709551615 / 105109488000000000 ~ 175.5. Thus, the expression (factor / 3.333) must not exceed this value. Then we have factor <= 175.5 * 3.333 ~ 584.9. The largest power of two that does not exceed this number is 512. Consequently, our conversion formula takes the form:

ns_time = (tsc_ticks * 512 / 3.333) / 512

Or:

ns_time = tsc_ticks * 153 / 512

Fine. Let's now see how accurate this formula is. One year contains 1000000000 * 60 * 60 * 24 * 365 = 31536000000000000 nanoseconds. Our formula gives: 105109488000000000 * 153 / 512 = 31409671218750000. The difference from the true value is 126328781250000 nanoseconds, or 126328781250000 / 1000000000 / 60 / 60 ~ 35 hours.

This is a big error. We want better accuracy. What if we measure time intervals of no more than an hour? I omit the calculations; they are completely identical to what has just been done. The final formula is:

ns_time = tsc_ticks * 1258417 / 4194304    (1)

The conversion error will be only 119305 nanoseconds per hour (less than 0.2 milliseconds). Very, very good. If the maximum convertible value is even less than an hour, the accuracy will be even better. But how do we make use of this? Surely we should not limit time measurement to one hour?
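The selection of the power-of-two factor can be reproduced with a short sketch. The names below are hypothetical and this is not the library's code. To stay in integer arithmetic, the sketch takes the tick rate as ticks per second (3.333 ticks per nanosecond becomes 3333000000 ticks per second) and calls the precomputed multiplier, factor divided by the tick rate per nanosecond, `mult`.

```c
#include <stdint.h>

struct tsc_scale {
    uint64_t mult;   /* precomputed factor / tsc_per_ns, rounded down */
    unsigned shift;  /* factor = 2^shift */
};

/* Pick the largest power-of-two factor for which max_ticks * mult
 * still fits in 64 bits. Both arguments must be nonzero. */
static struct tsc_scale choose_scale(uint64_t tsc_per_sec, uint64_t max_ticks)
{
    const uint64_t ns_per_sec = 1000000000ull;
    struct tsc_scale best = { 0, 0 };

    /* 2^34 * 10^9 still fits in 64 bits; 2^35 * 10^9 does not. */
    for (unsigned shift = 0; shift <= 34; shift++) {
        uint64_t mult = ((uint64_t)1 << shift) * ns_per_sec / tsc_per_sec;

        if (mult > UINT64_MAX / max_ticks)
            break;
        best.mult = mult;
        best.shift = shift;
    }
    return best;
}

/* Division-free conversion: one multiplication and one shift. */
static uint64_t ticks_to_ns(uint64_t ticks, struct tsc_scale s)
{
    return (ticks * s.mult) >> s.shift;
}
```

For a one-year range (105109488000000000 ticks) this yields mult = 153 and shift = 9, that is, a factor of 512. For a one-hour range (11998800000000 ticks) it yields mult = 1258417 and shift = 22, that is, a factor of 4194304, matching the numbers above.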

Let's note the following:

tsc_ticks = (tsc_ticks_per_1_hour * number_of_hours) + tsc_ticks_remainder

If we precompute tsc_ticks_per_1_hour, we can extract number_of_hours from tsc_ticks. Further, we know how many nanoseconds there are in one hour. So it is easy to convert into nanoseconds the part of tsc_ticks that corresponds to a whole number of hours. To finish the conversion, we need to convert tsc_ticks_remainder into nanoseconds. But we know that this many ticks elapsed in less than an hour. So, to convert it to nanoseconds, we can use formula (1). Done. This conversion mechanism suits us. Let's now generalize and optimize it.

First of all, we want flexible control over the conversion error. We do not want to tie the conversion parameters to a time interval of 1 hour. Let it be an arbitrary time interval:

tsc_ticks = modulus * number_of_moduli_periods + tsc_ticks_remainder

Once again, recall how to convert the remainder to nanoseconds:

ns_per_remainder = (tsc_ticks_remainder * factor / tsc_per_nsec) / factor

Compute the conversion parameters (we know that tsc_ticks_remainder < modulus):

modulus * (factor / tsc_per_nsec) <= UINT64_MAX

factor <= (UINT64_MAX / modulus) * tsc_per_nsec

2 ^ shift <= (UINT64_MAX / modulus) * tsc_per_nsec

For the sake of pedantry, it should be noted that within integer arithmetic the last inequality is not equivalent to the first. But I will not dwell on this. I will only say that the last inequality is stricter than the first and therefore safe to use.

After shift has been obtained from the last inequality, we compute:

factor = 2 ^ shift

mult = factor / tsc_per_nsec

And then these parameters are used to convert the remainder into nanoseconds:

ns_per_remainder = (tsc_ticks_remainder * mult) >> shift

So, we have sorted out the conversion of the remainder. The next problem to solve is extracting tsc_ticks_remainder and number_of_moduli_periods from tsc_ticks. As always, we want to do it quickly. As always, we do not want to use division. Therefore, we simply choose modulus to be a power of two:

modulus = 2 ^ remainder_bit_length

Then:

number_of_moduli_periods = tsc_ticks >> remainder_bit_length

tsc_ticks_remainder = tsc_ticks & (modulus - 1)

Excellent. We now know how to extract number_of_moduli_periods and tsc_ticks_remainder from tsc_ticks. And we know how to convert tsc_ticks_remainder to nanoseconds. It remains to figure out how to convert into nanoseconds the portion of the ticks that is a multiple of modulus. But this is simple:

ns_per_moduli = ns_per_modulus * number_of_moduli_periods

ns_per_modulus can be computed in advance, using the same formula by which we convert the remainder. That formula is valid for time spans no longer than modulus, and modulus itself is, of course, no longer than modulus.

ns_per_modulus = (modulus * mult) >> shift

That's it! We have managed to precompute all the parameters needed to convert ticks to nanoseconds on the fly. Let us now briefly summarize the conversion procedure:

- we have tsc_ticks

- number_of_moduli_periods = tsc_ticks >> remainder_bit_length

- tsc_ticks_remainder = tsc_ticks & (modulus - 1)

- ns = ns_per_modulus * number_of_moduli_periods + ((tsc_ticks_remainder * mult) >> shift)
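Put together, the whole scheme might look like the sketch below. It is an illustration under assumptions rather than the library's implementation: the struct and function names are hypothetical, the tick rate is passed as ticks per second, and the search for shift is a plain loop.

```c
#include <stdint.h>

struct tsc_conv {
    unsigned remainder_bit_length;
    uint64_t modulus;         /* 2^remainder_bit_length ticks */
    uint64_t mult;            /* 2^shift * 10^9 / tsc_per_sec */
    unsigned shift;
    uint64_t ns_per_modulus;  /* nanoseconds in one full modulus period */
};

/* Precompute all parameters. tsc_per_sec must be nonzero and
 * remainder_bit_length must be in the range 1..63. */
static struct tsc_conv tsc_conv_init(uint64_t tsc_per_sec,
                                     unsigned remainder_bit_length)
{
    struct tsc_conv c = { remainder_bit_length,
                          (uint64_t)1 << remainder_bit_length, 0, 0, 0 };

    /* Largest shift for which (modulus * mult) cannot overflow;
     * 2^34 * 10^9 is the last product that fits in 64 bits. */
    for (unsigned shift = 0; shift <= 34; shift++) {
        uint64_t mult = ((uint64_t)1 << shift) * 1000000000ull / tsc_per_sec;

        if (mult > UINT64_MAX / c.modulus)
            break;
        c.mult = mult;
        c.shift = shift;
    }
    c.ns_per_modulus = (c.modulus * c.mult) >> c.shift;
    return c;
}

/* On-the-fly conversion: shifts, a mask, two multiplications, one addition. */
static uint64_t tsc_to_ns(const struct tsc_conv *c, uint64_t tsc_ticks)
{
    uint64_t periods = tsc_ticks >> c->remainder_bit_length;
    uint64_t remainder = tsc_ticks & (c->modulus - 1);

    return c->ns_per_modulus * periods + ((remainder * c->mult) >> c->shift);
}
```

As a sanity check, with an idealized rate of exactly 2 ticks per nanosecond (tsc_per_sec = 2000000000) and remainder_bit_length = 32, one hour's worth of ticks (7200000000000) converts to exactly 3600000000000 ns. Note the parentheses around the shifted term: in C, `+` binds tighter than `>>`.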

In this procedure, the parameters remainder_bit_length, modulus, ns_per_modulus, mult and shift are computed in advance.

If you are still reading this post, well done. It is even possible that you are a performance analyst or a developer of high-performance software.

So. It turns out that we are not done yet :)

Remember how we computed the parameter mult? It was like this:

mult = factor / tsc_per_nsec

Question: where does tsc_per_nsec come from?

The number of ticks per nanosecond is a very small quantity. In fact, my library uses (tsc_per_sec / 1000000000) instead of tsc_per_nsec. That is:

mult = factor * 1000000000 / tsc_per_sec

And here two interesting questions arise:

- Why tsc_per_sec and not tsc_per_msec, for example?

- Where do we get this tsc_per_sec?

Let's start with the first. fio currently uses the number of ticks per millisecond, and this causes problems. On the machine whose parameters I gave above, tsc_per_msec = 2599998, while tsc_per_sec = 2599998971. If we bring these numbers to the same scale, their ratio is very close to one: 0.999999626. But if we use the first rather than the second, then for every second we get an error of 374 nanoseconds. Hence tsc_per_sec.

Next: how is tsc_per_sec computed? By direct measurement:

    start_system_time = clock_gettime()
    start_tsc = WTMLIB_GET_TSC()
    wait for some time
    end_system_time = clock_gettime()
    end_tsc = WTMLIB_GET_TSC()

“Some time” is a configurable parameter. It can be more than, less than, or equal to one second. Let's say it is half a second. Suppose further that the real difference between end_system_time and start_system_time turned out to be 0.6 seconds. Then tsc_per_sec = (end_tsc - start_tsc) / 0.6.

The library computes several values of tsc_per_sec this way. Then it uses standard methods to "clean" them of statistical noise and obtains a single tsc_per_sec value that can be trusted.

In the measurement scheme above, the order of the clock_gettime() and WTMLIB_GET_TSC() calls matters. It is important that the same time passes between the two WTMLIB_GET_TSC() calls as between the two clock_gettime() calls. Then system time can easily be related to TSC ticks, and the spread of tsc_per_sec values can truly be considered random. With this measurement scheme, tsc_per_sec values deviate from the mean in either direction with equal probability, and standard filtering methods can be applied to them.

Conclusion

That is probably all.

But the topic of efficient time measurement does not end here. There are many nuances. For those interested, I suggest exploring the following questions on your own:

- storing the conversion parameters in the cache or, even better, in registers

- how far can modulus be reduced (thereby increasing the accuracy of the conversion)?

- as we have seen, the accuracy of the conversion is affected not only by modulus but also by the length of the time interval to which the tick count is related (tsc_per_msec or tsc_per_sec). How should the influence of the two factors be balanced?

- TSC on a virtual machine. Can it be used?

- using standard operating system structures to store time. For example, fio stores its nanoseconds in the standard Linux timespec format. Here is how that happens:

    tp->tv_sec = nsecs / 1000000000ULL;

It turns out that TSC ticks are first converted to nanoseconds by a fast and efficient procedure, and then the entire gain is wiped out by the integer division needed to extract seconds from nanoseconds
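For reference, the full split of a nanosecond count into the two timespec fields looks roughly like this. The helper name is hypothetical and is not taken from fio; the point is only that both fields require a division-type operation on the 64-bit value.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

/* Split a flat nanosecond count into seconds and leftover nanoseconds. */
static struct timespec ns_to_timespec(uint64_t nsecs)
{
    struct timespec ts;

    ts.tv_sec = (time_t)(nsecs / 1000000000ull);   /* integer division */
    ts.tv_nsec = (long)(nsecs % 1000000000ull);    /* remainder */
    return ts;
}
```

If the consumer of the measurements can accept a flat 64-bit nanosecond count, this step can be skipped altogether.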

The methods discussed in this article make it possible to measure time intervals on the scale of a second with an accuracy on the order of several tens of nanoseconds. This is the accuracy I actually observe when using my library.

Interestingly, fio, from which I borrowed some of the methods, loses some 700-900 nanoseconds on the scale of a second (and there are three reasons for this). On top of that, it loses conversion speed because it stores time in the standard Linux format. However, I hasten to reassure fio fans. I sent the developers a description of all the conversion problems I found. People are already working on them, and they will be fixed soon.

I wish you all a lot of pleasant nanoseconds!
