Active analytics for your project

Consider, as our setting, an e-commerce project (an online store or another B2C online service). Let’s give this store a great team: clear leadership, quick marketing decisions, and flexible development (ready to respond quickly to changing requirements). Let’s also give it a certain level of quantitative success (say, from 1000 orders per day). Suppose the project is still a startup (or was until recently). Someday it will surely take over the world, but so far it has not implemented an ERP/CRM-class system for working with large volumes of orders and customers.

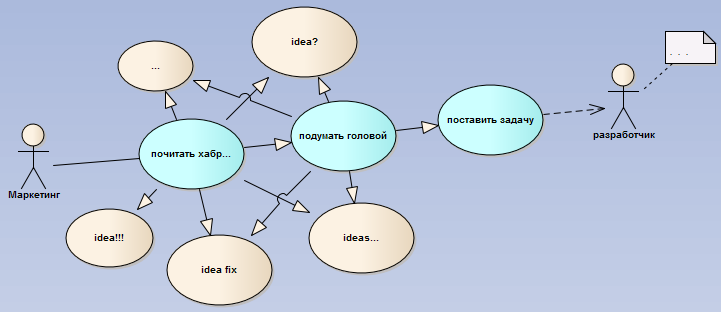

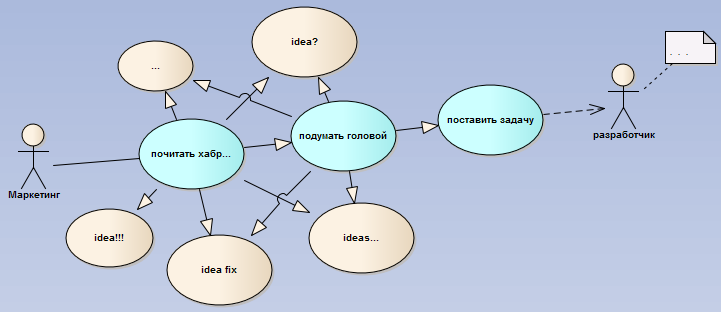

What usually happens during the active growth stage of such a business? Marketing looks for ways to:

- expand the audience and the customer acquisition channels;

- improve the quality of services;

- implement loyalty programs to retain good customers;

- develop affiliate networks.

It also reads about and invents other world-conquering business models.

Meanwhile, development gets a queue of tasks, some brilliant and some less so (but all of them urgent), affecting every process from a customer landing on the service to serving them again and again. The software side of the business quickly turns into spaghetti: logic for registering new customers, placing orders, calculating costs and tariffs, paying for orders and services, generating personal mailings, individual and group discount offers, running promotions, and so on.

Marketing, which at the start of the business was content with reports like “average order amount per month”, now wants “the average order amount excluding special offers, comparing two weeks in January with the same two weeks in September, for customers who placed more than one order in the last six months and registered more than two years ago, separately for customers of each gender, preferably only those who never contacted the call center, and all of it broken down by customer acquisition channel and by the phases of the moon”...

In haste and desperation, the database becomes overgrown with junk helper tables and fields that nobody needs (except marketers). Marketing happily develops the successful business models and discards the unsuccessful ones, but the fields and entire tables remain forever. As the customer and order base grows, the hastily assembled reports soon exceed any reasonable load on the system: they have to be moved into background jobs (if not onto a separate server), and the database now needs replication. Or, even more fun: the marketer has “learned SQL” and sits directly on your server, periodically running to the administrator to ask him to deal with this “I don’t know why the query is taking so long”.

And the database structure looks something like this (not the worst case):

Why is that bad? Because the users-orders-payments bundle should solve one problem: a customer receiving a service and paying for it. As soon as we give these entities any extraneous tasks, we increase dangerous coupling across the entire workflow.

A real-life example

First, imagine a simplified direct sales cycle in e-commerce (from the point of view of its most important participant, the customer):

For this process to run efficiently, quickly, and with a minimum of logical errors (everything should go “click, order, done”, otherwise the marketing “hook” breaks), it must contain nothing superfluous (so that the chance of it filling up with bugs stays minimal).

But then marketing comes along and says:

- After registration, send the customer an email.

- And if the customer came from a promotion, replace the usual greeting in it with a special one.

- Or maybe they came from an order for a trial period of the service: then use a completely different template.

- If the customer has not visited for a long time, send them a reminder email.

- If the customer came but left quickly, send an email asking why.

- If they come and wander around for N minutes without putting anything in the cart, offer a discount.

- And also pass along the track-id from partners, so that the commission on the order can be shared.

And this is just one piece of the whole chain; from here it only gets “worse”. Would you agree that the customer does not participate in these tasks at all, and that these backstage intrigues do nothing to satisfy their need? When you program all these conditions directly in the class (component or controller) that handles visits and customer registrations, that class gets fatter and accumulates more and more dependencies. And this class no longer just registers customers! More precisely, it does not only register them: it sends emails and makes complex decisions (and it will learn to play the guitar as well, guaranteed). You have done well if you moved the emails into a separate component, but that component is constantly at risk of starting to query the customer database and order history to decide which email to send, and eventually learning to play the piano (I’m sure I once taught one myself).
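To make the problem concrete, here is a minimal Python sketch of such a registration function after a few of marketing’s requests have landed in it. All names (`register_customer_coupled`, the template names, and the plain lists standing in for a mailer and a partner ledger) are hypothetical; the point is only how the signature and the dependencies grow with every rule.

```python
from datetime import datetime


def register_customer_coupled(email, source, track_id, users, past_orders,
                              mailer, partner_ledger):
    """Hypothetical registration code that has absorbed marketing rules.
    Every new rule adds a parameter, a dependency, and a way to fail."""
    user_id = len(users) + 1
    users[user_id] = {"email": email, "source": source,
                      "registered_at": datetime.now()}

    # "If the customer came from a promotion / trial, use another template"
    if source == "promo":
        template = "welcome_promo"
    elif source == "trial":
        template = "welcome_trial"
    else:
        template = "welcome_default"

    # ...and soon it reads order history to pick the email (the piano lessons)
    if any(order["email"] == email for order in past_orders):
        template = "welcome_back"
    mailer.append((email, template))

    # "Pass along the partner track-id": partner accounting lives here too
    if track_id:
        partner_ledger.append((track_id, user_id))
    return user_id
```

None of these branches is about registering the customer, yet all of them can break registration.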

And now everything works something like this (and this is just the beginning):

I will not go on; I hope the problem is clear and even familiar.

But suppose that, suddenly, our clear-headed leadership not only heard about this problem but also gave the “green light” to solve it. An unprecedented act of generosity: a whole dedicated developer, specializing in mailings and in generating reports with charts and Excel exports. Or you are the lucky owner of an offer to build a project from scratch. How would you set the technical task now, so that you can “forget” about the impact of marketing activity on the main (clear and stable) business process?

There is a solution (and no need to lose your appetite)

I propose a solution that satisfies SOLID principles, design patterns, TDD, DDD, and other fine-sounding terms. It was born out of the belief that you can always just take... and put the marketing functionality outside the door of your important customer process (and never let it back in).

And the tool for this is, of course, events. We take marketing, ask it to move away from our simple system into a corner, and start feeding it information:
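A minimal sketch of the main project’s side, assuming Python and an in-memory `queue.Queue` as a stand-in for a real message broker. The `Event` envelope, `publish_event`, `register_customer`, and the event name `customer.registered` are hypothetical; what matters is that the registration code only states a fact, attaches a snapshot of its context, and knows nothing about emails or promotions.

```python
import json
import queue
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# In-memory stand-in for a real message broker (an assumption of this sketch).
EVENT_QUEUE: "queue.Queue[str]" = queue.Queue()


@dataclass
class Event:
    """Hypothetical event envelope: name, payload, and a context snapshot."""
    name: str
    payload: dict
    context: dict = field(default_factory=dict)
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def publish_event(event: Event) -> None:
    """Serialize the event and hand it off; the main project does not know
    who (if anyone) will consume it."""
    EVENT_QUEUE.put(json.dumps(asdict(event)))


def register_customer(email: str, source: str, users: dict) -> int:
    """Core business logic: create the customer, then announce the fact.
    No email templates, promo rules, or partner tracking here."""
    user_id = len(users) + 1
    users[user_id] = {"email": email}
    publish_event(Event(
        name="customer.registered",
        payload={"user_id": user_id, "email": email},
        context={"source": source},
    ))
    return user_id
```

In a real deployment the queue would be an external broker, so that publishing stays a fast hand-off and the main project never waits for the analytics side.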

Now all the tasks from marketing’s list can be solved separately. Your main system is free of marketing: marketing can solve its own problems, send emails itself, and decide when that needs to happen. It also has far more source data than the “clean” database of the main project, because when receiving event data, the analytical module can sort it onto detailed shelves and even perform all the related actions (in our case, sending an email):

- store dates split into separate year, month, and day fields to simplify future reports and slices;

- accumulate history counters (for example, how many products has this customer viewed so far?);

- track the behavioral chain of a particular customer in detail;

- determine whether they did all this in one session or whether the chain of actions was interrupted;

- send all the necessary information to external analytics;

- compose the right email based on the customer’s behavior history and the source they came from;

- build lists of popular products and services, “frequently bought with this product”;

- and so on and so forth.
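On the analytics side, a consumer might look roughly like the following sketch. It assumes a hypothetical JSON envelope (`name`, `payload` with a `user_id`, `context`, and `occurred_at` as an ISO-8601 string) and hypothetical event and template names. It covers three items from the list: splitting dates into year/month/day fields, keeping a running view counter per customer, and choosing the email template from the acquisition source.

```python
import json
from collections import defaultdict
from datetime import datetime


class AnalyticsConsumer:
    """Hypothetical analytics-side consumer: stores events in a deliberately
    denormalized form and makes marketing decisions on its own."""

    def __init__(self) -> None:
        self.rows: list[dict] = []                 # denormalized "fact table"
        self.views = defaultdict(int)              # products viewed, per customer
        self.emails_to_send: list[tuple[str, str]] = []  # (address, template)

    def handle(self, raw: str) -> None:
        event = json.loads(raw)
        ts = datetime.fromisoformat(event["occurred_at"])
        # Split the date into separate fields to make future slices trivial.
        row = {"name": event["name"], "year": ts.year, "month": ts.month,
               "day": ts.day, **event["payload"]}
        # Flatten the context snapshot into prefixed columns.
        row.update({f"ctx_{k}": v for k, v in event["context"].items()})
        self.rows.append(row)

        user_id = event["payload"]["user_id"]
        if event["name"] == "product.viewed":
            self.views[user_id] += 1
            row["view_number"] = self.views[user_id]  # "which view is this?"
        elif event["name"] == "customer.registered":
            template = self.choose_template(event["context"])
            self.emails_to_send.append((event["payload"]["email"], template))

    @staticmethod
    def choose_template(context: dict) -> str:
        # Marketing's rules live here, not in the registration controller.
        source = context.get("source")
        if source == "promo":
            return "welcome_promo"
        if source == "trial":
            return "welcome_trial"
        return "welcome_default"
```

The schema here is intentionally sloppy: redundant date columns and flattened context are exactly the kind of denormalization that would be unwelcome in the main database.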

Now imagine all these nuances being calculated on the fly (or later, in reporting scripts) and stored in the main database.

In our scheme, the hypothetical task of “sending an email” becomes detached and no longer threatens the main process. What we get is effectively a CRM module (analysis, decision-making, and execution of those decisions), which may even be built shamefully badly: with a terrible (by your standards) denormalized database, a pile of auxiliary fields in its tables, slowdowns, poorly written code... But none of that matters, because it sits far away in the “marketing corner” (ideally, physically on another server) and does not touch the customer process.

There is nothing special about this solution: it is the familiar “observer” pattern, with events being emitted and listened to. But in our case the pattern cannot be implemented “in place”, where events are listened to and handled by the same process that received the customer’s request, because if the “listener” fails, the request will return 500 errors exactly as it would without any patterns.

Therefore, the approach is only good when:

- you make it asynchronous and fast;

- it runs on separate server resources, with its own code and databases (as a separate project);

- you also have a database with backups (in addition to the analytical module’s own main database);

- and the whole structure is supported as a full-fledged project...
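The difference between the in-place observer and the asynchronous variant fits in a few lines. In this sketch, the function names are hypothetical, a `queue.Queue` stands in for the broker, and `run_worker` stands in for the separate analytics process. The same buggy listener turns the request into a 500 when called in place, but only produces a recorded error when it runs in the worker.

```python
import queue
from typing import Callable

Listener = Callable[[dict], None]


def buggy_marketing_listener(event: dict) -> None:
    """Hypothetical marketing listener with a bug."""
    raise KeyError("template not found")


def handle_request_in_place(listeners: list[Listener]) -> int:
    """Synchronous observer: listeners run inside the customer's request."""
    try:
        # ... the customer is registered here ...
        for listener in listeners:
            listener({"name": "customer.registered"})
        return 200
    except Exception:
        return 500  # stands in for the framework's "500 Internal Server Error"


def handle_request_async(q: "queue.Queue[dict]") -> int:
    """Asynchronous variant: the request only enqueues the event, so
    marketing code cannot fail the request."""
    q.put_nowait({"name": "customer.registered"})
    return 200


def run_worker(q: "queue.Queue[dict]", listeners: list[Listener]) -> list[str]:
    """Separate worker (ideally on another server) drains the queue;
    listener failures are recorded instead of reaching the customer."""
    errors = []
    while not q.empty():
        event = q.get_nowait()
        for listener in listeners:
            try:
                listener(event)
            except Exception as exc:  # record and move on; the customer already got 200
                errors.append(f"{event['name']}: {exc!r}")
    return errors
```

In production the worker would also need retries and logging; this sketch only shows where the failure ends up.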

“Ha!”, you think, “is the game worth the candle?” Let’s try to weigh the pros and cons of implementing a separate “analytical project”:

The result is interesting. The only disadvantages of a “separate project” are that you have to complicate the server and software infrastructure and implement the core of the analytics project, which provides interfaces for reading events and saving them to its database (implementing this core takes from a week to a month in especially neglected cases). The synchronization downside is discussed below.

Whether to “do this” at all depends on the project and its business models. It is no accident that more serious projects buy very expensive (to implement and support) customer, order, and warehouse management systems and the like, and never entrust this work to the online storefront. In the same way, we also separate out the “primary” marketing with all its frantic (sometimes one-off) ideas and complex reports.

And here is that separate project:

Instead of implementing complex analytics and indirect processes in the main project, we hand this responsibility over to the analytical project and implement only a reliable transport for events (marked in red in the legend). This has to be done once and then maintained from time to time. Maintenance consists of emitting new events from the main project and reading them in the analytical project. But admit it: if these actions were programmed inside the main project, there would be no less work, and much more risk of breaking something.
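The text does not prescribe how to make the event transport “reliable”. One widely used option, swapped in here as an illustration rather than the author’s method, is the transactional outbox pattern: the event is written to an `outbox` table in the same transaction as the business data, and a separate relay pushes it to the transport. A minimal sketch with Python’s built-in `sqlite3` (the schema and function names are hypothetical):

```python
import json
import sqlite3
from typing import Callable

Sender = Callable[[str, dict], None]


def init_db(conn: sqlite3.Connection) -> None:
    """Hypothetical schema: a business table plus an outbox table."""
    conn.executescript("""
        CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
        CREATE TABLE outbox (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            name TEXT NOT NULL,
            payload TEXT NOT NULL,
            sent INTEGER NOT NULL DEFAULT 0
        );
    """)


def place_order(conn: sqlite3.Connection, user_id: int, total: float) -> int:
    """Store the order and its event in ONE transaction: both or neither."""
    with conn:  # commits on success, rolls back if anything raises
        cur = conn.execute(
            "INSERT INTO orders (user_id, total) VALUES (?, ?)", (user_id, total))
        order_id = cur.lastrowid
        event = {"order_id": order_id, "user_id": user_id, "total": total}
        conn.execute("INSERT INTO outbox (name, payload) VALUES (?, ?)",
                     ("order.placed", json.dumps(event)))
    return order_id


def relay_outbox(conn: sqlite3.Connection, send: Sender) -> int:
    """Background relay: push unsent events to the transport in order.
    A row is marked as sent only after `send` returns, so if `send` raises,
    that row and the ones after it stay unsent and are retried next run."""
    rows = conn.execute(
        "SELECT id, name, payload FROM outbox WHERE sent = 0 ORDER BY id"
    ).fetchall()
    for row_id, name, payload in rows:
        send(name, json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE id = ?", (row_id,))
    return len(rows)
```

Because a row is marked as sent only after `send` succeeds, delivery is at-least-once, so the analytics consumer should be able to ignore duplicate events.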

A couple of flies in the ointment

This approach has a significant downside. The main project does not need to know anything about the existence of the analytical project (what else would be the point?). For the analytical project, though, the situation is the opposite: it will most likely need to know about all the processes of the main one (since it was invented to solve all the problems the main project “did not want to solve”).

Therefore, during development, firstly, it will be difficult (and often impossible) to work on a task in both projects simultaneously: new events appear in the main project, and the analytical project processes them much later. Secondly, a bug or insufficient denormalization may surface when parsing data from the queue on the analytics server, and the messages have already been consumed... the train has left.

To solve this problem, we introduce an “event database” that stores every event since the beginning of time, including (importantly) a snapshot of the context in which each event occurred. This gives peace of mind: in extreme cases it is always possible to do a complete reboot of the created matrix:

- Errors are fixed in the analytical project and the necessary changes are prepared (before deployment).

- Next, the deployment script runs:

  - stop reading data from the queue (let it accumulate);

  - completely wipe the analytical database;

  - roll out all the necessary fixes;

  - run a special script that re-sends all previously recorded events into a separate queue (which is read continuously);

  - turn reading from the main queue back on.

- As a result, the analytical “worker” picks up the data from the queue as if nothing had happened.
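Here is a sketch of the “event database” and the rebuild procedure, assuming an append-only SQLite table (via Python’s `sqlite3`) and plain Python objects for the analytical database and the consumer’s pause flag; all names are hypothetical. For brevity it replays history directly into the fixed handler rather than through a separate queue, and it assumes the fixes have already been deployed.

```python
import json
import sqlite3
from typing import Callable, Iterator

Handler = Callable[[dict], None]


class EventStore:
    """Hypothetical append-only "event database": every event is kept forever,
    together with a snapshot of the context in which it occurred."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS events ("
            " seq INTEGER PRIMARY KEY AUTOINCREMENT,"
            " name TEXT NOT NULL, payload TEXT NOT NULL, context TEXT NOT NULL)"
        )

    def append(self, name: str, payload: dict, context: dict) -> None:
        with self.conn:  # commit each event immediately
            self.conn.execute(
                "INSERT INTO events (name, payload, context) VALUES (?, ?, ?)",
                (name, json.dumps(payload), json.dumps(context)),
            )

    def replay(self) -> Iterator[dict]:
        """Yield every stored event in its original order."""
        rows = self.conn.execute(
            "SELECT name, payload, context FROM events ORDER BY seq")
        for name, payload, context in rows:
            yield {"name": name, "payload": json.loads(payload),
                   "context": json.loads(context)}


def rebuild_analytics(store: EventStore, analytics_db: dict,
                      fixed_handler: Handler, consumer: dict) -> int:
    """Wipe the analytical data and rebuild it from the full event history.
    Returns the number of replayed events."""
    consumer["paused"] = True        # stop reading the main queue; it accumulates
    analytics_db.clear()             # completely clean the analytical database
    replayed = 0
    for event in store.replay():     # re-send every past event to the fixed code
        fixed_handler(event)
        replayed += 1
    # Only reached if the replay succeeded; on an exception the consumer stays
    # paused and new events keep waiting in the queue.
    consumer["paused"] = False       # turn reading from the main queue back on
    return replayed
```

Leaving the consumer paused when the replay fails is a deliberate choice: it is safer to let the queue grow than to resume on top of a half-rebuilt database.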

There is another obvious downside: the complexity of implementation. If you decide to go this way and everything you have was built “long ago and badly”, there are few options, and you will have to think each of them through carefully:

- Give up analytics for the past (events accumulate only from the moment the analytical project is launched).

- Implement the proposed scheme in parallel with the existing analytics (the general analytics keeps living in the main project, new pieces go into the new one).

- Load data for the entire past into the “event database” with one-time scripts (simulating the event-sending lifecycle retroactively).
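The third option (backfilling) could look like the following one-time script. It assumes a hypothetical legacy schema with `users` and `orders` tables that have ISO-8601 `created_at` columns, and it produces events in the same envelope shape as live ones, marked with `backfilled: True` in the context. Context that was never stored (for example, the session) cannot be recovered this way.

```python
import sqlite3


def backfill_events(legacy: sqlite3.Connection) -> list[dict]:
    """One-time script (hypothetical schema): rebuild a chronological stream
    of past events from legacy tables, as if they had been sent all along."""
    events = []
    for user_id, email, source, created_at in legacy.execute(
            "SELECT id, email, source, created_at FROM users"):
        events.append({"name": "customer.registered", "occurred_at": created_at,
                       "payload": {"user_id": user_id, "email": email},
                       # only the context that happens to be stored survives
                       "context": {"source": source, "backfilled": True}})
    for order_id, user_id, total, created_at in legacy.execute(
            "SELECT id, user_id, total, created_at FROM orders"):
        events.append({"name": "order.placed", "occurred_at": created_at,
                       "payload": {"order_id": order_id, "user_id": user_id,
                                   "total": total},
                       "context": {"backfilled": True}})
    # ISO-8601 strings in one consistent format sort chronologically;
    # the sort is stable, so equal timestamps keep their insertion order.
    events.sort(key=lambda e: e["occurred_at"])
    return events
```

The resulting list would then be appended to the event database in order, after which it is indistinguishable from live history for the replay procedure.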

But we also get a few bonuses

By reading the event stream from the main system, you can implement:

- convenient process logs (for technical and customer support operators: notice / warning / error);

- asynchronous distribution of events to project staff (notifications, reminders: the need to call a customer, check an order, etc.);

- asynchronous distribution of commercial notifications (SMS, emails, push notifications) to customers;

- generation of streams of “secondary” events based on analytics conditions (the 99th customer this quarter with an order over 1000: send a “letter of happiness”);

- simple reloading of data into ready-made OLAP analysis systems (QlikView, MS Business Solutions) at any time and any number of times, with no pain building interfaces for marketing and business analysis;

- simple linking and aggregation of external event and data sources into a single database (Yandex.Metrica, etc.);

- simple access for external partners (the same call center will not need to “sit” in the core of your project);

- etc.
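As an example of a “secondary” event, the “letter of happiness” rule from the list might be sketched as below. The interpretation (the 99th distinct customer in a calendar quarter whose order total exceeds 1000), the class name, and the output event name are all assumptions.

```python
from datetime import datetime


class HappyCustomerRule:
    """Hypothetical analytics rule that derives a "secondary" event from the
    primary stream: the Nth distinct customer this quarter with an order
    total above the threshold gets a "letter of happiness"."""

    def __init__(self, nth: int = 99, threshold: float = 1000.0) -> None:
        self.nth = nth
        self.threshold = threshold
        self.customers_by_quarter: dict[tuple[int, int], set[int]] = {}

    def on_event(self, event: dict) -> list[dict]:
        """Return the secondary events produced by one primary event."""
        if event["name"] != "order.placed":
            return []
        if event["payload"]["total"] <= self.threshold:
            return []
        ts = datetime.fromisoformat(event["occurred_at"])
        quarter = (ts.year, (ts.month - 1) // 3 + 1)
        seen = self.customers_by_quarter.setdefault(quarter, set())
        user_id = event["payload"]["user_id"]
        if user_id in seen:
            return []  # only distinct customers are counted
        seen.add(user_id)
        if len(seen) == self.nth:
            return [{"name": "customer.letter_of_happiness",
                     "payload": {"user_id": user_id, "quarter": quarter}}]
        return []
```

The secondary events returned here would be published back into the stream, where the mailing module (or any other consumer) picks them up like any other event.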