Why is the frequency not growing?

Many probably remember how quickly microprocessor clock speeds rose in the 1990s and early 2000s. Tens of megahertz quickly grew into hundreds, hundreds of megahertz almost instantly gave way to a full gigahertz, then a gigahertz and a fraction, two gigahertz, and so on. In the last few years, however, the frequency has no longer been growing so fast. We are almost as far from ten gigahertz now as we were five years ago. So where did the former pace go? What prevents us from pushing the frequency up as before?

The text below is aimed at readers who are unfamiliar, or only slightly familiar, with microprocessor architecture. More experienced readers are referred to the relevant posts by yurav.

Hot gigahertz

There is an opinion that any further increase in clock frequency inevitably comes down to an increase in heat generation. That is, supposedly nothing prevents you from simply turning some “knife switch” responsible for the frequency in the right direction, and the frequency will go up. But the processor will then heat up so much that it melts.

This opinion is held by many people who work with computer technology in one way or another. It also seems to be confirmed by the success of overclockers, who overclock processors by a factor of two or more. The main thing is to fit a more powerful cooling system.

Although the “knife switch” mentioned above and the related heat problem do exist, they are only part of the battle for gigahertz...

Main brake

Different microprocessor architectures may face different obstacles to raising the frequency. To be specific, we will talk about superscalar architectures here. These include, for example, x86, the most popular of the architectures developed by Intel.

To understand the problems associated with raising the clock frequency, you must first determine what limits its growth. At different levels of looking at the architecture, different sets of frequency-limiting parameters can be identified. However, it turns out that there is a level at which there is only one such parameter. That is, a single brake can be singled out, and it has to be released a little more each time you want to go faster.

Pipeline

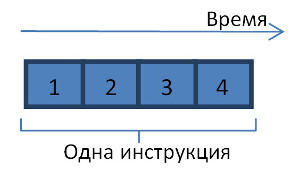

The main brake lies at the level of the pipeline (the “conveyor” along which instructions are processed). The pipeline is the heart of a superscalar architecture. Its essence is that the execution of each microprocessor instruction is divided into stages (see figure).

The stages follow each other in time, and each of them is executed on a separate computing device.

As soon as the execution of a certain stage is completed, the freed computing device can be involved in the execution of a similar stage, but of a different instruction.

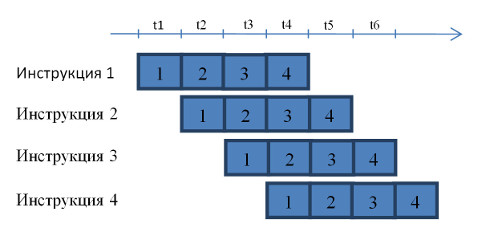

The figure shows how, during time period t1, the first stage of the first instruction is executed on the first computing device. By the beginning of period t2, the first stage has been completed, so the second stage can start on the second device. The first device is freed and can be given to the first stage of another instruction, and so on. In time period t4, different stages of four instructions are executed in the pipeline simultaneously.

But what does all this have to do with frequency? As it turns out, everything. The various stages may differ in duration, and different stages of the same instruction are executed in separate clock cycles. The duration of the microprocessor's clock cycle (and with it the frequency) must be such that the longest stage fits into it. In the figure below, the longest stage is stage 3.

It makes no sense to make the clock cycle shorter than the longest stage. Technically this is possible, but it will not lead to any real speed-up of the microprocessor.

Suppose that the longest stage takes 500 ps (picoseconds) to complete. This is the clock cycle of a machine running at 2 GHz. Suppose now that we want to make the clock cycle half as long: 250 ps. Just like that; we change nothing but the frequency. The only result is that the problematic stage will now take two cycles, which in time is the same 500 ps. In addition, the design becomes considerably more complex and the processor dissipates more heat.
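The arithmetic in this example can be checked with a small sketch. The function names are illustrative and do not come from the original text; the only inputs are the 500 ps stage and the two clock cycle lengths mentioned above.

```python
import math


def frequency_ghz(cycle_ps: float) -> float:
    """Clock frequency in GHz for a clock cycle given in picoseconds."""
    return 1000.0 / cycle_ps  # a 1000 ps cycle corresponds to 1 GHz


def cycles_for_stage(stage_ps: float, cycle_ps: float) -> int:
    """Whole clock cycles a stage occupies (a stage cannot end mid-cycle)."""
    return math.ceil(stage_ps / cycle_ps)


LONGEST_STAGE_PS = 500  # the longest stage from the example in the text

for cycle_ps in (500, 250):
    n = cycles_for_stage(LONGEST_STAGE_PS, cycle_ps)
    print(f"cycle {cycle_ps} ps -> {frequency_ghz(cycle_ps):.1f} GHz, "
          f"longest stage takes {n} cycle(s) = {n * cycle_ps} ps")
```

The nominal frequency doubles, but the longest stage still occupies the same 500 ps.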

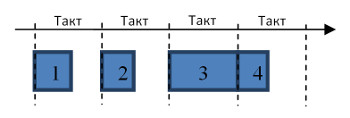

One could object that, thanks to the shorter clock cycle, the shorter stages will “fly by” faster, so the average speed of computation will increase. However, this is not so (see figure).

At first, the calculations do go faster. But starting from the fourth clock cycle, the third stage and all subsequent stages (in our example, only the fourth) begin to lag. The reason is that the third computing device is released not every cycle but every two cycles. While it is occupied by the third stage of one instruction, the same stage of another instruction cannot be executed. Thus our hypothetical processor with a 250 ps clock cycle will effectively work like a processor with a 500 ps clock cycle, although formally its frequency is twice as high.
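The effect described above can be reproduced with a toy simulation. This is only a sketch under stated assumptions: the four stage durations are hypothetical (the text fixes only the 500 ps third stage), each stage has exactly one computing device, and an instruction is allowed to wait between stages without blocking the device it has just left.

```python
import math


def total_time_ps(stage_ps, cycle_ps, n_instructions):
    """Time for n instructions to pass through a simple in-order pipeline."""
    # Each stage occupies its device for a whole number of clock cycles.
    cycles = [math.ceil(d / cycle_ps) for d in stage_ps]
    free_at = [0] * len(stage_ps)  # cycle at which each device becomes free
    finished = 0
    for _ in range(n_instructions):
        t = 0  # cycle at which this instruction is ready for its next stage
        for stage, length in enumerate(cycles):
            start = max(t, free_at[stage])  # wait for the device if it is busy
            t = start + length
            free_at[stage] = t
        finished = t
    return finished * cycle_ps


STAGES_PS = [250, 250, 500, 250]  # hypothetical durations; stage 3 is the longest

slow_clock = total_time_ps(STAGES_PS, cycle_ps=500, n_instructions=1000)
fast_clock = total_time_ps(STAGES_PS, cycle_ps=250, n_instructions=1000)
print(f"500 ps cycle: {slow_clock} ps, 250 ps cycle: {fast_clock} ps")
```

With these assumed numbers, a thousand instructions take about 500 ns in both configurations: the third device accepts a new instruction only once every 500 ps, whatever the nominal clock.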

The smaller, the better

So, from the pipeline's point of view, the clock frequency can be increased in only one way: by shortening the longest stage. If the longest stage can be shortened, the clock cycle can be reduced to the length of whichever stage is the longest after the reduction. And the shorter the clock cycle, the higher the frequency.

With current technology, there are not many ways to influence the length of a stage.

One such method is the transition to a more advanced manufacturing process; roughly speaking, yet another reduction in the number of nanometers. The smaller the components of the processor, the faster it runs. This is because the distances that electrical signals must travel become shorter, the switching time of transistors decreases, and so on. As a simplification, one can assume that everything speeds up uniformly. The durations of all stages, including the longest, shrink roughly equally. After that, the frequency can be raised.
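As a rough illustration of the uniform speed-up, the sketch below scales every stage by the same factor and recomputes the highest usable frequency. The stage durations and the 0.8 scaling factor are made-up numbers, not data about any real manufacturing process.

```python
def max_frequency_ghz(stage_ps):
    """Highest frequency at which the longest stage still fits in one cycle."""
    return 1000.0 / max(stage_ps)  # a 1000 ps cycle corresponds to 1 GHz


def shrink(stage_ps, factor):
    """Model a process shrink as a uniform scaling of all stage durations."""
    return [d * factor for d in stage_ps]


stages = [250, 250, 500, 250]   # hypothetical stage durations in ps
shrunk = shrink(stages, 0.8)    # assumed: every stage becomes 20% shorter

print(f"before: {max_frequency_ghz(stages):.2f} GHz")
print(f"after:  {max_frequency_ghz(shrunk):.2f} GHz")
```

Because all stages shrink together, the same stage remains the longest, and the frequency rises by the inverse of the scaling factor.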

It sounds simple enough, but moving down the nanometer scale is a very complicated process. It depends heavily on the current state of technology, and you cannot go further than that state allows. Nevertheless, microprocessor manufacturers are constantly improving the manufacturing process, and the frequency is gradually creeping up as a result.

Cut the patient

Another way to raise the frequency is to “cut” the most problematic stage into smaller ones. After all, instructions have already been split into stages once, and in fact more than once. Why not keep slicing? The processor would only get faster!

It would. However, cutting is very difficult.

Here is an analogy with building houses. A house is built floor by floor. Let a floor be the analogue of an instruction. We want to split the construction of a floor into stages. To begin with, let there be two stages: the construction itself and the finishing work. While the most recently built floor is being finished, nothing prevents construction of the next floor from starting. The main thing is that two different crews handle construction and finishing. Everything seems fine.

Now we want to go further and split the two existing stages. Finishing, for example, can be broken down into wallpapering and painting the ceilings. There is no problem with this split. Once the painters have painted one floor, they can move on to the next, even if wallpaper is still being hung on the previous one.

What about construction? Suppose we want to break construction down into erecting walls and laying floor slabs. Such a split can be made, but it will be pointless. After the walls have been erected on one floor, the crew cannot be sent to erect walls on the next floor until the slabs are in place. Although we formally managed to split construction into two stages, we cannot keep the two crews specializing in walls and slabs fully occupied. Only one of them will be working at any given time!

Microprocessors have the same problem. There are stages that tie instructions to one another. It is very difficult to split such stages into smaller ones; this requires very serious changes to the processor architecture, just as serious changes would be needed in construction in order to build several floors of one house simultaneously.
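The construction analogy can be put into numbers with a minimal sketch. The durations (one day per stage) and the ten floors are hypothetical, and the `walls_wait_for_slabs` flag is an illustrative name for the dependency described above: walls on the next floor cannot start until the slabs of the current floor are laid.

```python
def build_time(n_floors, walls_days, slabs_days, walls_wait_for_slabs):
    """Days needed to build n floors with one wall crew and one slab crew."""
    walls_free = 0        # day on which the wall crew becomes free
    slabs_free = 0        # day on which the slab crew becomes free
    prev_slabs_done = 0   # day on which the previous floor's slabs were laid
    for _ in range(n_floors):
        start_walls = walls_free
        if walls_wait_for_slabs:
            # Walls need the slabs of the floor below to stand on.
            start_walls = max(start_walls, prev_slabs_done)
        walls_done = start_walls + walls_days
        walls_free = walls_done
        start_slabs = max(walls_done, slabs_free)
        prev_slabs_done = start_slabs + slabs_days
        slabs_free = prev_slabs_done
    return prev_slabs_done


dependent = build_time(10, 1, 1, walls_wait_for_slabs=True)
independent = build_time(10, 1, 1, walls_wait_for_slabs=False)
print(f"with the dependency: {dependent} days, without it: {independent} days")
```

With the dependency in place, the two crews simply take turns and the split gains nothing; only the imaginary case without the dependency, which is how wallpapering and painting behave, overlaps the work.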

Turn the switch

So we have come to what overclockers do. They raise the voltage at which the processor operates. As a result, the transistors (the basic elements that make up the processor) start switching faster, and the durations of all stages shrink more or less uniformly. This makes it possible to raise the frequency.

Very simple! But there are big problems with heat dissipation. In simplified form, the power dissipated by the processor is described as follows:

$P \sim C_{dyn} \cdot V^2 \cdot f$

Here $C_{dyn}$ is the dynamic capacitance, $V$ is the voltage, and $f$ is the processor frequency.

It does not matter if you do not know what dynamic capacitance is. The main thing here is the voltage. It is squared! It looks awful...

In fact, it is even worse. As I said, the higher voltage is needed to make the transistors work faster. A transistor is a kind of switch. In order to switch, it needs to accumulate a sufficient charge. The time this accumulation takes is inversely proportional to the current: the larger the current, the faster the charge arrives at the transistor. The current, in turn, is proportional to the voltage. Thus, the switching speed of the transistor is proportional to the voltage. Now note that we can raise the processor frequency only in proportion to the increase in the switching speed of the transistors. Summing up, we have:

$f \sim V$ and $P \sim C_{dyn} \cdot V^3$

With a linear increase in frequency, heat generation grows according to a cubic law! If you double the frequency, you will have to remove 8 times more heat from the processor. Otherwise, it will melt.
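The cubic law is easy to tabulate. The sketch below uses only the simplified proportionalities from the text (power proportional to voltage squared times frequency, frequency proportional to voltage); the function name is illustrative, and real processors deviate from this idealized model.

```python
def relative_power(frequency_factor: float) -> float:
    """Relative dissipated power after scaling the frequency by a factor."""
    voltage_factor = frequency_factor  # simplifying assumption: f ~ V
    # P ~ C_dyn * V^2 * f, with C_dyn unchanged
    return voltage_factor ** 2 * frequency_factor


for factor in (1.0, 1.5, 2.0):
    print(f"frequency x{factor}: power x{relative_power(factor)}")
```

Even a 1.5-fold frequency increase more than triples the heat that has to be removed under this model.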

Obviously, this way of raising the frequency does not suit processor manufacturers very well because of its low energy efficiency, although extreme overclockers do use it.

Is that all?

That is all. Well, almost all. Someone may recall managing to raise the clock frequency of a microprocessor slightly even without raising the voltage.

This is also possible, but only to a very limited extent. Processors are designed by the manufacturer to operate across a wide range of external conditions (which affect the length of a stage), so they are produced with a certain frequency margin. For example, the longest stage may take not the whole clock cycle but, say, 95% of it. Hence the opportunity to raise the frequency slightly.
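How much headroom such a margin gives can be estimated in a couple of lines. The function name is illustrative, and the 95% figure is the example value from the text, not a specification of any real processor.

```python
def frequency_headroom_percent(stage_fraction: float) -> float:
    """Possible frequency gain if the longest stage uses only part of a cycle."""
    # The cycle can shrink until the longest stage fills it completely.
    return (1.0 / stage_fraction - 1.0) * 100.0


print(f"{frequency_headroom_percent(0.95):.1f}% headroom")
```

A stage that fills 95% of the cycle leaves room for a gain of a little over 5%, which matches the modest results of overclocking without raising the voltage.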

By the way, I am in no way urging anyone to overclock processors themselves. Everyone who does so acts at their own risk. Improper overclocking is dangerous not only for your processors, but also for you personally!

I will also note that, besides those described, there are other ways to influence the length of a stage, but they are much less significant than the ones presented here. For example, a change in temperature affects the operation of electronics as a whole. However, serious effects are achieved only at very low temperatures.

In general, it turns out that fighting for higher frequency is very hard... Nevertheless, the battle is gradually being fought, and the frequency is slowly creeping up. At the same time, there is no need to be sad that the process has slowed down. After all, multicore has appeared. And what difference does it really make to us whether our computer got faster because its frequency rose or because tasks began to run in parallel? Moreover, in the second case we get additional capabilities and more flexibility...