Performance testing of several types of drives in a virtual environment

Virtualization technologies today are in demand not only in the “big business” segment, but also in SMB and among home users. For small companies in particular, virtualization servers can host a number of utility services that are not very resource-intensive. In this case we are usually talking about stand-alone servers based on single- or dual-processor platforms, with a relatively modest 32-64 GB of RAM and without dedicated high-performance storage systems. No one disputes the advantages of virtualization, but one needs to be aware that virtual systems differ from real ones in terms of performance. In this article, we compare the speed of local drives of different types (HDD, SSD and NVMe) in several virtual machine configurations in order to estimate the losses from virtualization.

Testing was conducted on a server with the following configuration: Asus Z10PE-D16 motherboard, two Intel Xeon E5-2609 v3 processors, 64 GB of RAM. Proxmox VE 5.2, an open source system based on Debian, was chosen as the virtualization environment. It was installed on a separate SATA SSD, and the tested drives were connected to other interfaces and ports.

First, we test the drive directly from the host. The second option passes the drive through to a virtual machine as a physical disk (the VM runs under KVM with Debian 9 as the guest, with 2 cores and 8 GB of RAM allocated). The third configuration is an LVM virtual disk. The fourth is a RAW file on an ext4 volume. For the last two options, a 64 GB disk was used. As a side result, the article also offers a comparison of LVM and RAW for storing virtual disk images.

To measure speed, we use the fio utility with sequential read and write patterns with a 256 KB block and random operations with a 4 KB block. Tests were run with the iodepth parameter ranging from 1 to 256 in order to emulate different loads. For sequential operations we report throughput in MB/s, for random operations IOPS. In addition, we look at the average latency (clat in the fio report).

Let's start with a traditional hard drive, represented here by the aging HGST HUH728080ALE640, a SATA drive with 8 TB capacity. In the described scenario of low-cost virtualization under a light load, a single hard drive can be considered a typical choice when the budget is minimal or the system is assembled from whatever is at hand, especially if there are no special capacity requirements, so leaving this option out would be wrong.

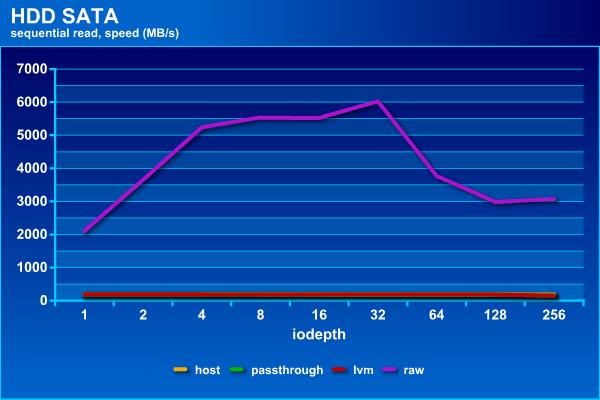

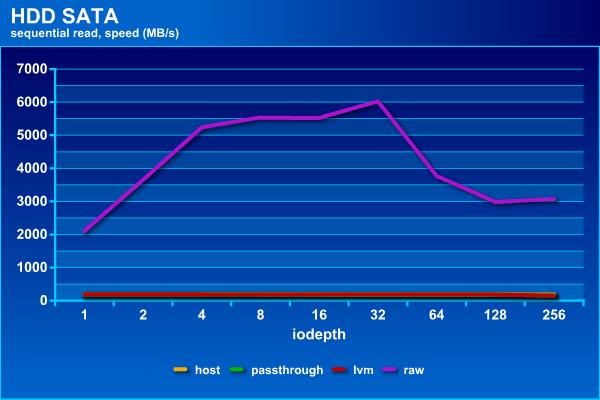

On reads, all options except the last show approximately the same results, around 190 MB/s (only under heavy load, at iodepth = 256, do the passthrough and LVM results drop to 150 MB/s). The raw configuration, thanks to caching on the host, “flies into space”, and the others are no longer visible against it on the graph. On the one hand, one could say that the test and system settings used do not allow a correct estimate of this configuration's speed and show the performance of RAM rather than of the drive. On the other hand, caching is one of the most effective and common ways to increase performance, and if it works, it would be strange to refuse it. Just do not forget about reliable power in such configurations.

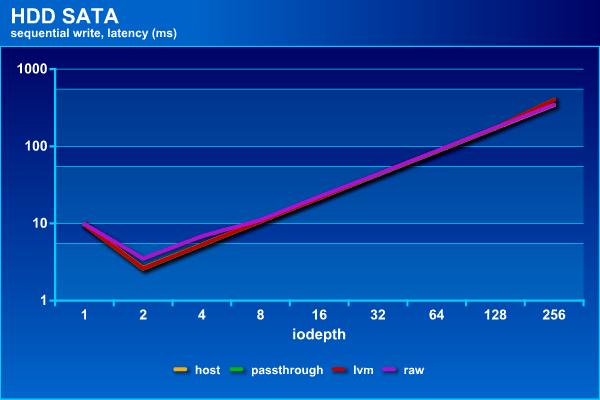

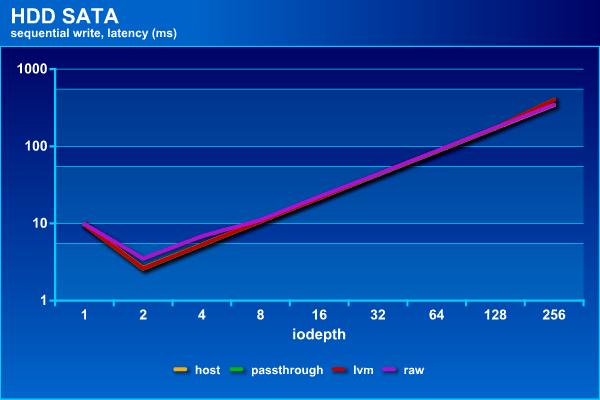

This effect is absent on writes, so on sequential operations all configurations are about the same, with a maximum speed of about 190 MB/s. Still, raw behaves differently from the others: under light load it is slower, but at maximum load it does not slow down as the others do. There are no differences in latency.

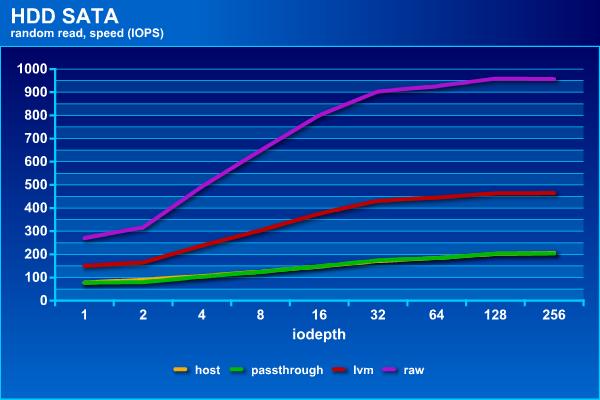

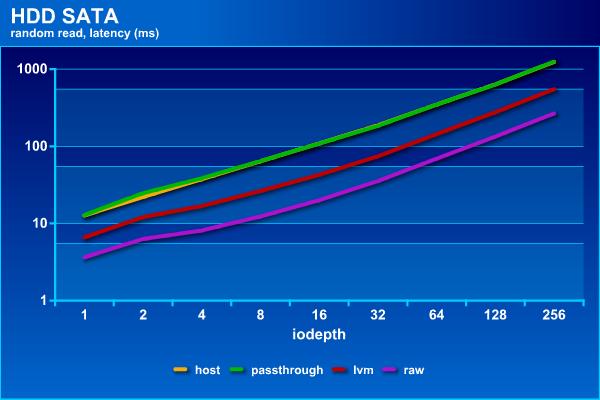

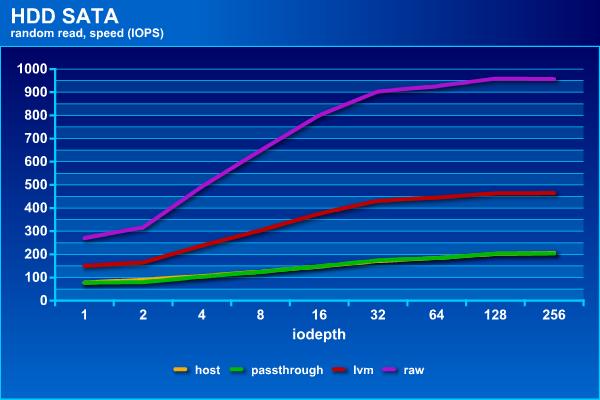

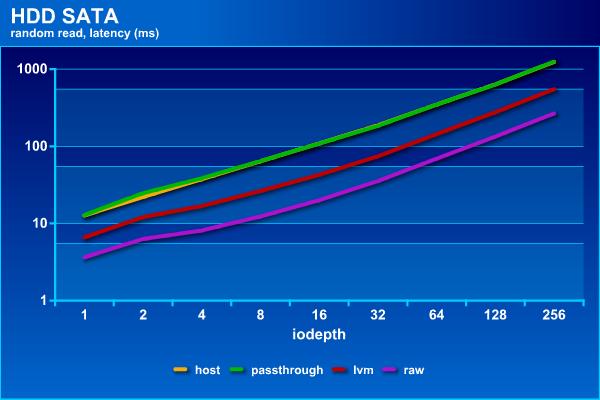

The host cache is also noticeable in random reads: here raw is consistently the fastest, reaching up to 950 IOPS. LVM is roughly two times slower, at up to 450 IOPS. The hard disk itself, including when passed through to the guest system, shows about 200 IOPS. The ordering of the configurations on the latency graph is consistent with the speeds.

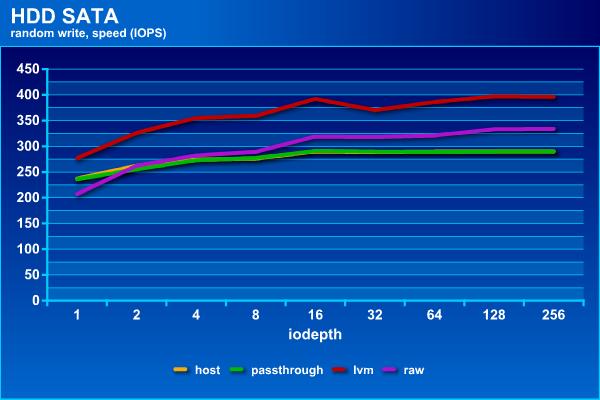

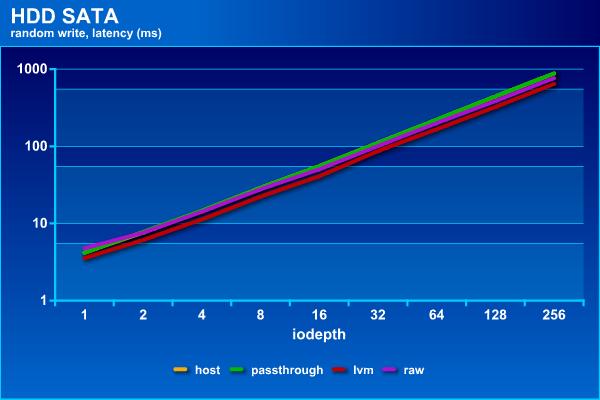

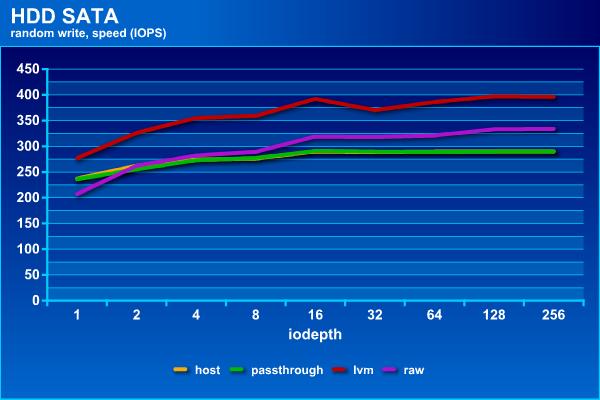

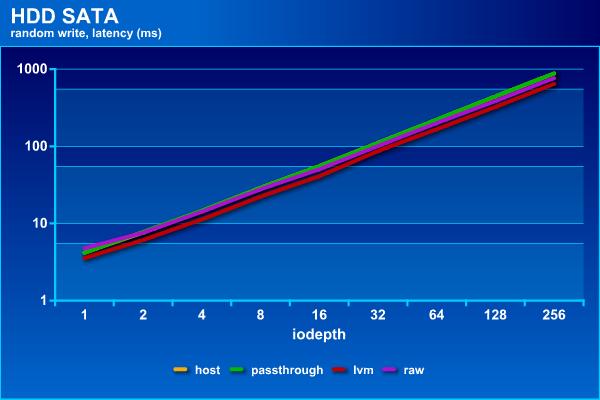

On random writes, the LVM configuration proved to be the best, providing up to 400 IOPS. It is followed by raw (~330 IOPS), and the remaining two configurations round out the list at 290 IOPS. There are no noticeable differences in latency.

In general, if you do not need the features that LVM provides and random write speed is not the key criterion, raw is the better choice in terms of speed for virtual disks on local storage. Passing a physical disk through to a virtual machine gives no performance benefit in this case, but it can be useful if you need to attach a drive with existing data to a virtual machine.

The second participant in the test is the Samsung 850 EVO SSD. Given its age and its use in a system without TRIM, in some tests (sequential writes in particular) it already loses to the hard drive. Nevertheless, thanks to its significant advantage over a traditional hard disk on random operations, it is still very attractive for virtual machines.

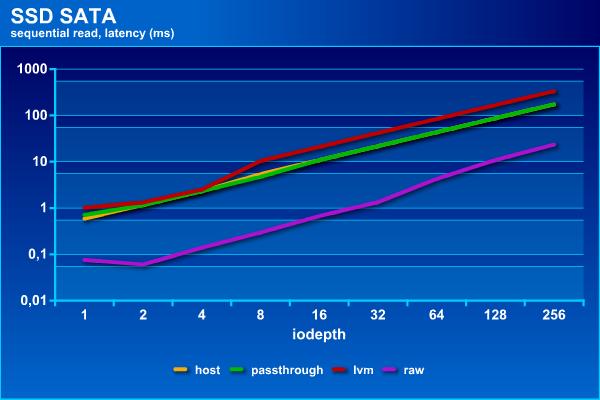

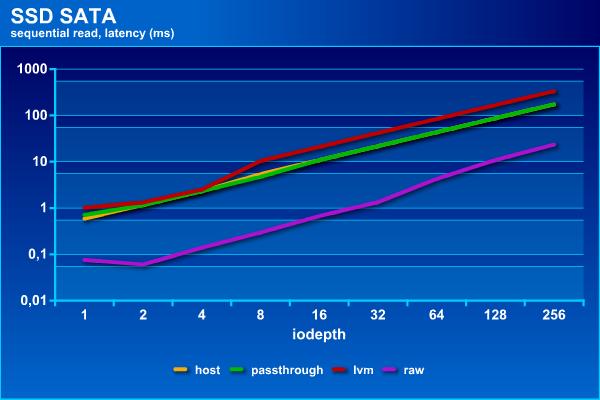

The sequential read result in raw mode calls for the same comments as with the hard disk. More interesting here is that the first two configurations (host and passthrough) show a stable 370 MB/s under heavy load, whereas LVM manages only 190 MB/s. Latency for this mode is also higher.

On writes, as already mentioned, this SSD in its current state is not very impressive and shows about 100 MB/s. As for the comparison of configurations, raw loses in this test under light load in both speed and latency.

Random operations are the SSD's main trump card. Here we see that all the “virtual” options noticeably lose to the “bare” drive: they provide only 30,000 IOPS, while the SSD itself is three times faster. Apparently the software and hardware platform is the limiting factor here. However, latency in this test does not exceed 7 ms, so this difference in IOPS is unlikely to be noticed by general-purpose applications.

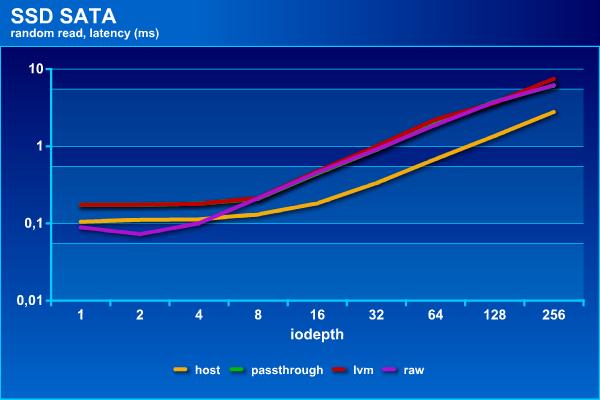

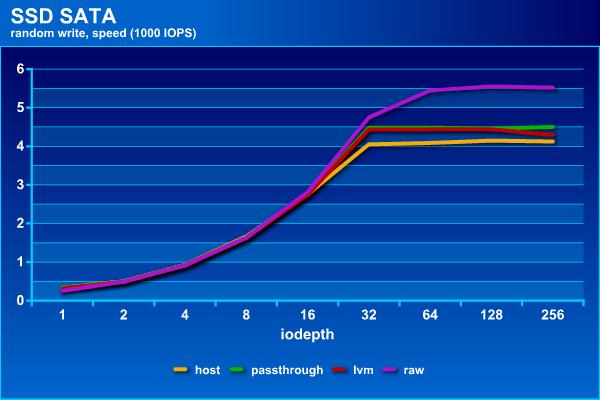

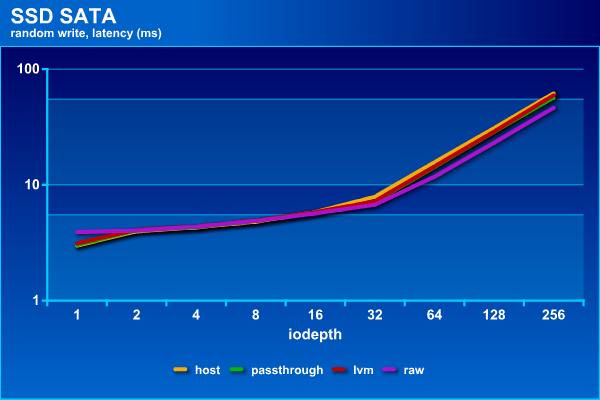

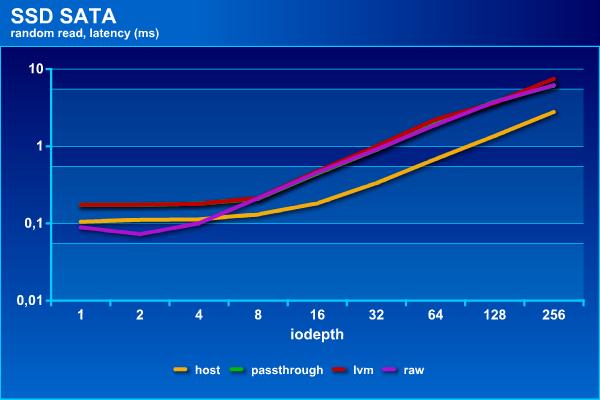

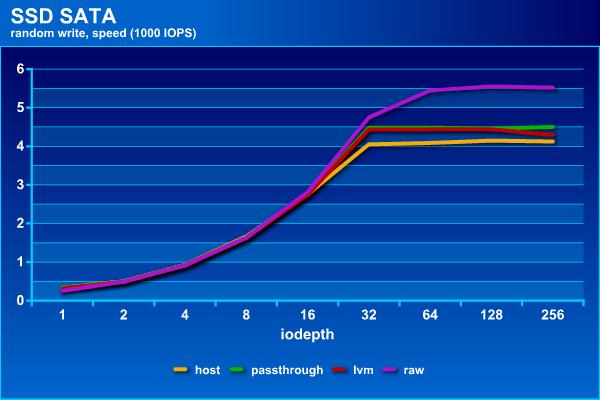

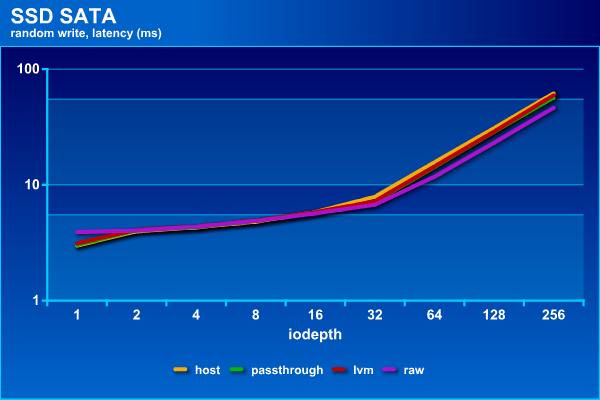

Random writes show a different balance of power. Here the “real” disk actually loses, albeit slightly: it delivers up to 4,200 IOPS, LVM and passthrough do one or two hundred more, and raw reaches 5,500 IOPS. The only interesting feature of the latency graph is a clearly visible kink at iodepth = 32.

Testing showed that the SSD behaves differently from the HDD in this scenario. First, sequential reading with LVM lags noticeably behind the other options. Second, virtual disks on the SSD lose noticeably in IOPS on random reads.
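To make the contrast between the two drives concrete, the random read figures quoted above can be expressed relative to the host result. The sketch below only reuses numbers from the text; the SSD host value of 90,000 IOPS is not quoted directly but derived from the statement that the bare drive is three times faster than the 30,000 IOPS of the virtual options, and the function name is arbitrary.

```python
# Peak random read IOPS as quoted in the text. The SSD "host" figure is
# an assumption derived from "three times faster" than 30,000 IOPS.
RANDOM_READ_IOPS = {
    "HDD": {"host": 200, "passthrough": 200, "lvm": 450, "raw": 950},
    "SATA SSD": {"host": 90_000, "passthrough": 30_000, "lvm": 30_000, "raw": 30_000},
}


def relative_to_host(results):
    """Return each guest configuration's IOPS as a multiple of the host result."""
    host = results["host"]
    return {cfg: iops / host for cfg, iops in results.items() if cfg != "host"}


for drive, results in RANDOM_READ_IOPS.items():
    for cfg, ratio in relative_to_host(results).items():
        print(f"{drive:9s} {cfg:12s} {ratio:5.2f}x of host")
```

On the hard drive the ratios are at or above 1 because the host cache helps the guest, whereas on the SSD every guest configuration ends up at roughly a third of the host figure.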

The third participant falls somewhat outside the “inexpensive” category, but the product is very interesting as a universal “accelerator” of disk operations and can compete in speed not only with single drives but also with RAID arrays. We are talking about Intel Optane, in this case the 900P model for PCIe 3.0 x4 with a capacity of 280 GB.

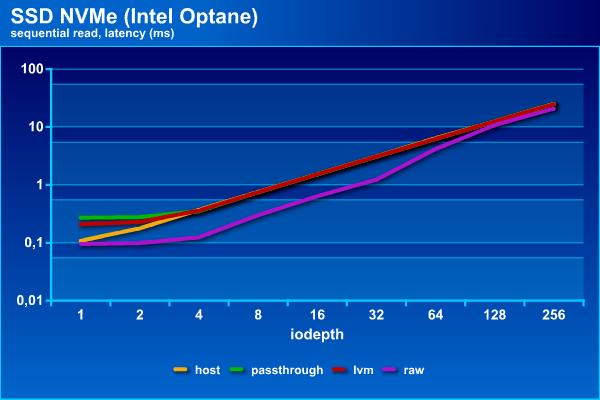

Intel Optane can already compete with RAM in this test. The gap is no longer an order of magnitude, as with the other participants, but only a factor of two or three, and as the load increases the values become practically equal. As in the tests above, latency is lower for the raw configuration.

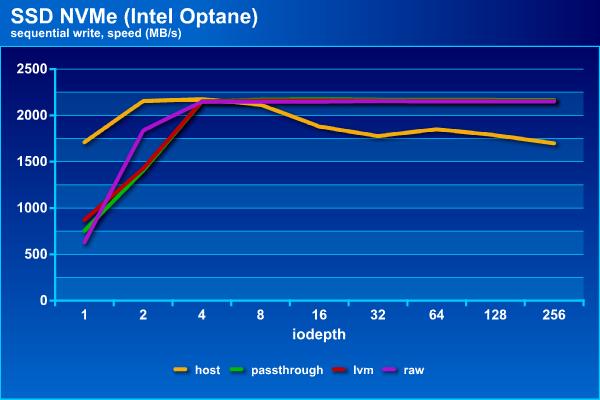

In sequential writes, the “bare” drive even loses to the other configurations: as the load increases they settle at a stable 2,150 MB/s, while its speed drops to about 1,700 MB/s. There is little point in comparing latencies in this case.

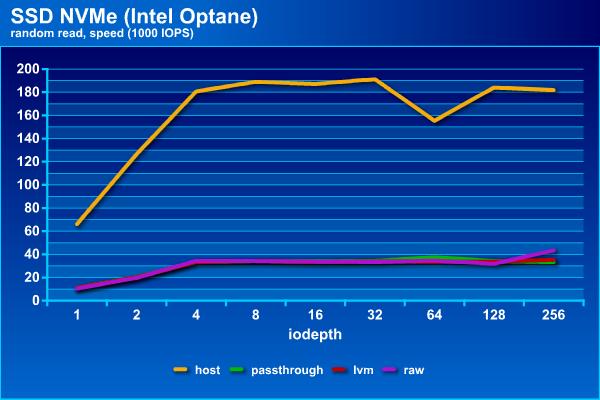

For this solid-state drive, random reads from the host reach almost 200,000 IOPS (which corresponds to about 760 MB/s). But all the other connection schemes, as we saw above for the SATA SSD, hit a ceiling, here 35,000 IOPS, which is disappointing. Accordingly, their latency is higher, by about five times.
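The relationship between these figures can be checked with a little arithmetic. The sketch below assumes 4 KB means 4096 bytes and that throughput is counted in MiB/s, and it uses Little's law (outstanding requests = IOPS × latency) as an approximation for mean latency at a fixed queue depth; neither assumption is stated in the test reports, and the function names are arbitrary.

```python
def throughput_mib_s(iops, block_bytes=4096):
    """Convert an IOPS figure into MiB/s for a given block size."""
    return iops * block_bytes / 2**20


def mean_latency_ms(iodepth, iops):
    """Approximate mean latency from Little's law: iodepth = IOPS * latency."""
    return iodepth / iops * 1000


host_iops, guest_iops = 200_000, 35_000

print(f"host:  {throughput_mib_s(host_iops):.0f} MiB/s")
print(f"guest: {throughput_mib_s(guest_iops):.0f} MiB/s")

# At the same queue depth the latency ratio equals the inverse IOPS ratio,
# so the depth chosen here (256) does not affect the result.
ratio = mean_latency_ms(256, guest_iops) / mean_latency_ms(256, host_iops)
print(f"guest latency is about {ratio:.1f}x the host latency")
```

An even 200,000 IOPS works out to slightly more than the quoted 760 MB/s, which fits a measured value a little under 200,000. The latency ratio of roughly 5.7 is in line with the "about five times" read off the graphs.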

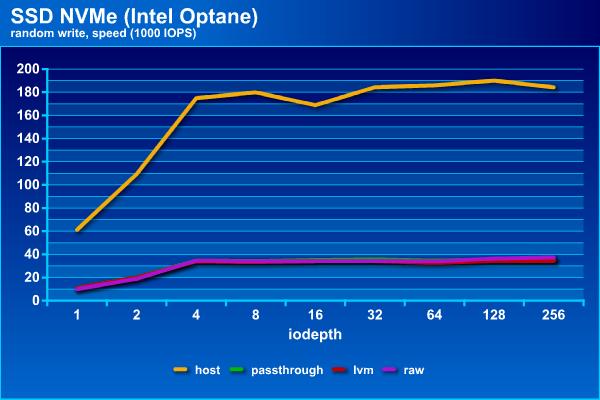

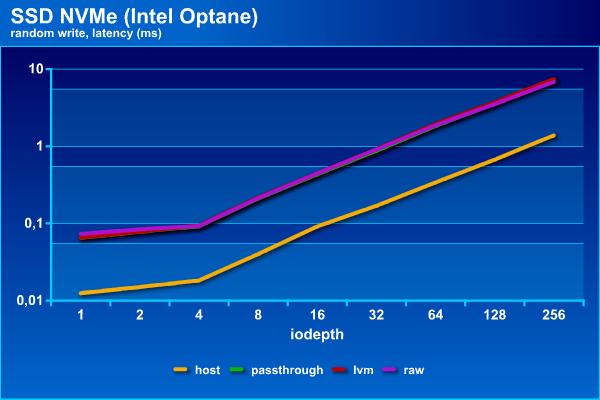

On random writes, this unique drive shows almost the same results as on random reads: about 190,000 IOPS when connected directly and 35,000 IOPS for the other options. The latencies also match the graph for read operations. On the other hand, more than 700 MB/s on random writes with small blocks is a result that is hard to find elsewhere.

Using the Intel Optane drive for the task under study shows that there is no significant slowdown on sequential operations in guest operating systems. But if you need high IOPS on random reads or writes, this platform will limit performance to 35,000 IOPS, although the drive itself is five times faster.

Testing has shown that when building storage for virtual servers, it is worth paying attention to the losses from virtualization if speed matters for your virtual machines. In most of the tested configurations, virtual disks show speeds that differ significantly from those of the physical devices. For traditional hard drives the difference is usually relatively small, since they are not that fast to begin with. For SATA solid-state drives, significant losses in IOPS on random access can be noted, but even so they remain radically faster than hard drives in these tasks. The Intel Optane drive certainly loses a lot in the virtual environment on random operations, but even then it remains phenomenally fast on writes, and there are no complaints about sequential operations. Another significant advantage of this device is stable performance: it does not require any special cleaning operations, so regardless of its state and past history, as well as the OS and its settings, the speed stays constant at all times. But, as usual, nothing comes for free. The Intel Optane 900P is not only uniquely fast, but also uniquely expensive.

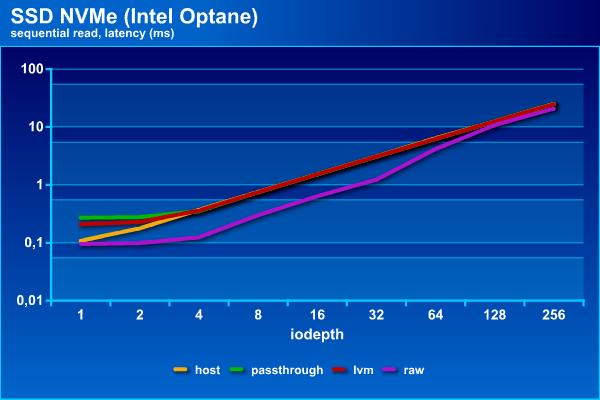

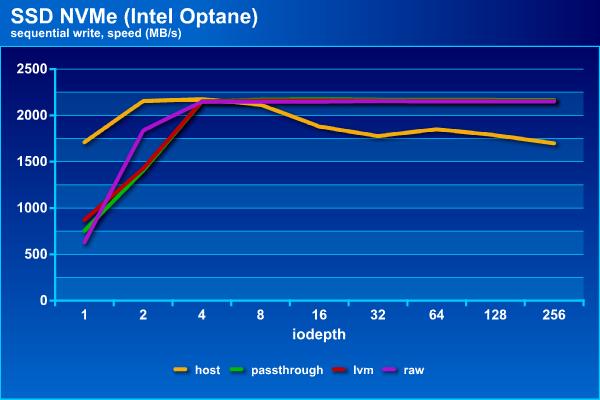

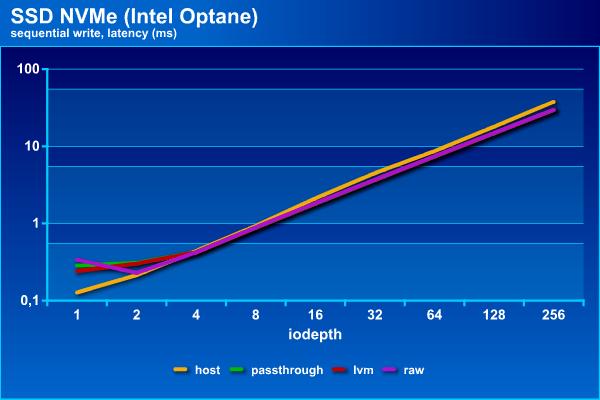

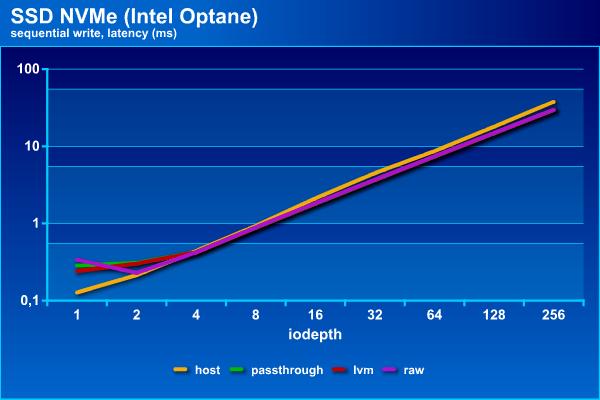

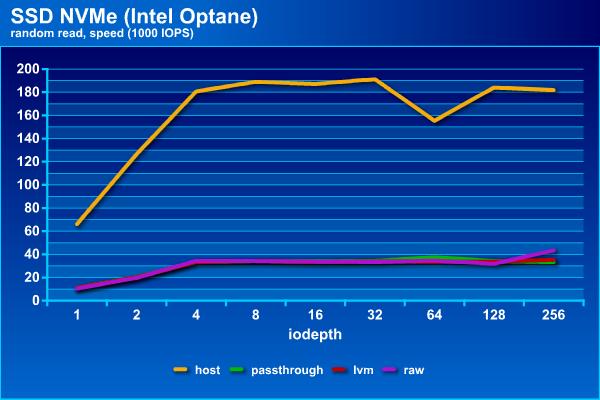

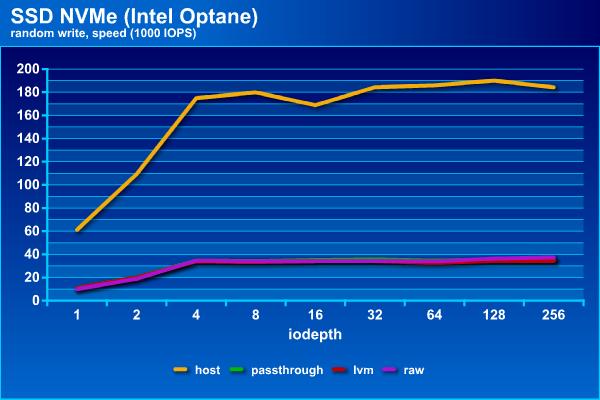

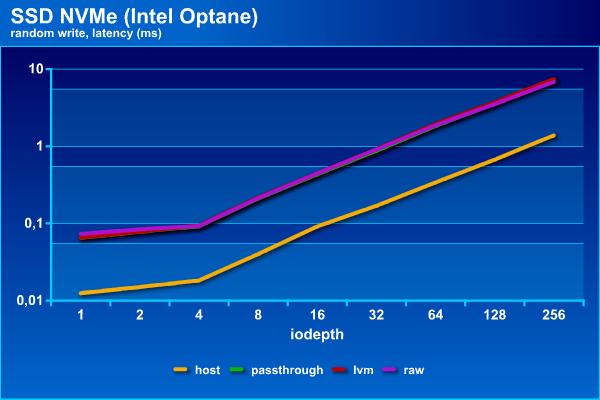
