Corporate cluster: thinking out loud

This post reflects on how to make the most efficient use of idle computing resources on a corporate network.

Consider the following example of a modern, medium-sized enterprise network.

Given:

A corporate network of 150 or more machines.

Each workstation has a multi-core processor under the hood, 2–4 GB of DDR3 RAM, a SATA III hard drive and a Gigabit Ethernet adapter. This configuration no longer surprises anyone and is standard for an office computer: progress does not stand still, and even if you wanted to, buying a PC with more modest specifications would not make economic sense.

A Gigabit Ethernet network. There may be objections here: many offices still run on good old Category 5 cabling, for which 100 Mbit/s is a realistic ceiling. Still, new networks are increasingly being built at 1000 Mbit/s.

A "vinaigrette" (a mixed bag) of software:

Local software: an email client, word processors and spreadsheet editors.

Client-server: bank client applications, reporting, management and accounting software.

Web software: workflow systems, portals, etc.

To back this up with data, I monitored CPU and RAM consumption on the workstations in my organization. Average resource utilization during the day very rarely exceeds 25–30%. This is due to idle time while users gather data, view documents, read from the screen and print, as well as smoke breaks and other breaks. It is also because web-based software and cloud services consume very few client-side resources.
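To get a feel for what these numbers mean at the scale of the whole network, here is a minimal sketch of the arithmetic in Python. The function names, the assumed 4 cores per machine and the sample readings are illustrative assumptions, not measurements from the author's network.

```python
def average_utilization(samples: list[float]) -> float:
    """Mean of CPU utilization samples given in percent (0-100), returned as a fraction."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(samples) / len(samples) / 100.0


def pooled_idle_capacity(machines: int, cores_per_machine: int, avg_utilization: float) -> float:
    """Estimate how many cores' worth of capacity sit idle across the network on average.

    avg_utilization is the average fraction of CPU in use (0.0-1.0).
    """
    if not 0.0 <= avg_utilization <= 1.0:
        raise ValueError("avg_utilization must be between 0 and 1")
    return machines * cores_per_machine * (1.0 - avg_utilization)


if __name__ == "__main__":
    # Hypothetical hourly CPU readings (percent) from one workstation over a working day.
    day = [5, 10, 35, 40, 20, 15, 60, 30, 25]
    print(f"average utilization: {average_utilization(day):.0%}")
    # 150 machines, assumed 4 cores each, 30% average load.
    print(f"idle core-equivalents: {pooled_idle_capacity(150, 4, 0.30):.0f}")
```

Under these assumptions, 150 machines at 30% average load leave roughly 420 core-equivalents unused on average, which is the pool the idea below tries to tap.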

Thought:

Combine the resources of idle and lightly loaded workstations and use them to solve the computing problems at hand, preferably without reducing the responsiveness of the users' workstations.

Now for the reflections themselves:

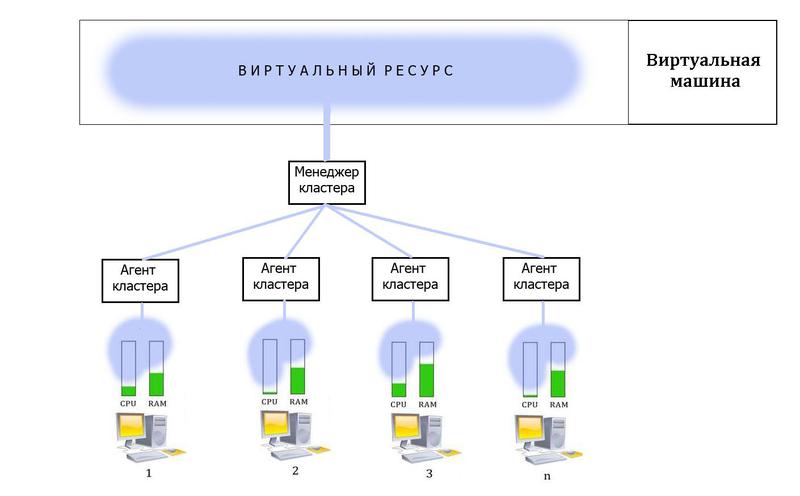

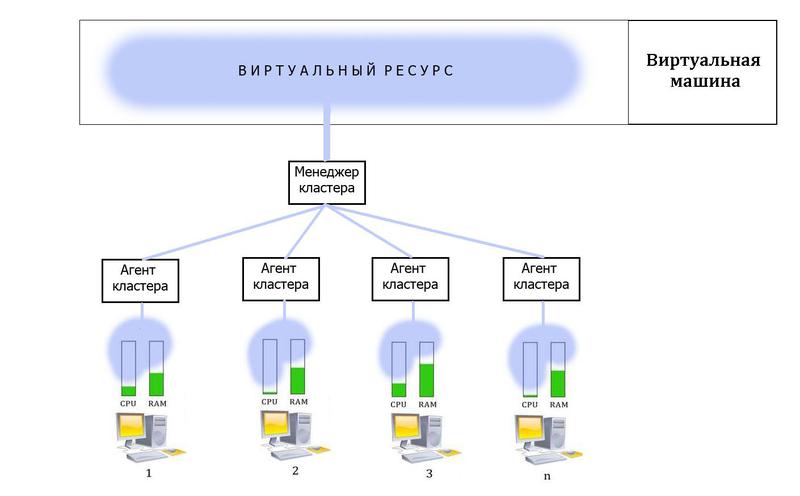

In theory, we get a corporate cluster. It consists of all the machines on the enterprise network and uses their computing power up to an established quota. The quota can be set based on an analysis of average system load over a day, week or month, and then adjusted on the fly if the average load changes.
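One possible way to make the quota follow the average load on the fly is an exponential moving average (EMA) of the user's load, with the quota being whatever headroom remains under a fixed ceiling. This is a minimal sketch under assumptions: the class name, the 80% ceiling and the smoothing factor are hypothetical choices, not part of the original idea.

```python
class QuotaEstimator:
    """Hypothetical per-workstation quota: the share of CPU the cluster may borrow.

    The user's load is tracked with an exponential moving average, so the quota
    follows changes in the average load without reacting to every short spike.
    """

    def __init__(self, ceiling: float = 0.8, alpha: float = 0.1, initial_load: float = 0.3):
        # ceiling: assumed maximum total utilization allowed on the machine (user + cluster)
        # alpha: EMA smoothing factor; smaller values react more slowly
        # initial_load: starting estimate, e.g. from historical day/week/month data
        self.ceiling = ceiling
        self.alpha = alpha
        self.avg_load = initial_load

    def update(self, load_sample: float) -> float:
        """Feed one observed user-load sample (0.0-1.0) and return the updated quota."""
        self.avg_load = self.alpha * load_sample + (1 - self.alpha) * self.avg_load
        return self.quota()

    def quota(self) -> float:
        # Whatever headroom remains under the ceiling may be lent to the cluster.
        return max(0.0, self.ceiling - self.avg_load)


if __name__ == "__main__":
    est = QuotaEstimator()
    for sample in [0.25, 0.30, 0.90, 0.85, 0.20]:
        print(f"load={sample:.2f} -> quota={est.update(sample):.2f}")
```

Seeding `initial_load` from the long-term average and letting the EMA drift covers both halves of the idea: a quota based on historical analysis, then corrected as the load changes.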

The weak point of any parallel computing system is the communication channel between nodes: the latency of distributing and parallelizing tasks across nodes, plus the synchronization overhead. But, I repeat, more and more corporate networks now run at 1 Gbit/s, and this is good news.
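Why link speed matters can be shown with a back-of-the-envelope check of whether shipping a task to another workstation pays off. This sketch assumes an idealized link: it ignores protocol overhead and contention, so real transfers will be somewhat slower, and the function names are hypothetical.

```python
def transfer_time_s(payload_bytes: int, link_bits_per_s: float, round_trip_s: float = 0.0) -> float:
    """Idealized time to send a payload over the link (no protocol overhead)."""
    return payload_bytes * 8 / link_bits_per_s + round_trip_s


def offload_pays_off(local_s: float, remote_s: float, payload_bytes: int,
                     link_bits_per_s: float, round_trip_s: float = 0.0) -> bool:
    """True if remote compute time plus transfer time beats computing locally."""
    return remote_s + transfer_time_s(payload_bytes, link_bits_per_s, round_trip_s) < local_s


if __name__ == "__main__":
    payload = 100_000_000  # 100 MB of input data
    for name, bps in [("100 Mbit/s", 100e6), ("1 Gbit/s", 1e9)]:
        t = transfer_time_s(payload, bps)
        ok = offload_pays_off(local_s=5.0, remote_s=2.0, payload_bytes=payload, link_bits_per_s=bps)
        print(f"{name}: transfer {t:.1f} s, offloading worth it: {ok}")
```

With a 100 MB payload the transfer takes about 8 s at 100 Mbit/s but only 0.8 s at 1 Gbit/s, so the same task that is not worth offloading on the older network becomes worth it on the gigabit one.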

Now about the tasks this cluster would solve. In theory, the cluster could serve as an additional resource pool for virtual machines or provide a runtime environment for resource-intensive tasks. Every organization has different tasks, so this technology could be useful precisely because of its versatility.
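As an illustration of how such a pool might hand out work, here is a minimal greedy placement sketch: each task goes to the workstation with the most spare quota left. The function, its inputs and the cost units are hypothetical; a real scheduler would also handle failures, preemption when a user becomes active, and data locality.

```python
import heapq


def assign_tasks(tasks: dict[str, float], node_quota: dict[str, float]) -> dict[str, list[str]]:
    """Greedy placement of tasks onto workstations by remaining quota.

    tasks: task name -> estimated cost (same units as the quota, e.g. core-hours)
    node_quota: workstation name -> capacity the cluster may borrow from it
    Tasks that fit nowhere are listed under the key "unassigned".
    """
    # heapq is a min-heap, so store negated quota to pop the node with the most room.
    heap = [(-q, name) for name, q in node_quota.items()]
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in node_quota}
    plan["unassigned"] = []
    # Place the largest tasks first; this usually packs capacity better.
    for task, cost in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        if not heap:
            plan["unassigned"].append(task)
            continue
        neg_q, node = heapq.heappop(heap)
        remaining = -neg_q
        if cost <= remaining:
            plan[node].append(task)
            remaining -= cost
        else:
            # The roomiest node cannot take it, so no node can.
            plan["unassigned"].append(task)
        heapq.heappush(heap, (-remaining, node))
    return plan
```

For example, `assign_tasks({"a": 3, "b": 2, "c": 2}, {"pc1": 4, "pc2": 3})` puts `a` on `pc1`, `b` on `pc2` and leaves `c` unassigned. Greedy placement is not optimal (this is a bin-packing problem), but it is simple and cheap to recompute whenever quotas change.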

After asking Google whether anyone was already working on this problem and finding nothing, I decided to write about it on Habr. Let us, comrades, discuss whether it makes sense to develop this direction.
