SQL Server Data Warehouse Fast Track (DWFT) certified architecture: what it means and how it works

Large manufacturers of popular software care about their customers in different ways. One way is to create a certification program. So that when customers wander in meditations between hardware configs for a specific software, the manufacturer of this software could come up and point out with confidence: “Take this and everything will be fine.”

Such a program for its SQL Server was developed by Microsoft - SQL Server Fast Track (DWFT). It is used to certify storage configurations — those that meet the requirements of the workload and can be implemented with less risk, cost, and complexity. It sounds great, but it's still interesting to evaluate these criteria in practice. To do this, we will analyze in detail one of the configurations certified by SQL Server Data Warehouse Fast Track.

For a start - a little more about DWFT. This program includes not only the certification of reference architectures from system suppliers, but also a number of best practice guides — if you want to put together something of your own. Microsoft updates the program for the development of new features, and on the basis of customer feedback.

With the DWFT storage, you can know for sure that all SQL Server features will be available. Column storage technologies increase productivity in transactional and analytical workloads. Both traditional structured relational and unstructured big data will be supported — in Hadoop, Spark, or Azure Data Lake. With the SQL Server “PolyBase” feature, you can merge big data into SQL Server Universe, query both relational and unstructured data, merging it together without having to move it.

A general list of certified architectures is at the bottom of this page . Next, we present one of them.

Now - to the analysis of one of the architectures certified by DWFT. It is based on our BullSequana S400 and S800 servers and the Dell EMC VMAX storage. Storage capacity can be increased to 4 PB, if you scale the BullSequana S server to 32 processors.

In order to ensure high availability of the database, failover clustering of Windows is additionally recommended. That is, the use of at least two servers. Through the Microsoft clustering services, one database server is configured as the primary (active) server, and the second as the secondary (passive) server. The passive server must have the same configuration as the main server.

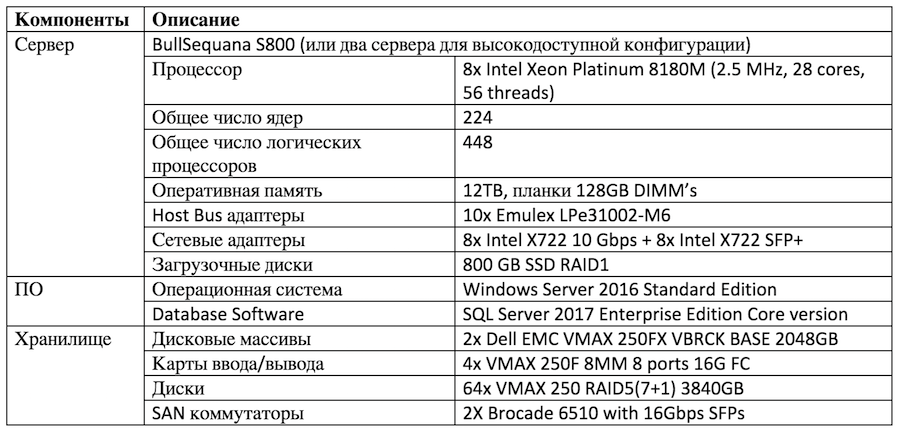

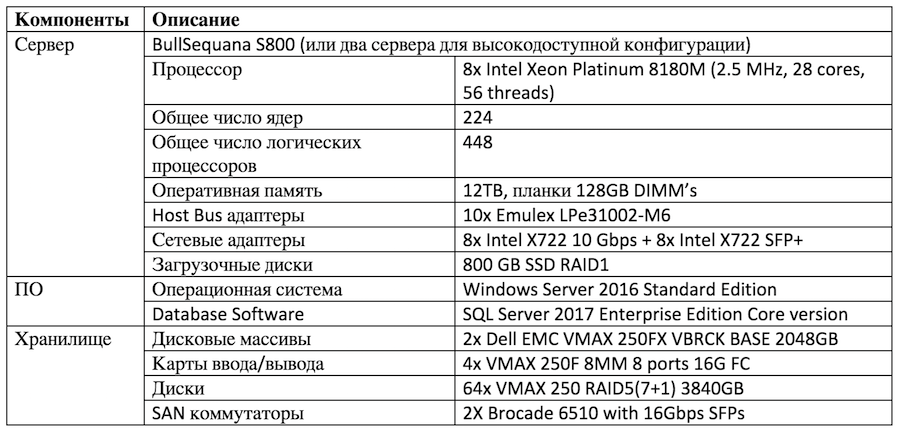

List and characteristics of infrastructure components

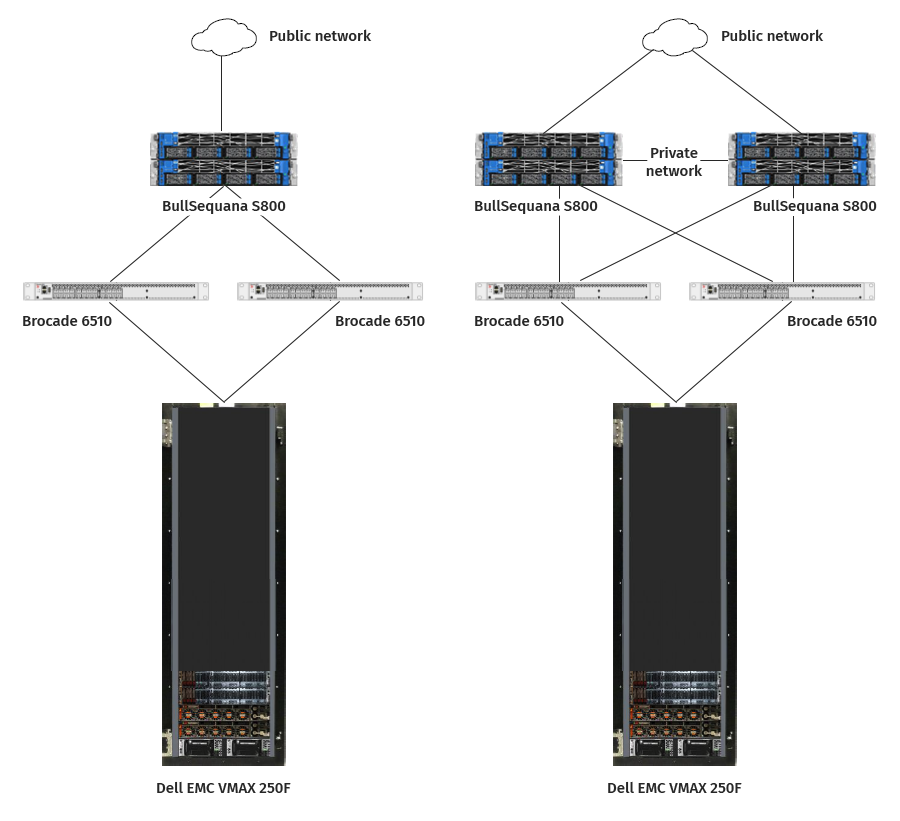

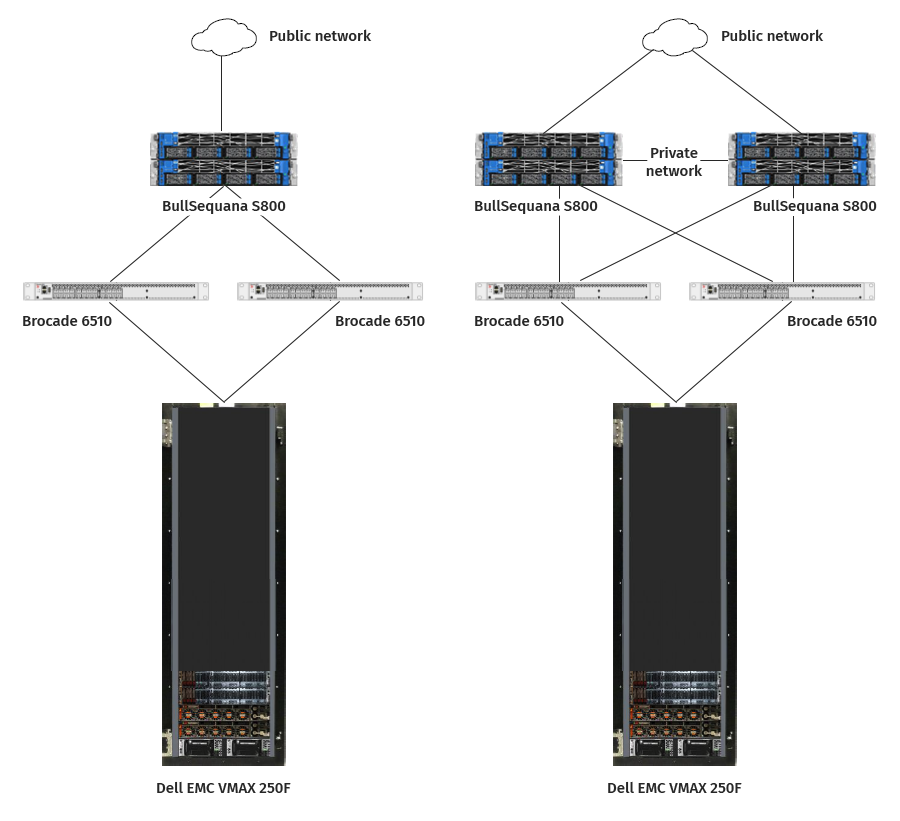

The general scheme of the two configurations

The BullSequana S800 server is an eight-processor server with up to 12 TB of memory, advanced I / O capabilities, and the addition of a module with SSD / HDD / NVMe storage or with NVidia Tesla GPUs.

The BullSequana S line is available in versions from S200 with two processors to S3200 with 32 processors. Line scale step - two processors. For the SQL Server 2017 DWFT architecture, the S200, S400 and S800 are equally certified.

Emulex Gen 6 FC adapters with a dynamic multicore architecture, compared with previous generations of devices, offer higher performance, lower latency, improved diagnostics and manageability. Provides speeds of up to 12,800 MB / s (two 32GFC ports or four 16GFC ports), a throughput of 1.6 million IOPS per adapter. The four-port version of the LPe32004 provides up to 3.2 million IOPS per adapter.

The Brocade 6510 switch from Broadcom is a 1U, 48-port Fiber Channel switch that can be installed in a server rack.

VMAX All Flash is focused on petabyte data volumes and large transaction processing. VMAX All Flash is scalable and allows you to combine hundreds of multi-core Intel processors for dynamic mixed workloads. The main element of VMAX All Flash is V-Brick — up to eight V-Bricks can be combined into a single system with fully separated connectivity, processing and bandwidth capabilities. Each V-Brick supports up to 72 processor cores for performance scaling — up to 576 cores per array. Flash Capacity Packs modules are used for scaling.

The storage system must clearly comply with the requirements for high-precision real-time transaction processing (OLTP), virtualized applications, and Oracle and SQL databases. Millions of IOPS, petabyte throughput and predicted performance (response time of 350 µs) are maintained.

Finally, the architecture should have enhanced error isolation, reliable data integrity checks, and proven, reliable hardware and software updates. Another requirement: accessibility for 24x7 operations using SRDF software.

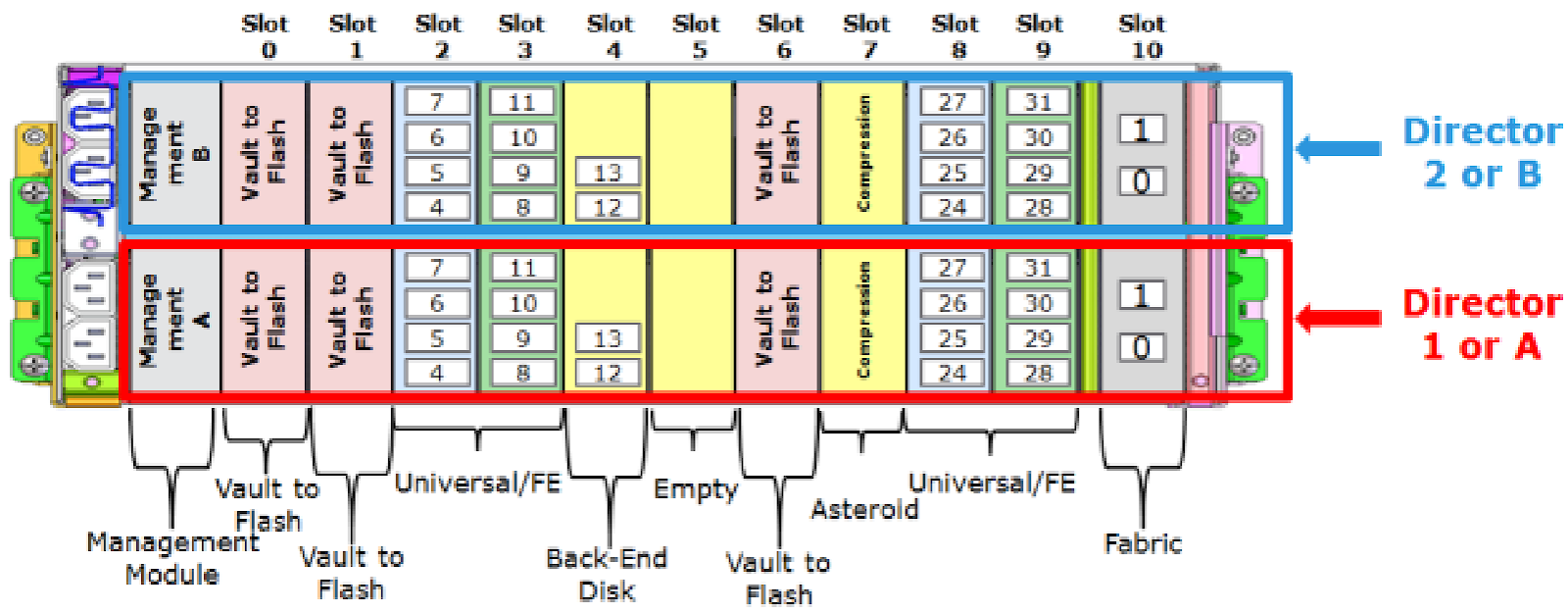

Configuration of VMAX 250F (All Flash):

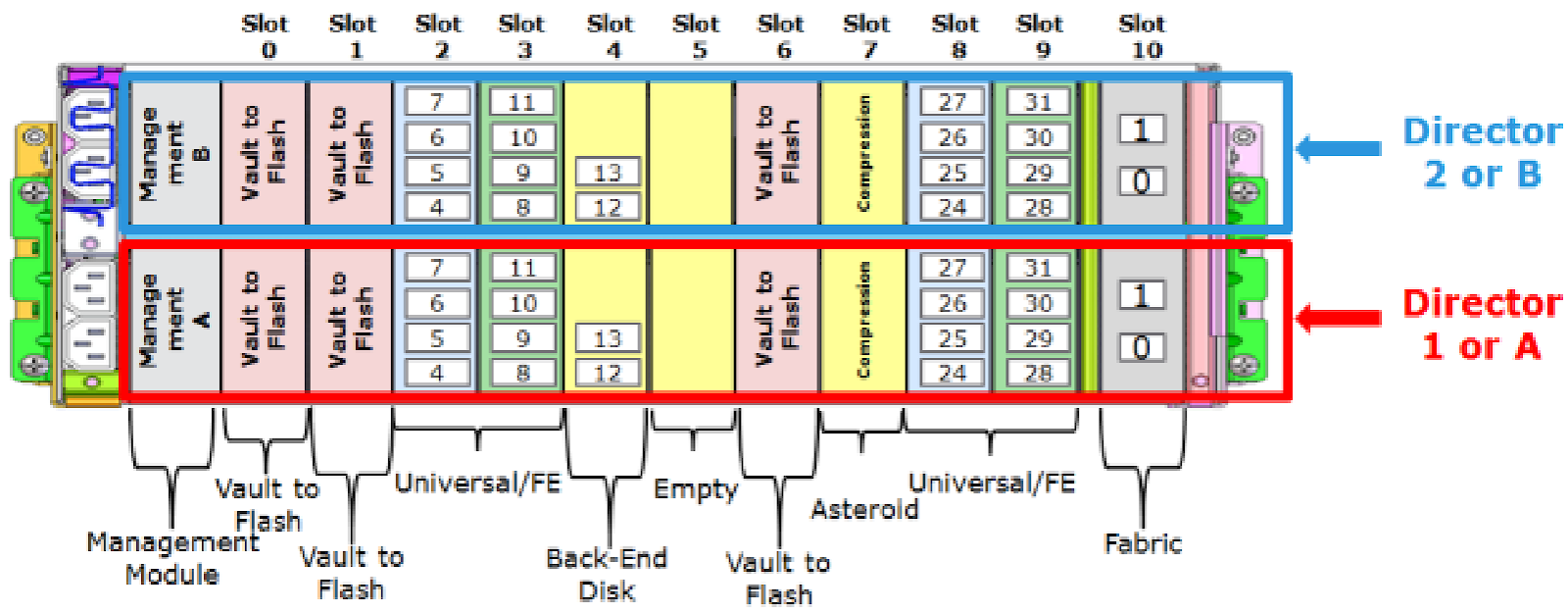

VMAX vBricks Appearance in a Rack:

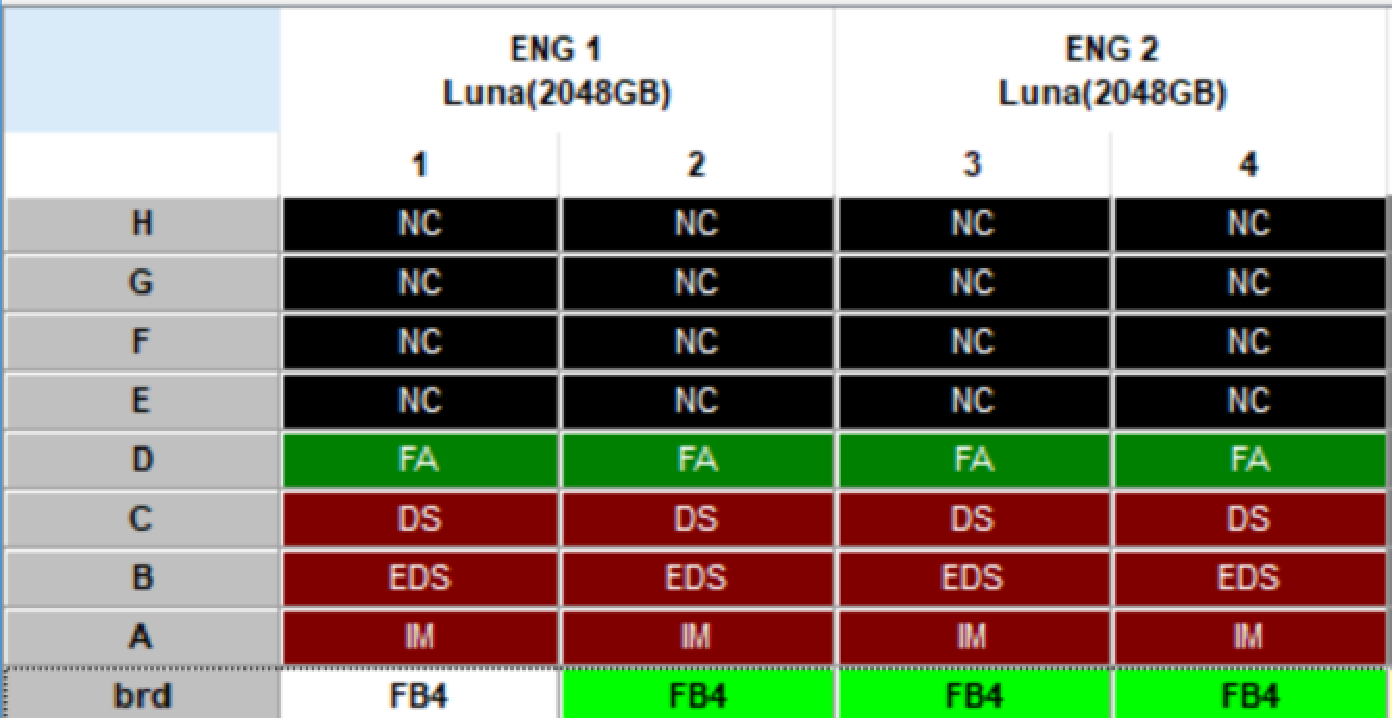

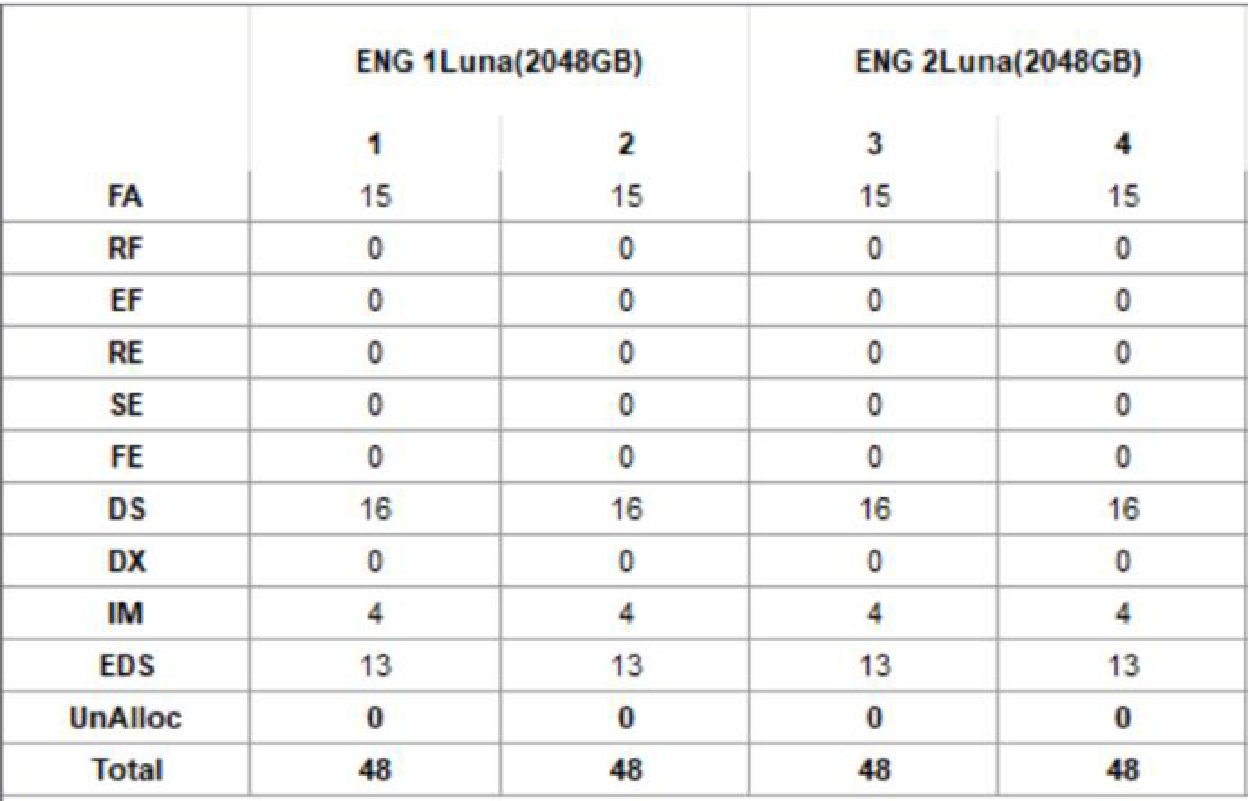

Port Arrangement: The

logical array configuration in a certified architecture is built according to the Dell EMC configuration and best performance practices.

Main distribution:

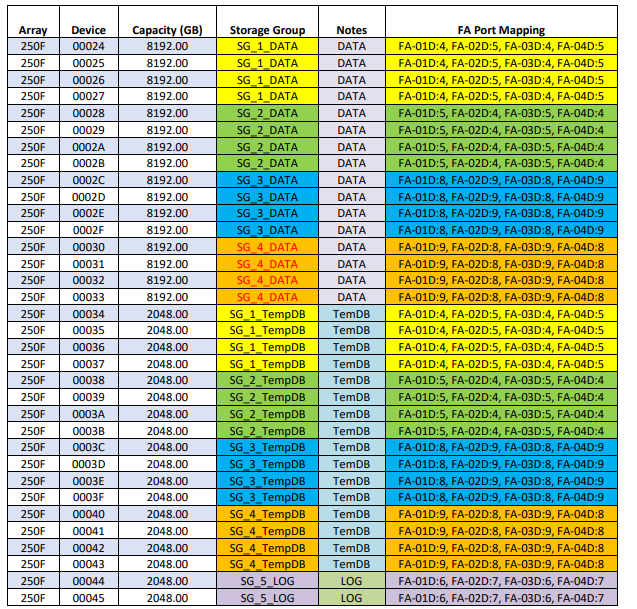

All LUNs are configured as THIN LUNs in a virtual pool created on 64 drives. Data is distributed across all flash drives on both vBricks. In total, 35 LUNs are defined on the Dell EMC VMAX:

Front Fiber Channel (FC) ports are configured to use four domains with failures in virtual port mode. Each port is one to one connected to a port on the VMAX, which serves only one data LUN and one Tempdb LUN. For each of them, there is a LUN to separate read and write operations on the LUN (when performing GROUP BY or ORDER BY, data is first written to Tempdb before being used in another part of the query or presented to the user). Each LUN is mapped to four FA ports and is available in four ways.

Mapping ports:

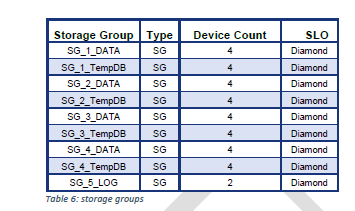

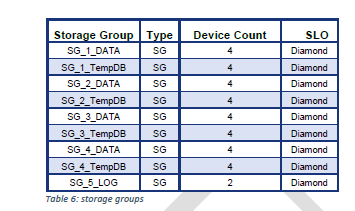

The following storage groups have been created:

The VMAX cache is global, dynamically used for reading and writing. The default settings are used to limit system write waiting. Since the system uses only one application, there is no need to create cache sections.

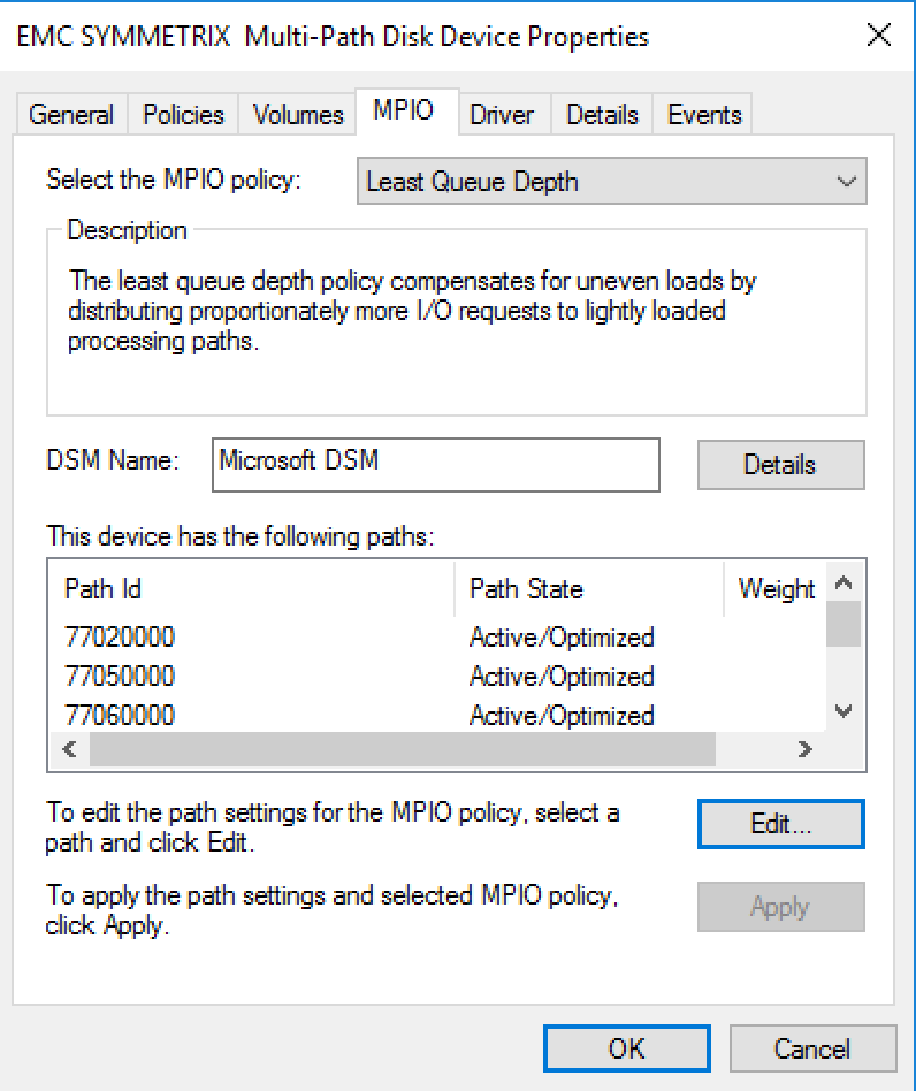

Windows Server 2016 used its own MPIO. Its alternative is PowerPath software for Dell EMC applications.

The top ports of the Brocade switches are used to connect the BullSequana S800 server. Each even-numbered Emulex HBA port is connected to the upper ports to the left of the Brocade switch. Each of the Outbus Emulex HBA ports is connected to the upper ports to the left of the Brocade_2 switch. Bottom ports are used only to connect FC Dell EMC VMAX ports.

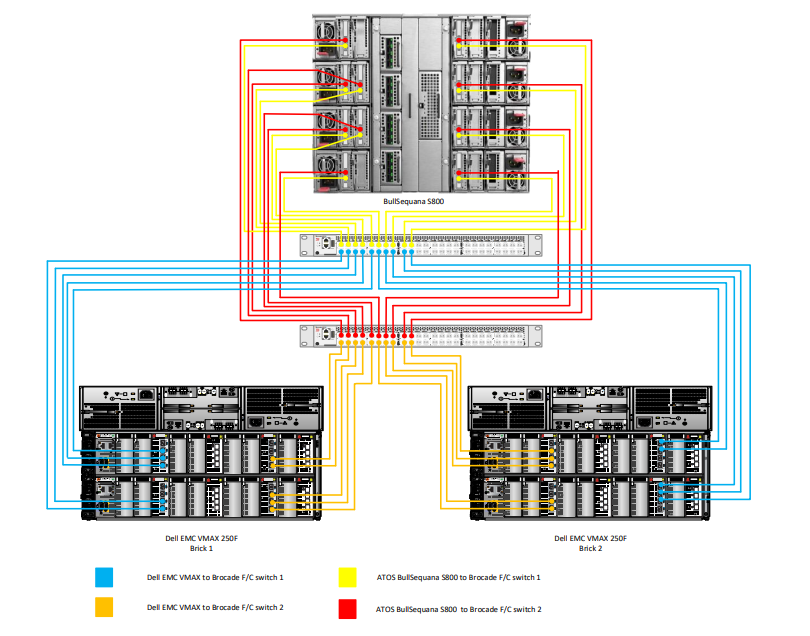

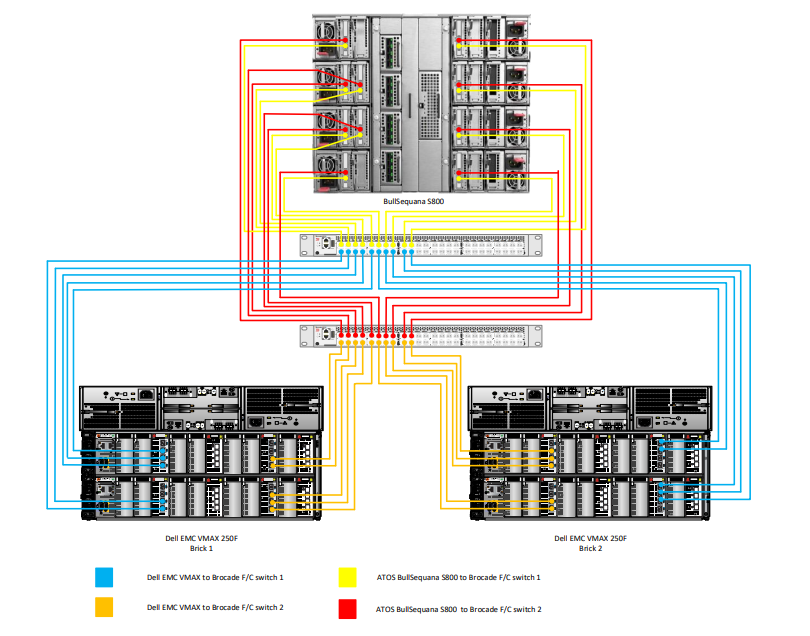

The following diagram shows the cabling between the BullSequana S800 and the Dell EMC VMAX 250F. The placement of the HBA may differ depending on the placement of the HBA modules.

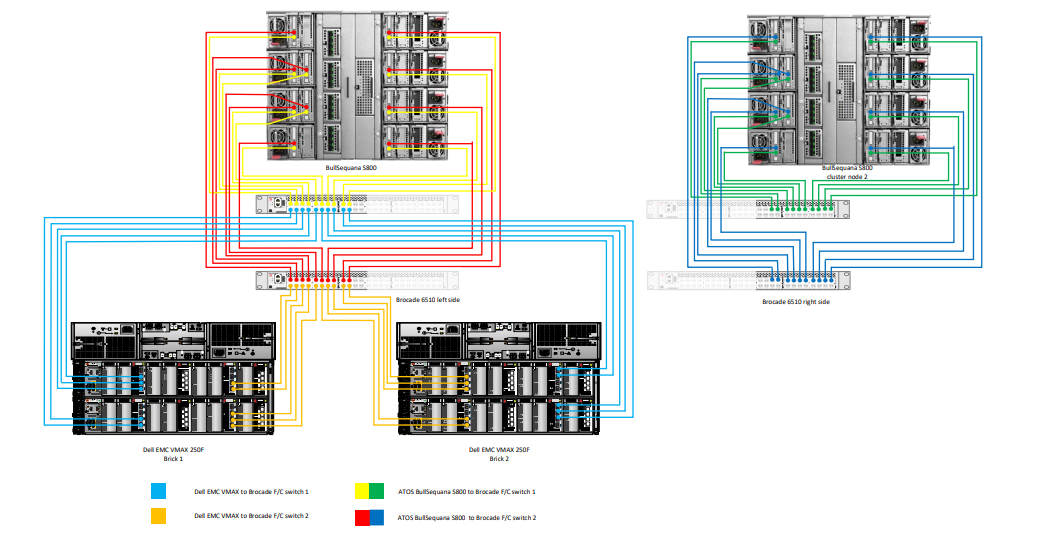

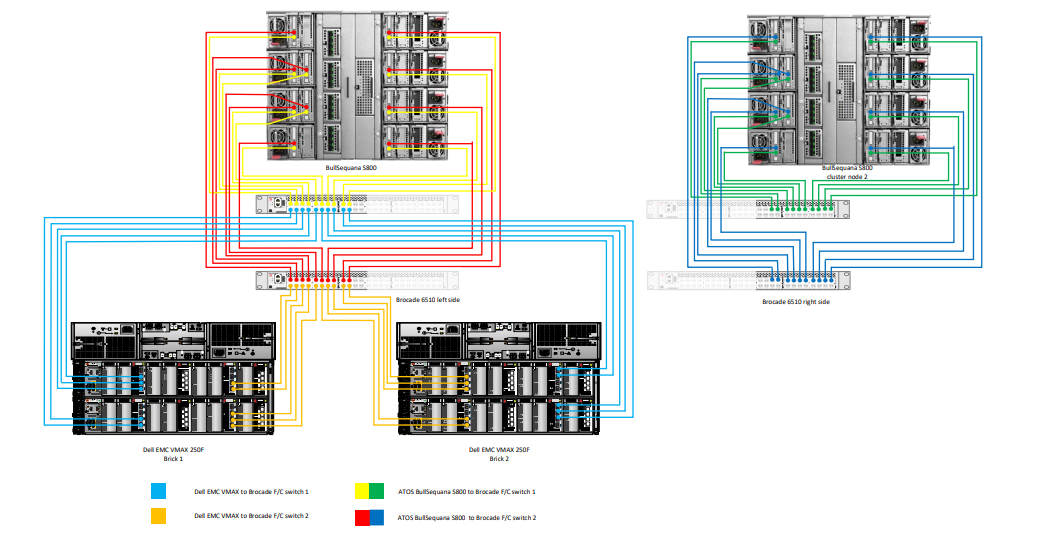

The diagram below shows how to connect two BullSequana S800 servers in a high-availability configuration and the Dell EMC VMAX 250F. Again, the placement of the HBA may differ depending on the placement of the HBA modules.

Above, on the left side of the Brocade switch, the left half of the ports for the first S800 server is highlighted, and on the right side of the picture, the right ports are highlighted, and the second S800 server is connected to them.

All parameters, except the BMC network configuration, were left at the factory default settings. The “Logic Processor” parameter in the “Processor Parameters” section remains on by default. This activates Intel Hyper-Threading Technology, which maximizes the number of logical processors available for SQL Server.

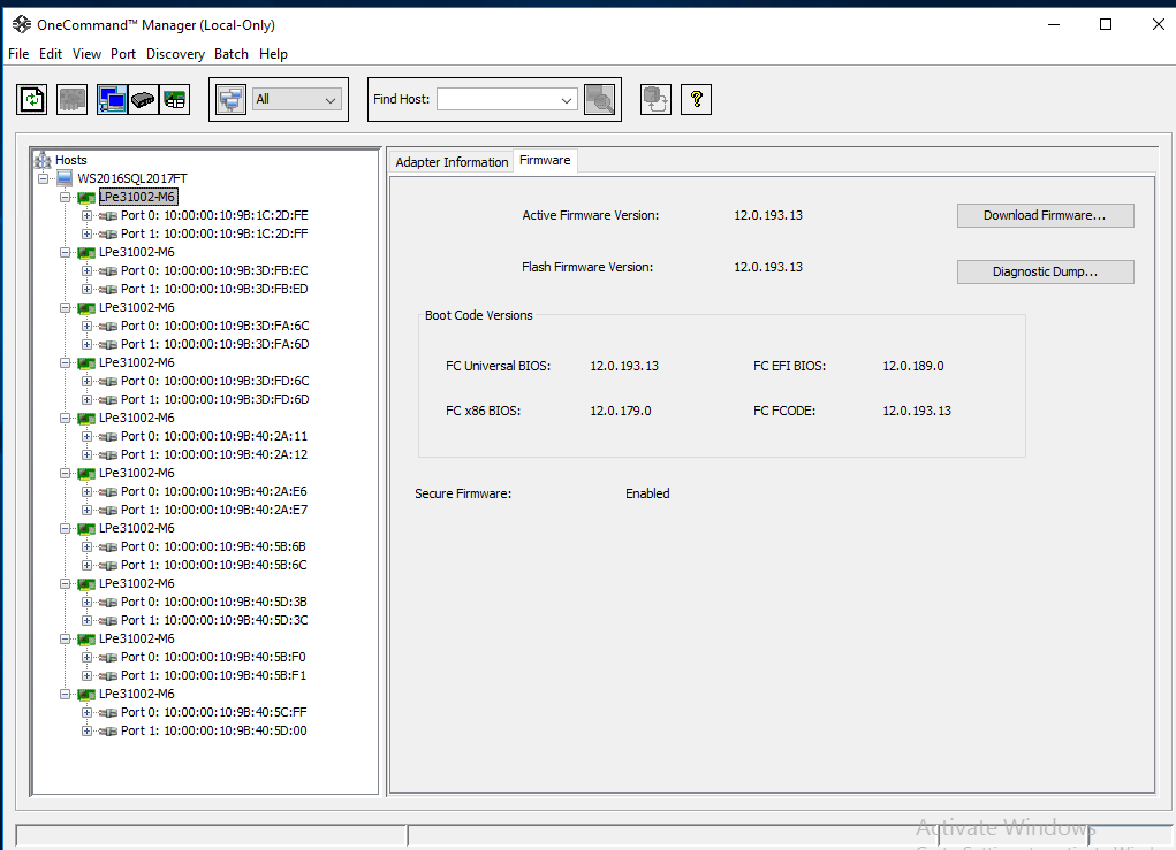

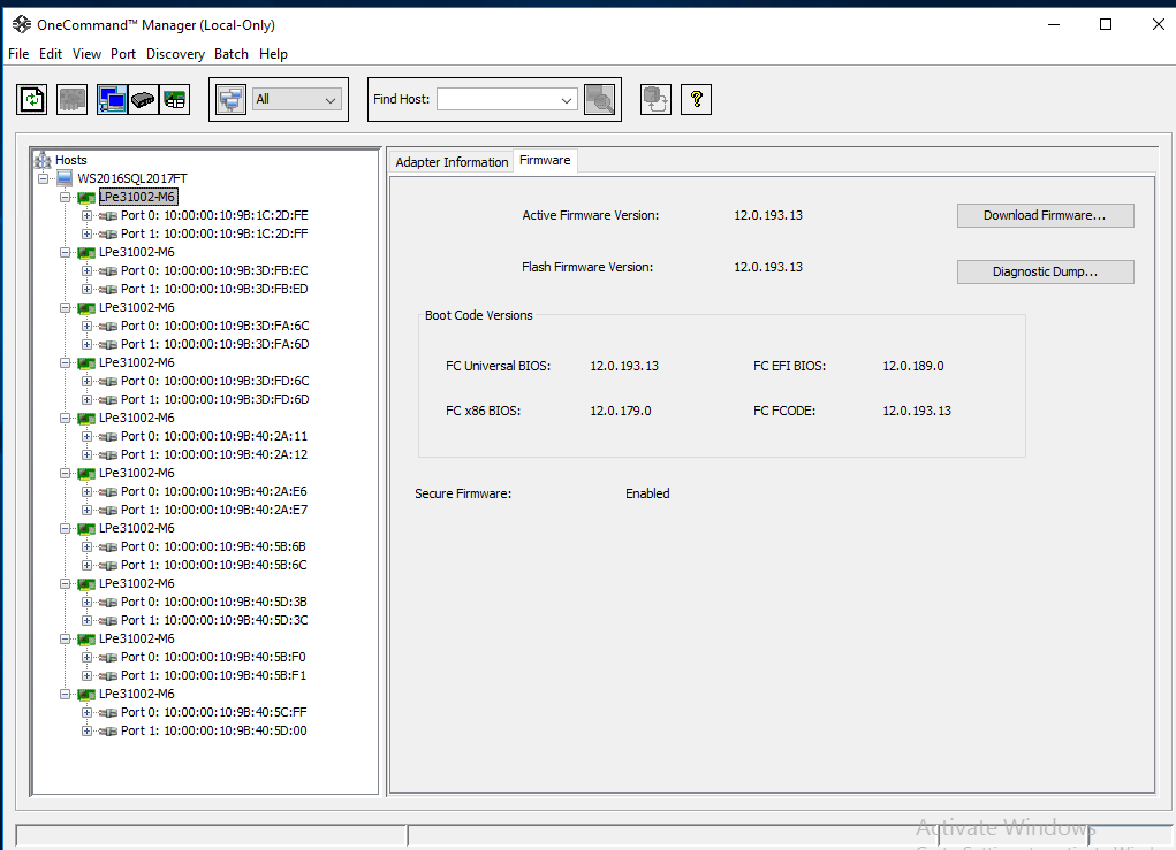

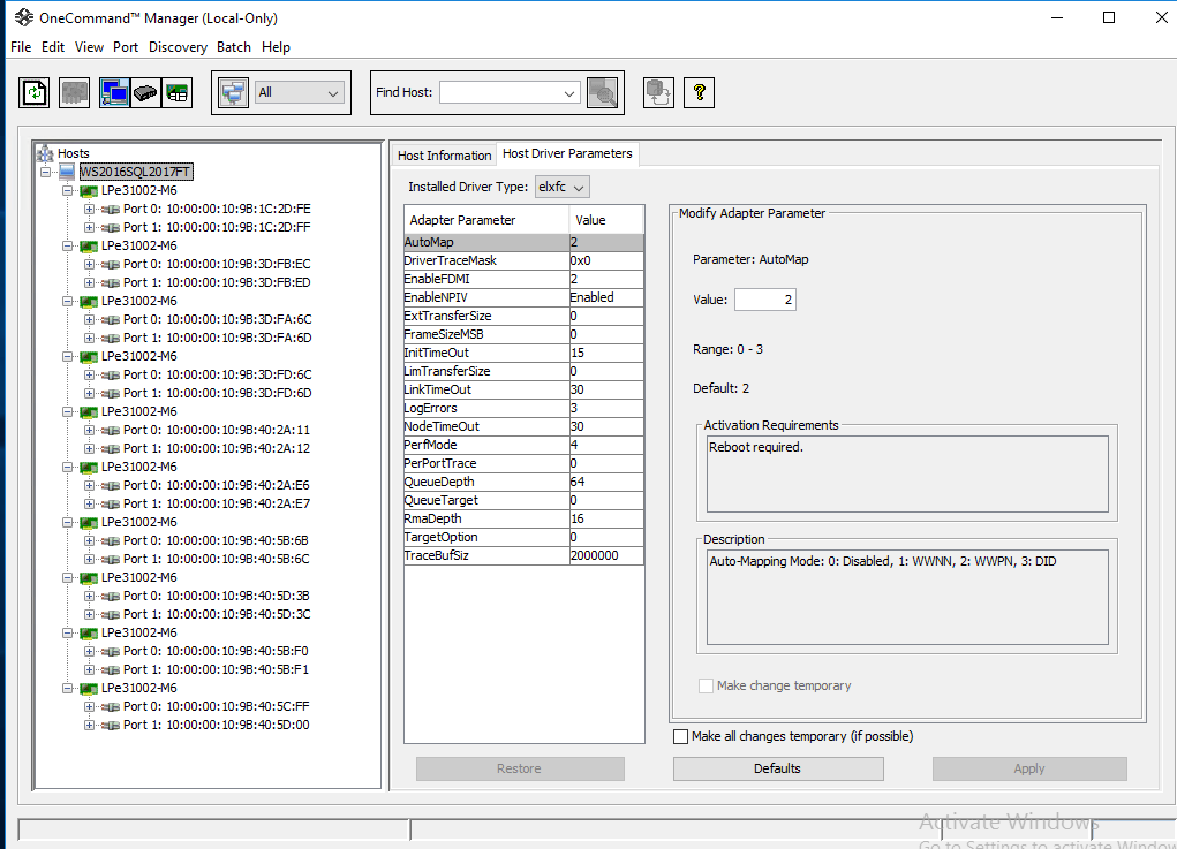

All Emulex HBAs are available through the Emulex OneCommand Manager. The firmware and driver version used during Microsoft DWH Fast Track certification is 12.0.193.13.

It is important that all HBAs have the same firmware. The latest firmware can be downloaded from the Broadcom website. Firmware updates can be performed using the “Download Firmware” button in the Emulex OneCommand Manager. Changing the driver and / or firmware may require rebooting the server.

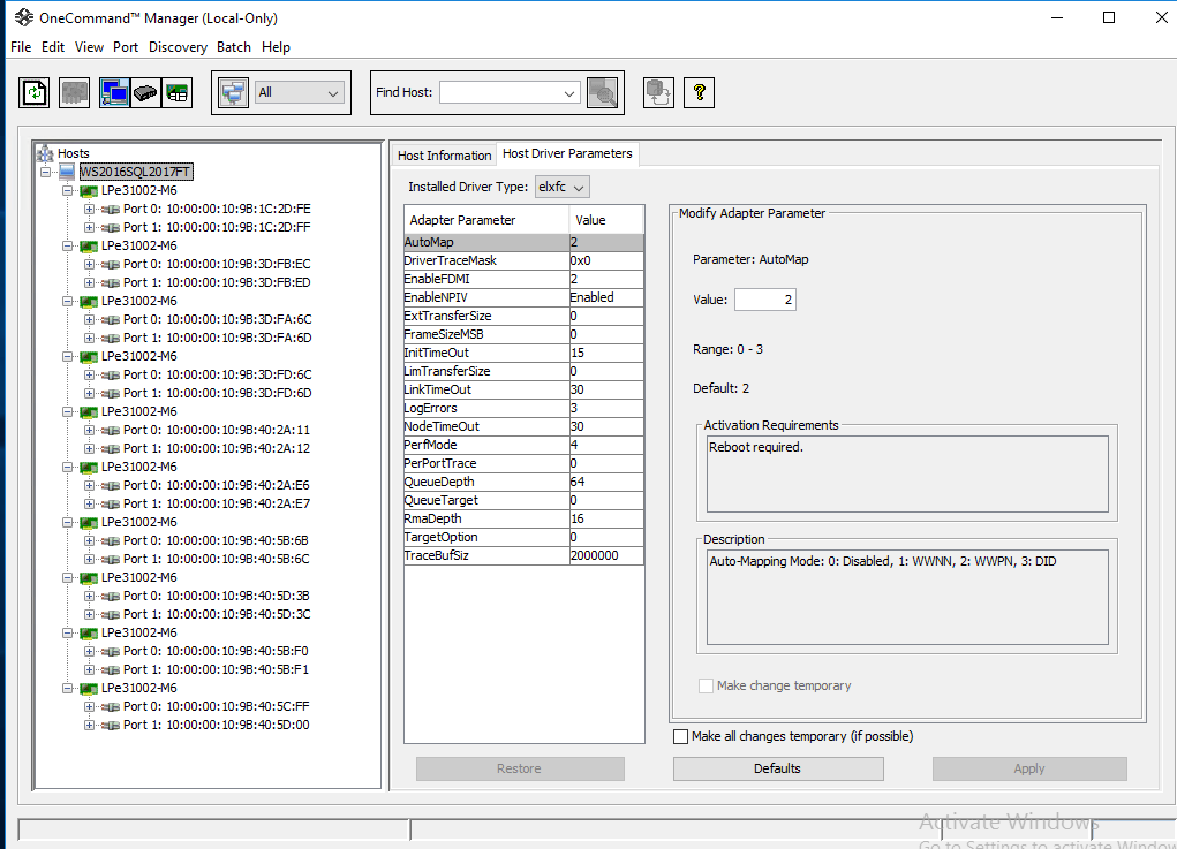

With the exception of the QueryDept parameter, which was changed from 32 to 64, all other host and HBA parameters remained by default.

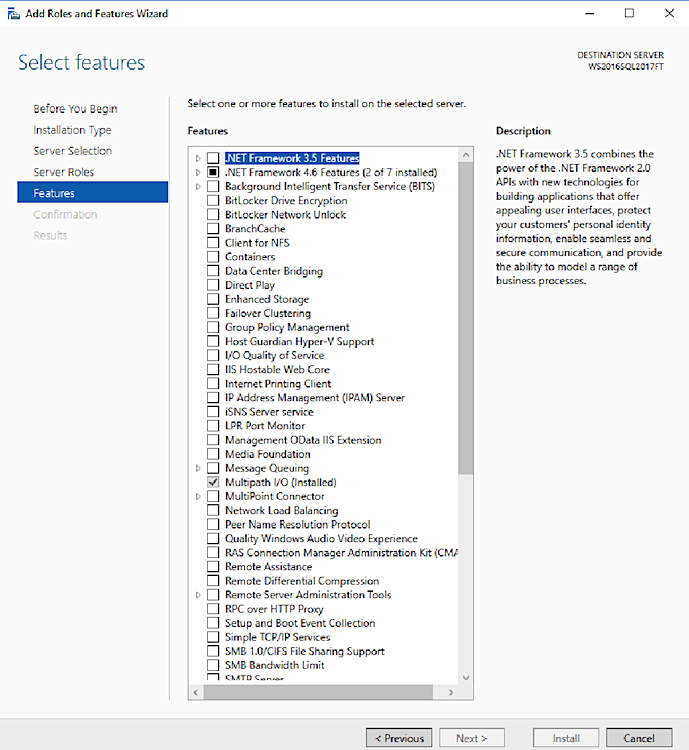

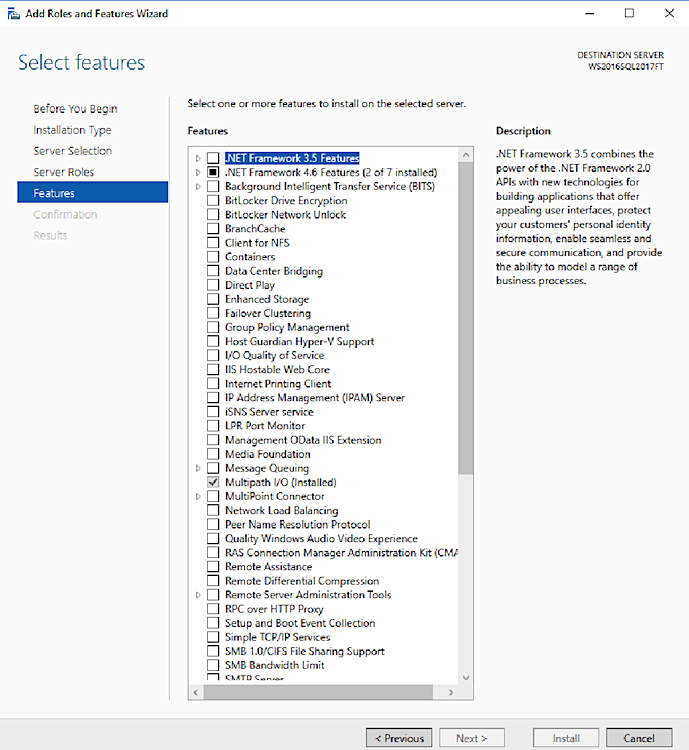

Windows installation was performed with default settings. After installation, the Windows MPIO feature was activated, as shown in the figure below.

After installing the MPIO function, Windows must be restarted.

It is important to have drivers and packages on USB storage, since they are not included in the Windows driver catalog included on the installation DVD (network adapters are newer than Windows Server 2016). After installing Windows and MPIO, the following drivers and packages were installed (in the specified order):

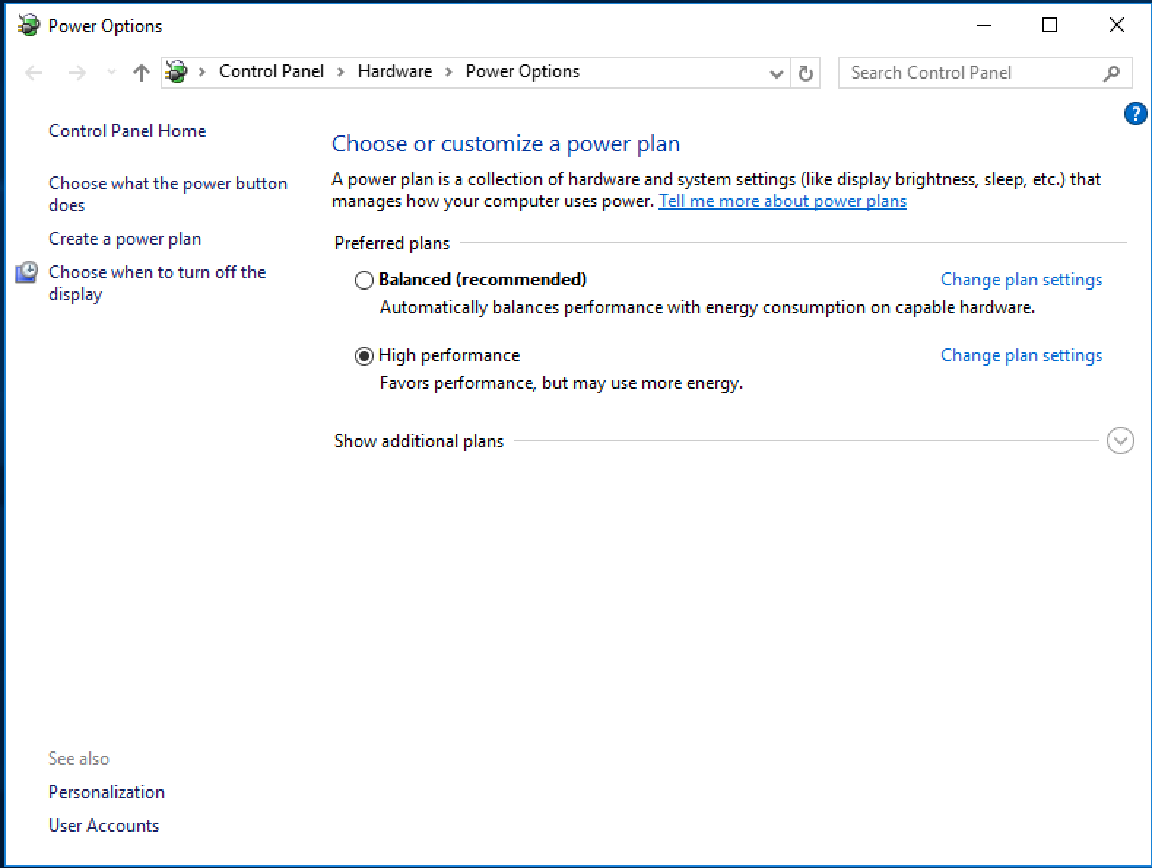

To maximize performance, the server was configured to use a high performance plan.

So that the Windows operating system did not send data pages to virtual memory on disk and SQL Server could use the process for storing data in physical (RAM) memory, the Lock pages in memory option for the SQL Server service account was enabled. You must restart SQL Server for this option to take effect.

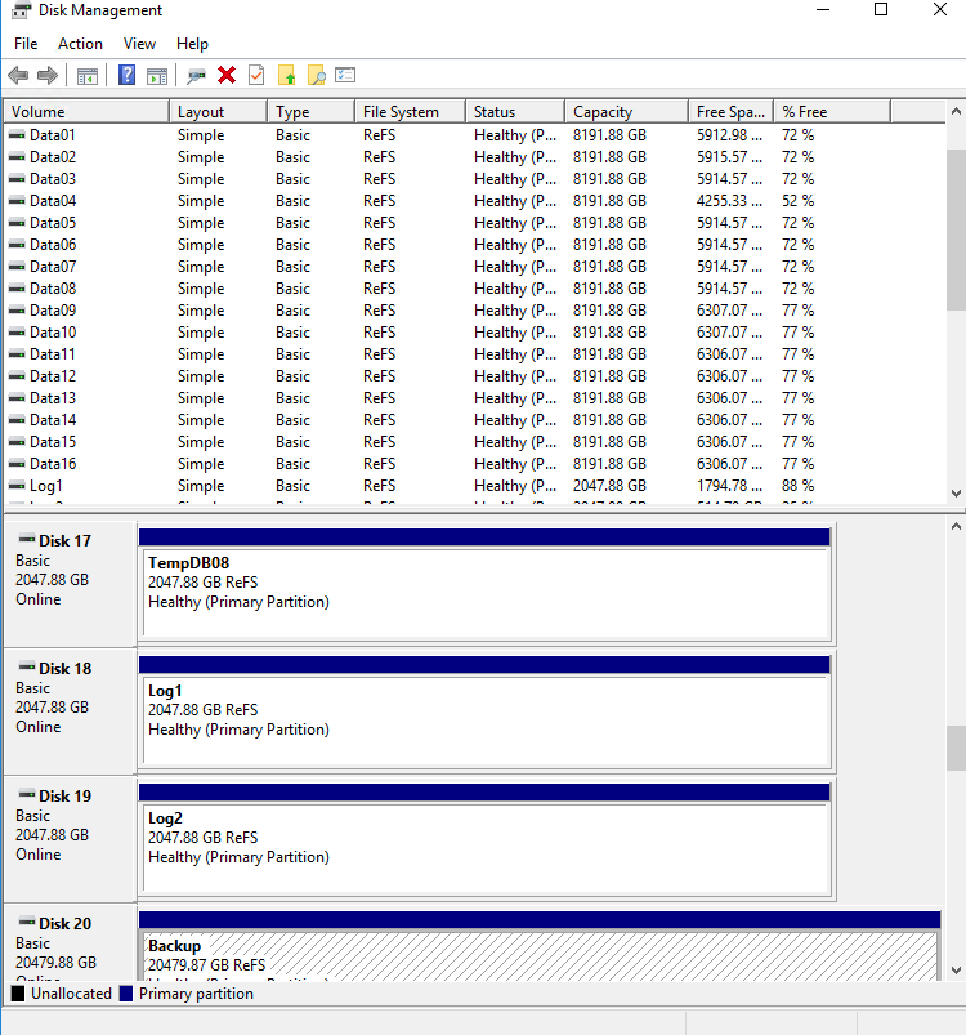

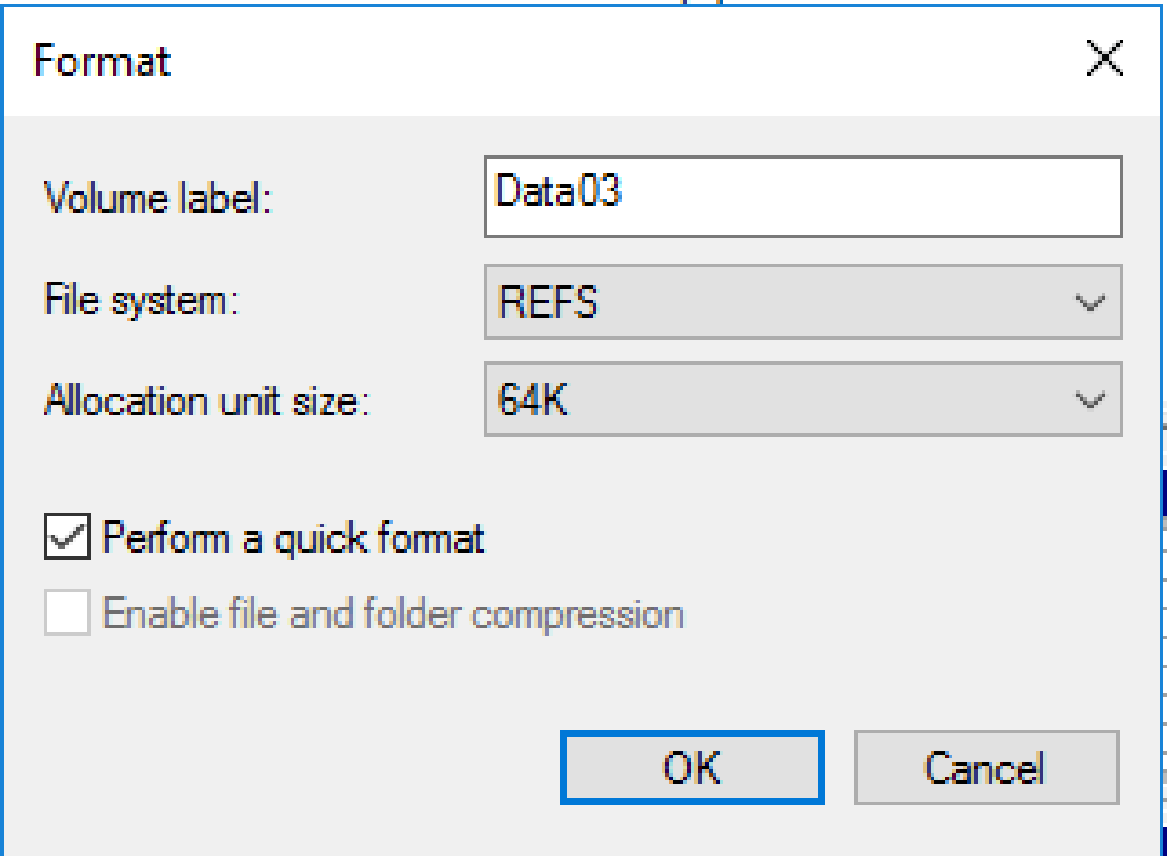

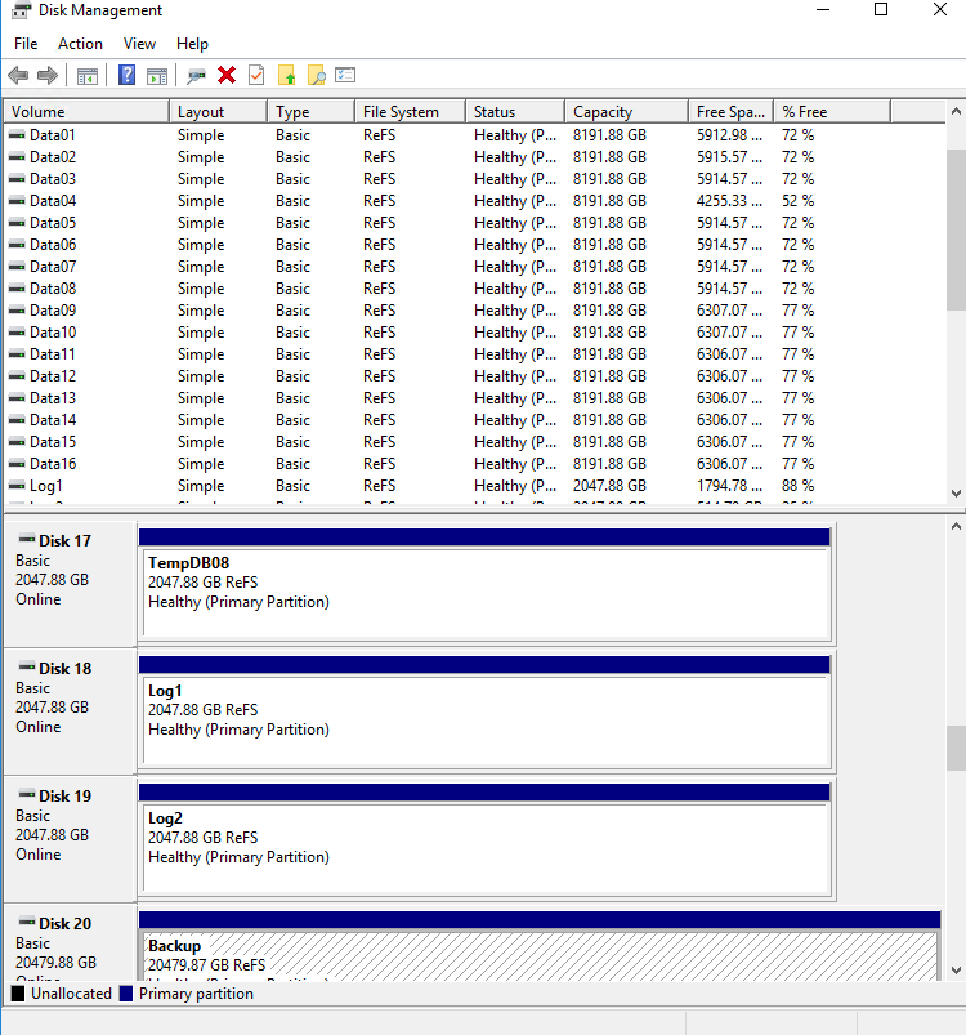

After zoning is completed, VMAX and LUN switches are displayed in Windows Disk Management.

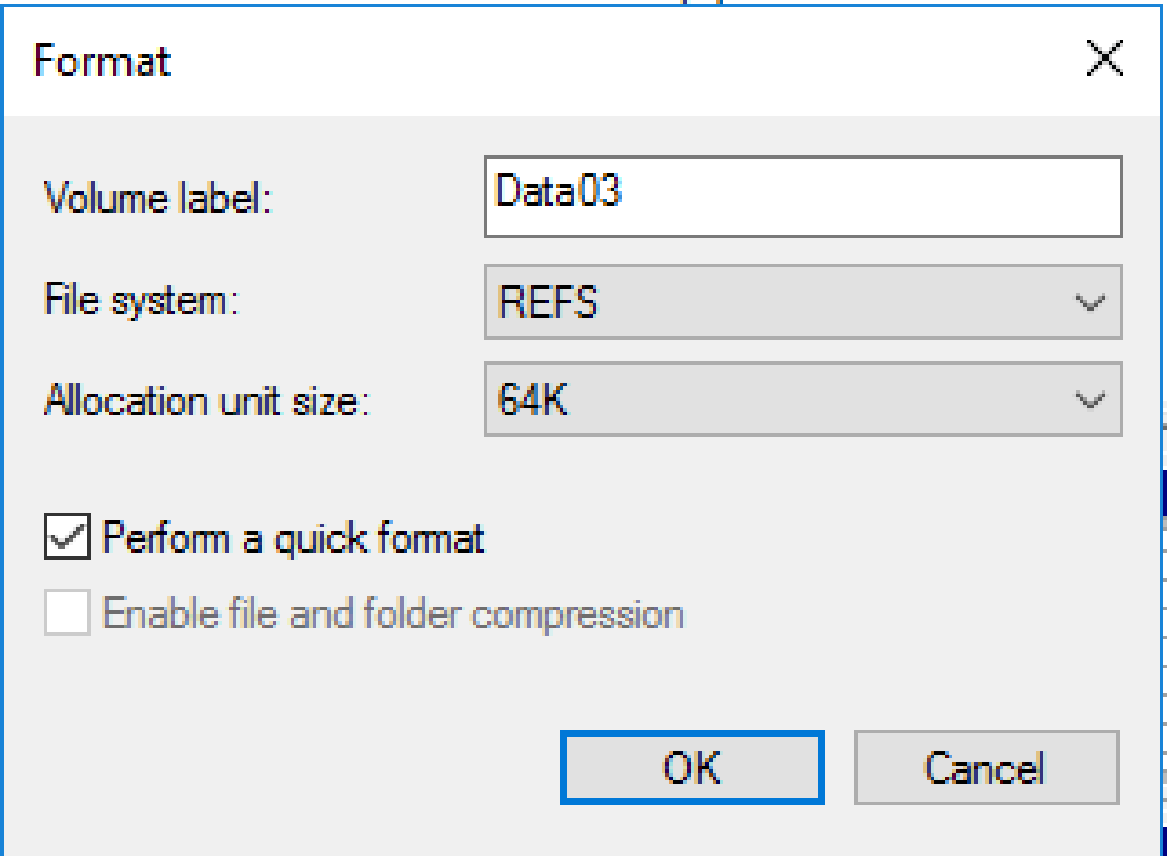

If all disks are formatted correctly, Windows Disk Management displays the following list:

There are 34 LUNs in total:

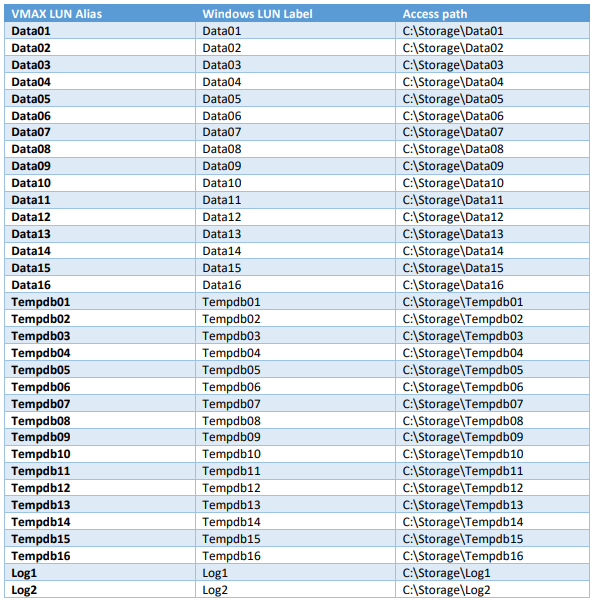

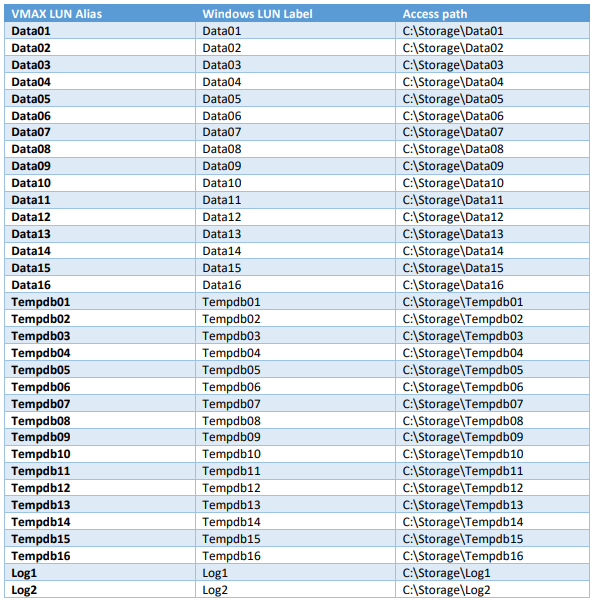

For DWFT reference architectures, we with Dell EMC recommend using mount points for volumes instead of drive letters. We recommend that you assign the appropriate volume and mount point names to simplify troubleshooting, and performance analysis. Ideally, mount point names should be assigned in such a way as to simplify the identification of the VMAX volume for a given Windows volume.

The following table shows the volume labels and access paths used for the reference configuration:

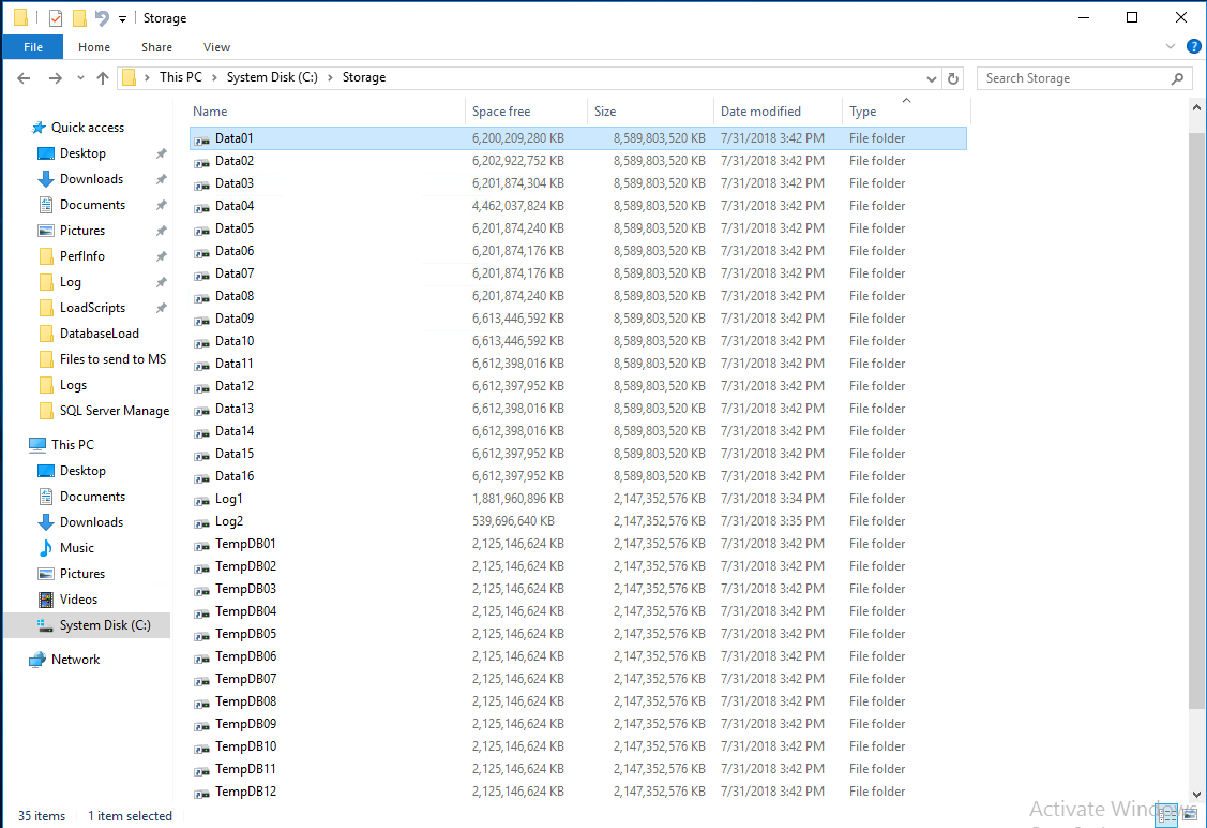

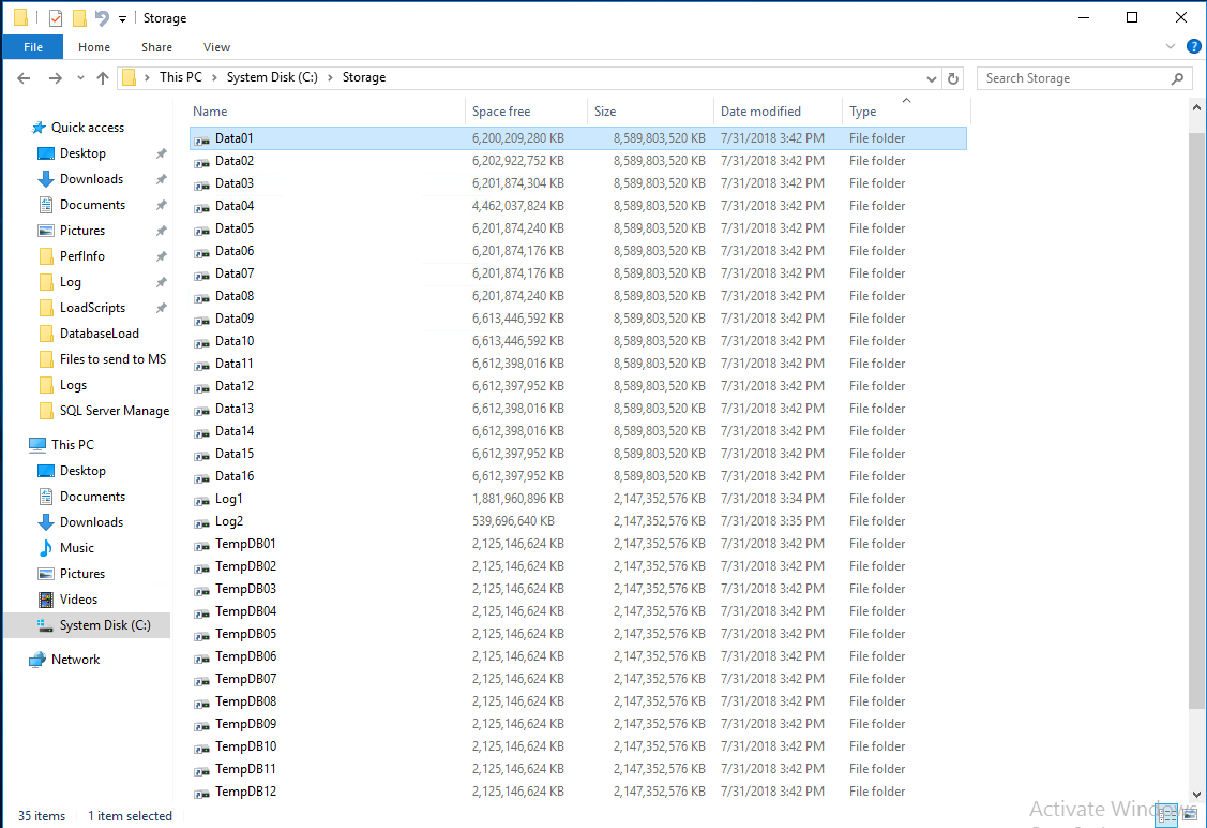

After all the LUNs are ready, the C: \ Storage directory looks like this:

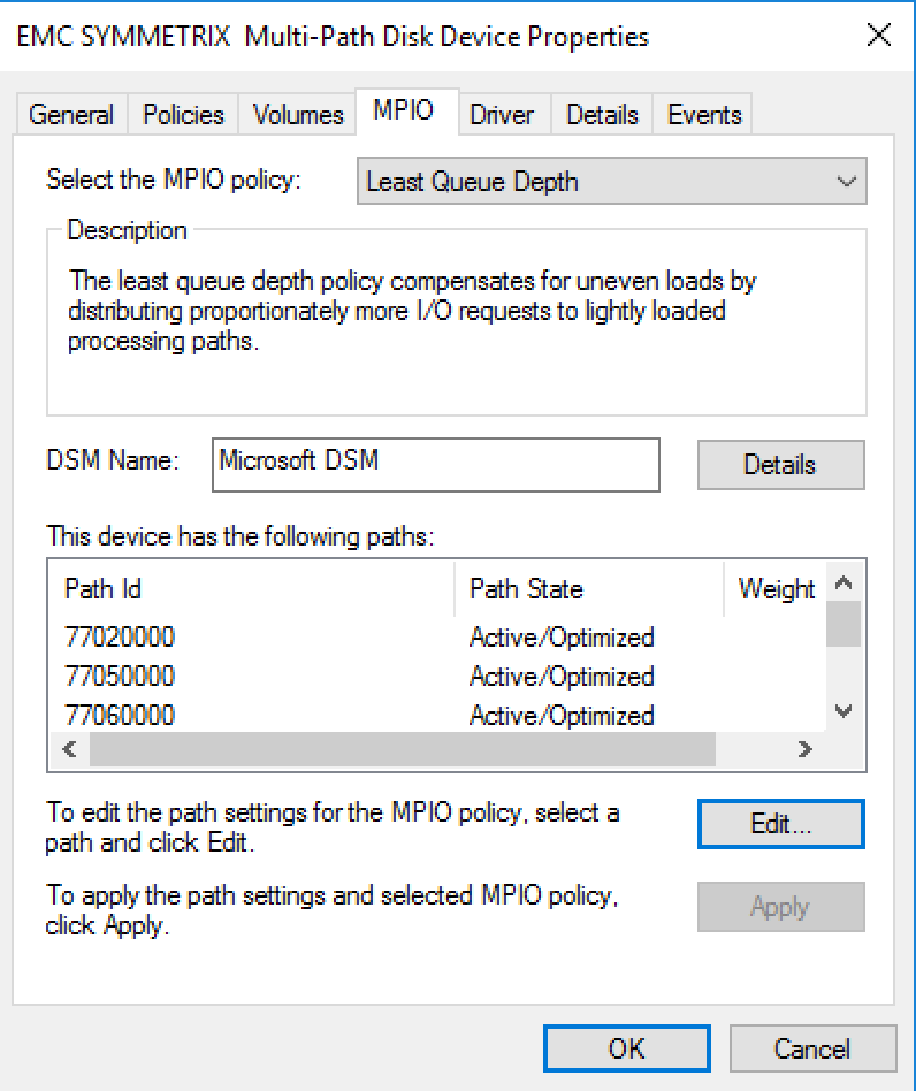

The MPIO policy for all volumes is set in the “Least Queue Dept” section.

Windows Defender is a standard antivirus and antispam component. To prevent SQL Server data and log files from being scanned (for performance), you need to add the following exceptions.

Folders in one server:

Folders in a high availability (HA) solution:

File types:

Installation is performed mainly using default settings. Some exceptions are listed below. During the installation of SQL Server 2017, the “Perform Volume Maintenance Task” option is selected.

The Tempdb database is configured to use 16 files of the same size. Tempdb data files are located on 16 volumes. The log file of transactions tempdb is placed on a disk where logs are kept. Auto zoom enabled.

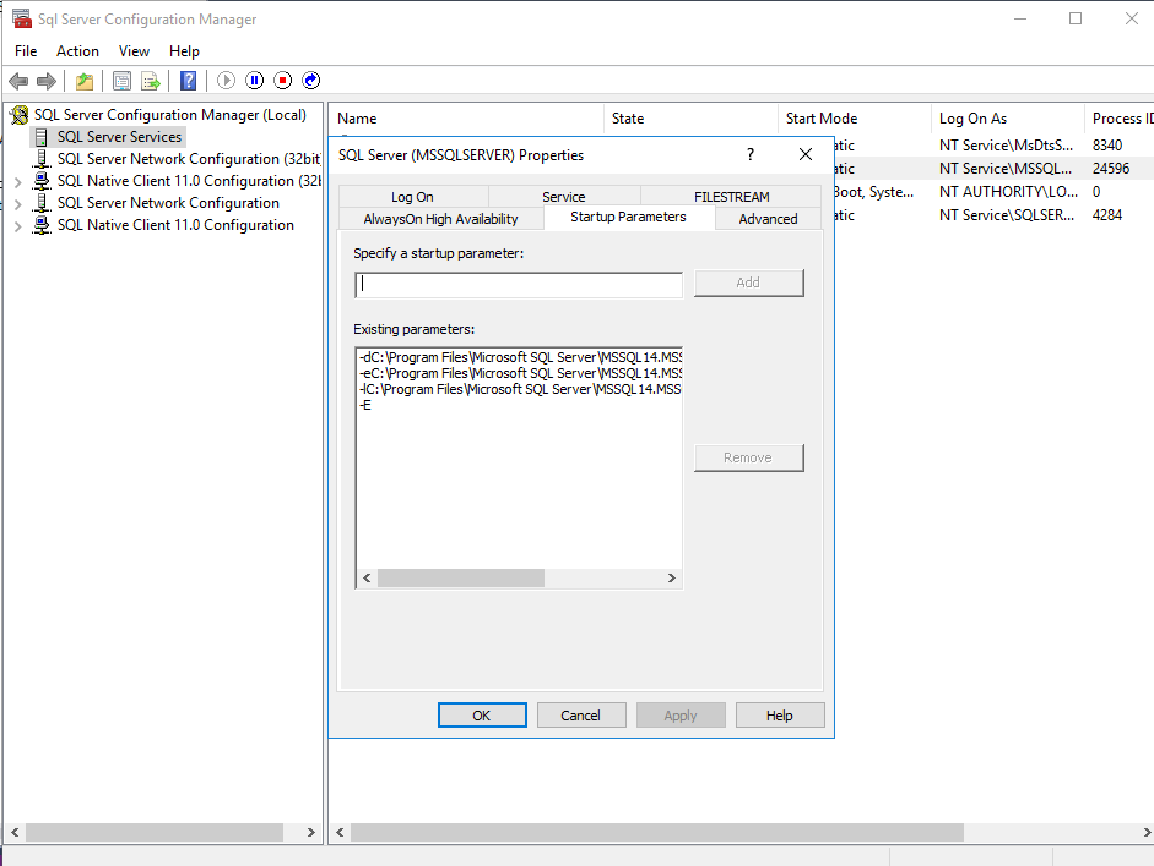

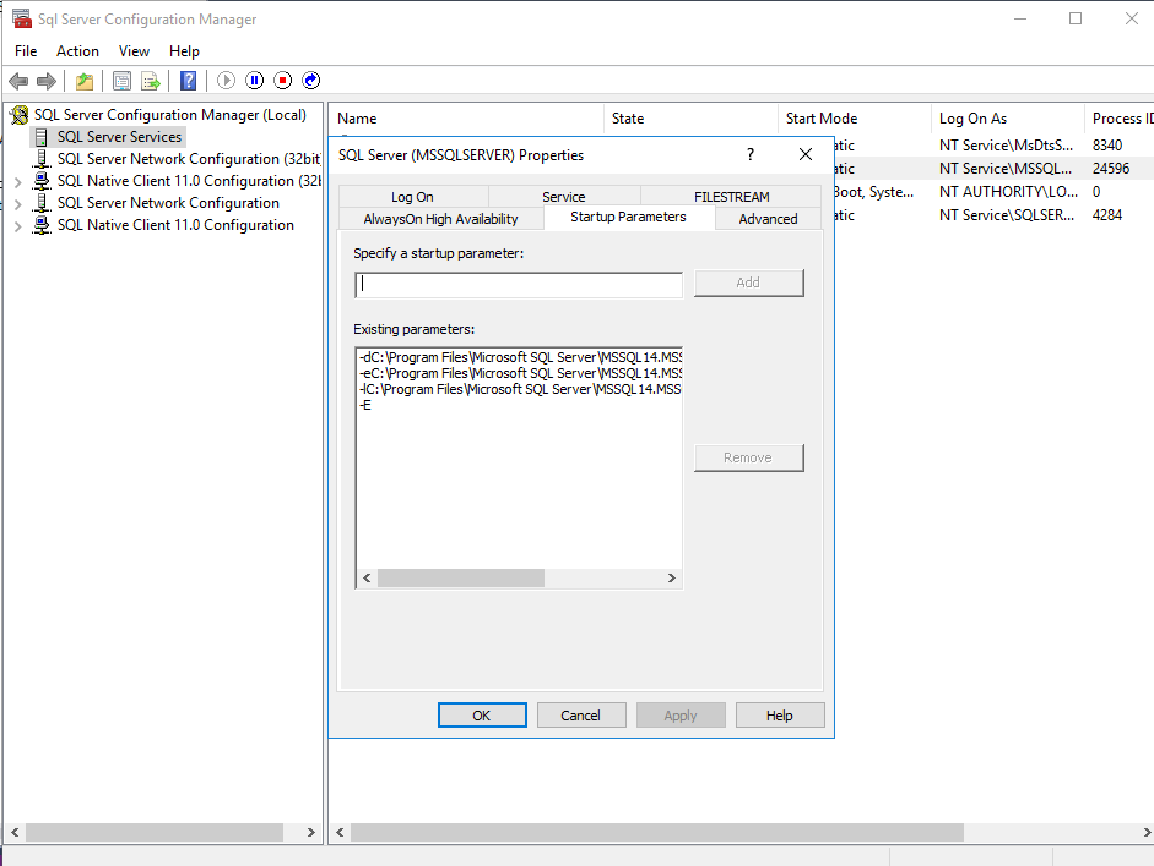

SQL Server 2017 automatically sets the trace flags -T1117 and -T1118, so you no longer need to add them to the instance startup parameters. If you do not use the trace flag -T834, the best performance is achieved. Microsoft recommends that you do not use this flag when using clustered column storage indexes.

The only launch parameter is the -E flag:

Additional information on the topic can be found in the Microsoft DBCC TRACEON - Trace Flags and Database Engine Service Startup Options articles .

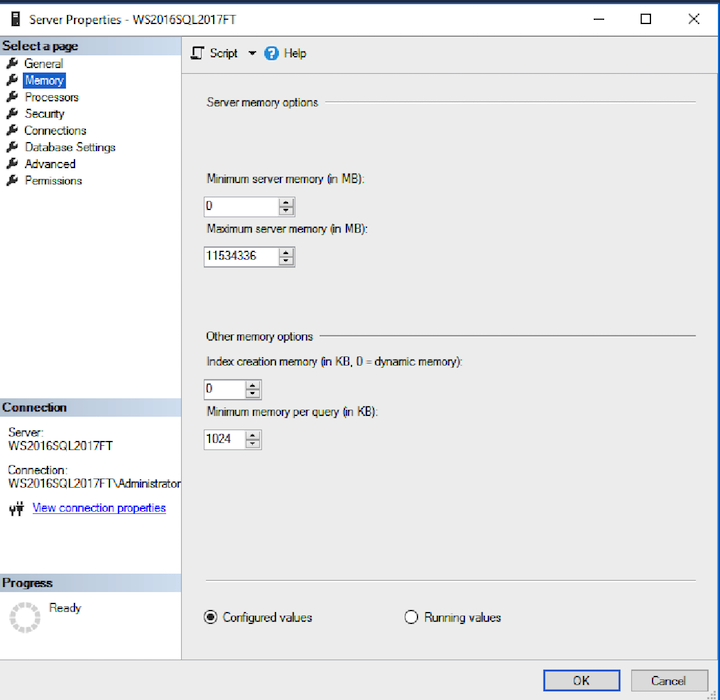

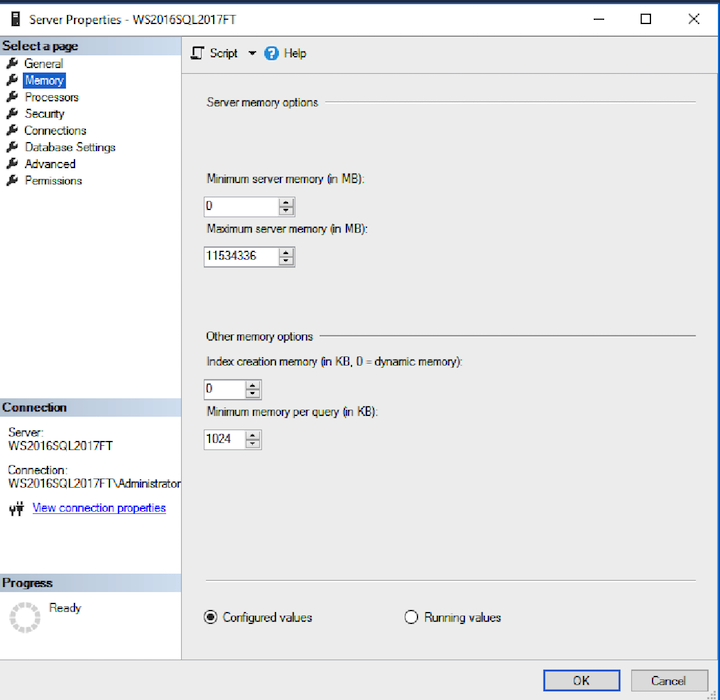

The maximum server memory for this reference architecture should be set to 11.534.336 MB or 11.264 GB, where 1.024 GB is allocated to the operating system. If additional applications share a server, you need to adjust the amount of memory available for the operating system.

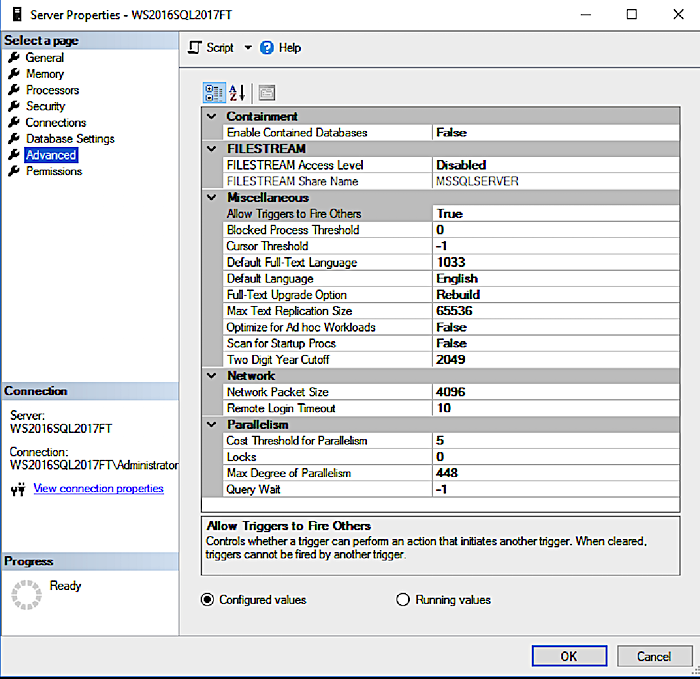

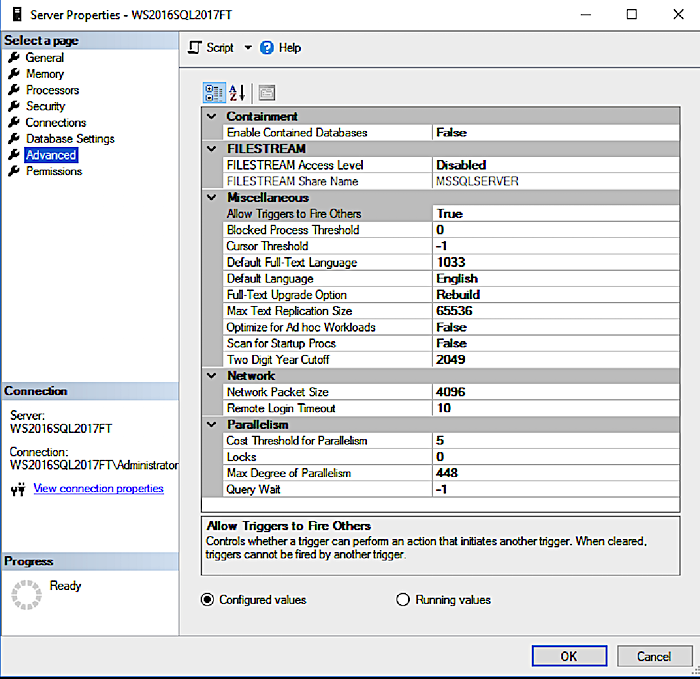

The maximum degree of parallelism is set at 448, which corresponds to the number of logical cores available on the server.

Depending on the type of workload used — the storage with the largest number of rows (RS) or cluster column storage (CS) —You need to properly configure the Resource Governor settings.

For row storage, the resource control parameter used to limit the maximum amount of memory is set to 12 percent.

For column storage, the resource governor parameter used to limit the maximum amount of memory is set to 25 percent.

The data warehouse database was configured to use several groups of files, each of which contains 16 files distributed evenly across sixteen data volumes. All files allowed to automatically grow. The file groups have been configured with the AUTOGROW_ALL_FILES parameter to ensure that all files in this file group remain the same.

HA Reference Architecture uses Windows Failover Clustering to provide high availability. When configuring a Windows failover cluster, there are additional storage issues. The recommended configuration allows all cluster votes to be allowed to vote for quorum and use the disk.

An additional volume must be created and configured as a witness disk. Dell EMC recommends using 2GB of disk space. All volumes must be mapped to each cluster node, configured as a cluster resource, and added to the SQL Server cluster resource group.

In conclusion, we highlight probably the most important advantage of the configuration - the ability to connect a large number of HBA adapters. This allows for a balanced increase in storage modules with them.

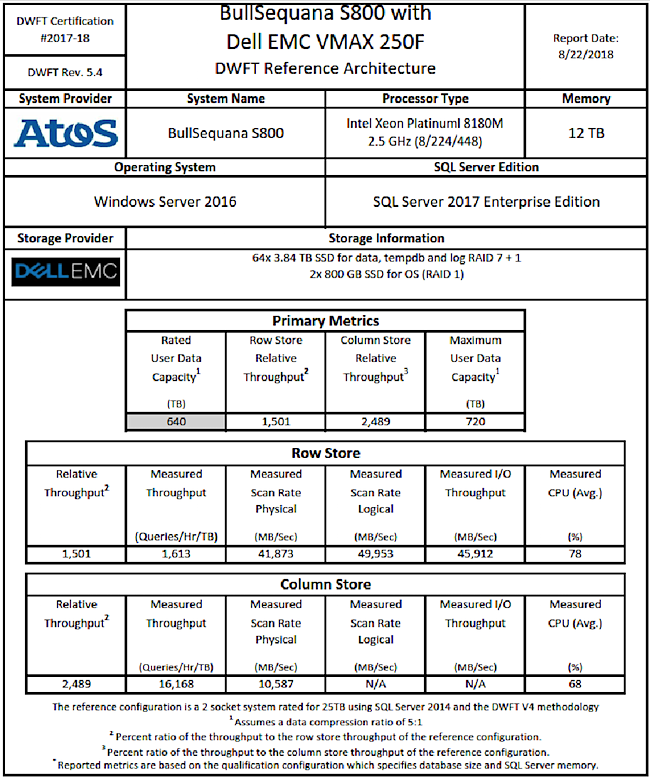

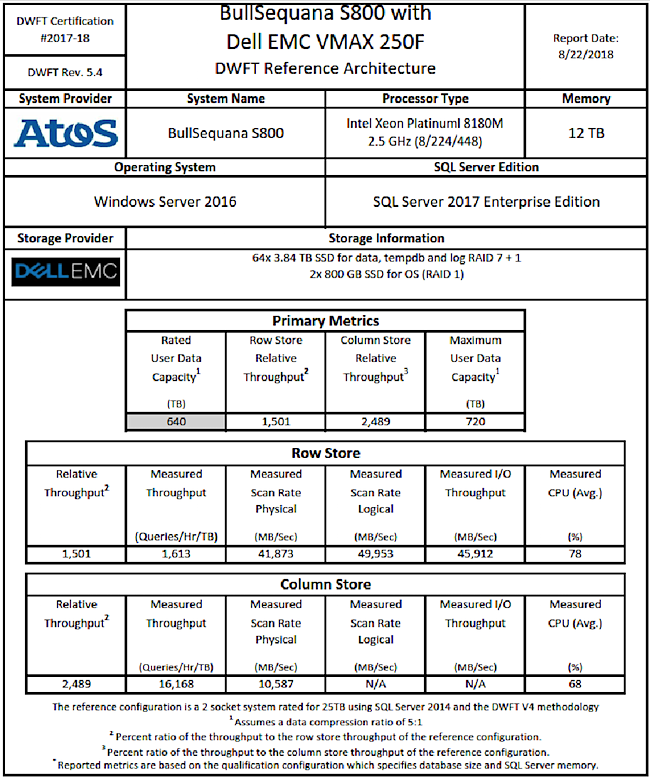

And this is the certificate for the ATOS BullSequana S800 and Dell EMC VMAX 250F configuration. You can see test metrics in it.

In the near future, we plan to translate solutions to the architecture of Cascade Lake. This will lead to the beginning of a new certification, which will complement the current one. We are pleased to answer your questions about certification and our architecture in the comments.

Such a program for its SQL Server was developed by Microsoft - SQL Server Fast Track (DWFT). It is used to certify storage configurations — those that meet the requirements of the workload and can be implemented with less risk, cost, and complexity. It sounds great, but it's still interesting to evaluate these criteria in practice. To do this, we will analyze in detail one of the configurations certified by SQL Server Data Warehouse Fast Track.

For a start - a little more about DWFT. This program includes not only the certification of reference architectures from system suppliers, but also a number of best practice guides — if you want to put together something of your own. Microsoft updates the program for the development of new features, and on the basis of customer feedback.

With the DWFT storage, you can know for sure that all SQL Server features will be available. Column storage technologies increase productivity in transactional and analytical workloads. Both traditional structured relational and unstructured big data will be supported — in Hadoop, Spark, or Azure Data Lake. With the SQL Server “PolyBase” feature, you can merge big data into SQL Server Universe, query both relational and unstructured data, merging it together without having to move it.

A general list of certified architectures is at the bottom of this page . Next, we present one of them.

"Reference" architecture

Now - to the analysis of one of the architectures certified by DWFT. It is based on our BullSequana S400 and S800 servers and the Dell EMC VMAX storage. Storage capacity can be increased to 4 PB, if you scale the BullSequana S server to 32 processors.

In order to ensure high availability of the database, failover clustering of Windows is additionally recommended. That is, the use of at least two servers. Through the Microsoft clustering services, one database server is configured as the primary (active) server, and the second as the secondary (passive) server. The passive server must have the same configuration as the main server.

List and characteristics of infrastructure components

The general scheme of the two configurations

Hardware components

BullSequana S800 server

The BullSequana S800 server is an eight-processor server with up to 12 TB of memory, advanced I / O capabilities, and the addition of a module with SSD / HDD / NVMe storage or with NVidia Tesla GPUs.

The BullSequana S line is available in versions from S200 with two processors to S3200 with 32 processors. Line scale step - two processors. For the SQL Server 2017 DWFT architecture, the S200, S400 and S800 are equally certified.

Host Bus Adapter Emulex LPe31002-M6 16 Gbps

Emulex Gen 6 FC adapters with a dynamic multicore architecture, compared with previous generations of devices, offer higher performance, lower latency, improved diagnostics and manageability. Provides speeds of up to 12,800 MB / s (two 32GFC ports or four 16GFC ports), a throughput of 1.6 million IOPS per adapter. The four-port version of the LPe32004 provides up to 3.2 million IOPS per adapter.

Brocade 6510 Switch

The Brocade 6510 switch from Broadcom is a 1U, 48-port Fiber Channel switch that can be installed in a server rack.

- Fiber Channel Performance: 16 Gbps

- Up to 48 ports providing aggregate bandwidth of 768 Gbps

- Frame trunking at speeds up to 128 Gbps

- Power Consumption: 14 W / Gbps

Dell EMC VMAX 250F

VMAX All Flash is focused on petabyte data volumes and large transaction processing. VMAX All Flash is scalable and allows you to combine hundreds of multi-core Intel processors for dynamic mixed workloads. The main element of VMAX All Flash is V-Brick — up to eight V-Bricks can be combined into a single system with fully separated connectivity, processing and bandwidth capabilities. Each V-Brick supports up to 72 processor cores for performance scaling — up to 576 cores per array. Flash Capacity Packs modules are used for scaling.

The storage system must clearly comply with the requirements for high-precision real-time transaction processing (OLTP), virtualized applications, and Oracle and SQL databases. Millions of IOPS, petabyte throughput and predicted performance (response time of 350 µs) are maintained.

Finally, the architecture should have enhanced error isolation, reliable data integrity checks, and proven, reliable hardware and software updates. Another requirement: accessibility for 24x7 operations using SRDF software.

How is the storage connected

Configuration of VMAX 250F (All Flash):

- 2 vBrick with 2 TB of cache memory per block.

- 32 * 16 Gbit / s host FC ports

- 64 * 3.84 TB flash (Raid5-7 + 1) + reserve

VMAX vBricks Appearance in a Rack:

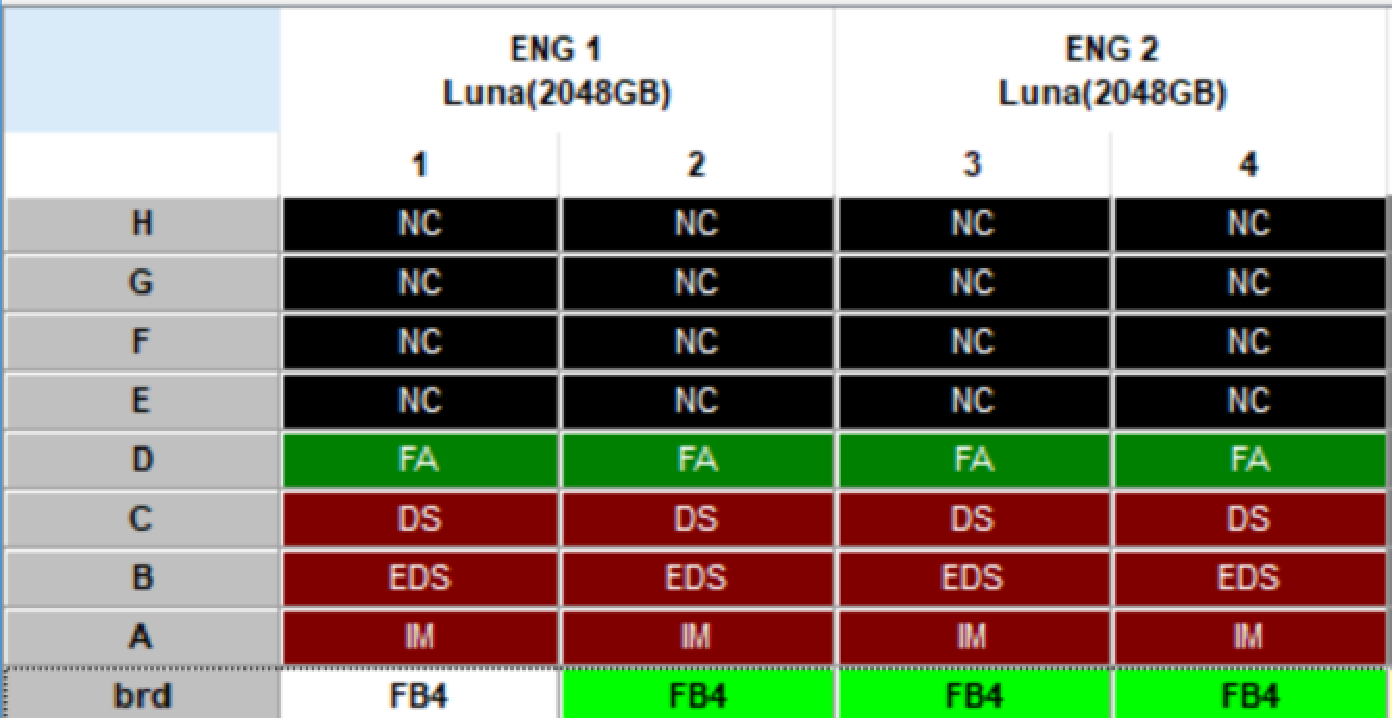

Port Arrangement: The

logical array configuration in a certified architecture is built according to the Dell EMC configuration and best performance practices.

- HyperMaxOS Version: 5977.1131.1131

- Encryption: enabled

- Compression: disabled

- System Performance Profile: Basic

Main distribution:

LUN configuration

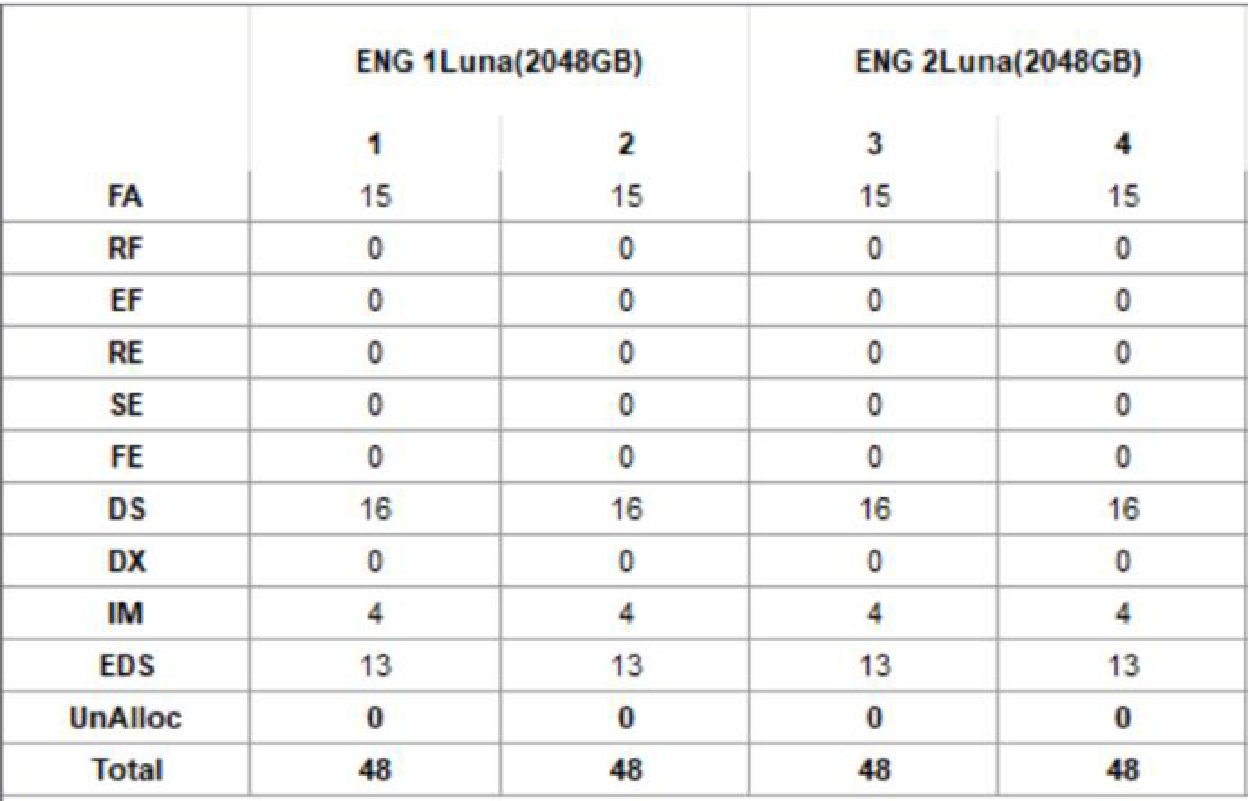

All LUNs are configured as THIN LUNs in a virtual pool created on 64 drives. Data is distributed across all flash drives on both vBricks. In total, 35 LUNs are defined on the Dell EMC VMAX:

- 16 LUN size 8 TB for user data (128 TB total)

- 16 LUN or 2 TB for tempdb (32 TB total)

- 2 LUN size 2 TB for the magazine (4 TB in total)

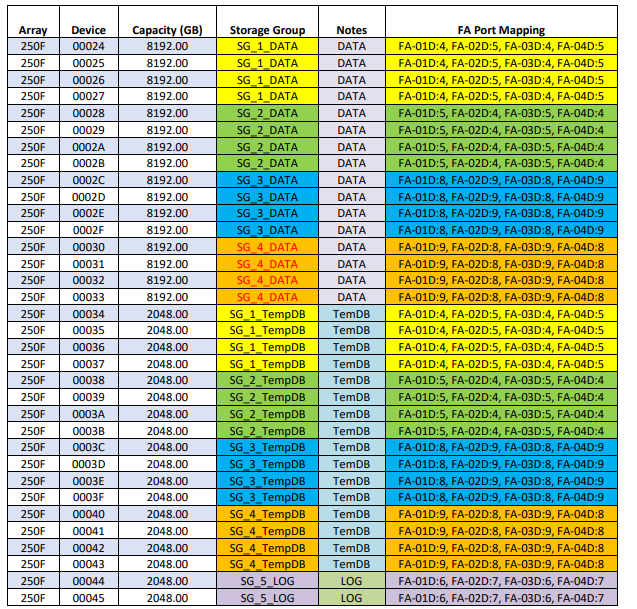

Front Fiber Channel (FC) ports are configured to use four domains with failures in virtual port mode. Each port is one to one connected to a port on the VMAX, which serves only one data LUN and one Tempdb LUN. For each of them, there is a LUN to separate read and write operations on the LUN (when performing GROUP BY or ORDER BY, data is first written to Tempdb before being used in another part of the query or presented to the user). Each LUN is mapped to four FA ports and is available in four ways.

Mapping ports:

VMAX Storage Groups (SG)

The following storage groups have been created:

Read and write cache

The VMAX cache is global, dynamically used for reading and writing. The default settings are used to limit system write waiting. Since the system uses only one application, there is no need to create cache sections.

Connect to server and MPIO

Windows Server 2016 used its own MPIO. Its alternative is PowerPath software for Dell EMC applications.

Cable laying

The top ports of the Brocade switches are used to connect the BullSequana S800 server. Each even-numbered Emulex HBA port is connected to the upper ports to the left of the Brocade switch. Each of the Outbus Emulex HBA ports is connected to the upper ports to the left of the Brocade_2 switch. Bottom ports are used only to connect FC Dell EMC VMAX ports.

Single Server Configuration

The following diagram shows the cabling between the BullSequana S800 and the Dell EMC VMAX 250F. The placement of the HBA may differ depending on the placement of the HBA modules.

Connecting cables in high available server configuration

The diagram below shows how to connect two BullSequana S800 servers in a high-availability configuration and the Dell EMC VMAX 250F. Again, the placement of the HBA may differ depending on the placement of the HBA modules.

Above, on the left side of the Brocade switch, the left half of the ports for the first S800 server is highlighted, and on the right side of the picture, the right ports are highlighted, and the second S800 server is connected to them.

Server Configuration BullSequana S800

System BIOS

All parameters, except the BMC network configuration, were left at the factory default settings. The “Logic Processor” parameter in the “Processor Parameters” section remains on by default. This activates Intel Hyper-Threading Technology, which maximizes the number of logical processors available for SQL Server.

Emulex LPe31002-M6 16 Gbps

All Emulex HBAs are available through the Emulex OneCommand Manager. The firmware and driver version used during Microsoft DWH Fast Track certification is 12.0.193.13.

It is important that all HBAs have the same firmware. The latest firmware can be downloaded from the Broadcom website. Firmware updates can be performed using the “Download Firmware” button in the Emulex OneCommand Manager. Changing the driver and / or firmware may require rebooting the server.

With the exception of the QueryDept parameter, which was changed from 32 to 64, all other host and HBA parameters remained by default.

Windows Server 2016 Configuration

Installation

Windows installation was performed with default settings. After installation, the Windows MPIO feature was activated, as shown in the figure below.

After installing the MPIO function, Windows must be restarted.

Installing drivers and packages

It is important to have drivers and packages on USB storage, since they are not included in the Windows driver catalog included on the installation DVD (network adapters are newer than Windows Server 2016). After installing Windows and MPIO, the following drivers and packages were installed (in the specified order):

- Intel INF Chipset version 10.1.17711.8088_PV or later (requires reboot)

- MegaRAID driver for Windows 2016 version 6.14-6.714.05.00-WHQL (reboot required)

- PROWinx64 for Intel XL7xx Family Version 23_2 or Newer

- OneInstall-Setep-12.0.193.18.exe or later

Power setting

To maximize performance, the server was configured to use a high performance plan.

Lock pages in memory

So that the Windows operating system did not send data pages to virtual memory on disk and SQL Server could use the process for storing data in physical (RAM) memory, the Lock pages in memory option for the SQL Server service account was enabled. You must restart SQL Server for this option to take effect.

Windows drives

After zoning is completed, VMAX and LUN switches are displayed in Windows Disk Management.

If all disks are formatted correctly, Windows Disk Management displays the following list:

There are 34 LUNs in total:

- 16 LUN size 8 TB for user data (128 TB total)

- 16 LUN or 2 TB for tempdb (32 TB total)

- 2 LUN size 2 TB for the magazine (4 TB in total)

For DWFT reference architectures, we with Dell EMC recommend using mount points for volumes instead of drive letters. We recommend that you assign the appropriate volume and mount point names to simplify troubleshooting, and performance analysis. Ideally, mount point names should be assigned in such a way as to simplify the identification of the VMAX volume for a given Windows volume.

The following table shows the volume labels and access paths used for the reference configuration:

After all the LUNs are ready, the C: \ Storage directory looks like this:

MPIO

The MPIO policy for all volumes is set in the “Least Queue Dept” section.

Windows Defender Configuration

Windows Defender is a standard antivirus and antispam component. To prevent SQL Server data and log files from being scanned (for performance), you need to add the following exceptions.

Folders in one server:

- C: \ Program Files (x86) \ Microsoft SQL Server

- C: \ Program Files \ Microsoft SQL Server

- C: \ Storage (single server solution)

Folders in a high availability (HA) solution:

- C: \ Program Files (x86) \ Microsoft SQL Server

- C: \ Program Files \ Microsoft SQL Server

- C: \ ClusterStorage (high available solution)

- C: \ Windows \ Cluster

- Quorum drive

- MSDTC Drive

File types:

- .ldf

- .mdf

- .ndf

SQL Server 2017 Enterprise Edition Configuration

Installation is performed mainly using default settings. Some exceptions are listed below. During the installation of SQL Server 2017, the “Perform Volume Maintenance Task” option is selected.

Tempdb configuration

The Tempdb database is configured to use 16 files of the same size. Tempdb data files are located on 16 volumes. The log file of transactions tempdb is placed on a disk where logs are kept. Auto zoom enabled.

Startup options for a SQL Server instance

SQL Server 2017 automatically sets the trace flags -T1117 and -T1118, so you no longer need to add them to the instance startup parameters. If you do not use the trace flag -T834, the best performance is achieved. Microsoft recommends that you do not use this flag when using clustered column storage indexes.

The only launch parameter is the -E flag:

Additional information on the topic can be found in the Microsoft DBCC TRACEON - Trace Flags and Database Engine Service Startup Options articles .

Maximum SQL Server Memory

The maximum server memory for this reference architecture should be set to 11.534.336 MB or 11.264 GB, where 1.024 GB is allocated to the operating system. If additional applications share a server, you need to adjust the amount of memory available for the operating system.

Maximum degree of parallelism (MAXDOP)

The maximum degree of parallelism is set at 448, which corresponds to the number of logical cores available on the server.

Resource management

Depending on the type of workload used — the storage with the largest number of rows (RS) or cluster column storage (CS) —You need to properly configure the Resource Governor settings.

For row storage, the resource control parameter used to limit the maximum amount of memory is set to 12 percent.

ALTER WORKLOAD GROUP [default] WITH (request_max_memory_grant_percent=12);

ALTER RESOURCE GOVERNOR RECONFIGURE; For column storage, the resource governor parameter used to limit the maximum amount of memory is set to 25 percent.

ALTER WORKLOAD GROUP [default] WITH (request_max_memory_grant_percent=25);

ALTER RESOURCE GOVERNOR RECONFIGURE; Database Setup

The data warehouse database was configured to use several groups of files, each of which contains 16 files distributed evenly across sixteen data volumes. All files allowed to automatically grow. The file groups have been configured with the AUTOGROW_ALL_FILES parameter to ensure that all files in this file group remain the same.

ALTER DATABASE <database name>

MODIFY FILEGROUP <file group name> AUTOGROW_ALL_FILES;Add-ons for high availability reference architecture (HA)

HA Reference Architecture uses Windows Failover Clustering to provide high availability. When configuring a Windows failover cluster, there are additional storage issues. The recommended configuration allows all cluster votes to be allowed to vote for quorum and use the disk.

An additional volume must be created and configured as a witness disk. Dell EMC recommends using 2GB of disk space. All volumes must be mapped to each cluster node, configured as a cluster resource, and added to the SQL Server cluster resource group.

Conclusion

In conclusion, we highlight probably the most important advantage of the configuration - the ability to connect a large number of HBA adapters. This allows for a balanced increase in storage modules with them.

And this is the certificate for the ATOS BullSequana S800 and Dell EMC VMAX 250F configuration. You can see test metrics in it.

In the near future, we plan to translate solutions to the architecture of Cascade Lake. This will lead to the beginning of a new certification, which will complement the current one. We are pleased to answer your questions about certification and our architecture in the comments.