Introducing GStreamer: Introduction


Disclaimer

This article is aimed at beginning programmers who are not familiar with GStreamer and want to get to know it. Experienced developers are unlikely to find anything new here.

Preamble

Many of you have probably heard of GStreamer. Or you have seen Ubuntu and similar distributions offer to install packages with “gstreamer” in their names the first time you tried to play an MP3 or another file in a non-free media format. That library is the subject of this article.

Introduction

GStreamer is a powerful framework for building multimedia applications. It adopted the idea of a "video pipeline" from the Oregon Graduate Institute and also borrowed some ideas from DirectShow. The framework lets you build applications of any complexity. These range from a simple console player (you can play a file straight from the terminal without writing any code) to full-fledged audio/video players, multimedia editors and other applications.

GStreamer has a plug-in architecture and ships with a very large set of plug-ins that cover the vast majority of what multimedia developers need.

Architecture

GStreamer has several main components:

- Elements

- Pads

- Bin and pipeline containers

And now in more detail:

Elements

Almost everything in GStreamer is an element. This includes ordinary stream sources (filesrc, alsasrc, etc.), stream handlers (demultiplexers, decoders, filters, etc.) and sinks (alsasink, fakesink, filesink, etc.).

Pads

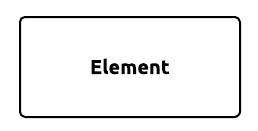

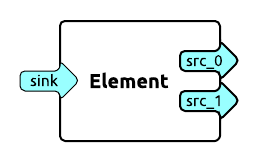

A pad is the point at which an element connects to another element. Put simply, pads are the element's inputs and outputs. An input pad is usually called "sink" and an output pad "src".

An element always has at least one pad. For example, filesrc, the element that reads data from the file system, has a single pad named “src”. It has no input; it only turns the contents of a file into a stream that other elements can work with. Likewise, alsasink has a single pad named “sink”, because it only receives a stream and sends it to the sound card through ALSA. Elements in the "filter" category (those that transform the stream in some way) have two or more pads. For example, the volume element receives the stream on its “sink” pad and changes its volume. It then passes the stream on through its “src” pad. Some elements also have several inputs or several outputs.

Containers

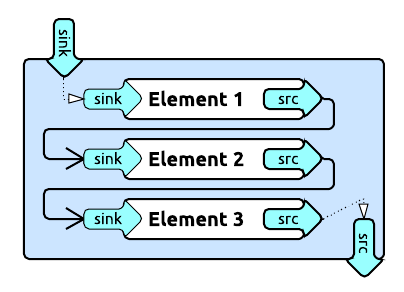

Elements live out their life cycles inside containers. A container collects the messages posted by the elements it holds and manages their states. There are two kinds of containers:

- Bin

- Pipeline

A pipeline is the top-level container. It synchronizes its elements by providing the clock they share, and it propagates state changes. For example, when the pipeline is set to the PAUSED state, that state is automatically passed on to every element inside it. A pipeline is a specialized bin.

A bin is a simple container that manages the messages of the elements inside it. Bins are usually used to group elements that together perform a single task. For example, decodebin is an element for decoding a stream. It automatically picks the elements needed to process the stream depending on the data type (vorbisdec, theoradec, etc.), sparing the developer that extra work.

There are also complete, self-contained containers such as playbin. Playbin is essentially a full-fledged player that includes all the elements needed to play audio and video. However, as usual, that convenience comes at the cost of flexibility.

How it works

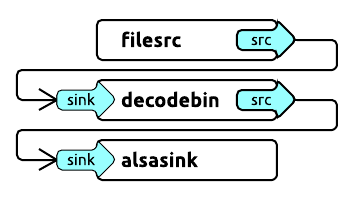

Consider an example diagram of a primitive player. The simplest player scheme should look something like this:

Let's walk through it. The filesrc element reads a file from the file system and sends the stream to the decodebin container. decodebin decodes the stream into raw (uncompressed) audio and passes the result on to the alsasink element, which sends the audio to the sound card. To try this pipeline, run:

```shell
gst-launch-1.0 filesrc location=/path/to/file.ogg ! decodebin ! alsasink
```

If you hear your music, everything works.

Notes

- On Debian/Ubuntu, the gst-launch-1.0 utility is provided by the gstreamer1.0-tools package.

- If you use OSS, JACK or another audio system instead of ALSA, this pipeline may not work. In that case, replace alsasink with autoaudiosink, which selects a suitable audio output element by itself.

What's next?

In upcoming articles I plan to look at various elements and their capabilities and to give examples of real-world uses of GStreamer.

