Object Storage for the OpenStack Cloud: Comparing Swift and Ceph

Author: Dmitry Ukov

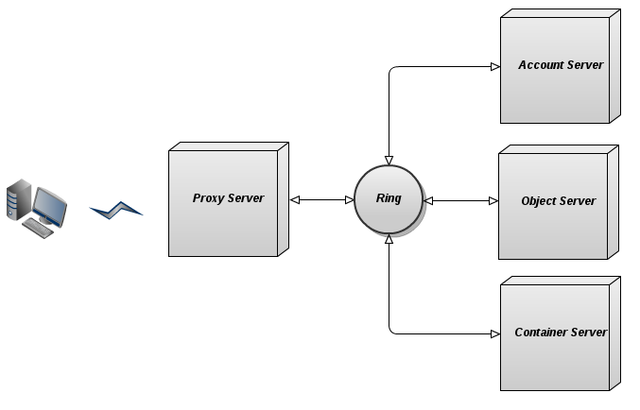

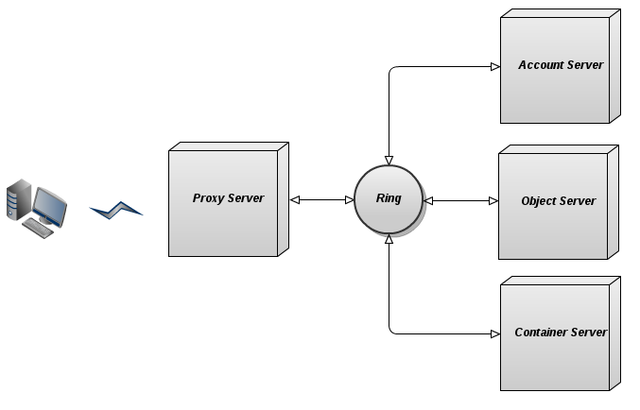

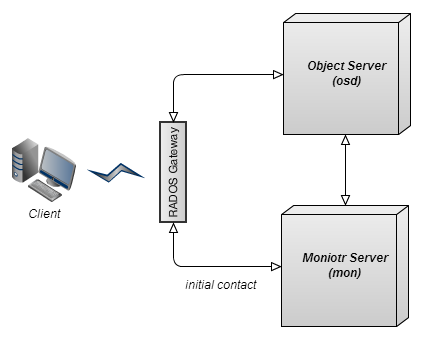

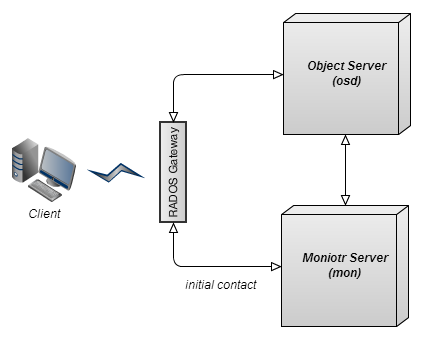

Overview

Many people confuse object storage with block storage, such as storage built on iSCSI or Fibre Channel (a Storage Area Network, SAN), although the two differ in many ways. On a SAN, the system sees only block devices (on Linux, for example, /dev/sdb), whereas object storage can be accessed only through a specialized client application (for example, the box.com client).

Object storage is an important part of cloud infrastructure. It is mainly used to store virtual machine images and user files (for example, various kinds of backups, documents, and images). Its main advantage is a very low implementation cost compared with enterprise-class storage, while still providing scalability and data redundancy. In this article, we compare the two most common object storage implementations, both of which OpenStack provides an interface to.

OpenStack Swift

Swift Network Architecture

OpenStack Object Storage (Swift) provides redundant, scalable, distributed object storage built on clusters of standard (commodity) servers. “Distributed” here means that each piece of data is replicated across a cluster of storage nodes. The number of replicas is configurable, but it should be at least three for production deployments.

Objects in Swift are accessed through a REST interface and can be stored, retrieved, or updated on demand. The object store can easily be spread across a large number of servers.

The access path to each object consists of three elements:

/account/container/object

The object element is the name that identifies the object within its container. Accounts and containers provide a way to group objects; accounts and containers cannot be nested.
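To make the three-part path concrete, here is a minimal sketch that splits a path into account, container, and object, and builds a request URL. It relies on two facts about Swift: everything after the container is the object name (object names may contain "/" and only look hierarchical), and the real HTTP API puts a version prefix, `/v1`, in front of the account. The helper names and the `proxy_base` host are hypothetical; in practice the authentication service hands the client a storage URL that already contains the `/v1/<account>` part.

```python
from urllib.parse import quote


def split_path(path: str) -> tuple[str, str, str]:
    """Split '/account/container/object' into its three elements.

    Only the first two slashes are separators, so the object name
    may itself contain '/' (there are no nested containers).
    """
    account, container, obj = path.lstrip("/").split("/", 2)
    return account, container, obj


def object_url(proxy_base: str, account: str, container: str, obj: str) -> str:
    """Build a Swift object URL; proxy_base is a hypothetical proxy endpoint."""
    return (
        f"{proxy_base.rstrip('/')}/v1/"
        f"{quote(account, safe='')}/{quote(container, safe='')}/{quote(obj)}"
    )
```

For example, `split_path("/AUTH_test/photos/2012/cat.jpg")` yields the account `AUTH_test`, the container `photos`, and the object `2012/cat.jpg`.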

Swift consists of several components: account processing servers, container processing servers, and object processing servers, which handle storage, replication, and container and account management. In addition, a separate machine called the proxy server exposes the Swift API to users and streams objects to and from clients on request.

Account processing servers return the list of containers for a given account. Container processing servers return the list of objects in a given container. Object processing servers simply store or return the object itself, given its full path.

Rings

Because user data is distributed across many machines, it is important to keep track of where it is located. Swift does this with internal data structures called “rings”. A copy of the rings is kept on every node of the Swift cluster (both storage nodes and proxy servers). This way, Swift avoids a problem common to many distributed file systems that rely on a centralized metadata server: that server becomes a bottleneck for metadata lookups. The rings do not need to be updated to store or delete a single object, because they describe cluster membership rather than acting as a central map of every object's location. This benefits I/O by significantly reducing access latency.

There are separate rings for account databases, container databases, and individual objects, but all rings work the same way. In short, for a given account, container, or object name, the ring returns where it is physically located on the storage nodes. Technically, this is done using consistent hashing. A detailed explanation of the ring algorithm can be found in our blog and at this link.
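The lookup can be illustrated with a toy ring, loosely modeled on the partition scheme Swift uses: the MD5 hash of the path is reduced to its top `part_power` bits to obtain a partition, and a static table built in advance maps each (replica, partition) pair to a device. This is a simplified sketch under stated assumptions: the round-robin table stands in for the real ring builder, which balances partitions by device weight and zones, and real Swift also mixes a cluster-wide secret prefix/suffix into the hash. `ToyRing` and the device names are hypothetical.

```python
import hashlib
import struct


class ToyRing:
    """Toy ring: 2**part_power partitions, each assigned to `replicas` devices."""

    def __init__(self, devices, part_power=8, replicas=3):
        if replicas > len(devices):
            raise ValueError("need at least as many devices as replicas")
        self.devices = list(devices)
        self.part_shift = 32 - part_power
        partitions = 2 ** part_power
        # Static table built once (the "ring builder" step). Here, replica r of
        # partition p simply lives on device (p + r) % len(devices), which
        # guarantees distinct devices for the replicas of one partition.
        self.replica2part2dev = [
            [(p + r) % len(self.devices) for p in range(partitions)]
            for r in range(replicas)
        ]

    def get_partition(self, account, container=None, obj=None):
        path = "/" + "/".join(p for p in (account, container, obj) if p)
        digest = hashlib.md5(path.encode("utf-8")).digest()
        # Keep the top part_power bits of the first 4 bytes of the digest.
        return struct.unpack_from(">I", digest)[0] >> self.part_shift

    def get_nodes(self, account, container=None, obj=None):
        part = self.get_partition(account, container, obj)
        return part, [self.devices[row[part]] for row in self.replica2part2dev]
```

Because the table is computed ahead of time and copied to every node, any proxy can run `get_nodes("AUTH_test", "photos", "cat.jpg")` locally, with no round trip to a metadata server. Storing or deleting an object never changes the table; only adding or removing devices does.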

Proxy Server

The proxy server exposes the public API and handles requests for storage entities. For each request, it looks up the location of the account, container, or object in the ring and then routes the request there. Objects are streamed directly between the proxy server and the client without buffering. (Strictly speaking, despite its name, the proxy server does not perform proxying in the sense of an HTTP proxy.)
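Streaming without buffering means the proxy never holds a whole object in memory: it forwards fixed-size chunks as they arrive. The sketch below shows the idea with in-memory streams standing in for the object server and the client connection. It is a generic illustration, not Swift's proxy code, and the 64 KiB chunk size is an arbitrary assumption.

```python
import io
from typing import BinaryIO, Iterator

# Illustrative chunk size (an assumption, not taken from Swift's configuration).
CHUNK_SIZE = 64 * 1024


def iter_chunks(source: BinaryIO, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield the object's bytes one chunk at a time, never the whole object."""
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            return
        yield chunk


def relay(source: BinaryIO, sink: BinaryIO, chunk_size: int = CHUNK_SIZE) -> int:
    """Copy source to sink chunk by chunk; memory use is bounded by chunk_size."""
    sent = 0
    for chunk in iter_chunks(source, chunk_size):
        sink.write(chunk)
        sent += len(chunk)
    return sent


if __name__ == "__main__":
    backend = io.BytesIO(b"x" * 200_000)  # stands in for an object server response
    client = io.BytesIO()                 # stands in for the client connection
    print(relay(backend, client))         # 200000
```

Memory use stays at one chunk regardless of object size, which is what lets a proxy serve multi-gigabyte objects.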

Object Processing Server

The object processing server is a simple BLOB store that can store, retrieve, and delete objects. Objects are stored as binary files on the storage nodes, and their metadata is kept in extended file attributes (xattrs). The file system used by the object server must therefore support xattrs.

Each object is stored under a path derived from a hash of the object's name and the timestamp of the operation. The last write always wins (including in distributed scenarios, which is why clocks must be synchronized across the cluster), which guarantees that the latest version of the object is served. A deletion is also recorded as a version of the object: a 0-byte file with the “.ts” extension (for “tombstone”). This ensures that deletions replicate correctly and that older versions of an object do not reappear after a failure.
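The following sketch illustrates the layout and the "last write wins" rule. It assumes a directory per object named after the MD5 hash of its path, grouped under a partition and a suffix directory (the last three hex digits of the hash), with version files named by timestamp, such as `1357841245.12345.data` or `1357841245.12345.ts`. The helper names are hypothetical, and real Swift also mixes a cluster-wide secret into the hash.

```python
import hashlib


def object_dir(partition: int, account: str, container: str, obj: str) -> str:
    """On-disk directory for an object (simplified: no secret hash prefix/suffix)."""
    name_hash = hashlib.md5(f"/{account}/{container}/{obj}".encode("utf-8")).hexdigest()
    return f"objects/{partition}/{name_hash[-3:]}/{name_hash}"


def current_state(filenames):
    """Resolve an object's state from the version files in its directory.

    The file with the newest timestamp wins. If it is a tombstone (.ts),
    the object is deleted, even if older .data files are still present.
    """
    versions = [f for f in filenames if f.endswith((".data", ".ts"))]
    if not versions:
        return ("missing", None)
    newest = max(versions, key=lambda f: float(f.rsplit(".", 1)[0]))
    return ("deleted" if newest.endswith(".ts") else "exists", newest)
```

A replica that missed a delete still converges once the newer `.ts` file reaches it. The stale `.data` file then loses to the tombstone instead of "resurrecting" the object.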

Container Processing Server

The container processing server handles lists of objects. It does not know where the objects are stored, only which objects a given container holds. The lists are stored as SQLite3 database files and are replicated across the cluster in the same way as objects. The server also tracks statistics, including the total number of objects and the amount of storage used by the container.
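A container database can be sketched with Python's built-in `sqlite3` module. The schema below is a deliberately simplified, hypothetical one (real Swift container databases have more columns and tables). It shows the two jobs described above: returning a sorted object listing and keeping per-container statistics. Deleted entries are flagged rather than removed, in the same spirit as object tombstones.

```python
import sqlite3


def open_container_db(path: str = ":memory:") -> sqlite3.Connection:
    # Simplified, hypothetical schema: one row per object name.
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE object ("
        " name TEXT PRIMARY KEY, size INTEGER NOT NULL, deleted INTEGER DEFAULT 0)"
    )
    return db


def put_object(db, name: str, size: int) -> None:
    db.execute(
        "INSERT OR REPLACE INTO object (name, size, deleted) VALUES (?, ?, 0)",
        (name, size),
    )


def delete_object(db, name: str) -> None:
    # Mark the row as deleted so the deletion itself can be replicated.
    db.execute("UPDATE object SET deleted = 1 WHERE name = ?", (name,))


def list_objects(db, prefix: str = "", limit: int = 10000) -> list[str]:
    rows = db.execute(
        "SELECT name FROM object WHERE deleted = 0 AND substr(name, 1, ?) = ?"
        " ORDER BY name LIMIT ?",
        (len(prefix), prefix, limit),
    )
    return [name for (name,) in rows]


def container_stats(db) -> dict:
    count, used = db.execute(
        "SELECT COUNT(*), COALESCE(SUM(size), 0) FROM object WHERE deleted = 0"
    ).fetchone()
    return {"object_count": count, "bytes_used": used}
```

The database knows only names and sizes, not where the object data lives, which matches the container server's role.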

A special process, swift-container-updater, continuously scans the container databases on the node where it runs and updates the account database whenever container data changes. It uses the ring to find the account that needs to be updated.

Account Processing Server

The account processing server works like the container processing server, but it manages lists of containers instead of lists of objects.

Features and Functions

- Replication: the number of object replicas is configurable.

- Uploading an object is a synchronous process: the proxy server returns the HTTP “201 Created” code only after more than half of the replicas have been written.

- Integration with the OpenStack authentication service (Keystone): accounts are mapped to tenants.

- Data auditing: the MD5 checksum of the object on the file system is compared with the value stored in its xattr metadata.

- Container synchronization: containers can be synchronized across several data centers.

- Handoff mechanism: if a node fails, an additional (handoff) node can store the replica.

- Objects larger than 5 GB must be split into segments, which are stored as separate objects and can be read concurrently.
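Two of the rules in this list reduce to simple arithmetic: the write quorum (strictly more than half of the replicas) and the number of segments needed for a large object. The sketch below computes both. The 503 failure status is used for illustration, and reading "5 GB" as 5 × 1024³ bytes is an assumption.

```python
def write_quorum(replicas: int) -> int:
    """Smallest number of replicas that is strictly more than half."""
    return replicas // 2 + 1


def put_status(successful_writes: int, replicas: int) -> int:
    """HTTP status a proxy would return for an object upload (simplified)."""
    # 201 Created only once a majority of replicas has been written;
    # 503 Service Unavailable is used here as the failure status.
    return 201 if successful_writes >= write_quorum(replicas) else 503


# 5 GB limit from the text, interpreted as 5 * 1024**3 bytes (an assumption).
MAX_SEGMENT_SIZE = 5 * 1024**3


def segment_count(size: int, segment_size: int = MAX_SEGMENT_SIZE) -> int:
    """Number of segments needed for an object of `size` bytes."""
    return max(1, -(-size // segment_size))  # ceiling division, at least one
```

With the recommended three replicas, an upload succeeds once two copies are on disk, so one node can be down or slow without failing the request. A 12 GiB upload would be split into three segments.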

Ceph

Ceph is a distributed network storage system with distributed metadata management and POSIX semantics. The Ceph object store can be accessed through various clients, including a dedicated command-line tool, FUSE, and Amazon S3 clients (through a compatibility layer called the “S3 Gateway”). Ceph is highly modular: different feature sets are provided by different components that can be combined as needed. In particular, an object store accessed through the S3 API requires only three components: an object processing server, a monitoring server, and the RADOS Gateway.

Monitoring Server

ceph-mon is a lightweight daemon that provides consensus for distributed decision-making in a Ceph cluster. It is also the initial point of contact for new clients and provides them with information about the cluster topology. Typically, three ceph-mon daemons run on three different physical machines that are isolated from each other, for example in different racks or different rows.
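Ceph monitors reach agreement through a Paxos-based protocol that requires a majority of monitors to be reachable. The short sketch below (plain arithmetic, not Ceph code) shows why three monitors is the usual choice: three tolerate one failure, and a fourth monitor adds no extra tolerance.

```python
def monitor_quorum(total: int) -> int:
    """Number of monitors that must agree: a strict majority."""
    return total // 2 + 1


def tolerated_failures(total: int) -> int:
    """How many monitors can fail while a majority is still reachable."""
    return total - monitor_quorum(total)


def has_quorum(up: int, total: int) -> bool:
    return up >= monitor_quorum(total)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"{n} monitors: quorum {monitor_quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Placing the monitors in separate racks or rows, as described above, keeps a single rack failure from taking out the majority.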

Object Processing Server

The actual data stored by Ceph lives in a clustered storage engine called RADOS, which is deployed across a set of storage nodes.

ceph-osd is the storage daemon that runs on each storage node (object processing server) in a Ceph cluster. ceph-osd contacts ceph-mon to join the cluster. Its main purpose is to serve read/write and other requests from clients. It also interacts with other ceph-osd processes to replicate data. The data model at this level is relatively simple: there are several named pools, and each pool contains named objects in a flat namespace (without directories). Each object has data and metadata. The data is a single, potentially large, sequence of bytes, and the metadata is an unordered collection of key-value pairs. The Ceph file system uses this metadata to store information such as the file owner. Underneath, the ceph-osd daemon stores data on a local file system. We recommend Btrfs, but any POSIX file system with extended attributes will do.

CRUSH Algorithm

While Swift uses rings (mappings of MD5 hash ranges to sets of storage nodes) to distribute and locate data consistently, Ceph uses an algorithm called CRUSH for this purpose. In short, CRUSH computes the physical location of data in Ceph from the object name, the cluster map, and the CRUSH rules. CRUSH describes the storage cluster as a hierarchy that reflects its physical organization, which ensures that data is replicated correctly across the physical hardware. CRUSH also lets you control data placement through policies, which allows it to respond to changes in cluster membership.

RADOS Gateway

radosgw is a FastCGI service that provides a RESTful HTTP API for storing objects and metadata on a Ceph cluster.

Features and Functions

- Partial or full read and write operations

- Snapshots

- Atomic transactions for operations such as append, truncate, and clone range

- Object-level key-value mappings

- Object replica management

- Aggregation of objects (a series of objects) into a group (a placement group), and mapping of each group to a series of OSDs

- Shared secret key authentication: both the client and the monitor cluster hold a copy of the client's secret key

- S3/Swift API compatibility
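Shared-key authentication works because both sides already hold the secret, so it never has to cross the network. Only a response computed from it does. The sketch below shows a generic HMAC challenge-response built on that principle. It is an illustration of the idea only, not the cephx protocol, which has its own message formats, tickets, and session keys.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Server side: a fresh random nonce for each authentication attempt."""
    return secrets.token_bytes(16)


def respond(secret: bytes, challenge: bytes) -> bytes:
    """Client side: prove knowledge of the secret without sending it."""
    return hmac.new(secret, challenge, hashlib.sha256).digest()


def verify(secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Server side: recompute with its own copy and compare in constant time."""
    return hmac.compare_digest(respond(secret, challenge), response)


if __name__ == "__main__":
    client_key = secrets.token_bytes(32)  # hypothetical key held by both sides
    challenge = make_challenge()
    print(verify(client_key, challenge, respond(client_key, challenge)))  # True
```

A fresh challenge per attempt prevents an eavesdropper from replaying an old response.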

Functional Overview

| | Swift | Ceph |
|---|---|---|
| Replication | Yes | Yes |
| Maximum object size | 5 GB (larger objects are segmented) | Not limited |
| Multi-DC deployment (distribution across data centers) | Yes (replication only at the container level, but a design for full replication between data centers has been proposed) | No (requires asynchronous, eventually consistent replication, which Ceph does not yet support) |
| Integration with OpenStack | Yes | Partial (no Keystone support) |
| Replica management | No | Yes |
| Write algorithm | Synchronous | Synchronous |
| Amazon S3-compatible API | Yes | Yes |
| Data placement method | Rings (static mapping structure) | CRUSH (algorithm) |

Sources

- Official Swift documentation: the source for the description of the data structures.

- Swift Ring source code on GitHub: the source code of the Swift Ring and RingBuilder classes.

- Chmouel Boudjnah's blog: useful Swift tips.

- Official Ceph documentation: the main source for the descriptions of the data structures.

Original article in English