NL2API: Creating Natural Language Interfaces for the Web API

- Transfer

Hi, Habr! Most recently, we briefly talked about the Natural Language Interfaces (Natural Language Interfaces). Well, today we have not briefly. Under the cat you will find a full story about creating NL2API for the Web-API. Our colleagues from Research have tested a unique approach to collecting training data for the framework. Join now!

As the Internet evolves towards a service-oriented architecture, software interfaces (APIs) are becoming increasingly important as a way to provide access to data, services, and devices. We are working on the problem of creating a natural language API for the API (NL2API), focusing on web services. NL2API solutions have many potential benefits, for example, helping to simplify the integration of web services into virtual assistants.

We offer the first comprehensive platform (framework), which allows you to create NL2API for a specific web API. The key task is to collect data for training, that is, the “NL command - API call” pairs, which allow the NL2API to study the semantics of both NL commands that do not have a strictly defined format and formalized API calls. We offer our own unique approach to the collection of training data for NL2API using crowdsourcing - attracting numerous remote workers to the generation of various NL teams. We optimize the crowdsourcing process itself to reduce costs.

In particular, we offer a fundamentally new hierarchical probabilistic model that will help us distribute the budget for crowdsourcing, mainly between those API calls that have a high value for learning NL2API. We apply our framework to the real API and show that it allows you to collect high-quality training data with minimal costs, as well as create high-performance NL2API from scratch. We also demonstrate that our crowdsourcing model improves the efficiency of this process, that is, the training data collected within its framework provides higher NL2API performance, far exceeding the baseline.

Application programming interfaces (APIs) are playing an increasingly important role in the virtual and physical world due to the development of technologies such as service-oriented architecture (SOA), cloud computing and the Internet of things (IoT). For example, web services hosted in the cloud (weather, sports, finance, etc.) provide data and services to end users via a web API, and IoT devices allow other network devices to use their functionality.

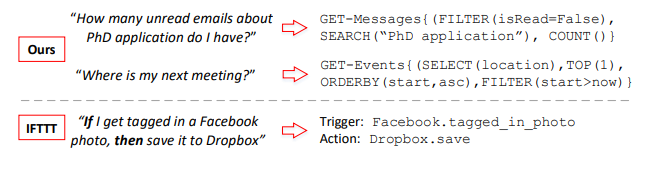

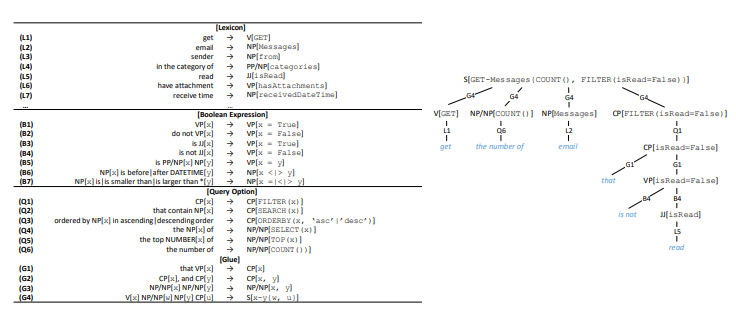

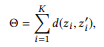

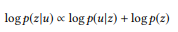

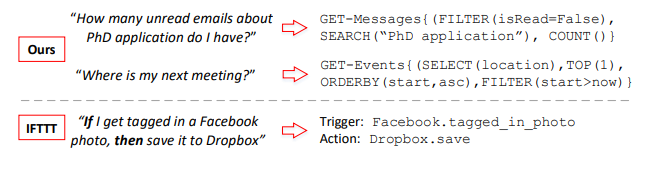

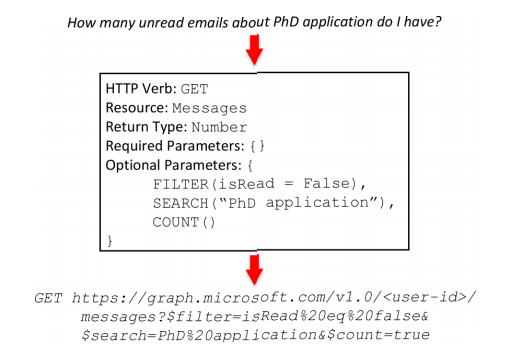

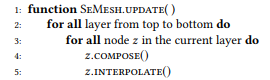

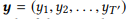

Figure 1. The “NL-command (left) and API call (right)” pairs, collected

our framework, and comparison with IFTTT. GET-Messages and GET-Events are two web APIs for searching emails and calendar events, respectively. The API can be called with various parameters. We concentrate on fully parameterized API calls, while IFTTT is limited to APIs with simple parameters.

APIs are commonly used in various software applications: desktop applications, websites, and mobile applications. They also serve users through a graphical user interface (GUI). The GUI made a great contribution to the popularization of computers, but, as computer technology developed, its numerous limitations increasingly manifest themselves. On the one hand, since devices are becoming smaller, more mobile and smarter, the requirements for a graphic image on the screen are constantly increasing, for example, with respect to portable devices or devices connected to IoT.

On the other hand, users have to adapt to various specialized GUIs for various services and devices. As the number of available services and devices increases, the cost of training and adapting users also grows. Natural language interfaces (NLI), such as Apple Siri and Microsoft Cortana virtual assistants, also known as conversational or conversational interfaces (CUI), demonstrate significant potential as a single intelligent tool for a wide range of server services and devices.

This paper deals with the problem of creating a natural language interface for an API (NL2API). But, unlike virtual assistants, this is not a general-purpose NLI,

we are developing approaches to creating NLIs for specific web APIs, that is, APIs for web services like the multisport service ESPN1. Such NL2APIs can solve the problem of scalability of general-purpose NLI, providing the possibility of distributed development. The usefulness of a virtual assistant largely depends on the breadth of its capabilities, that is, on the number of services it supports.

However, integrating web services into a virtual assistant one by one is incredibly hard work. If individual web services providers had an inexpensive way to create NLI for their APIs, the integration costs would be significantly reduced. A virtual assistant would not have to handle different interfaces for different web services. It would be enough for him to simply integrate the individual NL2API, which achieve uniformity due to natural language. On the other hand, NL2API can also simplify the discovery of web services and the programming of API recommendation and assistance systems, eliminating the need to memorize the large number of available web APIs and their syntax.

Example 1Two examples are shown in Figure 1. The API can be called with different parameters. In the case of the email search API, users can filter email by specific properties or search for emails by keywords. The main task of NL2API is to match NL commands with corresponding API calls.

Task.The collection of training data is one of the most important tasks related to research in the development of NLI interfaces and their practical application. NLI interfaces use supervised training data, which in the case of NL2API consists of NL command – API call pairs, to learn semantics and uniquely match NL commands with corresponding formalized representations. Natural language is very flexible, so users can describe an API call in syntactically different ways, that is, paraphrasing takes place.

Consider the second example in Figure 1. Users can rephrase this question as follows: “Where will the next meeting take place” or “Find a venue for the next meeting”. Therefore, it is extremely important to collect sufficient training data for the system to recognize such variants in the future. Existing NLIs generally adhere to the “best of principle” principle in the data collection process. For example, the closest analogue of our methodology for comparing NL commands with API calls is using the IF-This-Then-That (IFTTT) concept - “if it is, then” (Figure 1). The training data comes directly from the IFTTT website.

However, if the API is not supported or not fully supported, there is no way to correct the situation. In addition, the training data collected in this way is of little use for supporting extended commands with several parameters. For example, we analyzed the anonymized Microsoft API call logs to search emails for a month and found that about 90% of them use two or three parameters (approximately in equal amounts), and these parameters are quite diverse. Therefore, we strive to provide full support for API parameterization and to implement extended NL commands. The problem of deploying an active and customizable process of collecting training data for a specific API currently remains unresolved.

The use of NLI in combination with other formalized views, such as relational databases, knowledge bases and web tables, has been worked out fairly well, while the development of the NLI for the web API has received almost no attention. We offer the first comprehensive platform (framework), which allows you to create NL2API for a specific web API from scratch. In the implementation for the web API, our framework includes three steps: (1) Representation. The original HTTP Web API format contains many redundant and, therefore, distracting details from the point of view of the NLI interface.

We suggest using an intermediate semantic representation for the web API in order not to overload NLI with unnecessary information. (2) A set of training data. We propose a new approach to obtaining controlled training data based on crowdsourcing. (3) NL2API. We also offer two NL2API models: a language-based extraction model and a recurrent neural network model (Seq2Seq).

One of the key technical results of this work is a fundamentally new approach to the active collection of training data for NL2API based on crowdsourcing - we use remote performers to annotate API calls when they are compared with NL teams. This allows you to achieve three design goals by ensuring: (1) Customizability. It must be possible to specify which parameters for which API to use and how much training data to collect. (2) Low cost. The services of crowdsourcing workers are much cheaper than the services of specialized specialists, therefore, they need to be hired. (3) High quality. The quality of the training data should not be reduced.

When designing this approach, there are two main problems. First, API calls with extended parameterization, as in Figure 1, are incomprehensible to the average user, so you need to decide how to formulate the annotation problem so that crowdsourced workers can easily cope with it. We begin by developing an intermediate semantic representation for the web API (see Section 2.2), which allows us to seamlessly generate API calls with the required parameters.

Then we think up a grammar to automatically convert each API call to a canonical NL command, which can be quite cumbersome, but it will be understood by the average crowdsourced worker (see section 3.1). The performers will only have to rephrase the canonical team to make it sound more natural. This approach helps to prevent many errors in the collection of training data, since the task of rephrasing is much simpler and clearer for the average crowdsourcing worker.

Secondly, it is necessary to understand how to define and annotate only those API calls that are of real value for NL2API learning. The “combinatorial explosion” that arises during parameterization leads to the fact that the number of calls even for one API can be quite large. Annotate all calls does not make sense. We offer a fundamentally new hierarchical probabilistic model for the implementation of the crowdsourcing process (see Section 3.2). By analogy with language modeling in order to obtain information, we assume that NL commands are generated based on the corresponding API calls, so a language model should be used for each API call in order to register this “spawning” process.

Our model is based on the compositional nature of API calls or formalized representations of the semantic structure as a whole. At the intuitive level, if an API call consists of simpler calls (for example, “unread emails about the application for a candidate of science degree” = “unread emails” + “emails for an application for the degree of candidate of science”, we can build it a language model from simple API calls even without annotation, so by annotating a small number of API calls, we can calculate the language model for everyone else.

Of course, the calculated language models are far from ideal, otherwise we would have already solved the problem of creating an NL2API. Nevertheless, such an extrapolation of the language model to non-annotated API calls gives us a holistic view of the entire space of API calls, as well as the interaction of natural language and API calls, which allows us to optimize the crowdsourcing process. In Section 3.3, we describe an algorithm for selective annotation of API calls that helps to make API calls more distinct, that is, to ensure the maximum divergence of their language models.

We apply our framework to two deployed APIs from the Microsoft Graph API2 package. We demonstrate that high-quality training data can be collected at minimal cost, provided that the proposed approach is used3. We also show that our approach improves crowdsourcing efficiency. At similar costs, we collect higher-quality training data, significantly exceeding the basic indicators. As a result, our NL2API solutions provide higher accuracy.

In general, our main contribution includes three aspects:

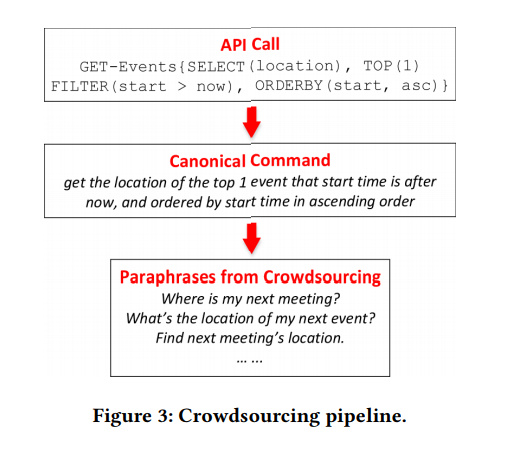

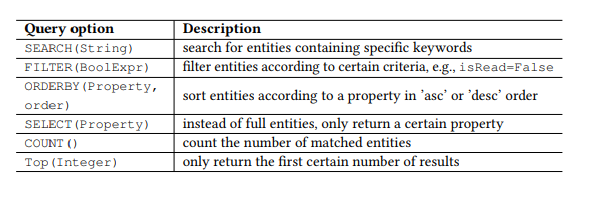

Table 1. OData request parameters.

Recently, web APIs that meet the architectural style of REST, that is, the RESTful API, have become increasingly popular due to their simplicity. RESTful APIs are also used in smartphones and IoT devices. Restful APIs work with resources that are addressed via a URI and provide access to these resources for a wide range of clients using simple HTTP commands: GET, PUT, POST, etc. We will mainly work with the RESTful API, but the basic methods can be used and other APIs.

For example, let's take the popular open data protocol (OData) for the RESTful API and two web APIs from the Microsoft Graph API (Figure 1), which, respectively, are used to search for emails and calendar events of the user. Resources in OData are entities, each of which is associated with a list of properties. For example, the Message entity — an email — has properties such as subject (subject), from (from), isRead (read), receivedDateTime (received date and time), and so on.

In addition, OData defines a set of query parameters, allowing you to perform advanced resource manipulations. For example, the FILTER parameter allows you to search for emails from a specific sender or emails received on a specific date. The request parameters that we will use are presented in Table 1. We call each combination of the HTTP command and an entity (or set of entities) as an API, for example, GET-Messages — to search for emails. Any parameterized request, for example, FILTER (isRead = False), is called a parameter, and an API call is an API with a list of parameters.

The main task of NLI is to compare statements (natural language commands) with a certain formalized representation of, for example, logical forms or SPARQL queries for knowledge bases or web API in our case. When it is necessary to focus on the semantic mapping, without being distracted by irrelevant details, an intermediate semantic representation is usually used in order not to work directly with the target. For example, combinatorial categorical grammar is widely used to create NLI interfaces for databases and knowledge bases. A similar approach to abstraction is also very important for NL2API. Many details, including URL conventions, HTTP headers and response codes, can “distract” NL2API from solving the main task - the semantic mapping.

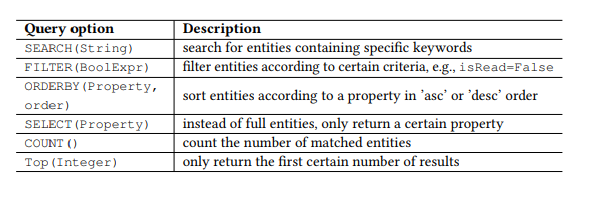

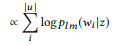

Therefore, we create an intermediate representation for the RESTful APIs (Figure 2) called the API frame, this representation reflects the semantics of the frame. Frame API consists of five parts. HTTP Verb (HTTP Command) and Resource (Resource) are basic elements for the RESTful API. Return Type allows you to create composite APIs, that is, combine several API calls to perform a more complex operation. Required Parameters are most often used in PUT or POST calls to the API, for example, for sending e-mail, the required parameters are the addressee, the header and the message body. Optional Parameters are often present in GET calls in the API, they help narrow down the information request.

If the required parameters are missing, we serialize the API frame, for example: GET-messages {FILTER (isRead = False), SEARCH (“PhD application”), COUNT ()}. An API frame can be deterministic and converted to a real API call. During the conversion process, the necessary contextual data will be added, including the user ID, location, date and time. In the second example (Figure 1), the now value in the FILTER parameter will be replaced with the date and time of the execution of the corresponding command during the conversion of an API frame to an actual API call. Further we will use the concepts of an API frame and an API call interchangeably.

Figure 2. Frame API. Above: team in natural language. Middle: Frame API. Below: API call.

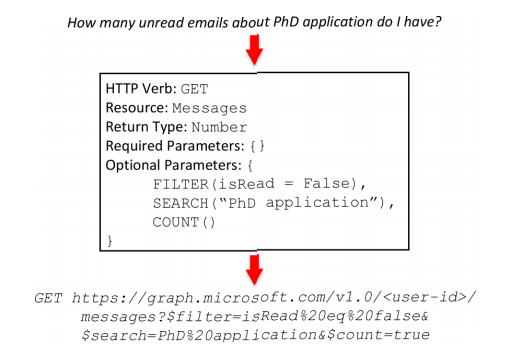

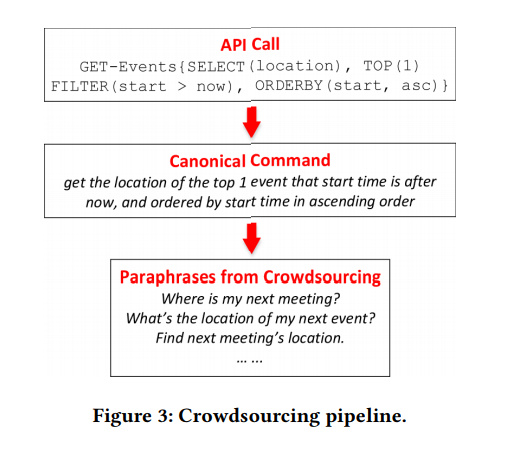

Figure 3. Crowdsourcing conveyor.

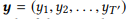

This section describes our proposed fundamentally new approach to collecting training data for NL2API solutions using crowdsourcing. First, we generate API calls and convert each of them into a canonical command, based on a simple grammar (section 3.1), and then use crowdsourcing workers to paraphrase canonical commands (Figure 3). Given the compositional nature of API calls, we proposed a hierarchical probabilistic model of crowdsourcing (section 3.2), as well as an algorithm for optimizing crowdsourcing (section 3.3).

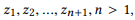

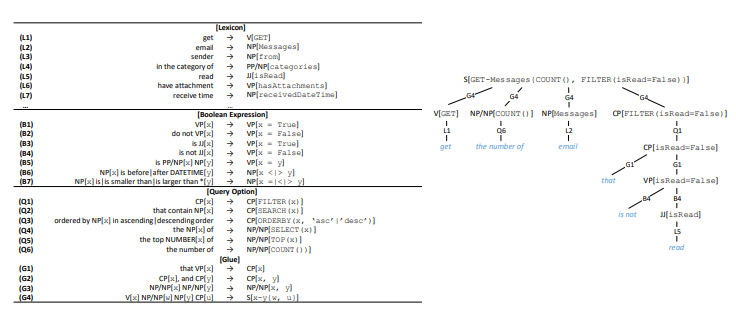

Figure 4. Generation of canonical command. Left: lexicon and grammar. Right: example of derivation.

We generate API calls based solely on the API specification. In addition to schema elements such as request parameters and entity properties, we need property values to generate API calls that are not provided by the API specification. For properties with enumerated values, such as Boolean, we list the possible values (True / False).

For properties with values of an unlimited type, such as Datetime, we will synthesize several representative values, such as today or this_week for receivedDateTime. It is necessary to understand that these are abstract values at the API frame level and they will be converted to real according to the context (for example, the actual date and time) when the API frame is converted to a real API call.

It’s easy to list all combinations of query parameters, properties, and property values to create API calls. Simple heuristics allow you to weed out not quite suitable combinations. For example, TOP is applied to a sorted list, so this parameter must be used in conjunction with ORDERBY. In addition, properties of type Boolean, for example isRead, cannot be used in ORDERBY. Nevertheless, the “combinatorial explosion” in any case determines the presence of a large number of API calls for each API.

The average user is hard to understand API calls. Similarly, we convert an API call into a canonical command. We form an API-specific lexicon and a common grammar for the API (Figure 4). Lexicon allows you to get the lexical form and syntactic category for each element (HTTP commands, entities, properties and property values). For example, the lexical notation ⟨sender → NP [from]⟩ indicates that the lexical form of the from property is “sender”, and the syntactic category is the noun phrase (NP) that will be used in grammar.

Syntactic categories can also be verbs (V), verb phrases (VP), adjectives (JJ), nominative word combinations (CP), generalized noun phrases followed by other noun phrases (NP / NP), generalized prepositional phrases (PP / NP), quotations (S), etc...

it should be noted that although the lexicon specific to each API and must be provided by the administrator, the grammar is designed as a general and can therefore be replicated to any RESTful API based OData protocol - "as is" and and after minor modifications. The 17 grammar rules in Figure 4 allow you to cover all the API calls used in the following experiments (section 5).

The grammar makes it clear how to get the canonical command step by step from an API call. This is a set of rules of the form ⟨t1, t2, ..., tn → c [z]⟩, where is a sequence of tokens, z is part of the API call, and cz is its syntactic category. Let us discuss the example in Figure 4. To the API call in the root of the derivation tree, which has the syntactic category S, we first apply the G4 rule to split the original API call into four parts. Taking into account their syntactic category, the first three can be directly converted into phrases in natural language, while the latter uses another subtree of derivation and therefore will be transformed into the phrase “that is not read”.

is a sequence of tokens, z is part of the API call, and cz is its syntactic category. Let us discuss the example in Figure 4. To the API call in the root of the derivation tree, which has the syntactic category S, we first apply the G4 rule to split the original API call into four parts. Taking into account their syntactic category, the first three can be directly converted into phrases in natural language, while the latter uses another subtree of derivation and therefore will be transformed into the phrase “that is not read”.

It should be noted that syntactic categories allow conditional derivation. For example, both rule B2 and rule B4 can be applied to VP [x = False], so the syntactic category x helps to decide. If x belongs to the syntactic category of VP, rule B2 (for example, x is hasAttachments → "do not have attachment"); and if it is JJ, then rule B4 is executed (for example, x is isRead → "is not read"). This avoids cumbersome canonical commands (“do not read” or “is not have attachment”) and makes the generated canonical commands more natural.

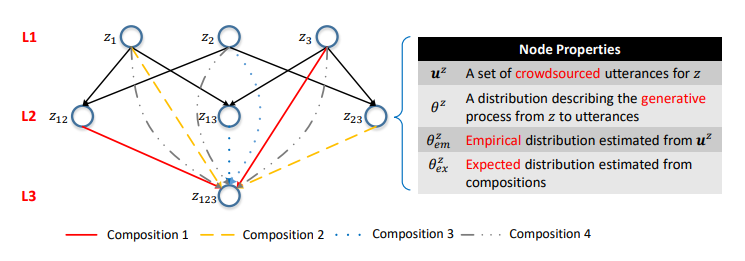

We can generate a large number of API calls using the above approach, but annotating them all with the help of crowdsourcing is not practical from an economic point of view. Therefore, we propose a hierarchical probabilistic model for organizing crowdsourcing, which helps to decide which API calls to annotate. As far as we know, this is the first probabilistic model of using crowdsourcing to create NLI interfaces, which allows solving a unique and intriguing task of modeling the interaction between natural language representations and formalized representations of the semantic structure. Formalized representations of the semantic structure in general and API calls in particular are of a composite nature. For example, z12 = GET-Messages {COUNT (),

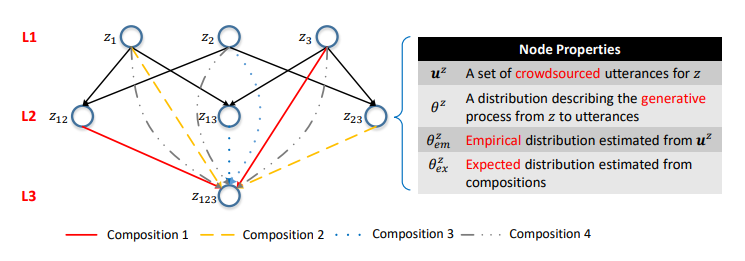

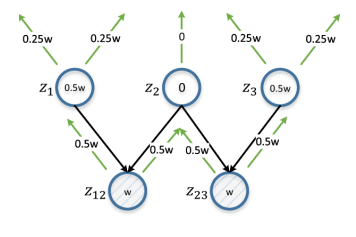

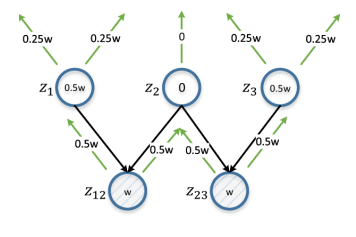

Figure 5. Semantic network. The i-th layer consists of API calls with i parameters. Ribs are compositions. The probability distributions on the vertices characterize the corresponding language models.

One of the key results of our research was the confirmation that such composition can be used to model the crowdsourcing process.

First, we define the composition based on a set of API call parameters.

Definition 3.1 (composition). Take an API and a set of API calls

, if we define r (z) as a parameter set for z, then it

, if we define r (z) as a parameter set for z, then it  is a composition

is a composition  if and only if it

if and only if it  is part of

is part of

Based on the compositional interrelationships of API calls, it is possible to organize all API calls into a single hierarchical structure. API calls with the same number of parameters are represented as vertices of one layer, and compositions are represented as

directed edges between layers. We call this structure the semantic network (or SeMesh).

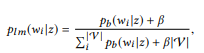

By analogy with the approach based on language modeling in information retrieval, we assume that statements that correspond to one API z call are generated using a stochastic process characterized by a language model . For the sake of simplicity, we will focus on the probabilities of words, thus

. For the sake of simplicity, we will focus on the probabilities of words, thus  where the

where the  dictionary stands.

dictionary stands.

For reasons that will become apparent a little later, instead of the standard language unigram model, we suggest using a set of Bernoulli distributions (Bag of Bernoulli, BoB). Each Bernoulli distribution corresponds to a random variable W, which determines whether the word w appears in a statement generated on the basis of z, and the BoB distribution is a set of Bernoulli distributions for all words . We will use

. We will use  as a short designation for

as a short designation for  .

.

Suppose we have formed a (multi) set of statements for z,

for z,

the maximum likelihood estimate (MLE) for the BoB distribution allows us to select statements containing w:

Example 2.Regarding the above API call z1, suppose that we received two statements u1 = “find unread emails” and u2 = “emails that are not read”, then u = {u1, u2}. pb (“emails” | z) = 1.0, since “emails” is present in both statements. Similarly, pb ("unread" | z) = 0.5 and pb ("meeting" | z) = 0.0.

In the semantic network, there are three basic operations at the vertex level:

annotation, layout, and interpolation.

ANNOTATE (annotate) means to collect statements to rephrase the canonical command of the vertex z using crowdsourcing and evaluate the empirical distribution

to rephrase the canonical command of the vertex z using crowdsourcing and evaluate the empirical distribution  using the maximum likelihood method.

using the maximum likelihood method.

COMPOSE(compose) attempts to derive a language model based on the compositions to calculate the expected distribution . As we show experimentally,

. As we show experimentally,  this is a composition for z. If we proceed from the assumption that the corresponding statements are characterized by the same compositional relationship, then it

this is a composition for z. If we proceed from the assumption that the corresponding statements are characterized by the same compositional relationship, then it  should be decomposed into

should be decomposed into  :

:

where f is a compositional function. For the BoB distribution, the composition function will be as follows:

In other words, if ui is a statement zi, u is a statement compositionally forms u, then the word w does not belong to u. If and only if it does not belong to any ui. When z has many compositions, θe x is calculated separately and then averaged. The standard language unigram model does not lead to a natural composition function. In the process of normalizing the probabilities of words, the length of statements is involved, which, in turn, takes into account the complexity of API calls, violating the decomposition in equation (2). That is why we offer a BoB distribution.

compositionally forms u, then the word w does not belong to u. If and only if it does not belong to any ui. When z has many compositions, θe x is calculated separately and then averaged. The standard language unigram model does not lead to a natural composition function. In the process of normalizing the probabilities of words, the length of statements is involved, which, in turn, takes into account the complexity of API calls, violating the decomposition in equation (2). That is why we offer a BoB distribution.

Example 3. Suppose we prepared an annotation for the API calls z1 and z2 mentioned earlier, each with two statements: = {"find unread emails", "emails that are not read"} and

= {"find unread emails", "emails that are not read"} and  = {"how many emails do I have "," Find the number of emails "}. We appreciated the language patterns

= {"how many emails do I have "," Find the number of emails "}. We appreciated the language patterns  and

and . The composition operation attempts to evaluate

. The composition operation attempts to evaluate  without querying

without querying  . For example, for the word "emails", pb ("emails" | z1) = 1.0 and pb ("emails" | z2) = 1.0, thus, from equation (3) it follows that pb ("emails" | z12) = 1.0, that is, we believe that this word will be included in any statement z12. Similarly, pb ("find" | z1) = 0.5 and pb ("find" | z2) = 0.5, so pb ("find" | z12) = 0.75. The word has a good chance of being generated from any z1 or z2, so its probability for z12 should be higher.

. For example, for the word "emails", pb ("emails" | z1) = 1.0 and pb ("emails" | z2) = 1.0, thus, from equation (3) it follows that pb ("emails" | z12) = 1.0, that is, we believe that this word will be included in any statement z12. Similarly, pb ("find" | z1) = 0.5 and pb ("find" | z2) = 0.5, so pb ("find" | z12) = 0.75. The word has a good chance of being generated from any z1 or z2, so its probability for z12 should be higher.

Of course, statements are not always combined compositionally. For example, several elements in a formalized representation of the semantic structure can be conveyed by a single word or a phrase in a natural language; this phenomenon is called sublexical compositionality. One such example is shown in Figure 3, where three parameters — TOP (1), FILTER (start> now), and ORDERBY (start, asc) —are represented by the single word “next”. However, it is impossible to obtain such information without annotating an API call, so the problem itself resembles the problem of chicken and eggs. In the absence of such information, it is reasonable to adhere to the default assumption that statements are characterized by the same compositional relationship as API calls.

This is a plausible assumption. It is worth noting that this assumption is used only to model the process of crowdsourcing in order to collect data. At the testing stage, the statements of real users may not correspond to this assumption. A natural-language interface will be able to cope with such non-compositional situations if they are covered by the collected training data.

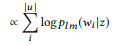

INTERPOLATE (interpolation) combines all available information about z, that is, annotated statements of z and information obtained from the compositions, and obtains a more accurate estimate by interpolation

by interpolation  and

and  .

.

Balance parameter α controls tradeoffs between annotations

the current vertices, which are accurate but sufficient, and information derived from compositions based on the compositionality assumption may not be as accurate, but it provides a wider coverage. In a certain sense, it serves the same purpose as smoothing in language modeling, which allows a better estimate of the probability distribution with insufficient data (annotations). The more

serves the same purpose as smoothing in language modeling, which allows a better estimate of the probability distribution with insufficient data (annotations). The more  , the more weight in

, the more weight in  . For a root vertex with no composition,

. For a root vertex with no composition,  =

=  . For non-annotated vertex

. For non-annotated vertex  =

=  .

.

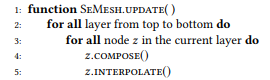

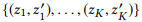

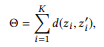

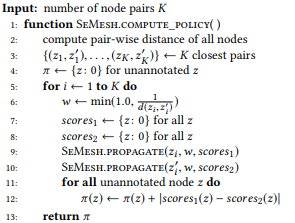

Next, we describe the algorithm for updating the semantic network, that is, the calculations for all z (algorithm 1), even if only a small part of the vertices were annotated. We assume that the value

for all z (algorithm 1), even if only a small part of the vertices were annotated. We assume that the value already updated for all annotated nodes. Going from top to bottom of the layers, we compute sequentially

already updated for all annotated nodes. Going from top to bottom of the layers, we compute sequentially  and

and  for each vertex z. First, you need to update the upper layers so that you can calculate the expected distribution of the peaks of the lower level. We annotated all root vertices, so we can compute

for each vertex z. First, you need to update the upper layers so that you can calculate the expected distribution of the peaks of the lower level. We annotated all root vertices, so we can compute  for all vertices.

for all vertices.

Algorithm 1. Update Node Distributions of Semantic Mesh

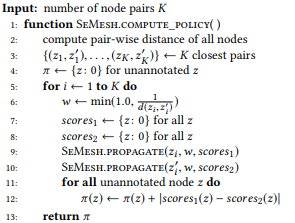

The semantic network forms a holistic view of the entire API call space, as well as the interaction of statements and calls. Based on this view, we can selectively annotate only a subset of high-value API calls. In this section, we describe our proposed differential distribution strategy for optimizing crowdsourcing.

Consider a semantic network with a set of vertices Z. Our task is to define, within an iterative process, a subset of vertices that will be annotated by crowdsourcing workers. The previously annotated vertices will be called the state,

that will be annotated by crowdsourcing workers. The previously annotated vertices will be called the state,

then we need to find a policy to evaluate each non-annotated vertex, taking into account the current state.

to evaluate each non-annotated vertex, taking into account the current state.

Before delving into a discussion of approaches to calculating an effective policy, suppose that we already have one, and give a high-level description of our crowdsourcing algorithm (algorithm 2) to describe the accompanying methods. More specifically, we first annotate all root vertices in order to estimate the distribution for all vertices in Z (line 3). At each iteration, we update the distribution of vertices (line 5), calculate the

policy based on the current state of the semantic network (line 6), choose a non-annotated vertex with a maximum rating (line 7), and annotate the top and result in a new state (line 8). In practical terms, you can annotate several vertices within an iteration to increase efficiency.

Figure 6. Differential distribution. z12 and z23 are the vertex pair under study. w is a score calculated on the basis of d (z12, z23), and is distributed iteratively from the bottom up, twice in each iteration. The estimate for the vertex is the absolute difference of its estimates from z12 and z23 (therefore differential). z2 gets a score of 0, since this is the common parent entity for z12 and z23; annotation in this case will be of little use in terms of ensuring the distinctiveness of z12 and z23.

In a broad sense, the tasks we solve can be attributed to the problem of active learning; we set ourselves the goal of defining a subset of examples for annotation in order to obtain a training set that can increase the effectiveness of training. However, several key differences do not directly apply classical active learning methods, such as “uncertainty of selection”. Usually in the process of active learning, the learner, who in our case would be the NLI interface, tries to study the f: X → Y mapping, where X is the input sample of the space, consisting of a small set of labeled and a large number of unlabelled samples, and Y is usually a set of markers class.

The learner evaluates the information content of unmarked examples and selects the most informative to get the label in Y from the crowdsourced workers. But within the framework of the problem we solve, the annotation problem is posed differently. We need to select an instance from Y, a large API call space, and ask the crowdsourced employees to label it, indicating the samples in X, the sentence space. In addition, we are not tied to a specific student. Thus, we offer a new solution for the problem at hand. We draw inspiration from numerous sources on active learning.

First, we will define a goal on the basis of which the information content of the nodes will be evaluated. Obviously, we want different API calls to be distinguished. In the semantic network, this means that different vertices have obvious differences. To begin with, we represent each distribution

different vertices have obvious differences. To begin with, we represent each distribution  as an n-dimensional vector

as an n-dimensional vector  , where n = |

, where n = |  | - size of the dictionary. By a certain metric of a vector distance d (in our experiments we use the distance between the pL1 vectors), we mean

| - size of the dictionary. By a certain metric of a vector distance d (in our experiments we use the distance between the pL1 vectors), we mean  , that is, the distance between two vertices is equal to the distance between their distributions.

, that is, the distance between two vertices is equal to the distance between their distributions.

The obvious goal is to maximize the total distance between all pairs of vertices. However, the optimization of all pairwise distances may be too difficult to calculate, and this is not necessary. A pair of distant peaks already has enough differences, so a further increase in distance does not make sense. Instead, we can focus on pairs of vertices that cause the greatest confusion, that is, the distance between which is the smallest.

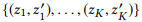

where it indicates the first K pairs of vertices if we rank all pairs of nodes by distance in ascending order.

indicates the first K pairs of vertices if we rank all pairs of nodes by distance in ascending order.

Algorithm 2. Iteratively Annotate a Semantic Mesh with a Policy

Algorithm 3. Compute Policy based on Diferential Propagation

Algorithm 4. Recursively Propagate a score from a vertex

With more informative after annotation, vertices with a higher information content potentially increase the value of Θ. For a quantitative assessment in this case, we propose to use the strategy of differential propagation. If the distance between a pair of vertices is small, we investigate all their parent vertices: if the parent vertex is common for a pair of vertices, it should receive a low estimate, since annotation will lead to similar changes for both vertices.

Otherwise, the vertex should receive a high estimate, and the closer the pair of vertices, the higher the score should be. For example, if the distance between the vertices “unread emails about PhD application” and “how many emails are about PhD application” is small, then annotate their parent top “emails about PhD application” does not make much sense from the point of view of ensuring the distinguishability of these vertices. It is more expedient to annotate the parent nodes that will not be common to them: “unread emails” and “how many emails”.

An example of such a situation is shown in Figure 6, and its algorithm is Algorithm 3. As an estimate, we take the reciprocal of the node distance, bounded by a constant (line 6), so the closest pairs of vertices have the greatest impact. Working with a pair of vertices, we in parallel assign the estimate of each vertex to all of its parent vertices (line 9, 10 and algorithm 4). The estimate of a non-annotated vertex is the absolute difference in the estimates of the corresponding pair of vertices with summation over all pairs of vertices (line 12).

To evaluate the proposed framework, it is necessary to train the NL2API model using the collected data. At the moment, the finished model NL2API is not available, but we are adapting two tested NLI models from other areas in order to apply them to the API.

Based on recent developments in the field of NLI for knowledge bases, we can consider the creation of NL2API in the context of the problem of extracting information in order to adapt the model of extraction based on the language model (LM) under our conditions.

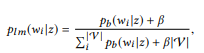

To say u, it is necessary to find an API call z in the semantic network with the best match for u. First we convert the BoB distribution of each API call z into a language unigram model:

each API call z into a language unigram model:

where we use additive smoothing, and 0 ≤ β ≤ 1 is the smoothing parameter. The greater the value , the greater the weight of words that have not yet been analyzed. API calls can be ranked by their logarithmic probability:

, the greater the weight of words that have not yet been analyzed. API calls can be ranked by their logarithmic probability:

(subject to a uniform a priori probability distribution)

The API call with the highest rating is used as the result of the simulation.

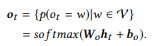

Neural networks are becoming more common as models for NLI, while the Seq2Seq model is better suited for this purpose because it allows you to naturally process input and output sequences of variable length. We are adapting this model for NL2API.

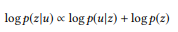

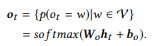

For an input sequence e , the model estimates the conditional probability distribution p (y | x) for all possible output sequences

, the model estimates the conditional probability distribution p (y | x) for all possible output sequences  . The lengths T and T ′ can vary and take any values. In NL2API, x is an output statement. y can be a serialized API call or its canonical command. We will use canonical commands as target output sequences, which actually turns our problem into a rephrasing problem.

. The lengths T and T ′ can vary and take any values. In NL2API, x is an output statement. y can be a serialized API call or its canonical command. We will use canonical commands as target output sequences, which actually turns our problem into a rephrasing problem.

The encoder, implemented as a recurrent neural network (RNN) with controlled recurrent blocks (GRU), first presents x as a fixed-size vector,

where RN N is a short representation for applying the GRU to the entire input sequence, marker by marker, followed by the last hidden states.

The decoder, which is also the RNN with the GRU, takes h0 as the initial state and processes the output sequence y, marker by marker, to generate a sequence of states, the

Output layer takes each decoder state as an input value and generates a dictionary distribution as output value. We simply use an affine transformation, followed by the soft variable logistic function softmax:

as output value. We simply use an affine transformation, followed by the soft variable logistic function softmax:

The final conditional probability, which allows us to estimate how well the canonical command y rephrases the input statement x, -

. API calls are then ranked by the conditional probability of their canonical command. We recommend to get acquainted with the source, where the process of learning the model is described in more detail.

. API calls are then ranked by the conditional probability of their canonical command. We recommend to get acquainted with the source, where the process of learning the model is described in more detail.

Experimentally, we study the following research subjects: [PI1]: Can we, using the proposed framework, collect high-quality training data at a reasonable price? [PI2]: Does the semantic network provide a more accurate assessment of language models than a maximum likelihood estimate? [PI3]: Does a differential distribution strategy allow for more efficient crowdsourcing?

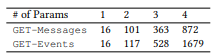

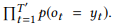

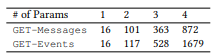

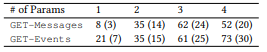

We apply the proposed framework to the two Microsoft web APIs - GET-Events and GET-Messages - which provide access to advanced search services for user emails and calendar events, respectively. We create a semantic network for each API, listing all API calls (section 3.1) and up to four parameters in each. The distribution of API calls is shown in Table 2. We use an internal crowdsourcing platform, similar to Amazon Mechanical Turk. To ensure the flexibility of the experiment, we annotated all API calls with a maximum of three parameters.

However, in each particular experiment, we will use only a specific subset for learning. Each API call is annotated with 10 statements, and we pay 10 cents for each statement. 201 participants are involved in annotation, all of them have been selected using the qualification test. On average, the performer took 44 seconds to rephrase the canonical team, so we can get 82 teaching examples from each artist per hour, and the cost will be $ 8.2, which, in our opinion, is quite a bit. Regarding the quality of annotations, we manually checked 400 collected statements and found that the proportion of errors is 17.4%.

The main causes of errors are related to the absence of certain parameters (for example, the performer did not specify the ORDERBY parameter or a COUNT parameter) or incorrect interpretation of the parameters (for example, ascending ranking is indicated when ranking is in descending order). These examples account for about half of the errors. The proportion of errors is comparable to that of other crowdsourcing projects in the field of NLI. Thus, we consider the answer to [PI1] to be positive. Data quality can be improved by engaging independent crowdsourced workers for post-testing.

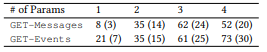

In addition, we received an annotated independent test set formed randomly from the entire semantic network and including, among other things, API calls with four parameters (Table 3). Each API call in the test set initially had three statements. We conducted a test and weed out statements with errors in order to improve the quality of testing. The final test set included 61 API calls and 157 statements for GET-Messages, as well as 77 API calls and 190 statements for GET-Events. Not all test statements were included in the training data, besides many test API calls (for example, calls with four parameters) were not involved in training, therefore, the test set was very complex.

Table 2. Distribution of API calls.

Таблица 3. Распределение тестового множества: высказывания (вызовы).

As an estimate, we use accuracy, that is, the proportion of test statements for which the maximum prediction was correct. Unless otherwise specified, the balance parameter is α = 0.3, and the smoothing parameter is LM β = 0.001. The number of pairs of vertices K used for differential propagation is 100,000. The set value for the state, size, encoder, and decoder in the Seq2Seq model is 500. Parameters are selected based on the results of a preliminary study on a separate test set (regardless of testing).

The semantic network is not only useful for optimizing crowdsourcing as the first of its kind model of the crowdsourcing process for NLI, it also has technical advantages. Therefore, we will evaluate the semantic network and the optimization algorithm separately.

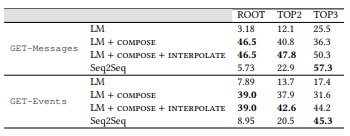

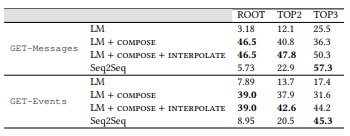

Overall effectiveness. In this experiment, we evaluate the model of the semantic network and, in particular, the effectiveness of the operations of composition and interpolation from the point of view of optimizing the assessment of language models. The quality of language models can be judged by the effectiveness of the LM model: the more accurate the assessment, the higher the efficiency. We use several training sets corresponding to different sets of annotated vertices. ROOT is the root vertices. TOP2 = ROOT + all nodes of layer 2; and TOP3 = TOP2 + all nodes of layer 3. This allows the semantic network to be evaluated using different amounts of training data.

The results are shown in table 4. When working with the base model LM, we use the maximum likelihood estimate (MLE) to analyze the language model, that is, we use for all non-annotated vertices, and uniform distribution for non-annotated vertices. It is not surprising that the efficiency is rather low, especially if the number of annotated vertices is small, since the MLE cannot provide information about non-annotated vertices.

for all non-annotated vertices, and uniform distribution for non-annotated vertices. It is not surprising that the efficiency is rather low, especially if the number of annotated vertices is small, since the MLE cannot provide information about non-annotated vertices.

By adding the composition to the MLE, we can estimate the expected distribution for non-annotated vertices, but

for non-annotated vertices, but still used for annotated vertices, i.e. no interpolation. This allows you to significantly optimize the API and a variety of training data. With just 16 annotated API calls (ROOT), a simple LM model with SeMesh can outperform a more sophisticated Seq2Seq model with more than a hundred annotated API calls (TOP2) and approach a model containing about 500 annotated API calls (TOP3).

still used for annotated vertices, i.e. no interpolation. This allows you to significantly optimize the API and a variety of training data. With just 16 annotated API calls (ROOT), a simple LM model with SeMesh can outperform a more sophisticated Seq2Seq model with more than a hundred annotated API calls (TOP2) and approach a model containing about 500 annotated API calls (TOP3).

These results clearly show that the language models evaluated using the composition operation are fairly accurate, the validity of the assumption that the expressiveness of the statements (Section 3.2) is proved empirically. It can be noted that working with GET-Events is generally more difficult than with GET-Messages. This is because GET-Events uses

more teams that are tied to time, and events may relate to the future or the past, whereas e-mails always refer only to the past.

Table 4. Overall Accuracy Percentage. The operations of the semantic network greatly optimize the simple LM model, making it better for the more complex Seq2Seq model, when the amount of training data is rather small. The results prove that the semantic network provides an accurate assessment of language models.

The effectiveness of the LM + composition decreases when we use more training data, which indicates the inexpediency of using only and the need to combine with θem with

and the need to combine with θem with  . When we interpolate

. When we interpolate  and

and then we achieve improvements everywhere, except for the level of ROOT, where none of the peaks can be simultaneously

then we achieve improvements everywhere, except for the level of ROOT, where none of the peaks can be simultaneously  and

and  . Unlike composition, the more training data we use for interpolation, the better the result of the operation. In general, the semantic network provides significant optimization compared to the basic MLE indicators. Thus, we consider the answer to [PI2] to be positive.

. Unlike composition, the more training data we use for interpolation, the better the result of the operation. In general, the semantic network provides significant optimization compared to the basic MLE indicators. Thus, we consider the answer to [PI2] to be positive.

The best indicators of accuracy are in the range from 0.45 to 0.6: they are not very high, but they are on the same level as indicators of modern methods of applying NLI to knowledge bases. This reflects the complexity of the problem, as the model must exactly find the best among thousands of related API calls. By collecting additional statements for each API call (see also Figure 7) and using more advanced models, such as bidirectional RNNs with an attention mechanism, you can further improve efficiency. We will leave these questions for future work.

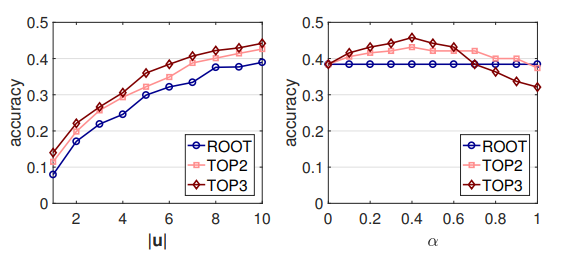

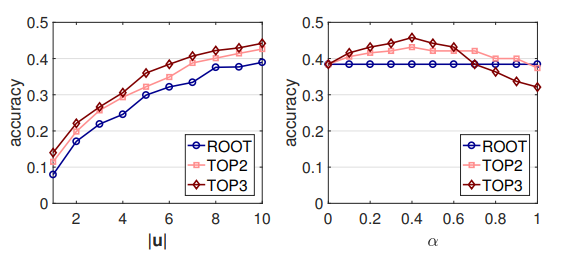

The impact of hyperparameters. Now consider the influence of two hyperparameters on the semantic network: the number of statements | u | and the balance parameter α. Here, we still rely on the effectiveness of the LM model (Figure 7). Sayings are randomly selected when | u | <10, and the model output is given average marks for 10 repeated runs. We show the results for GET-Events, for GET-Messages they are similar.

It is not surprising that the more statements we annotate for each vertex, the higher the efficiency, although the increment gradually decreases. Therefore, in the presence of such a possibility, it is recommended to collect additional statements. On the other hand, the model efficiency is practically independent of α, since this parameter lies in the allowable range ([0.1, 0.7]). The influence of the parameter α increases with the number of annotated vertices, which is quite expected, since interpolation affects only annotated vertices.

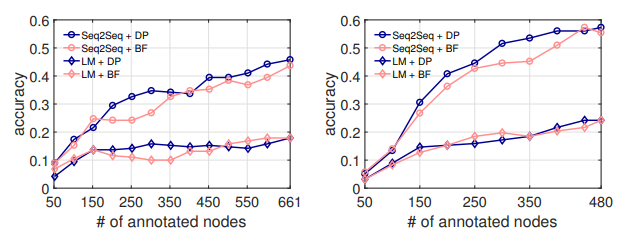

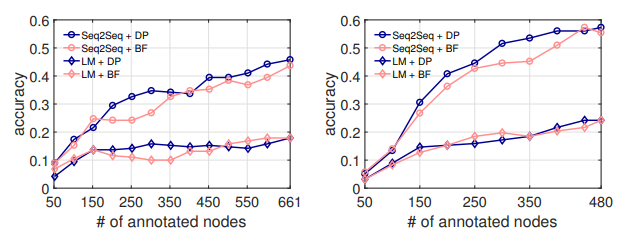

In this experiment, we evaluate the effectiveness of applying the proposed differential propagation strategy (DP) to optimize crowdsourcing. Various crowdsourcing strategies iteratively select API annotation calls. At each iteration, each strategy selects 50 API calls, and then they are annotated, and the two NL2API models use the accumulated annotated data for training.

Finally, models are evaluated on a test set. We use the basic LM model, which does not depend on the semantic network. The best crowdsourcing strategy should provide the best model performance for the same number of annotations. Instead of annotating vertices on the fly using crowdsourcing, we use annotations collected earlier as a pool of candidates (section 5.1), so all strategies will be selected only from existing API calls with three parameters.

Figure 7. Effects of hyperparameters.

Figure 8. Crowdsourcing optimization experiment. Left: GET-Events. Right: GET-Messages

We take the breadth first (BF) strategy as a basic strategy, which in the downstream direction gradually annotates each layer of the semantic network. It resembles a strategy out. So we get the basic indicators. Calling top-level APIs is usually more important because they are compositions of low-level API calls.

The results of the experiment are shown in Figure 8. For both NL2API models and both APIs, the DP strategy generally improves efficiency. When we annotate only 300 calls for each API, as applied to the Seq2Seq model, DP provides an absolute increase in accuracy of more than 7% for both APIs. When the pool of candidates is exhausted, the two algorithms converge, which is expected. The results show that DP allows you to define API calls that are highly valuable for learning NL2API. Thus, we consider the answer to [PI3] to be positive.

Natural language interface. Over the creation of natural language interfaces (NLI) experts have been working for several decades. The first NLI mainly used the rules. Learning-based methods have firmly taken the lead in recent years. The most popular learning algorithms are based on log-line models and relatively recently developed deep neural networks.

The application of NLI to relational databases, knowledge bases and web tables has been studied fairly well, but there is almost no research on the API. NL2API developers face two major problems: the lack of a single semantic representation for the API and, in part because of this, the lack of training data. We are working on solving both problems. We offer a unified semantic representation of the API based on the REST standard and a fundamentally new approach to the collection of training data in this representation.

Collection of training data for NLI.Existing training data collection solutions for NLI generally adhere to the principle of "the best of the possible". For example, questions in natural language are collected using the Google Suggest API, and the authors receive commands and corresponding API calls from the IFTTT website. Researchers have relatively recently begun to explore approaches to creating NLI, involving the collection of training data using crowdsourcing. Crowdsourcing has become a familiar practice in various language related studies.

However, there is little study of its application to create NLI interfaces, which allow solving the unique and intriguing task of modeling the interaction between natural language representations and formalized representations of the semantic structure. Most of these studies involve the application of NLI to knowledge bases, where a formalized presentation is expressed by logical forms in a certain logical formalism. The authors propose to transform logical forms into canonical commands with the help of grammar, and in described methods for refining generated logical forms and screening out those that do not correspond to a significant question in natural language.

A semi-automatic framework is proposed for interacting with users on the fly and comparing commands in natural language with API calls on smartphones. The authors propose a similar solution based on a crowdsourcing framework, where performers interactively perform annotation tasks for the web API. But no researcher has so far dealt with the use of the compositionality of formal representations to optimize the crowdsourcing process.

Semantic methods for web API.There are a number of other semantic methods developed for the web API. For example, the semantic descriptions of the web API are retrieved in order to simplify the composition of the API, while the proposed search mechanism for the web API to compose the compositions. The NL2API technology will potentially allow to solve such problems, for example, when used as a single search engine to find an API.

We formulated the problem of creating a natural language interface for web APIs (NL2API) and proposed a comprehensive framework for developing NL2API from scratch. One of the key technical results of the work is a fundamentally new approach to the collection of training data for NL2API based on crowdsourcing. The work opens up several areas for further research: (1) The language model. How, by way of generalization, to move from individual words to more complex language units, for example, phrases? (2) Crowdsourcing optimization.

How to use the semantic network as efficiently as possible? (3) Model NL2API. For example, the framework for filling slots in voice dialogue systems is optimally suited for our presentation of API frames. (4) Composition API. How to collect training data while using multiple APIs? (5) Optimization during the interaction: how to continue to improve the NL2API in the process of user interaction after the initial training?

annotation

As the Internet evolves towards a service-oriented architecture, software interfaces (APIs) are becoming increasingly important as a way to provide access to data, services, and devices. We are working on the problem of creating a natural language API for the API (NL2API), focusing on web services. NL2API solutions have many potential benefits, for example, helping to simplify the integration of web services into virtual assistants.

We offer the first comprehensive platform (framework), which allows you to create NL2API for a specific web API. The key task is to collect data for training, that is, the “NL command - API call” pairs, which allow the NL2API to study the semantics of both NL commands that do not have a strictly defined format and formalized API calls. We offer our own unique approach to the collection of training data for NL2API using crowdsourcing - attracting numerous remote workers to the generation of various NL teams. We optimize the crowdsourcing process itself to reduce costs.

In particular, we offer a fundamentally new hierarchical probabilistic model that will help us distribute the budget for crowdsourcing, mainly between those API calls that have a high value for learning NL2API. We apply our framework to the real API and show that it allows you to collect high-quality training data with minimal costs, as well as create high-performance NL2API from scratch. We also demonstrate that our crowdsourcing model improves the efficiency of this process, that is, the training data collected within its framework provides higher NL2API performance, far exceeding the baseline.

Introduction

Application programming interfaces (APIs) are playing an increasingly important role in the virtual and physical world due to the development of technologies such as service-oriented architecture (SOA), cloud computing and the Internet of things (IoT). For example, web services hosted in the cloud (weather, sports, finance, etc.) provide data and services to end users via a web API, and IoT devices allow other network devices to use their functionality.

Figure 1. The “NL-command (left) and API call (right)” pairs, collected

our framework, and comparison with IFTTT. GET-Messages and GET-Events are two web APIs for searching emails and calendar events, respectively. The API can be called with various parameters. We concentrate on fully parameterized API calls, while IFTTT is limited to APIs with simple parameters.

APIs are commonly used in various software applications: desktop applications, websites, and mobile applications. They also serve users through a graphical user interface (GUI). The GUI made a great contribution to the popularization of computers, but, as computer technology developed, its numerous limitations increasingly manifest themselves. On the one hand, since devices are becoming smaller, more mobile and smarter, the requirements for a graphic image on the screen are constantly increasing, for example, with respect to portable devices or devices connected to IoT.

On the other hand, users have to adapt to various specialized GUIs for various services and devices. As the number of available services and devices increases, the cost of training and adapting users also grows. Natural language interfaces (NLI), such as Apple Siri and Microsoft Cortana virtual assistants, also known as conversational or conversational interfaces (CUI), demonstrate significant potential as a single intelligent tool for a wide range of server services and devices.

This paper deals with the problem of creating a natural language interface for an API (NL2API). But, unlike virtual assistants, this is not a general-purpose NLI,

we are developing approaches to creating NLIs for specific web APIs, that is, APIs for web services like the multisport service ESPN1. Such NL2APIs can solve the problem of scalability of general-purpose NLI, providing the possibility of distributed development. The usefulness of a virtual assistant largely depends on the breadth of its capabilities, that is, on the number of services it supports.

However, integrating web services into a virtual assistant one by one is incredibly hard work. If individual web services providers had an inexpensive way to create NLI for their APIs, the integration costs would be significantly reduced. A virtual assistant would not have to handle different interfaces for different web services. It would be enough for him to simply integrate the individual NL2API, which achieve uniformity due to natural language. On the other hand, NL2API can also simplify the discovery of web services and the programming of API recommendation and assistance systems, eliminating the need to memorize the large number of available web APIs and their syntax.

Example 1Two examples are shown in Figure 1. The API can be called with different parameters. In the case of the email search API, users can filter email by specific properties or search for emails by keywords. The main task of NL2API is to match NL commands with corresponding API calls.

Task.The collection of training data is one of the most important tasks related to research in the development of NLI interfaces and their practical application. NLI interfaces use supervised training data, which in the case of NL2API consists of NL command – API call pairs, to learn semantics and uniquely match NL commands with corresponding formalized representations. Natural language is very flexible, so users can describe an API call in syntactically different ways, that is, paraphrasing takes place.

Consider the second example in Figure 1. Users can rephrase this question as follows: “Where will the next meeting take place” or “Find a venue for the next meeting”. Therefore, it is extremely important to collect sufficient training data for the system to recognize such variants in the future. Existing NLIs generally adhere to the “best of principle” principle in the data collection process. For example, the closest analogue of our methodology for comparing NL commands with API calls is using the IF-This-Then-That (IFTTT) concept - “if it is, then” (Figure 1). The training data comes directly from the IFTTT website.

However, if the API is not supported or not fully supported, there is no way to correct the situation. In addition, the training data collected in this way is of little use for supporting extended commands with several parameters. For example, we analyzed the anonymized Microsoft API call logs to search emails for a month and found that about 90% of them use two or three parameters (approximately in equal amounts), and these parameters are quite diverse. Therefore, we strive to provide full support for API parameterization and to implement extended NL commands. The problem of deploying an active and customizable process of collecting training data for a specific API currently remains unresolved.

The use of NLI in combination with other formalized views, such as relational databases, knowledge bases and web tables, has been worked out fairly well, while the development of the NLI for the web API has received almost no attention. We offer the first comprehensive platform (framework), which allows you to create NL2API for a specific web API from scratch. In the implementation for the web API, our framework includes three steps: (1) Representation. The original HTTP Web API format contains many redundant and, therefore, distracting details from the point of view of the NLI interface.

We suggest using an intermediate semantic representation for the web API in order not to overload NLI with unnecessary information. (2) A set of training data. We propose a new approach to obtaining controlled training data based on crowdsourcing. (3) NL2API. We also offer two NL2API models: a language-based extraction model and a recurrent neural network model (Seq2Seq).

One of the key technical results of this work is a fundamentally new approach to the active collection of training data for NL2API based on crowdsourcing - we use remote performers to annotate API calls when they are compared with NL teams. This allows you to achieve three design goals by ensuring: (1) Customizability. It must be possible to specify which parameters for which API to use and how much training data to collect. (2) Low cost. The services of crowdsourcing workers are much cheaper than the services of specialized specialists, therefore, they need to be hired. (3) High quality. The quality of the training data should not be reduced.

When designing this approach, there are two main problems. First, API calls with extended parameterization, as in Figure 1, are incomprehensible to the average user, so you need to decide how to formulate the annotation problem so that crowdsourced workers can easily cope with it. We begin by developing an intermediate semantic representation for the web API (see Section 2.2), which allows us to seamlessly generate API calls with the required parameters.

Then we think up a grammar to automatically convert each API call to a canonical NL command, which can be quite cumbersome, but it will be understood by the average crowdsourced worker (see section 3.1). The performers will only have to rephrase the canonical team to make it sound more natural. This approach helps to prevent many errors in the collection of training data, since the task of rephrasing is much simpler and clearer for the average crowdsourcing worker.

Secondly, it is necessary to understand how to define and annotate only those API calls that are of real value for NL2API learning. The “combinatorial explosion” that arises during parameterization leads to the fact that the number of calls even for one API can be quite large. Annotate all calls does not make sense. We offer a fundamentally new hierarchical probabilistic model for the implementation of the crowdsourcing process (see Section 3.2). By analogy with language modeling in order to obtain information, we assume that NL commands are generated based on the corresponding API calls, so a language model should be used for each API call in order to register this “spawning” process.

Our model is based on the compositional nature of API calls or formalized representations of the semantic structure as a whole. At the intuitive level, if an API call consists of simpler calls (for example, “unread emails about the application for a candidate of science degree” = “unread emails” + “emails for an application for the degree of candidate of science”, we can build it a language model from simple API calls even without annotation, so by annotating a small number of API calls, we can calculate the language model for everyone else.

Of course, the calculated language models are far from ideal, otherwise we would have already solved the problem of creating an NL2API. Nevertheless, such an extrapolation of the language model to non-annotated API calls gives us a holistic view of the entire space of API calls, as well as the interaction of natural language and API calls, which allows us to optimize the crowdsourcing process. In Section 3.3, we describe an algorithm for selective annotation of API calls that helps to make API calls more distinct, that is, to ensure the maximum divergence of their language models.

We apply our framework to two deployed APIs from the Microsoft Graph API2 package. We demonstrate that high-quality training data can be collected at minimal cost, provided that the proposed approach is used3. We also show that our approach improves crowdsourcing efficiency. At similar costs, we collect higher-quality training data, significantly exceeding the basic indicators. As a result, our NL2API solutions provide higher accuracy.

In general, our main contribution includes three aspects:

- We were one of the first to start exploring the NL2API problematics and suggested a comprehensive framework for creating NL2API from scratch.

- We proposed a unique approach to the collection of training data using crowdsourcing and a fundamentally new hierarchical probabilistic model for optimizing this process.

- We applied our framework to real web APIs and demonstrated that a sufficiently effective NL2API solution can be created from scratch.

Table 1. OData request parameters.

Preamble

RESTful API

Recently, web APIs that meet the architectural style of REST, that is, the RESTful API, have become increasingly popular due to their simplicity. RESTful APIs are also used in smartphones and IoT devices. Restful APIs work with resources that are addressed via a URI and provide access to these resources for a wide range of clients using simple HTTP commands: GET, PUT, POST, etc. We will mainly work with the RESTful API, but the basic methods can be used and other APIs.

For example, let's take the popular open data protocol (OData) for the RESTful API and two web APIs from the Microsoft Graph API (Figure 1), which, respectively, are used to search for emails and calendar events of the user. Resources in OData are entities, each of which is associated with a list of properties. For example, the Message entity — an email — has properties such as subject (subject), from (from), isRead (read), receivedDateTime (received date and time), and so on.

In addition, OData defines a set of query parameters, allowing you to perform advanced resource manipulations. For example, the FILTER parameter allows you to search for emails from a specific sender or emails received on a specific date. The request parameters that we will use are presented in Table 1. We call each combination of the HTTP command and an entity (or set of entities) as an API, for example, GET-Messages — to search for emails. Any parameterized request, for example, FILTER (isRead = False), is called a parameter, and an API call is an API with a list of parameters.

NL2API

The main task of NLI is to compare statements (natural language commands) with a certain formalized representation of, for example, logical forms or SPARQL queries for knowledge bases or web API in our case. When it is necessary to focus on the semantic mapping, without being distracted by irrelevant details, an intermediate semantic representation is usually used in order not to work directly with the target. For example, combinatorial categorical grammar is widely used to create NLI interfaces for databases and knowledge bases. A similar approach to abstraction is also very important for NL2API. Many details, including URL conventions, HTTP headers and response codes, can “distract” NL2API from solving the main task - the semantic mapping.

Therefore, we create an intermediate representation for the RESTful APIs (Figure 2) called the API frame, this representation reflects the semantics of the frame. Frame API consists of five parts. HTTP Verb (HTTP Command) and Resource (Resource) are basic elements for the RESTful API. Return Type allows you to create composite APIs, that is, combine several API calls to perform a more complex operation. Required Parameters are most often used in PUT or POST calls to the API, for example, for sending e-mail, the required parameters are the addressee, the header and the message body. Optional Parameters are often present in GET calls in the API, they help narrow down the information request.

If the required parameters are missing, we serialize the API frame, for example: GET-messages {FILTER (isRead = False), SEARCH (“PhD application”), COUNT ()}. An API frame can be deterministic and converted to a real API call. During the conversion process, the necessary contextual data will be added, including the user ID, location, date and time. In the second example (Figure 1), the now value in the FILTER parameter will be replaced with the date and time of the execution of the corresponding command during the conversion of an API frame to an actual API call. Further we will use the concepts of an API frame and an API call interchangeably.

Figure 2. Frame API. Above: team in natural language. Middle: Frame API. Below: API call.

Figure 3. Crowdsourcing conveyor.

Collection of training data

This section describes our proposed fundamentally new approach to collecting training data for NL2API solutions using crowdsourcing. First, we generate API calls and convert each of them into a canonical command, based on a simple grammar (section 3.1), and then use crowdsourcing workers to paraphrase canonical commands (Figure 3). Given the compositional nature of API calls, we proposed a hierarchical probabilistic model of crowdsourcing (section 3.2), as well as an algorithm for optimizing crowdsourcing (section 3.3).

Figure 4. Generation of canonical command. Left: lexicon and grammar. Right: example of derivation.

API call and canonical command

We generate API calls based solely on the API specification. In addition to schema elements such as request parameters and entity properties, we need property values to generate API calls that are not provided by the API specification. For properties with enumerated values, such as Boolean, we list the possible values (True / False).

For properties with values of an unlimited type, such as Datetime, we will synthesize several representative values, such as today or this_week for receivedDateTime. It is necessary to understand that these are abstract values at the API frame level and they will be converted to real according to the context (for example, the actual date and time) when the API frame is converted to a real API call.

It’s easy to list all combinations of query parameters, properties, and property values to create API calls. Simple heuristics allow you to weed out not quite suitable combinations. For example, TOP is applied to a sorted list, so this parameter must be used in conjunction with ORDERBY. In addition, properties of type Boolean, for example isRead, cannot be used in ORDERBY. Nevertheless, the “combinatorial explosion” in any case determines the presence of a large number of API calls for each API.

The average user is hard to understand API calls. Similarly, we convert an API call into a canonical command. We form an API-specific lexicon and a common grammar for the API (Figure 4). Lexicon allows you to get the lexical form and syntactic category for each element (HTTP commands, entities, properties and property values). For example, the lexical notation ⟨sender → NP [from]⟩ indicates that the lexical form of the from property is “sender”, and the syntactic category is the noun phrase (NP) that will be used in grammar.

Syntactic categories can also be verbs (V), verb phrases (VP), adjectives (JJ), nominative word combinations (CP), generalized noun phrases followed by other noun phrases (NP / NP), generalized prepositional phrases (PP / NP), quotations (S), etc...

it should be noted that although the lexicon specific to each API and must be provided by the administrator, the grammar is designed as a general and can therefore be replicated to any RESTful API based OData protocol - "as is" and and after minor modifications. The 17 grammar rules in Figure 4 allow you to cover all the API calls used in the following experiments (section 5).

The grammar makes it clear how to get the canonical command step by step from an API call. This is a set of rules of the form ⟨t1, t2, ..., tn → c [z]⟩, where

is a sequence of tokens, z is part of the API call, and cz is its syntactic category. Let us discuss the example in Figure 4. To the API call in the root of the derivation tree, which has the syntactic category S, we first apply the G4 rule to split the original API call into four parts. Taking into account their syntactic category, the first three can be directly converted into phrases in natural language, while the latter uses another subtree of derivation and therefore will be transformed into the phrase “that is not read”.

is a sequence of tokens, z is part of the API call, and cz is its syntactic category. Let us discuss the example in Figure 4. To the API call in the root of the derivation tree, which has the syntactic category S, we first apply the G4 rule to split the original API call into four parts. Taking into account their syntactic category, the first three can be directly converted into phrases in natural language, while the latter uses another subtree of derivation and therefore will be transformed into the phrase “that is not read”.It should be noted that syntactic categories allow conditional derivation. For example, both rule B2 and rule B4 can be applied to VP [x = False], so the syntactic category x helps to decide. If x belongs to the syntactic category of VP, rule B2 (for example, x is hasAttachments → "do not have attachment"); and if it is JJ, then rule B4 is executed (for example, x is isRead → "is not read"). This avoids cumbersome canonical commands (“do not read” or “is not have attachment”) and makes the generated canonical commands more natural.

Semantic network

We can generate a large number of API calls using the above approach, but annotating them all with the help of crowdsourcing is not practical from an economic point of view. Therefore, we propose a hierarchical probabilistic model for organizing crowdsourcing, which helps to decide which API calls to annotate. As far as we know, this is the first probabilistic model of using crowdsourcing to create NLI interfaces, which allows solving a unique and intriguing task of modeling the interaction between natural language representations and formalized representations of the semantic structure. Formalized representations of the semantic structure in general and API calls in particular are of a composite nature. For example, z12 = GET-Messages {COUNT (),

Figure 5. Semantic network. The i-th layer consists of API calls with i parameters. Ribs are compositions. The probability distributions on the vertices characterize the corresponding language models.

One of the key results of our research was the confirmation that such composition can be used to model the crowdsourcing process.

First, we define the composition based on a set of API call parameters.

Definition 3.1 (composition). Take an API and a set of API calls

, if we define r (z) as a parameter set for z, then it

, if we define r (z) as a parameter set for z, then it  is a composition