Optimization for CPU: how to find a black cat in a dark room

Invalid Operation Method:

Divide the cat by zero,

after which it will become infinitely large,

so that it will be impossible to miss.

[Absurdopedia]

Trying to find a performance problem with relatively simple code, I recalled some ridiculous solution methods described on Absurdopedia for the task of finding a black cat in a dark room. Oddly enough, the consistent use of the three methods that can be found at the link: Pragmatism, the Dichotomy Method and the Spear Method helped me a lot.

So, we have the task of sequentially rearranging bytes in each word of the array (big-endian <-> little-endian) and summing all the words into one (reduction). Let us leave aside the task of parallelization, because its solution is close to trivial, and so far it is not of interest to us.

Direct (forehead) implementation of an algorithm is usually called naive. This is probably due to the fact that we, programmers, naively rely on a compiler that will generate, if not optimal, then close to this code, and there will be nothing more to optimize. However, as we will see later in the disassembler, the compiler produces a fairly simple sequence of instructions, in principle, which should not cause special performance problems on the x86 platform.

void init()

{

int i;

for (i = 0; i < 1024; i++)

buf[i] = i;

}

unsigned int change_endianess(char *big)

{

char temp;

temp = big[0];

big[0] = big[3];

big[3] = temp;

temp = big[1];

big[1] = big[2];

big[2] = temp;

return *(unsigned int *)big;

}

void reduce()

{

int i, n;

unsigned int sum = 0;

init();

for (n = 0; n < ITER_NUM; n++){//repeat itarations

for (i = 0; i < 1024; i++){

sum += change_endianess((char *)(buf+i));}}

printf ("Sum is %d\n", sum);

}

main ()

{

reduce();

}

As our witty marketers put it, “evaluating performance without profiling is fortunetelling.” Therefore, we launch the General Exploration profile in VTune Amplifier XE in order to evaluate how effectively this code is executed on the Core i7 microarchitecture (codename Nehalem). Do not forget to specify the -Zi and / debug: inline-debug-info options for Intel Compiler (Windows) in order to save debugging information and information about function lines that were “inlined” (how to say “inlined” in Russian?) . The last option is very useful because it allows VTune Amplifier XE to attribute counter values directly along the lines of a nested function. This is a new VTune feature; it can be turned off so that the results are displayed only opposite the function call.

Since the test runs quite quickly (despite the rather large number of iterations chosen), it is useful to enable the Allow Multiple Runs option in the VTune project settings, which will force the tool to run the test several times, collecting counters in groups. Otherwise, the counters will be multiplexed, and with a short run time the accuracy may be affected.

From the results it is clear that nothing is clear. It can be seen that the performance of code execution in the reduce function is quite far from perfect, since CPI = 1.67 is more than 6 times more than the optimal 0.25. No, this of course does not mean that we have the potential to increase code performance by 6 times, but it would be quite possible to achieve a lower CPI on such a simple cycle. However, why the CPI is high is hard to say. The Retire Stalls metric is approximately 0.7 and tells us that at the end of the execution of the instructions there are pipeline downtimes of 70% of the clock cycles. The remaining performance indicators, judging by the results of the collected profile, are normal. Moreover, knowing that in most cases the memory subsystem is the cause of performance problems, you can specifically launch Memory Access analysis. But he will show the same thing - there are no memory problems. However, the code is so simple, and the memory access is so consistent that it wasn’t even worth it to run the memory analysis. For the same reasons, Branch Analysis will not help us either.

We start looking for a black cat in a dark room, or rather, the reason for the performance failure in the processor microarchitecture.

We use the improved cat search method “Pragmatism”. Initially, it is necessary to decide what we will get if we find this cat, and whether it is worth looking for it because of this. What kind of potential we have, the Execution Stalls and Retire Stalls metrics help to evaluate .

The first measure of Execution Stalls is the relationship between the values of the counters UOPS_EXECUTED.CORE_STALL_CYCLES and UOPS_EXECUTED.CORE_ACTIVE_CYCLES .

The UOPS_EXECUTED.CORE_STALL_CYCLES event counter measures the number of idle cycles in the computational part of the processor pipeline when the Reservation Station does not assign operations to any port.

The event counter UOPS_EXECUTED.CORE_ACTIVE_CYCLES appropriately counts cycles when at least one of the four computational ports and two read / write ports have a micro operation for execution.

The ratio of UOPS_EXECUTED.CORE_STALL_CYCLES / (UOPS_EXECUTED.CORE_ACTIVE_CYCLES + UOPS_EXECUTED.CORE_STALL_CYCLES) will allow us to judge how much of the execution time corresponded to the simulator’s downtime, and what our potential for increasing the productivity is simple, if we use some kind of processor.

By the way, the sum of these counters should give the counter value of all clock cycles CPU_CLK_UNHALTED.THREAD. And in VTune, the ratio UOPS_EXECUTED.CORE_STALL_CYCLES / CPU_CLK_UNHALTED.THREAD is used to automatically calculate the Execution Stalls metric.

In our case, the ratio is approximately 0.24 (a quarter of the idle time out of the total), and this means that if we eliminate downtime, we can potentially increase the throughput of the computer by at least a quarter - and this is only for one of the four slots.

The second Retire Stalls metric is the relationship between the values of UOPS_RETIRED.STALL_CYCLES / CPU_CLK_UNHALTED.THREAD, showing what part of the time from the total number of measures corresponds to the state when no micro-operation is retired, although theoretically, up to 4 operations could be completed per cycle.

This means that at 70% downtime, if we look for a cat well, eliminating the reason for waiting for operations, we can hope to accelerate the execution of the program by at least 2.3 times.

Using common sense, we conclude that for the sake of accelerating the execution of the algorithm, you can try.

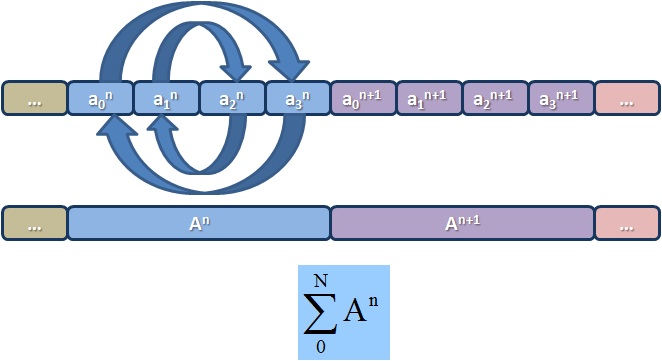

To find where we have a problem, we use the second cat search method - the “Dichotomy Method”: sequentially divide the room into equal parts, or rather divide the stages of the processor conveyor into logical parts, where you can try to find the cause of the problem. This method is well described inIntel® 64 and IA-32 Architectures Optimization Reference Manual , under “Using Performance Monitoring Events” as “Performance Events Drill-Down and Software Tuning Feedback Loop”. Here is a slightly simplified view of it:

The essence of the method is to sequentially separate the parts of the conveyor, finding with the help of appropriate counters the place where it is simple, that is, unemployment by the promotion of microoperations (bottleneck). Initially, it is necessary to determine whether micro-instructions are being carried out in the conveyor. If so, does their execution end with success (Retire). In the case of non-Retired operations, erroneous prediction and execution of the wrong program branches (Bad Speculation) takes place. In our case, we are dealing with a pipeline outage, and then we need to measure whether there are problems with the allocation of processor resources (internal registers, buffers, etc.). If not, it will mean that the processor does not have enough instructions for execution, and the problem is in the Frond-End, that is, there where instructions are fetched from the instruction cache, decoded, and queued for execution. If so, then the problem is somewhere in the darkest part of the room, called the Back-End, where the calculations are made, and where there may be problems both with the calculators themselves, and with the dispatch of micro instructions, allocating buffers, waiting for data from memory, and so on .

Determining if Allocation Stalls is very simple. You need to look at the meter reading with the name RESOURCE_STALLS.ANY . Switching the viewpoint VTune to Hardvare Event Count and looking at the readings of this counter (a value commensurate with the total number of CPU cycles), we realize that it is the lack of some resources, according to the counter, that can identify the problem in our code.

According to Absurdopedia, the method of dichotomy should lead to division of the room until the cat concentrates to a point. However, for the processor microarchitecture the possibilities of the method are not unlimited and end at a certain stage. And here, the best possible way, the “Spear Method” is used - one of the most effective scientific methods, in cases where other, more rigorous and regular methods, no longer help.

We need to measure all possible counters that characterize the lack of resources. There are few of them, and you just need to replace the ANY counter extension with specific resources. For my mobile version of Core i7: RESOURCE_STALLS.LOAD, RESOURCE_STALLS.STORE, RESOURCE_STALLS.ROB_FULL, RESOURCE_STALLS.RS_FULL, RESOURCE_STALLS.OTHER this data can be collected separately, or can be found in the results of the General Exploration profile. The total indicators hint to us that there is a problem of stopping the conveyor advance due to filling of the ROB-buffer, and the lack of a data recording buffer.

Store Buffer stacks data for writing to memory. Delays in operations will occur if there are many write instructions interspersed with reading. Reorder Buffer (ROB) contains micro-operations that wait for execution to complete, as well as completed operations that change the state of the processor in their in-order order. It is important to determine when waiting for which instruction the buffer was full of incoming micro-operations and blocked the further operation of the conveyor.

Here the Source View will help us along with the disassembly - Assembly View. To save space, we’ll place them separately, although they are nearby in VTune.

As you can see from the value of the counter CPU_CLK_UNHALTED.TREAD, most of the measures fell on the cycle of performing the summation of words, and not on the permutation function. Shifting data to the for loop line is somewhat confusing, but this always happens with loops. Results related to the body of the cycle may shift to its heading if the body of the cycle is small. To verify this, look at the disassembled version of the function.

For us, Basic Block 5 is interesting: it is a cycle of performing the operation of rearranging bytes and summing words - this is how the compiler generated the instructions. Starting with the address 0x4010d0 through 0x401105, there are instructions for rearranging bytes in a word. At the address 0x40110s - just the desired instruction for summing the word in the edx register. Again, due to the skid, the results are displayed on the inc statement below, which cannot take so many beats. Interestingly, the same add statement added the largest number of Resource Stalls counters. Looking ahead and applying the same Meta-tyka, we note that removing the cat, that is, this summing operation, we will know for sure that the room is empty, and the problems with Resource Stalls and performance have disappeared.

So what is the problem with this instruction, or rather, with several micro-operations of which it consists (reading an address, reading data from memory and the operation of summing in a register)? Here, as always, there is one trick: you need to either know the specifics of the implementation of data upload and download in the microarchitecture, or use a slightly different microprocessor. Instead of our mobile Core i7 (code name Nehalem), we measure on a platform with an Xeon processor or on a processor with the code name Westmere (so to speak, take a room more equipped), in which you can additionally get the counter LOAD_BLOCK.OVERLAP_STORE. It will just roll over to add asm instructions. In general, this counter signals micro data loading operations (load) blocked for various reasons. The most common reason for such an alarm is that the load microoperation is blocked by the previous write microoperation (store):

- Some bytes of read data were in the previous write operation, and some were not;

- Bytes of the reading word are the same as for the previous record, and the recording word is aligned in size, while 1-2 bytes of the record not aligned at the beginning of the word, or 4-8 bytes, and not aligned with the record data are read;

- The bytes of the reading word are the same as for the previous record, but the recording word is not aligned in size and the reading is not aligned at the beginning of the recording word.

Now let’s take a closer look at the sequence of asm instructions that implement the byte swapping and summing cycle. Here, nothing more than reading a word with a corresponding byte offset from memory to the ebx and ecx registers (temporary values) and byte-writing data from the bottom of the bl and cl registers to memory occurs. As long as we read and write by memory, there are no problems. However, we should try to load the whole word from memory (the micro operation load in the add statement) to the same address of the word as in the previous write operation, how this load operation will be blocked. The processor cannot transfer the entire word to the read buffer immediately after writing this word in parts - you have to wait. This issue is called Blocked Loads Due to No Store Forwarding. Remember her, as she will meet often,

It should be noted that, when investigating the problem on this mobile platform, I had to use the Modified Spear Method, since the collected counters clearly did not indicate the presence of No Store Forwarding. We pulled here the shrink version of the Nehalem microarchitecture - Westmere, or Xeon; and the General Exploration profile did not contain an event counter LOAD_BLOCK.OVERLAP_STORE. The next generation of processors - 2nd generation Intel® Core ™ processor, codenamed SandyBridge (already the current generation itself) - contains a special counter LD_BLOCKS_STORE_FORWARD , which is in the VTune profile of General Exploration for SandyBridge, which will directly tell us about the problem.

Now it remains to think about how to fix the code and solve the performance problem. From the description of the causes of its occurrence, it is clear what needs to be done so that loading a word from memory does not directly follow after it is written by byte to the same address. This means that in order to avoid the search for “this cat” in the future, you need to separate the room and the cat: we put out the operation of summing the words of the array in a separate cycle.

void reduce()

{

int i, n;

unsigned int sum = 0;

init();

for (n = 0; n < ITER_NUM; n++)

{

for (i = 0; i < 1024; i++)

change_endianess((char *)(buf+i));

for (i = 0; i < 1024; i++)

sum += buf [i];

}

printf ("Sum is %d\n", sum);

}

The difference in the execution time of the program can be seen by comparing the profiling results.

One small question remains, why the function accelerated more than 3 times, while at the beginning we determined that idle cycles were only 70 percent? The fact is that such a number of cycles the processor is idle with this set of instructions used by the compiler. By dividing the byte and sum permutation cycles in the source code, we helped the compiler to automatically vectorize the last cycle, and he replaced the usual scalar sum and read / write instructions with vector SIMD instructions, which run 4 times faster on data on a 32-bit platform for data of type int32 . Remember the potential calculated by CPI?