The Rosenblatt Perceptron: What Has History Forgotten, and What Has It Invented?

Habr already has several articles about artificial neural networks. Most of them, however, discuss the so-called multilayer perceptron and the error backpropagation algorithm. But did you know that this variation is no better than the elementary Rosenblatt perceptron?

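For example, in the translation "What are artificial neural networks?" we can read the following about the Rosenblatt perceptron:

"The demonstration of the Rosenblatt perceptron showed that simple networks of such neurons can be trained using examples known in certain fields. Later, Minsky and Papert proved that simple perceptrons can solve only a very narrow class of linearly separable problems, after which activity in ANN research declined. Nevertheless, the method of backward propagation of learning errors, which can facilitate the task of training complex neural networks using examples, showed that these problems may not be separable."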

Moreover, versions of this claim appear in various articles, books, and even textbooks.

This is probably the greatest piece of advertising in AI. In science, though, it is called falsification.

What was invented?

In fact, all serious scientists know that this picture of the Rosenblatt perceptron is not serious, but they prefer to write about it rather gently. Yet when history is presented to us in a substantially distorted form, even in such a narrow field of science, that is not good. I do not want to say that it was falsified intentionally. Not at all: it is a good example of how young people do not read the originals, or only skim them, then pretend that they understand what the problem is, and then solve that problem. Then they earn their doctorates and gain fame, and the next generation already believes them. If you are doing something seriously, always double-check the classics against the originals. Do not trust retellings in articles.

So, let's open the original that describes the Rosenblatt perceptron: Rosenblatt, F., Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms (Russian edition, 1965; the English original appeared in 1962). You will not find the so-called single-layer perceptron there: no such thing existed, at least as of 1965. It was invented much later.

There you will immediately see an elementary perceptron, which has a hidden layer of A-elements.

Sometimes this is explained away by saying that the terminology has changed. Alas, however you change the words, the perceptron has a hidden layer and ALWAYS had one. Moreover, Rosenblatt himself writes that it makes no sense to consider perceptrons without a hidden layer. This was elementary in 1965, and everyone knew it.

Next, people like to invoke the authority of Minsky. But he, too, knew what a perceptron was, and he never proved that a perceptron cannot solve linearly non-separable problems. He proved something quite different, related to the problem of invariance, and no known ANN solves that problem even now. (This article is not about that; if you are interested, ask and I will try to write about it.)

Given this, the only differences between the Rosenblatt perceptron and the Rumelhart multilayer perceptron (MLP) are the following:

1. The Rumelhart perceptron is trained by the error backpropagation algorithm, which learns both the weights between the input and hidden layers and the weights between the hidden and output layers.

2. The Rosenblatt perceptron is trained by the error correction algorithm. This algorithm trains only the weights between the hidden and output layers. The weights between the input and hidden layers are deliberately left untrained. There is no point in training them, because this layer performs a completely different task from the second one. The weights of the first layer, or rather its excitatory and inhibitory connections, are created randomly, imitating nature. The task of this layer is precisely to transform a non-separable problem into a separable one: the input signals passing through the first-layer connections are mapped onto the space of A-elements. This random matrix is what provides the transformation into a separable problem.

The second layer, in both the Rosenblatt perceptron and the MLP, then only has to separate the linearly separable problem obtained after the transformation.

I hope it is now clear why the first layer is needed: it transforms a linearly inseparable representation of the problem into a separable one. The same thing happens in the MLP; the choice of the backpropagation algorithm does not change this.

But whereas the Rosenblatt perceptron relies on randomness, the MLP creates this randomness through its training. That is the whole difference.
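To make the two-layer scheme described above more tangible, here is a minimal Python sketch. It is an illustration under my own simplifying assumptions, not Rosenblatt's exact formulation: the function names (make_a_layer, a_response, r_response, train), the 64 A-elements, the connections drawn only from +1 and -1, the A-element threshold of 1, and the fixed seed are all hypothetical choices made for the example. The first layer is random and never trained; error correction adjusts only the A-to-R weights.

```python
import random

def make_a_layer(n_inputs, n_a, rng):
    """Random, fixed S->A connections: +1 is excitatory, -1 is inhibitory."""
    return [[rng.choice((-1, 1)) for _ in range(n_inputs)] for _ in range(n_a)]

def a_response(s_to_a, x, theta=1):
    """An A-element fires (1) when its summed input reaches the threshold."""
    return [1 if sum(c * xi for c, xi in zip(row, x)) >= theta else 0
            for row in s_to_a]

def r_response(weights, a):
    """The R-element fires when the weighted sum of active A-elements is positive."""
    return 1 if sum(w * ai for w, ai in zip(weights, a)) > 0 else 0

def train(s_to_a, samples, max_epochs=100):
    """Error correction on the A->R weights only; S->A is never modified."""
    weights = [0] * len(s_to_a)
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            a = a_response(s_to_a, x)
            delta = target - r_response(weights, a)  # -1, 0 or +1
            if delta != 0:
                errors += 1
                # Adjust only the weights of A-elements that were active.
                weights = [w + delta * ai for w, ai in zip(weights, a)]
        if errors == 0:  # a full pass without mistakes: training is done
            break
    return weights

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

rng = random.Random(0)  # fixed seed, used only to make the run repeatable
s_to_a = make_a_layer(n_inputs=2, n_a=64, rng=rng)
weights = train(s_to_a, XOR)
predictions = [r_response(weights, a_response(s_to_a, x)) for x, _ in XOR]
print(predictions)
```

In this sketch XOR becomes separable in the space of A-elements as soon as the random layer contains at least one element that responds only to (1, 0) and one that responds only to (0, 1); with 64 random elements that is all but guaranteed, which is why the A-layer is made wider than the input.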

There are a number of other differences and consequences, but this article is not for beginners; it is for those who already know at least a little about the subject. Here I only wanted to note that beginners should study the Rosenblatt perceptron from the originals.

But what was forgotten?

Quite undeservedly, Rosenblatt's name is now mentioned mostly in historical reviews. It should be noted that Rosenblatt did not develop just one kind of artificial neural network. He developed a complete classification of all kinds of neural networks, and ANY current ANN falls under the general name "perceptron". Rosenblatt also describes multilayer perceptrons, which in his terminology begin with two inner layers, as well as recurrent perceptrons and many other subtypes. Moreover, Rosenblatt worked out their characteristics more carefully than is done for the networks being developed today. That is why any newly developed ANN should first be compared with the corresponding perceptron from Rosenblatt's classification; without such a comparison, the effectiveness of the new ANN remains completely unclear.

P.S. I have often been met with skepticism when saying this. But if you do not believe me, read the article: Kussul E., Baidyk T., Kasatkina L., Lukovich V., Rosenblatt Perceptrons for Handwritten Digit Recognition, 2001.

In conclusion, here is a link where I will help you study the Rosenblatt perceptron from the originals rather than from myths: Here we study the possibilities of the Rosenblatt perceptron

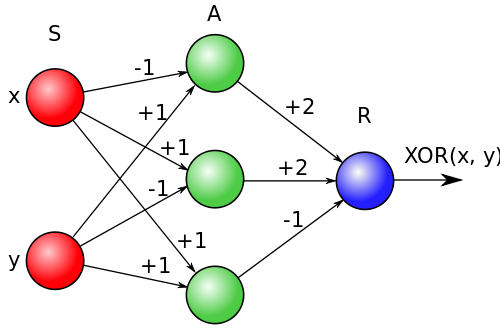

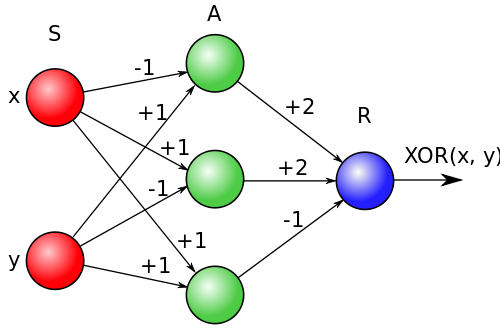

upd. Dedicated to those who are still held captive by misconceptions: solving the XOR problem with the Rosenblatt perceptron

upd2. Thank you all. Teaching those who do not know the originals and do not read them was not part of my aim here. If you want a proper conversation, send me a private message. I no longer reply to provocative comments; I recommend picking up the basics and reading them.
