ABBYY Cloud OCR SDK: Public Azure Cloud Recognition API for Windows Azure

Until recently, on the web, our recognition technologies "lived" only on the site www.abbyyonline.com , this service is intended for end users. And now we are ready to announce the launch of a beta version of the web recognition API for developers. Meet ABBYY Cloud OCR SDK , the “cloud brother” of the ABBYY FineReader Engine already familiar to our readers .

Until recently, on the web, our recognition technologies "lived" only on the site www.abbyyonline.com , this service is intended for end users. And now we are ready to announce the launch of a beta version of the web recognition API for developers. Meet ABBYY Cloud OCR SDK , the “cloud brother” of the ABBYY FineReader Engine already familiar to our readers .We have long wanted to release a product that would allow the use of OCR technology from all kinds of "thin" and not so devices and all kinds of operating systems and at the same time it was convenient and inexpensive. We hope we succeeded. ABBYY Cloud OCR SDK offers pay-as-you-go payments, so high-quality recognition features are available with minimal initial investment.

Under the cut, we will talk more about how we worked on it and what we did. So far, the service is in closed beta testing, but we believe that it is already stable enough, and the stage of open beta is getting closer. We would like to invite readers of Habr to become one of the first "external" beta testers of ABBYY Cloud OCR SDK. About how to get access - also under the cut.

The cloud recognition API can be used in many scenarios. For example, include recognition functionality in an application in which it is not core. Or you can make a “lightweight” application for a mobile phone in which the user takes a picture of a document, then this document is sent to the server for recognition, and the result comes back. In this scenario, you can make a program that recognizes business cards on almost all phones.

You can also add recognition to the web application. You can still install FineReader Engine on the server, but if you want to do without it, then the cloud service should help here.

Service API

For the first version, we really wanted the recognition API to be accessible from under any operating system and from any device with Internet access, while remaining as simple as possible. Therefore, we made it in the form of several RESTful requests for creating a task, obtaining status information and links to download results. Each processing request must be authorized, if desired, you can enable ssl and encrypt traffic.

A typical service scenario looks like this. The client program, transmitting images using one or more POST requests, generates a task on the server. After the task is formed, it is necessary to send it for processing, specifying the processing settings. The settings depend on the type of processing being performed.

For example, if a simple recognition of the whole document is made, it is possible (by default) to specify the language of the document and the format in which you want to get the result. Now supported pdf, docx, txt, xml and several others.

You can recognize barcodes (the engine itself finds the barcode in the picture and determines its type), you can recognize hand-written text, which usually fill out questionnaires. We also output a business card recognizer to the API: you send a business card image to the server, and in return you get a vCard with recognized text and all the fields found: name, surname, address, etc.

After each request, the server issues xml, which contains all the information about the task: its identifier, cost, status and approximate time until the end of processing.

Jobs ready for processing are placed in the server queue, from where the next freed handler takes them. The client program learns about changes in the status of tasks through a special request.

After the task is processed, a link appears in the server’s response where you can download the result.

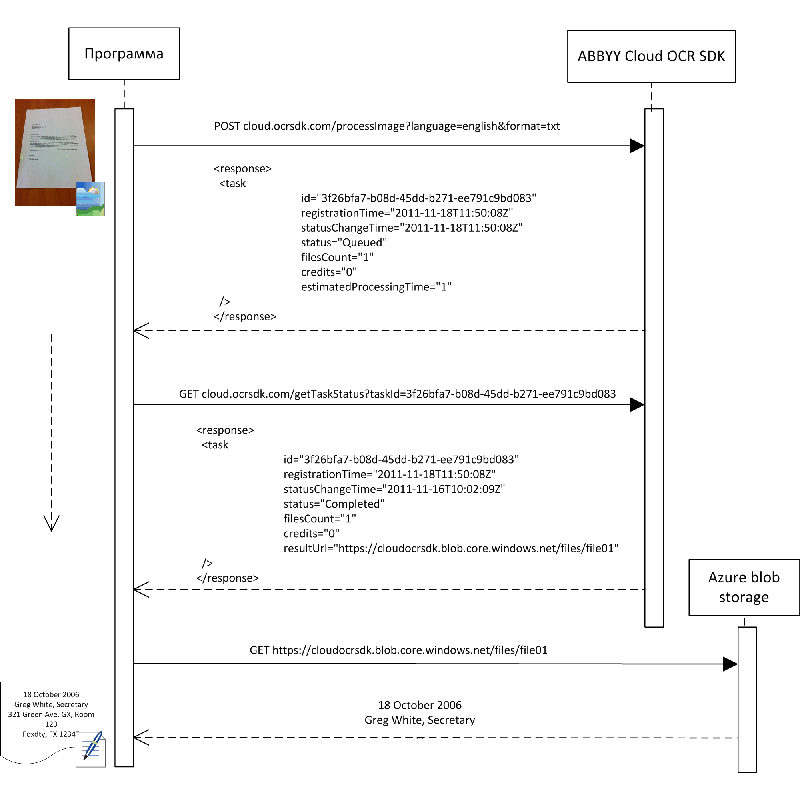

Schematically, the sequence of commands for processing one photo is shown in the figure:

Larger

In the ideal case, only 3 requests are required - in the first request, the image is sent to the server and queued for processing. In the second, it is found out that the task is ready, and a link to download is obtained. At the third request, the result is downloaded.

We plan to further expand the service API. You will see notifications about changes in job statuses through user-provided URLs, advanced job settings, and more. When designing the next version of the API, we also hope to get information from you about features that the product lacks in your use cases.

How everything works inside

The service runs on Windows Azure. It turned out to be quite convenient, there is no need to think about the hardware and operating system under which everything works, and you can focus on the application logic.

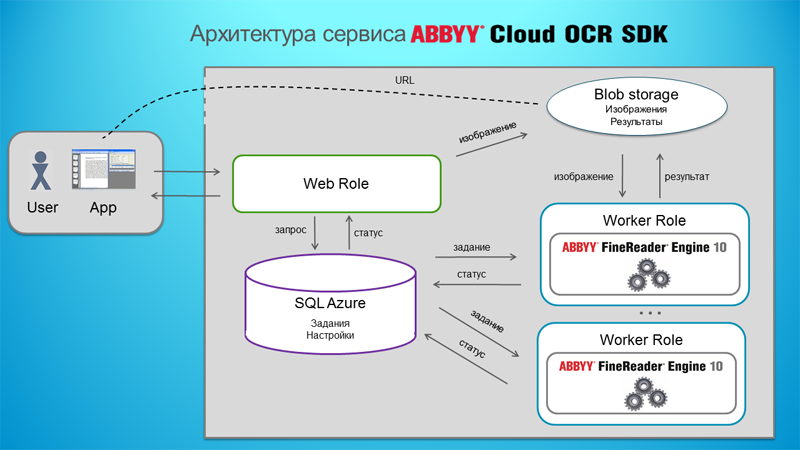

Schematically, the solution architecture looks like this: A

service consists of several parts. User data is stored in Blob storage, settings and tasks are stored in a database. Web roles are responsible for interacting with user applications and the web interface, and working roles are engaged in the actual recognition.

Web roles implement a RESTful service API. They authorize the user, receive tasks, add images to blob storage, job descriptions are placed in the database, and also generate answers.

Several worker roles are responsible for processing jobs. The identifier of the next task is taken from the database, and the files related to this task are taken from the blob. Everything is processed, then the results are placed in blob, and a note is made in the database that the task has been successfully processed.

Then, after the user application is once again interested in the status of its task, a special link to blob is generated for it, by which you can get the result. The link has a limited lifespan and a special checksum, so you can access the results, even knowing the identifier of your task, only through this link.

Processed jobs live on the server for some time, after which they are deleted.

Clients and platforms

For the Cloud OCR SDK API, just write a client in any programming language and for any operating system.

For example, for lovers of pure Linux, we have a script in bash + curl. A full file processing cycle - only 10 lines of code. Hopefully clear enough :-).

For proponents of more traditional solutions, there are client examples in .net, java and python, as well as Android application templates.

All source codes are posted as a project on github. We hope to gradually improve them, listening to your wishes.

Beta testing

We invite all Habr users to take part in the beta testing of the service. If you want to join the testing, go to the address http://ocrsdk.com . First you need to register and fill out an application form for using the ABBYY Cloud OCR SDK. Any user who completes the questionnaire immediately gets the opportunity to recognize 100 pages or 500 small text pieces for free. But if for some reason this was not enough for you - write to us, we will add more :-)

To make it easier for you to start working with the service, we made several examples in popular programming languages and selected a database of images on which you can test for free.

In addition, both during beta testing and after, we apply the principle of not taking money twice for recognizing the same image. If you already recognized the picture once, then you can re-recognize it with other settings, but for free. This is especially useful if you are debugging the logic of your application by driving it in a circle under the debugger. We are sure that such use should not be paid for by the developer. To check for coincidence of images, we verify their checksums, but, alas, we can not check for different photos of the same document for coincidence.

We are very interested in your feedback and suggestions! Write them in the comments on this text or at the technical support address in your personal account on http://ocrsdk.com .

Update:In the questionnaire and in letters we can write in Russian :-).

Vasily Panferov,

Department of Products for Developers