Data Center Cooling: A Song of Ice and Flame

One of the questions our data center customers ask most often is about cooling equipment. Today we will describe in detail how we monitor the temperature in our server rooms.

Modern server hardware is highly sensitive to the temperature conditions in which it operates. At best, an overheating processor slows down as its protection mechanism, so-called throttling, kicks in. At worst, overheating can disable the processor completely.
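To make the idea of throttling concrete, here is a toy model in Python. It is a simplified illustration, not how any particular CPU vendor implements thermal protection: the trip temperature, hysteresis margin, and clock speeds (`TRIP_C`, `HYSTERESIS_C`, `FULL_GHZ`, `THROTTLED_GHZ`) are hypothetical values chosen for the example. The hysteresis band keeps the clock from flapping when the temperature hovers near the trip point.

```python
# Toy model of thermal throttling with hysteresis.
# All values below are hypothetical and chosen only for illustration.
TRIP_C = 95.0        # temperature at which throttling starts
HYSTERESIS_C = 5.0   # how far the temperature must fall before full speed returns
FULL_GHZ = 3.0       # nominal clock
THROTTLED_GHZ = 1.5  # reduced clock while throttled


def next_clock(temp_c: float, throttled: bool) -> tuple[float, bool]:
    """Return (clock_ghz, throttled) for one control step."""
    if temp_c >= TRIP_C:
        throttled = True
    elif temp_c <= TRIP_C - HYSTERESIS_C:
        throttled = False
    # Between the two thresholds the previous state is kept (hysteresis).
    return (THROTTLED_GHZ if throttled else FULL_GHZ), throttled


def simulate(temps_c: list[float]) -> list[float]:
    """Return the clock chosen at each step for a series of temperature readings."""
    throttled = False
    clocks = []
    for temp in temps_c:
        clock, throttled = next_clock(temp, throttled)
        clocks.append(clock)
    return clocks
```

For example, `simulate([80, 96, 92, 89])` returns `[3.0, 1.5, 1.5, 3.0]`: the clock drops at 96 °C, stays low at 92 °C (still inside the hysteresis band), and recovers at 89 °C.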

Overcooling the equipment does it no good either. Every material has its own coefficient of thermal expansion, and low temperatures adversely affect the sensitive components of the equipment.
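A back-of-the-envelope illustration of why the coefficient of thermal expansion matters: linear expansion is ΔL = α·L·ΔT, so two bonded materials with different α change length by different amounts when the temperature drops, which creates mechanical stress at the joint. The coefficients below are approximate room-temperature textbook values for silicon and copper; the 20 mm length and 30 K drop in the example are hypothetical.

```python
# Approximate room-temperature linear expansion coefficients, 1/K.
# Textbook-level values used only for illustration.
ALPHA_PER_K = {
    "silicon": 2.6e-6,
    "copper": 17e-6,
}


def length_change_um(material: str, length_mm: float, delta_t_k: float) -> float:
    """Linear expansion dL = alpha * L * dT, returned in micrometres."""
    return ALPHA_PER_K[material] * length_mm * delta_t_k * 1000.0


def mismatch_um(mat_a: str, mat_b: str, length_mm: float, delta_t_k: float) -> float:
    """How much more material A changes length than material B, in micrometres."""
    return (length_change_um(mat_a, length_mm, delta_t_k)
            - length_change_um(mat_b, length_mm, delta_t_k))
```

`mismatch_um("copper", "silicon", 20, -30)` gives about −8.6 µm: over 20 mm, copper shrinks roughly 8.6 µm more than silicon for a 30 K drop.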

To visualize the distribution of heat and cold and to measure airflow velocity, we use two specialized tools, which we describe in detail below.

Tools we use

Thermal imager

From physics class, we remember that any body with a temperature above −273.15 °C (absolute zero) emits electromagnetic thermal radiation. A thermal imager captures this radiation and turns it into a color image of the temperature distribution, in which each color corresponds to a specific temperature. The palette most commonly used in thermal imagers is the blue-to-red gradient: red indicates high temperatures and blue indicates low ones.
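The color mapping itself is simple to sketch. The function below maps a temperature onto a linear blue-to-red gradient between the minimum and maximum of the displayed range. Real imagers use more elaborate palettes and often let you choose between several, so treat this as an illustration of the principle rather than any vendor's implementation; the function name `temp_to_rgb` and the 18 to 40 °C range in the example are hypothetical.

```python
def temp_to_rgb(temp_c: float, t_min_c: float, t_max_c: float) -> tuple[int, int, int]:
    """Map a temperature onto a linear blue-to-red gradient.

    t_min_c is drawn pure blue, t_max_c pure red; readings outside
    the range are clamped to the nearest end of the scale.
    """
    if t_max_c <= t_min_c:
        raise ValueError("t_max_c must be greater than t_min_c")
    x = (temp_c - t_min_c) / (t_max_c - t_min_c)  # 0.0 at t_min_c, 1.0 at t_max_c
    x = min(max(x, 0.0), 1.0)
    red = round(255 * x)
    blue = round(255 * (1.0 - x))
    return (red, 0, blue)
```

With a displayed range of 18 to 40 °C, a 29 °C point sits halfway along the scale and `temp_to_rgb(29, 18, 40)` returns `(128, 0, 128)`, a purple between the two ends.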

The modern thermal imagers used in our data centers let us not only determine the temperature range in which the equipment operates, but also read the temperature of any given point on an object to within tenths of a degree.
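Many thermal imagers can export radiometric data, that is, a temperature value for every pixel. Assuming such a frame is available as a plain list of rows (the actual export format is vendor-specific, so this layout and the function names are assumptions), the range and spot readings described above come down to a few lines:

```python
Frame = list[list[float]]  # rows of per-pixel temperatures in °C


def frame_stats(frame: Frame) -> dict:
    """Return the minimum, maximum and (row, col) position of the hottest pixel."""
    cells = [(t, r, c) for r, row in enumerate(frame) for c, t in enumerate(row)]
    t_max, r_max, c_max = max(cells)
    t_min = min(cells)[0]
    return {"min": t_min, "max": t_max, "hotspot": (r_max, c_max)}


def spot_temp(frame: Frame, row: int, col: int) -> float:
    """Read a single point, rounded to a tenth of a degree."""
    return round(frame[row][col], 1)
```

For a hypothetical 3×3 frame `[[22.4, 23.1, 22.9], [23.0, 41.7, 24.2], [22.8, 23.5, 23.3]]`, `frame_stats` reports a range of 22.4 to 41.7 °C with the hotspot at row 1, column 1.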

One of the main uses of a thermal imager in a data center is monitoring the temperature of live electrical equipment. Just remember the famous demotivational poster:

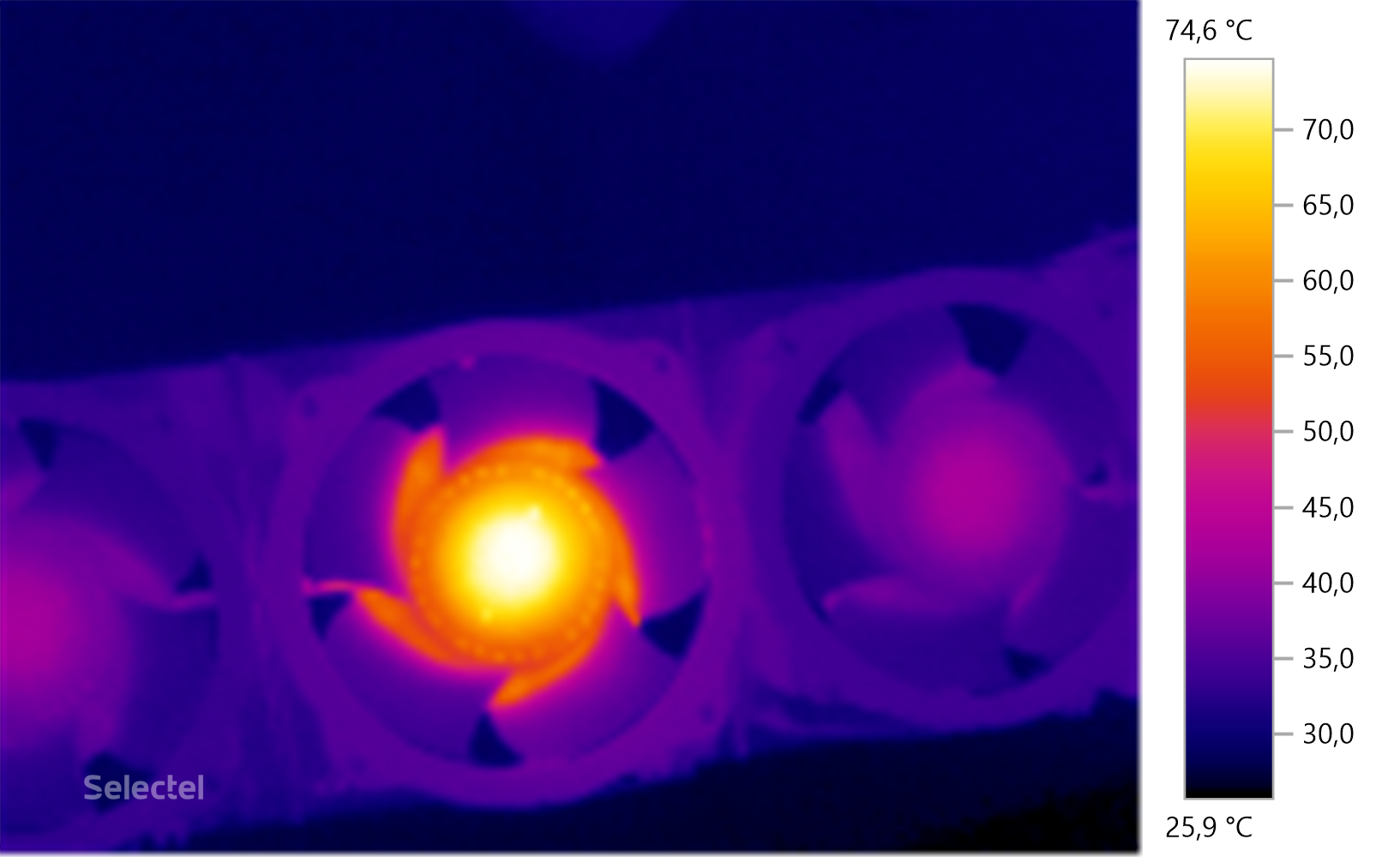

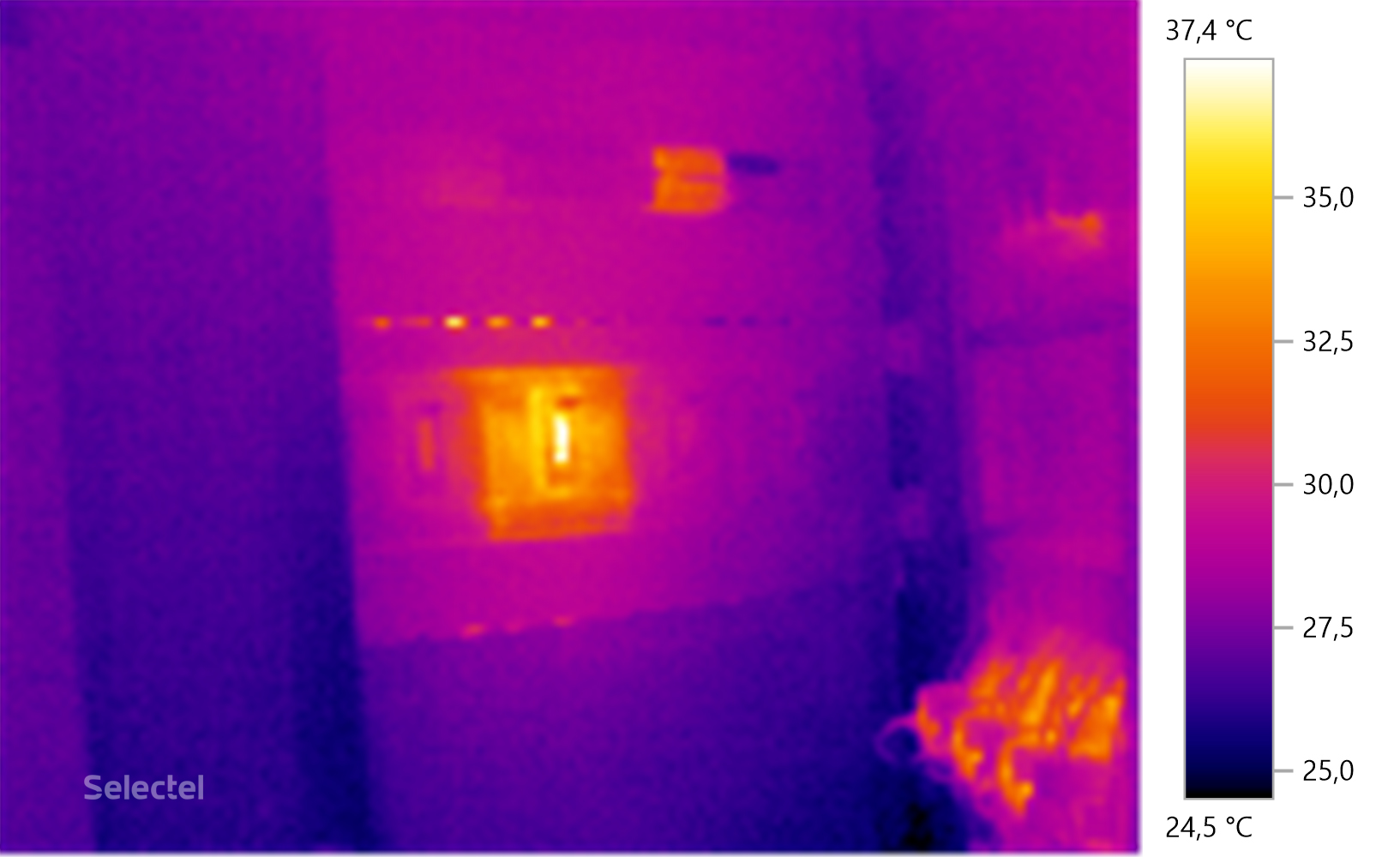

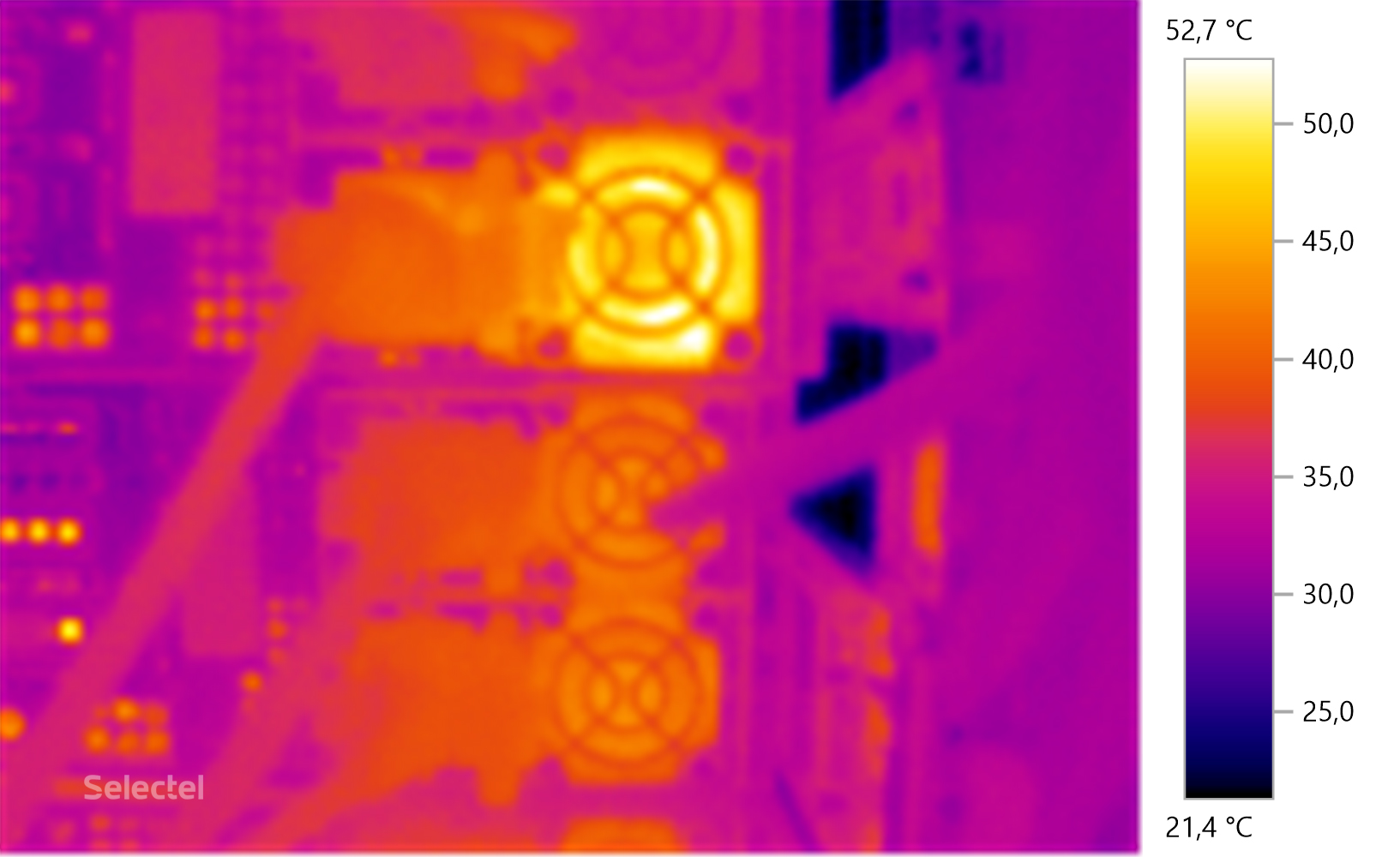

In real-world operation, such situations are unacceptable. A thermal imager makes it easy to identify components of electrical installations that are beginning to heat up abnormally: for example, an uninterruptible power supply (UPS) fan that will soon fail and needs urgent replacement.

Here is a small example of detecting a problem area in a circuit breaker panel. Everything works, but abnormal heating is visible in the block of breakers at the top and in the main incoming breaker in the middle.
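The comparison the eye makes on such a thermogram (one breaker is much hotter than its neighbors) can be expressed as a simple rule: flag any component whose temperature exceeds the median of comparable components by more than a threshold. This is a minimal sketch; the breaker labels, readings, and the 10 K threshold are hypothetical, and in practice you would only compare components carrying a similar load.

```python
from statistics import median


def find_hot_spots(readings_c: dict[str, float], threshold_k: float = 10.0) -> list[str]:
    """Return components running more than threshold_k above the median of the group."""
    baseline = median(readings_c.values())
    return sorted(name for name, temp in readings_c.items() if temp - baseline > threshold_k)
```

The median is used rather than the mean so that one or two overheating breakers do not pull the baseline up and hide themselves. For readings of `{"QF1": 31.0, "QF2": 30.5, "QF3": 47.2, "QF4": 31.4, "QF_main": 44.0}`, the baseline is 31.4 °C and the function returns `["QF3", "QF_main"]`.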

A thermal imager makes it possible to catch a problem at an early stage, which reduces the risk of it affecting the infrastructure. That is why this device is indispensable in a data center.

Anemometer

Now a few words about the anemometer, an instrument for measuring air velocity. Anemometers are most often used in meteorology to measure wind speed and in construction to calculate air volumes in ventilation and air conditioning systems.
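For ventilation calculations, the basic relation is volumetric flow = mean air velocity × cross-sectional area of the opening. A minimal sketch, assuming several readings are taken across the opening and averaged; the function name, the readings, and the 0.5 m × 0.4 m duct in the example are hypothetical:

```python
def volumetric_flow_m3_per_h(velocities_m_s: list[float], area_m2: float) -> float:
    """Volumetric flow = mean air velocity x cross-sectional area, in m^3/h."""
    if not velocities_m_s:
        raise ValueError("at least one velocity reading is required")
    mean_velocity = sum(velocities_m_s) / len(velocities_m_s)
    return mean_velocity * area_m2 * 3600.0  # m^3/s -> m^3/h
```

For readings of 1.8, 2.0 and 2.2 m/s over a 0.2 m² opening, this gives about 1440 m³/h.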

In data centers, vane anemometers are used most often because they are portable and convenient. The probe is a lightweight impeller that starts rotating at the slightest movement of air. Such a device looks like this.

What is an anemometer used for in a data center? Mainly to check the velocity of the airflow generated by the air conditioning systems. Effective cooling of server rooms depends on maintaining pre-calculated airflow velocities, and the anemometer is how we verify them.

Our data centers use anemometers with probes and sensors that have passed metrological verification, which allows us to accurately measure airflow intensity as well as air temperature and humidity.

Suppose a survey of the server room reveals an insufficient supply of cold air. With an anemometer, you can take a control measurement of the airflow and correct the problem before it starts to affect the equipment.
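A control measurement like this amounts to comparing each reading with the pre-calculated target. Below is a minimal sketch that flags perforated floor tiles whose measured velocity falls more than a given fraction below the target; the tile names, the 2.0 m/s target, and the 10% tolerance are hypothetical, not our actual design values.

```python
def low_supply_tiles(measured_m_s: dict[str, float], target_m_s: float,
                     tolerance: float = 0.1) -> list[str]:
    """Return tiles whose velocity is more than `tolerance` (a fraction) below target."""
    floor = target_m_s * (1.0 - tolerance)
    return sorted(tile for tile, v in measured_m_s.items() if v < floor)
```

With a 2.0 m/s target and the default tolerance, anything under 1.8 m/s is flagged: for readings `{"A1": 2.1, "A2": 1.6, "A3": 1.95, "A4": 0.9}` the function returns `["A2", "A4"]`.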

What happens inside the server

Let's talk a little about why it is so important to maintain the right temperature conditions for server equipment. A caveat up front: this section is for readers who have little idea of what goes on inside such equipment.

To begin with, a server is essentially an ordinary but powerful computer, with several important differences:

- all components are designed for round-the-clock operation, 24/7/365;

- cooling is designed so that as much air as possible passes through the chassis;

- processor heatsinks are usually passive, that is, they have no fans of their own;

- the chassis is designed so that any component can be replaced quickly.

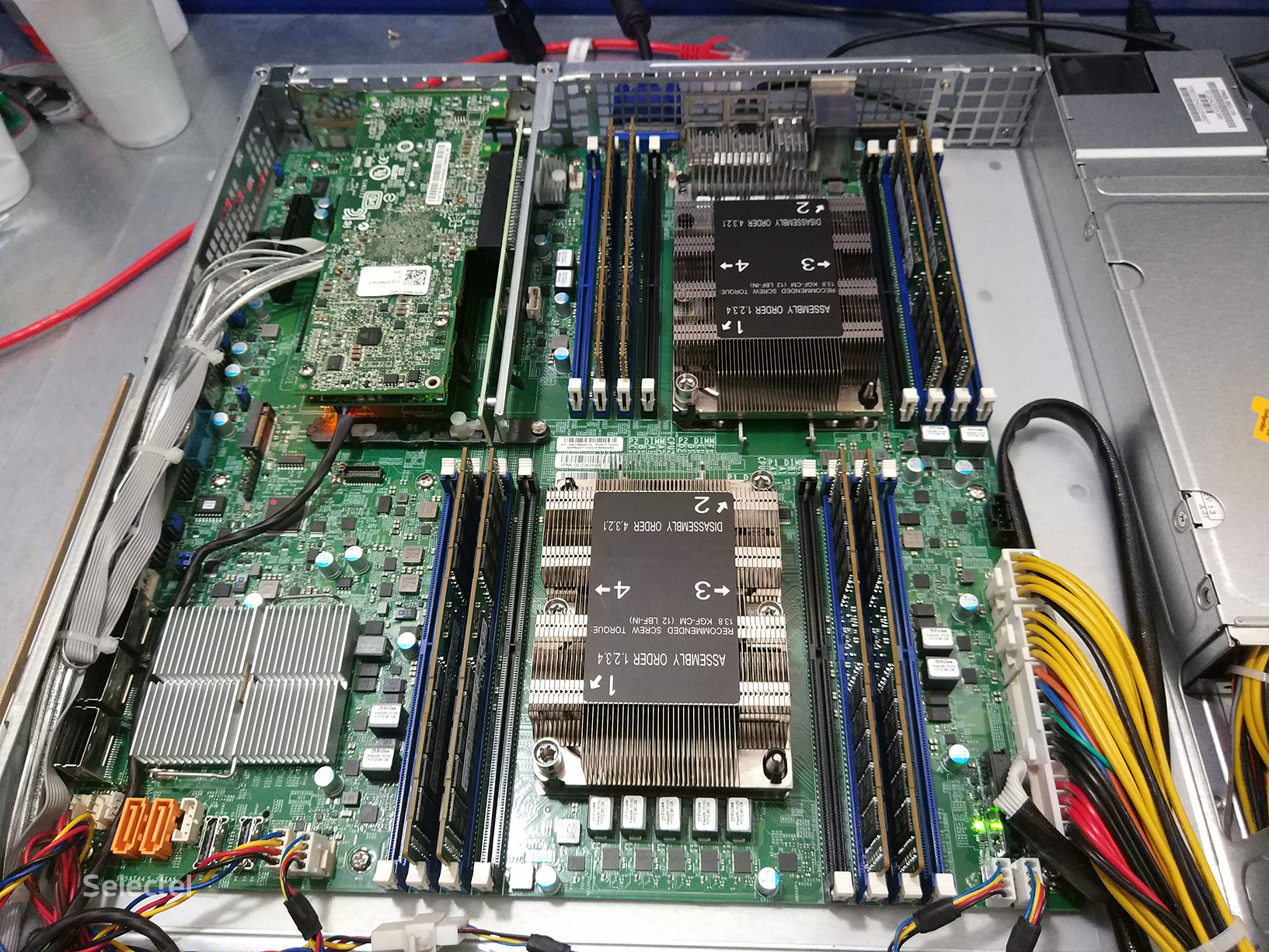

Modern servers generate a huge amount of heat during operation, and each component has its own temperature requirements. A server's stock cooling system is designed from the outset for operation in a data center. Let's see what it looks like from the inside.

CPU heating

The central element of any server is the CPU. A silicon die with billions of tiny transistors gets very hot under load. In modern servers, it is kept reliably cool by passive heatsinks with a powerful stream of air flowing through them.

Drive heating

All drives, whether solid-state drives or classic hard disks, give off a lot of heat. In an SSD, it is the memory chips that heat up; in a hard disk, the heat comes from the spindle motor.

RAM heating

As with SSDs, it is the memory chips that heat up. Some types of RAM come with stock passive heat spreaders to remove excess heat. If you happen to open such a server right after shutting it down, be careful: the memory heat spreaders can easily burn your fingers.

Power supply heating

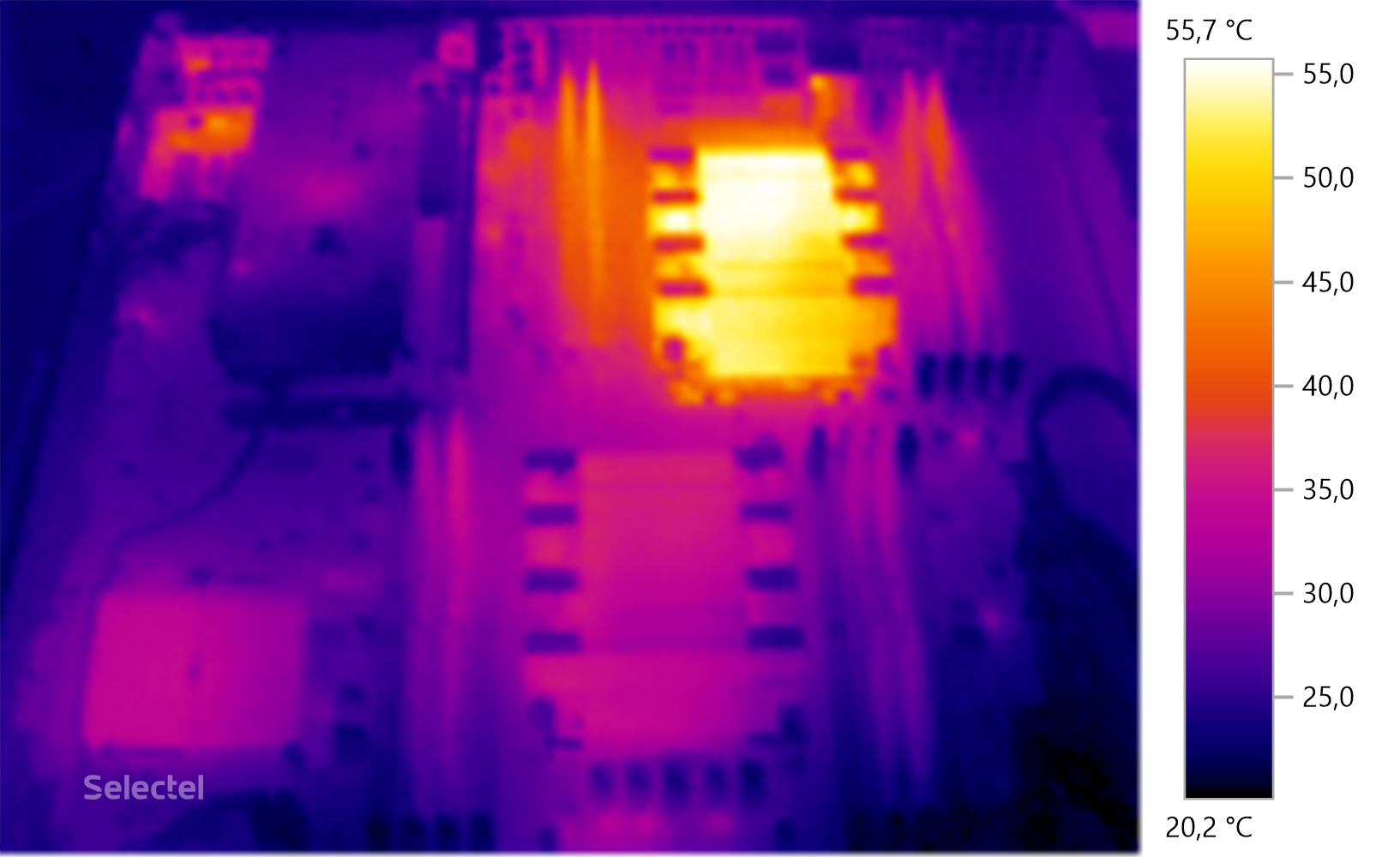

The task of any power supply is to convert standard 220 V, 50 Hz AC mains power into DC at the various voltages that power the server's components. A side effect of this conversion is the release of heat, which is clearly visible in the thermal image.
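How much heat a power supply adds follows directly from its efficiency η: the input power that does not reach the components is dissipated as heat, so P_heat = P_in − P_out = P_out · (1/η − 1). A minimal sketch; the function name, the 450 W load, and the efficiency figures in the example are hypothetical:

```python
def psu_heat_w(output_w: float, efficiency: float) -> float:
    """Heat dissipated by a power supply: P_in - P_out = P_out * (1/efficiency - 1)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return output_w * (1.0 / efficiency - 1.0)
```

At 450 W output and 90% efficiency, the supply dissipates about 50 W; at 94% efficiency that drops to roughly 29 W.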

As the thermal images show, all components heat up to significant temperatures, so let's look at how our data centers remove this heat and keep the equipment effectively cooled.

Our data centers use an airflow layout that forms two separate temperature zones:

- the “cold aisle”, where air from the air conditioners is supplied;

- the “hot aisle”, where the air already heated by the equipment is exhausted.
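Separating the two zones also makes it easy to estimate how much air the cooling system has to move. The heat carried away by air is P = ρ · c_p · V̇ · ΔT, where ΔT is the temperature rise between the cold and hot zones, so the required airflow is V̇ = P / (ρ · c_p · ΔT). The sketch below assumes typical values for air near sea level (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)); the function name, the 10 kW rack load, and the 12 K temperature rise in the example are hypothetical.

```python
AIR_DENSITY_KG_M3 = 1.2     # approximate, air at sea level and ~20 °C
AIR_CP_J_PER_KG_K = 1005.0  # approximate specific heat of air


def required_airflow_m3_per_h(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow needed to carry heat_load_w with a delta_t_k rise: V = P / (rho * cp * dT)."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    m3_per_s = heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)
    return m3_per_s * 3600.0  # m^3/s -> m^3/h
```

For a 10 kW load and a 12 K rise this gives roughly 2,490 m³/h (about 0.69 m³/s). Because the airflow is inversely proportional to ΔT, halving the temperature rise doubles the volume of air the air conditioners have to move for the same heat load.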

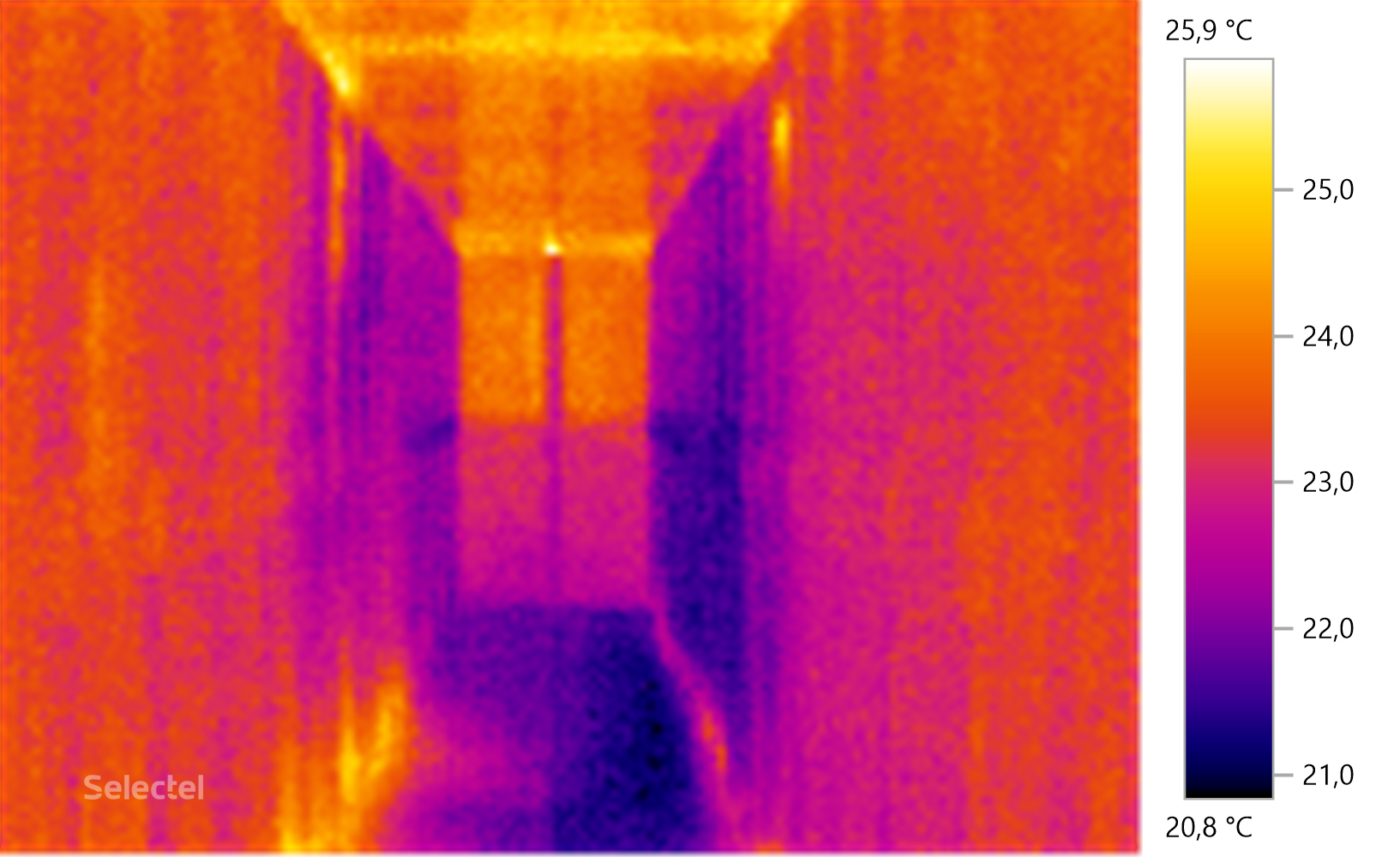

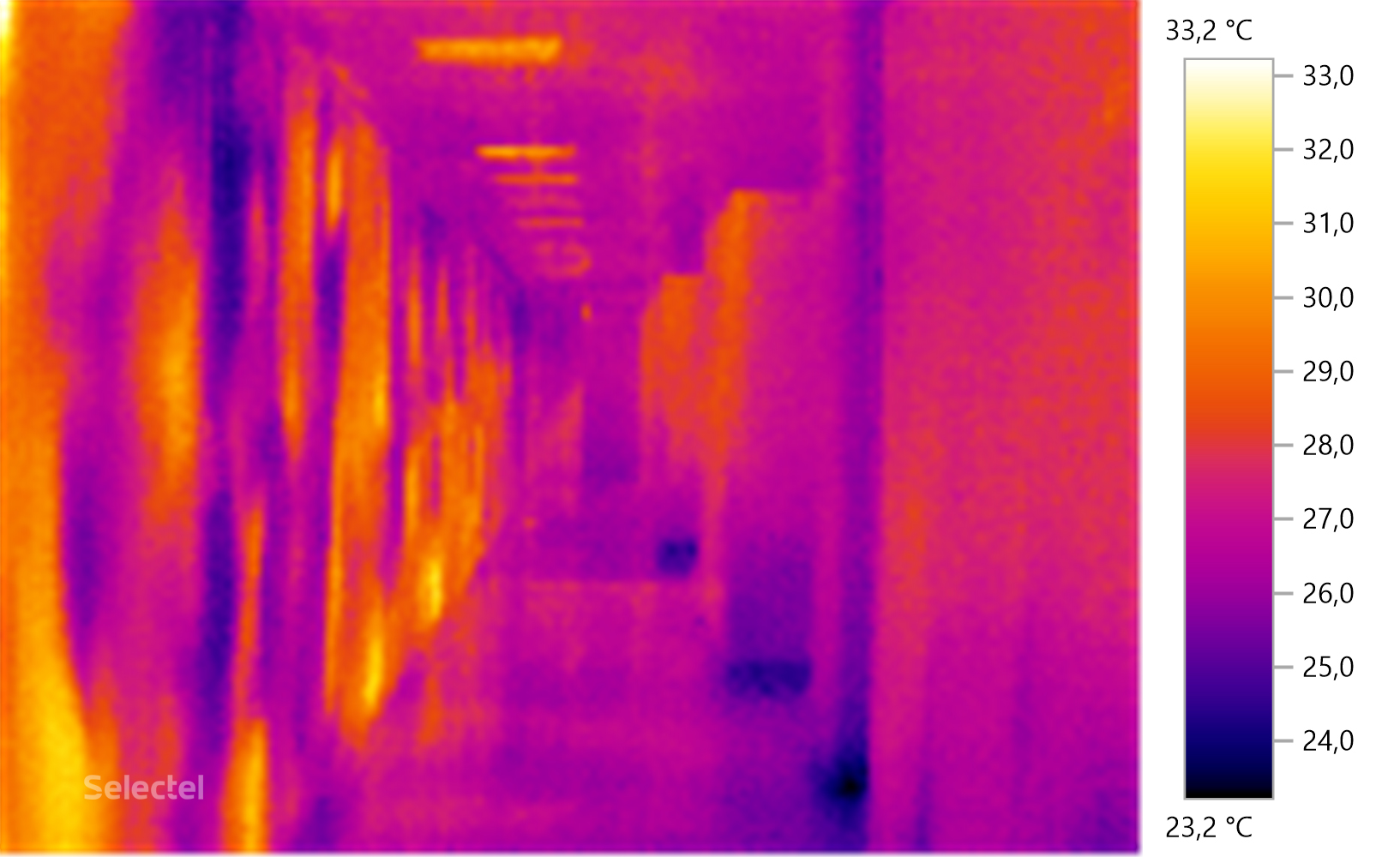

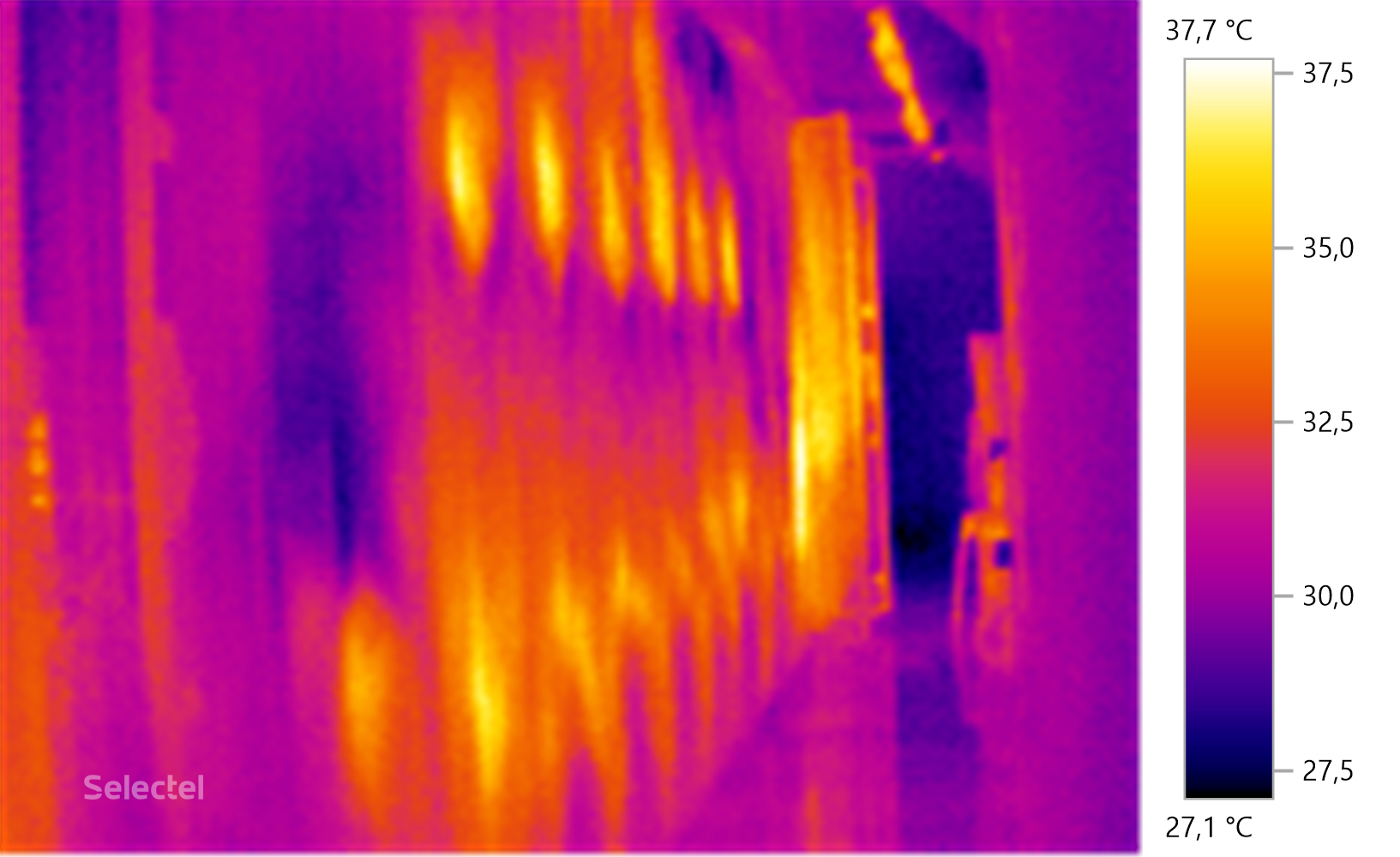

Here is what these zones look like through a thermal imager.

The “cold aisle” of the data center

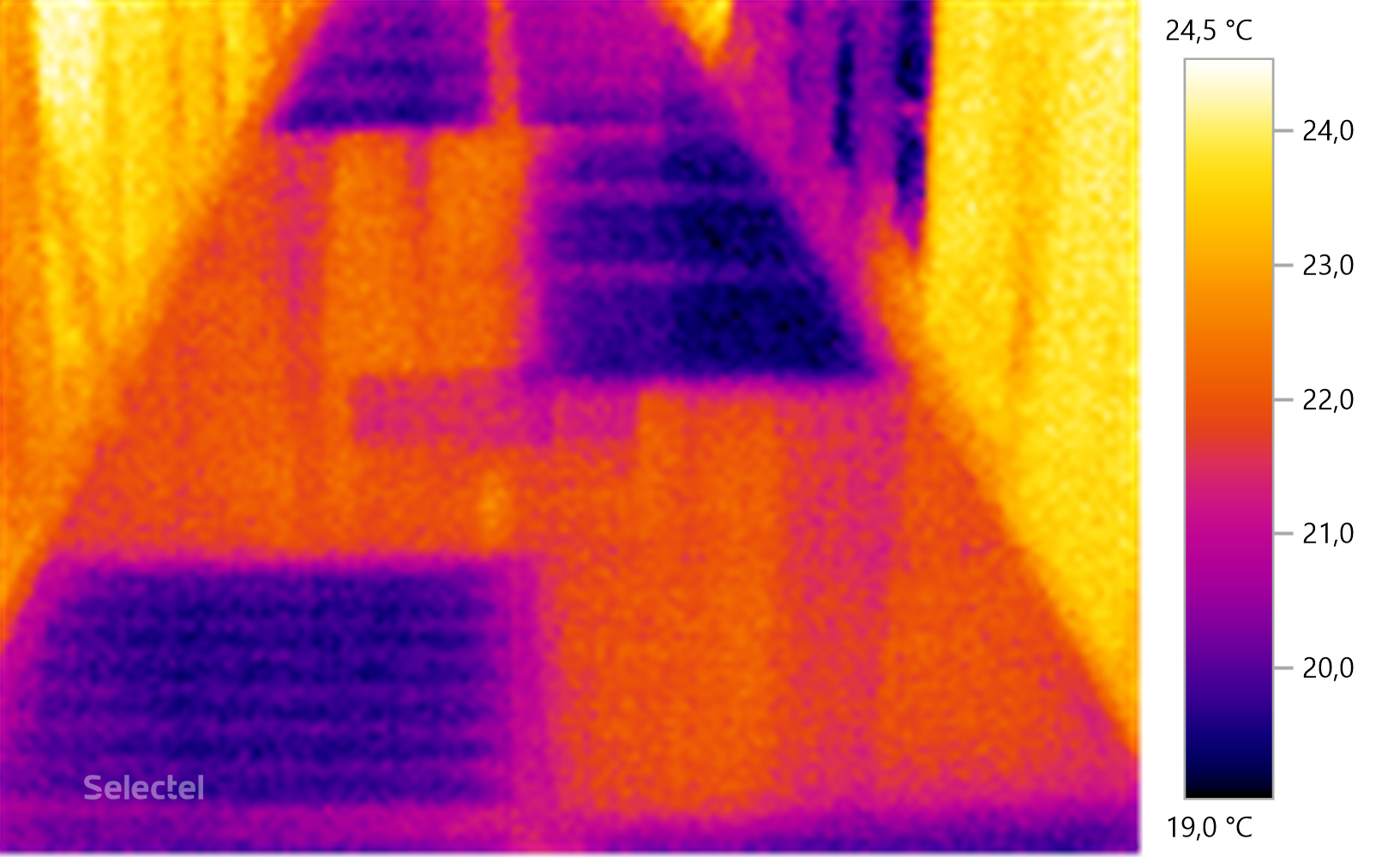

For clarity, here is a picture of the raised floor. Where the tiles are solid, the racks had not yet been commissioned. This was done on purpose; later, all the floor tiles were replaced with perforated ones.

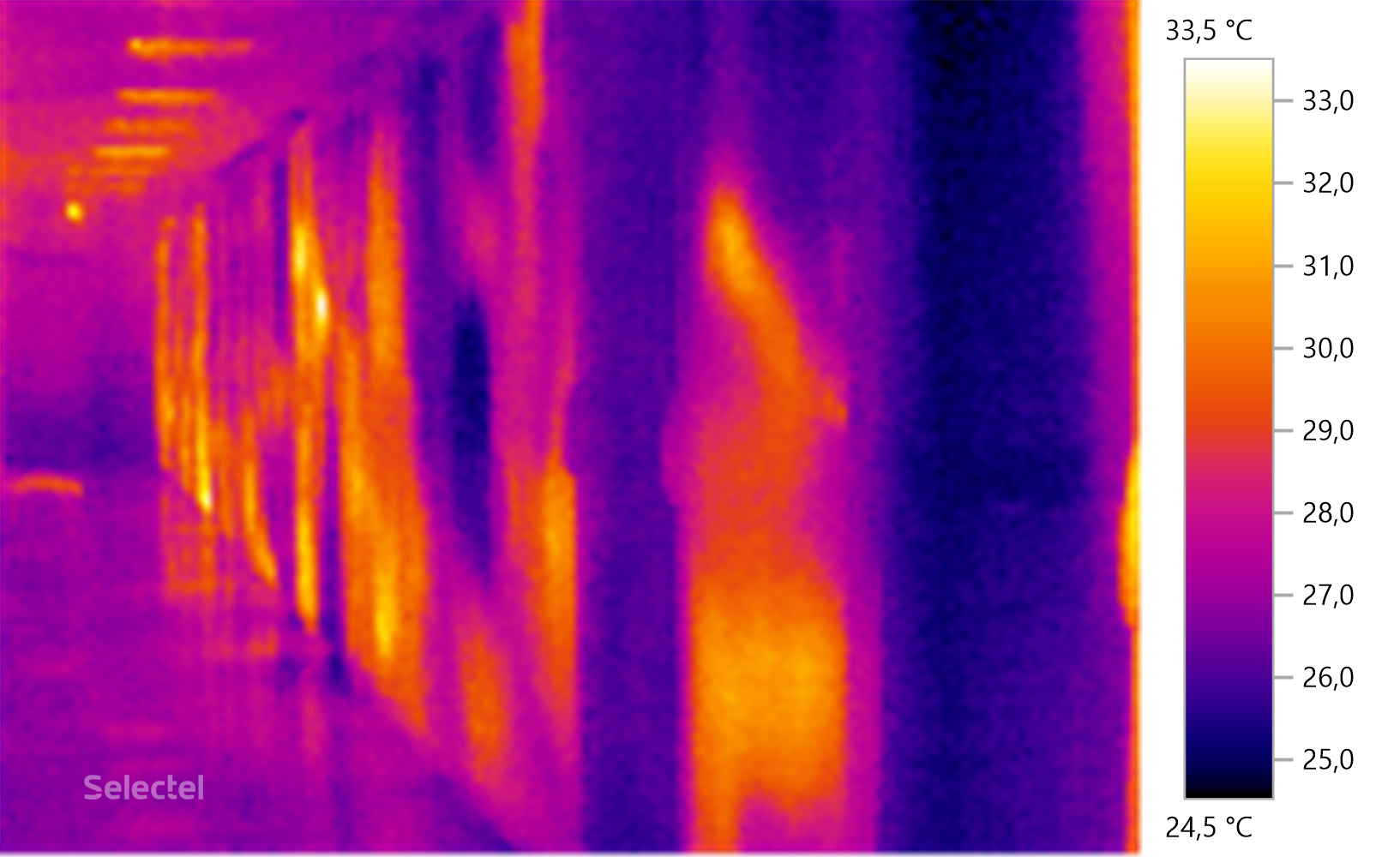

The “hot aisle” of the data center

Our data centers host a large amount of server hardware running under different loads. This is why the boundaries between the thermal zones look the way they do here.

Server racks are located on the left, and on the right you can see the cooling system's precision air conditioners, which absorb all the heat generated. The airflow passes through a heat exchanger containing the coolant, gives off its heat, and then returns to the “cold aisle”. The coolant (a 40% ethylene glycol solution) flows to the refrigeration machine (chiller), is cooled, and returns for a new cycle.
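The same heat balance applies on the coolant side of the heat exchanger: the glycol flow must satisfy Q = ṁ · c_p · ΔT, where ΔT is the coolant's temperature rise across the heat exchanger. The sketch below estimates the coolant flow for a given heat load. The properties of a 40% ethylene glycol solution depend on temperature, so the values used here (c_p ≈ 3.6 kJ/(kg·K), ρ ≈ 1050 kg/m³) are rough assumptions; real calculations should use the manufacturer's data. The function name, the 100 kW load, and the 5 K temperature difference in the example are hypothetical.

```python
GLYCOL_CP_J_PER_KG_K = 3600.0  # rough assumption for a 40% ethylene glycol solution
GLYCOL_DENSITY_KG_M3 = 1050.0  # rough assumption; varies with temperature


def coolant_flow_m3_per_h(heat_load_w: float, delta_t_k: float) -> float:
    """Coolant flow needed to absorb heat_load_w with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_w / (GLYCOL_CP_J_PER_KG_K * delta_t_k)  # from Q = m_dot * cp * dT
    return mass_flow_kg_s / GLYCOL_DENSITY_KG_M3 * 3600.0  # kg/s -> m^3/h
```

With these assumed properties, absorbing 100 kW at a 5 K rise takes about 19 m³/h of coolant.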

When many identical machines are installed in racks following the same layout, the result looks quite striking.

Conclusion

Every company that owns even a small server room faces the task of cooling its server hardware. Solving it requires large capital expenditure on building a cooling system. In addition, such systems need regular maintenance, which means ongoing costs.

In our data centers, air conditioning is handled at a professional level, so there is no need to “reinvent the wheel”: just place your equipment with us and focus on what really matters for your services. Maintaining a comfortable temperature and an uninterrupted power supply is our concern.

Tell us in the comments: what interesting cases have you encountered while operating cooling systems?