How we automated running Selenium tests with Moon and OpenShift

On December 14, at a meetup in St. Petersburg, my colleague Dmitry Markelov and I (Artem Sokovets) talked about the current autotest infrastructure at SberTech. This post is a write-up of our talk.

Selenium is a tool to automate web browser actions. Today, this tool is a standard in the automation of WEB.

There are many clients for various programming languages that support the Selenium Webdriver API. Through the WebDriver API, via the JSON Wire protocol, the interaction with the driver of the selected browser takes place, which, in turn, works with the already real browser, performing the actions we need.

Today, the stable version of the client is Selenium 3.X.

Simon Stewart, by the way, promised to present Selenium 4.0 at the SeleniumConf Japan conference .

In 2008, Philippe Hanrigou announced Selenium GRID to create an auto-test infrastructure with support for various browsers.

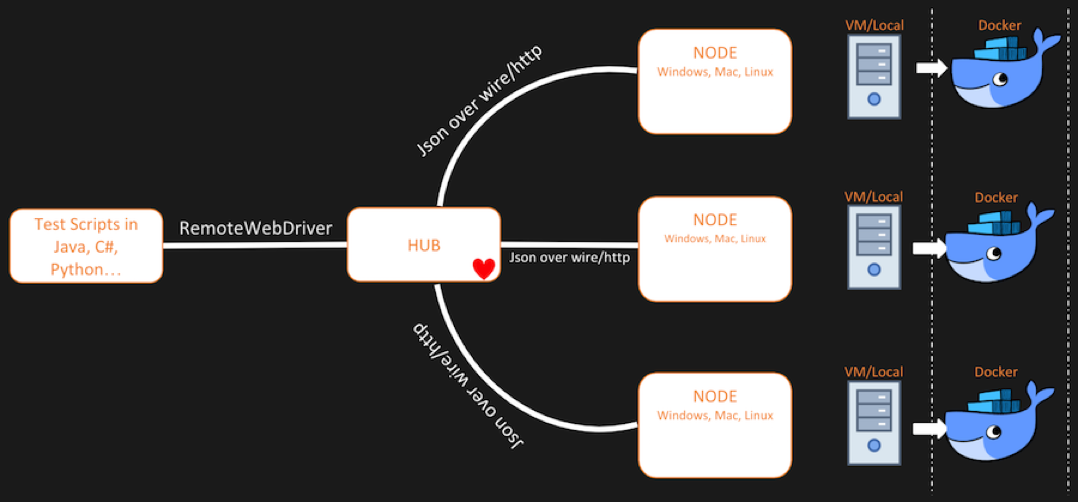

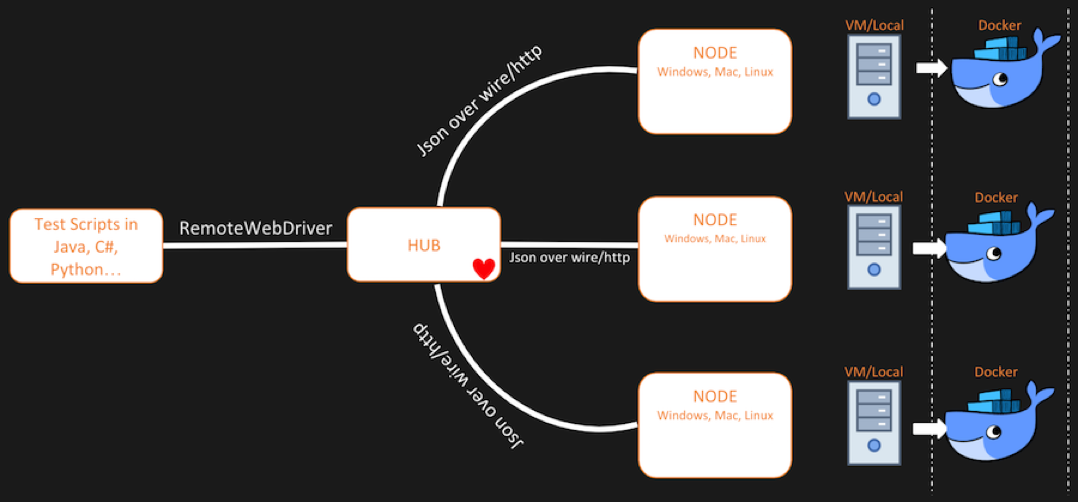

Selenium GRID consists of a hub and nodes (nodes). Node is just a java process. It can be on the same machine with Hub, maybe on another machine, maybe in the Docker container. Hub is essentially a balancer for auto-tests, which determines the node to which it should send the execution of a specific test. Mobile emulators can be connected to it.

Selenium GRID allows you to run tests on different operating systems and different versions of browsers. It also significantly saves time when running a large number of autotests, if, of course, autotests are run in parallel with the use of a maven-surfire-plugin or other parallelization mechanism.

Of course, Selenium GRID has its drawbacks. When using the standard implementation, one has to face the following problems:

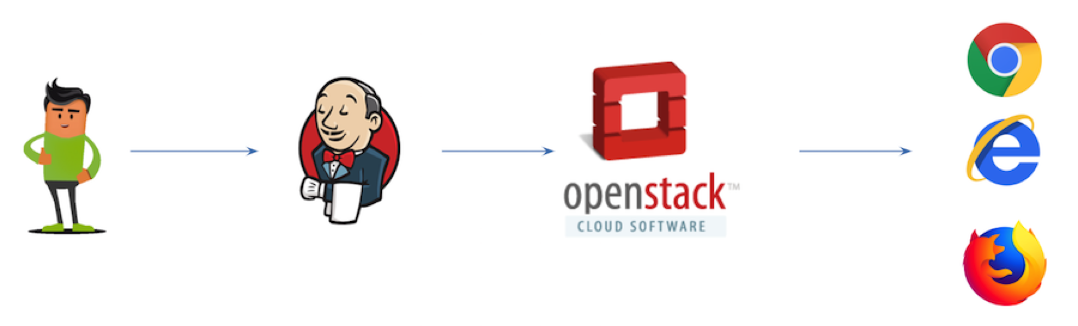

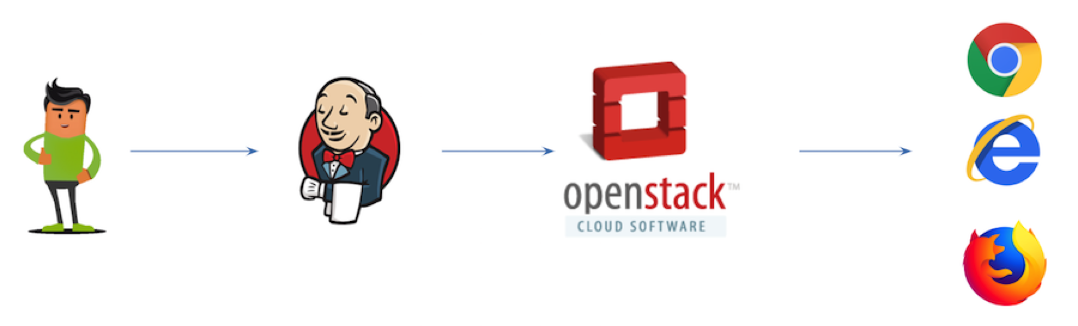

Earlier in SberTech was the following infrastructure for UI autotests. The user ran the build on Jenkins-e, which with the help of a plug-in applied to OpenStack for the allocation of a virtual machine. There was a selection of VM with a special "image" and the necessary browser, and only then on this VM automated tests were performed.

If you wanted to run tests in Chrome or FireFox browsers, then Docker containers were allocated. But when working with IE, it was necessary to raise a “clean” VM, which took up to 5 minutes. Unfortunately, Internet Explorer is a priority browser in our company.

The main problem was that this approach took a lot of time when running autotests in IE. It was necessary to divide tests on suites and start assemblies in parallel in order to achieve at least some reduction in time. We began to think about modernization.

Attending various conferences on automation, development and DevOps (Heisenbug, SQA Days, CodeOne, SeleniumConf and others) we gradually formed a list of requirements for the new infrastructure:

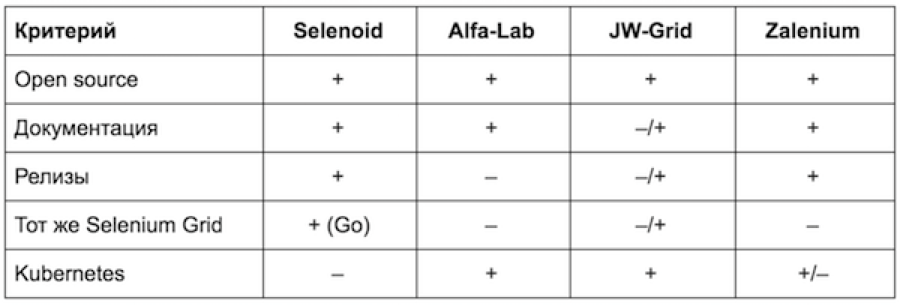

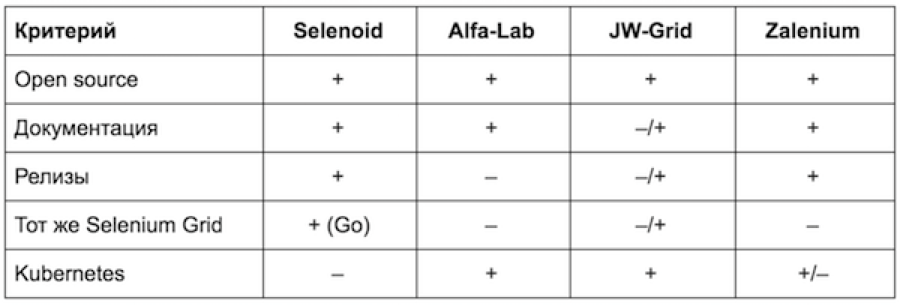

Having defined the objectives, we analyzed the existing solutions on the market. The main things we considered were the products of the Aerokube team (Selenoid and Moon), the solutions of Alfalab (Alpha Lab), JW-Grid (Avito) and Zalenium .

The key disadvantage of Selenoid was the lack of support for OpenShift (a wrapper over Kubernetes). About Alfalab solution there is an article on Habré . It turned out to be the same Selenium Grid. Avito's solution is described in the article . We saw the report at the Heisenbug conference. It also had disadvantages that we did not like. Zalenium is an open source project, also not without problems.

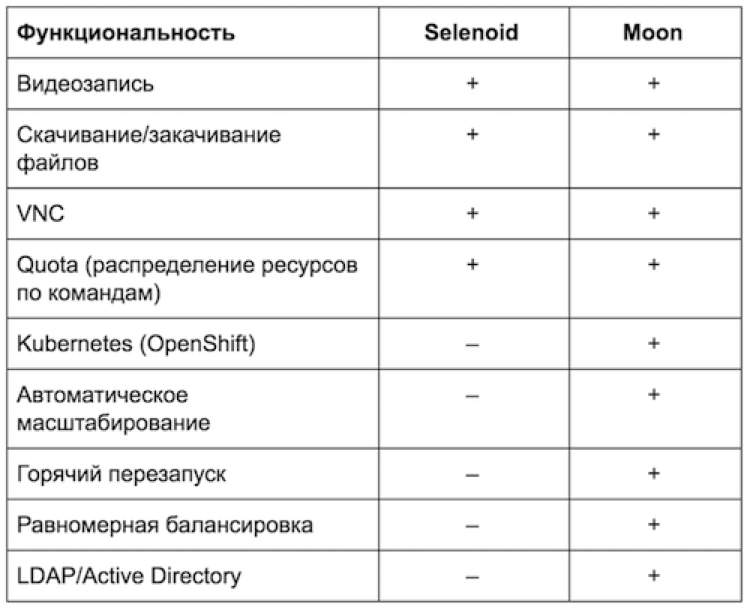

Considered by us the pros and cons of solutions are summarized in the table:

As a result, we chose the product from Aerokube - Selenoid.

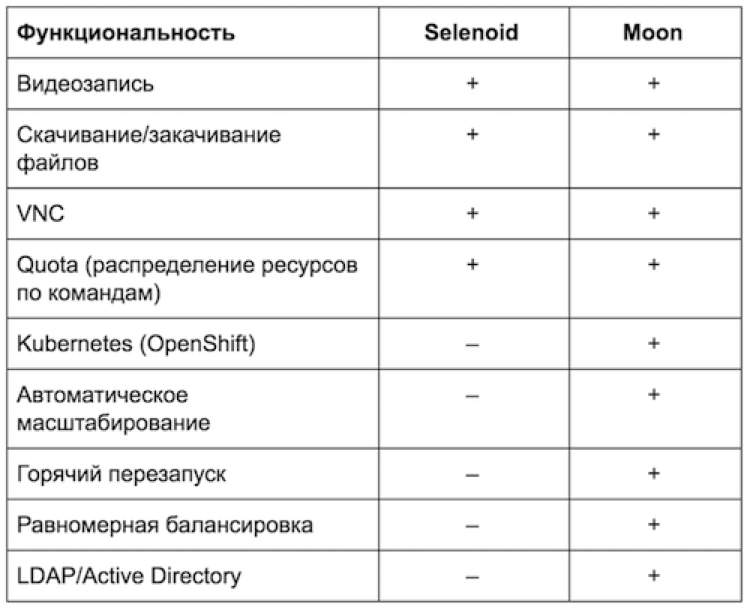

For four months we used Selenoid to automate the Sberbank ecosystem. This is a good decision, but the Bank is moving towards OpenShift, and deploying Selenoid to OpenShift is not a trivial task. The subtlety is that Selenoid in Kubernetes controls the docker of the latter, and Kubernetes does not know anything about it and cannot properly shed other nodes. In addition, Selenoid in Kubernetes requires a GGR (Go Grid Router), in which the load distribution is lame.

Having experimented with Selenoid, we are interested in the Moon paid tool, which is focused on working with Kubernetes and has several advantages compared to the free Selenoid. It has been developing for two years now and allows you to deploy an infrastructure under Selenium testing UI, without spending on DevOps engineers who have secret knowledge about how to deploy Selenoid in Kubernetes. This is an important advantage - try to upgrade a Selenoid cluster without downtime and decreasing capacity while running tests?

Moon was not the only option. For example, one could take the Zalenium mentioned above, but in fact it is the same Selenium Grid. He has a full list of sessions stored inside the hub, and if the hub drops, then the tests end. Against this background, Moon wins due to the fact that he does not have an internal state, therefore the fall of one of his replicas is generally imperceptible. Moon has all “gracefully” - it can be restarted fearlessly, without waiting for the session to end.

Zalenium has other limitations. For example, does not support Quota. You can not put two copies of it for the load balancer, because he does not know how to distribute his state between two or more "heads". On the whole, he will hardly start on his cluster. Zalenium uses PersistentVolume to store data: logs and recorded video tests, but mostly for disks in the clouds, and not for the more fault-tolerant S3.

The current infrastructure using Moon and OpenShift is as follows: The

user can run tests both locally and using the CI server (in our case, Jenkins, but there may be others). In both cases, we are using RemoteWebDriver to access OpenShift, in which we deploy a service with several replicas of the Moon. Further, the request, in which the required browser is indicated, is processed in the Moon, as a result of which the Kubernetes API initiates the creation of a submission with this browser. Then Moon directly proxies requests to the container, where they pass the tests.

At the end of the run, the session ends, they are deleted, resources are released.

Of course, not without complications. As previously mentioned, the target browser for us is Internet Explorer - most of our applications use ActiveX components. Since we use OpenShift, our Docker containers work on RedHat Enterprise Linux. Thus, the question arises: how to run Internet Explorer in a Docker container when the host machine is on Linux?

The guys from the Moon team shared their decision to launch Internet Explorer and Microsoft Edge.

The disadvantage of this solution is that the Docker container should run in privileged mode. So the initialization of the container with Internet Explorer after launching the test takes 10 seconds, which is 30 times faster than using the previous infrastructure.

In conclusion, we would like to share with you solutions to some of the problems encountered in the process of deploying and configuring the cluster.

The first problem is the distribution of service images. When the moon initiates the creation of the browser, in addition to the container with the browser, we launch additional service containers - logger, defender, video recorder.

It all starts in one poda. And if the images of these containers are not cached on the nodes, they will be received from the Docker-hub. At our stage, everything fell because the internal network was used. Therefore, the guys from Aerokube quickly rendered this setting in the config map. If you also use the internal network, we advise you to populate these images into your registry and specify the path to them in the moon-config configuration file. In the service.json file, add the images section:

The following problem was revealed already at the start of tests. The entire infrastructure was dynamically created, but the test fell after 30 seconds with the following error:

Why did this happen? The fact is that the test via RemoteWebDriver initially addresses the OpenShift routing layer, which is responsible for interacting with the external environment. In the role of this layer, we have Haproxy, which redirects requests for the containers we need. In practice, the test turned to this layer, it was redirected to our container, which was supposed to create a browser. But he could not create it, because the resources were running out. Therefore, the test went to the queue, and the proxy server after 30 seconds dropped it on timeout, since by default it was exactly this time interval that stood on it.

How to solve it? Everything turned out to be quite simple - you just had to redefine the haproxy.router.openshift.io/timeout annotation for the container's router.

The next case is working with an S3 compatible storage. Moon can record what is happening in the container with the browser. Service containers, one of which is a video recorder, are lifted along with the browser on the same node. It records everything that happens in the container and, after the end of the session, sends data to an S3 compatible storage. To send data to such storage, you need to specify in the settings url, attendance passwords, as well as the name of the basket.

It seems simple. We entered the data and started running tests, but there were no files in the storage. After parsing the logs, we realized that the client used to interact with S3 swore at the lack of certificates, since we indicated the address to S3 with https in the url field. The solution to the problem is to specify the unprotected http mode or add your own certificates to the container. The last option is more difficult if you do not know what is in the container and how it all works.

Each container with a browser can be configured independently - all available parameters are in the documentation of Moon. Pay attention to such custom settings as privileged and nodeSelector.

They are needed for this. The container with Internet Explorer, as mentioned above, should be launched only in privileged mode. Work in the required mode is provided by the privileged flag together with the issuance of rights to launch such containers to the service account.

To run on separate nodes, you need to register nodeSelector:

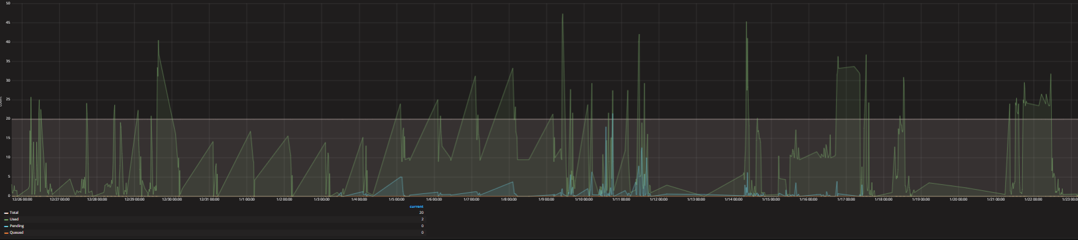

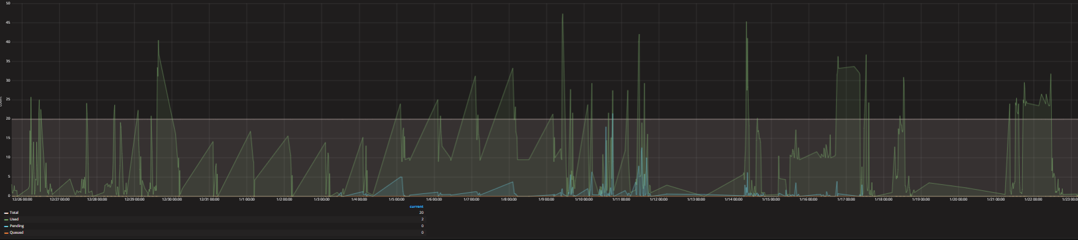

Last tip. Keep track of the number of running sessions. We display all launches in Grafana:

We are not satisfied with everything in the current infrastructure and the solution is not yet complete. In the near future, we plan to stabilize IE at Docker, get a “rich” UI interface in the Moon, and also test Appium for mobile autotests.

What is Selenium

Selenium is a tool for automating web browser actions. Today it is the de facto standard for web UI automation.

Selenium WebDriver API clients exist for many programming languages. Through the WebDriver API, over the JSON Wire Protocol, the client talks to the driver of the selected browser, which in turn controls the real browser and performs the actions we need.
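To make the protocol part concrete, here is a minimal sketch, using only the Java standard library, of the HTTP requests a client sends under the JSON Wire Protocol: `POST /session` with a `desiredCapabilities` object opens a session, per-session commands such as `POST /session/{sessionId}/url` drive the browser, and `DELETE /session/{sessionId}` closes it. The `/wd/hub` prefix is the conventional Grid endpoint path; the class and method names are hypothetical, no HTTP is actually sent, and in practice a client library such as RemoteWebDriver builds these requests for you.

```java
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical helper that only builds JSON Wire Protocol requests; nothing is sent. */
final class JsonWireRequests {

    /** Minimal description of an HTTP request: method, path and JSON body. */
    record Request(String method, String path, String body) {}

    private final String basePath;

    JsonWireRequests(String basePath) {
        this.basePath = basePath;
    }

    /** POST /session with desiredCapabilities asks the server to open a browser session. */
    Request newSession(Map<String, String> desiredCapabilities) {
        StringJoiner caps = new StringJoiner(",", "{", "}");
        // Values are assumed to need no JSON escaping in this sketch.
        desiredCapabilities.forEach((k, v) -> caps.add(quote(k) + ":" + quote(v)));
        return new Request("POST", basePath + "/session",
                "{\"desiredCapabilities\":" + caps + "}");
    }

    /** POST /session/{id}/url navigates that session's browser to a URL. */
    Request navigate(String sessionId, String url) {
        return new Request("POST", basePath + "/session/" + sessionId + "/url",
                "{\"url\":" + quote(url) + "}");
    }

    /** DELETE /session/{id} ends the session and frees the browser. */
    Request quit(String sessionId) {
        return new Request("DELETE", basePath + "/session/" + sessionId, "");
    }

    private static String quote(String s) {
        return "\"" + s + "\"";
    }

    public static void main(String[] args) {
        JsonWireRequests wire = new JsonWireRequests("/wd/hub");
        System.out.println(wire.newSession(Map.of("browserName", "internet explorer")));
        System.out.println(wire.navigate("abc123", "https://example.com"));
        System.out.println(wire.quit("abc123"));
    }
}
```

A real client would send the first body to the hub (or Moon) URL and read the `sessionId` from the response before issuing further commands.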

Today, the stable version of the client is Selenium 3.X.

By the way, Simon Stewart promised to present Selenium 4.0 at SeleniumConf Japan.

Selenium Grid

In 2008, Philippe Hanrigou announced Selenium Grid for building autotest infrastructure that supports multiple browsers.

Selenium Grid consists of a hub and nodes. A node is just a Java process. It can run on the same machine as the hub, on a different machine, or in a Docker container. The hub is essentially a load balancer for autotests: it decides which node should execute a given test. Mobile emulators can also be connected to it.
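The hub's balancing role can be illustrated with a simplified model: each node offers one browser and a number of parallel slots, and the hub sends a new session to the first node with a matching browser and a free slot, or reports that the request has to wait. This is a hypothetical plain-Java sketch, not Selenium Grid's actual matching code, which also compares platform, version and other capabilities.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

/** Simplified, hypothetical model of a Grid hub picking a node for a new session. */
final class GridHub {

    /** A node offering one browser type with a fixed number of parallel slots. */
    static final class Node {
        final String name;
        final String browser;
        final int maxSessions;
        int activeSessions;

        Node(String name, String browser, int maxSessions) {
            this.name = name;
            this.browser = browser;
            this.maxSessions = maxSessions;
        }

        boolean canServe(String requestedBrowser) {
            return browser.equals(requestedBrowser) && activeSessions < maxSessions;
        }
    }

    private final List<Node> nodes = new ArrayList<>();

    void register(Node node) {
        nodes.add(node);
    }

    /** Returns the node that receives the session, or empty if the request must queue. */
    Optional<Node> assign(String requestedBrowser) {
        for (Node node : nodes) {
            if (node.canServe(requestedBrowser)) {
                node.activeSessions++;
                return Optional.of(node);
            }
        }
        return Optional.empty();
    }

    /** Frees the slot when the test finishes. */
    void release(Node node) {
        node.activeSessions--;
    }

    public static void main(String[] args) {
        GridHub hub = new GridHub();
        hub.register(new Node("node-1", "chrome", 1));
        hub.register(new Node("node-2", "firefox", 2));
        System.out.println(hub.assign("chrome").map(n -> n.name).orElse("queued"));
        System.out.println(hub.assign("chrome").map(n -> n.name).orElse("queued"));
    }
}
```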

Selenium Grid lets you run tests on different operating systems and browser versions. It also saves a lot of time when you run a large number of autotests, provided, of course, that they run in parallel using the maven-surefire-plugin or another parallelization mechanism.
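A back-of-the-envelope estimate shows why parallelism matters. Assuming every test takes about the same time and a free slot is always available, wall-clock time is roughly the number of "waves" of tests, ceil(tests / parallel sessions), multiplied by the duration of one test. The sketch below is an idealized model (it ignores session start-up time and uneven test durations), and the suite size in `main` is made up for illustration.

```java
/** Idealized estimate of regression run time with N parallel browser sessions. */
final class ParallelEstimate {

    /**
     * Wall-clock minutes for {@code tests} tests of {@code minutesPerTest} each, run
     * {@code parallelSessions} at a time (equal durations, no start-up cost assumed).
     */
    static long wallClockMinutes(int tests, int parallelSessions, long minutesPerTest) {
        if (parallelSessions <= 0) {
            throw new IllegalArgumentException("parallelSessions must be positive");
        }
        long waves = (tests + parallelSessions - 1) / parallelSessions; // ceiling division
        return waves * minutesPerTest;
    }

    public static void main(String[] args) {
        // Hypothetical suite: 200 tests, 2 minutes each.
        System.out.println("sequential:  " + wallClockMinutes(200, 1, 2) + " min");
        System.out.println("20 sessions: " + wallClockMinutes(200, 20, 2) + " min");
    }
}
```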

Of course, Selenium Grid has its drawbacks. With the standard implementation, you run into the following problems:

- constant restarts of the hub and nodes. If the hub and a node sit idle for a long time, a session created on that node after reconnecting may fail with a timeout, and only a restart brings things back;

- a limit on the number of nodes. It depends heavily on the tests and grid settings, but without a lot of fiddling the grid starts to slow down once a few dozen nodes are connected;

- limited functionality;

- no way to upgrade without stopping the service.

Initial autotest infrastructure at SberTech

Previously, SberTech's UI autotest infrastructure looked like this. A user started a build in Jenkins, which used a plugin to request a virtual machine from OpenStack. A VM was created from a special image with the required browser, and only then were the autotests run on that VM.

To run tests in Chrome or Firefox, Docker containers were allocated instead. For IE, however, a "clean" VM had to be brought up, which took up to 5 minutes. Unfortunately, Internet Explorer is a priority browser in our company.

The main problem was that this approach made IE autotest runs very slow. To get at least some reduction in time, we had to split the tests into suites and start builds in parallel. We began to think about modernizing the infrastructure.

New infrastructure requirements

While attending various conferences on automation, development and DevOps (Heisenbug, SQA Days, CodeOne, SeleniumConf and others), we gradually put together a list of requirements for the new infrastructure:

- Reduce the time it takes to run regression tests.

- Provide a single entry point for autotests to make debugging easier for automation engineers. It is not uncommon for everything to pass locally and then fail across the board as soon as the tests get into the pipeline.

- Provide cross-browser and mobile automation (Appium tests).

- Follow the bank's cloud architecture: Docker containers must be managed in OpenShift.

- Reduce memory and CPU consumption.

Overview of Existing Solutions

Having defined our goals, we analyzed the existing solutions on the market. The main candidates were the Aerokube team's products (Selenoid and Moon), the Alfalab (Alpha Lab) solution, JW-Grid (Avito) and Zalenium.

The key disadvantage of Selenoid was its lack of support for OpenShift (a wrapper over Kubernetes). There is an article on Habr about the Alfalab solution; it turned out to be the same Selenium Grid. Avito's solution is described in an article, and we also saw the talk at the Heisenbug conference; it too had drawbacks we did not like. Zalenium is an open source project, also not without its problems.

The pros and cons of the solutions we considered are summarized in the table:

As a result, we chose Aerokube's product, Selenoid.

Selenoid vs Moon

For four months we used Selenoid to automate testing of the Sberbank ecosystem. It is a good solution, but the bank is moving to OpenShift, and deploying Selenoid to OpenShift is not a trivial task. The subtlety is that Selenoid running in Kubernetes manages the node's Docker directly, while Kubernetes knows nothing about the containers it starts and therefore cannot schedule other workloads onto the nodes properly. In addition, Selenoid in Kubernetes requires GGR (Go Grid Router), whose load distribution is poor.

Having experimented with Selenoid, we became interested in Moon, a paid tool designed for Kubernetes that has several advantages over the free Selenoid. It has been in development for two years and lets you deploy infrastructure for Selenium UI testing without paying DevOps engineers who hold the secret knowledge of how to deploy Selenoid in Kubernetes. This is an important advantage: just try upgrading a Selenoid cluster without downtime or loss of capacity while tests are running.

Moon was not the only option. For example, one could take Zalenium, mentioned above, but in fact it is the same Selenium Grid. It keeps the full list of sessions inside the hub, and if the hub goes down, the tests die. Against this background, Moon wins because it has no internal state, so losing one of its replicas goes essentially unnoticed. Everything in Moon is graceful: it can be restarted without fear and without waiting for sessions to finish.
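The difference between a hub that keeps sessions in memory and stateless replicas can be shown with a toy model. A stateful hub remembers which browser serves each session in its own map, so a restarted hub can no longer route existing sessions. A stateless replica keeps nothing itself and looks the session up in state shared outside the process; here that shared state is, as an assumption for illustration, a plain map standing in for the cluster. This illustrates the principle only and is not Moon's actual implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Toy comparison: in-memory session routing vs. routing through shared cluster state. */
final class SessionRouting {

    /** Grid-style hub: the session-to-browser map lives inside the hub process. */
    static final class StatefulHub {
        private final Map<String, String> sessions = new HashMap<>();

        void create(String sessionId, String browserAddress) {
            sessions.put(sessionId, browserAddress);
        }

        Optional<String> route(String sessionId) {
            return Optional.ofNullable(sessions.get(sessionId));
        }
    }

    /** Stateless replica: every lookup goes to shared state (a stand-in for the cluster). */
    static final class StatelessReplica {
        private final Map<String, String> clusterState;

        StatelessReplica(Map<String, String> clusterState) {
            this.clusterState = clusterState;
        }

        void create(String sessionId, String browserAddress) {
            clusterState.put(sessionId, browserAddress);
        }

        Optional<String> route(String sessionId) {
            return Optional.ofNullable(clusterState.get(sessionId));
        }
    }

    public static void main(String[] args) {
        StatefulHub hub = new StatefulHub();
        hub.create("s1", "10.0.0.5:4444");
        hub = new StatefulHub(); // hub restarted: its in-memory sessions are gone
        System.out.println("hub after restart: " + hub.route("s1"));

        Map<String, String> cluster = new HashMap<>();
        StatelessReplica replicaA = new StatelessReplica(cluster);
        StatelessReplica replicaB = new StatelessReplica(cluster);
        replicaA.create("s1", "10.0.0.5:4444");
        replicaA = null; // replica A goes away; replica B still finds the session
        System.out.println("replica B: " + replicaB.route("s1"));
    }
}
```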

Zalenium has other limitations too. For example, it does not support quotas. You cannot put two instances of it behind a load balancer, because it cannot share its state between two or more "heads". All in all, it is unlikely to start easily on your own cluster. Zalenium stores its data (logs and recorded test videos) on a PersistentVolume, which mostly means cloud disks rather than the more fault-tolerant S3.

Autotest Infrastructure

The current infrastructure using Moon and OpenShift works as follows.

A user can run tests either locally or from a CI server (in our case Jenkins, but others work too). In both cases, we use RemoteWebDriver to connect to OpenShift, where we deploy a service with several Moon replicas. Moon then handles the request, which specifies the required browser, and calls the Kubernetes API to create a pod with that browser. After that, Moon proxies requests directly to the container where the tests run.

At the end of the run, the session ends, the pods are deleted, and resources are released.

Running Internet Explorer

Of course, there were complications. As mentioned earlier, our target browser is Internet Explorer: most of our applications use ActiveX components. Since we use OpenShift, our Docker containers run on Red Hat Enterprise Linux. So the question arises: how do you run Internet Explorer in a Docker container when the host machine runs Linux?

The Moon team shared their solution for launching Internet Explorer and Microsoft Edge.

The downside of this solution is that the Docker container must run in privileged mode. On the other hand, the Internet Explorer container initializes in 10 seconds after a test starts, which is 30 times faster than with the previous infrastructure.
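The 30x figure follows from comparing the worst case of the old setup, where a clean IE VM took up to 5 minutes, with the roughly 10-second container start:

```latex
\frac{5\ \text{min}}{10\ \text{s}} = \frac{300\ \text{s}}{10\ \text{s}} = 30
```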

Troubleshooting

In conclusion, we would like to share how we solved some of the problems we ran into while deploying and configuring the cluster.

The first problem is distributing the service images. When Moon creates a browser, it also launches service containers next to the browser container: a logger, a defender and a video recorder.

All of them start in one pod. If the images for these containers are not cached on the nodes, they are pulled from Docker Hub. In our case everything failed, because we work in an internal network. The Aerokube team quickly moved this setting into the config map. If you also work in an internal network, we recommend pushing these images to your own registry and specifying their paths in the moon-config configuration file. In the service.json file, add the images section:

```json
"images": {
  "videoRecorder": "ufs-selenoid-cluster/moon-video-recorder:latest",
  "defender": "ufs-selenoid-cluster/defender:latest",
  "logger": "ufs-selenoid-cluster/logger:latest"
}
```

The next problem surfaced as soon as we started running tests. The whole infrastructure was created dynamically, but the test failed after 30 seconds with the following error:

```
Driver info: org.openqa.selenium.remote.RemoteWebDriver
org.openqa.selenium.WebDriverException: <html><body><h1>504 Gateway Time-out</h1>
The server didn't respond in time.
```

Why did this happen? Via RemoteWebDriver, the test first reaches the OpenShift routing layer, which handles communication with the outside world. In our case this layer is HAProxy, which forwards requests to the right containers. The test reached this layer and was forwarded to our Moon container, which was supposed to create a browser. But it could not create one, because resources had run out. So the request went into the queue, and after 30 seconds the proxy dropped it on timeout, since that was the timeout configured on it by default.

How did we fix it? It turned out to be quite simple: we just had to override the haproxy.router.openshift.io/timeout annotation on the Moon route.

```shell
oc annotate route moon --overwrite haproxy.router.openshift.io/timeout=10m
```
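The failure mode boils down to a simple check: a new-session request survives the router only if the time it waits in the queue plus the browser start-up time fits within the route timeout. The sketch below models that check with the 30-second default described above; it parses only the `s`, `m` and `h` suffixes (a simplification, not the router's full syntax), and the class name and the 45-second queue wait are hypothetical.

```java
import java.time.Duration;

/** Hypothetical model of why a queued new-session request got a 504 from the router. */
final class RouterTimeout {

    /** Default route timeout described in the post. */
    static final Duration DEFAULT_TIMEOUT = Duration.ofSeconds(30);

    /** Parses values such as "30s", "10m" or "1h"; other suffixes are rejected. */
    static Duration parse(String value) {
        long amount = Long.parseLong(value.substring(0, value.length() - 1));
        char unit = value.charAt(value.length() - 1);
        switch (unit) {
            case 's': return Duration.ofSeconds(amount);
            case 'm': return Duration.ofMinutes(amount);
            case 'h': return Duration.ofHours(amount);
            default: throw new IllegalArgumentException("unsupported unit in " + value);
        }
    }

    /** True if the router gives up before the browser is ready (the 504 case). */
    static boolean gatewayTimesOut(Duration queueWait, Duration browserStartup, Duration routeTimeout) {
        return queueWait.plus(browserStartup).compareTo(routeTimeout) > 0;
    }

    public static void main(String[] args) {
        Duration queueWait = Duration.ofSeconds(45); // hypothetical wait for free resources
        Duration startup = Duration.ofSeconds(10);   // IE container start-up time from the post
        System.out.println("30s default, 504? " + gatewayTimesOut(queueWait, startup, DEFAULT_TIMEOUT));
        System.out.println("10m timeout, 504? " + gatewayTimesOut(queueWait, startup, parse("10m")));
    }
}
```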

The next case is working with S3-compatible storage. Moon can record what happens in the browser container. Service containers, one of which is a video recorder, are started together with the browser on the same node. The recorder captures everything that happens in the container and, after the session ends, uploads the data to S3-compatible storage. To upload data to such storage, you need to specify the URL, the access credentials and the bucket name in the settings.

It seems simple. We entered the data and started running tests, but no files appeared in the storage. After digging through the logs, we realized that the client used to talk to S3 was complaining about missing certificates, because we had specified the S3 address with https in the url field. The solution is either to use plain, unencrypted http or to add your own certificates to the container. The latter is harder if you do not know what is inside the container and how it all works.

And finally ...

Each browser container can be configured independently; all available parameters are listed in the Moon documentation. Pay attention to custom settings such as privileged and nodeSelector.

Here is what they are for. As mentioned above, the Internet Explorer container must run only in privileged mode. This mode is enabled by the privileged flag together with granting the service account the right to launch such containers.

To run browsers on dedicated nodes, specify nodeSelector:

```json
"internet explorer": {
  "default": "latest",
  "versions": {
    "latest": {
      "image": "docker-registry.default.svc:5000/ufs-selenoid-cluster/windows:7",
      "port": "4444",
      "path": "/wd/hub",
      "nodeSelector": {
        "kubernetes.io/hostname": "nirvana5.ca.sbrf.ru"
      },
      "volumes": ["/var/lib/docker/selenoid:/image"],
      "privileged": true
    }
  }
}
```

One last tip: keep track of the number of running sessions. We display all runs in Grafana.
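If you want to feed such a dashboard from your own test harness, one option is a small thread-safe gauge that counts active sessions and remembers the peak. This is a hypothetical client-side sketch: how the values reach Grafana (for example, through your metrics system) is left out, and it does not describe anything Moon itself exports.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical thread-safe gauge of running browser sessions, with the observed peak. */
final class SessionGauge {
    private final AtomicInteger active = new AtomicInteger();
    private final AtomicInteger peak = new AtomicInteger();

    /** Call right after a session is created. */
    void sessionStarted() {
        int now = active.incrementAndGet();
        peak.accumulateAndGet(now, Math::max); // keep the highest value seen so far
    }

    /** Call after the session is closed, e.g. after driver.quit(). */
    void sessionFinished() {
        active.decrementAndGet();
    }

    int active() { return active.get(); }

    int peak() { return peak.get(); }

    public static void main(String[] args) throws InterruptedException {
        SessionGauge gauge = new SessionGauge();
        Thread[] workers = new Thread[8];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                gauge.sessionStarted();
                gauge.sessionFinished();
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        System.out.println("active=" + gauge.active() + ", peak=" + gauge.peak());
    }
}
```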

Where we are heading

We are not satisfied with everything in the current infrastructure, and the solution is not yet complete. In the near future, we plan to stabilize IE in Docker, get a "rich" UI in Moon, and also try Appium for mobile autotests.