Mail.Ru mail (even if you are Chinese)

We want to share our joy: we have successfully transferred our mail to UTF-8. Now you can easily correspond with Arabs, Chinese, Japanese, Greeks, Georgians, write letters in Hebrew and Yiddish, show off your knowledge of the Phoenician script or encrypt the message with notes. And at the same time, be sure that the addressee will receive exactly what they sent him, and not the squares or “crooks”.

Like many major changes, the transition process required serious preparation and had a large "underwater" part - the developers were faced with the task of processing 6 petabytes of letters in more than a hundred million mailboxes. The first experiments began in the fall of 2010, and in the spring of 2011 all the boxes were successfully transferred to the new system. At the same time, the domain of the project “mail” symbolically changed: instead of the main domain win.mail.ru and the historical koi.mail.ru and mac.mail.ru, which issued the site in the appropriate encodings, e.mail.ru is now used, issuing everything pages in UTF-8. All mail is also stored, processed and displayed in UTF-8. This means that in letters you can use any living and dead languages, mathematical and musical symbols, both in the form of plain-text, and with formatting.

To remind you of how things were with international communication recently, we prepared a short excursion into the history of encodings.

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the advent of the computer. One of the very first germs of the information age was the telegraph, and now it takes some effort to remember that the method of communication that arose even before the telephone was originally digital.

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the advent of the computer. One of the very first germs of the information age was the telegraph, and now it takes some effort to remember that the method of communication that arose even before the telephone was originally digital.

Apart from the pure Morse binary code, the first code that turned into a standard was the Bodo code. This 5-bit synchronous code allowed telegraphs to transmit approximately 190 characters per minute (and subsequently up to 760) or 16 bits per second. By the way, those who bought the first modems remember that the speed was listed in bauds - units of measure named after Emil Bodo, the inventor of the code and high-speed telegraph apparatus.

In addition to the name of the unit of speed, the Bodo code, or rather the ITA2 standard, created on its basis, is notable for the fact that it became the source of the first Cyrillic encoding - the MTK-2 telegraph code. To transfer Russian letters, a third register was added to the code (RUS in addition to the LAT and the Digital Number) and tricks began immediately, caused by the need to put our 31-letter (at that time) alphabet in the Procrustean box of the 5-bit standard, since there are six positions of each register were busy with service symbols. For example, the technique of replacing the letter H with the number 4, widely known to amateurs of SMS in Latin letters, was enshrined in the All-Union standard in the mid-20th century. Another interesting point that will emerge already in the Internet era is the correspondence of codes in different registers in sound and not in letter order (i.e.,

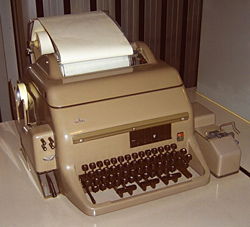

As soon as the computer age began, character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). In the distant 1928, the company's developers brought to the logical finale the technology for recording information on punched cards, which had appeared at the beginning of the 19th century. Gray-beige rectangles with 12 rows and 80 columns are remembered by the entire older generation of researchers. Thirty years later, in the late 50s, the 6-bit BCD code was introduced first, and soon the 8-bit EBCDIC.

As soon as the computer age began, character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). In the distant 1928, the company's developers brought to the logical finale the technology for recording information on punched cards, which had appeared at the beginning of the 19th century. Gray-beige rectangles with 12 rows and 80 columns are remembered by the entire older generation of researchers. Thirty years later, in the late 50s, the 6-bit BCD code was introduced first, and soon the 8-bit EBCDIC.

From this moment two interesting trends arise: the explosive growth of various encoding methods (there were at least six versions of EBCDIC, incompatible with each other) and the competition between the “telegraphic” and “computer” encoding standards. Then, in the early 60s, and more precisely in 1963, at Bell Laboratories, a new 7-bit ASCII encoding was developed to replace the Bodo code. The importance of this event can hardly be overestimated - for America (and other English-speaking countries) this once and for all solved the problem of standardization of data transmission (at that time by teletype).

However, having solved the encoding problem for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write Latin letters. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of the BS symbol - a step back. It allowed you to print one character on top of another and get the character á from a sequence of type “a BS '”. For "national" symbols, "open positions" were reserved - as many as ten pieces.

However, having solved the encoding problem for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write Latin letters. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of the BS symbol - a step back. It allowed you to print one character on top of another and get the character á from a sequence of type “a BS '”. For "national" symbols, "open positions" were reserved - as many as ten pieces.

The fundamental "communication" encoding, which led to "savings on bits" (it is believed that the idea of a fully 8-bit encoding was discussed, but was rejected as generating excessive data stream, making transmission more expensive) turned out to be a salvation for those who needed extra character space . In fact, teletypes allowed punching eight bits at one position, and this same eighth bit was used for parity. It was he who was involved in the development of national encodings for storing national characters, and in the global version ASCII became an 8-bit encoding. And it was on this eighth bit that the strict standardization ended, which made ASCII so convenient.

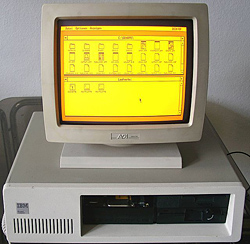

With the proliferation of personal computers, localized operating systems, and then the global network, it became obvious that one could not do one Latin in the encoding and different manufacturers began to create many national encodings. In practice, not so long ago, this meant that when you received a letter or opened an unsuccessful web page, you risked seeing “crackers" instead of text if your browser did not support the desired encoding. And, as often happens, every major manufacturer promoted exactly his own option, ignoring competitors.

Moreover, each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail, the KOI-8 encoding was most common. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the sequence of Russian letters was taken from the telegraph standard MTK-2, where the sound correspondence was preserved, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into transliteration, while maintaining nominal readability, that is, “Hello world!” Without the high bit it will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not random, but used specifically so that the loss of a bit was immediately noticeable. The same operation on the famous phrase,

Moreover, each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail, the KOI-8 encoding was most common. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the sequence of Russian letters was taken from the telegraph standard MTK-2, where the sound correspondence was preserved, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into transliteration, while maintaining nominal readability, that is, “Hello world!” Without the high bit it will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not random, but used specifically so that the loss of a bit was immediately noticeable. The same operation on the famous phrase,

And since we are talking about the legacy of the telegraph, we cannot but mention that perhaps the most important feature that makes email a virtual telegraph apparatus is the transmission of all data in 7-bit form. Yes, all the standards for sending emails in one way or another recode and transmit any data - text not written in Latin letters, images, videos - in the form of pure ASCII text. The most famous transport coding methods are Quoted-printable and Base64. In the first version, Latin ASCII characters are not recoded, which makes messages with a predominance of Latin characters conditionally readable. However, Base64 is more suitable for data transfer, which is used as the main method of transport coding today.

But back to the question of national alphabets. In the early 90s, globalization processes prevailed over the tendency to produce entities and several multinational corporations, including Apple, IBM and Microsoft, joined together to create a universal encoding. The organization was called "Unicode Consortium" and the result of its work was Unicode - a way of representing characters from almost all world languages (including the language of mathematics - symbols for formulas, operations, etc. - and musical notation) within a single system.

The capacity of communication channels has grown significantly since the telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of encoding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, the first version of Unicode used characters of a fixed size - 16 bits.

The capacity of communication channels has grown significantly since the telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of encoding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, the first version of Unicode used characters of a fixed size - 16 bits.

In this form, the standard allowed to encode up to 65,536 characters, this was more than enough to include the most frequently used characters, and for the rarest ones a “custom character area” was provided. And already at the first stage, all the most famous encodings were included in the standard so that it could be used as a means of conversion. Released in October 1991, Unicode version 1.0.0 contained, in addition to the usual Latin characters and their modifications, Cyrillic, Arabic and Greek scripts, Hebrew, Chinese and Japanese characters, Coptic and Tibetan writing. Subsequently, the character set grew, although sometimes languages were deleted, however, only then to return to a new place later (such as Tibetan writing and Korean characters).

However, the developers quite quickly decided to “lay” the possibility of an even more serious expansion and at the same time abandon the fixed size of the symbol. As a result, variable-length characters and various ways of representing code values began to be used. In 2001, with version 3.1, the standard actually crossed the 16-bit threshold and began to count 94,205 codes. In this version, signs were added for recording European and Byzantine music, as well as more than 40 thousand unified Sino-Japanese-Korean characters. Since 2006, cuneiform web pages can be published, from 2009 correspond in Vedic Sanskrit, and from 2010 to type horoscopes without inserting alchemical symbols in the form of pictures. As a result, currently the standard has 93 “languages” and uses 109,449 codes.

Currently, the Unicode standard has several forms of presentation, which differ in both structure and scope, although the latter difference is gradually smoothed out. The most popular form of presentation in the global network and for data transfer has become the UTF-8 encoding. This is an encoding with a variable long character - in theory, from one to six bytes, in practice - up to four. The first 128 positions are encoded in one byte and match ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the next 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew Arabic and Syriac scripts and some others. Almost all of the remaining writing,

Currently, the Unicode standard has several forms of presentation, which differ in both structure and scope, although the latter difference is gradually smoothed out. The most popular form of presentation in the global network and for data transfer has become the UTF-8 encoding. This is an encoding with a variable long character - in theory, from one to six bytes, in practice - up to four. The first 128 positions are encoded in one byte and match ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the next 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew Arabic and Syriac scripts and some others. Almost all of the remaining writing,

More than 15 years took even such a universal standard as Unicode to enter the direct road to network domination. Until now, not all sites support Unicode - according to Google, only in 2010 the share of Unicode pages in the global network approached 50%. However, large providers, whose services are used by a large number of users from different countries, recognized the convenience of the standard. The same technology is now applied in Mail.Ru Mail.

История развития кодировок наглядно показывает, что виртуальное пространство может удивительным образом наследовать границы и преграды реального мира. А внедрение и успех Unicode – насколько успешно можно эти преграды преодолевать. При этом, развитие новой технологии и успешное применение ее компаниями, деятельность которых определяет будущее информационного мира, настолько тесно связаны, что трудно определить, где причина, а где следствие. Зато легко увидеть, как постепенно было задействовано все больше и больше возможностей, заложенных в стандарт еще на стадии разработки. Это позволяет надеяться, что в информационном мире наибольший успех будут иметь проекты, с самого начала нацеленные в будущее, а не на извлечение сиюминутной выгоды или простейшее решение локальных проблем.

С уважением,

Сергей Мартынов

Head of Mail.Ru Mail

Like many major changes, the transition process required serious preparation and had a large "underwater" part - the developers were faced with the task of processing 6 petabytes of letters in more than a hundred million mailboxes. The first experiments began in the fall of 2010, and in the spring of 2011 all the boxes were successfully transferred to the new system. At the same time, the domain of the project “mail” symbolically changed: instead of the main domain win.mail.ru and the historical koi.mail.ru and mac.mail.ru, which issued the site in the appropriate encodings, e.mail.ru is now used, issuing everything pages in UTF-8. All mail is also stored, processed and displayed in UTF-8. This means that in letters you can use any living and dead languages, mathematical and musical symbols, both in the form of plain-text, and with formatting.

To remind you of how things were with international communication recently, we prepared a short excursion into the history of encodings.

In the beginning there was a figure

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the advent of the computer. One of the very first germs of the information age was the telegraph, and now it takes some effort to remember that the method of communication that arose even before the telephone was originally digital.

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the advent of the computer. One of the very first germs of the information age was the telegraph, and now it takes some effort to remember that the method of communication that arose even before the telephone was originally digital. Apart from the pure Morse binary code, the first code that turned into a standard was the Bodo code. This 5-bit synchronous code allowed telegraphs to transmit approximately 190 characters per minute (and subsequently up to 760) or 16 bits per second. By the way, those who bought the first modems remember that the speed was listed in bauds - units of measure named after Emil Bodo, the inventor of the code and high-speed telegraph apparatus.

In addition to the name of the unit of speed, the Bodo code, or rather the ITA2 standard, created on its basis, is notable for the fact that it became the source of the first Cyrillic encoding - the MTK-2 telegraph code. To transfer Russian letters, a third register was added to the code (RUS in addition to the LAT and the Digital Number) and tricks began immediately, caused by the need to put our 31-letter (at that time) alphabet in the Procrustean box of the 5-bit standard, since there are six positions of each register were busy with service symbols. For example, the technique of replacing the letter H with the number 4, widely known to amateurs of SMS in Latin letters, was enshrined in the All-Union standard in the mid-20th century. Another interesting point that will emerge already in the Internet era is the correspondence of codes in different registers in sound and not in letter order (i.e.,

Not all computer is equally useful

As soon as the computer age began, character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). In the distant 1928, the company's developers brought to the logical finale the technology for recording information on punched cards, which had appeared at the beginning of the 19th century. Gray-beige rectangles with 12 rows and 80 columns are remembered by the entire older generation of researchers. Thirty years later, in the late 50s, the 6-bit BCD code was introduced first, and soon the 8-bit EBCDIC.

As soon as the computer age began, character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). In the distant 1928, the company's developers brought to the logical finale the technology for recording information on punched cards, which had appeared at the beginning of the 19th century. Gray-beige rectangles with 12 rows and 80 columns are remembered by the entire older generation of researchers. Thirty years later, in the late 50s, the 6-bit BCD code was introduced first, and soon the 8-bit EBCDIC.From this moment two interesting trends arise: the explosive growth of various encoding methods (there were at least six versions of EBCDIC, incompatible with each other) and the competition between the “telegraphic” and “computer” encoding standards. Then, in the early 60s, and more precisely in 1963, at Bell Laboratories, a new 7-bit ASCII encoding was developed to replace the Bodo code. The importance of this event can hardly be overestimated - for America (and other English-speaking countries) this once and for all solved the problem of standardization of data transmission (at that time by teletype).

However, having solved the encoding problem for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write Latin letters. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of the BS symbol - a step back. It allowed you to print one character on top of another and get the character á from a sequence of type “a BS '”. For "national" symbols, "open positions" were reserved - as many as ten pieces.

However, having solved the encoding problem for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write Latin letters. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of the BS symbol - a step back. It allowed you to print one character on top of another and get the character á from a sequence of type “a BS '”. For "national" symbols, "open positions" were reserved - as many as ten pieces.The fundamental "communication" encoding, which led to "savings on bits" (it is believed that the idea of a fully 8-bit encoding was discussed, but was rejected as generating excessive data stream, making transmission more expensive) turned out to be a salvation for those who needed extra character space . In fact, teletypes allowed punching eight bits at one position, and this same eighth bit was used for parity. It was he who was involved in the development of national encodings for storing national characters, and in the global version ASCII became an 8-bit encoding. And it was on this eighth bit that the strict standardization ended, which made ASCII so convenient.

Babel

With the proliferation of personal computers, localized operating systems, and then the global network, it became obvious that one could not do one Latin in the encoding and different manufacturers began to create many national encodings. In practice, not so long ago, this meant that when you received a letter or opened an unsuccessful web page, you risked seeing “crackers" instead of text if your browser did not support the desired encoding. And, as often happens, every major manufacturer promoted exactly his own option, ignoring competitors.

Moreover, each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail, the KOI-8 encoding was most common. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the sequence of Russian letters was taken from the telegraph standard MTK-2, where the sound correspondence was preserved, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into transliteration, while maintaining nominal readability, that is, “Hello world!” Without the high bit it will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not random, but used specifically so that the loss of a bit was immediately noticeable. The same operation on the famous phrase,

Moreover, each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail, the KOI-8 encoding was most common. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the sequence of Russian letters was taken from the telegraph standard MTK-2, where the sound correspondence was preserved, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into transliteration, while maintaining nominal readability, that is, “Hello world!” Without the high bit it will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not random, but used specifically so that the loss of a bit was immediately noticeable. The same operation on the famous phrase,And since we are talking about the legacy of the telegraph, we cannot but mention that perhaps the most important feature that makes email a virtual telegraph apparatus is the transmission of all data in 7-bit form. Yes, all the standards for sending emails in one way or another recode and transmit any data - text not written in Latin letters, images, videos - in the form of pure ASCII text. The most famous transport coding methods are Quoted-printable and Base64. In the first version, Latin ASCII characters are not recoded, which makes messages with a predominance of Latin characters conditionally readable. However, Base64 is more suitable for data transfer, which is used as the main method of transport coding today.

How many stars in the sky and letters in all languages of the world

But back to the question of national alphabets. In the early 90s, globalization processes prevailed over the tendency to produce entities and several multinational corporations, including Apple, IBM and Microsoft, joined together to create a universal encoding. The organization was called "Unicode Consortium" and the result of its work was Unicode - a way of representing characters from almost all world languages (including the language of mathematics - symbols for formulas, operations, etc. - and musical notation) within a single system.

The capacity of communication channels has grown significantly since the telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of encoding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, the first version of Unicode used characters of a fixed size - 16 bits.

The capacity of communication channels has grown significantly since the telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of encoding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, the first version of Unicode used characters of a fixed size - 16 bits.In this form, the standard allowed to encode up to 65,536 characters, this was more than enough to include the most frequently used characters, and for the rarest ones a “custom character area” was provided. And already at the first stage, all the most famous encodings were included in the standard so that it could be used as a means of conversion. Released in October 1991, Unicode version 1.0.0 contained, in addition to the usual Latin characters and their modifications, Cyrillic, Arabic and Greek scripts, Hebrew, Chinese and Japanese characters, Coptic and Tibetan writing. Subsequently, the character set grew, although sometimes languages were deleted, however, only then to return to a new place later (such as Tibetan writing and Korean characters).

However, the developers quite quickly decided to “lay” the possibility of an even more serious expansion and at the same time abandon the fixed size of the symbol. As a result, variable-length characters and various ways of representing code values began to be used. In 2001, with version 3.1, the standard actually crossed the 16-bit threshold and began to count 94,205 codes. In this version, signs were added for recording European and Byzantine music, as well as more than 40 thousand unified Sino-Japanese-Korean characters. Since 2006, cuneiform web pages can be published, from 2009 correspond in Vedic Sanskrit, and from 2010 to type horoscopes without inserting alchemical symbols in the form of pictures. As a result, currently the standard has 93 “languages” and uses 109,449 codes.

Currently, the Unicode standard has several forms of presentation, which differ in both structure and scope, although the latter difference is gradually smoothed out. The most popular form of presentation in the global network and for data transfer has become the UTF-8 encoding. This is an encoding with a variable long character - in theory, from one to six bytes, in practice - up to four. The first 128 positions are encoded in one byte and match ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the next 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew Arabic and Syriac scripts and some others. Almost all of the remaining writing,

Currently, the Unicode standard has several forms of presentation, which differ in both structure and scope, although the latter difference is gradually smoothed out. The most popular form of presentation in the global network and for data transfer has become the UTF-8 encoding. This is an encoding with a variable long character - in theory, from one to six bytes, in practice - up to four. The first 128 positions are encoded in one byte and match ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the next 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew Arabic and Syriac scripts and some others. Almost all of the remaining writing,Through thorns to world domination

More than 15 years took even such a universal standard as Unicode to enter the direct road to network domination. Until now, not all sites support Unicode - according to Google, only in 2010 the share of Unicode pages in the global network approached 50%. However, large providers, whose services are used by a large number of users from different countries, recognized the convenience of the standard. The same technology is now applied in Mail.Ru Mail.

Instead of an afterword

История развития кодировок наглядно показывает, что виртуальное пространство может удивительным образом наследовать границы и преграды реального мира. А внедрение и успех Unicode – насколько успешно можно эти преграды преодолевать. При этом, развитие новой технологии и успешное применение ее компаниями, деятельность которых определяет будущее информационного мира, настолько тесно связаны, что трудно определить, где причина, а где следствие. Зато легко увидеть, как постепенно было задействовано все больше и больше возможностей, заложенных в стандарт еще на стадии разработки. Это позволяет надеяться, что в информационном мире наибольший успех будут иметь проекты, с самого начала нацеленные в будущее, а не на извлечение сиюминутной выгоды или простейшее решение локальных проблем.

С уважением,

Сергей Мартынов

Head of Mail.Ru Mail