Introduction to Gestures

or interpretation of gestures :)

Let's work through a real-world example of using gestures on Android. The application will be a client for NASA's Astronomy Picture of the Day (APOD) website, where a new astronomy-related picture is posted every day. Gestures will let us move forward and backward between days and bring up a date selection dialog. And to make things more interesting, we will write it for Honeycomb.

The article consists of two parts. The first shows how to create gestures with the Gestures Builder application and export them from the emulator into a separate binary file. The second loads that file into our application and puts it to use.

Gesture creation

The android.gesture package appeared in Android 1.6 (API level 4). It greatly simplifies gesture handling and reduces the amount of code developers have to write. Emulator images for 1.6 and higher also ship with the preinstalled Gestures Builder application, which lets us create a set of gestures and add them to our application as a binary resource. Gestures Builder stores its gestures on the SD card (without a card, it complains that it has nowhere to save them), so we will create an emulator with an SD card image.

Step 1. Create an SD card image (.img) with the mksdcard utility, found in the <path to where android-sdk is installed>\tools folder. The gesture file itself is tiny, but the Android emulator requires a card of at least about 8-9 MB, so we will create one with some headroom and a round size, for example 64 MB:

```shell
mksdcard -l mySdCard 64M gestures.img
```

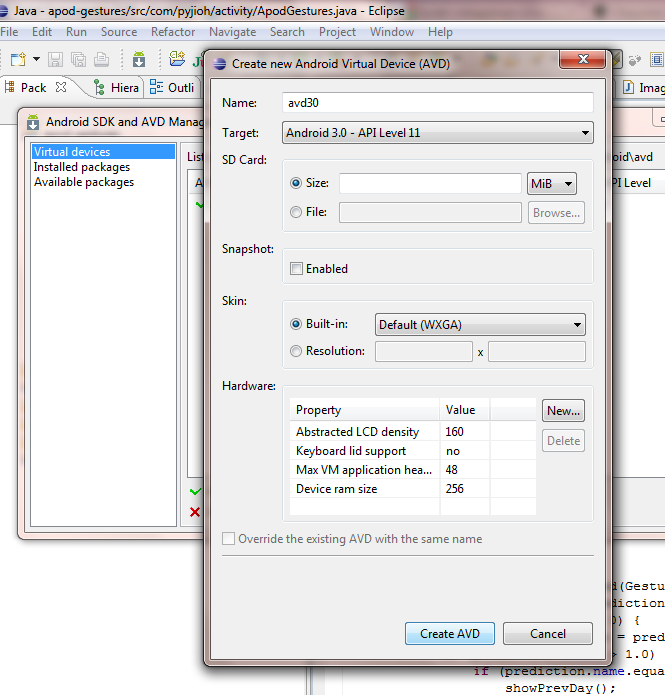

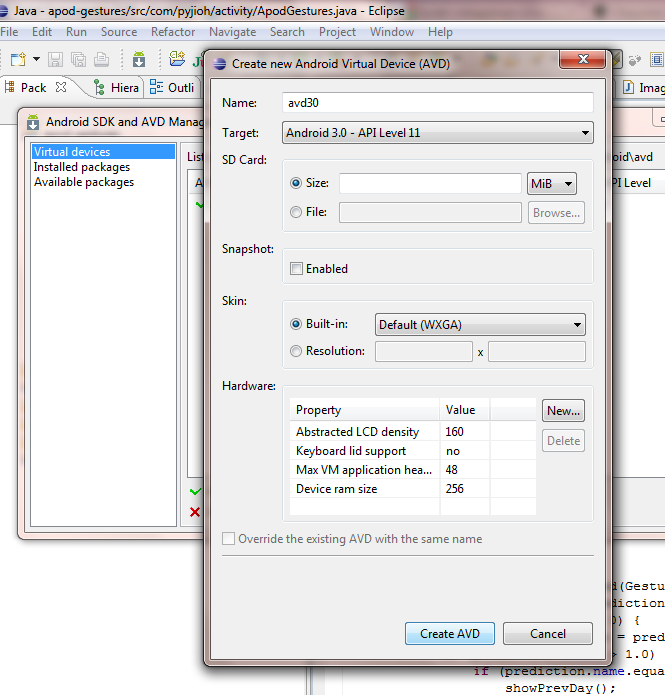

Step 2. Create an emulator and launch it with the gestures.img image. Set up the AVD as shown in the screenshots.

To start the emulator with the image, go to <path where android-sdk is installed>\tools and run:

```shell
emulator -avd avd30 -sdcard gestures.img
```

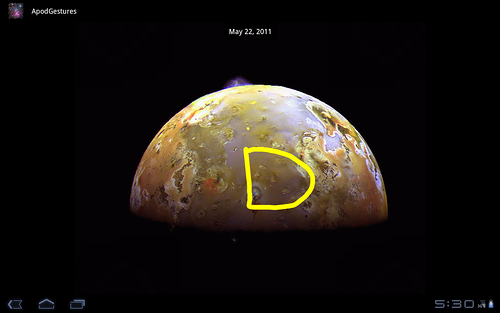

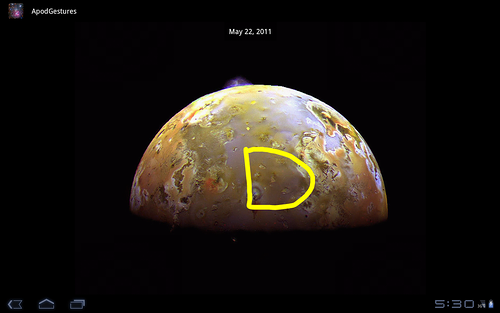

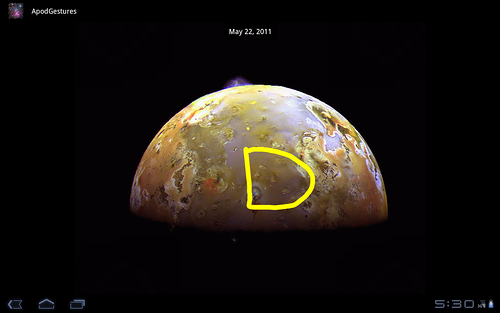

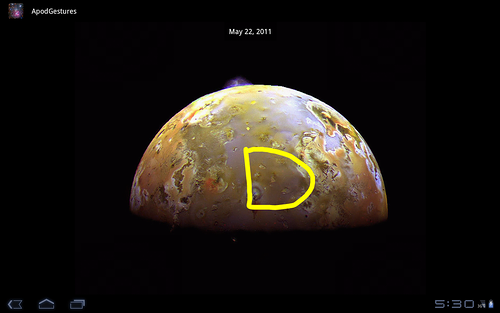

Step 3. With the emulator running, we can create our own gestures. Open Gestures Builder and click Add gesture to open the gesture editor. Holding down the left mouse button, draw a stroke from left to right, enter its name, prev, in the field at the top, and click Done. In the same way, create the next gesture by drawing in the opposite direction. Finally, draw something resembling the Latin letter D and name it date. The screenshots show how this is done.

Step 4. Pull the binary gesture file from the running emulator by running the following in the tools directory:

```shell
adb pull /sdcard/gestures gestures
```

A file named gestures should appear. We will put it into our project at res\raw\gestures.
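The names given in Step 3 (prev, next and date) are the only link between the gesture file and the code: the application identifies a recognized gesture by its name. The plain-Java sketch below shows that lookup outside Android. It assumes the article's Gestures constants class holds these three names; ScoredName (a stand-in for android.gesture.Prediction) and the Action enum are hypothetical. The 1.0 threshold mirrors the one used in onGesturePerformed; unlike the original, which takes the first prediction, the sketch picks the highest score explicitly so it does not depend on input order.

```java
import java.util.List;

// Gesture names as entered in Gestures Builder. The article's own Gestures
// class is assumed to hold the same three constants.
final class Gestures {
    static final String prev = "prev";
    static final String next = "next";
    static final String date = "date";

    private Gestures() {}
}

// Hypothetical stand-in for android.gesture.Prediction, which exposes
// public name and score fields.
final class ScoredName {
    final String name;
    final double score;

    ScoredName(String name, double score) {
        this.name = name;
        this.score = score;
    }
}

// Hypothetical actions matching showPrevDay(), showNextDay()
// and showSelectDateDialog() in the activity.
enum Action { PREV_DAY, NEXT_DAY, SELECT_DATE }

final class GestureDispatch {
    // Best matches scoring at or below this value are ignored.
    static final double MIN_SCORE = 1.0;

    private GestureDispatch() {}

    /** Maps the best-scoring prediction to an action, or returns null. */
    static Action actionFor(List<ScoredName> predictions) {
        ScoredName best = null;
        for (ScoredName p : predictions) {
            if (best == null || p.score > best.score) {
                best = p;
            }
        }
        if (best == null || best.score <= MIN_SCORE) {
            return null; // nothing recognized with enough confidence
        }
        if (best.name.equals(Gestures.prev)) return Action.PREV_DAY;
        if (best.name.equals(Gestures.next)) return Action.NEXT_DAY;
        if (best.name.equals(Gestures.date)) return Action.SELECT_DATE;
        return null; // a gesture the app does not handle
    }
}
```

Keeping the names in one constants class means a typo shows up as a compile error in the code rather than as a gesture that silently never matches.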

Using gestures

Our app will be called ApodGestures. In this second part, I will cover only the gesture-related code. The full source code is available on code.google.com in the Mercurial repository: run hg clone pyJIoH@apod-gestures.googlecode.com/hg apod-gestures in the console, or download the archive from the Downloads tab.

ApodGestures connects to the APOD website, downloads the astronomy picture in a separate thread and displays it in an ImageView. Gestures provide the navigation: forward, backward, and a dialog for selecting any date.
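Forward and backward navigation boils down to date arithmetic plus building the page address for a given day. A minimal sketch, assuming the app addresses APOD pages by the archive's apYYMMDD.html naming (for example https://apod.nasa.gov/apod/ap110315.html); the ApodDay class and its methods are hypothetical. It uses java.time for brevity, which is not available on Honeycomb, where java.util.Calendar would play the same role.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical helper for day-by-day APOD navigation.
final class ApodDay {
    // The first APOD picture was published on 16 June 1995.
    static final LocalDate FIRST = LocalDate.of(1995, 6, 16);

    // Assumed page naming: "ap" + two-digit year, month, day + ".html".
    private static final DateTimeFormatter PAGE_NAME =
            DateTimeFormatter.ofPattern("'ap'yyMMdd'.html'");
    private static final String BASE = "https://apod.nasa.gov/apod/";

    private ApodDay() {}

    /** Previous day, never earlier than the first APOD. */
    static LocalDate prev(LocalDate day) {
        LocalDate d = day.minusDays(1);
        return d.isBefore(FIRST) ? FIRST : d;
    }

    /** Next day, never later than today (there is no picture for the future yet). */
    static LocalDate next(LocalDate day, LocalDate today) {
        LocalDate d = day.plusDays(1);
        return d.isAfter(today) ? today : d;
    }

    /** Page URL for a given day under the assumed naming scheme. */
    static String pageUrl(LocalDate day) {
        return BASE + day.format(PAGE_NAME);
    }
}
```

Passing today as a parameter rather than calling LocalDate.now() inside next() keeps the clamping logic easy to test; the activity would call ApodDay.next(current, LocalDate.now()).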

The app does not really rely on any Honeycomb-specific features, so with a lower target version it should run on phones without problems. Honeycomb mainly imposed a restriction: starting with this version, the rules about what may be done on the main thread were tightened. I ran into a NetworkOnMainThreadException when using HttpClient on the main thread. You can read more about it here.
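The usual way around NetworkOnMainThreadException is to run the blocking download on a worker thread and hand only the result back. In the Android app this would typically be an AsyncTask or a Thread combined with runOnUiThread; the sketch below shows the same idea in plain Java with java.util.concurrent. BackgroundLoader is a hypothetical name, and the download supplier stands in for the HttpClient call. On Android, the callback would additionally have to post the decoded bitmap to the UI thread before touching the ImageView.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical loader that keeps blocking downloads off the calling (UI) thread.
final class BackgroundLoader {
    private final ExecutorService worker = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "apod-loader");
        t.setDaemon(true); // do not keep the JVM alive because of this thread
        return t;
    });

    /**
     * Runs download and then onDone, both on the worker thread, and returns
     * immediately. The returned future completes once onDone has finished.
     */
    CompletableFuture<Void> load(Supplier<byte[]> download, Consumer<byte[]> onDone) {
        return CompletableFuture.runAsync(() -> onDone.accept(download.get()), worker);
    }

    void shutdown() {
        worker.shutdown();
    }
}
```

Running both steps inside one submitted task guarantees they execute on the worker thread; chaining a separate callback could let it run on the caller's thread if the download happened to finish first.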

Once the project is created, put our binary resource at apod-gestures\res\raw\gestures.

In the XML layout, we place the ImageView inside an android.gesture.GestureOverlayView with the id gestures. GestureOverlayView is a transparent overlay that can detect gesture input.

Next, add a field to the ApodGestures activity: `private GestureLibrary mGestureLib;`

In onCreate(), we load our gestures into it from the raw resource:

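```java
import android.gesture.GestureLibraries;
import android.gesture.GestureLibrary;
import android.gesture.GestureOverlayView;
import android.os.Bundle;

@Override
public void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.main);

    // Load the gestures exported from Gestures Builder (res/raw/gestures)
    mGestureLib = GestureLibraries.fromRawResource(this, R.raw.gestures);
    if (!mGestureLib.load()) {
        // Without the gesture library there is nothing to navigate with
        finish();
        return;
    }

    // Listen for completed gestures on the overlay that wraps the ImageView
    GestureOverlayView gestures = (GestureOverlayView) findViewById(R.id.gestures);
    gestures.addOnGesturePerformedListener(this);

    refreshActivity();
}
```

To start tracking gestures, our activity implements GestureOverlayView.OnGesturePerformedListener and its onGesturePerformed method: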

```java
import android.gesture.Gesture;
import android.gesture.GestureOverlayView;
import android.gesture.Prediction;
import java.util.ArrayList;

public void onGesturePerformed(GestureOverlayView overlay, Gesture gesture) {
    // Predictions are ordered by score, best match first
    ArrayList<Prediction> predictions = mGestureLib.recognize(gesture);
    if (predictions.size() > 0) {
        Prediction prediction = predictions.get(0);
        // Ignore weak matches
        if (prediction.score > 1.0) {
            if (prediction.name.equals(Gestures.prev))
                showPrevDay();
            else if (prediction.name.equals(Gestures.next))
                showNextDay();
            else if (prediction.name.equals(Gestures.date))
                showSelectDateDialog();
        }
    }
}
```

As you can see, the name entered in Gestures Builder serves as the gesture identifier. Gestures is our own class of constants holding these hard-coded names. If the bold yellow strokes annoy you, you can set any color for GestureOverlayView in XML through the android:gestureColor and android:uncertainGestureColor attributes. uncertainGestureColor is the color drawn on the view while it is not yet certain that the input is a gesture.

For example, you can make the strokes semi-transparent gray:

```xml
android:gestureColor="#AA000000"
android:uncertainGestureColor="#AA000000"
```

Here is an example of the final result. I hope you enjoyed it.

UPD: An APK file has been added to the Downloads tab. Installation requires Android 3.0 or later.