Measuring the distance to an object and its speed

I have not come across the technique described here among the methods I found for determining the distance to an object in an image. It is neither universal nor complicated. The idea is that the field of view (assume we are using a video camera) is calibrated with a ruler, and the object's coordinate in the image is then compared against the marks on that ruler. In other words, the measurement is made along a single line or axis. We do not need to store a ruler mark for every pixel: to calibrate, the algorithm only needs the length of the ruler in pixels and in meters, plus the pixel coordinate of the ruler's actual midpoint. The obvious limitation is that it only works on flat surfaces.

Besides the method itself, the article covers its implementation in Python with the OpenCV library and the specifics of capturing images from webcams on Linux through the video4linux2 API.

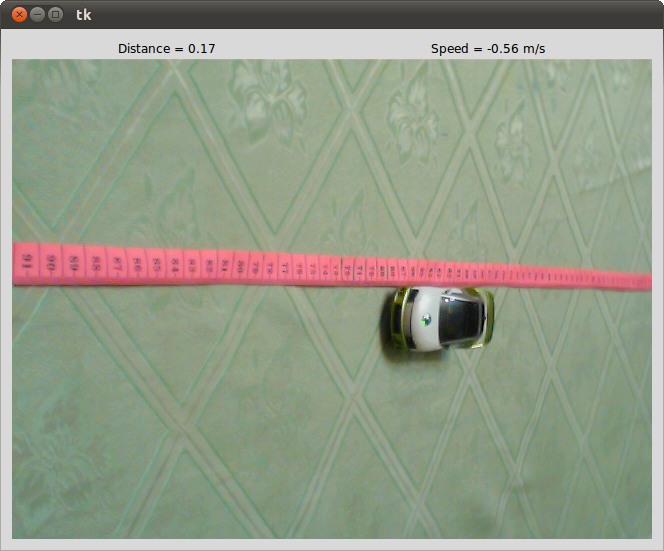

In practice, I needed to measure the distance to a car and its speed on a straight section of road. I took a long tape measure and stretched it along the road, down the middle of the roadway, then set up the camera so that the whole tape just fit into the camera's field of view and was aligned with the X axis of the image. Next, I put something bright at the middle of the tape, fixed the camera so that it could not move, and recorded the pixel coordinate of that midpoint.

All the calculations reduce to a single formula:

l = L * K / (W / x - 1 + K), where

l is the desired distance to the object, m;

L is the length of the "ruler", m;

W is the length of the "ruler" in pixels, usually the same as the width of the image;

x is the coordinate of the object in the image, in pixels;

K = (W - M) / M is the coefficient reflecting the tilt of the camera, where M is the pixel coordinate of the middle of the "ruler".
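The formula translates into code almost word for word. The following is a minimal sketch rather than the code from the archive; the function name and argument names are my own, chosen for illustration.

```python
def distance_to_object(x, ruler_m, ruler_px, middle_px):
    """Distance along the ruler in meters for image coordinate x in pixels.

    ruler_m   -- L, length of the ruler in meters
    ruler_px  -- W, length of the ruler in pixels
    middle_px -- M, pixel coordinate of the ruler's midpoint
    """
    if not 0 < x <= ruler_px:
        raise ValueError("x must lie on the ruler: 0 < x <= ruler_px")
    # K reflects the camera tilt; K == 1 when the midpoint is at W / 2
    k = (ruler_px - middle_px) / float(middle_px)
    return ruler_m * k / (ruler_px / float(x) - 1 + k)


if __name__ == "__main__":
    # A 10 m ruler spanning 640 px whose midpoint appears at pixel 400
    for x in (100, 400, 640):
        print(x, round(distance_to_object(x, 10.0, 640, 400), 3))
```

A quick sanity check on any calibration: the midpoint pixel M should map to half the ruler length, and the last pixel W to the full length.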

In deriving this formula, my school knowledge of trigonometry came in very handy.

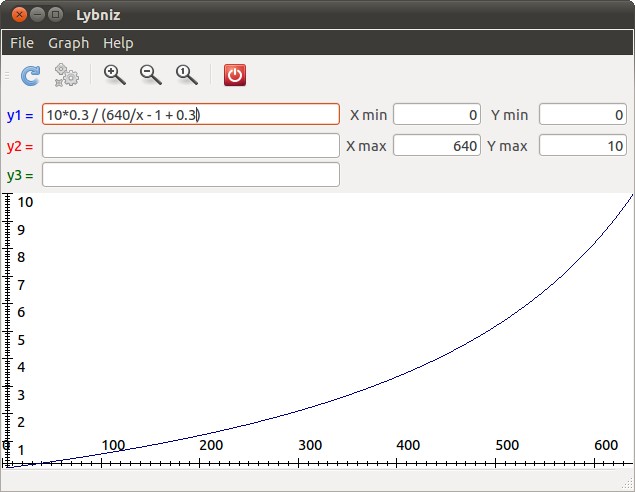

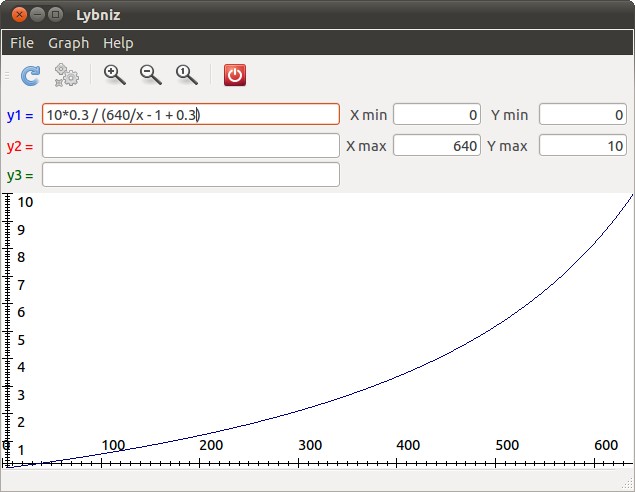

The dependency graph of this function is shown in the figure:

The greater the tilt of the camera, the steeper the curve. In the limiting case, when the camera axis is perpendicular to the plane of the "ruler" (M = W / 2), the graph becomes a straight line.
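The straight-line case can be checked by substituting M = W / 2 into the formula. The second line is an extra check, using only the definitions above: the midpoint pixel always maps to half the ruler, whatever the tilt.

```latex
\[
M = \frac{W}{2}
\;\Rightarrow\;
K = \frac{W - M}{M} = \frac{W - W/2}{W/2} = 1,
\qquad
l = \frac{L \cdot 1}{\dfrac{W}{x} - 1 + 1} = \frac{L\,x}{W}.
\]
\[
x = M
\;\Rightarrow\;
\frac{W}{M} - 1 = \frac{W - M}{M} = K,
\qquad
l = \frac{L\,K}{K + K} = \frac{L}{2}.
\]
```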

But the article would be too short if I stopped there, so I decided to write a demo program that connects to the computer's webcam and follows an object, calculating the distance to it and its speed. I chose Python as the programming language, a language with a great many advantages, and for the graphical interface I chose the Tkinter framework, which ships with Python and does not need to be installed separately. OpenCV works well for tracking an object. I use version 2.2, but the repository of the current Ubuntu release (10.10) only has version 2.1; the API changed slightly for the better between the two, and the program will not work with version 2.1. In principle, the whole program could have been built on OpenCV, leaving the graphical interface and image capture to it as well, but I wanted to keep it separate from the main part of the program so that the library could, if necessary, be replaced with something else or simply removed by turning tracking off.

I started reworking the old program, deleting everything unnecessary, and to my surprise only a few lines were left: the direct calculation of distance and speed. That makes sense, since the original program has no graphical interface, tracks the car with a different algorithm, and uses a megapixel network camera over RTSP instead of a webcam.

Obtaining images from a webcam is not so simple. Under Windows, the program connects to the camera via DirectX through the VideoCapture library, and everything is quite simple there. Under Linux, however, there are very few intelligible articles about using webcams from Python, and the examples that do exist usually turn out not to work because of yet another API change. In the past I used ffmpeg for this, with the program written in C, but ffmpeg is a bit like shooting sparrows with a cannon, and I did not want to burden the final program with extra dependencies. OpenCV, which also uses ffmpeg, would have been an option, but I chose to write my own video4linux2 API wrapper for Python.

I took the source code from the page of a faculty of science. From it I quickly removed everything I did not need, eventually leaving two edited files:

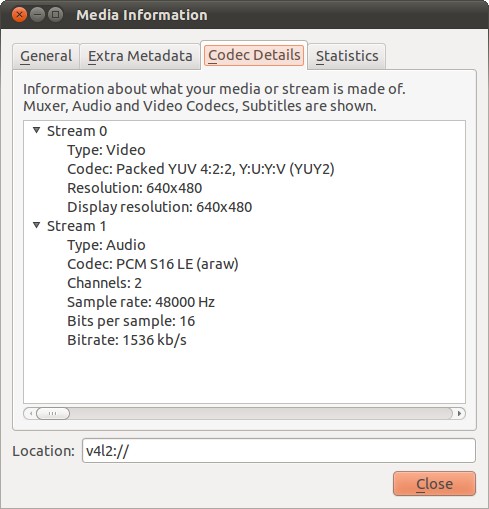

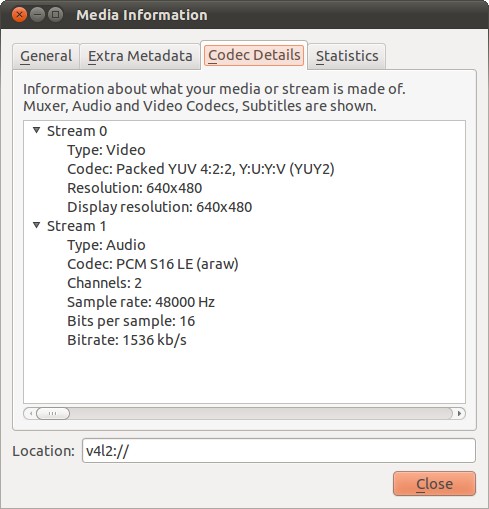

V4L2.cpp and V4L2.h. These are essentially the minimum API needed to connect to a webcam. While working on the Python wrapper, I found out that there are three ways to access video4linux2 devices: READ, MMAP and STREAM, but only the MMAP method works with my webcams. As it turned out, the other example programs that did not work for me used the READ method.

It is also assumed that the webcam delivers the image in YUYV format (YUV422), which differs from RGB in that it carries half as much color information. In YUYV two pixels are encoded in 4 bytes, whereas RGB needs 6, a saving of one and a half times. Y is the luminance component, and it is different for every pixel. U and V are the color-difference components that determine the pixel's color, and each pair of pixels shares the same U and V values. Written in this notation, the byte stream from the webcam looks like YUYV YUYV YUYV YUYV YUYV YUYV, which is 12 pixels. You can find out which format your webcam uses with the VLC player: open the capture device with it and then request the codec information, which should look as in the figure.
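To make the YUYV layout concrete, here is a small sketch that splits such a byte stream into per-pixel (Y, U, V) triples. It is an illustration only, not part of the program; the function name `unpack_yuyv` and the sample values are made up for the example.

```python
def unpack_yuyv(data):
    """Split a YUYV (YUV 4:2:2) byte string into per-pixel (Y, U, V) tuples."""
    raw = bytearray(data)  # yields integers on both Python 2 and Python 3
    if len(raw) % 4:
        raise ValueError("YUYV data length must be a multiple of 4 bytes")
    pixels = []
    for i in range(0, len(raw), 4):
        y0, u, y1, v = raw[i:i + 4]
        pixels.append((y0, u, v))  # first pixel of the pair
        pixels.append((y1, u, v))  # second pixel shares the same U and V
    return pixels


if __name__ == "__main__":
    # Two YUYV groups, i.e. four pixels in eight bytes
    sample = bytes(bytearray([16, 128, 235, 128, 81, 90, 145, 240]))
    for pixel in unpack_yuyv(sample):
        print(pixel)
    # Six groups (24 bytes) describe 12 pixels
    print(len(unpack_yuyv(bytes(bytearray(24)))))
```

Each group of four bytes carries two luminance values but only one U and one V, which is where the saving over RGB comes from.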

Here is the source code of the library for accessing the webcam:

main_v4l2.cpp:

    #include "V4L2.h"
    #include <cstdio>   // fprintf
    #include <cstdlib>  // malloc

    using namespace std;

    extern "C" {

    // Specify the video device here
    V4L2 v4l2("/dev/video0");

    // RGB output buffer, allocated in openDevice()
    unsigned char *rgbFrame;

    // Limit a value to the 0..255 range of an 8-bit channel
    float clamp(float num) {
        if (num < 0) num = 0;
        if (num > 255) num = 255;
        return num;
    }

    // Convert one pixel from the YUV to the RGB colorspace
    void yuv2rgb(unsigned char y, unsigned char u, unsigned char v,
                 unsigned char &r, unsigned char &g, unsigned char &b) {
        float C = y - 16;
        float D = u - 128;
        float E = v - 128;
        // Cast to unsigned char: values above 127 do not fit in a signed char
        r = (unsigned char)clamp(C + (1.402 * E));
        g = (unsigned char)clamp(C - (0.344136 * D + 0.714136 * E));
        b = (unsigned char)clamp(C + (1.772 * D));
    }

    // Grab one frame and convert it from YUYV to RGB
    unsigned char *getFrame() {
        unsigned char *frame = (unsigned char *)v4l2.getFrame();
        int i = 0, k = 0;
        unsigned char Y, U, V, R, G, B;
        // Every 4 input bytes (Y0 U Y1 V) describe two pixels
        for (i = 0; i < 640*480*2; i += 4) {
            Y = frame[i];
            U = frame[i+1];
            V = frame[i+3];
            yuv2rgb(Y, U, V, R, G, B);
            rgbFrame[k] = R; k++;
            rgbFrame[k] = G; k++;
            rgbFrame[k] = B; k++;
            Y = frame[i+2];
            yuv2rgb(Y, U, V, R, G, B);
            rgbFrame[k] = R; k++;
            rgbFrame[k] = G; k++;
            rgbFrame[k] = B; k++;
        }
        return rgbFrame;
    }

    void stopCapture() {
        v4l2.freeBuffers();
    }

    // Call this before using the device
    void openDevice() {
        // set format
        struct v4l2_format fmt;
        CLEAR(fmt);
        fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        // Adjust resolution
        fmt.fmt.pix.width = 640;
        fmt.fmt.pix.height = 480;
        fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;
        if (!v4l2.set(fmt)) {
            fprintf(stderr, "device does not support used settings.\n");
        }
        v4l2.initBuffers();
        v4l2.startCapture();
        rgbFrame = (unsigned char *)malloc(640*480*3);
    }

    }

The algorithm is straightforward: first we open the device whose name is specified at the top of the file ("/dev/video0"), and then on each getFrame request we read a frame from the webcam, convert it to RGB, and return a pointer to the frame to the caller. I also provide a Makefile for quickly compiling this library, in case you need it. And here is the Python wrapper for this library:

v4l2.py:

    from ctypes import *
    import Image
    import time

    lib = cdll.LoadLibrary("linux/libv4l2.so")

    class VideoDevice(object):
        def __init__(self):
            lib.openDevice()
            # getFrame returns a raw pointer to the RGB buffer
            lib.getFrame.restype = c_void_p

        def getImage(self):
            buf = lib.getFrame()
            # Map exactly 640*480*3 bytes at the returned address
            frame = (c_char * (640*480*3)).from_address(buf)
            img = Image.frombuffer('RGB',
                                   (640, 480),
                                   frame,
                                   'raw',
                                   'RGB',
                                   0,
                                   1)
            # Return the image together with its timestamp
            return img, time.time()

As you can see, there is absolutely nothing complicated here. The library is loaded with the ctypes module. Writing the wrapper caused no problems, except for the line:

    frame = (c_char * (640*480*3)).from_address(buf)

which took me a while to arrive at. The point is that if you read the data from getFrame() as c_char_p, ctypes interprets it as a null-terminated string, so reading stops as soon as a zero byte is encountered in the stream. This construct, by contrast, lets you state explicitly how many bytes to read. In our case it is always a fixed value: 640 * 480 * 3.

I will not list the source code for obtaining the image under Windows here; it is no more complicated and can be found in the archive, in the windows folder, under the name directx.py. Instead, I will show the source code of the object tracking class, which, as a reminder, is written with OpenCV. I took the lkdemo.py example supplied with OpenCV as a basis and again simplified it for our needs, converting it into a class along the way:

tracker.py:

    import cv

    class Tracker(object):
        "Simple object tracking class"

        def __init__(self):
            self.grey = None
            self.point = None
            self.WIN_SIZE = 10

        def target(self, x, y):
            "Tell which object to track"
            # It needs to be an array for the optical flow calculation
            self.point = [(x, y)]

        def takeImage(self, img):
            "Loads and processes next frame"
            # Convert it to IPL Image
            frame = cv.CreateImageHeader(img.size, 8, 3)
            cv.SetData(frame, img.tostring())

            if self.grey is None:
                # create the images we need
                self.grey = cv.CreateImage(cv.GetSize(frame), 8, 1)
                self.prev_grey = cv.CreateImage(cv.GetSize(frame), 8, 1)
                self.pyramid = cv.CreateImage(cv.GetSize(frame), 8, 1)
                self.prev_pyramid = cv.CreateImage(cv.GetSize(frame), 8, 1)

            cv.CvtColor(frame, self.grey, cv.CV_BGR2GRAY)

            if self.point:
                # calculate the optical flow
                new_point, status, something = cv.CalcOpticalFlowPyrLK(
                    self.prev_grey, self.grey, self.prev_pyramid, self.pyramid,
                    self.point,
                    (self.WIN_SIZE, self.WIN_SIZE), 3,
                    (cv.CV_TERMCRIT_ITER|cv.CV_TERMCRIT_EPS, 20, 0.03),
                    0)

                # If the point is still alive
                if status[0]:
                    self.point = new_point
                else:
                    self.point = None

            # swapping
            self.prev_grey, self.grey = self.grey, self.prev_grey
            self.prev_pyramid, self.pyramid = self.pyramid, self.prev_pyramid

First we have to tell it which point we want to follow; the target method does that. Then we feed it frame after frame through the takeImage method. It converts the frame into a format OpenCV understands, creates the images the algorithm needs, converts the frame from color to grayscale, and then passes all of this to the CalcOpticalFlowPyrLK function, which computes the optical flow using the pyramidal Lucas-Kanade method. The function returns the new coordinates of the point we are following. If the point is lost, status[0] will be zero. Optical flow can be computed for more than one point: run lkdemo.py with a webcam and see how well it copes with many points.

A word about converting an image from the Python Imaging Library to the OpenCV format. OpenCV uses a different order of color components for color images, BGR, so a complete conversion would require adding the line cv.CvtColor(frame, frame, cv.CV_BGR2RGB). However, most tracking algorithms do not care at all whether the color components are swapped, and our example works only with grayscale images anyway, so this line can be left out.

I also do not list the source code of the class that actually calculates the distance, since it contains only the simplest mathematics. It is in the file distance_measure.py. All that remains is to show the source code of the main script, which builds the graphical interface and loads all the other modules.

main.py:

    from distance_measure import Calculator
    from webcam import WebCam
    from tracker import Tracker
    from Tkinter import *
    import ImageTk as PILImageTk
    import time

    class GUIFramework(Frame):
        "This is the GUI"

        def __init__(self, master=None):
            Frame.__init__(self, master)
            self.grid(padx=10, pady=10)

            self.distanceLabel = Label(self, text='Distance =')
            self.distanceLabel.grid(row=0, column=0)

            self.speedLabel = Label(self, text='Speed =')
            self.speedLabel.grid(row=0, column=1)

            self.imageLabel = None
            self.cameraImage = None

            self.webcam = WebCam()

            # M = 500, L = 0.5, W = 640
            self.dist_calculator = Calculator(500, 0.5, 640, 1)
            self.tracker = Tracker()

            self.after(100, self.drawImage)

        def updateMeasure(self, x):
            (distance, speed) = self.dist_calculator.calculate(x, time.time())
            self.distanceLabel.config(text='Distance = ' + str(distance))
            # If you want to get km/h instead of m/s just multiply
            # the m/s value by 3.6
            #speed *= 3.6
            self.speedLabel.config(text='Speed = ' + str(speed) + ' m/s')

        def imgClicked(self, event):
            """
            On left mouse button click calculate distance and
            tell tracker which object to track
            """
            self.updateMeasure(event.x)
            self.tracker.target(event.x, event.y)

        def drawImage(self):
            "Load and display the image"
            img, timestamp = self.webcam.getImage()

            # Pass image to tracker
            self.tracker.takeImage(img)

            if self.tracker.point:
                pt = self.tracker.point[0]
                self.updateMeasure(pt[0])
                # Draw rectangle around tracked point
                img.paste((128, 255, 128), (int(pt[0])-2, int(pt[1])-2, int(pt[0])+2, int(pt[1])+2))

            self.cameraImage = PILImageTk.PhotoImage(img)

            if not self.imageLabel:
                self.imageLabel = Label(self, image=self.cameraImage)
                # Left mouse button click selects the object to track
                self.imageLabel.bind("<Button-1>", self.imgClicked)
                self.imageLabel.grid(row=1, column=0, columnspan=2)
            else:
                self.imageLabel.config(image=self.cameraImage)

            # 30 FPS refresh rate
            self.after(1000/30, self.drawImage)

    if __name__ == '__main__':
        guiFrame = GUIFramework()
        guiFrame.mainloop()
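The main script relies on a Calculator class from distance_measure.py whose calculate(x, timestamp) call returns a (distance, speed) pair. That file is not listed in the article, so the following is only a guess at what such a class might do: a minimal sketch assuming speed is the change in distance between two consecutive samples divided by the elapsed time. The class name `SpeedEstimator` is hypothetical, and the fourth constructor argument seen in main.py is omitted because its meaning is not explained.

```python
class SpeedEstimator(object):
    """Hypothetical helper: pixel coordinate + timestamp -> (distance, speed)."""

    def __init__(self, middle_px, ruler_m, ruler_px):
        self.ruler_m = float(ruler_m)    # L, meters
        self.ruler_px = float(ruler_px)  # W, pixels
        # K = (W - M) / M, the camera tilt coefficient
        self.k = (ruler_px - middle_px) / float(middle_px)
        self.prev = None  # (distance, timestamp) of the previous sample

    def distance(self, x):
        if x <= 0:
            return 0.0  # the formula tends to 0 as x approaches 0
        return self.ruler_m * self.k / (self.ruler_px / float(x) - 1 + self.k)

    def calculate(self, x, timestamp):
        """Return (distance in m, speed in m/s); speed is 0 for the first sample."""
        dist = self.distance(x)
        speed = 0.0
        if self.prev is not None:
            prev_dist, prev_time = self.prev
            dt = timestamp - prev_time
            if dt > 0:
                speed = (dist - prev_dist) / dt
        self.prev = (dist, timestamp)
        return dist, speed


if __name__ == "__main__":
    est = SpeedEstimator(middle_px=320, ruler_m=10.0, ruler_px=640)
    print(est.calculate(320, 0.0))  # first sample: no speed yet
    print(est.calculate(384, 2.0))  # object moved along the ruler in 2 s
```

With frames arriving about 30 times per second, a two-sample difference like this is noisy; averaging over several samples would be a natural refinement, though nothing in the article says whether the original does so.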

As I said above, I chose the Tkinter library for the graphical interface. I have also worked with other toolkits, such as GTK, Qt and, of course, wxPython, but they have to be installed separately, whereas Tkinter works out of the box and is very easy to use. You cannot build a complex interface with it, of course, but its capabilities are more than enough for this task. In the class initializer I create a grid for placing the other widgets: two text labels and one image. With Tkinter I did not even have to create separate threads for fetching images from the webcam, because there is the after method, which runs a given function after a specified interval. The text and image of a Label are updated with config. Very simple! The mouse click event is routed to the imgClicked method with bind.

The image and its timestamp are fetched by self.webcam.getImage. The webcam module merely loads the appropriate webcam module for whichever operating system the program is running on.

Once again, here is the link to the archive with the program: distance-measure.
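The platform-dependent loading done by the webcam module can be pictured roughly as follows. This is a sketch under assumptions, not the module from the archive: the function name is invented, and the dotted module names are guesses based on the linux and windows folders mentioned in the article.

```python
import sys


def backend_module_name(platform=None):
    """Pick the capture backend for the given sys.platform value."""
    platform = sys.platform if platform is None else platform
    if platform.startswith("linux"):
        return "linux.v4l2"       # assumed location of the V4L2 wrapper
    if platform.startswith("win"):
        return "windows.directx"  # assumed location of the DirectX wrapper
    raise RuntimeError("no webcam backend for platform %r" % platform)


if __name__ == "__main__":
    print(backend_module_name("linux2"))
    print(backend_module_name("win32"))
```

The chosen name could then be passed to an import mechanism such as importlib.import_module, so that the rest of the program only ever sees one WebCam class.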

Required packages for Ubuntu: python, python-imaging, python-imaging-tk, OpenCV version 2.2, and build-essential to compile the V4L2 wrapper.

The program is started with:

    python main.py

To start tracking an object, click on it.

That's all.