FlashCache: how to use Flash in a storage system, but NOT as an SSD?

Using Flash memory in modern storage systems has become almost routine, and the SSD (Solid-State Disk) is now common practice in enterprise storage systems and servers. For many people, Flash and SSD have become almost synonymous. However, NetApp would not be NetApp if it had not found its own, better way of using Flash.

How can Flash be used for storage, but NOT as an SSD?

I'll start, perhaps, with the definition of what an SSD is.

An SSD, or Solid-State Disk (a disk with no moving mechanical parts), is a way of presenting a region of non-volatile Flash memory to the OS as a virtual, emulated hard disk.

The most traditional kind of non-volatile storage for operating systems and applications is the hard disk drive, HDD. Every OS knows how to work with hard drives. So, to simplify things and shorten the path to the customer, it was natural not to have every OS support its own architecture for Flash, but to present Flash memory as something simple and familiar to the drivers of any OS, a pseudo "hard disk", and then work with it as a hard disk.

The advantages of this approach are simple. You can use the existing drivers of any OS, without building Flash-specific support into each of them.

The downside: emulation is still emulation. A number of Flash's characteristics are hard to exploit, or even to take into account, when you work with it as a "disk". One example is how complicated it is to overwrite a single byte of data in Flash.

NetApp has chosen its own path for Flash. It uses Flash memory not as an emulated "disk" (storage), but directly as memory.

It's no secret that a significant part of the data stored on disks is rarely accessed. Such data is known as "cold data", and by some estimates it can make up as much as 80% of the total stored volume. That is, roughly 80% of stored application data is accessed relatively rarely. This means that roughly 80% of the money we spent on still very expensive SSDs was wasted and gave us no actual return.

The cold data sitting on an SSD could just as well sit on cheap SATA, since it is lying there untouched anyway. The fact that it occupies space on super-expensive SSDs does not make the system as a whole any faster, because the advantage of an SSD shows only at the moment data is accessed, and data can simply "lie" on any disk. Meanwhile we lose that space for the active data that would give a performance boost if it were placed on the SSD. Cold data is the classic dog in the manger: it makes no use of the SSD's capabilities itself, and keeps them from others who need them.

So what is the problem? Just identify the "cold" data, separate it out, and keep it on separate, cheaper storage.

The trouble is that different data falls into that 80% at different moments. The accounting entries for the last quarter will sit untouched and "cold" in the database throughout the next quarter, but when the annual report is being prepared they become very "hot" again.

It would be very good if such movement between "storage tiers" happened automatically. But such a system would greatly complicate the whole storage architecture. The storage system would have not only to receive and deliver data as quickly as possible, but also to assess the activity of each fragment, move it somehow, and remember where the fragment lives today so that it can correctly "reroute" the client's request.

That is a lot of difficulty, and all because we chose the wrong tool. I have used this funny analogy before: it is long, hard work to drive bolts into nuts with a hammer. You can take an ever heavier hammer to the task, or you can simply swap the hammer for a wrench and use the bolts as they were intended. :)

Using Flash not as storage, but as memory, NetApp very elegantly solved the cold data problem.

All of you, I am sure, know how the RAM cache works in modern storage systems.

A cache is an intermediate store for working data, held in fast RAM.

When a client requests a block of data from the storage system, the block is read from disk and, in the process, lands in the cache.

If this block is accessed again later, it is returned to the client not from slow disks but from the fast cache. If the block is not accessed for a long time, it is automatically evicted from the cache, and its place is taken by a more popular block.

Thus, we see that the data in the cache is always, "by definition", 100% hot data!
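The read-cache behaviour described above can be sketched in a few lines. This is only a minimal illustration, assuming a least-recently-used (LRU) eviction policy; the `ReadCache` class, the `read_from_disk` callable and the tiny capacity are all hypothetical and say nothing about how a real controller decides what to evict.

```python
from collections import OrderedDict


class ReadCache:
    """Tiny LRU read cache in front of a slow block store (illustrative only)."""

    def __init__(self, capacity, read_from_disk):
        self.capacity = capacity              # how many blocks fit in the cache
        self.read_from_disk = read_from_disk  # hypothetical callable: block_id -> data
        self.blocks = OrderedDict()           # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.blocks:
            # Hit: serve from the cache and mark the block as recently used.
            self.blocks.move_to_end(block_id)
            self.hits += 1
            return self.blocks[block_id]
        # Miss: go to the slow disks, then remember the block.
        self.misses += 1
        data = self.read_from_disk(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            # Evict the block that has gone unused the longest.
            self.blocks.popitem(last=False)
        return data


disk = {n: f"block-{n}" for n in range(10)}
cache = ReadCache(capacity=2, read_from_disk=disk.__getitem__)
for block_id in [1, 2, 1, 3, 2]:
    cache.read(block_id)
print(cache.hits, cache.misses, list(cache.blocks))
```

Whatever is left in `cache.blocks` at any moment is, by construction, the most recently requested data, which is the sense in which a cache holds only hot data.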

Now let's imagine that we use Flash not as a disk but as memory, as a kind of "second-level cache", an additional space. A storage system usually has relatively slow disk space and a much faster RAM cache. Alas, we cannot grow the RAM cache indefinitely; if nothing else, it is very expensive. However, if we have Flash memory that is fairly capacious, though not as fast as DDR DRAM, we can add it as a "second-level cache" behind the existing RAM cache.

Now the caching mechanism works as follows. Data lands in the RAM cache (typically 1 to 96GB, depending on the type and power of the controller) and stays there until it is displaced by more relevant data. When blocks are pushed out of the RAM cache they are not discarded but moved to the Flash cache (typically 256GB to 4TB, depending on the controller's power and type and the number of modules it can take). The cached blocks stay there until they, in turn, go stale and are replaced by more current data. Only then does a read go to the disks. But while the data is in FlashCache, reading it is about 10 times faster than reading from disk!

* Please note that the pictures are taken from the FlashCache manual, where it is called PAM, Performance Acceleration Module. This is its "old", "internal" name; that is, "PAM" is not a misprint of "RAM".
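The two-level scheme described above (RAM cache first, Flash cache behind it, disks last) can be sketched like this. It is a simplified model under my own assumptions: both levels use LRU eviction, and a block found in Flash is promoted back into RAM. The `TwoLevelCache` name, the block counts and the promotion rule are hypothetical and not taken from NetApp documentation.

```python
from collections import OrderedDict


class TwoLevelCache:
    """RAM cache backed by a larger Flash cache (illustrative model only)."""

    def __init__(self, ram_blocks, flash_blocks, read_from_disk):
        self.ram = OrderedDict()
        self.flash = OrderedDict()
        self.ram_blocks = ram_blocks
        self.flash_blocks = flash_blocks
        self.read_from_disk = read_from_disk  # hypothetical callable: block_id -> data
        self.served_from = {"ram": 0, "flash": 0, "disk": 0}

    def _put_in_ram(self, block_id, data):
        self.ram[block_id] = data
        self.ram.move_to_end(block_id)
        if len(self.ram) > self.ram_blocks:
            # A block squeezed out of RAM is not dropped: it moves to Flash.
            victim, victim_data = self.ram.popitem(last=False)
            self.flash[victim] = victim_data
            self.flash.move_to_end(victim)
            if len(self.flash) > self.flash_blocks:
                # Only when Flash is full does the stalest block leave the cache.
                self.flash.popitem(last=False)

    def read(self, block_id):
        if block_id in self.ram:
            self.ram.move_to_end(block_id)
            self.served_from["ram"] += 1
            return self.ram[block_id]
        if block_id in self.flash:
            # Assumption: a Flash hit promotes the block back into RAM.
            data = self.flash.pop(block_id)
            self.served_from["flash"] += 1
        else:
            data = self.read_from_disk(block_id)
            self.served_from["disk"] += 1
        self._put_in_ram(block_id, data)
        return data


disk = {n: f"block-{n}" for n in range(10)}
cache = TwoLevelCache(ram_blocks=2, flash_blocks=3, read_from_disk=disk.__getitem__)
for block_id in [1, 2, 3, 1, 4, 2]:
    cache.read(block_id)
print(cache.served_from)
```

In this toy run two of the six reads are served from Flash instead of going to disk, although the RAM level holds only two blocks.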

What are the results of using FlashCache? How well does the claimed effectiveness hold up in practice?

To compare performance, NetApp takes part in a testing program based on the industry-standard and widely accepted SPECsfs benchmark. It is a test of "network file systems", that is, of NAS over the NFS and CIFS protocols, and its results are easy to extrapolate to other workloads.

The midrange FAS3140 model with 224 FC disks was taken as the reference system (that is 16 shelves of 14 disks each, a full 48U rack of FC disks!).

It is no secret that, to reach the required I/O performance, a system very often has to be configured with a large number of disks (the usual term is "disk spindles"). And very often it is performance in IOPS, not storage capacity, that dictates how many disks the system uses. The many disks in such a system are a necessity for delivering fast I/O.
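A back-of-the-envelope sketch of why IOPS, rather than capacity, often sets the disk count. All the numbers here are hypothetical round figures chosen for illustration, not measurements from the systems discussed in this article.

```python
import math


def disks_needed(required_iops, required_tb, iops_per_disk, tb_per_disk):
    """Return (disks for IOPS, disks for capacity, disks actually required)."""
    for_iops = math.ceil(required_iops / iops_per_disk)
    for_capacity = math.ceil(required_tb / tb_per_disk)
    # The system must satisfy both constraints, so the larger count wins.
    return for_iops, for_capacity, max(for_iops, for_capacity)


# Hypothetical workload: 30,000 IOPS over 20 TB, on disks that each
# deliver about 200 IOPS and hold 0.5 TB.
for_iops, for_capacity, total = disks_needed(
    required_iops=30_000, required_tb=20, iops_per_disk=200, tb_per_disk=0.5
)
print(for_iops, for_capacity, total)  # prints: 150 40 150
```

With these assumed figures, capacity alone would need 40 disks, but the IOPS target forces 150, so most of the spindles are there purely for speed.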

You can see that this system scored 55476 on SPECsfs2008 CIFS, in benchmark "parrots" (arbitrary units) of I/O performance.

Now let's take the same system, this time with a FlashCache board inside but with only 56 of the same FC drives (just 4 disk shelves, a quarter of the disk "spindles" that usually determine IOPS performance).

We see that a system that costs 54% less (counting drives, shelves, and FlashCache together) reaches the same performance in "parrots" with significantly lower latency and, thanks to the smaller number of disks, lower power consumption and less rack space.

But that is not all. The third system has 96 SATA disks in place of the FC disks, a disk type usually known for low IOPS performance, and again FlashCache.

We see that even with relatively "slow" 1TB SATA disks, the system shows the same performance figures, while providing 50% more usable capacity than the FC system without FlashCache and consuming 66% less power, at roughly the same latency.

The practical result is so powerful and convincing that NetApp sold its customers a petabyte of Flash in the form of FlashCache within just six months of announcing the product, and as far as I know sales continue to grow (NetApp even claims that it is now the second largest seller of Flash memory in the world, after Apple with its iPod / iPad / iPhone).

But FlashCache would not work so efficiently if it were not (again!) for the capabilities of the WAFL structure underneath it. Remember that when I described WAFL, and later one or another feature of NetApp storage systems, I said several times that WAFL is the very foundation of everything NetApp can do.

What made the difference in this case?

Those who have looked seriously and deeply at using SSDs in production applications already know one extremely unpleasant feature of Flash as a technology: very low random write performance.

When SSD performance comes up, manufacturers almost always show impressive random read results, and almost always sidestep the question of random write performance with small blocks, even though in a typical workload such writes account for about 30% of all operations.

These are the IOPS figures for FusionIO, a popular high-performance enterprise-class SSD.

And this is the IOPS performance table for the popular Intel X25M, "hidden" in the "for partners" documentation. Note the highlighted and underlined figures.

Such "surprises" are found in almost any SSD, from any manufacturer.

The reason is the awkward procedure for writing to Flash cells, which are organized in blocks. To (re)write even a small piece of data, you have to erase and rewrite the entire Flash erase block (the "page") that contains it. This operation is slow in itself, even before you take into account the limited number of such erase cycles.
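To make the cost of that erase-and-rewrite cycle concrete, here is a deliberately naive model. It assumes a 128 KiB erase block and a 4 KiB update, both picked for illustration; real SSD controllers use a translation layer that remaps writes to avoid exactly this worst case, so treat it as a sketch of the underlying constraint, not of any actual drive.

```python
ERASE_BLOCK_SIZE = 128 * 1024  # assumed erase-block size, for illustration only


def rewrite_in_place(erase_block: bytearray, offset: int, new_data: bytes) -> int:
    """Update part of an erase block the naive way; return bytes physically written."""
    saved = bytes(erase_block)                  # 1. read the whole block out
    for i in range(len(erase_block)):           # 2. erase: every byte goes to 0xFF
        erase_block[i] = 0xFF
    updated = bytearray(saved)
    updated[offset:offset + len(new_data)] = new_data  # 3. patch the copy
    erase_block[:] = updated                    # 4. program the whole block back
    return len(erase_block)


block = bytearray(ERASE_BLOCK_SIZE)
payload = b"x" * 4096
written = rewrite_in_place(block, offset=4096, new_data=payload)
amplification = written / len(payload)
print(written, amplification)  # prints: 131072 32.0
```

Under these assumptions a 4 KiB logical write turns into 128 KiB of physical writes plus an erase, which is why small random writes hurt so much more than random reads.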

How does WAFL help here?

The point is that NetApp systems use WAFL, which is "optimized for writing". In more detail: as I have already said, writes are laid down in long sequential "stripes", and because this scheme makes it possible to write data straight to the disks at the highest speed those disks can sustain, instead of holding it in the cache, the cache in NetApp systems is practically not used for writes. Accordingly, FlashCache is not used for writes either, which greatly simplifies the algorithmic part and removes the problem of Flash's low write performance. Flash is not used for writes at all here: writes go directly to the disks through the RAM buffer, and there is simply no need to keep them in the cache. In other words, we use Flash only in its most efficient mode, random reads (occasionally filling it with new data).

Thus it is easy to see that by using Flash "as memory", in the form of a cache, we automatically keep only active, hot data in it, the data for which faster access brings a real benefit, and we avoid the problems of Flash's low write performance and finite write endurance.

Yes, FlashCache is expensive too. But the money spent on it is guaranteed to be put to work, all of it, not just 20%. And that is exactly what NetApp calls "storage efficiency".
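The idea that client writes bypass the Flash cache entirely can be illustrated with a generic "write-around" cache. This is my own simplified model: the `WriteAroundStore` class is hypothetical, and dropping the stale cached copy on overwrite is an assumption made to keep the toy consistent, not a description of WAFL internals.

```python
class WriteAroundStore:
    """Writes go straight to disk; Flash is touched only to serve reads."""

    def __init__(self):
        self.disk = {}
        self.flash_cache = {}
        self.flash_writes = 0  # how many times Flash had to be filled

    def write(self, block_id, data):
        self.disk[block_id] = data
        # Assumption: a stale cached copy is simply dropped, never rewritten.
        self.flash_cache.pop(block_id, None)

    def read(self, block_id):
        if block_id not in self.flash_cache:
            # Miss: fill the cache once from disk.
            self.flash_cache[block_id] = self.disk[block_id]
            self.flash_writes += 1
        return self.flash_cache[block_id]


store = WriteAroundStore()
for n in range(1000):
    store.write(n % 10, f"version-{n}")   # 1000 client writes, none touch Flash
for _ in range(500):
    store.read(3)                          # 500 reads of one hot block
print(store.flash_writes, store.read(3))  # prints: 1 version-993
```

In this toy run a thousand client writes cost Flash nothing, and five hundred reads cost it a single fill, which is the read-mostly pattern that suits Flash best.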

You can read more about FlashCache in Russian in the technical library of Netwell, which publishes Russian translations of the official NetApp technical manuals.

The idea of using Flash memory in a more efficient and "direct" way than simply emulating "hard drives" on it is, in my opinion, spreading more and more widely in the industry. Among those who have taken up this direction are Adaptec, with its MaxIQ; CacheZilla in ZFS; and Microsoft Research, which published an interesting scientific paper, "Speeding Up Cloud/Server Applications Using Flash Memory" (a brief overview in the form of a presentation).

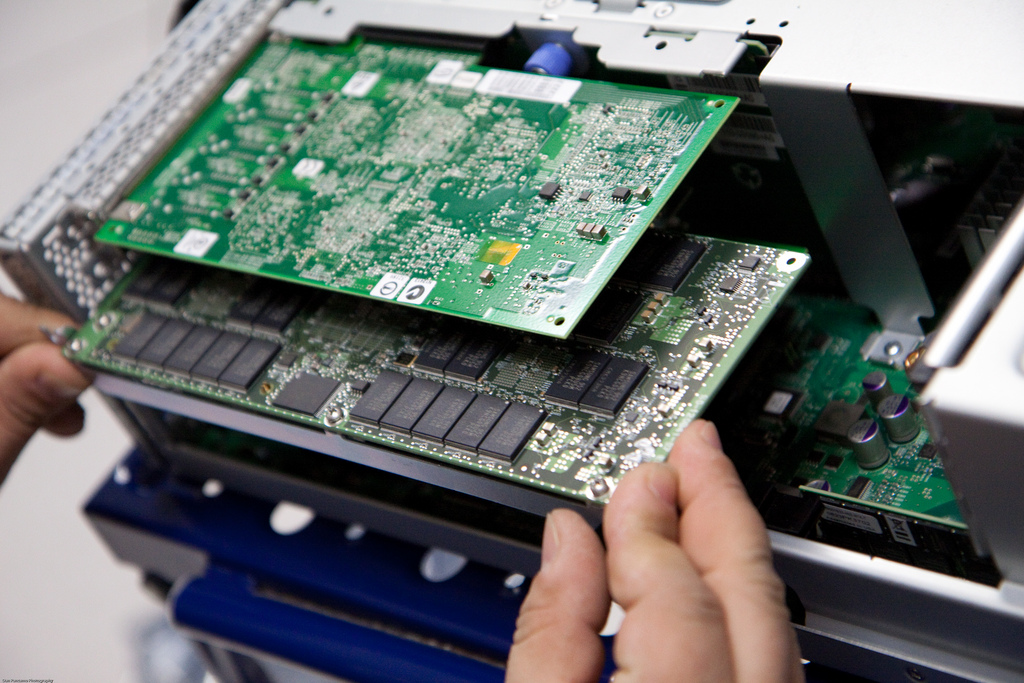

The photo at the beginning of the article shows FlashCache being installed (for a while it was called PAM-II, Performance Acceleration Module, Generation 2; there was also a PAM-I, based on DRAM and used to cache NAS metadata).