Canadian quadcopter obeys its operator’s grimaces

Examples of faces used to control the multicopter during the experiments. The top row shows neutral faces, to which the machine does not respond. The bottom row shows different trigger grimaces, which correspond to the Start command. The two right-hand columns are photos taken remotely from the multicopter. The face recognition program copes with low image quality and recognizes faces from up to several meters away.

A UAV is usually controlled with either a dedicated device or a special app on a smartphone or tablet. But in the future, more convenient interfaces may be needed for human interaction with robots. Students from the autonomous systems laboratory of Simon Fraser University’s School of Computer Science have developed an experimental program for controlling a multicopter with facial expressions. In principle, there is nothing particularly difficult about such a program, but the idea is interesting.

In theory, such control can be more intuitive than a joystick or buttons on a tablet. Even now, in some situations, controlling with the face is easier. For example, in an experiment in which the UAV is launched along a parabolic trajectory (like throwing a grenade), it is really convenient to aim the machine by tilting your head.

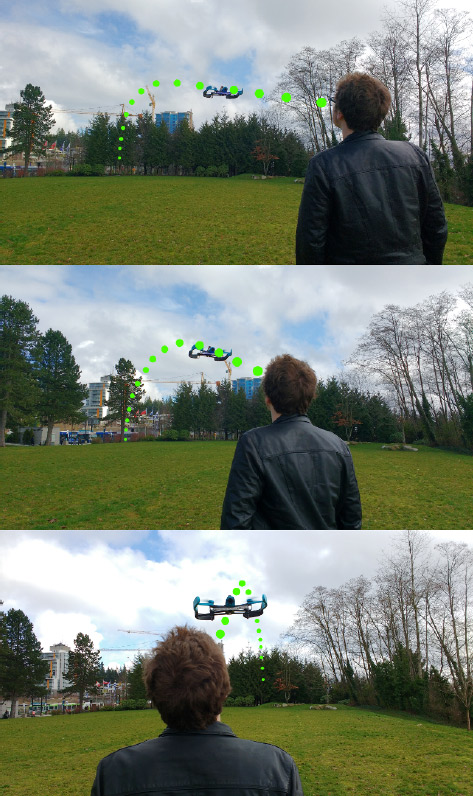

Parabolic trajectories of a UAV are calculated depending on the direction of the operator’s face.

Launching a drone with a particular facial expression is also easier than getting out a controller or opening an app on a tablet. Imagine: you just knit your brows menacingly, puff out your cheeks, or make some other face, and the device immediately takes off and performs the actions you programmed in advance (“throwing grenades” or circling a target).

In addition to simpler control, there is also no need to spend money on the controller, as is the case with some multicopters. Everybody has a face.

Two stages of training. Left: learning the neutral facial expression. Right: the multicopter memorizes the trigger grimace.

The facial expressions used for control can be arbitrary. In a preliminary stage, the face recognition program is trained: it needs to see your neutral face and the grimace for the trigger command. This is done as follows. You raise the drone to eye level, hold it horizontally, and give your face a neutral expression. You keep this expression until the robot is completely satisfied. The procedure usually takes less than a minute. Then the drone is rotated 90 degrees, and you make a trigger grimace that is very different from your neutral expression.
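The two-stage training described above can be illustrated with a very small classifier. This is only a sketch under stated assumptions: the article does not say which features or which learning method the students used, so the nearest-centroid rule, the function names, and the two made-up features (say, brow height and mouth openness) are all hypothetical.

```python
import math

def centroid(samples):
    """Mean of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]

def train(neutral_samples, trigger_samples):
    """Store one average feature vector per expression (hypothetical method)."""
    return {
        "neutral": centroid(neutral_samples),
        "trigger": centroid(trigger_samples),
    }

def classify(model, features):
    """Return the label whose stored centroid is closest to the new sample."""
    return min(model, key=lambda label: math.dist(model[label], features))

# Made-up 2-D features recorded during the two training stages.
model = train(
    neutral_samples=[[0.50, 0.10], [0.52, 0.12], [0.48, 0.09]],
    trigger_samples=[[0.20, 0.60], [0.22, 0.65], [0.18, 0.58]],
)

print(classify(model, [0.49, 0.11]))  # neutral
print(classify(model, [0.21, 0.62]))  # trigger
```

The sketch also shows why the trigger grimace should differ strongly from the neutral face: the farther apart the two stored averages are, the less likely a noisy frame is to be assigned to the wrong expression.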

Then comes the “aiming” stage. After take-off, the multicopter continuously tracks the operator’s face with its video camera. Even if the operator tries to escape, the robot follows him and keeps watching his facial expression. At this stage, the operator sets up the action that the multicopter will perform on the trigger command. Three types of action are currently implemented:

- Beam. Straight-line movement along a given azimuth at a given height. The distance is determined by the size of the user's face during the aiming phase. This is where the bowstring analogy works: the further the string is drawn (the farther away the face), the greater the energy of the “shot”.

- Sling. Movement along a ballistic trajectory. This can be useful if the operator wants to send the multicopter out of the line of sight, over an obstacle, for example to take photographs (see the video above). The developers accurately “threw” the robot 45 m, but they say that nothing prevents throwing it hundreds of meters. And if the UAV can find a target face after the peak of the ballistic trajectory, then two operators could “throw” the robot to each other over a distance of more than a kilometer.

- Boomerang. Movement around a circle of a given radius, returning to the starting point. Here the trajectory parameters are set during the manual aiming stage, depending on the multicopter's angle of rotation.
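The geometry behind the three actions can be sketched in a few lines. This is an idealized illustration, not the students' implementation: the pinhole-camera distance estimate, the drag-free parabola, and every constant below (`focal_px`, `face_width_m`, `gain`) are assumptions introduced here for the example.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def operator_distance(face_width_px, focal_px=600.0, face_width_m=0.15):
    """Pinhole-camera estimate: a smaller face in the image means a more distant operator.
    focal_px and face_width_m are assumed values, not taken from the article."""
    return focal_px * face_width_m / face_width_px

def beam_target(face_width_px, azimuth_rad, gain=5.0):
    """Beam: straight-line target at constant height.
    The range grows with operator distance, like drawing a bowstring further back."""
    r = gain * operator_distance(face_width_px)
    return (r * math.cos(azimuth_rad), r * math.sin(azimuth_rad))

def sling_range(speed, pitch_rad):
    """Sling: horizontal range of an ideal parabola that starts and ends at the same height."""
    return speed ** 2 * math.sin(2 * pitch_rad) / G

def boomerang_waypoints(radius, n=8):
    """Boomerang: n + 1 waypoints on a circle that starts and ends at the origin."""
    return [
        (radius * math.sin(2 * math.pi * k / n),
         radius * (1 - math.cos(2 * math.pi * k / n)))
        for k in range(n + 1)
    ]

print(beam_target(face_width_px=90, azimuth_rad=0.0))
print(round(sling_range(speed=21.0, pitch_rad=math.pi / 4), 1))
print(boomerang_waypoints(radius=3.0, n=4))
```

Under these assumptions, a face 90 pixels wide corresponds to an operator about 1 m away, and a virtual launch speed of 21 m/s at 45 degrees gives a range of roughly 45 m. The real drone flies under power and with air resistance, so these numbers only illustrate how aiming parameters could map to a trajectory.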

The students used a Parrot Bebop quadcopter slightly modified with a strip of LEDs for visual feedback.

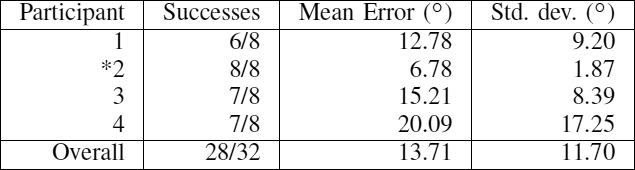

Results of the “Beam” experiment. The user marked with an asterisk is an expert (a developer of the system).

In the experiments, the system performed surprisingly well. Participants had to send the drone through a hoop 0.8 m in diameter from a distance of 8 m, and in most cases they succeeded.
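To get a feel for how precise the aiming had to be, the hoop dimensions can be turned into an angular tolerance. This is a back-of-the-envelope sketch: it treats the drone as a point and ignores its width, drift, and wind, and the function name is made up for the example.

```python
import math

def aiming_tolerance_deg(hoop_diameter_m, distance_m):
    """Largest heading error (in degrees) that still passes through the hoop,
    treating the drone as a point flying in a straight line."""
    return math.degrees(math.atan((hoop_diameter_m / 2) / distance_m))

print(f"{aiming_tolerance_deg(0.8, 8.0):.2f} degrees")  # 2.86 degrees
```

So even in this generous idealization, the operator has to aim to within about 3 degrees on either side of the hoop's center.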

In the future, the authors of the project say, people should “interact with robots and AI applications as naturally as they do now with other people and trained animals — as described in science fiction.”

They note that making faces at robots is fun, and that this could be used in entertainment applications. People would then get used to the idea that robots are a lot of fun.