Machine hearing: the SoundNet neural network learns to recognize objects by sound

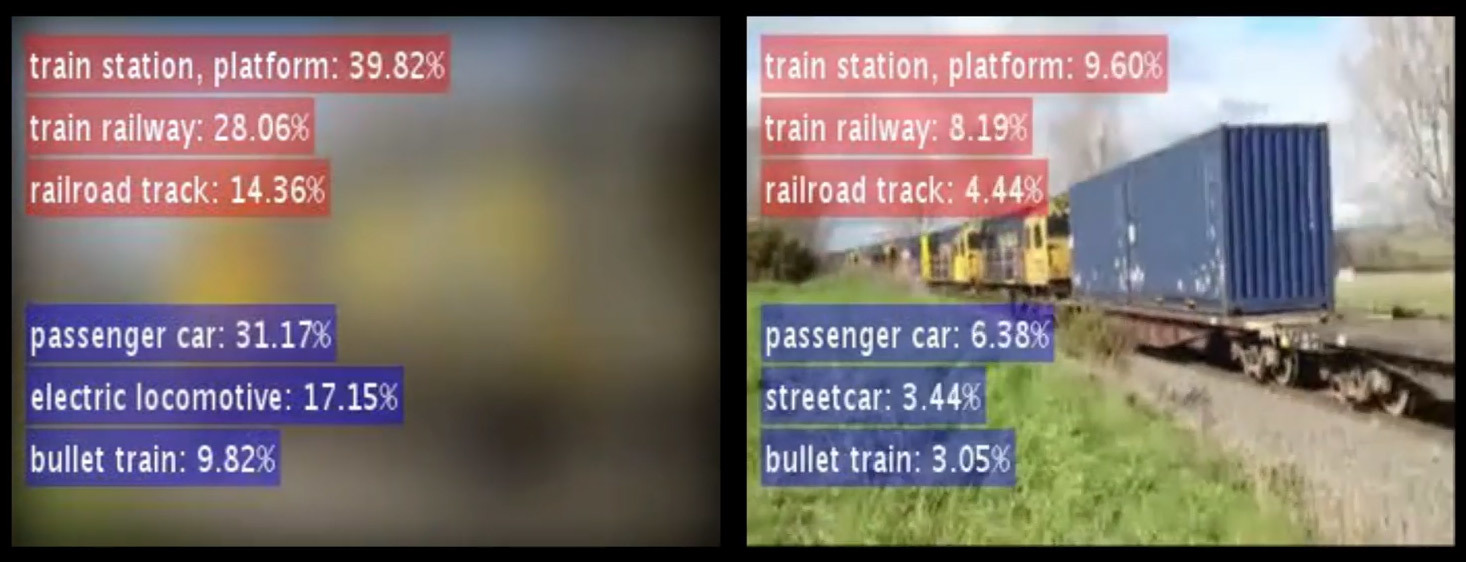

Left: an attempt to recognize the scene and objects by sound alone. Right: the actual source of the sound.

Recently, neural networks have made considerable progress in recognizing objects and scenes in video. These achievements were made possible by training on massive labeled datasets (see, for example, the paper "Learning Deep Features for Scene Recognition using Places Database", NIPS 2014). Looking at photographs or video clips, a computer can determine the scene quite accurately by choosing the most suitable description out of 401 scene categories, such as "cluttered kitchen", "stylish kitchen", or "teen bedroom". In understanding sound, however, neural networks have not yet shown comparable progress. Researchers at the Computer Science and Artificial Intelligence Laboratory (CSAIL) of the Massachusetts Institute of Technology set out to close this gap by developing the SoundNet machine learning system.

In fact, the ability to identify a scene from its sounds is just as important as identifying a location from video. After all, a camera image may be blurred or may not carry enough information. But as long as the microphone works, a robot can still work out where it is.

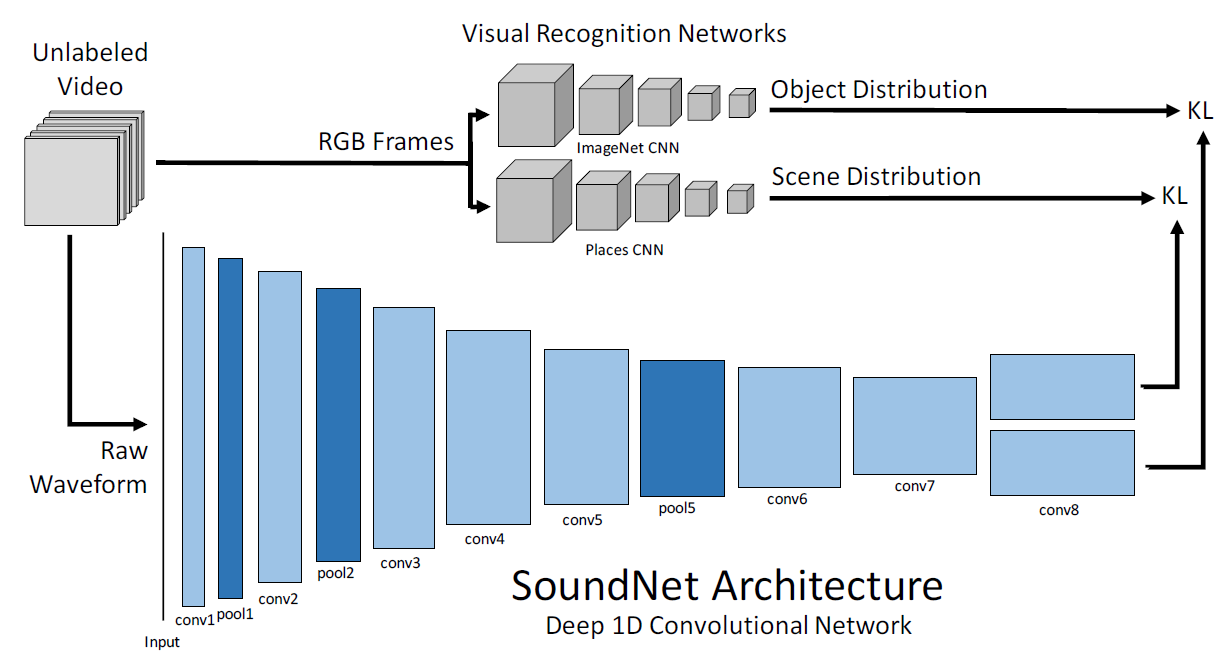

From a scientific point of view, training the SoundNet neural network is a fairly routine task. The CSAIL researchers exploited the natural synchronization between vision and sound in video, teaching the network to automatically extract sound representations of objects from unlabeled video. About 2 million Flickr videos (26 TB of data) were used for training, along with an annotated sound database of 50 categories and approximately 2,000 samples.
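The idea of learning from "natural synchronization" can be shown with a toy sketch. It assumes, as a simplification of the approach described in the SoundNet paper, that a pretrained vision network (the "teacher") looks at a clip's frames and outputs a probability distribution over categories, and that the sound network (the "student") is trained so that its prediction from the clip's audio matches that distribution, measured by KL divergence. No human labels are involved: the video itself pairs the picture with the sound. Here the student is reduced to a vector of logits updated by plain gradient descent; the function names, categories, and numbers are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q): how far the student's distribution q is from the teacher's p."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def transfer_loss(teacher_probs, student_logits):
    """Hypothetical per-clip loss: the sound model should reproduce what the
    vision model saw in the same clip's frames."""
    return kl_divergence(teacher_probs, softmax(student_logits))

def train_step(teacher_probs, student_logits, lr=0.5):
    """One gradient step. The gradient of KL(p || softmax(z)) w.r.t. z is softmax(z) - p."""
    q = softmax(student_logits)
    return [z - lr * (qi - pi) for z, qi, pi in zip(student_logits, q, teacher_probs)]

# Hypothetical clip: the vision teacher is fairly sure the frames show a stadium.
# Illustrative categories: ["stadium", "park", "restaurant"]
teacher = [0.8, 0.15, 0.05]
student = [0.0, 0.0, 0.0]  # untrained student: uniform guess from the audio

before = transfer_loss(teacher, student)
for _ in range(200):
    student = train_step(teacher, student)
after = transfer_loss(teacher, student)

print(f"loss before: {before:.3f}, after: {after:.5f}")
```

In a real system the student would be a deep network that reads the audio waveform, and the gradient would flow into its weights rather than directly into the logits; the sketch keeps only the teacher-student objective.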

SoundNet Neural Network Architecture

Although the network was trained with visual supervision, it performs very well on sound alone, classifying sounds on the three standard acoustic scene classification benchmarks the developers tested it on. Moreover, testing showed that the network had independently learned to recognize sounds characteristic of certain scenes, even though the developers never gave it labeled samples of those objects. From unlabeled video alone, the network learned which scene corresponds to the sound of a cheering crowd (a stadium) and to birdsong (a lawn or a park). Along with the scene, the network also recognizes the specific object that produces the sound.
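Recognizing a scene and an object at the same time can be pictured as reading two separate output distributions for the same clip, one over object categories and one over scene categories. The sketch below assumes the network has already produced both distributions; the helper names, labels, and probabilities are hypothetical and simply echo the birdsong example above.

```python
def top_k(probs, labels, k=1):
    """Return the k most probable (label, probability) pairs, best first."""
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

def describe_clip(object_probs, object_labels, scene_probs, scene_labels):
    """Read the object output and the scene output independently for one clip."""
    obj, obj_p = top_k(object_probs, object_labels)[0]
    scene, scene_p = top_k(scene_probs, scene_labels)[0]
    return {"object": obj, "object_prob": obj_p, "scene": scene, "scene_prob": scene_p}

# Hypothetical outputs for a clip of birds chirping
object_labels = ["bird", "crowd", "candle"]
scene_labels = ["park", "stadium", "restaurant"]
result = describe_clip([0.7, 0.1, 0.2], object_labels, [0.85, 0.1, 0.05], scene_labels)
print(result["object"], result["scene"])  # bird park
```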

The video shows some examples of object recognition by sound. First the sound plays and the recognition result is shown while the picture itself is blurred, so you can test yourself. Can you identify the location and the objects present by sound alone as accurately as the neural network does? For example, what does the sound of a few people singing "Happy Birthday to You!" in chorus most likely indicate? The correct answer: the object is burning candles, and the scene is a restaurant, cafe, or bar.

“Computer vision has become so good that we can transfer this technology to other domains,” said Carl Vondrick, a graduate student in electrical engineering and computer science at the Massachusetts Institute of Technology and one of the paper's authors. “We used the natural relationship between vision and sound. Thanks to the huge amount of unlabeled video, we were able to work at a large scale, so that the neural network learned to understand sound.”

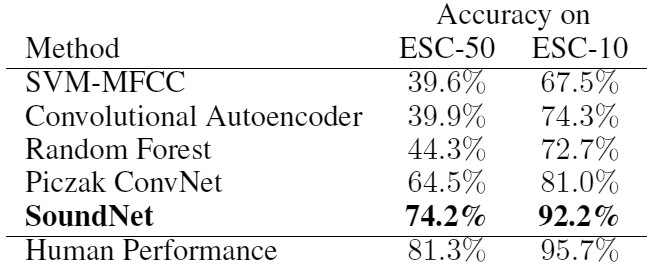

SoundNet was tested on two standard databases of annotated sound recordings, where its recognition accuracy was 13–15% higher than that of the best existing methods. On a dataset with 10 sound categories, SoundNet classifies sounds with 92% accuracy, and on a dataset with 50 categories, with 74% accuracy. For comparison, humans achieve average accuracies of 96% and 81% on the same datasets.
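To put these figures side by side, here is a quick calculation using only the rounded accuracies quoted above: how many percentage points SoundNet trails human listeners, and what fraction of human accuracy it reaches. The helper name `compare` is hypothetical.

```python
def compare(machine_acc, human_acc):
    """Return (gap in percentage points, machine accuracy as a fraction of human accuracy)."""
    return human_acc - machine_acc, machine_acc / human_acc

# Accuracy in percent, as quoted in the text: (SoundNet, human average)
results = {
    "10 categories": (92, 96),
    "50 categories": (74, 81),
}

for name, (machine, human) in results.items():
    gap, ratio = compare(machine, human)
    print(f"{name}: {gap} points behind humans, {ratio:.1%} of human accuracy")
```

On these numbers the gap is 4 points on the 10-category set and 7 points on the 50-category set, so the harder task with more categories is also where humans keep a larger lead.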

Even people cannot always tell exactly what they are hearing. Try this experiment yourself: ask a colleague to play a random YouTube video and, without looking at the monitor, try to say what is happening, where the sounds come from, and what is shown on the screen. You will not always guess right. So the task is genuinely hard for artificial intelligence, yet SoundNet coped with it quite well.

In the future, such programs may find practical use. For example, your mobile phone could automatically recognize that you have entered a public place such as a cinema or theater and lower the ringer volume. Once the film has started and the audience has quieted down, the phone could turn the sound off entirely and switch to vibrate.
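The phone scenario comes down to a small decision rule layered on top of a sound-based scene classifier. A minimal sketch follows; the scene labels, the `audience_quiet` flag, and the ringer modes are all hypothetical, and nothing like this rule is part of SoundNet itself.

```python
from enum import Enum

class RingerMode(Enum):
    NORMAL = "normal"
    QUIET = "quiet"        # reduced call volume
    VIBRATE = "vibrate"    # sound off, vibration on

# Hypothetical scene labels in which the phone should be discreet
PUBLIC_VENUES = {"cinema", "theater"}

def choose_ringer_mode(scene, audience_quiet):
    """Pick a ringer mode from the recognized scene.

    scene: top scene label from a sound classifier (hypothetical labels)
    audience_quiet: True once the surrounding chatter has died down,
        e.g. after the film has started
    """
    if scene not in PUBLIC_VENUES:
        return RingerMode.NORMAL
    if audience_quiet:
        return RingerMode.VIBRATE
    return RingerMode.QUIET

print(choose_ringer_mode("park", audience_quiet=False))    # RingerMode.NORMAL
print(choose_ringer_mode("cinema", audience_quiet=False))  # RingerMode.QUIET
print(choose_ringer_mode("cinema", audience_quiet=True))   # RingerMode.VIBRATE
```

Keeping the policy separate from the classifier means the rule can be tuned (for example, adding "concert hall" to the venue set) without retraining any model.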

Navigating by sound will also help the control software of autonomous robots and other machines.

In security systems and smart homes, the system could respond in a specific way to specific sounds, such as breaking glass. In the smart cities of the future, recognizing street noise will help identify its causes and combat noise pollution.

The paper was published in open access on arXiv.org on October 27, 2016 (arXiv:1610.09001).