Ultramodern OpenGL. Part 2

Good mood and cooler weather outside to everyone. As promised, here is the continuation of the article on super-duper modern OpenGL. If you have not read the first part, see Ultramodern OpenGL. Part 1.

With luck, I will manage to fit all the remaining material into this article, but that is not certain...

Array texture

Texture arrays were added back in OpenGL 3.0, but for some reason few people write about them (the information is reliably hidden by the Masons). You are all programmers and know what an array is, but I'd better approach it from the other side.

To reduce the number of texture switches, and with them the number of state changes, people use texture atlases (one texture that stores data for several objects). But the smart guys at Khronos have developed an alternative for us: the array texture. We can now store textures as layers of such an array, so it is an alternative to atlases. The OpenGL Wiki describes it a little differently, in terms of mipmaps and so on, but that seems too complicated to me (link).

The advantage of this approach over atlases is that each layer is treated as a separate texture in terms of wrapping and mipmapping.

But back to the point... An array texture has three target types:

- GL_TEXTURE_1D_ARRAY

- GL_TEXTURE_2D_ARRAY

- GL_TEXTURE_CUBE_MAP_ARRAY

Code for creating a texture array:

GLsizei width = 512;
GLsizei height = 512;
GLsizei layers = 3;
GLuint texture_array = 0;
glCreateTextures(GL_TEXTURE_2D_ARRAY, 1, &texture_array);
// the second argument is the number of mip levels and must be at least 1
glTextureStorage3D(texture_array, 1, GL_RGBA8, width, height, layers);

The most attentive readers will have noticed that we are creating storage for 2D textures, yet for some reason we use a 3D call. There is no mistake or typo here. We store 2D textures, but since they are arranged in "layers" we get a 3D array (strictly speaking, it stores pixel data, not textures: the 3D array consists of 2D layers of pixel data).

This is easy to understand with 1D textures as an example: each row of a 2D pixel array is a separate 1D layer. Mipmaps for the texture can also be generated automatically.
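For mipmaps to be generated, the storage has to be allocated with enough levels, since glTextureStorage3D takes the level count as its second argument. Below is a minimal sketch of how that count could be computed for a full mip chain; `mip_level_count` is a hypothetical helper name, not an OpenGL function, and it assumes the usual rule of halving the larger side until it reaches 1 (the layer count of an array texture does not shrink between levels).

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper: number of levels in a full mip chain for a
// width x height image, i.e. floor(log2(max(width, height))) + 1.
constexpr std::uint32_t mip_level_count(std::uint32_t width, std::uint32_t height) {
    std::uint32_t size = std::max(width, height);
    std::uint32_t levels = 1;          // level 0 always exists
    while (size > 1) {
        size /= 2;                     // each level halves the larger side
        ++levels;
    }
    return levels;
}

// A 512x512 layer has levels 512, 256, ..., 2, 1.
static_assert(mip_level_count(512, 512) == 10, "full chain for 512x512");
```

The result would be passed as the levels argument of glTextureStorage3D, and glGenerateTextureMipmap(texture_array) could then fill the lower levels after level 0 has been uploaded.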

That is where the difficulties end; adding an image to a specific layer is quite simple:

glTextureSubImage3D(texture_array, mipmap_level, offset.x, offset.y, layer, width, height, 1, GL_RGBA, GL_UNSIGNED_BYTE, pixels);

When using arrays, we need to change the shader a little:

#version 450 core

layout (location = 0) out vec4 color;
layout (location = 0) in vec2 texture_0;

uniform sampler2DArray texture_array;
uniform uint diffuse_layer;

// clamp the layer index to the range [0, capacity - 1]
float getCoord(uint capacity, uint layer)
{
    return max(0.0, min(float(capacity - 1u), floor(float(layer) + 0.5)));
}

void main()
{
    color = texture(texture_array, vec3(texture_0, getCoord(3u, diffuse_layer)));
}

The best option would be to calculate the desired layer outside the shader; for this we can use a UBO or SSBO (they are also used to pass matrices and plenty of other data, but more on that some other time). If you can't wait, you can read link 1 and link 2.
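As a rough illustration of moving that calculation out of the shader, here is a minimal CPU-side sketch of the same clamp. `layer_coord` is a hypothetical helper name that mirrors getCoord from the shader above; how the result reaches the shader (plain uniform, UBO or SSBO) is left open.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical CPU-side mirror of the shader's getCoord():
// rounds the layer index and clamps it to [0, capacity - 1].
float layer_coord(std::uint32_t capacity, std::uint32_t layer) {
    if (capacity == 0) {
        return 0.0f;                   // empty array: nothing sensible to select
    }
    const float last_layer = static_cast<float>(capacity - 1);
    const float rounded = std::floor(static_cast<float>(layer) + 0.5f);
    return std::max(0.0f, std::min(last_layer, rounded));
}
```

With three layers, an out-of-range index such as 7 ends up on the last layer (2), which is the same behavior as the shader version.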

As for size limits, GL_MAX_ARRAY_TEXTURE_LAYERS is at least 256 in OpenGL 3.3 and at least 2048 in OpenGL 4.5.

It is also worth mentioning the sampler object (not related to array textures, but a useful thing): it is an object that sets the sampling state of a texture unit regardless of which texture is currently bound to that unit. It helps separate sampler state from a particular texture object, which improves abstraction.

GLuint sampler_state = 0;
glGenSamplers(1, &sampler_state);
glSamplerParameteri(sampler_state, GL_TEXTURE_WRAP_S, GL_REPEAT);
glSamplerParameteri(sampler_state, GL_TEXTURE_WRAP_T, GL_REPEAT);
glSamplerParameteri(sampler_state, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
glSamplerParameteri(sampler_state, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
glSamplerParameterf(sampler_state, GL_TEXTURE_MAX_ANISOTROPY_EXT, 16.0f);

Here I created a sampler object and set repeat wrapping, linear filtering and 16x anisotropic filtering, which will apply to whatever texture unit the sampler is bound to.

GLuint texture_unit = 0;
glBindSampler(texture_unit, sampler_state);

Here we simply bind the sampler to the desired texture unit, and when it is no longer needed we bind 0 to that unit.

glBindSampler(texture_unit, 0);

While a sampler is bound, its settings take precedence over the sampler state stored in the texture object. The result: there is no need to modify the existing code base to add sampler objects. You can leave texture creation as it is (with its own sampler state) and just add code to control and use the sampler objects.

When it is time to delete the object, we simply call this function:

glDeleteSamplers(1, &sampler_state);

Texture view

I will refer to this as a "texture pointer" (perhaps "reference" would be more correct, I don't know), because I do not know a better translation.

What are pointers from OpenGL's point of view?

It is very simple: it is a pointer to the data of an immutable (that is, not mutable) texture, as we see in the picture below.

In fact, it is an object that shares the texel data of some texture object; as an analogy, think of std::shared_ptr from C++. As long as at least one texture pointer exists, the driver will not free the original texture's data.
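To make the std::shared_ptr analogy concrete, here is a small sketch in plain C++. It is only a model of the ownership idea, not how a driver is implemented; `TexelStorage`, `Texture`, `make_texture` and `make_view` are hypothetical names invented for this illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical model: the texel data lives in a shared block.
struct TexelStorage {
    std::vector<std::uint8_t> texels;
};

// Both the "original texture" and a "view" are just handles to that block.
struct Texture {
    std::shared_ptr<TexelStorage> storage;
};

// Allocates storage once, like immutable texture storage.
Texture make_texture(std::size_t byte_count) {
    auto storage = std::make_shared<TexelStorage>();
    storage->texels.resize(byte_count);
    return Texture{storage};
}

// A view copies no texels; it only shares ownership of the storage.
Texture make_view(const Texture& source) {
    return Texture{source.storage};
}
```

If the original handle is released while a view still exists, the storage stays alive because the view still holds a reference; the data is only freed once the last handle is gone.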

The wiki describes this in more detail; it is also worth reading there about texture types and targets (they do not have to match).

To create a pointer, we get a texture name by calling glGenTextures (no initialization is needed) and then call glTextureView.

glGenTextures(1, &texture_view);
// view one layer (starting at layer 5) of the source texture as a 2D texture
glTextureView(texture_view, GL_TEXTURE_2D, source_name, internal_format, min_level, level_count, 5, 1);

Texture pointers can point to the Nth mipmap level, which is quite useful and convenient. A pointer can refer to a whole texture array, a part of an array, or a specific layer of an array. Note that the target compatibility rules pair GL_TEXTURE_3D only with itself, so a slice of a 3D texture cannot be viewed as a 2D texture.

Single buffer for index and vertex

This one will be quick and easy. Previously, the OpenGL specification for Vertex Buffer Objects recommended that developers keep vertex and index data in separate buffers, but now this is not necessary (it is a long story why).

All we need to do is store the indices in front of the vertices and say where the vertices start (more precisely, their offset); the glVertexArrayVertexBuffer command does this.
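The listing in this section calls an `align` helper that the article does not define. A minimal sketch of what such a helper might look like is given here under the name `align_up` (a hypothetical stand-in); it assumes the intent is to round a byte length up to the next multiple of the alignment.

```cpp
#include <cstdint>

// Hypothetical stand-in for the article's undefined align() helper:
// rounds value up to the nearest multiple of alignment.
// An alignment of 0 is treated as "no alignment required".
constexpr std::uint32_t align_up(std::uint32_t value, std::uint32_t alignment) {
    return alignment == 0
        ? value
        : ((value + alignment - 1) / alignment) * alignment;
}

// 10 bytes of indices with a 256-byte alignment occupy a 256-byte slot,
// so the vertex data would start at offset 256.
static_assert(align_up(10, 256) == 256, "rounds up to the next multiple");
static_assert(align_up(256, 256) == 256, "already aligned values are unchanged");
```

Using integer division rather than bit masking keeps the sketch valid for alignments that are not powers of two.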

Here's how we would do it:

// alignment used to pad each region of the shared buffer
GLint alignment = GL_NONE;
glGetIntegerv(GL_UNIFORM_BUFFER_OFFSET_ALIGNMENT, &alignment);

// sizes in bytes; align() rounds a size up to a multiple of alignment (helper not shown in the article)
const GLsizei ind_len = GLsizei(ind_buffer.size() * sizeof(element_t));
const GLsizei vrt_len = GLsizei(vrt_buffer.size() * sizeof(vertex_t));
const GLuint ind_len_aligned = align(ind_len, alignment);
const GLuint vrt_len_aligned = align(vrt_len, alignment);

// one buffer: indices first, vertices after them
GLuint buffer = GL_NONE;
glCreateBuffers(1, &buffer);
glNamedBufferStorage(buffer, ind_len_aligned + vrt_len_aligned, nullptr, GL_DYNAMIC_STORAGE_BIT);
glNamedBufferSubData(buffer, 0, ind_len, ind_buffer.data());
glNamedBufferSubData(buffer, ind_len_aligned, vrt_len, vrt_buffer.data());

// the vertex binding starts at the aligned offset; the same buffer serves as the element buffer
GLuint vao = GL_NONE;
glCreateVertexArrays(1, &vao);
glVertexArrayVertexBuffer(vao, 0, buffer, ind_len_aligned, sizeof(vertex_t));
glVertexArrayElementBuffer(vao, buffer);

Tessellation and compute shading

I will not cover the tessellation shader, since there is plenty of material about it on Google (in Russian); here are a couple of lessons: 1, 2, 3. Let's move on to the compute shader (daaamn, there is a lot of material on that too, so I will be brief).

The strength of video cards is their very large number of cores: they are designed for a huge number of small tasks that can run in parallel. The compute shader, as the name implies, makes it possible to solve problems that are not necessarily related to graphics.

A picture, I don't know what to call it (something like how threads are grouped).

What can we use it for?

- Image processing

- Bloom

- Tile-based algorithms (deferred shading)

- Simulations

- Particles

- Water

I see no reason to write more, since there is also plenty of information on Google; here is a simple usage example:

// attach the compute shader program to the pipeline
glUseProgramStages(pipeline, GL_COMPUTE_SHADER_BIT, cs);
// bind the texture as an image (write-only here)
glBindImageTexture(0, tex, 0, GL_FALSE, 0, GL_WRITE_ONLY, GL_RGBA8);
// launch 80x45 work groups (enough for 1280x720 with 16x16 invocations per group)
glDispatchCompute(80, 45, 1);

Here is an example of an empty compute shader:

#version 430

layout(local_size_x = 1, local_size_y = 1) in;
layout(rgba32f, binding = 0) uniform image2D img_output;

void main() {
    // base pixel color for image
    vec4 pixel = vec4(0.0, 0.0, 0.0, 1.0);
    // get index in global work group i.e x,y position
    ivec2 pixel_coords = ivec2(gl_GlobalInvocationID.xy);

    //
    // interesting stuff happens here later
    //

    // output to a specific pixel in the image
    imageStore(img_output, pixel_coords, pixel);
}

Here are a few links for a deeper look: 1, 2, 3, 4.
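The group counts passed to glDispatchCompute follow from the image size and the local work-group size declared in the shader. Here is a minimal sketch of that arithmetic; `group_count` is a hypothetical helper name, and the 16x16 local size is an assumption inferred from the 80x45 dispatch for 1280x720 in the usage example (the empty shader above declares 1x1, which would need 1280x720 groups instead).

```cpp
#include <cstdint>

// Hypothetical helper: number of work groups needed to cover `pixels`
// elements along one axis when each group handles `local_size` of them.
// Rounds up so that a partially filled last group is still dispatched.
constexpr std::uint32_t group_count(std::uint32_t pixels, std::uint32_t local_size) {
    return (pixels + local_size - 1) / local_size;
}

// 1280x720 with an assumed 16x16 local size gives the 80x45 dispatch.
static_assert(group_count(1280, 16) == 80, "groups along x");
static_assert(group_count(720, 16) == 45, "groups along y");
```

When the image size is not a multiple of the local size, the rounded-up dispatch launches a few invocations outside the image, so the shader should compare gl_GlobalInvocationID against the image size before writing.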

Path rendering

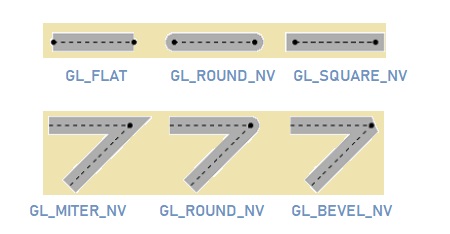

This is a new (well, not so new) extension from NVIDIA whose main purpose is 2D vector rendering. We can use it for text or UI, and since the graphics are vector-based, they do not depend on resolution, which is undoubtedly a big plus: our UI will look great.

The basic concept is stencil, then cover. First we stencil the path, then we cover it, that is, render the pixels.

Paths are managed through ordinary GLuint names, and the create and delete functions follow the standard naming convention.

glGenPathsNV // create
glDeletePathsNV // delete

Here is a little about how we can obtain a path:

- SVG or PostScript in a string: glPathStringNV

- an array of commands with corresponding coordinates: glPathCommandsNV, and for updating the data glPathSubCommandsNV, glPathCoordsNV, glPathSubCoordsNV

- fonts: glPathGlyphsNV, glPathGlyphRangeNV

- linear combinations of existing paths (interpolation of one, two or more paths): glCopyPathNV, glInterpolatePathsNV, glCombinePathsNV

- linear transformation of an existing path: glTransformPathNV

List of standard commands:

- move-to (x, y)

- close-path

- line-to (x, y)

- quadratic-curve (x1, y1, x2, y2)

- cubic-curve (x1, y1, x2, y2, x3, y3)

- smooth-quadratic-curve (x, y)

- smooth-cubic-curve (x1, y1, x2, y2)

- elliptical-arc (rx, ry, x-axis-rotation, large-arc-flag, sweep-flag, x, y)
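As a rough illustration of how these commands map onto a path string, here is a sketch that builds SVG path text from a list of points using only move-to (M), line-to (L) and close-path (z). `Point` and `closed_polyline_svg` are hypothetical names for this example and are not part of the extension; the resulting string is the kind of data that would be handed to glPathStringNV.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical 2D point with integer coordinates.
struct Point {
    int x;
    int y;
};

// Builds an SVG path string for a closed polyline:
// move-to the first point, line-to every following point, then close-path.
std::string closed_polyline_svg(const std::vector<Point>& points) {
    std::ostringstream out;
    for (std::size_t i = 0; i < points.size(); ++i) {
        out << (i == 0 ? "M" : " L") << points[i].x << ',' << points[i].y;
    }
    if (!points.empty()) {
        out << " z";                   // close-path
    }
    return out.str();
}
```

Called with the five points (100,180), (40,10), (190,120), (10,120), (160,10), it yields "M100,180 L40,10 L190,120 L10,120 L160,10 z", the star path used as the SVG example in this section.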

Here's what the path string looks like in PostScript:

"100 180 moveto 40 10 lineto 190 120 lineto 10 120 lineto 160 10 lineto closepath" // star
"300 300 moveto 100 400 100 200 300 100 curveto 500 200 500 400 300 300 curveto closepath" // heart

And here it is in SVG:

"M100,180 L40,10 L190,120 L10,120 L160,10 z" // star
"M300 300 C 100 400,100 200,300 100,500 200,500 400,300 300Z" // heart

There are also all sorts of goodies with fill types, edges and bends:

I will not describe everything here, since there is a lot of material and it would take a whole article (if there is interest, I will write it sometime).

Here is a list of rendering primitives:

- Cubic curves

- Quadratic curves

- Lines

- Font glyphs

- Arcs

- Dash & Endcap Style

Here is some code, since there has been a lot of text already:

// compile the SVG path
glPathStringNV(pathObj, GL_PATH_FORMAT_SVG_NV, (GLsizei)strlen(svgPathString), svgPathString);
// fill the stencil
glStencilFillPathNV(pathObj, GL_COUNT_UP_NV, 0x1F);
// configuration
// cover the stencil (render the pixels)
glCoverFillPathNV(pathObj, GL_BOUNDING_BOX_NV);

That's all.

It seems to me that this article came out less interesting and informative; it was hard to pick out the essentials from the material. If anyone wants to learn more, I can share some NVIDIA materials and links to the specifications (if I remember where I saved them). I would also be glad of any help in editing the article.

As promised, the next article will be about optimization and reducing draw calls. Please write in the comments what else you would like to read about and what interests you:

- Writing a game on cocos2d-x (practice only, no filler)

- Translation of a series of articles on Vulkan

- Some topics on OpenGL (quaternions, new functionality)

- Computer graphics algorithms (lighting, screen-space ambient occlusion, screen-space reflections)

- Your options

Thank you all for your attention.