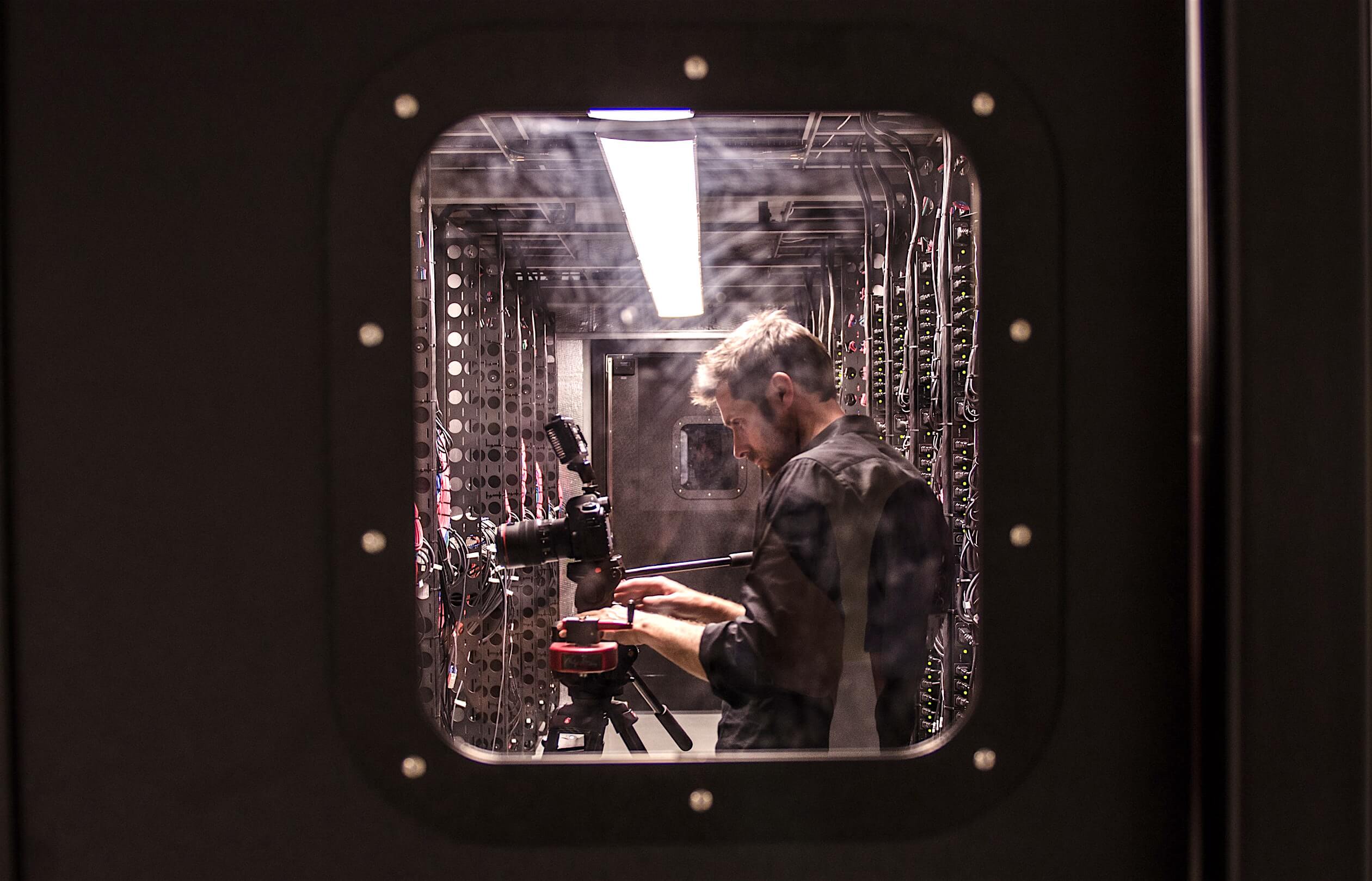

What the computing systems of the future might look like

What new technologies may appear in data centers, and beyond.

Photo: Jesse Orrico / Unsplash

Silicon transistors are believed to be approaching their technological limit. Last time, we talked about materials that could replace silicon and discussed alternative approaches to transistor design. Today we look at concepts that could change the very principles on which traditional computing systems operate: quantum machines, neuromorphic chips, and DNA-based computers.

DNA computers

A DNA computer uses DNA molecules to perform calculations. DNA strands are made up of four nitrogenous bases: cytosine, adenine, guanine, and thymine. By linking them in a specific sequence, you can encode information. To modify the data, special enzymes are used: through chemical reactions, they extend DNA strands and also cut and shorten them. These reactions can take place in different parts of a molecule at the same time, which allows calculations to run in parallel.
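To make "encoding information in a sequence of bases" concrete, here is a minimal sketch that writes binary data as a DNA string, assuming a hypothetical mapping of two bits per nucleotide (A = 00, C = 01, G = 10, T = 11). Practical DNA storage and computing schemes use more elaborate codes (for example, to avoid long runs of the same base), so this only illustrates the idea.

```python
# Hypothetical 2-bit-per-base mapping, chosen for illustration only
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Encode bytes as a DNA strand, two bits per nucleotide."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Recover the original bytes from a strand produced by encode()."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)          # CAGACGGC
    print(decode(strand))  # b'Hi'
```

Each byte becomes four bases, so a strand grows by four nucleotides for every byte stored.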

The first DNA-based computer was introduced in 1994. Leonard Adleman, a professor of molecular biology and computer science, used several test tubes containing billions of DNA molecules to solve a variant of the traveling salesman problem (finding a Hamiltonian path) for a graph with seven vertices. Adleman encoded the vertices and edges of the graph as DNA fragments twenty nucleotides long and then applied the polymerase chain reaction (PCR).
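Adleman's approach boils down to "generate a huge pool of random candidate paths, then filter out the wrong ones". The sketch below is a simplified in-silico analogue of that idea, not a model of the chemistry: the seven-vertex graph is hypothetical (not the one from the experiment), random walks stand in for the random joining of DNA fragments, and list filters stand in for the laboratory separation steps. In a test tube all candidates are processed in parallel; here they are processed one by one.

```python
import random

# Hypothetical directed graph with seven vertices (0..6); not Adleman's actual graph
EDGES = {0: [1, 3], 1: [2, 3], 2: [3, 4], 3: [2, 4], 4: [1, 5], 5: [2, 6], 6: []}
N, START, END = 7, 0, 6


def random_path(rng: random.Random, max_len: int = 2 * N) -> list[int]:
    """Stand-in for random joining of fragments: a random walk that stops at a dead end or max_len."""
    path = [rng.randrange(N)]
    while len(path) < max_len and EDGES[path[-1]]:
        path.append(rng.choice(EDGES[path[-1]]))
    return path


def adleman_filter(pool: list[list[int]]) -> set[tuple[int, ...]]:
    """Filter candidates; the survivors are Hamiltonian paths from START to END."""
    # Keep only paths that begin at START and end at END
    pool = [p for p in pool if p[0] == START and p[-1] == END]
    # Keep only paths that contain exactly N vertices
    pool = [p for p in pool if len(p) == N]
    # Keep only paths that pass through every vertex
    pool = [p for p in pool if set(p) == set(range(N))]
    return {tuple(p) for p in pool}


if __name__ == "__main__":
    rng = random.Random(1994)
    pool = [random_path(rng) for _ in range(20_000)]  # the "test tube" of candidates
    print(adleman_filter(pool))
```

If the filtered set is empty, the conclusion (as in the experiment) is that no such path was found; a larger pool makes a false "no" less likely.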

The drawback of Adleman's computer was its narrow focus: it was built to solve one problem and could not perform others. The situation has since changed. At the end of March, scientists from Maynooth University and the California Institute of Technology presented a computer that receives its input as DNA sequences and can be reprogrammed.

This system could open the way to a new type of computing, but the problem of slow data input and output remains to be solved (sequencing is expensive and takes a long time).

Despite these difficulties, experts say that DNA computers the size of today's desktop PCs will eventually outperform supercomputers. They could be used in data centers that process large data sets.

Neuromorphic processors

The term "neuromorphic" means that the chip's architecture is modeled on the way the human brain works. Such processors emulate the work of millions of neurons with their processes, axons and dendrites. Axons transmit information, while dendrites receive it. Neurons are connected by synapses: special contacts through which electrical signals (nerve impulses) are transmitted.
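To make the neuron and synapse vocabulary concrete, here is a leaky integrate-and-fire (LIF) neuron, a simplified spiking model widely used in neuromorphic research. It is an illustrative sketch, not the neuron circuit of any particular chip: the class name, weights, threshold, and leak factor are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (illustrative parameters)."""
    weights: list[float]      # synaptic weights, one per incoming (dendritic) connection
    threshold: float = 1.0    # membrane potential at which the neuron fires
    leak: float = 0.9         # fraction of the potential kept from one time step to the next
    potential: float = 0.0

    def step(self, input_spikes: list[int]) -> int:
        # Dendrites: sum the spikes arriving through synapses, scaled by their weights
        current = sum(w * s for w, s in zip(self.weights, input_spikes))
        # The potential decays (leaks), then integrates the new input
        self.potential = self.potential * self.leak + current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return 1              # a spike is sent along the axon
        return 0


if __name__ == "__main__":
    neuron = LIFNeuron(weights=[0.4, 0.3])
    inputs = [[1, 0], [1, 1], [0, 0], [1, 1]]
    print([neuron.step(spikes) for spikes in inputs])  # [0, 1, 0, 0]
```

The neuron fires only when enough input arrives within a short window; isolated inputs leak away. That event-driven behavior is what spiking hardware exploits.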

The idea of neuromorphic systems was discussed as far back as the 1990s, but serious work in this area began only after 2000. IBM Research took part in DARPA's SyNAPSE project, whose goal was to develop a computer with an architecture different from the von Neumann architecture. As part of this project, the company designed the TrueNorth chip, which emulates a million neurons and 256 million synapses.

IBM is not the only company working on neuromorphic processors. Intel has been developing its Loihi chip since 2017. It contains 130 thousand artificial neurons and 130 million synapses. A year ago, the company completed a prototype built on a 14 nm process.

Neuromorphic devices can speed up the training of neural networks. Unlike classical processors, such chips do not need to constantly fetch data from registers or memory: all information is stored in the artificial neurons themselves. This makes it possible to train neural networks locally, without connecting to a remote repository of training data.
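One way to picture "local" training is a learning rule that updates each synaptic weight using only information available at that synapse, namely the timing of the spikes on either side of it. The sketch below uses a simplified pair-based spike-timing-dependent plasticity (STDP) rule, a rule family often discussed for neuromorphic hardware. The constants are hypothetical, and this is not presented as the rule implemented by TrueNorth or Loihi.

```python
import math

# Hypothetical learning-rate and time-constant values (time in milliseconds)
A_PLUS, A_MINUS, TAU = 0.05, 0.055, 20.0


def stdp_update(weight: float, t_pre: float, t_post: float,
                w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Pair-based STDP: the update uses only the two spike times seen at this synapse."""
    dt = t_post - t_pre
    if dt > 0:    # input spike arrived before the output spike: strengthen the synapse
        weight += A_PLUS * math.exp(-dt / TAU)
    elif dt < 0:  # output spike came first: weaken the synapse
        weight -= A_MINUS * math.exp(dt / TAU)
    return min(max(weight, w_min), w_max)  # keep the weight in its allowed range


if __name__ == "__main__":
    w = 0.5
    for t in range(0, 100, 10):
        # The input neuron repeatedly fires 2 ms before the output neuron
        w = stdp_update(w, t_pre=t, t_post=t + 2)
    print(w)  # grows above 0.5
```

Because no global error signal or external data set is needed, each synapse can learn in place, which is the property the paragraph above describes.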

Neuromorphic processors are expected to find use in smartphones and Internet of Things devices. For now, however, it is too early to talk about large-scale adoption of the technology in consumer devices.

Quantum machines

Quantum computers are built on qubits, which rely on two principles of quantum physics: superposition and entanglement. Superposition allows a qubit to be in a combination of the 0 and 1 states at the same time. Entanglement is a phenomenon in which the states of several qubits are linked, so that measuring one of them tells you something about the others. Together, these properties let a quantum computer work with many combinations of zeros and ones at once.
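Superposition and entanglement can be illustrated with a tiny state-vector simulation of two qubits. The sketch below, in plain Python with hypothetical helper names, applies a Hadamard gate to the first qubit (creating a superposition) and then a CNOT gate (entangling the two qubits), producing the Bell state (|00⟩ + |11⟩)/√2.

```python
import math

# Amplitudes are ordered |00>, |01>, |10>, |11>; the first qubit is the left bit


def hadamard_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state vector."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot(state):
    """CNOT with the first qubit as control: flips the second qubit when the first is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Measurement probability of each basis state, rounded for display."""
    return {f"{i:02b}": round(abs(a) ** 2, 3) for i, a in enumerate(state)}


if __name__ == "__main__":
    state = [1, 0, 0, 0]           # both qubits start in |00>
    state = hadamard_first(state)  # superposition: (|00> + |10>) / sqrt(2)
    state = cnot(state)            # entanglement: (|00> + |11>) / sqrt(2)
    print(probabilities(state))    # {'00': 0.5, '01': 0.0, '10': 0.0, '11': 0.5}
```

Only the outcomes 00 and 11 can occur: once one qubit is measured, the value of the other is known. Note that a classical simulation needs 2^n amplitudes for n qubits, so this approach quickly becomes impractical as the number of qubits grows.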

Photo: IBM Research / CC BY-ND

As a result, quantum computers can solve certain problems much faster than traditional systems. Examples include building mathematical models in finance, chemistry, and medicine, as well as cryptographic operations.

Today, relatively few companies are developing quantum computers. Among them are IBM with its 50-qubit quantum computer, Intel with a 49-qubit one, and IonQ, which is testing a 79-qubit device. Google, Rigetti, and D-Wave are also working in this area.

It is too early to talk about mass adoption of quantum computers. Even setting aside their high cost, these devices have serious technological limitations.

In particular, quantum machines operate at temperatures close to absolute zero, so they are installed only in specialized laboratories. This is necessary to protect fragile qubits, which can maintain superposition only for a very short time (for superconducting qubits, typically microseconds): any temperature fluctuation leads to decoherence.

That said, at the beginning of the year IBM introduced a quantum computer, IBM Q System One, that can operate outside a tightly controlled laboratory environment, for example in companies' own data centers. However, the device cannot be bought yet; its computing power can only be rented through a cloud platform. The company promises that anyone will eventually be able to buy this computer, but it is still unknown when that will happen.
