Kubernetes Dashboard and GitLab Integration

Kubernetes Dashboard is an easy-to-use tool for getting up-to-date information about a running cluster and exercising basic control over it. You come to appreciate it even more when these capabilities are needed not only by administrators and DevOps engineers, but also by people who are less used to the console and/or have no intention of dealing with all the intricacies of kubectl and other utilities. That was our case: the developers wanted quick access to the Kubernetes web interface, and since we use GitLab, the solution came naturally.

Why do we need it?

Developers themselves may be interested in a tool like K8s Dashboard for debugging. Sometimes they want to view logs and resources, and sometimes to kill pods, scale Deployments/StatefulSets, or even open a console in a container (some of these needs can also be met another way, for example, with kubectl-debug).

There is also a psychological aspect: managers sometimes want to look at the cluster, see that “everything is green”, and reassure themselves that “everything works” (which, of course, is very relative... but that is beyond the scope of this article).

As our standard CI system we use GitLab, and all developers use it too. So, to give them access, it was logical to integrate Dashboard with their GitLab accounts.

Also note that we use NGINX Ingress. If you work with another ingress controller, you will need to find the equivalent authorization annotations yourself.

Trying the integration

Install Dashboard

Attention: if you are going to repeat the steps described below, first read on to the next subheading to avoid unnecessary operations.

Since we use this integration in many installations, we have automated its installation. The sources required for this are published in a dedicated GitHub repository. They are based on slightly modified YAML configurations from the official Dashboard repository, plus a Bash script for quick deployment.

The script installs Dashboard in a cluster and configures it to integrate with GitLab:

$ ./ctl.sh

Usage: ctl.sh [OPTION]... --gitlab-url GITLAB_URL --oauth2-id ID --oauth2-secret SECRET --dashboard-url DASHBOARD_URL

Install kubernetes-dashboard to Kubernetes cluster.

Mandatory arguments:

-i, --install install into 'kube-system' namespace

-u, --upgrade upgrade existing installation, will reuse password and host names

-d, --delete remove everything, including the namespace

--gitlab-url set gitlab url with schema (https://gitlab.example.com)

--oauth2-id set OAUTH2_PROXY_CLIENT_ID from gitlab

--oauth2-secret set OAUTH2_PROXY_CLIENT_SECRET from gitlab

--dashboard-url set dashboard url without schema (dashboard.example.com)

Optional arguments:

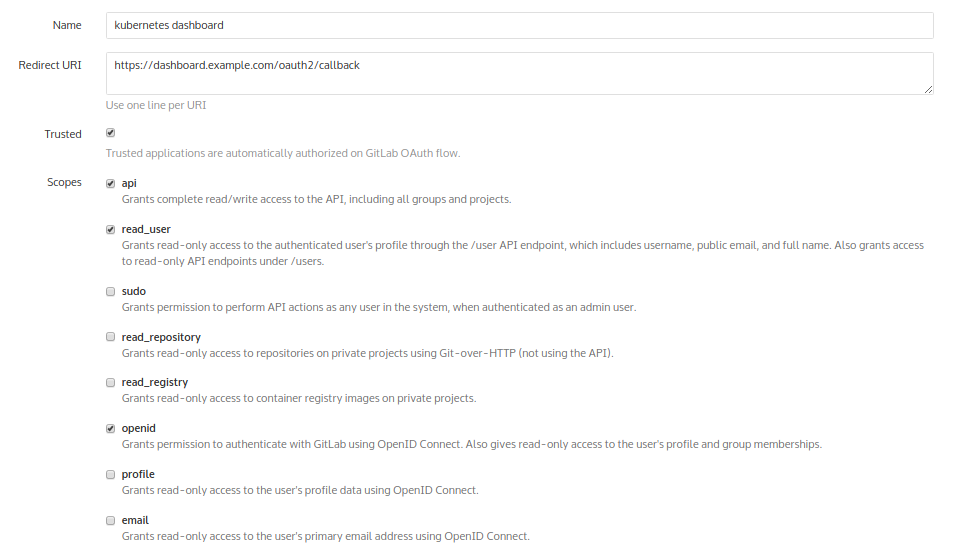

-h, --help output this messageHowever, before using it, you need to go to GitLab: Admin area → Applications - and add a new application for the future panel. Let's call it “kubernetes dashboard”:

Once the application is added, GitLab will provide its hashes (the application ID and secret):

These are passed as arguments to the script, so the installation looks like this:

```shell
$ ./ctl.sh -i --gitlab-url https://gitlab.example.com --oauth2-id 6a52769e… --oauth2-secret 6b79168f… --dashboard-url dashboard.example.com
```

After that, check that everything has started:

```shell
$ kubectl -n kube-system get pod | egrep '(dash|oauth)'
kubernetes-dashboard-76b55bc9f8-xpncp   1/1   Running   0   14s
oauth2-proxy-5586ccf95c-czp2v           1/1   Running   0   14s
```

Sooner or later everything will start, but authorization will not work right away! The reason is that in the image used (and the situation is similar in other images), catching the redirect in the callback is implemented incorrectly. As a result, oauth2_proxy erases the very cookie that it has just issued to us...

The problem is solved by building your own oauth2_proxy image with a patch.

Patching oauth2_proxy and reinstalling

To do this, use the following Dockerfile:

```dockerfile
FROM golang:1.9-alpine3.7
WORKDIR /go/src/github.com/bitly/oauth2_proxy

RUN apk --update add make git build-base curl bash ca-certificates wget \
    && update-ca-certificates \
    && curl -sSO https://raw.githubusercontent.com/pote/gpm/v1.4.0/bin/gpm \
    && chmod +x gpm \
    && mv gpm /usr/local/bin

RUN git clone https://github.com/bitly/oauth2_proxy.git . \
    && git checkout bfda078caa55958cc37dcba39e57fc37f6a3c842

ADD rd.patch .

RUN patch -p1 < rd.patch \
    && ./dist.sh

FROM alpine:3.7
RUN apk --update add curl bash ca-certificates && update-ca-certificates
COPY --from=0 /go/src/github.com/bitly/oauth2_proxy/dist/ /bin/

EXPOSE 8080 4180
ENTRYPOINT [ "/bin/oauth2_proxy" ]
CMD [ "--upstream=http://0.0.0.0:8080/", "--http-address=0.0.0.0:4180" ]
```

And here is the rd.patch itself:

```diff
diff --git a/dist.sh b/dist.sh
index a00318b..92990d4 100755
--- a/dist.sh
+++ b/dist.sh
@@ -14,25 +14,13 @@ goversion=$(go version | awk '{print $3}')
 sha256sum=()
 
 echo "... running tests"
-./test.sh
+#./test.sh
 
-for os in windows linux darwin; do
-    echo "... building v$version for $os/$arch"
-    EXT=
-    if [ $os = windows ]; then
-        EXT=".exe"
-    fi
-    BUILD=$(mktemp -d ${TMPDIR:-/tmp}/oauth2_proxy.XXXXXX)
-    TARGET="oauth2_proxy-$version.$os-$arch.$goversion"
-    FILENAME="oauth2_proxy-$version.$os-$arch$EXT"
-    GOOS=$os GOARCH=$arch CGO_ENABLED=0 \
-        go build -ldflags="-s -w" -o $BUILD/$TARGET/$FILENAME || exit 1
-    pushd $BUILD/$TARGET
-    sha256sum+=("$(shasum -a 256 $FILENAME || exit 1)")
-    cd .. && tar czvf $TARGET.tar.gz $TARGET
-    mv $TARGET.tar.gz $DIR/dist
-    popd
-done
+os='linux'
+echo "... building v$version for $os/$arch"
+TARGET="oauth2_proxy-$version.$os-$arch.$goversion"
+GOOS=$os GOARCH=$arch CGO_ENABLED=0 \
+    go build -ldflags="-s -w" -o ./dist/oauth2_proxy || exit 1
 
 checksum_file="sha256sum.txt"
 cd $DIR/dist
diff --git a/oauthproxy.go b/oauthproxy.go
index 21e5dfc..df9101a 100644
--- a/oauthproxy.go
+++ b/oauthproxy.go
@@ -381,7 +381,9 @@ func (p *OAuthProxy) SignInPage(rw http.ResponseWriter, req *http.Request, code
 	if redirect_url == p.SignInPath {
 		redirect_url = "/"
 	}
-
+	if req.FormValue("rd") != "" {
+		redirect_url = req.FormValue("rd")
+	}
 	t := struct {
 		ProviderName  string
 		SignInMessage string
```

Now you can build the image and push it to your GitLab registry. Next, in manifests/kube-dashboard-oauth2-proxy.yaml, specify the image to use (replace the value shown here with your own image):

```yaml
image: docker.io/colemickens/oauth2_proxy:latest
```

If your registry requires authorization, do not forget to add a secret for pulling images:

```yaml
imagePullSecrets:
- name: gitlab-registry
```

... and create the secret itself for the registry:

```yaml
---
apiVersion: v1
data:
  .dockercfg: eyJyZWdpc3RyeS5jb21wYW55LmNvbSI6IHsKICJ1c2VybmFtZSI6ICJvYXV0aDIiLAogInBhc3N3b3JkIjogIlBBU1NXT1JEIiwKICJhdXRoIjogIkFVVEhfVE9LRU4iLAogImVtYWlsIjogIm1haWxAY29tcGFueS5jb20iCn0KfQoK
kind: Secret
metadata:
  annotations:
  name: gitlab-registry
  namespace: kube-system
type: kubernetes.io/dockercfg
```

An attentive reader will notice that the long string above is the base64 encoding of this config:

```json
{"registry.company.com": {
 "username": "oauth2",
 "password": "PASSWORD",
 "auth": "AUTH_TOKEN",
 "email": "mail@company.com"
}
}
```

These are the credentials of the GitLab user that Kubernetes will use to pull the image from the registry.

Once everything is done, you can remove the current (malfunctioning) Dashboard installation with:

```shell
$ ./ctl.sh -d
```

... and reinstall everything:

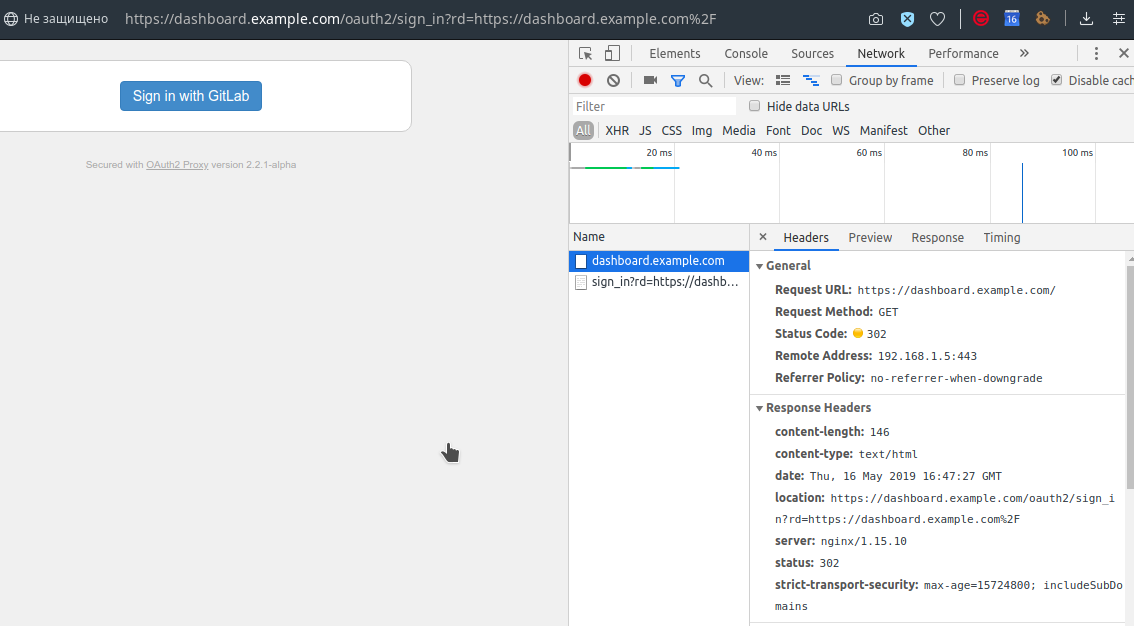

```shell
$ ./ctl.sh -i --gitlab-url https://gitlab.example.com --oauth2-id 6a52769e… --oauth2-secret 6b79168f… --dashboard-url dashboard.example.com
```

Now it is time to open the Dashboard and find a rather archaic login button:

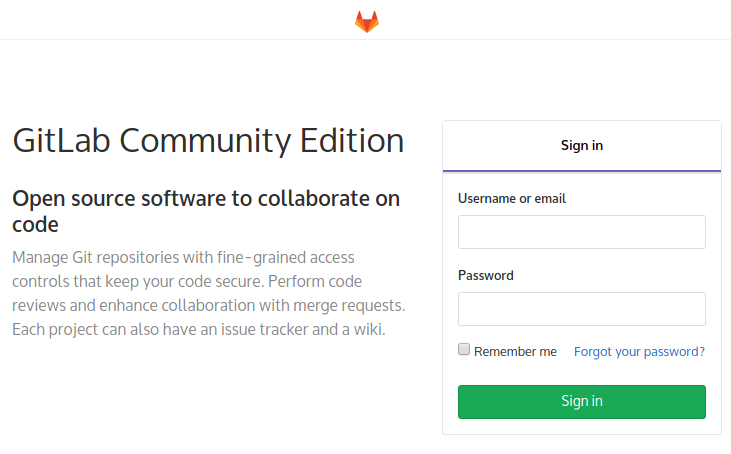

After you click it, GitLab greets you with its usual login page (unless, of course, you are already logged in there):

Log in with your GitLab credentials, and you are in:

About Dashboard Features

If you are a developer who has not worked with Kubernetes before, or you have simply never come across Dashboard, here are some of its features.

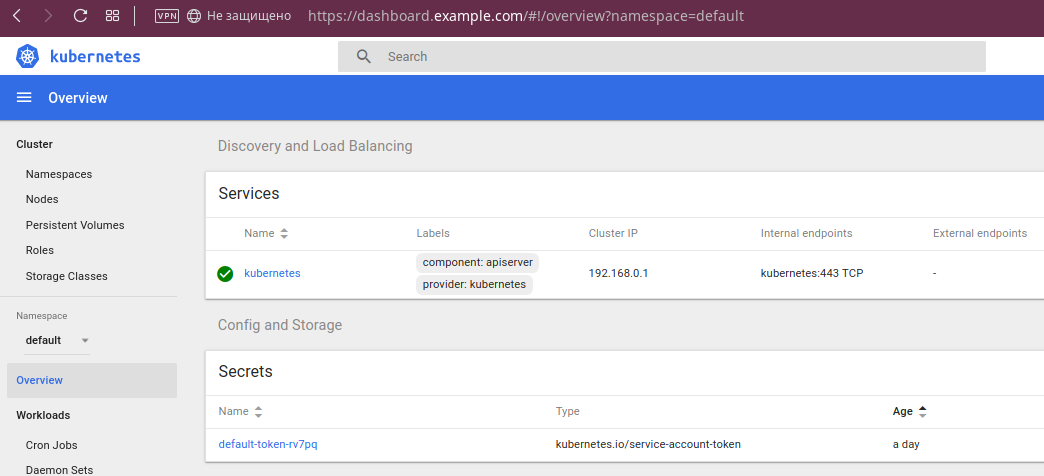

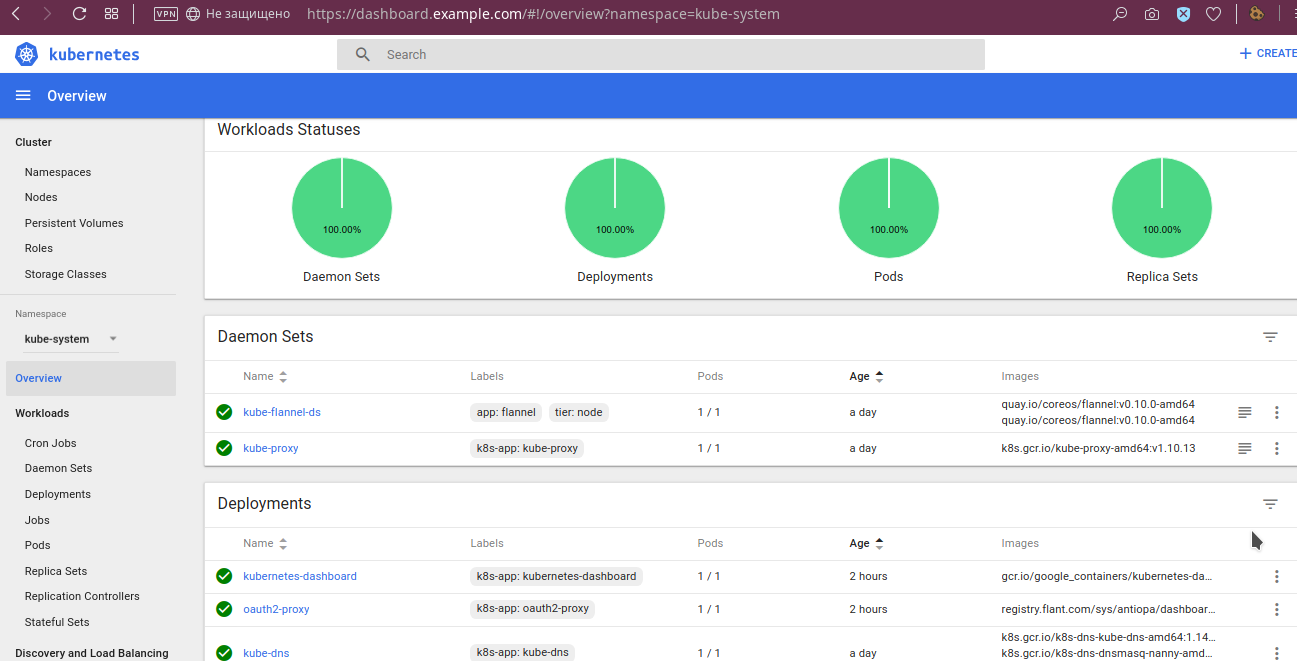

Firstly, you can see that “everything is green”:

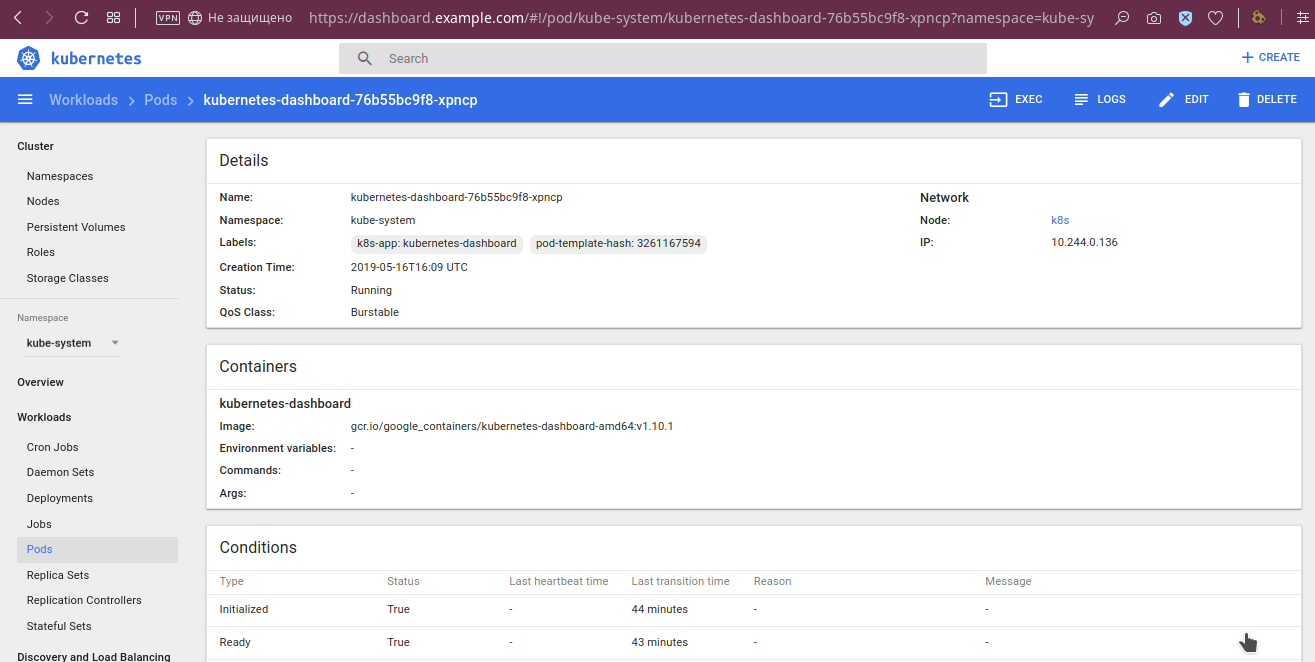

For pods, more detailed data are available, such as environment variables, the pulled image, startup arguments, and their status:

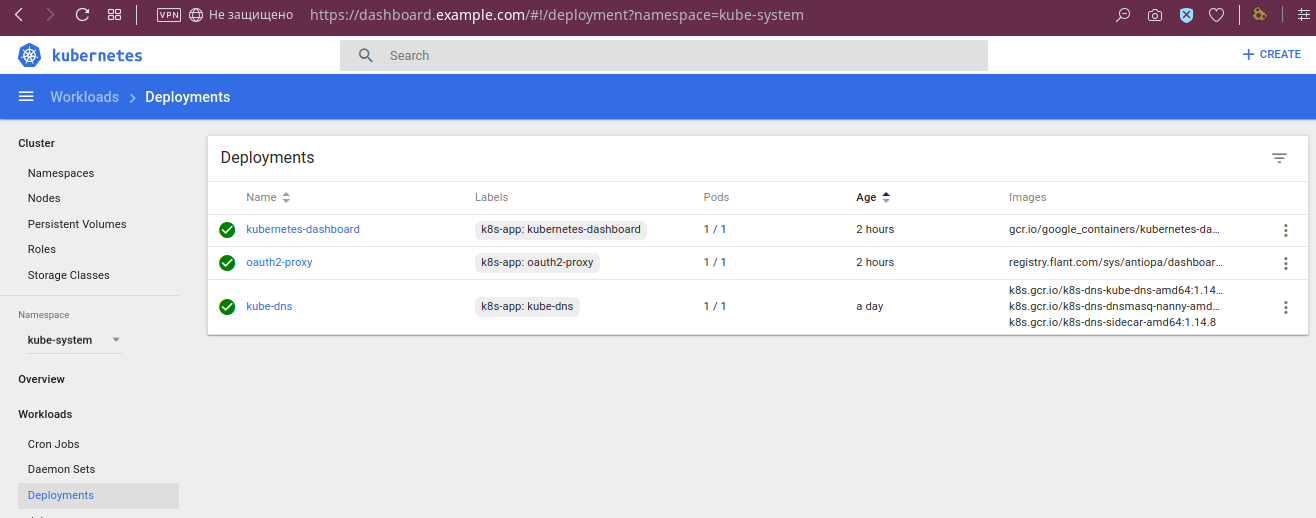

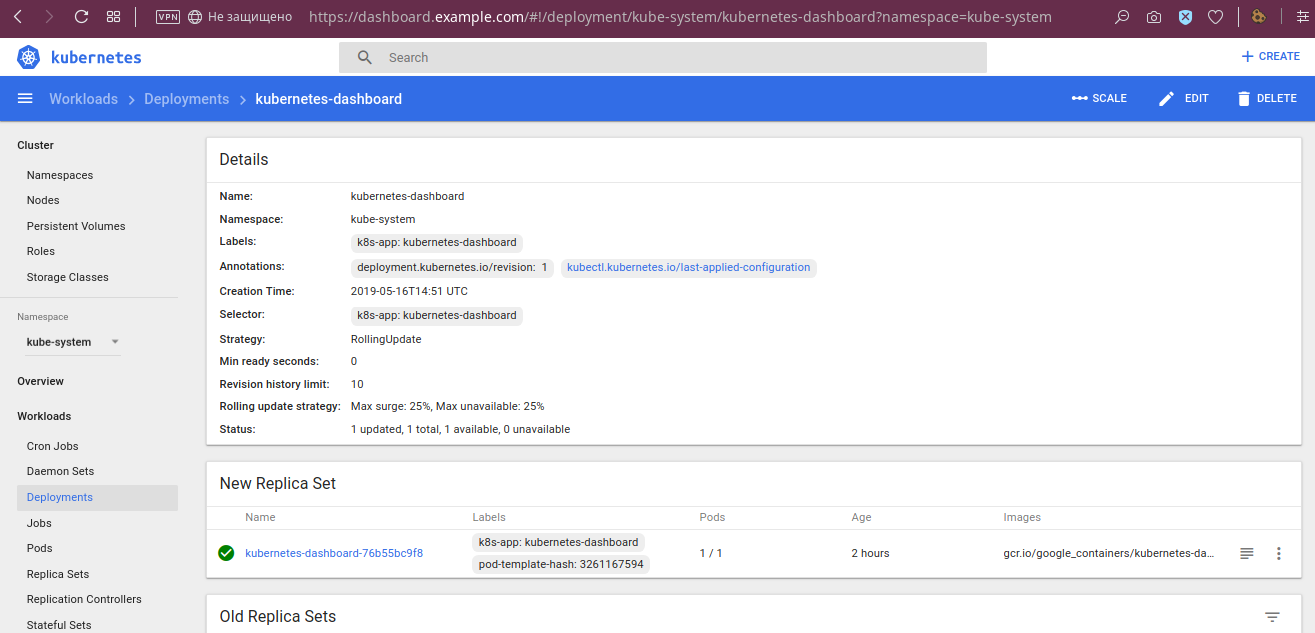

The deployment statuses are visible:

... and other details:

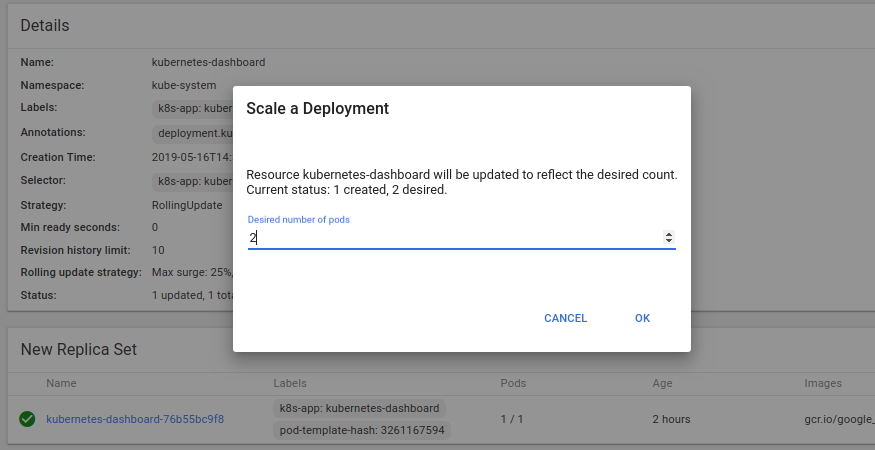

... and it’s also possible to scale the deployment:

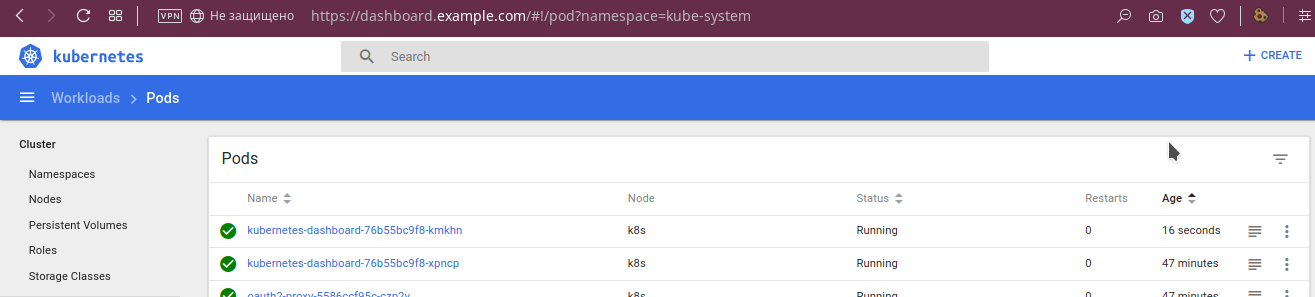

The result of this operation:

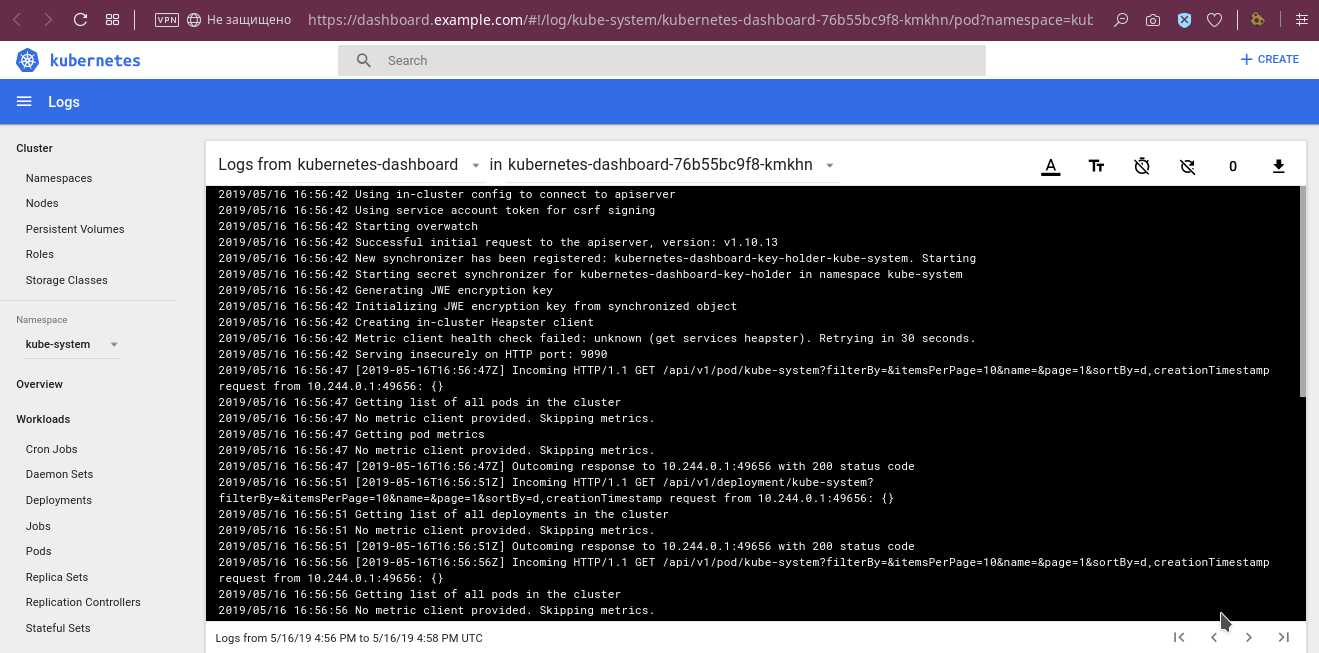

Other useful features already mentioned at the beginning of the article include viewing logs:

... and opening a console in a container of the selected pod:

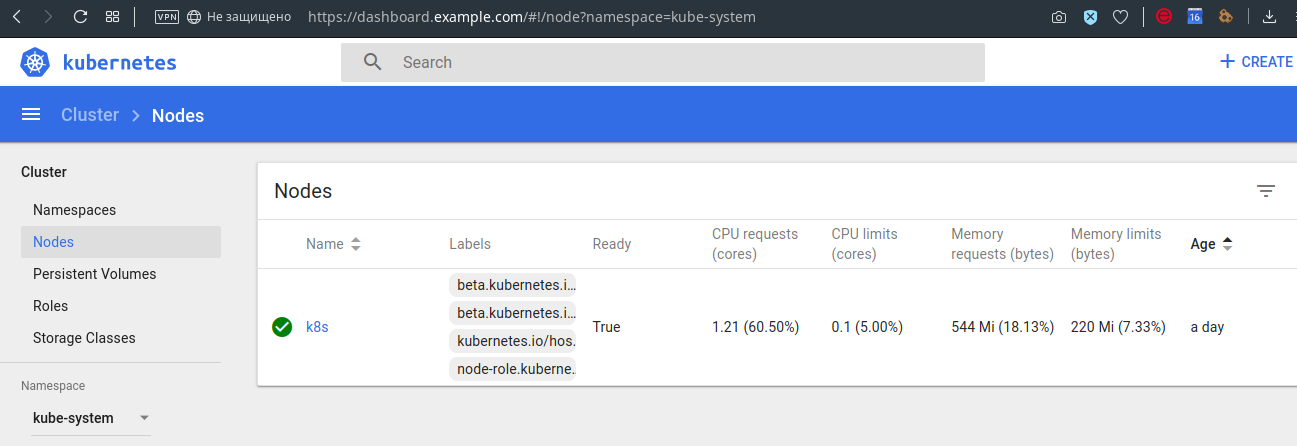

You can also see, for example, the limits/requests on the nodes:

Of course, these are not all of the panel's features, but I hope this gives you a general idea.

Disadvantages of Integration and Dashboard

The described integration has no access control: every user who has any access to GitLab gets access to the Dashboard, and all of them get the same level of access, matching the rights of the Dashboard itself as defined in RBAC. Obviously, this is not suitable for everyone, but in our case it turned out to be sufficient.

Among the noticeable drawbacks of the Dashboard itself, I would point out the following:

- you cannot open a console in an init container;

- you cannot edit Deployments and StatefulSets, although this can be fixed in the ClusterRole;

- Dashboard's compatibility with the latest versions of Kubernetes and the future of the project raise questions.

The compatibility issue deserves special attention.

Dashboard Status and Alternatives

The table of Dashboard compatibility with Kubernetes releases, presented in the latest version of the project (v1.10.1), is not very encouraging:

Despite this, there is PR #3476 (merged back in January), which announces support for K8s 1.13. In addition, among the project's issues you can find mentions of users running the panel on K8s 1.14. Finally, commits to the project's codebase keep coming. So the actual status of the project is (at least!) not as bad as the official compatibility table might first suggest.

Dashboard also has alternatives. Among them:

- K8Dash is a young interface (its first commits date from March of this year) that already offers good features, such as a visual representation of the current state of the cluster and management of its objects. It is positioned as a “real-time interface” because it automatically updates the displayed data without requiring a page refresh in the browser.

- OpenShift Console is the web interface from Red Hat OpenShift; however, it brings other components of that project into your cluster, which does not suit everyone.

- Kubernator is an interesting project created as a lower-level interface than Dashboard, with the ability to view all objects in the cluster. However, its development appears to have stopped.

- Polaris is a project announced just the other day that combines the functions of a dashboard (it shows the current state of the cluster but does not manage its objects) with automatic “best practice validation” (it checks that the Deployments running in the cluster are configured correctly).

In lieu of a conclusion

Dashboard is the standard tool for the Kubernetes clusters that we maintain. Its integration with GitLab has also become part of our “default installation”, as many developers appreciate the capabilities this panel gives them.

Alternatives to Kubernetes Dashboard appear from time to time in the open source community (and we are happy to consider them), but for now we are sticking with this solution.


UPD: As reported by whoch, an error was found in the patch (tabs had been replaced by spaces). The patch in the article has been updated.