How to move, upload and integrate very large data cheaply and quickly? What is pushdown optimization?

Any operation with big data requires a lot of computing power. A typical move of data from a database to Hadoop can take weeks or cost as much as an airplane wing. Do not want to wait and splurge? Balance the load on different platforms. One way is pushdown optimization.

I asked Alexei Ananyev, the leading Russian trainer for the development and administration of Informatica products, to talk about the pushdown optimization function in Informatica Big Data Management (BDM). Ever learned to work with Informatica products? Most likely, it was Alex who told you the basics of PowerCenter and explained how to build mappings.

Alexey Ananiev, Head of Training at DIS Group

What is pushdown?

Many of you are already familiar with Informatica Big Data Management (BDM). The product can integrate big data from different sources, move it between different systems, provides easy access to them, allows you to profile them and much more.

In skillful hands, BDM can work wonders: tasks will be completed quickly and with minimal computing resources.

You want it too? Learn how to use the pushdown feature in BDM to distribute computing load across platforms. Pushdown technology allows you to turn mapping into a script and choose the environment in which this script will run. The possibility of such a choice allows you to combine the strengths of different platforms and achieve their maximum performance.

To configure the script runtime, select the pushdown type. The script can be fully run on Hadoop or partially distributed between the source and the receiver. There are 4 possible types of pushdown. Mapping can not be turned into a script (native). Mapping can be performed as much as possible at the source (source) or completely at the source (full). Mapping can also be turned into a Hadoop (none) script.

Pushdown optimization

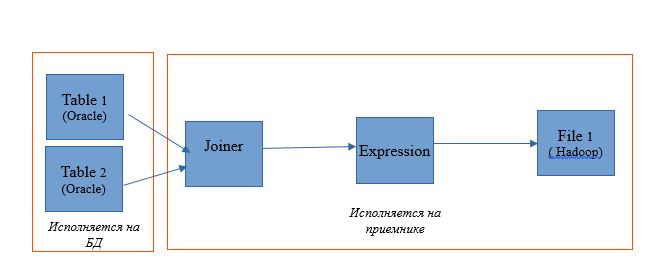

The listed 4 types can be combined in different ways - optimize pushdown for the specific needs of the system. For example, it is often more advisable to extract data from a database using its own capabilities. And to transform the data - by Hadoop, so that the database itself is not overloaded.

Let's look at the case when both the source and the receiver are in the database, and the transformation execution platform can be selected: depending on the settings, it will be Informatica, a database server or Hadoop. Such an example will make it possible to most accurately understand the technical side of this mechanism. Naturally, in real life, this situation does not arise, but it is best suited to demonstrate the functionality.

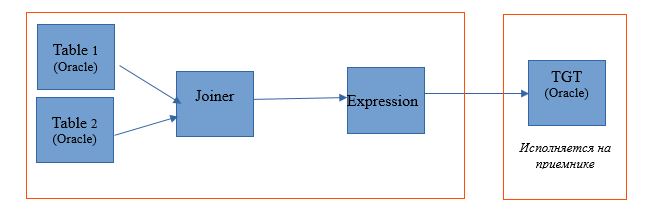

Take mapping to read two tables in a single Oracle database. And let the reading results be written to a table in the same database. The mapping scheme will be as follows:

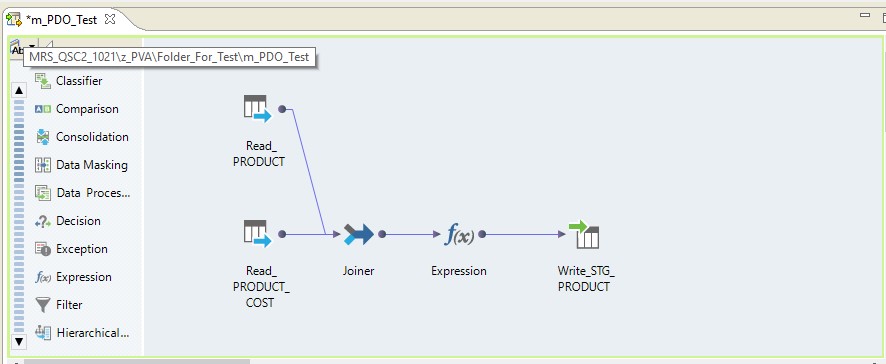

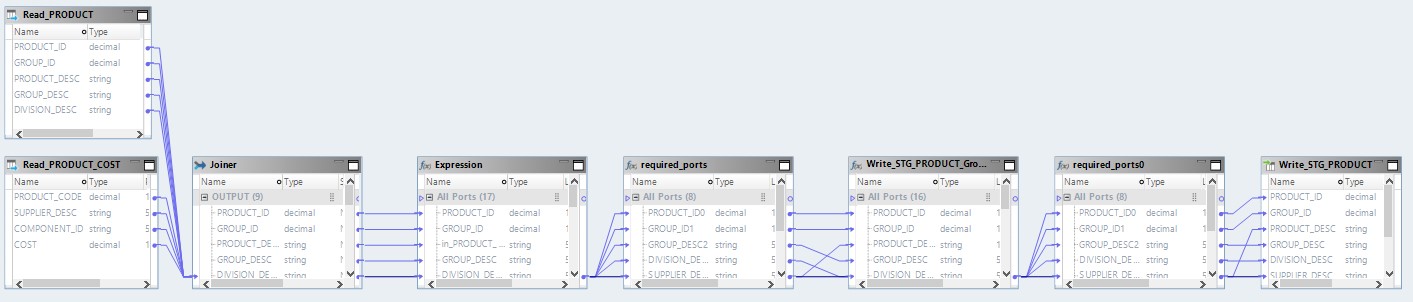

In the form of mapping on Informatica BDM 10.2.1, it looks like this:

Type pushdown - native

If we select the pushdown native type, the mapping will be performed on the Informatica server. The data will be read from the Oracle server, transferred to the Informatica server, transformed there and transferred to Hadoop. In other words, we get a regular ETL process.

Type pushdown - source

When choosing the type source, we get the opportunity to distribute our process between the database server (DB) and Hadoop. When executing a process with this setting, requests to select data from tables will fly to the database. And the rest will be done in the form of steps on Hadoop.

The execution scheme will look like this:

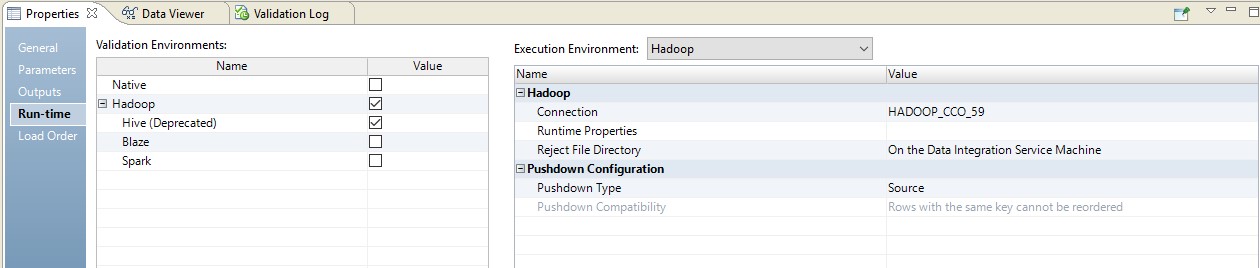

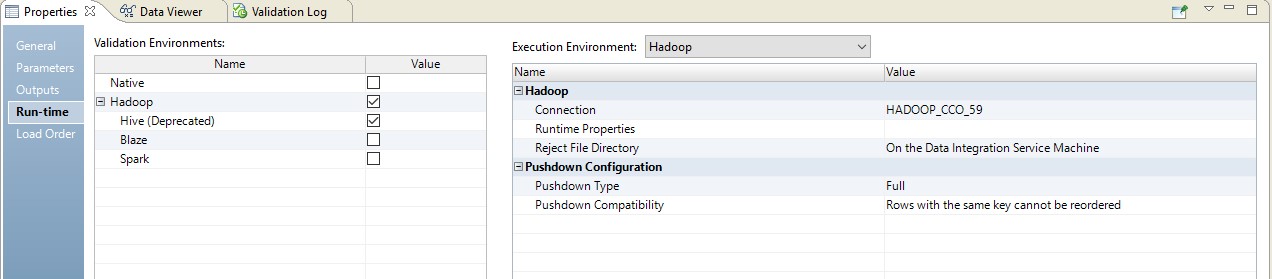

Below is an example of setting up the runtime.

In this case, mapping will be performed in two steps. In his settings, we will see that he turned into a script that will be sent to the source. Moreover, the combination of tables and data conversion will be performed in the form of an overridden query at the source.

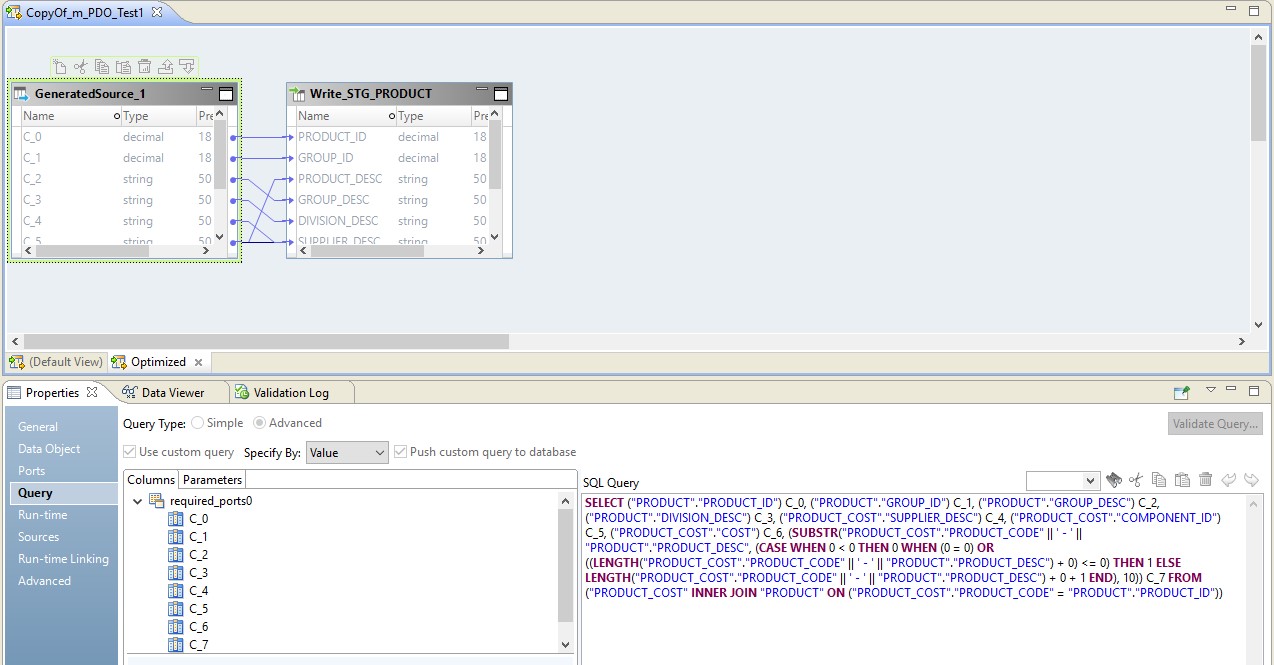

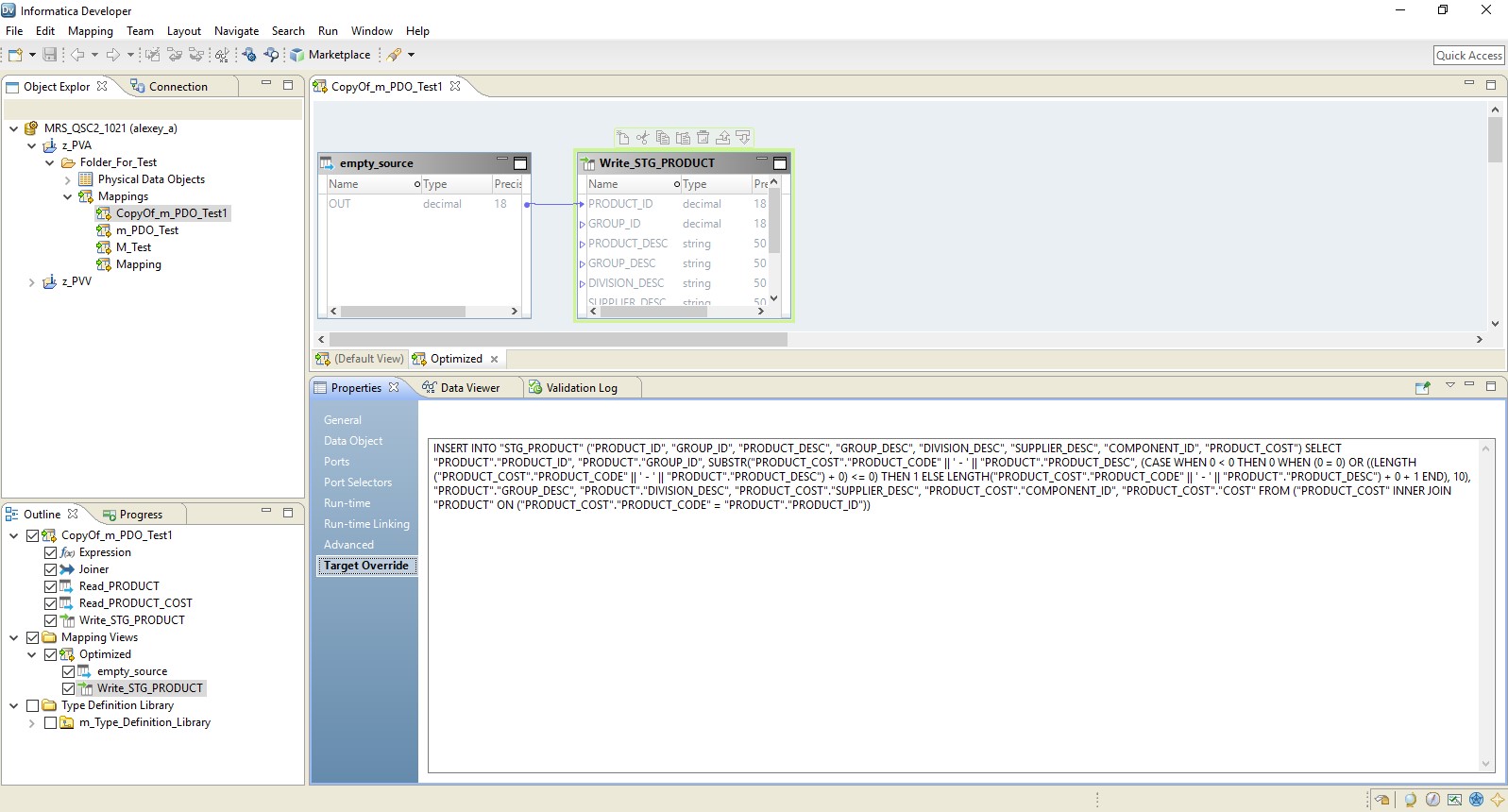

In the picture below, we see optimized mapping on BDM, and on the source - an overridden request.

Hadoop's role in this configuration comes down to managing data flow - conducting it. The result of the request will be sent to Hadoop. After reading, the file from Hadoop will be written to the receiver.

Type pushdown - full

When choosing the full type, mapping will completely turn into a database request. And the query result will be directed to Hadoop. A diagram of such a process is presented below.

An example setup is shown below.

As a result, we get an optimized mapping similar to the previous one. The only difference is that all the logic is transferred to the receiver in the form of an override of its insertion. An example of optimized mapping is presented below.

Here, as in the previous case, Hadoop acts as a conductor. But here the source is read in its entirety, and then at the receiver level the data processing logic is executed.

Type pushdown - null

Well, the last option is the pushdown type, within which our mapping will turn into a Hadoop script.

Optimized mapping will now look like this:

Here, the data from the source files will first be read on Hadoop. Then, by his own means, these two files will be combined. After that, the data will be converted and uploaded to the database.

Understanding the principles of pushdown optimization, you can very effectively organize many processes for working with big data. So, just recently, a large company in just a few weeks uploaded large data from the storage to Hadoop, which it had been collecting for several years before.