How I patched the Universe

There are a lot of articles on the development of games on Habré, but among them there are very few articles that relate to “behind the scenes” topics. One of these topics is the organization of delivery, in fact, of the game to a large number of users for a long time (one, two, three). Despite the fact that for some the task may seem trivial, I decided to share my experience of walking the rake in this matter for one specific project. Anyone interested - please.

A small digression about the disclosure of information. Most companies are very jealous that the “inner kitchen” does not become accessible to the general public. Why - I do not know, but what is - that is. In this particular project - The Universim - I was lucky and the CEO of Crytivo Inc. (formerly Crytivo Games Inc.) Alex Wallet turned out to be absolutely sane in such a matter, so I have the opportunity to share experience with others.

A little about patcher in itself

I have been involved in game development for a long time. In some - as a game designer and programmer, in some - as a fusion of a system administrator and a programmer (I do not like the term “devops”, since it does not accurately reflect the essence of the tasks I perform in such projects).

At the end of 2013 (the horror of how time flies), I thought about delivering new versions (builds) to users. Of course, at that time there were many solutions for such a task, but the desire to make a product and the desire for “bicycle building” won. In addition, I wanted to learn C # more deeply - so I decided to make my own patcher. Looking ahead, I will say that the project was a success, more than a dozen companies have used it and are using it in their projects, some asked to make a version taking into account exactly their wishes.

The classic solution involves creating delta packages (or diffs) from version to version. However, this approach is inconvenient for both tester players and developers - in one case, in order to get the latest version of the game, you need to go through the entire chain of updates. Those. the player needs to sequentially pull together a certain amount of data that he (a) will never use, and the developer to store on his server (or servers) a bunch of outdated data that some of the players might once need.

In another case - you need to download the patch for your version to the latest, but the developer needs to keep all this zoo of patches at home. Some implementations of patch systems require certain software and some logic on the servers - which also creates an additional headache for developers. In addition, often game developers do not want to do anything that is not directly related to the development of the game itself. I will say even more - most are not specialists who can configure servers for the distribution of content - this is simply not their area of activity.

With all this in mind, I wanted to come up with a solution that would be as simple as possible for users (who want to play faster and not dance with patches of different versions), as well as for developers who need to write a game and not find out what and why not updated by the next user.

Knowing how some data synchronization protocols work — when data is analyzed on the client and only changes from the server are transmitted — I decided to use the same approach.

In addition, in practice, from version to version throughout the entire development period, many game files change slightly - the texture is there, the model itself, a few sounds.

As a result, it seemed logical to consider each file in the game directory as a set of data blocks. When the next version is released, the game build is analyzed, a block map is built and the game files themselves are compressed block by block. The client analyzes existing blocks and only the difference is downloaded.

Initially, the patcher was planned as a module in Unity3D, however, one unpleasant detail emerged that made me reconsider this. The fact is that Unity3D is an application (engine) that is completely independent of your code. And while the engine is running, a whole bunch of files are open, which creates problems when you want to update them.

On Unix-like systems, overwriting an open file (unless it is specifically blocked) does not present any problems, but on Windows, without dancing with a tambourine, such a “feint with ears” fails. That is why I made the patcher as a separate application that does not load anything but system libraries. De facto, the patcher itself turned out to be a utility completely independent of the Unity3D engine, which did not prevent, however, adding it to the Unity3D store.

Patcher algorithm

So, developers release new versions with a certain frequency. Players want these versions to get. The goal of the developer is to provide this process with minimal costs and with minimal headache for players.

From the developer

When preparing the patch, the algorithm for the actions of the patcher looks like this:

○ Create a tree of game files with their attributes and checksums SHA512

○ For each file:

► Break the contents into blocks.

► Save the SHA256 checksum.

► Compress a block and add it to the file block map.

► Save the block address in the index.

○ Save the file tree with their checksums.

○ Save the version data file.

The developer has to upload the received files to the server.

Player side

On the client, the patcher does the following:

○ Copies itself to a file with a different name. This will update the patcher executable file if necessary. Then control is transferred to the copy and the original completes.

○ Download the version file and compare with the local version file.

○ If the comparison did not reveal a difference - you can play, we have the latest version. If there is a difference, go to the next item.

○ Download a tree of files with their checksums.

○ For each file in the tree from the server:

► If there is a file, it considers its checksum (SHA512). If not, it considers it to be, but empty (i.e. consists of solid zeros) and also considers its checksum.

► If the sum of the local file does not match the checksum of the file from the latest version:

► Creates a local block map and compares it with the block map from the server.

► For each local block that differs from the remote one, it downloads a compressed block from the server and overwrites it locally.

○ If there are no errors, updates the version file.

I made the data block size a multiple of 1024 bytes, after a certain number of tests, I decided that it was easier to operate with 64KB blocks. Although the universality in the code remains:

#region DQPatcher class

public class DQPatcher

{

// some internal constants

// 1 minute timeout by default

private const int DEFAULT_NETWORK_TIMEOUT = 60000;

// maximum number of compressed blocks, which we will download at once

private const UInt16 MAX_COMPRESSED_BLOCKS = 1000;

// default block size, you can use range from 4k to 64k,

//depending on average size of your files in the project tree

private const uint DEFAULT_BLOCK_SIZE = 64 * 1024;

...

#region public constants and vars section

// X * 1024 bytes by default for patch creation

public static uint blockSize = DEFAULT_BLOCK_SIZE;

...

#endregion

....If you make the blocks small, then the client requires fewer changes when the changes themselves are few. However, another problem arises - the size of the index file increases inversely with the decrease in block size - i.e. if we operate with blocks of 8KB, then the index file will be 8 times larger than with blocks of 64KB.

I chose SHA256 / 512 for files and blocks from the following considerations: the speed compared to the (obsolete) MD5 / SHA128 differs slightly, and you still need to read blocks and files. And the likelihood of collisions with SHA256 / 512 is significantly less than with MD5 / SHA128. To be completely boring - it is in this case, but it is so small that this probability can be neglected.

Additionally, the client takes into account the following points:

► Data blocks can be shifted in different versions, i.e. locally, we have block number 10, and on the server we have block number 12, or vice versa. This is taken into account so as not to download extra data.

► Blocks are requested not one at a time, but in groups - the client tries to combine the ranges of the necessary blocks and requests them from the server using the Range header. This also minimizes server load:

// get compressed remote blocks of data and return it to the caller

// Note: we always operating with compressed data, so all offsets are in the _compressed_ data file!!

// Throw an exception, if fetching compressed blocks failed

public byte[] GetRemoteBlocks(string remoteName, UInt64 startByteRange, UInt64 endByteRange)

{

if (verboseOutput) Console.Error.WriteLine("Getting partial content for [" + remoteName + "]");

if (verboseOutput) Console.Error.WriteLine("Range is [" + startByteRange + "-" + endByteRange + "]");

int bufferSize = 1024;

byte[] remoteData;

byte[] buffer = new byte[bufferSize];

HttpWebRequest httpRequest = (HttpWebRequest)WebRequest.Create(remoteName);

httpRequest.KeepAlive = true;

httpRequest.AddRange((int)startByteRange, (int)endByteRange);

httpRequest.Method = WebRequestMethods.Http.Get;

httpRequest.ReadWriteTimeout = this.networkTimeout;

try

{

// Get back the HTTP response for web server

HttpWebResponse httpResponse = (HttpWebResponse)httpRequest.GetResponse();

if (verboseOutput) Console.Error.WriteLine("Got partial content length: " + httpResponse.ContentLength);

remoteData = new byte[httpResponse.ContentLength];

Stream httpResponseStream = httpResponse.GetResponseStream();

if (!((httpResponse.StatusCode == HttpStatusCode.OK) || (httpResponse.StatusCode == HttpStatusCode.PartialContent))) // rise an exception, we expect partial content here

{

RemoteDataDownloadingException pe = new RemoteDataDownloadingException("While getting remote blocks:\n" + httpResponse.StatusDescription);

throw pe;

}

int bytesRead = 0;

int rOffset = 0;

while ((bytesRead = httpResponseStream.Read(buffer, 0, bufferSize)) > 0)

{

// if(verboseOutput) Console.Error.WriteLine("Got ["+bytesRead+"] bytes of remote data block.");

Array.Copy(buffer, 0, remoteData, rOffset, bytesRead);

rOffset += bytesRead;

}

if (verboseOutput) Console.Error.WriteLine("Total got: [" + rOffset + "] bytes");

httpResponse.Close();

}

catch (Exception ex)

{

if (verboseOutput) Console.Error.WriteLine(ex.ToString());

PatchException pe = new PatchException("Unable to fetch URI " + remoteName, ex);

throw pe;

}

return remoteData;

}Of course, it turned out that the client can be interrupted at any time and upon subsequent launch, it will de facto continue its work, and will not download everything from scratch.

Here you can watch a video illustrating the work of the patcher on the Angry Bots example project:

About how the game universe patch was organized

In September 2015, Alex Koshelkov contacted me and offered to join the project - they needed a solution that would provide 30 thousand (with a tail) of players with monthly updates. The initial size of the game in the archive is 600 megabytes. Before contacting me, there were attempts to make your own version using Electron, but everything came up against the same problem of open files (by the way, the current version of Electron can do this) and some others. Also, none of the developers understood how this would work - they provided me with several bicycle designs, the server part was absent altogether - they wanted to do it after all the other tasks have been solved.

In addition, it was necessary to solve the problem of how to prevent the leakage of player keys - the fact is that the keys were for the Steam platform, although the game itself on Steam was not yet publicly available. Distributing the game was required strictly by the key - although there was a chance that the players could share the game key with friends, this could be neglected, since if the game appeared on Steam, the key could be activated only once.

In the normal version of the patcher, the data tree for the patch looks like this:

./

| - linux

| | - 1.0.0

| `- version.txt

| - macosx

| | - 1.0.0

| `- version.txt

`- windows

| - 1.0.0

`- version.txt

I needed to make sure that only those with the correct key had access.

I came up with the following solution - for each key we get its hash (SHA1), then we use it as a path to access the patch data on the server. On the server, we transfer the patch data to a level higher than the docroot, and add symlinks to the directory with the patch data in the docroot itself. Symbolic links have the same names as key hashes, only broken into several levels (to facilitate the operation of the file system), i.e. the hash 0f99e50314d63c30271 ... ... ade71963e7ff will be represented as

./0f/99/e5/0314d63c30271.....ade71963e7ff -----> / full / path / to / patch-data /

Thus, it is not necessary to distribute the keys themselves to someone who will be supporting the update servers - just pass their hashes, which are absolutely useless to the players themselves.

To add new keys (or delete old ones) - just add / remove the corresponding symbolic link.

With this implementation, the verification of the key itself is obviously not performed anywhere; receiving 404 errors on the client indicates that the key is incorrect (or has been deactivated).

It should be noted that key access is not a full-fledged DRM protection - these are just restrictions at the stage of (closed) alpha and beta testing. And the search is easily cut off by the means of the web server itself (at least in Nginx, which I use).

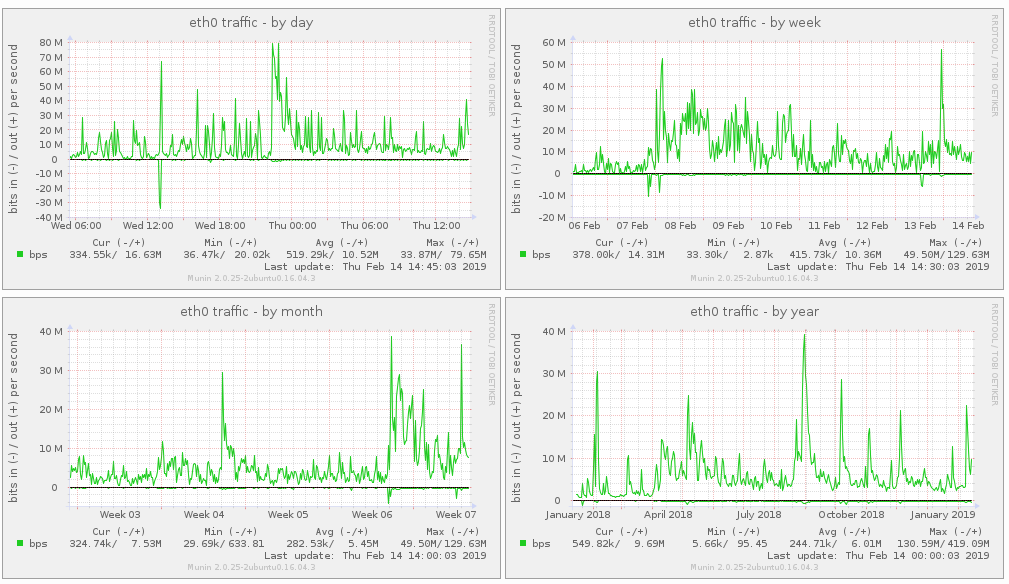

In the launch month, only 2.5 TB of traffic was given out on the first day alone, and about the same amount is distributed on average per month on the following days:

Therefore, if you plan to distribute a lot of content, it is best to calculate in advance how much it will cost you. According to personal observations - the cheapest traffic from European hosters, the most expensive (I would say “gold”) from Amazon and Google.

In practice, the traffic savings per year on average at The Universim are huge - compare the numbers above. Of course, if a user doesn’t have a game at all or if it is very outdated, a miracle will not happen and he will have to download a lot of data from the server - if from scratch, then a little more than the game takes in the archive. However, with monthly updates, things get really good. In less than 6 months, the American mirror gave a little more than 10 TB of traffic, without the use of a patcher this value would have grown significantly.

This is what the project’s annual traffic looks like:

A few words about the most memorable “rake” that we had to step upon while working on a custom patcher for the game “The Universim”:

● The biggest trouble was awaiting me from the antiviruses. Well, they don’t like applications that download something from the Internet there, modify files (including executable ones), and then also try to run the downloaded. Some antiviruses did not just block access to local files - they also blocked the calls to the update server themselves, getting directly into the data that the client downloaded. The solution was to use a valid digital signature for the patcher - this dramatically reduces the paranoia of antiviruses, and using the HTTPS protocol instead of HTTP - quickly eliminates some of the errors associated with the curiosity of antiviruses.

● Progress update. Many users (and customers) want to see update progress. One has to improvise, since it is not always possible to reliably show progress without having to do extra work. Yes, and the exact time of the end of the patch process cannot be displayed either, since the patcher itself does not have data on which files need updating in advance.

● A huge number of users from the USA have connection speeds to servers from Europe not very high. Migrating the update server to the USA solved this problem. For users of other continents, we left the server in Germany. By the way, traffic in the USA is much more expensive than European, in some cases - several dozen times.

● Apple is not very comfortable with this method of installing applications. Official policy - applications should be installed only from their store. But the trouble is, applications at the alpha and beta testing stages are not allowed in the store. And even more so, there is nothing to talk about selling raw applications from early access. Therefore, you have to write instructions on how to dance on poppies. The option with AppAnnie (then they were still independent) was not considered due to the limit on the number of testers.

● Networking is fairly unpredictable. In order for the application not to give up immediately, I had to enter an error counter. 9 caught exceptions allow you to firmly tell the user that he has problems with the network.

● 32-bit operating systems have restrictions on the size of files that are displayed in memory (Memory Mapped Files - MMF) for each thread of execution and for the process as a whole. The first versions of the patcher used MMF to speed up work, but since the files of game resources can be huge, I had to abandon this approach and use ordinary file streams. A special performance loss, by the way, was not observed - most likely due to the proactive reading of the OS.

● You have to be prepared for users to complain. No matter how good your product may be, there will always be those who are not satisfied. And the more users of your product (in the case of The Universim there are more than 50 thousand at the moment) - the more quantitatively there will be complaints to you. In percentage terms, this is a very small number, but in quantitative terms ...

Despite the fact that the project as a whole was a success, it has some drawbacks:

● Even though I initially took out all the main logic separately, the GUI part is different in implementation for MAC and Windows. The Linux version did not cause problems - all the problems were mainly only when using a monolithic build that did not require the Mono Runtime Environment - MRE. But since you need to have an additional license to distribute such executable files, it was decided to abandon monolithic builds and simply require MRE. The Linux version differs from the Windows version only in support for file attributes specific to * nix systems. For my second project, which will be more than just a patcher, I plan to use a modular approach in the form of a kernel-process that runs in the background and allows managing everything on the local interface. And the control itself can be carried out from an application based on Electron and the like (or simply from a browser). With any little thing. Before talking about the size of the distribution of such applications - look at the size of the games. Demo (!!!) versions of some occupy 5 or more gigabytes in the archive (!!!).

● The structures used now do not save space when the game is released for 3 platforms - de facto, you need to keep 3 copies of almost identical data, albeit compressed.

● The current version of the patcher does not cache its work - every time all the checksums of all files are recalculated. It would be possible to significantly reduce the time if the patcher cached the results for those files that are already on the client. But there is one dilemma - if the file is damaged (or missing), but the cache entry for this file is saved, then the patcher will skip it, which will cause problems.

● The current version is not able to work simultaneously with multiple servers (unless you make Round-robin using DNS) - I would like to switch to a “torrent-like” technology so that you can use multiple servers at the same time. There is no question of using clients as a data source, as this raises many legal issues and it is easier to refuse from the beginning.

● If you want to restrict access to updates, then this logic will have to be implemented independently. Actually, this can hardly be called a drawback, since everyone can have their own wishes regarding the restrictions. The simplest key restriction - without any server part - is made fairly simple, as I showed above.

● A patcher is created for only one project at a time. If you want to build something similar to Steam, then an entire content delivery system is already required. And this is a project of a completely different level.

I plan to put the patcher myself in the public domain after the “second generation” is implemented - a game content delivery system that will include not only the evolved patcher, but also a telemetry module (since developers need to know what the players are doing), Cloud Saves module and some other modules.

If you have a non-profit project and need a patcher, write me the details about your project and I will give you a copy for free. There will be no links here, since this is not the “I PR” hub.

I will be happy to answer your questions.