Three seamless data centers, or how VTB protects business systems

VTB's retail business relies on more than 150 systems, and all of them need reliable protection. Some systems are critical, others are tightly coupled to each other; in short, the task is large-scale. In this post we explain how we solved it so that even if a meteorite hits one of the data centers, the bank keeps working and its data stays intact.

Project development

Initially, we planned a disaster-tolerant solution of two identical data centers, a main one and a backup, with "manual" switchover to the backup site. With such a scheme, however, the backup site would sit idle while still requiring the same maintenance as the main one. We therefore chose an active-active scheme in which both data centers, located 40 km apart, run in normal operation and serve business systems at the same time. This doubles the total capacity and performance, which is especially valuable under peak load (no additional scaling is needed), and maintenance can be carried out without disrupting business processes.
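The 40 km spacing also bounds how quickly the two sites can confirm anything to each other. As a rough estimate, assuming light in optical fiber propagates at about 2 × 10^8 m/s and the fiber path is exactly 40 km (real cable routes are longer, and network and storage equipment add their own delays), the minimum propagation delay between the sites is:

```latex
t_{\text{one-way}} = \frac{d}{v} = \frac{40 \times 10^{3}\,\text{m}}{2 \times 10^{8}\,\text{m/s}} = 2 \times 10^{-4}\,\text{s} = 200\,\mu\text{s},
\qquad
t_{\text{RTT}} = 2\,t_{\text{one-way}} = 400\,\mu\text{s}.
```

Any operation that must be acknowledged by both sites, such as a synchronously replicated write, therefore pays at least roughly 0.4 ms per round trip. At this distance that overhead is small, which helps make running both sites as one active-active system practical.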

The creation of the disaster-tolerant data center system was split into two stages. The first stage covered about 50 important business systems whose RPO (recovery point objective) must be close to zero, including the ABS (automated banking system), the anti-fraud system, processing, CRM, and the remote banking system that serves both individuals and legal entities online. This variety of systems became the main difficulty in developing the conceptual design of the backup infrastructure.

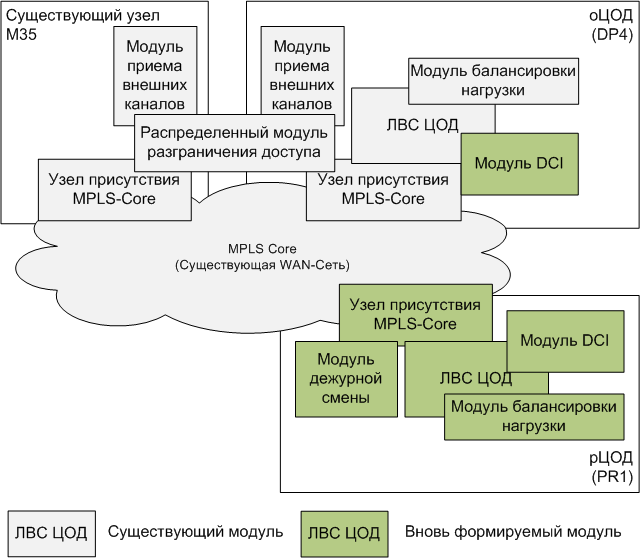

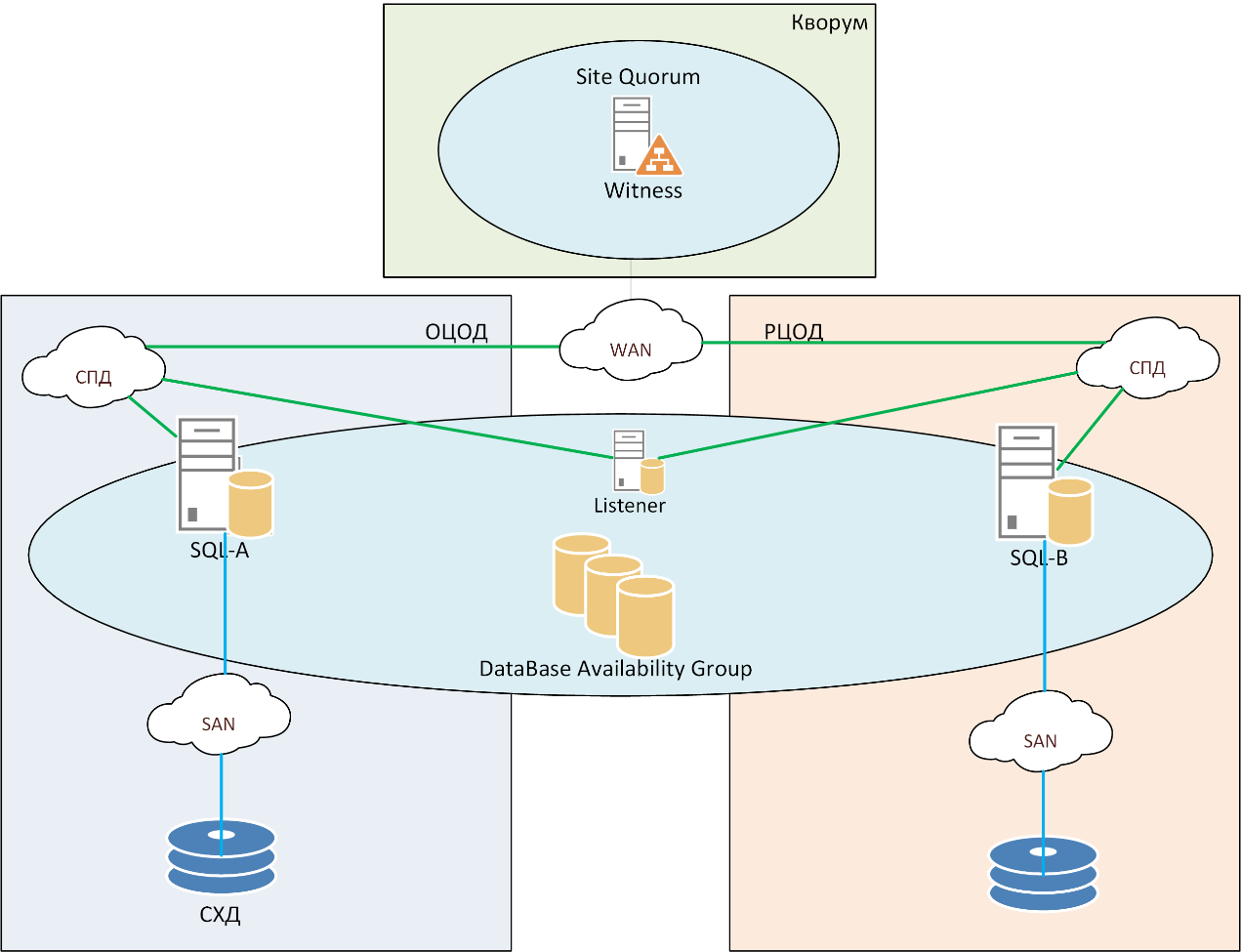

At first, everything was built on standard solutions. But when the draft design was applied to real business systems, it turned out that much of it had to be adapted by hand: many components simply did not fit the standard patterns. In such cases we had to find individual approaches, for example for the largest business system, the “General Ledger” ABS. We also had to rework the standard solution for Oracle because it did not meet the zero-data-loss requirement. The same happened with the Microsoft SQL Server databases and with a number of other systems. The critical systems also included the internal information buses through which other systems exchange data, in particular USB-front and USB-back.

USB-front IS backup scheme

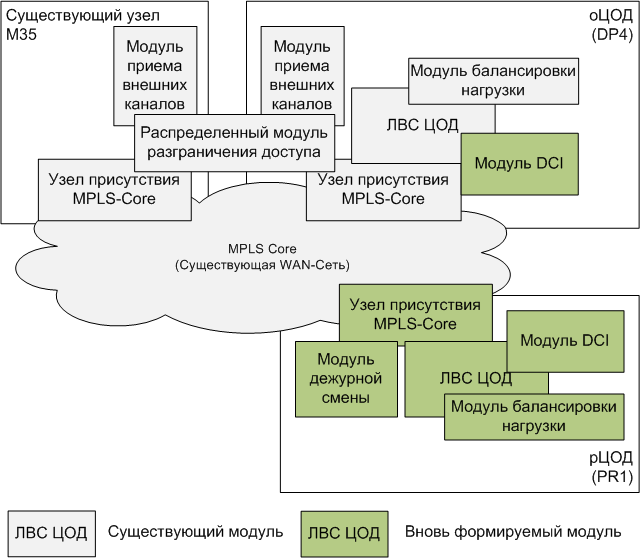

USB-back IS backup scheme

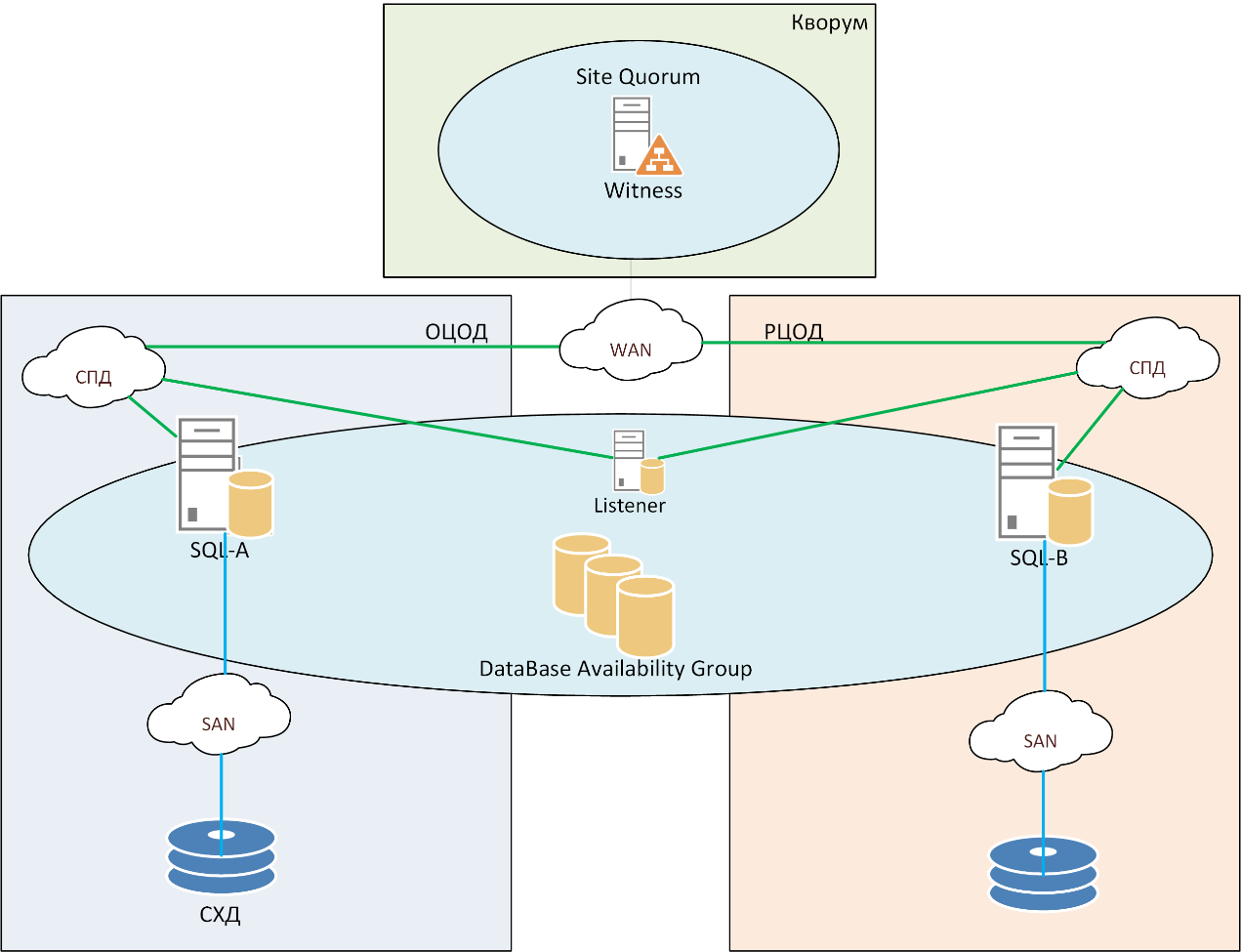

MS SQL Server redundancy scheme

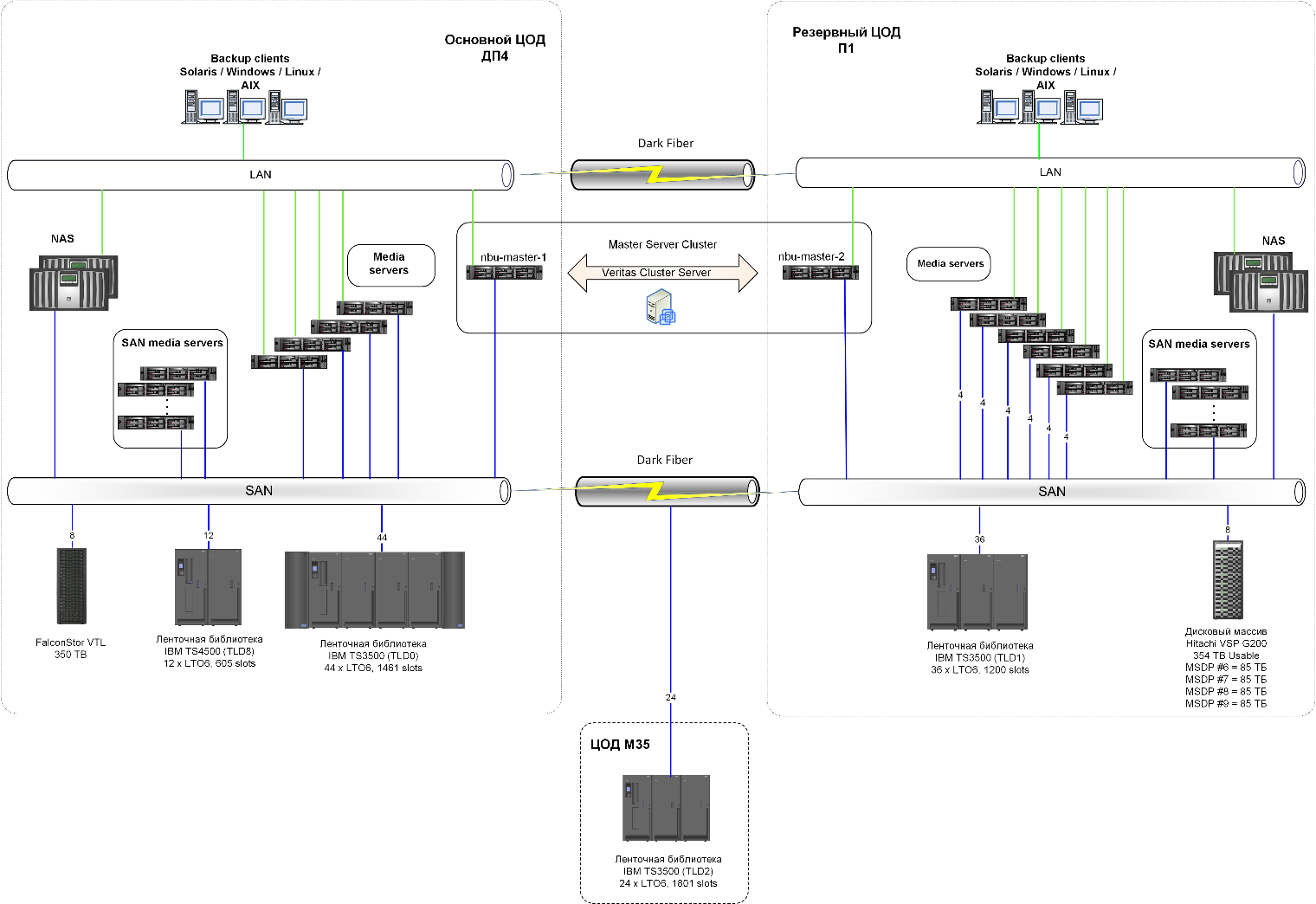

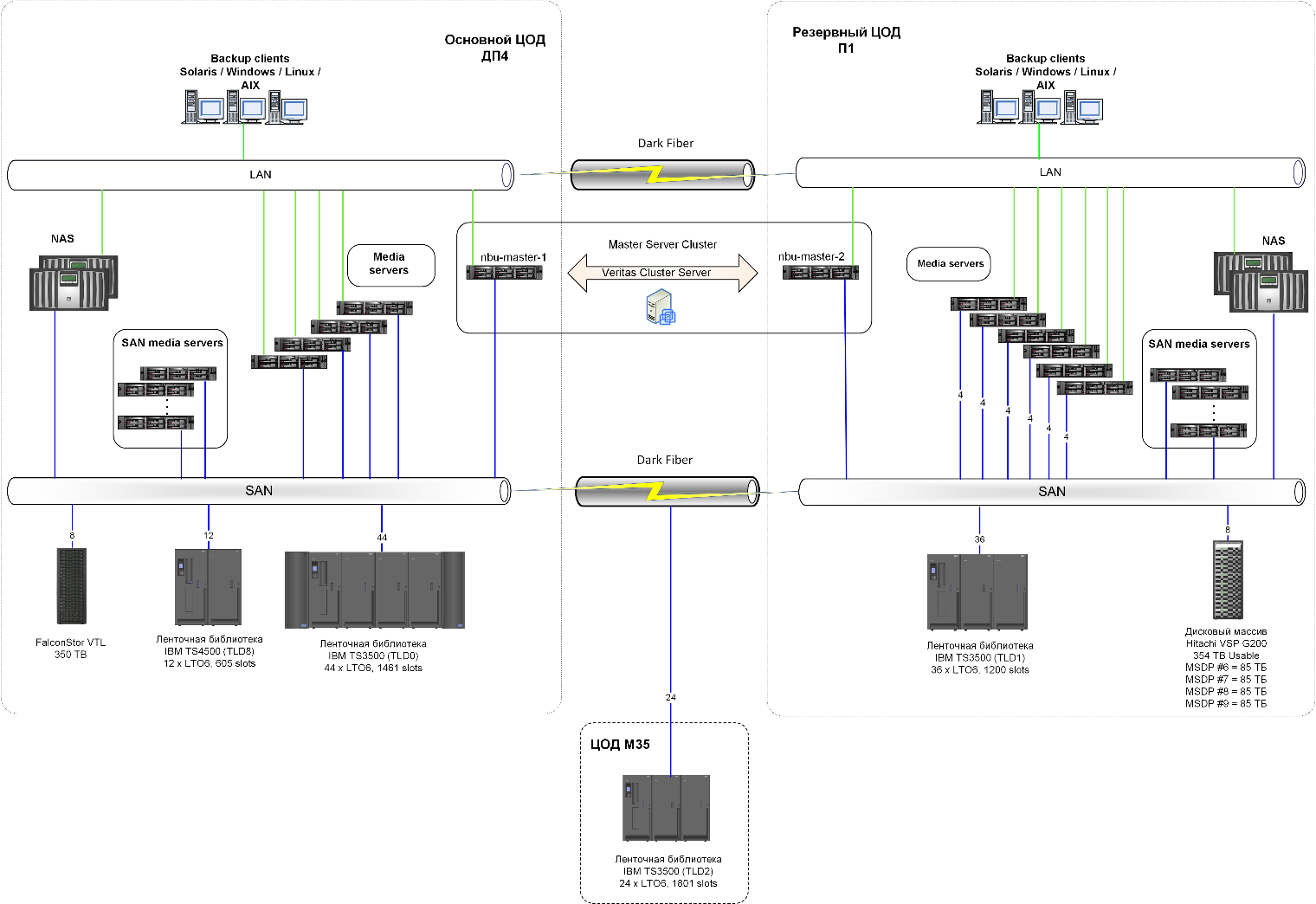

In addition to the two main data centers operating in active-active mode, a third data center was created to host devices that act as arbiters. They prevent a split-brain situation if the connection between the two main sites is lost. The network spanning the two main data centers is flat, with no routing between the sites; it is built on Cisco equipment and tunnels L2 traffic over L3 using OTV (Overlay Transport Virtualization). The sites are connected by an MPLS network over optical fiber laid along two different routes. The inter-site channel provides 160 Gbit/s for the data network and 256 Gbit/s for the storage network, and in the storage network the two sites are connected directly over optical links.
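Why a third site prevents split-brain can be illustrated with a simple majority-vote model. This is a minimal sketch, not the logic of any product used in the project; `Witness` and `may_keep_serving` are hypothetical names. Each main site counts three possible votes: its own, its peer's, and the arbiter's. Because the arbiter gives its vote to only one site, two sites that have lost contact with each other can never both keep working.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Witness:
    """Hypothetical tie-breaker running at the third site.

    It grants its vote to at most one main site, so two isolated
    sites can never both reach a majority.
    """
    granted_to: Optional[str] = None

    def request_vote(self, site: str) -> bool:
        # The first site to ask wins; a later request from the other site is refused.
        if self.granted_to is None:
            self.granted_to = site
        return self.granted_to == site


def may_keep_serving(site: str, peer_reachable: bool,
                     witness_reachable: bool, witness: Witness) -> bool:
    """Return True if `site` holds a majority of the three votes (site, peer, witness)."""
    votes = 1  # the site always votes for itself
    if peer_reachable:
        votes += 1  # both main sites see each other: no partition, nothing to decide
    elif witness_reachable and witness.request_vote(site):
        votes += 1  # inter-site link lost, but the witness backs this site
    return votes >= 2


if __name__ == "__main__":
    # The inter-site link fails; both main sites can still reach the third site.
    w = Witness()
    print("DC1 serves:", may_keep_serving("DC1", False, True, w))  # True
    print("DC2 serves:", may_keep_serving("DC2", False, True, w))  # False
```

A real arbiter also has to distinguish a failed site from a failed link, reset its decision once the link is restored, and usually prefers a configured site instead of whichever one asks first.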

Data network diagram

Infrastructure

To implement the project, we had to purchase only 40% of the equipment; the remaining 60% was already in stock.

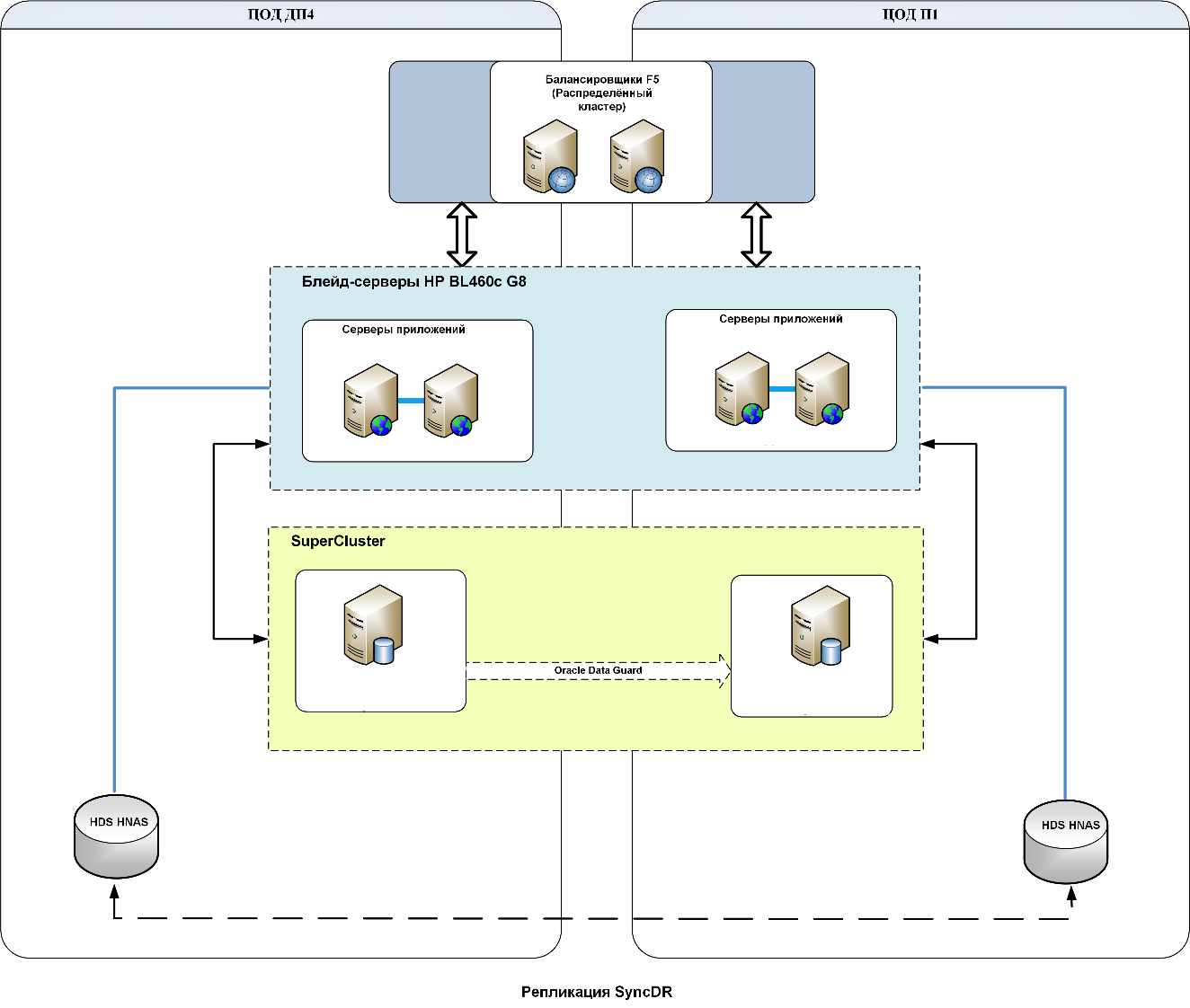

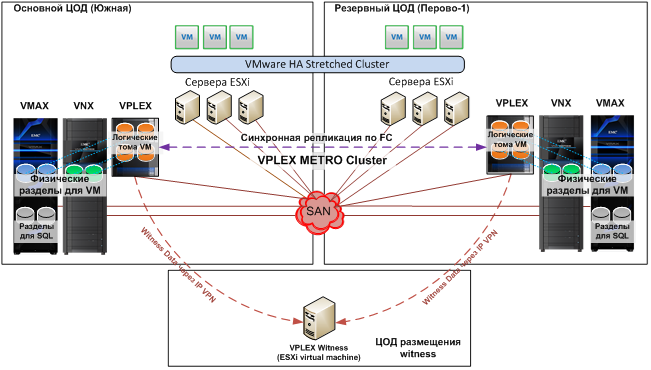

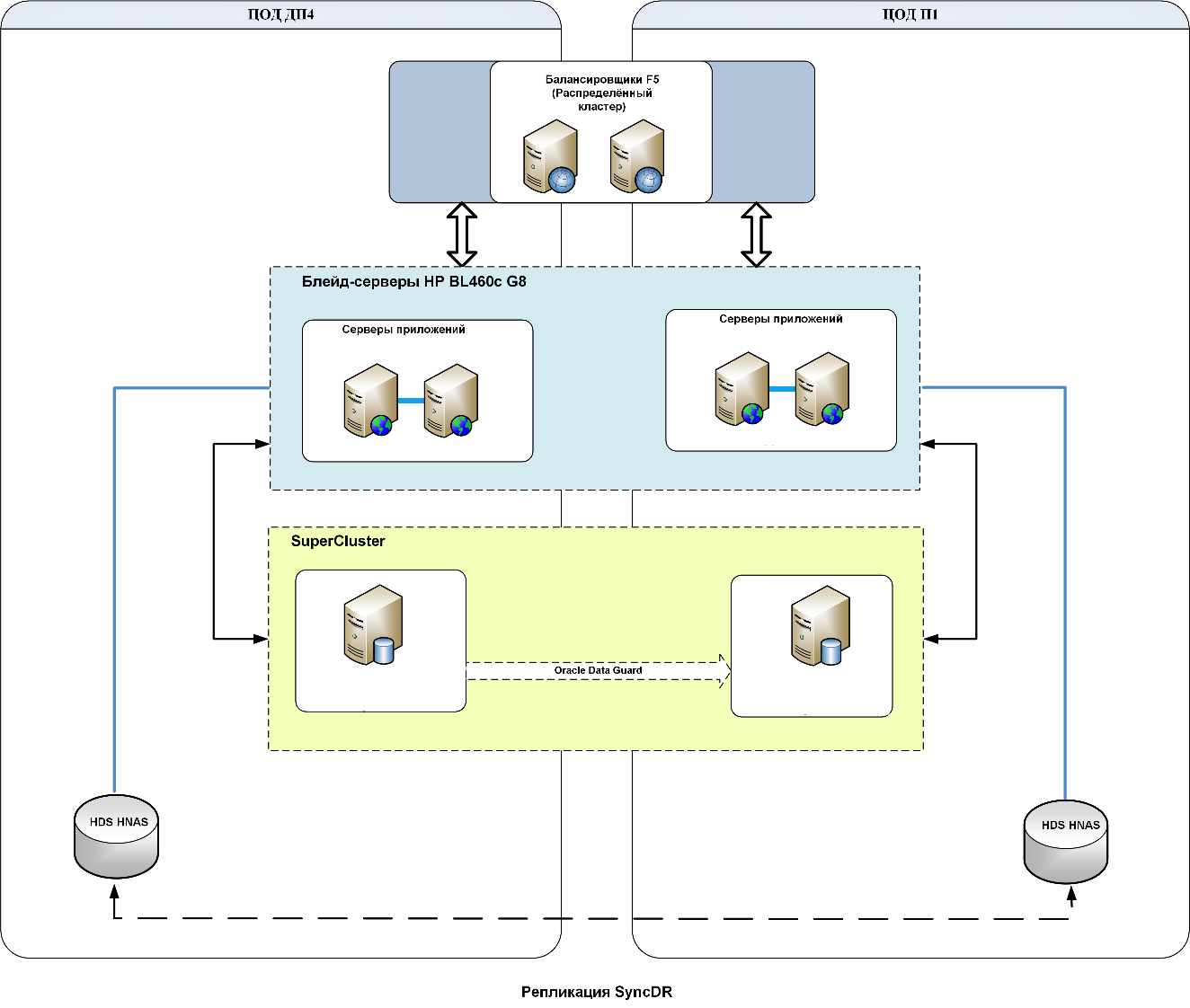

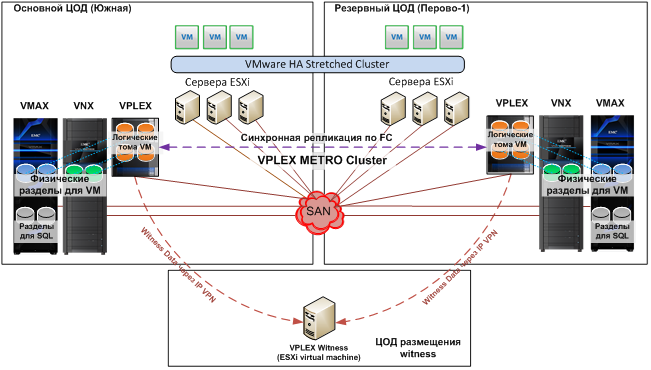

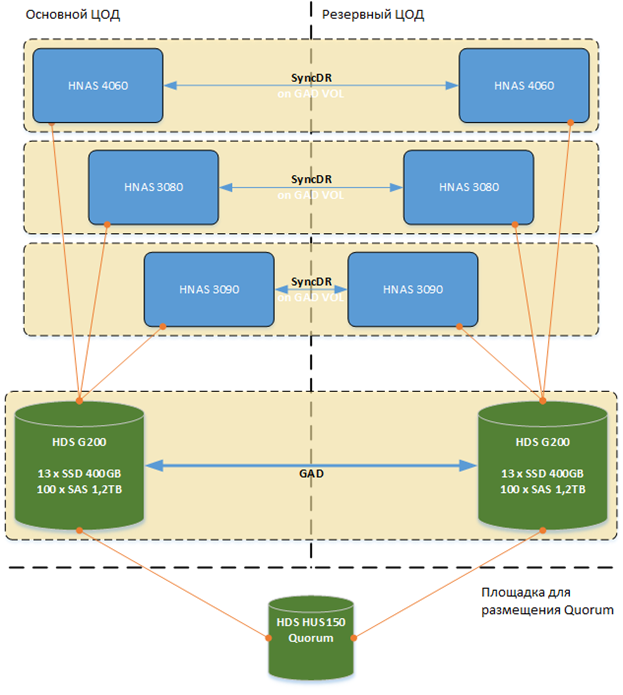

Storage systems at both sites are combined, and a cluster of F5 BIG-IP load balancers provides uniform external access to the application servers. For virtual machines we built a stretched VMware cluster on EMC VPLEX storage virtualizers, with the EMC VMAX and Hitachi VSP disk arrays at each site attached to the VPLEX cluster. The file service is also stretched across the two data centers and built on Hitachi technology: Hitachi GAD (global-active device) synchronizes data between the sites, and clustered HNAS devices in both data centers serve the files.
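To illustrate what active-active access means for clients, here is a minimal sketch of a balancer that spreads requests over application servers in both data centers and simply drops a site whose health check fails. It is not F5 BIG-IP configuration or iRule syntax; the server names and the `distribute` helper are hypothetical, and health is tracked per site rather than per server purely for brevity.

```python
from itertools import cycle, islice
from typing import Dict, List


def healthy_backends(pools: Dict[str, List[str]], site_up: Dict[str, bool]) -> List[str]:
    """Application servers from every data center whose health check passes."""
    return [
        server
        for site, servers in sorted(pools.items())
        if site_up.get(site, False)
        for server in servers
    ]


def distribute(requests: int, pools: Dict[str, List[str]], site_up: Dict[str, bool]) -> List[str]:
    """Assign `requests` round-robin across all healthy servers, whichever site they are in."""
    backends = healthy_backends(pools, site_up)
    if not backends:
        raise RuntimeError("no healthy data center available")
    return list(islice(cycle(backends), requests))


if __name__ == "__main__":
    pools = {"DC1": ["app1", "app2"], "DC2": ["app3", "app4"]}
    # Normal operation: both sites take traffic.
    print(distribute(4, pools, {"DC1": True, "DC2": True}))   # ['app1', 'app2', 'app3', 'app4']
    # DC1 lost: the same clients are served entirely by DC2.
    print(distribute(4, pools, {"DC1": False, "DC2": True}))  # ['app3', 'app4', 'app3', 'app4']
```

In normal operation both sites carry load, which is where the doubled capacity comes from; when one site drops out, clients keep the same entry point and only the set of backends shrinks.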

VMware and VPLEX Disk Array Scheme

For databases we rely on built-in replication: Oracle Data Guard for Oracle and Always On for Microsoft SQL Server. To avoid data loss, Always On runs in synchronous-commit mode, and Oracle writes its redo to the other site at the same time, which allows the database to be recovered up to the very last moment before a failure. The procedure has been developed, debugged, and documented.
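Why only synchronous mode gives a near-zero RPO can be shown with a toy model. This is a conceptual sketch, not how Always On or Data Guard are implemented or configured; `Site`, `commit` and `lost_on_failover` are hypothetical names. In synchronous mode a commit is acknowledged only after both sites hold the record, so losing the primary site loses nothing a client was told had been committed.

```python
from typing import List


class Site:
    """One copy of a database in one data center, reduced to its commit log."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.log: List[str] = []


def commit(record: str, primary: Site, standby: Site, synchronous: bool) -> None:
    """Acknowledge a transaction to the client.

    synchronous=True:  the record is stored at both sites before the call
                       returns (in reality: wait for the remote acknowledgement).
    synchronous=False: only the local write is awaited; shipping to the
                       standby happens later and is not modelled here.
    """
    primary.log.append(record)
    if synchronous:
        standby.log.append(record)


def lost_on_failover(primary: Site, standby: Site) -> List[str]:
    """Acknowledged records the standby would lack if the primary site vanished now."""
    replicated = set(standby.log)
    return [r for r in primary.log if r not in replicated]


if __name__ == "__main__":
    dc1, dc2 = Site("DC1"), Site("DC2")
    for i in range(3):
        commit(f"tx{i}", dc1, dc2, synchronous=True)
    print(lost_on_failover(dc1, dc2))  # []
    commit("tx3", dc1, dc2, synchronous=False)
    print(lost_on_failover(dc1, dc2))  # ['tx3']
```

The price of synchronous mode is that every commit waits for the remote site, which is why the distance between the data centers and the latency of the inter-site link matter.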

The databases of many systems run on IBM Power servers, and the infrastructure also includes 1,700 Hewlett Packard x86 blade servers of different generations, mostly dual-processor. The network is built on Cisco Nexus 7000 equipment, and the SAN on Brocade DCX switches of different generations. Oracle engineered systems (Exadata, SuperCluster, Exalogic) are also distributed across the sites.

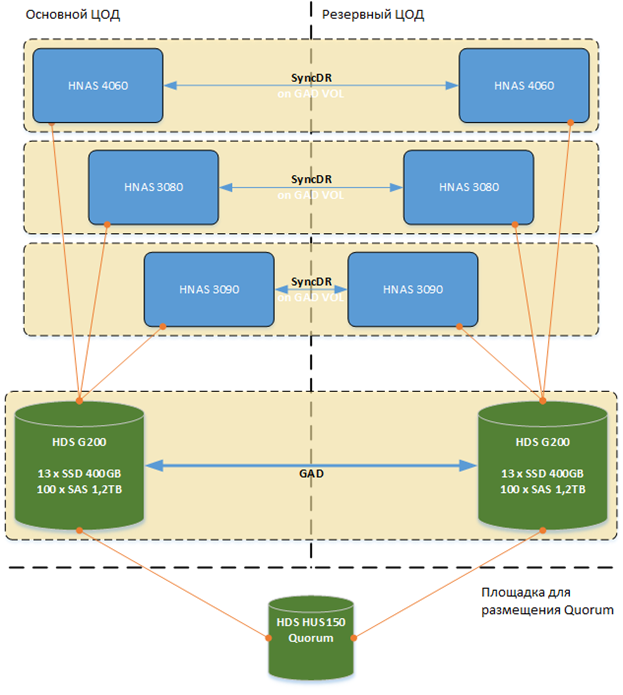

File service diagram

The usable capacity of the protected systems is approximately 2 petabytes in each of the two main data centers. Only storage, virtual machine infrastructure and file services are protected at the hardware level; all other databases and application systems are protected by software. Array-to-array synchronization is used for the file service, via Hitachi GAD. In all other cases, data is replicated by the databases or applications themselves.

IBS scheme

Testing

After completing the first stage, which covered about 50 of the most critical business systems, we and our colleagues at Jet Infosystems verified every element: the network, disk arrays, storage virtualization and so on. We tested each business system both in normal operation across the data centers and during switchover between them: we moved the system into the disaster-tolerant environment, switched it completely to the other data center, checked it there, and then returned it to the normal production environment. Performance was measured throughout all tests and its dynamics evaluated. In every operating and switchover scenario, performance did not degrade and availability did not suffer. As a result, we achieved seamless interconnection of the data centers at the level of physical servers (cluster configuration), virtual infrastructure (distributed cluster), …
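The per-system test cycle described above can be expressed as a small harness. This is a sketch under stated assumptions, not the tooling used by VTB or Jet Infosystems: `switch_to`, `health_check` and `measure_ms` are hypothetical callables that would wrap whatever failover and monitoring tools a given system uses, and the 10% response-time tolerance is an arbitrary example threshold.

```python
from typing import Callable, Dict, Tuple


def switchover_test(
    system: str,
    switch_to: Callable[[str, str], None],
    health_check: Callable[[str], bool],
    measure_ms: Callable[[str], float],
    sites: Tuple[str, str] = ("DC1", "DC2"),
    tolerance: float = 1.10,
) -> Dict[str, float]:
    """One switchover cycle: measure at home, move fully to the other site,
    verify and measure there, move back, verify and measure again.

    Raises AssertionError if the system becomes unavailable or its response
    time exceeds the baseline by more than `tolerance` (1.10 = +10%).
    """
    home, other = sites
    baseline = measure_ms(system)

    results: Dict[str, float] = {"baseline": baseline}
    for label, target in (("other_site", other), ("after_return", home)):
        switch_to(system, target)
        if not health_check(system):
            raise AssertionError(f"{system} unavailable after switching to {target}")
        value = measure_ms(system)
        if value > baseline * tolerance:
            raise AssertionError(
                f"{system}: {label} response {value:.1f} ms vs baseline {baseline:.1f} ms"
            )
        results[label] = value
    return results
```

Passing the switching and monitoring steps in as functions keeps the cycle itself identical for every business system, so each of them is checked the same way regardless of how it is replicated.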

The next stage is to move the bank's remaining business systems to the disaster-tolerant model. The project is being implemented by Jet Infosystems.
