Incident Management Process in Tutu.ru

For each company, sooner or later, the topic of incident management becomes relevant. Some already have tuned and debugged processes, someone is just starting his way in this direction. Today I want to talk about how we in Tutu.ru built the process of processing “glitches in battle”, and what we did.

Analysis of incidents in our company is carried out by the operations department (or, more simply, support). Therefore, I will begin by describing how it is designed. In Tutu.ru we have a certain set of products, these are railway, air, tours, trains, buses. Each product team has specialists in the operations department. Also, representatives of our department are in the cross-functional and infrastructure teams.

We are responsible for ensuring that our users do not have problems when using the service. This includes team-specific tasks to support our external and internal clients, as well as work aimed at ensuring that the site experiences hardware failures, withstands loads, and continues to solve users' problems well and quickly, despite the speed of change.

An incident (or failure, emergency) is a certain critical (usually massive) problem that significantly reduces the availability, correctness, effectiveness or reliability of business functionality or infrastructure systems.

As part of the incident management process, we set ourselves the following main goals :

1. As soon as possible, restore the performance of our systems.

2. Prevent the occurrence of the same failure twice.

3. Promptly inform stakeholders.

We had a self-written monitoring system, which implied an independent subscription of employees to reports of problems. That is, only those who signed up for them received alerts. We learned about failures from this system, and then acted in a rather fragmented way - someone from the operation received an SMS, started looking at what was happening, came to the people who needed to solve or analyze the problem (or called them if it was about idle time), and the failure was finally resolved. Then someone wrote a report about the failure in the format accepted at that time (a description of the problem and the reasons, a chronology and a set of planned actions aimed at “not repeating”).

At the same time, there were obvious problems :

1. There was no complete certainty that anyone was subscribed to a critical alert. That is, there was a possibility that the failure would be noticed already when it affected other critical parts of the system.

2. There was no one responsible for solving the problem, who will achieve its elimination as soon as possible. It is also unclear who should check the current status.

3. It was not possible to keep track of incident information over time.

4. There was a lack of understanding of whether anyone saw a message from monitoring? Did you react? What details have already been found out, and is there any progress in the solution?

5. Several experts duplicated each other's actions. We looked at the same logs / charts, went to the same people, and distracted them with repeated questions about whether they knew about the problem, at what stage the solution was, and so on.

6. For those who did not directly participate in solving the incident, it was difficult to obtain information about its causes, how to fix it, and what was happening. Because nowhere was it fixed who and what was doing.

7. There was no equal understanding of what needs to be written in the crash report and what is not, how deeply immersed in the analysis. There was no clear process for formulating "action" for improvement. As a result, crash reports could be incomplete, often looking different. From them it was difficult to understand what the effect was, what are the reasons for what they did in the decision process, and how they were repaired.

8. Only support specialists and those who were directly involved in eliminating the causes and the negative effect plunged into the analysis of the emergency. As a result, by no means all interested received feedback on problems.

With this process of handling failures, we did not achieve our goals. The same incidents happened, and the available reports did not help us much to deal with similar failures in the future. The reports did not always answer important questions - how the problem was resolved, whether everything necessary was undertaken for non-repetition, and if not, why. In addition, the inconsistency of our actions at the time of the incident did not contribute to speeding up the decision time, especially with the increase in the number of departments.

Now more about these changes.

It is aimed at achieving the first goal of the process - the rapid restoration of the performance of our systems.

At the beginning of work to improve this phase, we were seriously “hurt” by the lack of effective communication even within the operation department. During working hours, we could communicate about problems in the department’s skype chat, but team chat is designed for any working issues. It does not provide an instant reaction to messages, and during off hours not all team members use this tool.

Therefore, we decided that we needed a separate channel for communications on emergency situations, in which there would be no other working correspondence. Our choice fell on Telegram, since it is easy and convenient to use from a mobile, and you can also expand its capabilities using bots. We created a chat and agreed to write to it when someone found out that a failure had occurred and started to deal with it. Since there are no unnecessary messages in this chat, we are obliged to respond quickly to it at any time of the day. At first, only support employees were in the chat, but quite quickly, administrators and some developers appeared in it. That is, those people who can help quickly fix the failure.

This turned out to be a very convenient practice, since all participants in the incident processing process are gathered in one place, and everyone has information about what is happening. Often now you don’t even need to take turns calling, for example, administrators in order to find someone who can tackle the problem. All the necessary people are in the chat, and they themselves unsubscribe when they notice an alert (or you can call them up). I’ll explain here that we don’t have an on-call as such, so the one who is near the computer at the moment is dealing with incidents outside the office hours. So far it works for us.

After that, the problem of informing people involved in malfunctioning about what was happening “in battle” and who was involved in the solution was almost solved. Later we brought critical messages from monitoring directly to this chat. Now, alerts are guaranteed to come to everyone who needs it, and this does not depend on whether a person has subscribed to sensors or not.

We also made a number of improvements to improve process control.

We can find out that an incident has occurred in two ways:

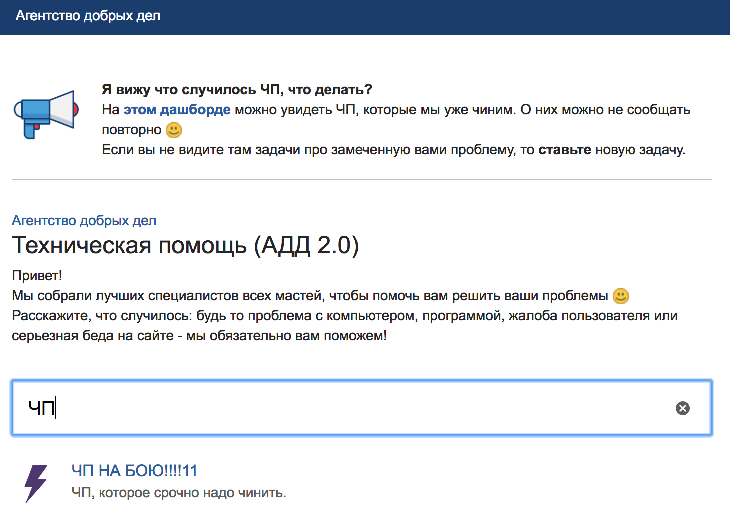

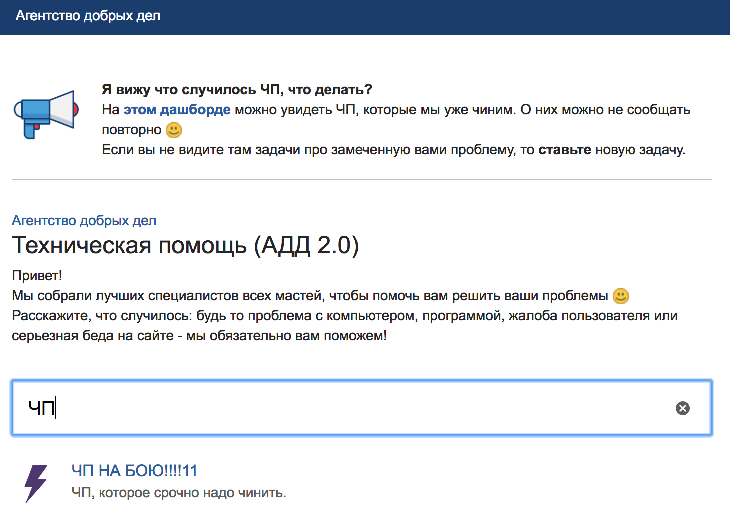

In any case, we must record the incident in JIRA - to control the time of occurrence / solution of the problem, as well as inform interested parties. To do this, we have allocated a special section in the Service Desk (“emergency fight”), where any employee of the company can set a task. We also have a panel that displays all active tasks with this component. The panel is accessible directly from the task setting interface in the Service Desk, and on it you can find the answer to the question whether there is a problem in the battle and whether it is solved.

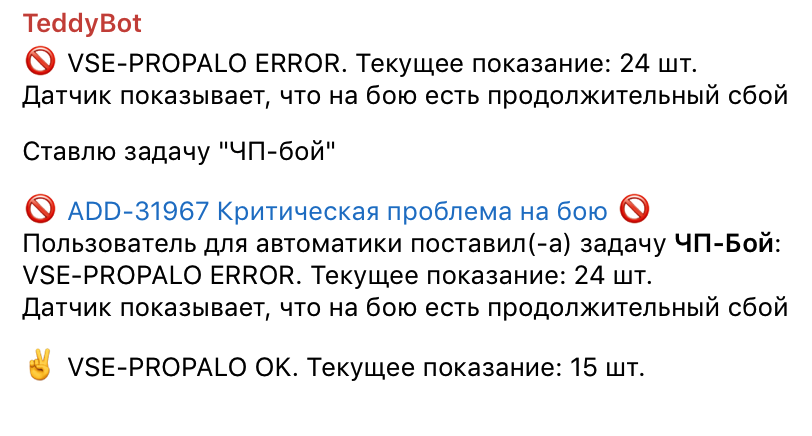

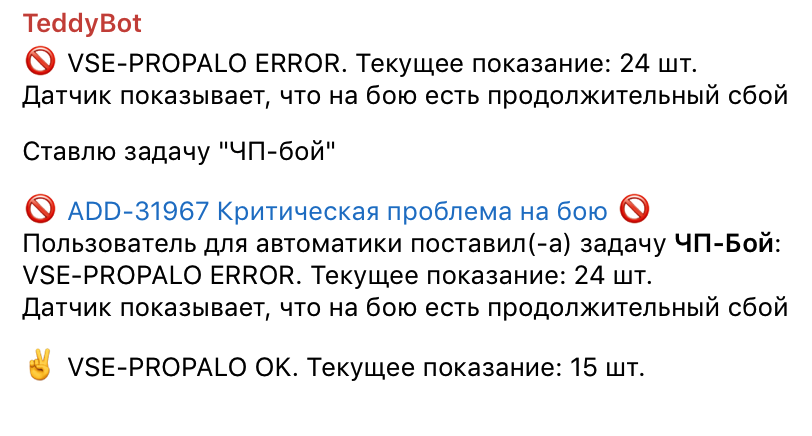

At the event of setting the “PE-Boy” task, we configured to send an automatic notification to Telegram chat to solve the PE. This allows you to be sure that the problem will be noticed even after hours.

If we learned about the problem from monitoring, then we must set the “PE-fight” task ourselves. Since the corresponding alerts come to the chat, it seemed convenient to us to automate the statement of the problem in JIRA. Now we can do this by pressing a single button in the chat - the task is created on behalf of a special user for automation and contains the text of the triggered alert. Thus, fixing the incident is quick and convenient, we do not spend too much time on it.

When the “PE-battle” task arrives, we define the “navigator” - a person from the operation department who will be responsible for eliminating the failure. This does not mean that the others stop participating in the analysis, everyone is involved, but the navigator is necessary so that the employees do not duplicate each other's actions. The navigator must coordinate communications and actions to resolve the incident, attract the necessary forces for this. And also timely record information on the progress of work, so that later during the analysis it would be possible to easily restore the chronology.

The navigator is appointed as the performer of the “PE-battle” task. When the task is assigned, our colleagues understand that they are dealing with the problem, and, in which case, they know who to contact with questions.

When we managed to eliminate the negative effects - the active phase is completed, the task closes.

In this phase, from the point of view of processes, the most difficult was to form a common understanding of who should be in the role of navigator. We decided that it may be the one who first noticed the emergency, but it is better if it is a sapper of the affected product.

Begins immediately after active completion. Serves to achieve the second goal of the process - to prevent the recurrence of the same failure.

The phase includes a full comprehensive analysis of what happened. The person responsible for the analysis of the incident must collect all available information about what happened, for what reasons, what actions were taken to restore working capacity. After the reasons for the incident became clear, we are holding a meeting to analyze the state of emergency. Usually people involved in solving the problem participate in it, as well as team leaders of the involved development teams. The purpose of the meeting is to identify growth points for our systems or processes and plan actions to improve them to prevent similar incidents in the future.

The result is a crash report, which contains both complete information about what happened and what bottlenecks we identified and what we plan to do to eliminate them.

In order for our reports to be written in a high-quality and contain everything necessary, we formulated clear requirements for each section and fixed them in the standard. We also revised the document template and added useful hints to it. In addition, we have collected in a separate document general recommendations on the design of the report, which help to correctly submit the material and avoid common mistakes.

Failure Report is an Important Document, which serves the third purpose of the process - informing stakeholders about incidents and about actions taken after. It is also a tool for gathering experience and knowledge about how our systems work and break down. We can always return to a particular incident, see how it went and how it was handled, or recall from a report for the sake of what goals this or that improvement task was made.

We got the following document structure :

Description of the incident.Consists of two parts. The first is a brief description of what happened, indicating the causes and effect. The second part is detailing. In it, we describe in detail the reasons that led to the incident, how we found out about it, how it developed, what were the log entries / error messages, and how they were eventually repaired. In general, complete technical information about what happened is collected here.

The effect. We describe how the incident affected our users - how many users encountered what problems. We need to understand the effect in order to prioritize improvement tasks.

Chronology.To record all actions, events related to the incident, indicating the time. From this section you can get an idea of the course of the incident, see how quickly we reacted, we can draw conclusions about whether the monitoring worked on time, how long the correction took.

According to the chronology, bottlenecks in the processes are usually quite clearly visible.

Charts and statistics. In this section, we collect all the graphs that show the effect (for example, how many 5XX errors were), as well as any other illustrations of any aspects of the incident.

Actions taken.This section is usually completed after a technical meeting. Here, all planned improvement actions, tasks set, with an indication of the responsible are recorded. We also enter here corrections that were made in the active phase, if they give a long-term effect. All actions in this section are formulated in such a way that it is clear which of the identified problems they help to solve. After the completion of the analysis of the incident, we continue to monitor the implementation of the tasks until all of them are completed or reasonably canceled.

Separately, we highlighted the status of the documentand formulated requirements for its change. This helps to better understand what the report should look like at each stage of the incident analysis work. There can be three statuses - “in work”, “ready for a technical meeting”, and “work completed”. Thanks to this, it has become convenient to track failures that are still being worked on.

To summarize. We began to introduce a new incident management process a year and a half ago, and we have continued to improve it since then. The new organization of work helps us more effectively achieve our goals and solve problems.

Now all critical messages from monitoring are displayed in telegram chat to solve the emergency, in which there are all the necessary employees. A chat is required for quick response at any time.

Thanks to the emergence of the role of the navigator and the formulation of requirements for this role, each incident always has a responsible person who is aware of what is happening. He timely publishes all the necessary information, and also coordinates the process of resolving the incident. As a result, we were able to reduce the number of duplicate actions.

All information is now recorded in the chat. Due to this, it became possible to transmit a retrospective analysis to any employee of the operating service, regardless of whether he was present at the time of the active phase of the failure.

After we formulated and described clear requirements and recommendations for the preparation of the document, the employees of the operation department began to form an equal understanding of what should be reflected in the report. We are constantly working on the quality of reporting, for example, conducting reviews, which also facilitates the exchange of experience.

Thanks to the fact that we began to hold parsing meetings, team leaders and administrators are now more deeply involved in the process. They participate in meetings, know the consequences of those or other bottlenecks in the code or infrastructure, and are better informed about why we need to perform the tasks that we put in teams after analyzing incidents.

Analysis of incidents in our company is carried out by the operations department (or, more simply, support). Therefore, I will begin by describing how it is designed. In Tutu.ru we have a certain set of products, these are railway, air, tours, trains, buses. Each product team has specialists in the operations department. Also, representatives of our department are in the cross-functional and infrastructure teams.

We are responsible for ensuring that our users do not have problems when using the service. This includes team-specific tasks to support our external and internal clients, as well as work aimed at ensuring that the site experiences hardware failures, withstands loads, and continues to solve users' problems well and quickly, despite the speed of change.

An incident (or failure, emergency) is a certain critical (usually massive) problem that significantly reduces the availability, correctness, effectiveness or reliability of business functionality or infrastructure systems.

As part of the incident management process, we set ourselves the following main goals :

1. As soon as possible, restore the performance of our systems.

2. Prevent the occurrence of the same failure twice.

3. Promptly inform stakeholders.

How they worked before:

We had a self-written monitoring system, which implied an independent subscription of employees to reports of problems. That is, only those who signed up for them received alerts. We learned about failures from this system, and then acted in a rather fragmented way - someone from the operation received an SMS, started looking at what was happening, came to the people who needed to solve or analyze the problem (or called them if it was about idle time), and the failure was finally resolved. Then someone wrote a report about the failure in the format accepted at that time (a description of the problem and the reasons, a chronology and a set of planned actions aimed at “not repeating”).

At the same time, there were obvious problems :

1. There was no complete certainty that anyone was subscribed to a critical alert. That is, there was a possibility that the failure would be noticed already when it affected other critical parts of the system.

2. There was no one responsible for solving the problem, who will achieve its elimination as soon as possible. It is also unclear who should check the current status.

3. It was not possible to keep track of incident information over time.

4. There was a lack of understanding of whether anyone saw a message from monitoring? Did you react? What details have already been found out, and is there any progress in the solution?

5. Several experts duplicated each other's actions. We looked at the same logs / charts, went to the same people, and distracted them with repeated questions about whether they knew about the problem, at what stage the solution was, and so on.

6. For those who did not directly participate in solving the incident, it was difficult to obtain information about its causes, how to fix it, and what was happening. Because nowhere was it fixed who and what was doing.

7. There was no equal understanding of what needs to be written in the crash report and what is not, how deeply immersed in the analysis. There was no clear process for formulating "action" for improvement. As a result, crash reports could be incomplete, often looking different. From them it was difficult to understand what the effect was, what are the reasons for what they did in the decision process, and how they were repaired.

8. Only support specialists and those who were directly involved in eliminating the causes and the negative effect plunged into the analysis of the emergency. As a result, by no means all interested received feedback on problems.

With this process of handling failures, we did not achieve our goals. The same incidents happened, and the available reports did not help us much to deal with similar failures in the future. The reports did not always answer important questions - how the problem was resolved, whether everything necessary was undertaken for non-repetition, and if not, why. In addition, the inconsistency of our actions at the time of the incident did not contribute to speeding up the decision time, especially with the increase in the number of departments.

What did:

- We divided the work with incidents into two phases - active and retrospective - and began to improve each of them individually.

- We developed a standard for processing emergency situations, which recorded all the requirements and agreements, described a set of actions that are mandatory for each phase of the process.

- We began to write recommendation documents in which we collected good practices for the various stages of the incident analysis process.

Now more about these changes.

Active phase

It is aimed at achieving the first goal of the process - the rapid restoration of the performance of our systems.

At the beginning of work to improve this phase, we were seriously “hurt” by the lack of effective communication even within the operation department. During working hours, we could communicate about problems in the department’s skype chat, but team chat is designed for any working issues. It does not provide an instant reaction to messages, and during off hours not all team members use this tool.

Therefore, we decided that we needed a separate channel for communications on emergency situations, in which there would be no other working correspondence. Our choice fell on Telegram, since it is easy and convenient to use from a mobile, and you can also expand its capabilities using bots. We created a chat and agreed to write to it when someone found out that a failure had occurred and started to deal with it. Since there are no unnecessary messages in this chat, we are obliged to respond quickly to it at any time of the day. At first, only support employees were in the chat, but quite quickly, administrators and some developers appeared in it. That is, those people who can help quickly fix the failure.

This turned out to be a very convenient practice, since all participants in the incident processing process are gathered in one place, and everyone has information about what is happening. Often now you don’t even need to take turns calling, for example, administrators in order to find someone who can tackle the problem. All the necessary people are in the chat, and they themselves unsubscribe when they notice an alert (or you can call them up). I’ll explain here that we don’t have an on-call as such, so the one who is near the computer at the moment is dealing with incidents outside the office hours. So far it works for us.

After that, the problem of informing people involved in malfunctioning about what was happening “in battle” and who was involved in the solution was almost solved. Later we brought critical messages from monitoring directly to this chat. Now, alerts are guaranteed to come to everyone who needs it, and this does not depend on whether a person has subscribed to sensors or not.

We also made a number of improvements to improve process control.

We can find out that an incident has occurred in two ways:

- by alerts from monitoring,

- from our employees who noticed a problem - if the monitoring didn’t inform us about it for some reason.

In any case, we must record the incident in JIRA - to control the time of occurrence / solution of the problem, as well as inform interested parties. To do this, we have allocated a special section in the Service Desk (“emergency fight”), where any employee of the company can set a task. We also have a panel that displays all active tasks with this component. The panel is accessible directly from the task setting interface in the Service Desk, and on it you can find the answer to the question whether there is a problem in the battle and whether it is solved.

At the event of setting the “PE-Boy” task, we configured to send an automatic notification to Telegram chat to solve the PE. This allows you to be sure that the problem will be noticed even after hours.

If we learned about the problem from monitoring, then we must set the “PE-fight” task ourselves. Since the corresponding alerts come to the chat, it seemed convenient to us to automate the statement of the problem in JIRA. Now we can do this by pressing a single button in the chat - the task is created on behalf of a special user for automation and contains the text of the triggered alert. Thus, fixing the incident is quick and convenient, we do not spend too much time on it.

When the “PE-battle” task arrives, we define the “navigator” - a person from the operation department who will be responsible for eliminating the failure. This does not mean that the others stop participating in the analysis, everyone is involved, but the navigator is necessary so that the employees do not duplicate each other's actions. The navigator must coordinate communications and actions to resolve the incident, attract the necessary forces for this. And also timely record information on the progress of work, so that later during the analysis it would be possible to easily restore the chronology.

The navigator is appointed as the performer of the “PE-battle” task. When the task is assigned, our colleagues understand that they are dealing with the problem, and, in which case, they know who to contact with questions.

When we managed to eliminate the negative effects - the active phase is completed, the task closes.

In this phase, from the point of view of processes, the most difficult was to form a common understanding of who should be in the role of navigator. We decided that it may be the one who first noticed the emergency, but it is better if it is a sapper of the affected product.

Retrospective phase

Begins immediately after active completion. Serves to achieve the second goal of the process - to prevent the recurrence of the same failure.

The phase includes a full comprehensive analysis of what happened. The person responsible for the analysis of the incident must collect all available information about what happened, for what reasons, what actions were taken to restore working capacity. After the reasons for the incident became clear, we are holding a meeting to analyze the state of emergency. Usually people involved in solving the problem participate in it, as well as team leaders of the involved development teams. The purpose of the meeting is to identify growth points for our systems or processes and plan actions to improve them to prevent similar incidents in the future.

The result is a crash report, which contains both complete information about what happened and what bottlenecks we identified and what we plan to do to eliminate them.

In order for our reports to be written in a high-quality and contain everything necessary, we formulated clear requirements for each section and fixed them in the standard. We also revised the document template and added useful hints to it. In addition, we have collected in a separate document general recommendations on the design of the report, which help to correctly submit the material and avoid common mistakes.

Failure Report is an Important Document, which serves the third purpose of the process - informing stakeholders about incidents and about actions taken after. It is also a tool for gathering experience and knowledge about how our systems work and break down. We can always return to a particular incident, see how it went and how it was handled, or recall from a report for the sake of what goals this or that improvement task was made.

We got the following document structure :

Description of the incident.Consists of two parts. The first is a brief description of what happened, indicating the causes and effect. The second part is detailing. In it, we describe in detail the reasons that led to the incident, how we found out about it, how it developed, what were the log entries / error messages, and how they were eventually repaired. In general, complete technical information about what happened is collected here.

The effect. We describe how the incident affected our users - how many users encountered what problems. We need to understand the effect in order to prioritize improvement tasks.

Chronology.To record all actions, events related to the incident, indicating the time. From this section you can get an idea of the course of the incident, see how quickly we reacted, we can draw conclusions about whether the monitoring worked on time, how long the correction took.

According to the chronology, bottlenecks in the processes are usually quite clearly visible.

Charts and statistics. In this section, we collect all the graphs that show the effect (for example, how many 5XX errors were), as well as any other illustrations of any aspects of the incident.

Actions taken.This section is usually completed after a technical meeting. Here, all planned improvement actions, tasks set, with an indication of the responsible are recorded. We also enter here corrections that were made in the active phase, if they give a long-term effect. All actions in this section are formulated in such a way that it is clear which of the identified problems they help to solve. After the completion of the analysis of the incident, we continue to monitor the implementation of the tasks until all of them are completed or reasonably canceled.

Separately, we highlighted the status of the documentand formulated requirements for its change. This helps to better understand what the report should look like at each stage of the incident analysis work. There can be three statuses - “in work”, “ready for a technical meeting”, and “work completed”. Thanks to this, it has become convenient to track failures that are still being worked on.

To summarize. We began to introduce a new incident management process a year and a half ago, and we have continued to improve it since then. The new organization of work helps us more effectively achieve our goals and solve problems.

How the problems of the active phase were solved:

- There was no complete certainty that anyone was subscribed to a critical alert.

Now all critical messages from monitoring are displayed in telegram chat to solve the emergency, in which there are all the necessary employees. A chat is required for quick response at any time.

- There is no one responsible for solving the problem, who will achieve its elimination as soon as possible. It is also not clear from whom to clarify the current status.

- Lack of information related to the incident at any time.

- Several experts duplicated each other's actions.

Thanks to the emergence of the role of the navigator and the formulation of requirements for this role, each incident always has a responsible person who is aware of what is happening. He timely publishes all the necessary information, and also coordinates the process of resolving the incident. As a result, we were able to reduce the number of duplicate actions.

Solving the problems of the retrospective phase:

- For those who did not directly participate in the resolution of the incident, it was difficult to obtain information about the causes, methods of repair and what was happening.

All information is now recorded in the chat. Due to this, it became possible to transmit a retrospective analysis to any employee of the operating service, regardless of whether he was present at the time of the active phase of the failure.

- There was no uniform understanding of what to write in the crash report. According to reports, it was sometimes difficult to completely restore the picture of what happened.

After we formulated and described clear requirements and recommendations for the preparation of the document, the employees of the operation department began to form an equal understanding of what should be reflected in the report. We are constantly working on the quality of reporting, for example, conducting reviews, which also facilitates the exchange of experience.

- Only support specialists and those who were directly involved in eliminating the causes and the negative effect plunged into the analysis of the emergency. Not all interested people received feedback about the problems.

Thanks to the fact that we began to hold parsing meetings, team leaders and administrators are now more deeply involved in the process. They participate in meetings, know the consequences of those or other bottlenecks in the code or infrastructure, and are better informed about why we need to perform the tasks that we put in teams after analyzing incidents.