Selected chapters of control theory on fingers: linear observers of dynamical systems

- Tutorial

I continue to write about control theory "on the fingers." To understand the current text, you must read the two previous articles:

I am not at all a specialist in control theory, I just read textbooks. My task is to understand the theory and put it into practice. This article is just a theory (well, a little bit of accompanying code), in the next we’ll talk about practice, a small piece of which can be seen here:

Corrections and additions are welcome. For the hundredth time, I remind you that first of all I write these texts for myself, since it makes me structure my knowledge in my head. This site is for me a kind of public notebook, to which, by the way, I regularly come back. Just in case, I’ll recall a bearded joke that describes my life well:

So, let's recall what we talked about in the last article , namely, what the Russian school calls the analytical construction of optimal regulators. I’ll even take an example from that article.

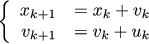

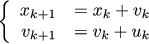

Suppose we have a car whose state at a given moment in time k is characterized by its coordinate x_k and speed v_k. The experiment begins with a certain initial state (x_0, v_0), and our task is to stop the car at zero coordinates. We can act on it through gas / brakes, that is, through acceleration u_k. We write this information in a form that is convenient to transmit from person to person, because the verbal description is cumbersome and can allow several tacts:

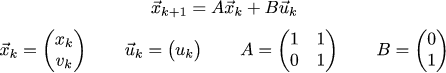

It’s even more convenient to write this information in matrix form, since the matrices can be substituted into a variety of wordings, thus the language is very flexible and can describe many different problems:

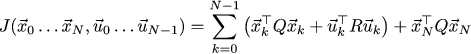

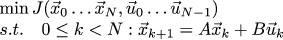

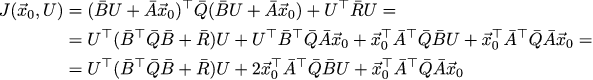

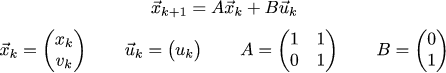

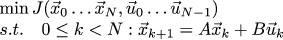

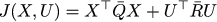

So, we described the dynamics of the system, but we did not describe either the goal or what to consider good management. Let's write the following quadratic function, which takes as the parameters the trajectory of the car and the sequence of control signals:

We try to find a trajectory of the car that minimizes the value of this function. This function sets a compromise of goals: we want the system to converge to zero as soon as possible, while maintaining a reasonable amount of control (no need to slow down!)

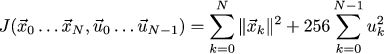

In total, we have the task of minimizing a function with a linear constraint on the input variables. For our specific car task, the last time we chose such weights 1 and 256 for our purposes:

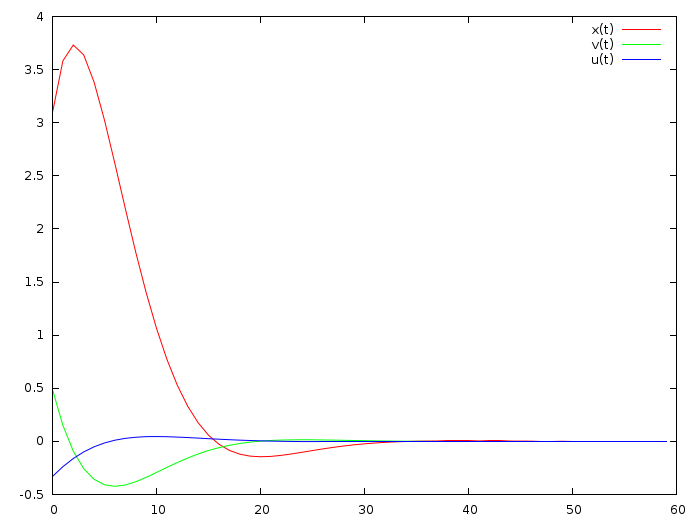

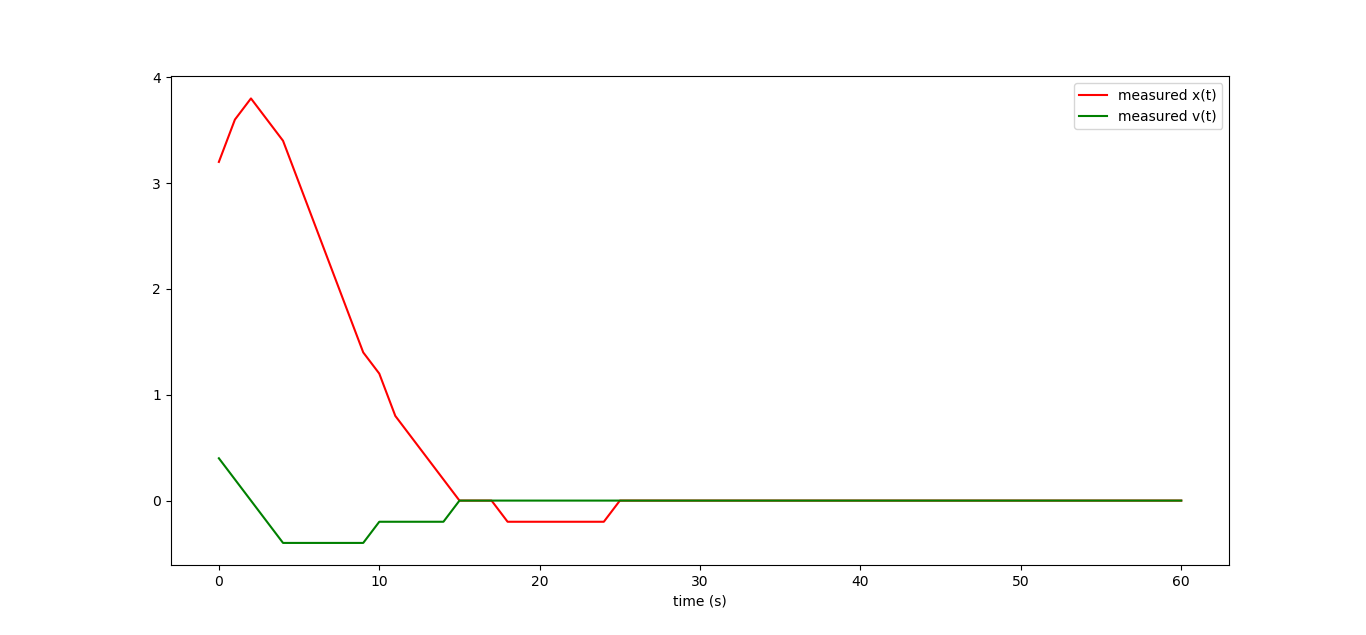

If at the initial moment our car is in position 3.1 and has a speed of half a meter per second, then the optimal control is as follows (I quote from the last article) :

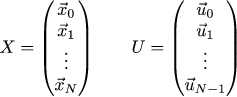

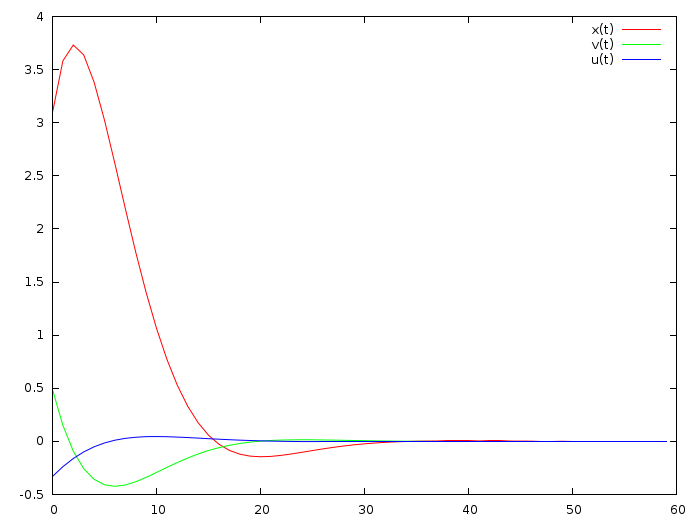

Let's try to draw the same curves in a slightly different way than last time. Recording amounts is very cumbersome, let's reduce to the matrix form. First of all, we will reduce our entire trajectory and our entire control sequence to healthy column matrices:

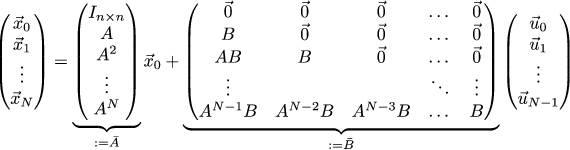

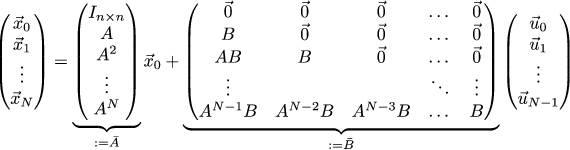

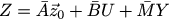

Then the relationship of coordinates with the control can be written as follows:

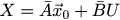

Or even more briefly:

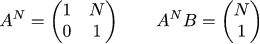

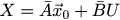

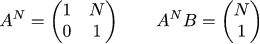

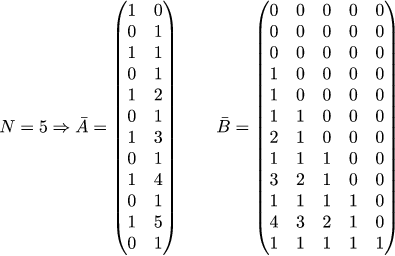

In this article, the sticks above the matrices mean reducing small matrices that describe one iteration into large for the whole task. For our particular problem, the degrees of the matrix A ^ n are as follows:

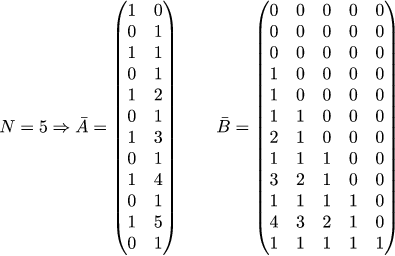

For the sake of clarity, let's explicitly write Ā and B И:

And even I will give the source code that fills these matrices in numpy:

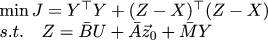

We reduce to large matrices and coefficients of our quadratic form:

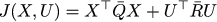

In our particular car problem, Q̄ is the identity matrix and R̄ is the identity matrix multiplied by scalar 256. Then the quality control function is written in matrix form very briefly:

And we look for the minimum of this function with one linear restriction:

Note to yourself: a great reason to deal with the Lagrange multipliers, the Legendre transform and the Hamilton function obtained from this. And also how the explanation of LQR follows from this through dynamic programming and Riccati equations. Apparently, you still need to write articles :)

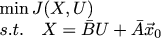

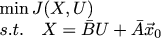

I am lazy, so this time we will go in the most direct way. J depends on two arguments that are linearly related to each other. Instead of minimizing the function with linear constraints, let's just remove the extra variables from it, namely, replace the occurrences of X with на x_0 + B̄ U in it:

This is a rather boring, but completely mechanical conclusion that does not require the brain. When moving from the penultimate line to the last one, we took advantage of the fact that Q̄ is symmetric, this allowed us to write Q̄ + Q̄ ^ T = 2 Q̄.

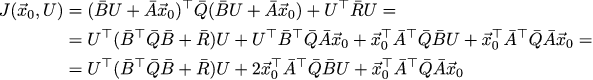

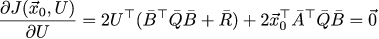

Now we just need to find the minimum of the quadratic function, no restrictions are imposed on the variables. We recall the tenth grade of the school and equate to zero partial derivatives.

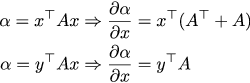

If you can differentiate ordinary polynomials, then you can take derivatives of matrix ones as well. Just in case, I recall the rules of differentiation:

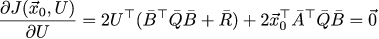

Again, a little bit of tedious writing of squiggles and we get our partial derivative with respect to the control vector U (we again used the symmetry of Q̄ and R̄):

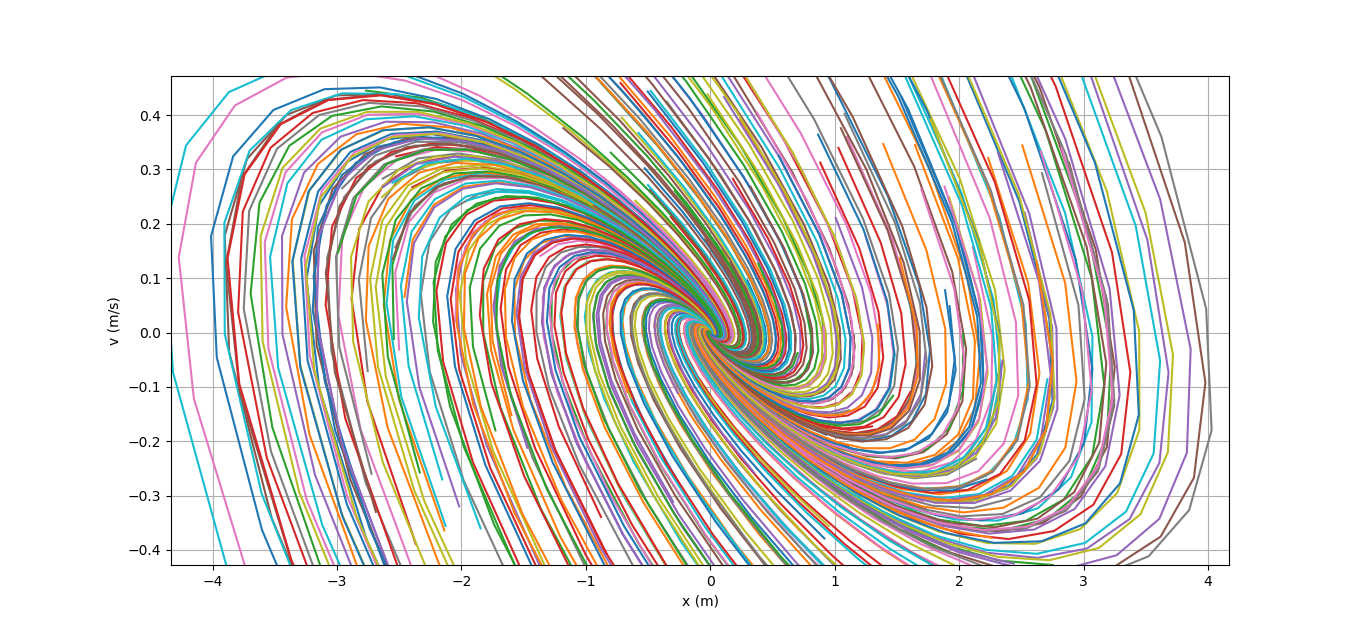

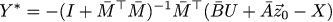

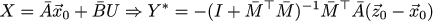

Then the optimal control vector U * can be found as follows:

Well, how do we write the squiggles, but still didn’t draw anything? Let's fix it!

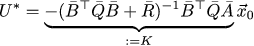

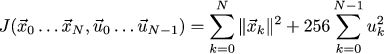

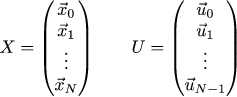

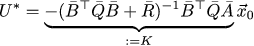

This will give the following picture, note that it perfectly matches the one that we received in the previous article (drawing here ):

So, the theory tells us that (with a long event horizon) the optimal control linearly depends on the remaining distance to the final target. Last time we proved it for the one-dimensional case, in two-dimensional believing the word. This time again I will not rigorously prove it, leaving this for a later discussion of the Lagrange multipliers. However, it is intuitively clear that it is. After all, we got the formula U = K * x0, where the matrix K does not depend on the state of the system, but only on the structure of the differential equation (on the matrices A and B).

That is, u_0 = k_0 * x_0. By the way, we got k_0 equal to [-0.052 -0.354]: Compare this result with what I got with the help of OLS and with the one that was obtained by solving the Riccati equation (in the comments to the previous article).

Intuitively, if u0 depends linearly on x_0, then with a long event horizon the same should be for x1.

Continuing the discussion, we expect that the control u_i should be considered as u_i = k_0 * x_i. That is, the optimal control should be obtained by the scalar product of the constant vector k_0 and the residual distance to the target x_i.

But our result says exactly the opposite! We found that u_i = k_i * x_0! That is, we found the sequence k_i, which depends only on the structure of the equation of the system, but not on its state. And control is obtained using the scalar product of a constant vector (the initial position of the system) and the sequence found ...

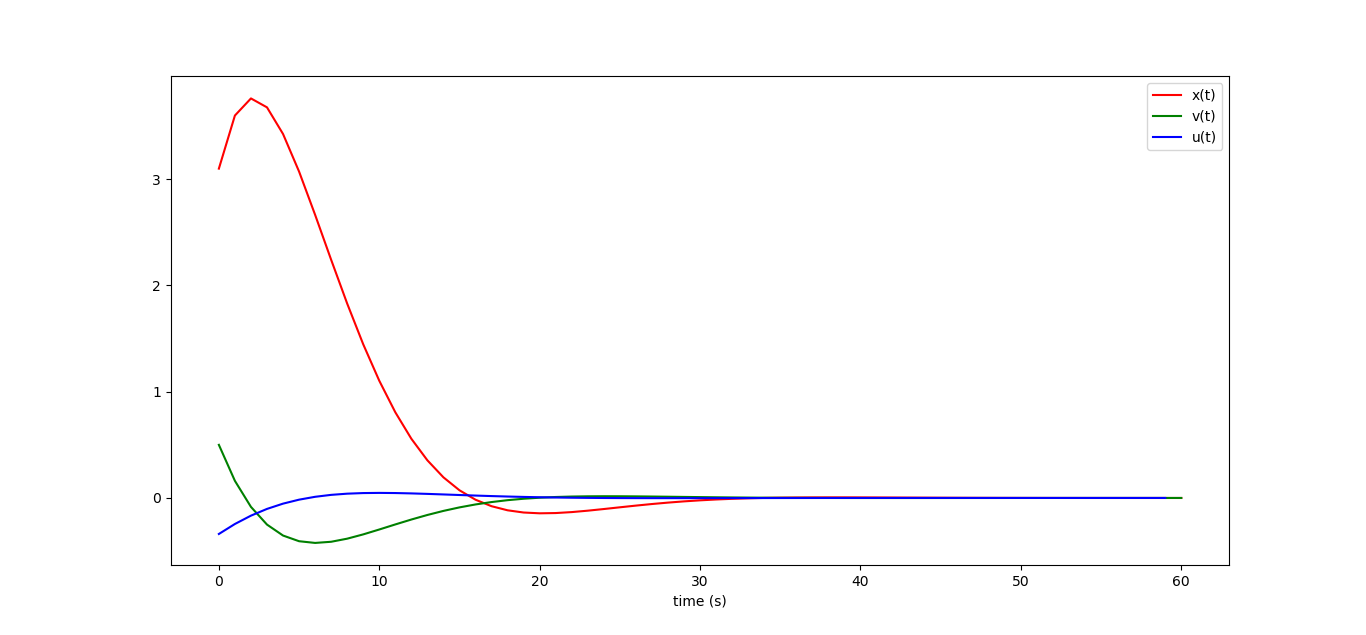

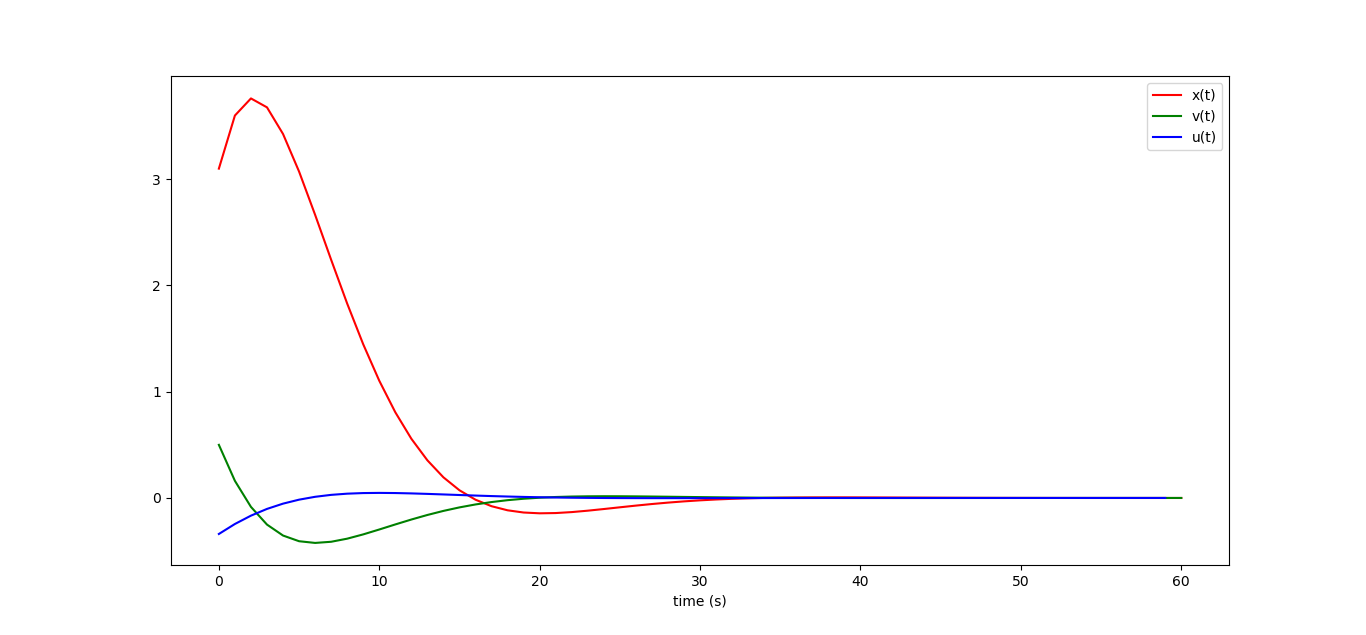

That is, if everything is fine, then we should have the equality k_0 * x_i = u_i = k_i * x_0. Let's draw an illustrative graph, taking for simplicity x_0 equal to k_0. On one axis of the graph, we postpone the coordinate of the machine, on the other the speed. Starting from one point k_0 = x_0 we get two different sequences of points k_i and x_i converging to zero coordinates.

Drawing is available here .

If roughly, then k_i * x_0 form a sequence of projections k_i onto the vector x_0. The same for k_0 * x_i, this is a sequence of projections x_i onto k_0. It is clearly seen on our graph that these projections coincide. Once again, this is not a proof, but only an illustration.

Thus, we have obtained a dynamical system of the form x_ {k + 1} = (A + BK) x_k. If the eigenvalues of the matrix A + BK are less than unity in absolute value, then this system converges to the origin at any initial x_0. In other words, the solution of this differential equation is the (matrix) exponent.

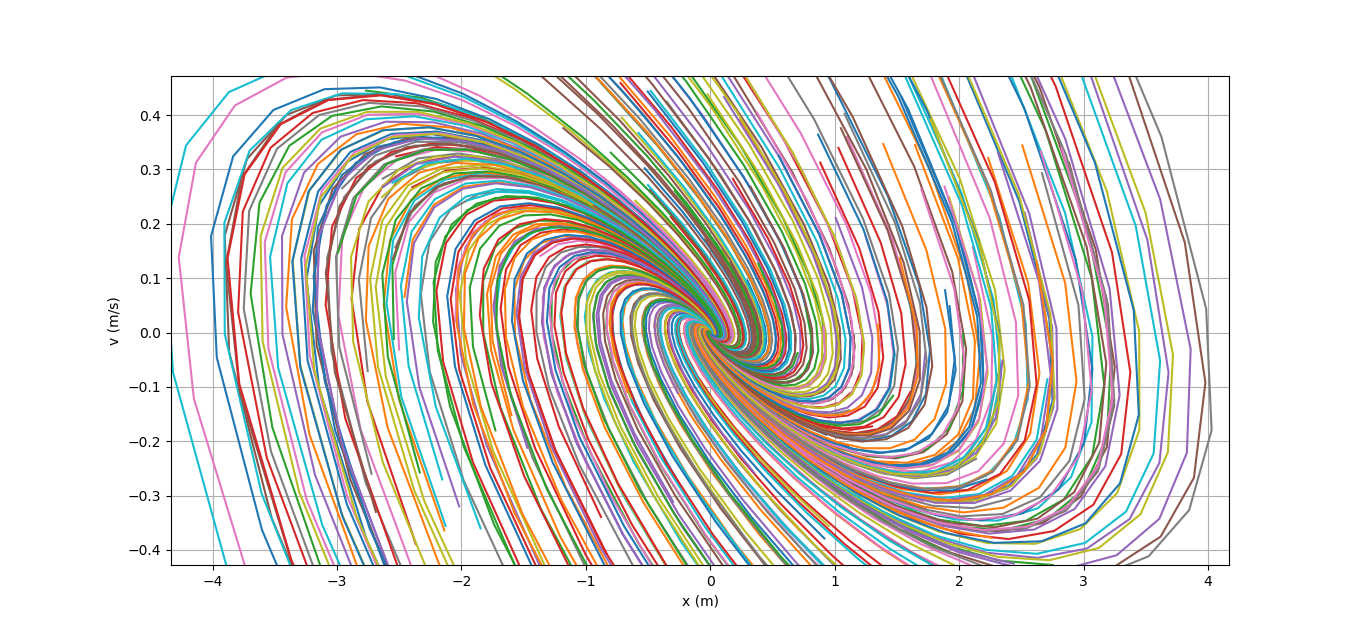

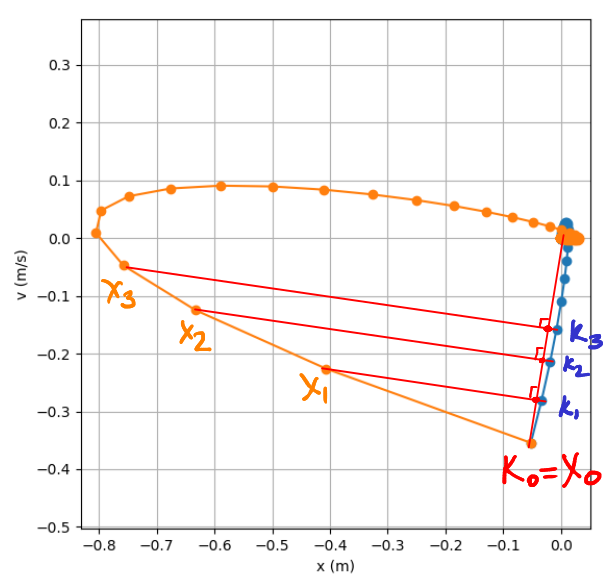

As an illustration, I accidentally selected several hundred different x_0, and drew the trajectories of our machine. Take a drawing here . Here is the resulting picture:

All the trajectories safely converge to zero, a slight twisting of the trajectories indicates that the matrix A + BK is a rotation and contraction matrix (has complex eigenvalues smaller than unity in absolute value). If I am not mistaken, then this picture is called a phase portrait.

So, we cheerfully minimized our function J, and got the optimal control vector U0 as K * X0:

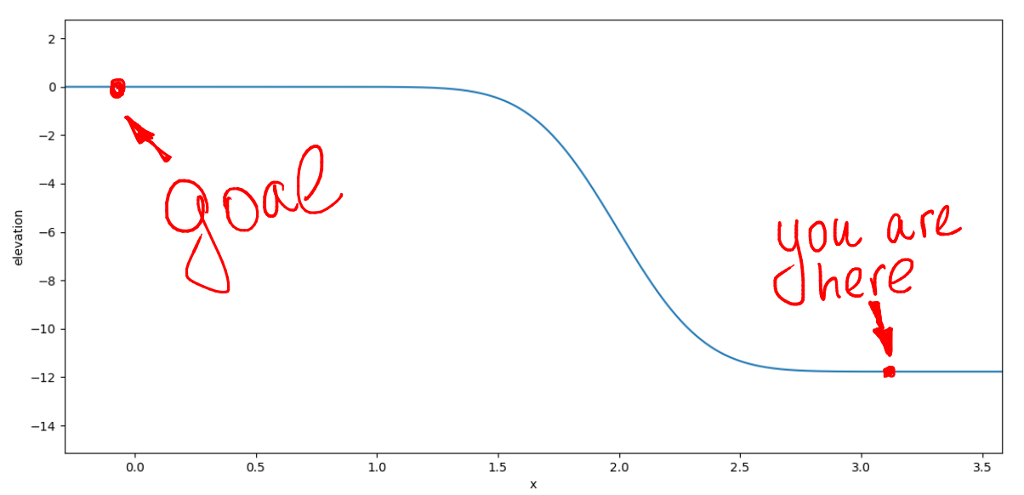

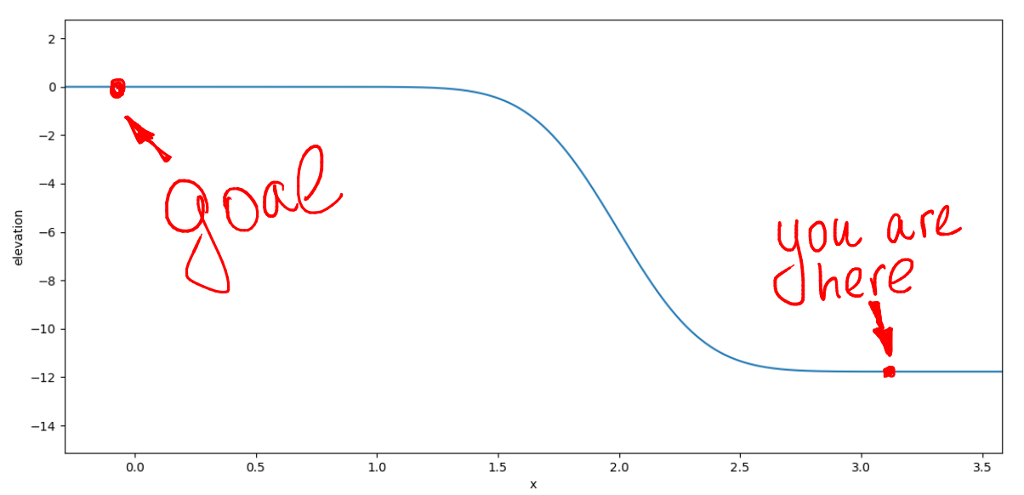

Is there anyway the difference between considering control as or as u_i = k_0 * x_i? There is a colossal one if we have unaccounted factors in our system (that is, almost always). Let's imagine that our car does not roll on a horizontal surface, but such a hill can meet on its way:

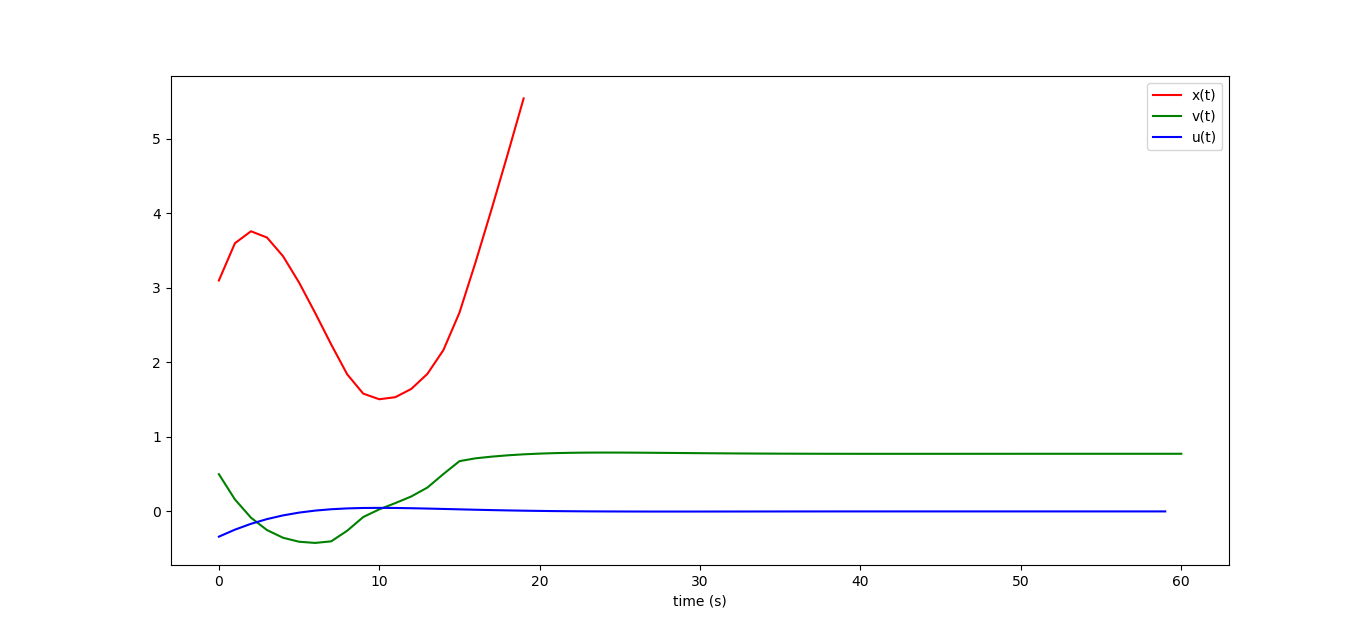

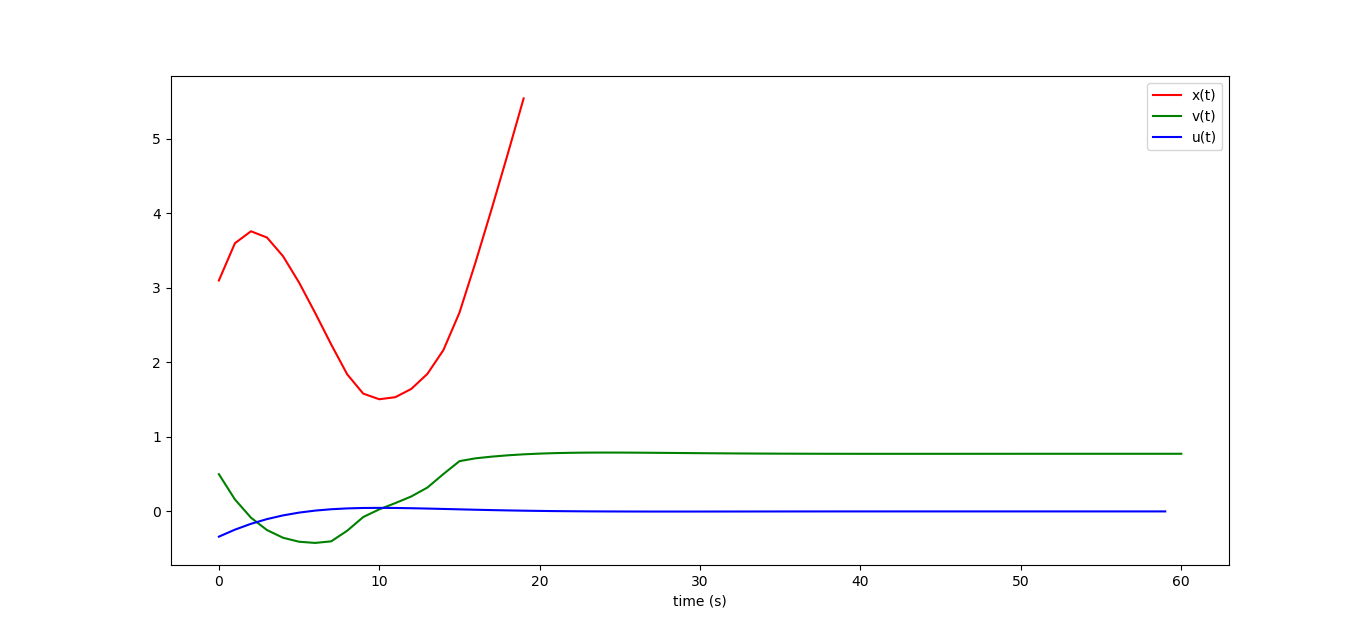

If we calculate the optimal control without taking this hill into account, trouble may happen

(drawing here ): The

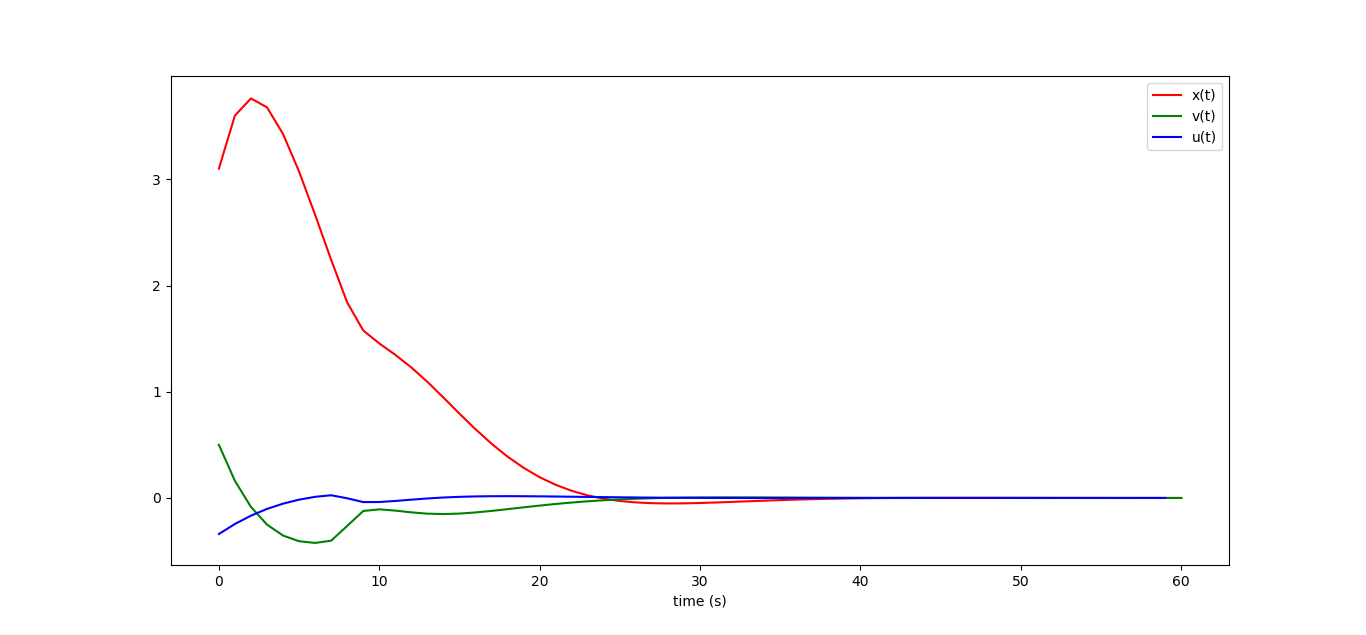

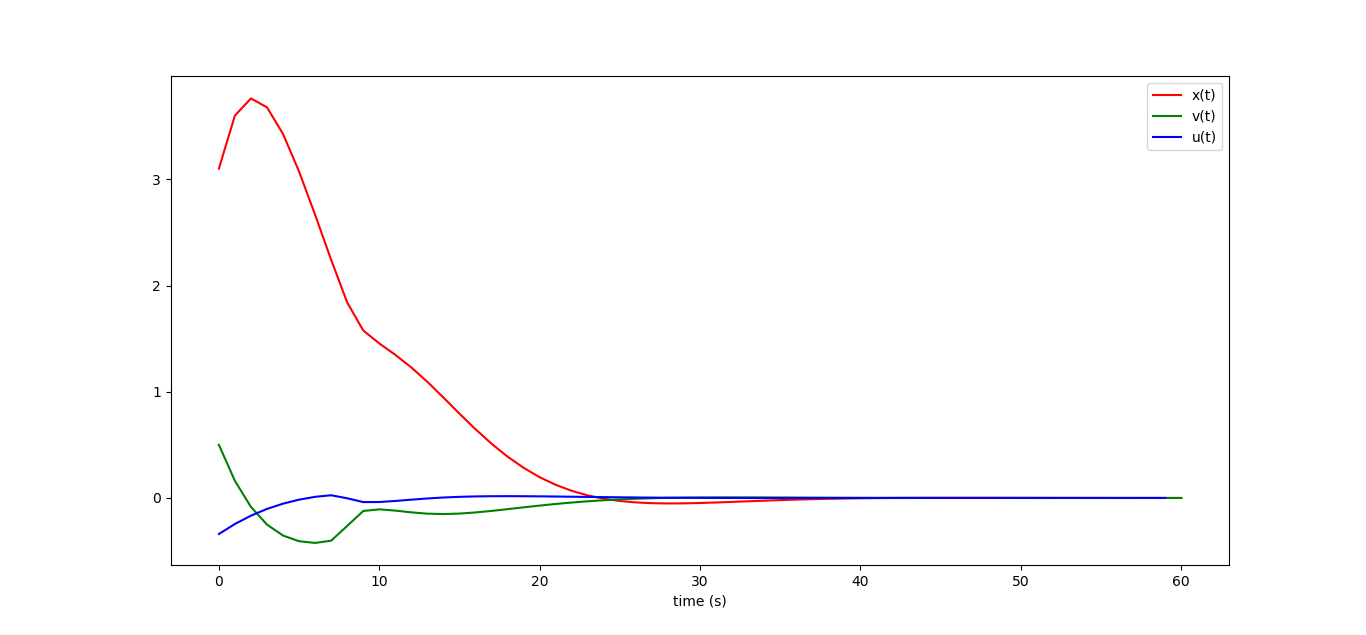

machine will not only stop, but also completely go to infinity to the right, I even drew x_i graphics only one third, then it grows linearly. If we close the control loop, then small disturbances in the dynamics of the system do not lead to catastrophic consequences:

So, we can control a dynamic system so that it strives to get to the origin. But what if we would like the car to stop not at x = 0, but at x = 3? Obviously, it is enough to subtract the desired result from the current state x_i, and then everything is as usual. Moreover, this desired result may well not be constant, but change over time. Can we follow another dynamic system?

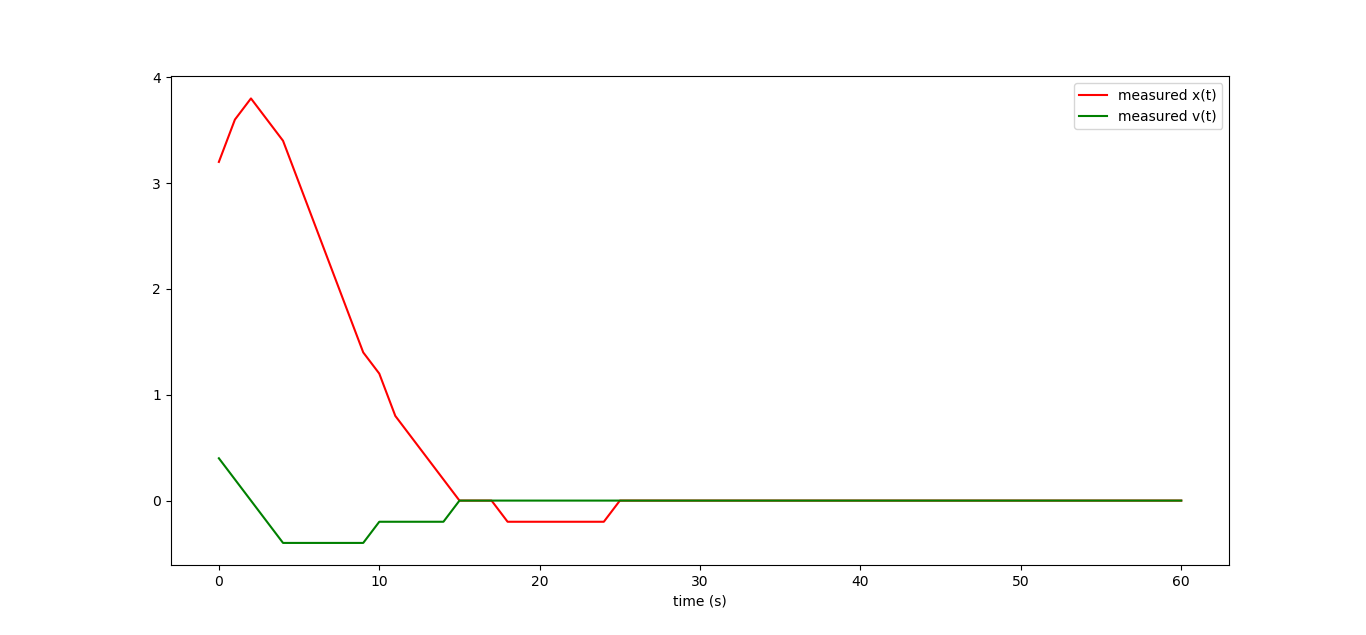

Let's assume that we do not control our machine directly, but at the same time we want to monitor it. We measure both states of the machine using some sensors that have a rather low resolution:

I just took our curves and made their values coarser. Let's try to filter out noise introduced by measurements. As usual, we arm ourselves with the least squares.

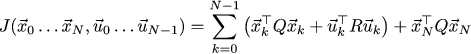

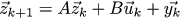

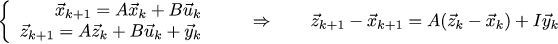

So, our machine obeys the law x_ {k + 1} = A x_k + B u_k, so let's build a diffus observer that follows the same law:

Here y_k is the correction term through which we will tell our observer how far we are from the real state of things. Like last time, we write the equation in matrix form:

Okay. Now what do we want? We want the correction terms y_k to be small, and so that z_k converges to x_k:

Like last time, we express Z through Y, we equate the partial derivatives with respect to Y to zero, and we get an expression for the optimal correction term:

Here vague forebodings start to torment me ... Somewhere I already saw it! Ok, let's remember how X is related to U, we get the following expression:

But this is, up to renaming letters, an expression for U *! And in fact, let's go back a little bit, too quickly we rushed into action. By combining the dynamics for x_k with the dynamics for z_k we get the dynamics for the error x_k-z_k.

That is, our task of tracking the machine is absolutely equivalent to the task of a linear-quadratic controller. We find the optimal gain matrix, which in our case will be equal to [[-0.594, -0.673], [- 0.079, -0.774]], and we obtain the following observer code:

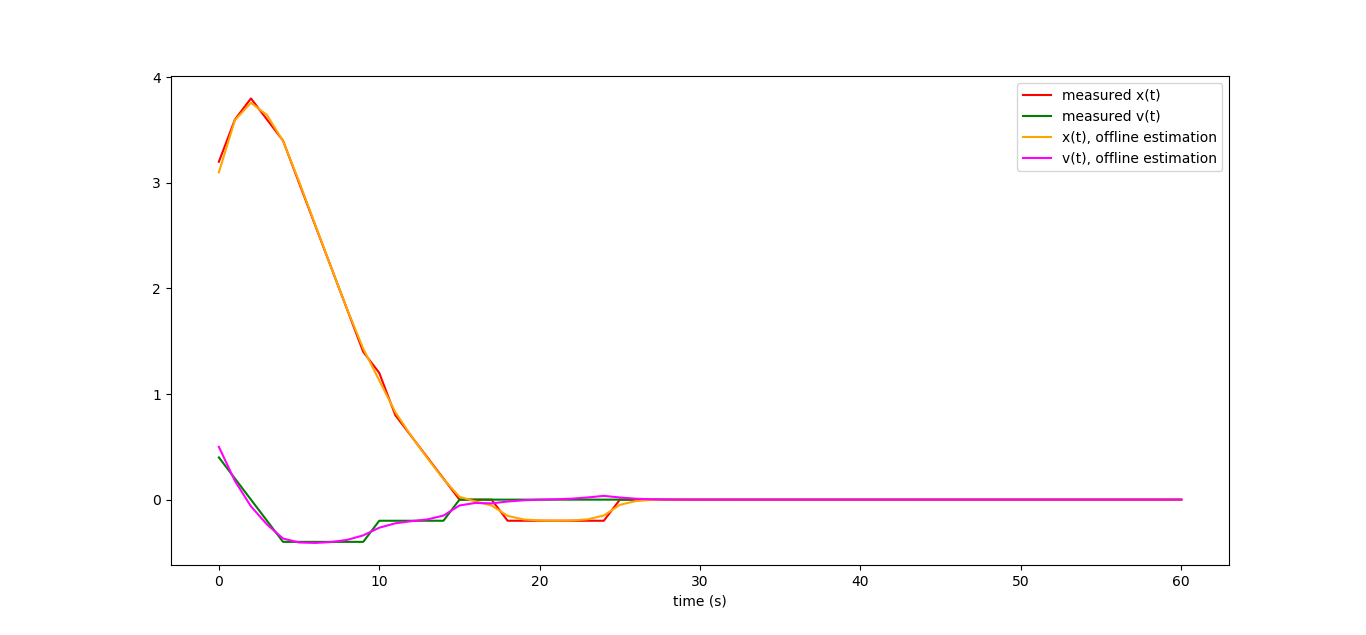

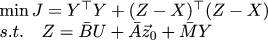

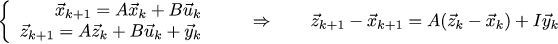

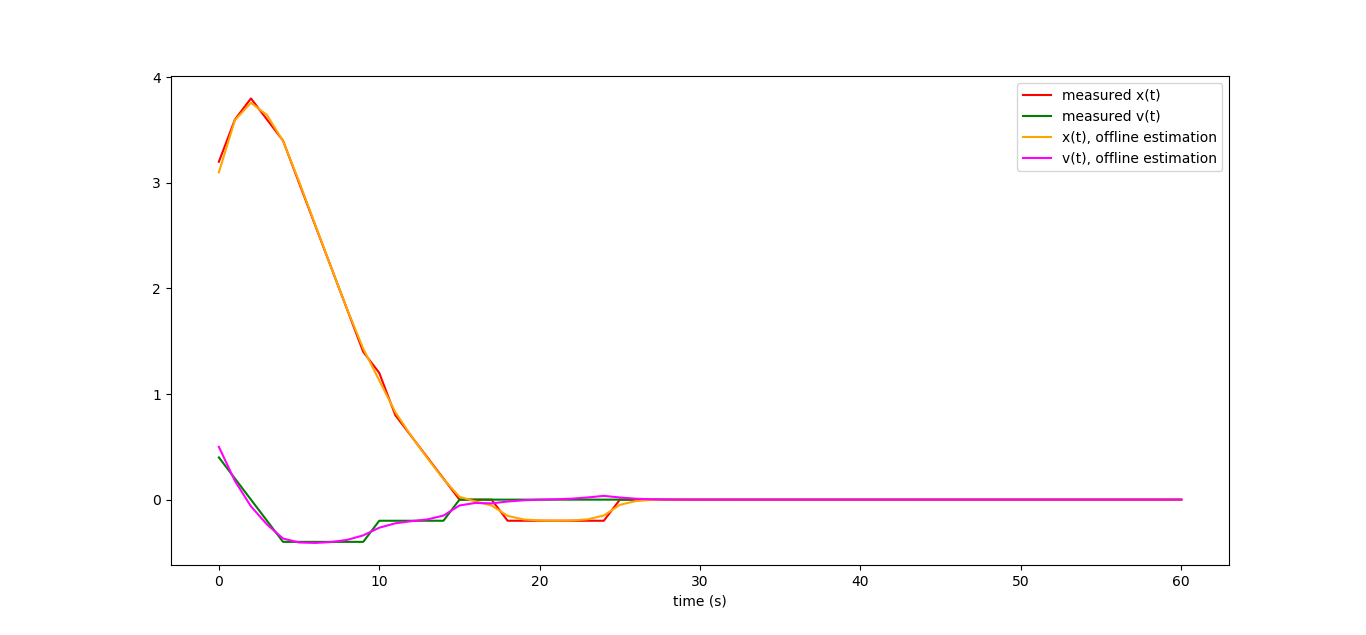

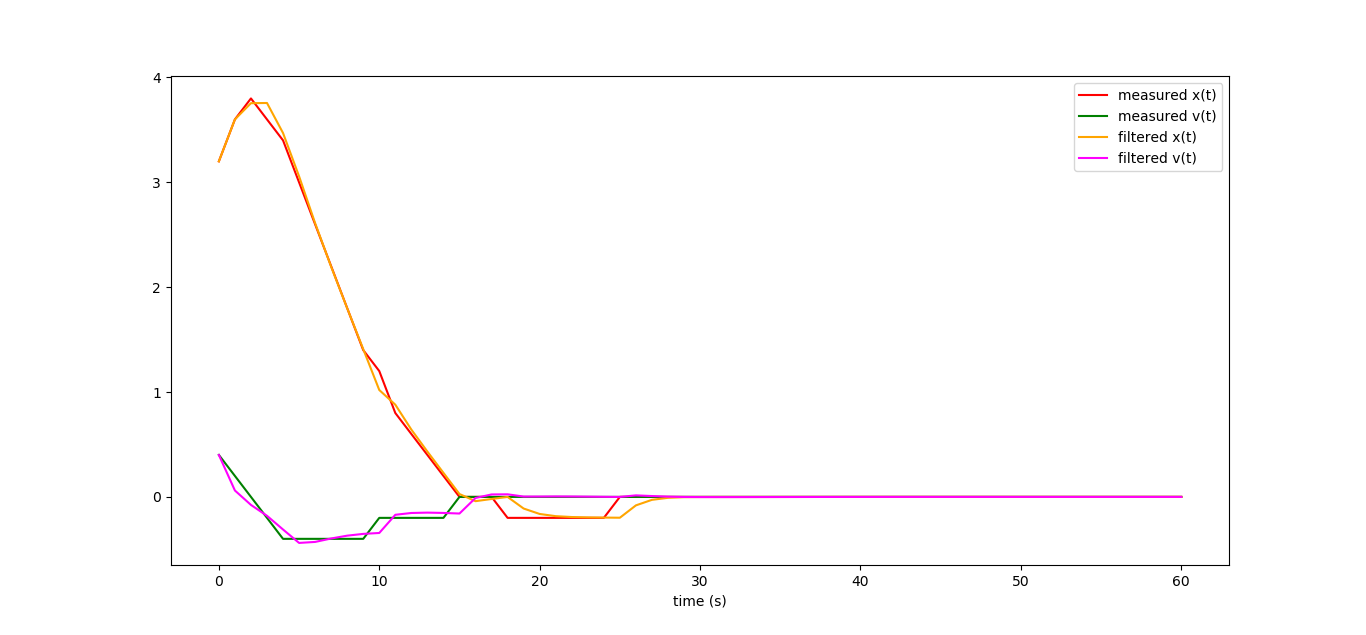

But the filtered curves, they are imperfect, but still closer to reality than low-resolution measurements.

The dynamics of the system is determined by the eigenvalues of the matrix [[1-0.594.1-0.673], [- 0.079.1-0.774]], which are equal to (0.316 + - 0.133 i).

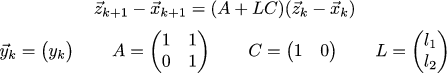

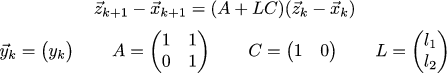

And let's go even further. Let's assume that we can only measure position, but not for the speed of the sensor. Will we be able to build an observer in this case? Here we go beyond LQR, but not too far. Let's write the following system:

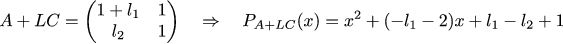

Matrix C prescribes to us what we can actually observe in our system. Our task is to find a matrix L that allows the observer to behave well. Let’s write with our hands that the eigenvalues of the matrix A + LC, which describes the general dynamics of the observer, should be approximately the same as the eigenvalues of the matrix from the previous example. Let's approximate the previous eigenvalues by fractions (1/3 + - 1/6 i). We write the characteristic polynomial of the matrix A + LC, and make it equal to the polynomial (x- (1/3 + 1/6 * i)) * (x- (1 / 3-1 / 6 * i). Then we solve the simplest system from linear equations, and the matrix L is found.Calculations

can be checked here , and drawing graphs here .

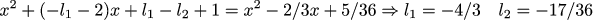

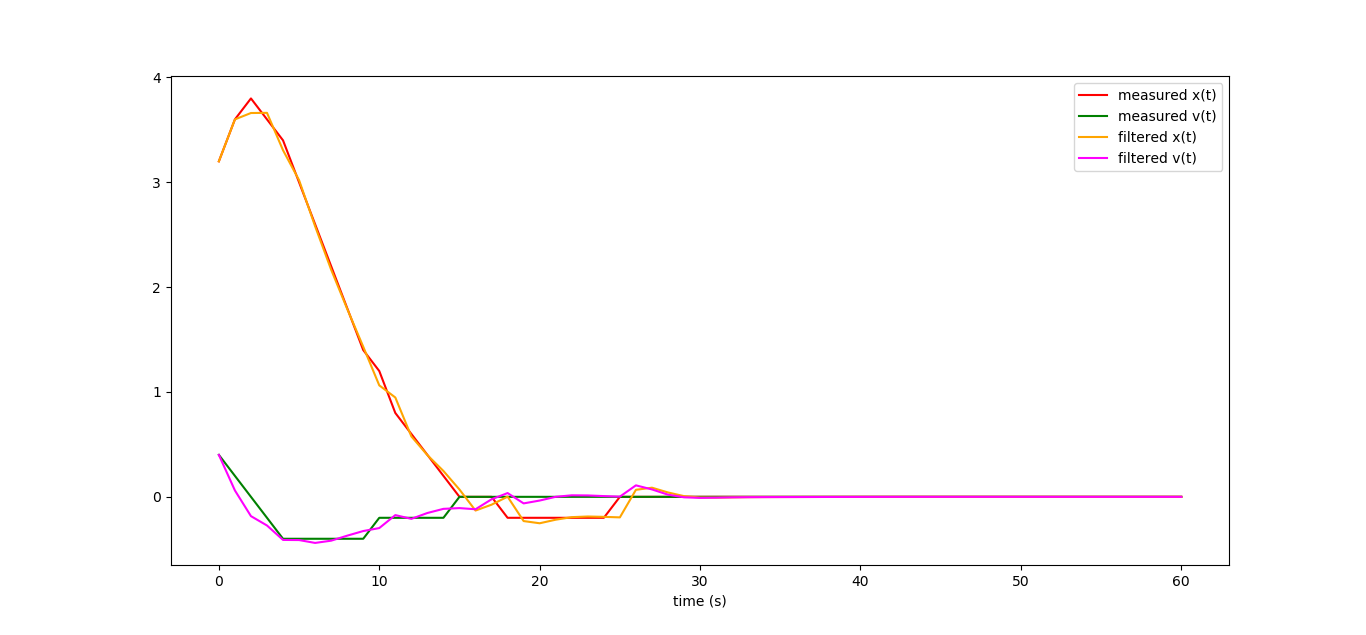

This is how the work schedules of our observer look if we measure (and with a large error) only the coordinate of our machine:

From very incomplete data, we can very well restore the dynamics of the entire system! By the way, the linear observer we built in fact is the famous Kalman filter, but we will talk about it sometime next time.

If, nevertheless, Y * is brought to the logical end (take the code here) , then the resulting observer curves are much, much smoother (and closer to the truth!) Than those that should be equivalent to them. Why?

- Least squares methods

- Linear-quadratic controller, introductory

- Linear-quadratic controller and linear observers

I am not at all a specialist in control theory, I just read textbooks. My task is to understand the theory and put it into practice. This article is just a theory (well, a little bit of accompanying code), in the next we’ll talk about practice, a small piece of which can be seen here:

Corrections and additions are welcome. For the hundredth time, I remind you that first of all I write these texts for myself, since it makes me structure my knowledge in my head. This site is for me a kind of public notebook, to which, by the way, I regularly come back. Just in case, I’ll recall a bearded joke that describes my life well:

A conversation between two teachers:

- Well, this year I got a dumb group!

- Why?

- Imagine explaining the theorem - they don’t understand! I explain the second time - they don’t understand! The third time I explain, I already understood. But they don’t understand ...

Linear-quadratic regulator, side view

LQR: Statement of the Problem

So, let's recall what we talked about in the last article , namely, what the Russian school calls the analytical construction of optimal regulators. I’ll even take an example from that article.

Suppose we have a car whose state at a given moment in time k is characterized by its coordinate x_k and speed v_k. The experiment begins with a certain initial state (x_0, v_0), and our task is to stop the car at zero coordinates. We can act on it through gas / brakes, that is, through acceleration u_k. We write this information in a form that is convenient to transmit from person to person, because the verbal description is cumbersome and can allow several tacts:

It’s even more convenient to write this information in matrix form, since the matrices can be substituted into a variety of wordings, thus the language is very flexible and can describe many different problems:

So, we described the dynamics of the system, but we did not describe either the goal or what to consider good management. Let's write the following quadratic function, which takes as the parameters the trajectory of the car and the sequence of control signals:

We try to find a trajectory of the car that minimizes the value of this function. This function sets a compromise of goals: we want the system to converge to zero as soon as possible, while maintaining a reasonable amount of control (no need to slow down!)

In total, we have the task of minimizing a function with a linear constraint on the input variables. For our specific car task, the last time we chose such weights 1 and 256 for our purposes:

If at the initial moment our car is in position 3.1 and has a speed of half a meter per second, then the optimal control is as follows (I quote from the last article) :

LQR: We solve the problem

Let's try to draw the same curves in a slightly different way than last time. Recording amounts is very cumbersome, let's reduce to the matrix form. First of all, we will reduce our entire trajectory and our entire control sequence to healthy column matrices:

Then the relationship of coordinates with the control can be written as follows:

Or even more briefly:

In this article, the sticks above the matrices mean reducing small matrices that describe one iteration into large for the whole task. For our particular problem, the degrees of the matrix A ^ n are as follows:

For the sake of clarity, let's explicitly write Ā and B И:

And even I will give the source code that fills these matrices in numpy:

import matplotlib.pyplot as plt

import numpy as np

np.set_printoptions(precision=3,suppress=True,linewidth=128,threshold=100000)

def build_Abar(A, N):

n = A.shape[0]

Abar = np.matrix( np.zeros(((N+1)*n,n)) )

for i in range(N+1):

Abar[i*n:(i+1)*n,:] = A**i

return Abar

def build_Bbar(A, B, N):

(n,m) = B.shape

Bbar = np.matrix( np.zeros(((N+1)*n,N*m)) )

for i in range(N):

for j in range(N-i):

Bbar[(N-i)*n:(N-i+1)*n,(N-i-j-1)*m:(N-i-j)*m] = (A**j)*B

return Bbar

A = np.matrix([[1,1],[0,1]])

B = np.matrix([[0],[1]])

(n,m) = B.shape

N=60

Abar = build_Abar(A, N)

Bbar = build_Bbar(A, B, N)

We reduce to large matrices and coefficients of our quadratic form:

In our particular car problem, Q̄ is the identity matrix and R̄ is the identity matrix multiplied by scalar 256. Then the quality control function is written in matrix form very briefly:

And we look for the minimum of this function with one linear restriction:

Note to yourself: a great reason to deal with the Lagrange multipliers, the Legendre transform and the Hamilton function obtained from this. And also how the explanation of LQR follows from this through dynamic programming and Riccati equations. Apparently, you still need to write articles :)

I am lazy, so this time we will go in the most direct way. J depends on two arguments that are linearly related to each other. Instead of minimizing the function with linear constraints, let's just remove the extra variables from it, namely, replace the occurrences of X with на x_0 + B̄ U in it:

This is a rather boring, but completely mechanical conclusion that does not require the brain. When moving from the penultimate line to the last one, we took advantage of the fact that Q̄ is symmetric, this allowed us to write Q̄ + Q̄ ^ T = 2 Q̄.

Now we just need to find the minimum of the quadratic function, no restrictions are imposed on the variables. We recall the tenth grade of the school and equate to zero partial derivatives.

If you can differentiate ordinary polynomials, then you can take derivatives of matrix ones as well. Just in case, I recall the rules of differentiation:

Again, a little bit of tedious writing of squiggles and we get our partial derivative with respect to the control vector U (we again used the symmetry of Q̄ and R̄):

Then the optimal control vector U * can be found as follows:

Well, how do we write the squiggles, but still didn’t draw anything? Let's fix it!

K=-(Bbar.transpose()*Bbar+np.identity(N*m)*256).I*Bbar.transpose()*Abar

print("K = ",K)

X0=np.matrix([[3.1],[0.5]])

U=K*X0

X=Abar*X0 + Bbar*U

plt.plot(range(N+1), X[0::2], label="x(t)", color='red')

plt.plot(range(N+1), X[1::2], label="v(t)", color='green')

plt.plot(range(N), U, label="u(t)", color='blue')

plt.legend()

plt.show()

This will give the following picture, note that it perfectly matches the one that we received in the previous article (drawing here ):

LQR: Solution Analysis

Linear connection of control and system status

So, the theory tells us that (with a long event horizon) the optimal control linearly depends on the remaining distance to the final target. Last time we proved it for the one-dimensional case, in two-dimensional believing the word. This time again I will not rigorously prove it, leaving this for a later discussion of the Lagrange multipliers. However, it is intuitively clear that it is. After all, we got the formula U = K * x0, where the matrix K does not depend on the state of the system, but only on the structure of the differential equation (on the matrices A and B).

That is, u_0 = k_0 * x_0. By the way, we got k_0 equal to [-0.052 -0.354]: Compare this result with what I got with the help of OLS and with the one that was obtained by solving the Riccati equation (in the comments to the previous article).

K = [[-0.052 -0.354]

[-0.034 -0.281]

[-0.019 -0.215]

[-0.008 -0.158]

[ 0. -0.11 ]

...

Intuitively, if u0 depends linearly on x_0, then with a long event horizon the same should be for x1.

Continuing the discussion, we expect that the control u_i should be considered as u_i = k_0 * x_i. That is, the optimal control should be obtained by the scalar product of the constant vector k_0 and the residual distance to the target x_i.

But our result says exactly the opposite! We found that u_i = k_i * x_0! That is, we found the sequence k_i, which depends only on the structure of the equation of the system, but not on its state. And control is obtained using the scalar product of a constant vector (the initial position of the system) and the sequence found ...

That is, if everything is fine, then we should have the equality k_0 * x_i = u_i = k_i * x_0. Let's draw an illustrative graph, taking for simplicity x_0 equal to k_0. On one axis of the graph, we postpone the coordinate of the machine, on the other the speed. Starting from one point k_0 = x_0 we get two different sequences of points k_i and x_i converging to zero coordinates.

Drawing is available here .

If roughly, then k_i * x_0 form a sequence of projections k_i onto the vector x_0. The same for k_0 * x_i, this is a sequence of projections x_i onto k_0. It is clearly seen on our graph that these projections coincide. Once again, this is not a proof, but only an illustration.

Thus, we have obtained a dynamical system of the form x_ {k + 1} = (A + BK) x_k. If the eigenvalues of the matrix A + BK are less than unity in absolute value, then this system converges to the origin at any initial x_0. In other words, the solution of this differential equation is the (matrix) exponent.

In the insane asylum, where mathematics students could not stand the load, one of them runs with a knife and shouts: “I’ll differentiate everyone!” Patients scatter, except for another math student. When the first bursts into the chamber with a cry of "I will differentiate!", The second phlegmatically remarks: "I am in the X degree!" The first, waving a knife: "I will differentiate on a game!"

As an illustration, I accidentally selected several hundred different x_0, and drew the trajectories of our machine. Take a drawing here . Here is the resulting picture:

All the trajectories safely converge to zero, a slight twisting of the trajectories indicates that the matrix A + BK is a rotation and contraction matrix (has complex eigenvalues smaller than unity in absolute value). If I am not mistaken, then this picture is called a phase portrait.

Open and closed control loops

So, we cheerfully minimized our function J, and got the optimal control vector U0 as K * X0:

U=K*X0

X=Abar*X0 + Bbar*U

Is there anyway the difference between considering control as or as u_i = k_0 * x_i? There is a colossal one if we have unaccounted factors in our system (that is, almost always). Let's imagine that our car does not roll on a horizontal surface, but such a hill can meet on its way:

If we calculate the optimal control without taking this hill into account, trouble may happen

(drawing here ): The

machine will not only stop, but also completely go to infinity to the right, I even drew x_i graphics only one third, then it grows linearly. If we close the control loop, then small disturbances in the dynamics of the system do not lead to catastrophic consequences:

for i in range(N):

Xi = X[i*n:(i+1)*n,0]

U[i*m:(i+1)*m,0] = K[0:m,:]*Xi

idxslope = int((Xi[0,0]+5)/10.*1000.)

if (idxslope<0 or idxslope>=1000):

idxslope = 0

X[(i+1)*n:(i+2)*n,0] = A*Xi + B*U[i*m:(i+1)*m,0] + B*slope[idxslope]

Building a linear observer

So, we can control a dynamic system so that it strives to get to the origin. But what if we would like the car to stop not at x = 0, but at x = 3? Obviously, it is enough to subtract the desired result from the current state x_i, and then everything is as usual. Moreover, this desired result may well not be constant, but change over time. Can we follow another dynamic system?

Let's assume that we do not control our machine directly, but at the same time we want to monitor it. We measure both states of the machine using some sensors that have a rather low resolution:

I just took our curves and made their values coarser. Let's try to filter out noise introduced by measurements. As usual, we arm ourselves with the least squares.

When you have a hammer in your hands, everything around you seems like nails.

So, our machine obeys the law x_ {k + 1} = A x_k + B u_k, so let's build a diffus observer that follows the same law:

Here y_k is the correction term through which we will tell our observer how far we are from the real state of things. Like last time, we write the equation in matrix form:

Okay. Now what do we want? We want the correction terms y_k to be small, and so that z_k converges to x_k:

Like last time, we express Z through Y, we equate the partial derivatives with respect to Y to zero, and we get an expression for the optimal correction term:

Here vague forebodings start to torment me ... Somewhere I already saw it! Ok, let's remember how X is related to U, we get the following expression:

But this is, up to renaming letters, an expression for U *! And in fact, let's go back a little bit, too quickly we rushed into action. By combining the dynamics for x_k with the dynamics for z_k we get the dynamics for the error x_k-z_k.

That is, our task of tracking the machine is absolutely equivalent to the task of a linear-quadratic controller. We find the optimal gain matrix, which in our case will be equal to [[-0.594, -0.673], [- 0.079, -0.774]], and we obtain the following observer code:

for i in range(N):

Zi = Z[i*n:(i+1)*n,0]

Xi = X[i*n:(i+1)*n,0]

Z[(i+1)*n:(i+2)*n,0] = A*Zi + B*U[i*m:(i+1)*m,0] + np.matrix([[-0.594,-0.673],[-0.079,-0.774]])*(Zi-Xi)

But the filtered curves, they are imperfect, but still closer to reality than low-resolution measurements.

The dynamics of the system is determined by the eigenvalues of the matrix [[1-0.594.1-0.673], [- 0.079.1-0.774]], which are equal to (0.316 + - 0.133 i).

And let's go even further. Let's assume that we can only measure position, but not for the speed of the sensor. Will we be able to build an observer in this case? Here we go beyond LQR, but not too far. Let's write the following system:

Matrix C prescribes to us what we can actually observe in our system. Our task is to find a matrix L that allows the observer to behave well. Let’s write with our hands that the eigenvalues of the matrix A + LC, which describes the general dynamics of the observer, should be approximately the same as the eigenvalues of the matrix from the previous example. Let's approximate the previous eigenvalues by fractions (1/3 + - 1/6 i). We write the characteristic polynomial of the matrix A + LC, and make it equal to the polynomial (x- (1/3 + 1/6 * i)) * (x- (1 / 3-1 / 6 * i). Then we solve the simplest system from linear equations, and the matrix L is found.Calculations

can be checked here , and drawing graphs here .

This is how the work schedules of our observer look if we measure (and with a large error) only the coordinate of our machine:

From very incomplete data, we can very well restore the dynamics of the entire system! By the way, the linear observer we built in fact is the famous Kalman filter, but we will talk about it sometime next time.

Question for attentive readers

If, nevertheless, Y * is brought to the logical end (take the code here) , then the resulting observer curves are much, much smoother (and closer to the truth!) Than those that should be equivalent to them. Why?