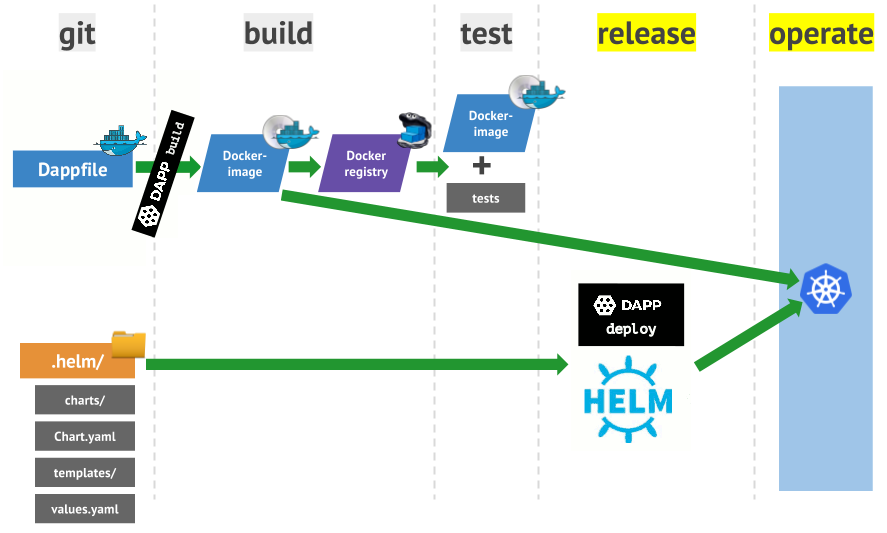

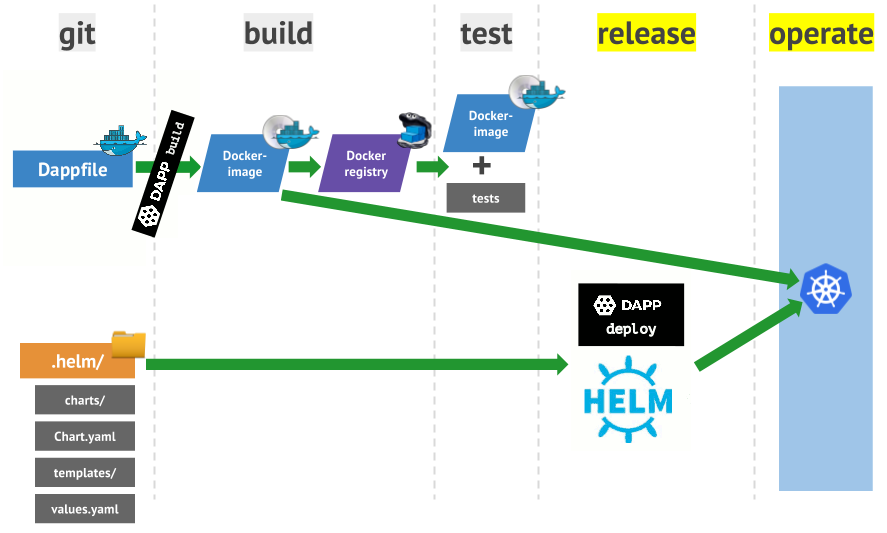

Practice with dapp. Part 2. Deploying Docker images in Kubernetes using Helm

dapp is our Open Source utility that helps DevOps engineers maintain CI/CD processes (Updated August 13, 2019: the dapp project has since been renamed to werf, its code has been completely rewritten in Go, and its documentation has been significantly improved). You can read more about it in the announcement. Its documentation gives an example of building a simple application, and the first part of this article covered that process in more detail, demonstrating dapp's main features. Now, using the same simple application, I'll show how dapp works with a Kubernetes cluster.


As in the first article, all additions to the symfony-demo application code are in our repository. This time, however, the `Vagrantfile` won't do: Docker and dapp have to be installed locally. To follow the steps, start from the `dapp_build` branch, to which the `Dappfile` was added in the first article:

```
$ git clone https://github.com/flant/symfony-demo.git
$ cd symfony-demo
$ git checkout dapp_build
$ git checkout -b kube_test
$ dapp dimg build
```

Starting a cluster using Minikube

Next, we need a Kubernetes cluster in which dapp will launch the application. We will use Minikube, the recommended way to run a cluster on a local machine.

Installation is simple: you just download Minikube and the kubectl utility. Instructions are available at the following links:

Note: see also our translation of the article “Getting Started in Kubernetes with Minikube”.

After installation, run `minikube start`. Minikube will download an ISO image and launch a virtual machine from it in VirtualBox. Once it has started, you can see what is in the cluster:

```
$ kubectl get all
NAME READY STATUS RESTARTS AGE
po/hello-minikube-938614450-zx7m6 1/1 Running 3 71d

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
svc/hello-minikube 10.0.0.102 8080:31429/TCP 71d
svc/kubernetes 10.0.0.1 443/TCP 71d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deploy/hello-minikube 1 1 1 1 71d

NAME DESIRED CURRENT READY AGE
rs/hello-minikube-938614450 1 1 1 71d
```

The command shows all resources in the default namespace (`default`). A list of all namespaces can be viewed with `kubectl get ns`.

Preparation, step #1: registry for images

So, we have a Kubernetes cluster running in a virtual machine. What else do we need to run the application?

First, the image has to be uploaded somewhere the cluster can pull it from. You can use a shared Docker Registry or run your own Registry inside the cluster (this is what we do for production clusters). The second option is also preferable for local development, and dapp makes it easy with a dedicated command:

```
$ dapp kube minikube setup
Restart minikube [RUNNING]
minikube: Running
localkube: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100
Starting local Kubernetes v1.6.4 cluster...
Starting VM...
Moving files into cluster...
Setting up certs...
Starting cluster components...
Connecting to cluster...
Setting up kubeconfig...
Kubectl is now configured to use the cluster.
Restart minikube [OK] 34.18 sec
Wait till minikube ready [RUNNING]
Wait till minikube ready [OK] 0.05 sec
Run registry [RUNNING]
Run registry [OK] 61.44 sec
Run registry forwarder daemon [RUNNING]
Run registry forwarder daemon [OK] 5.01 sec
```

Once the command finishes, the following port forwarding appears in the list of system processes:

```
username 13317 0.5 0.4 57184 36076 pts/17 Sl 14:03 0:00 kubectl port-forward --namespace kube-system kube-registry-6nw7m 5000:5000
```

...and pods with the Registry and a proxy for it are created in the `kube-system` namespace:

```
$ kubectl get -n kube-system all
NAME READY STATUS RESTARTS AGE
po/kube-addon-manager-minikube 1/1 Running 2 22m
po/kube-dns-1301475494-7kk6l 3/3 Running 3 22m
po/kube-dns-v20-g7hr9 3/3 Running 9 71d
po/kube-registry-6nw7m 1/1 Running 0 3m
po/kube-registry-proxy 1/1 Running 0 3m
po/kubernetes-dashboard-9zsv8 1/1 Running 3 71d
po/kubernetes-dashboard-f4tp1 1/1 Running 1 22m

NAME DESIRED CURRENT READY AGE
rc/kube-dns-v20 1 1 1 71d
rc/kube-registry 1 1 1 3m
rc/kubernetes-dashboard 1 1 1 71d

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
svc/kube-dns 10.0.0.10 53/UDP,53/TCP 71d
svc/kube-registry 10.0.0.142 5000/TCP 3m
svc/kubernetes-dashboard 10.0.0.249 80:30000/TCP 71d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deploy/kube-dns 1 1 1 1 22m

NAME DESIRED CURRENT READY AGE
rs/kube-dns-1301475494 1 1 1 22m
```

Let's test the running Registry by pushing our image to it with `dapp dimg push --tag-branch :minikube`. The `:minikube` used here is an alias built into dapp specifically for Minikube; it is expanded to `localhost:5000/symfony-demo`.

```
$ dapp dimg push --tag-branch :minikube
symfony-demo-app
localhost:5000/symfony-demo:symfony-demo-app-kube_test [PUSHING]
pushing image `localhost:5000/symfony-demo:symfony-demo-app-kube_test` [RUNNING]
The push refers to a repository [localhost:5000/symfony-demo]
0ea2a2940c53: Preparing
ffe608c425e1: Preparing
5c2cc2aa6663: Preparing
edbfc49bce31: Preparing
308e5999b491: Preparing
9688e9ffce23: Preparing
0566c118947e: Preparing
6f9cf951edf5: Preparing
182d2a55830d: Preparing
5a4c2c9a24fc: Preparing
cb11ba605400: Preparing
6f9cf951edf5: Waiting
182d2a55830d: Waiting
5a4c2c9a24fc: Waiting
cb11ba605400: Waiting
9688e9ffce23: Waiting
0566c118947e: Waiting
0ea2a2940c53: Layer already exists
308e5999b491: Layer already exists
ffe608c425e1: Layer already exists
edbfc49bce31: Layer already exists
5c2cc2aa6663: Layer already exists
0566c118947e: Layer already exists
9688e9ffce23: Layer already exists
182d2a55830d: Layer already exists
6f9cf951edf5: Layer already exists
cb11ba605400: Layer already exists
5a4c2c9a24fc: Layer already exists
symfony-demo-app-kube_test: digest: sha256:5c55386de5f40895e0d8292b041d4dbb09373b78d398695a1f3e9bf23ee7e123 size: 2616
pushing image `localhost:5000/symfony-demo:symfony-demo-app-kube_test` [OK] 0.54 sec
```

The output shows that the image tag in the Registry is made up of the dimg name and the branch name, joined by a hyphen.
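The naming rule visible in the push log can be expressed as a small function. This is a minimal sketch, not dapp's implementation. `resolve_repo`, `branch_tag` and `image_reference` are hypothetical helpers, and the only alias they model is `:minikube`, expanded to `localhost:5000/<project>` as described above. Branch names that contain characters Docker tags do not allow (for example `/`) would need extra handling, which the sketch omits.

```python
# Hypothetical helpers that model the image naming seen in the push log above.
# They are an illustration only, not dapp's actual code.

MINIKUBE_REGISTRY = "localhost:5000"  # where kubectl port-forward exposes the registry


def resolve_repo(repo: str, project: str) -> str:
    """Expand the ':minikube' alias to the local registry repository."""
    if repo == ":minikube":
        return f"{MINIKUBE_REGISTRY}/{project}"
    return repo  # any other value is treated as a ready-made repository name


def branch_tag(dimg: str, branch: str) -> str:
    """Tag produced with --tag-branch: dimg name and branch name joined by a hyphen."""
    return f"{dimg}-{branch}"


def image_reference(repo: str, project: str, dimg: str, branch: str) -> str:
    """Full image reference as pushed to the registry."""
    return f"{resolve_repo(repo, project)}:{branch_tag(dimg, branch)}"


if __name__ == "__main__":
    print(image_reference(":minikube", "symfony-demo", "symfony-demo-app", "kube_test"))
    # prints: localhost:5000/symfony-demo:symfony-demo-app-kube_test
```

The printed reference matches the `localhost:5000/symfony-demo:symfony-demo-app-kube_test` line in the log.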

Preparation, step #2: resource configuration (Helm)

The second thing needed to run the application in the cluster is a resource configuration. The standard Kubernetes cluster management utility is `kubectl`. To create a new resource (Deployment, Service, Ingress, etc.) or change the properties of an existing one, you pass it a YAML file with the configuration. However, dapp does not use `kubectl` directly. Instead, it works with Helm, a so-called package manager that provides templating for YAML files and manages the rollout to the cluster. So our next step is to install Helm. Official instructions can be found in the project documentation.

After installation, run `helm init`. What does it do? Helm consists of a client part, which we have installed, and a server part. The `helm init` command installs the server part (`tiller`). Let's see what has appeared in the `kube-system` namespace:

```
$ kubectl get -n kube-system all
NAME READY STATUS RESTARTS AGE
po/kube-addon-manager-minikube 1/1 Running 2 1h
po/kube-dns-1301475494-7kk6l 3/3 Running 3 1h
po/kube-dns-v20-g7hr9 3/3 Running 9 71d
po/kube-registry-6nw7m 1/1 Running 0 1h
po/kube-registry-proxy 1/1 Running 0 1h
po/kubernetes-dashboard-9zsv8 1/1 Running 3 71d
po/kubernetes-dashboard-f4tp1 1/1 Running 1 1h
!!! po/tiller-deploy-3703072393-bdqn8 1/1 Running 0 3m

NAME DESIRED CURRENT READY AGE
rc/kube-dns-v20 1 1 1 71d
rc/kube-registry 1 1 1 1h
rc/kubernetes-dashboard 1 1 1 71d

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
svc/kube-dns 10.0.0.10 53/UDP,53/TCP 71d
svc/kube-registry 10.0.0.142 5000/TCP 1h
svc/kubernetes-dashboard 10.0.0.249 80:30000/TCP 71d
!!! svc/tiller-deploy 10.0.0.196 44134/TCP 3m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deploy/kube-dns 1 1 1 1 1h
!!! deploy/tiller-deploy 1 1 1 1 3m

NAME DESIRED CURRENT READY AGE
rs/kube-dns-1301475494 1 1 1 1h
!!! rs/tiller-deploy-3703072393 1 1 1 3m
```

(Here and below, lines worth paying attention to are marked manually with “!!!”.)

In other words, a Deployment named `tiller-deploy` has appeared, with one ReplicaSet and one Pod. A Service with the same name (`tiller-deploy`) was created for the Deployment, providing access on port 44134.

Preparation, step #3: IngressController

The third part is the configuration of the application itself. At this stage, we need to work out what has to be deployed to the cluster for the application to work.

The following scheme is proposed:

- the application is a Deployment. To start with, it will be a single ReplicaSet with one pod, as was done for the Registry;

- the application listens on port 8000, so we need to define a Service through which other components can send requests to it;

- this is a web application, so we need a way to deliver users' traffic arriving on port 80 to it. This is the job of the Ingress resource. For Ingress resources to work, an IngressController has to be set up.
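To make the chain in this scheme concrete, here is a toy model of how a request travels from an Ingress rule to a Service and then to the pods that the Service selects. It is a simplified illustration, not a description of how the nginx IngressController or kube-proxy actually work. The dictionaries below are hypothetical stand-ins for resources, using names that appear later in this article, and the labels of the `hello-minikube` pod are assumed. The model shows the two links that must line up: the Ingress refers to the Service by name, and the Service finds pods by matching its `selector` against pod labels.

```python
# Toy model of the Ingress -> Service -> Pod chain. All data below is a
# hypothetical simplification; real resources have many more fields.

INGRESS_RULES = [
    # (path prefix, serviceName, servicePort)
    ("/", "symfony-demo-app-srv", 8000),
]

SERVICES = {
    # Service name -> (label selector, port)
    "symfony-demo-app-srv": ({"app": "symfony-demo-app-backend"}, 8000),
}

PODS = {
    # pod name -> labels (the hello-minikube labels are assumed)
    "symfony-demo-app-backend-3899272958-hzk4l": {"app": "symfony-demo-app-backend"},
    "hello-minikube-938614450-zx7m6": {"run": "hello-minikube"},
}


def selector_matches(selector, labels):
    """A Service selects a pod if every key/value of its selector is among the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def route(path):
    """Return (service name, port, selected pods) for a request path, or None if nothing matches."""
    matching = [rule for rule in INGRESS_RULES if path.startswith(rule[0])]
    if not matching:
        return None  # a real IngressController would answer from its default backend
    # Prefer the most specific (longest) matching prefix.
    _, service_name, service_port = max(matching, key=lambda rule: len(rule[0]))
    if service_name not in SERVICES:
        return None  # the Ingress refers to a Service name that does not exist
    selector, port = SERVICES[service_name]
    if port != service_port:
        return None  # the Ingress points at a port the Service does not expose
    pods = sorted(name for name, labels in PODS.items() if selector_matches(selector, labels))
    return service_name, port, pods


if __name__ == "__main__":
    print(route("/"))
```

If you rename the Service without updating the Ingress's `serviceName`, `route` returns `None`. If you change the pod labels without updating the `selector`, it returns an empty pod list. These are the kinds of mismatches to watch for when writing the templates.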

IngressController is an optional Kubernetes cluster component that provides load balancing for web applications. Essentially, it is nginx whose configuration depends on the Ingress resources added to the cluster. The component has to be installed separately, and Minikube has an addon for it. You can read more about it in this article (in English), but for now, simply install the IngressController:

```
$ minikube addons enable ingress
ingress was successfully enabled
```

...and see what has appeared in the cluster:

```
$ kubectl get -n kube-system all
NAME READY STATUS RESTARTS AGE
!!! po/default-http-backend-vbrf3 1/1 Running 0 2m
po/kube-addon-manager-minikube 1/1 Running 2 3h
po/kube-dns-1301475494-7kk6l 3/3 Running 3 3h
po/kube-dns-v20-g7hr9 3/3 Running 9 72d
po/kube-registry-6nw7m 1/1 Running 0 3h
po/kube-registry-proxy 1/1 Running 0 3h
po/kubernetes-dashboard-9zsv8 1/1 Running 3 72d
po/kubernetes-dashboard-f4tp1 1/1 Running 1 3h
!!! po/nginx-ingress-controller-hmvg9 1/1 Running 0 2m
po/tiller-deploy-3703072393-bdqn8 1/1 Running 0 1h

NAME DESIRED CURRENT READY AGE
!!! rc/default-http-backend 1 1 1 2m
rc/kube-dns-v20 1 1 1 72d
rc/kube-registry 1 1 1 3h
rc/kubernetes-dashboard 1 1 1 72d
!!! rc/nginx-ingress-controller 1 1 1 2m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
!!! svc/default-http-backend 10.0.0.131 80:30001/TCP 2m
svc/kube-dns 10.0.0.10 53/UDP,53/TCP 72d
svc/kube-registry 10.0.0.142 5000/TCP 3h
svc/kubernetes-dashboard 10.0.0.249 80:30000/TCP 72d
svc/tiller-deploy 10.0.0.196 44134/TCP 1h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deploy/kube-dns 1 1 1 1 3h
deploy/tiller-deploy 1 1 1 1 1h

NAME DESIRED CURRENT READY AGE
rs/kube-dns-1301475494 1 1 1 3h
rs/tiller-deploy-3703072393 1 1 1 1h
```

How can we check it? The IngressController includes `default-http-backend`, which responds with a 404 error to every page that has no handler. You can see this with the following command:

```
$ curl -i $(minikube ip)
HTTP/1.1 404 Not Found
Server: nginx/1.13.1
Date: Fri, 14 Jul 2017 14:29:46 GMT
Content-Type: text/plain; charset=utf-8
Content-Length: 21
Connection: keep-alive
Strict-Transport-Security: max-age=15724800; includeSubDomains;

default backend - 404
```

The result is as expected: nginx responds with the string `default backend - 404`.

Configuration description for Helm

Now we can describe the application's configuration. The `helm create app_name` command generates a basic configuration:

```
$ helm create symfony-demo
$ tree symfony-demo
symfony-demo/
├── charts
├── Chart.yaml
├── templates
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── ingress.yaml
│   ├── NOTES.txt
│   └── service.yaml
└── values.yaml
```

dapp expects this structure in a directory named `.helm` (see the documentation), so you need to rename `symfony-demo` to `.helm`.

We have now created a chart description. A chart is Helm's unit of configuration; you can think of it as a package. For example, there are charts for nginx, MySQL and Redis, and with such charts you can assemble the configuration you need in the cluster. Helm deploys charts to Kubernetes, not individual images (see the official documentation).
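Because dapp looks for the chart in `.helm`, a quick local check that the renamed directory contains the pieces used in this walkthrough can catch a forgotten rename early. What follows is a hypothetical helper, not part of dapp or Helm. The list of expected entries (`Chart.yaml`, `values.yaml`, `templates/`) is taken from the `helm create` tree above. It is an assumption and does not fully describe what Helm requires.

```python
from pathlib import Path

# Entries from the `helm create` tree above that this walkthrough relies on.
# The list is an assumption for illustration; it is not dapp's or Helm's own check.
EXPECTED_FILES = ("Chart.yaml", "values.yaml")
EXPECTED_DIRS = ("templates",)


def missing_chart_entries(project_root, chart_dir_name=".helm"):
    """Return the expected chart entries that are absent under <project_root>/.helm."""
    chart_dir = Path(project_root) / chart_dir_name
    missing = [name for name in EXPECTED_FILES if not (chart_dir / name).is_file()]
    missing += [name + "/" for name in EXPECTED_DIRS if not (chart_dir / name).is_dir()]
    return missing


if __name__ == "__main__":
    import sys

    root = sys.argv[1] if len(sys.argv) > 1 else "."  # default: current directory
    problems = missing_chart_entries(root)
    print("chart layout OK" if not problems else "missing: " + ", ".join(problems))
```

Run it from the project root (or pass the project path as the first argument). An empty result means the renamed directory has the expected files and the `templates` directory.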

The `Chart.yaml` file describes our application's chart. At a minimum, it must specify the application name and version:

```
$ cat Chart.yaml
apiVersion: v1
description: A Helm chart for Kubernetes
name: symfony-demo
version: 0.1.0
```

The `values.yaml` file describes the variables that will be available in the templates. For example, the generated file contains an `image` section with `repository: nginx`. This variable is available in templates as `{{ .Values.image.repository }}`.

The `charts` directory is empty for now, because our application's chart does not use any external charts yet.

Finally, the `templates` directory holds the YAML templates that describe the resources to be deployed to the cluster. We don't really need the generated templates, so you can look through them and then delete them. First, let's describe a simple Deployment for our application:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: {{ .Chart.Name }}-backend
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: {{ .Chart.Name }}-backend
    spec:
      containers:
      - command: [ '/opt/start.sh' ]
        image: {{ tuple "symfony-demo-app" . | include "dimg" }}
        imagePullPolicy: Always
        name: {{ .Chart.Name }}-backend
        ports:
        - containerPort: 8000
          name: http
          protocol: TCP
        env:
        - name: KUBERNETES_DEPLOYED
          value: "{{ now }}"
```

This configuration says that we need one replica for now, and `template` specifies which pods are to be replicated. The description names the image to run and the ports that are available to other containers in the pod. The `.Chart.Name` used in the config is the `name` value from `Chart.yaml`.

The `KUBERNETES_DEPLOYED` variable ensures that the pods are updated even when we update the image without changing its tag. `{{ now }}` renders a different value on every deploy, so the pod template changes and Kubernetes recreates the pods. With `imagePullPolicy: Always`, the new pods pull the freshly pushed image. This is convenient for debugging and local development.

Next, let's describe the Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: {{ .Chart.Name }}-srv
spec:
  type: ClusterIP
  selector:
    app: {{ .Chart.Name }}-backend
  ports:
  - name: http
    port: 8000
    protocol: TCP
```

This resource creates the DNS record `symfony-demo-app-srv`, through which other Deployments will be able to reach the application. The `selector` ties the Service to the pods that carry the `app: {{ .Chart.Name }}-backend` label from the Deployment template.

These two descriptions are joined with the YAML document separator `---` and saved to `.helm/templates/backend.yaml`. After that, you can deploy the application!

First deployment

Now everything is ready to run `dapp kube deploy` (Updated August 13, 2019: for more details, see the werf documentation):

```
$ dapp kube deploy :minikube --image-version kube_test
Deploy release symfony-demo-default [RUNNING]
Release "symfony-demo-default" has been upgraded. Happy Helming!
LAST DEPLOYED: Fri Jul 14 18:32:38 2017
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1beta1/Deployment
NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
symfony-demo-app-backend 1 1 1 0 7s

==> v1/Service
NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
symfony-demo-app-srv 10.0.0.173 8000/TCP 7s

Deploy release symfony-demo-default [OK] 7.02 sec
```

A pod in the `ContainerCreating` state appears in the cluster:

```
po/symfony-demo-app-backend-3899272958-hzk4l 0/1 ContainerCreating 0 24s
```

...and after a while everything is running:

```
$ kubectl get all
NAME READY STATUS RESTARTS AGE
po/hello-minikube-938614450-zx7m6 1/1 Running 3 72d
!!! po/symfony-demo-app-backend-3899272958-hzk4l 1/1 Running 0 47s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
svc/hello-minikube 10.0.0.102 8080:31429/TCP 72d
svc/kubernetes 10.0.0.1 443/TCP 72d
!!! svc/symfony-demo-app-srv 10.0.0.173 8000/TCP 47s

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deploy/hello-minikube 1 1 1 1 72d
deploy/symfony-demo-app-backend 1 1 1 1 47s

NAME DESIRED CURRENT READY AGE
rs/hello-minikube-938614450 1 1 1 72d
!!! rs/symfony-demo-app-backend-3899272958 1 1 1 47s
```

A ReplicaSet, a Pod and a Service have been created, so the application is running. You can check this "the old-fashioned way" by going into the container:

```
$ kubectl exec -ti symfony-demo-app-backend-3899272958-hzk4l bash
root@symfony-demo-app-backend-3899272958-hzk4l:/# curl localhost:8000
```

Opening access

Now, to make the application available at `$(minikube ip)`, we add an Ingress resource. To do this, we describe it in `.helm/templates/backend-ingress.yaml` as follows:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: {{ .Chart.Name }}
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: {{ .Chart.Name }}-srv
          servicePort: 8000
```

`serviceName` must match the name of the Service declared in `backend.yaml`. Deploy the application again:

```
$ dapp kube deploy :minikube --image-version kube_test
Deploy release symfony-demo-default [RUNNING]
Release "symfony-demo-default" has been upgraded. Happy Helming!
LAST DEPLOYED: Fri Jul 14 19:00:28 2017
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/Service
NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
symfony-demo-app-srv 10.0.0.173 8000/TCP 27m

==> v1beta1/Deployment
NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
symfony-demo-app-backend 1 1 1 1 27m

==> v1beta1/Ingress
NAME HOSTS ADDRESS PORTS AGE
symfony-demo-app * 192.168.99.100 80 2s

Deploy release symfony-demo-default [OK] 3.06 sec
```

A `v1beta1/Ingress` has appeared! Let's try to reach the application through the IngressController, using the cluster IP:

```
$ curl -Lik $(minikube ip)
HTTP/1.1 301 Moved Permanently
Server: nginx/1.13.1
Date: Fri, 14 Jul 2017 16:13:45 GMT
Content-Type: text/html
Content-Length: 185
Connection: keep-alive
Location: https://192.168.99.100/
Strict-Transport-Security: max-age=15724800; includeSubDomains;

HTTP/1.1 403 Forbidden
Server: nginx/1.13.1
Date: Fri, 14 Jul 2017 16:13:45 GMT
Content-Type: text/html; charset=UTF-8
Transfer-Encoding: chunked
Connection: keep-alive
Host: 192.168.99.100
X-Powered-By: PHP/7.0.18-0ubuntu0.16.04.1
Strict-Transport-Security: max-age=15724800; includeSubDomains;

You are not allowed to access this file. Check app_dev.php for more information.
```

Overall, we can consider the deployment of the application to Minikube a success. The response shows that the IngressController redirects to HTTPS (port 443), and that the application replies with a message telling us to check `app_dev.php`. This is specific to the chosen application (Symfony), as the `web/app_dev.php` file makes clear:

```php
// This check prevents access to debug front controllers that are deployed by
// accident to production servers. Feel free to remove this, extend it, or make
// something more sophisticated.
if (isset($_SERVER['HTTP_CLIENT_IP'])
    || isset($_SERVER['HTTP_X_FORWARDED_FOR'])
    || !(in_array(@$_SERVER['REMOTE_ADDR'], ['127.0.0.1', 'fe80::1', '::1']) || php_sapi_name() === 'cli-server')
) {
    header('HTTP/1.0 403 Forbidden');
    exit('You are not allowed to access this file. Check '.basename(__FILE__).' for more information.');
}
```

The request arrives through the IngressController (nginx), which adds proxy headers such as `X-Forwarded-For`. That alone is enough for this check to return 403. To see the application's normal page, deploy the application with different settings or, for testing, comment out this block. A repeated deployment to Kubernetes (after changing the application code) looks like this:

```
$ dapp dimg build
...
Git artifacts: latest patch ... [OK] 1.86 sec
signature: dimgstage-symfony-demo:13a2487a078364c07999d1820d4496763c2143343fb94e0d608ce1a527254dd3
Docker instructions ... [OK] 1.46 sec
signature: dimgstage-symfony-demo:e0226872a5d324e7b695855b427e8b34a2ab6340ded1e06b907b165589a45c3b
instructions:
EXPOSE 8000

$ dapp dimg push --tag-branch :minikube
...
symfony-demo-app-kube_test: digest: sha256:eff826014809d5aed8a82a2c5cfb786a13192ae3c8f565b19bcd08c399e15fc2 size: 2824
pushing image `localhost:5000/symfony-demo:symfony-demo-app-kube_test` [OK] 1.16 sec
localhost:5000/symfony-demo:symfony-demo-app-kube_test [OK] 1.41 sec

$ dapp kube deploy :minikube --image-version kube_test

$ kubectl get all
!!! po/symfony-demo-app-backend-3438105059-tgfsq 1/1 Running 0 1m
```

The pod has been recreated, so you can now open the application in a browser and see its normal page.
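The new pod appeared even though the image tag (`kube_test`) did not change. The ReplicaSet suffix in the pod name went from `3899272958` to `3438105059`. This is the effect of the `KUBERNETES_DEPLOYED` variable: its `{{ now }}` value differs on every deploy, so the rendered pod template differs too, and the Deployment rolls out a new ReplicaSet. The sketch below only illustrates the idea that a changed template produces a different fingerprint. `render_pod_template` and `template_hash` are hypothetical. SHA-256 over canonical JSON is a stand-in, since Kubernetes computes its `pod-template-hash` with its own algorithm. The timestamps are example values borrowed from the `LAST DEPLOYED` lines above.

```python
import hashlib
import json


def render_pod_template(image: str, deployed_at: str) -> dict:
    """Hypothetical, heavily simplified pod template from backend.yaml.

    deployed_at stands in for the value that {{ now }} renders on each deploy.
    """
    return {
        "metadata": {"labels": {"app": "symfony-demo-app-backend"}},
        "spec": {
            "containers": [
                {
                    "name": "symfony-demo-app-backend",
                    "image": image,
                    "imagePullPolicy": "Always",
                    "env": [{"name": "KUBERNETES_DEPLOYED", "value": deployed_at}],
                }
            ]
        },
    }


def template_hash(template: dict) -> str:
    """Short fingerprint of a pod template.

    Illustrative only: SHA-256 over canonical (key-sorted) JSON, not the
    algorithm Kubernetes uses for pod-template-hash.
    """
    canonical = json.dumps(template, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:10]


if __name__ == "__main__":
    image = "localhost:5000/symfony-demo:symfony-demo-app-kube_test"  # same tag both times
    first = template_hash(render_pod_template(image, "Fri Jul 14 18:32:38 2017"))
    second = template_hash(render_pod_template(image, "Fri Jul 14 19:00:28 2017"))
    print("template changed, new ReplicaSet expected:", first != second)
```

Without the changing variable, both renders would be identical. Helm would then have nothing new to apply, and the old pod would keep running the previously pulled image.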

Summary

With Minikube and Helm, you can test your applications in a Kubernetes cluster. dapp will help you build the application, set up your own Registry and deploy the application itself.

This article does not cover secret variables, which can be used in templates for private keys, passwords and other sensitive information. We will write about them separately.

PS

Also on our blog:

- The first part of this article: “Practice with dapp. Part 1: Building simple applications”;

- “Werf is our CI/CD tool in Kubernetes (review and video report)” (Dmitry Stolyarov; May 27, 2019 at DevOpsConf);

- “We officially present dapp, a DevOps utility for supporting CI/CD”;

- “Build projects with dapp. Part 1: Java”;

- “Build projects with dapp. Part 2: JavaScript (frontend)”;

- “Build and install applications in Kubernetes using dapp and GitLab CI”;

- “We assemble Docker images for CI/CD quickly and conveniently together with dapp (review and video from the report)”;

- “Getting Started in Kubernetes with Minikube” (translation);

- “Our experience with Kubernetes in small projects” (video report, which includes an introduction to how Kubernetes works technically).