Technology stack for classifying natural-language texts

In this post, we look at modern approaches to classifying natural-language texts by subject. The methods chosen for working with documents are dictated by the combined specifics of the problem: noisy training samples, samples of insufficient size or missing altogether, a strong skew in class sizes, and so on. In short, real practical tasks. Read on for the details.

There are two main tasks - this is binary classification and multiclass classification. The binary classification gives us the answer, is this document interesting at all, or is it not at all in the subject and is not worth reading. Here we have an imbalance in class sizes of about 1 to 20, that is, twenty good ones fall into one good document. But the training sample itself is problematic - there are noises both in completeness and in accuracy. The noise in completeness is when not all good, good documents are marked as good (FalseNegative), and the noise in accuracy is when not all the documents marked as good are really good (FalsePositive).

The multiclass classification poses our task to determine the subject of the document and attribute it to one of hundreds of thematic classes. The training sample for this problem is very noisy in terms of completeness, but quite clean in accuracy - all the same, markup is done only manually, not like in the first case, on heuristics. But then, thanks to a large number of classes, we begin to enjoy strong distortions in the number of documents per class. The maximum recorded bias is more than 6 thousand. That is, when in one class of documents more than in another, 6 thousand times. Just because there is one document in the second grade. Only. But this is not the largest distortion available, since there are classes in which there are zero documents. Well, the assessors did not find suitable documents - turn around as you know.

That is, our problems are as follows:

We will solve these problems sequentially by developing one classifier - signature, then another - covering the weaknesses of the first - template, and then polish it all with machine learning in the form of xgboost and regression. And on top of this ensemble of models we will roll over a method that covers the shortcomings of all of the above - namely, the method of working without training samples in general.

Google and Yandex know which post to show first when people ask about Word2Vec. Therefore, we will give a brief squeeze from that post and pay attention to what is not written there. Of course, there are other good methods in the distribution semantics section - GloVe, AdaGram, Text2Vec, Seq2Vec and others. But I did not see any strong advantages over W2V in them. If you learn to work with W2V vectors, you can get amazing results.

* Not really

Enter word or sentence (EXIT to break): coffee coffee

0.734483

tea 0.690234

tea 0.688656

cappuccino 0.666638

coffee 0.636362

cocoa 0.619801

espresso 0.599390

Enter word or sentence (EXIT to break): coffee

beans 0.757635

instant 0.709936

tea 0.709579

coffe 0.704036

mellanrost 0.694822

sublemented 0.694553

ground 0.690066

coffee 0.680409

Enter word or sentence (EXIT to break): mobile phone

cell 0.811114

phone 0.776416

smartphone 0.730191

phone 0.719766

mobile 0.717972

mobile 0.706131

Find a word that relates to Ukraine in the same way as a dollar refers to the United States (that is, what is the currency of Ukraine?):

Enter three words (EXIT to break): usa dollar Ukraine

hryvnia 0.622719

dollar 0.607078

hryvnia 0.597969

ruble 0.596636

Find such A word that refers to germany is the same as France refers to france (that is, a translation of the word germany):

Enter three words (EXIT to break): france France germany

germany 0.748171

england 0.692712

netherlands 0.665715

united kingdom 0.651329

Learning quickly from unprepared texts:

The elements of the vector do not make sense, only the distances between the vectors => only the metric between the tokens is one-dimensional. This flaw is the most offensive. It seems that we have as many as 256 real numbers, we occupy a whole kilobyte of memory, and in fact the only operation available to us is to compare this vector with another one, and get a cosine measure as an estimate of the proximity of these vectors. Process two kilobytes of data, get 4 bytes of result. And nothing more.

Then, we get a set of clusters in which tokens are grouped by meaning, and, if necessary, each cluster can be labeled => each cluster has an independent meaning.

More details about the construction of the semantic vector are described in this post .

The text consists of tokens, each of which is tied to its own cluster;

You can calculate the number of occurrences of the token in the text and translate into the number of occurrences of the cluster in the text (the sum of all tokens in the cluster);

Unlike the size of the dictionary (2.5 million tokens), the number of clusters is much smaller (2048) => the effect of reducing the dimension works;

Let's move from the total number of tokens counted in the text to their relative number (share). Then:

We normalize the shares of specific texts. expectation and dispersion calculated over the entire database:

This will increase the importance of rare clusters (not found in all texts - for example, names) compared with frequent clusters (for example - pronouns, verbs and other related words);

The text is determined by its signature - a vector of 2048 real numbers that make sense as normalized fractions of tokens of thematic clusters from which the text is composed.

Each text document is associated with a signature;

The marked up training sample of texts turns into a marked up database of signatures;

For the text being studied, its signature is formed, which is sequentially compared with the signatures of each file from the training set;

The classification decision is made based on kNN (k nearest neighbors), where k = 3.

Benefits:

There is no loss of information from generalization (a comparison is made with each original file from the training set). The essence of machine learning is to find some patterns, isolate them and work only with them - drastically reducing the size of the model compared to the size of the training sample. True or false, arising as artifacts of the learning process or due to lack of training data - it does not matter. The main thing is that the initial training information is no longer available - you have to operate only with the model. In the case of a signature classifier, we can afford to keep in mind the entire training set (not quite so, more on that later - when it comes to tuning the classifier). The main thing is that we can determine what kind of example from the training sample looks like our document most of all and connect, if necessary, an additional analysis of the situation. Lack of generalization - the essence of the absence of information loss;

Acceptable speed - about 0.3 seconds to run a signature database of 1.7 million documents in 24 streams. And this is without SIMD instructions, but already taking into account the maximization of cache hits. And anyway - dad can in si ;

The ability to highlight fuzzy duplicates:

Normalized grades (0; 1];

Ease of replenishing the training set with new documents (and ease of excluding documents from it). New training images are connected on the fly - accordingly, the quality of the classifier grows as it works. It learns. And deleting documents from the database is good for experiments, building training samples, and so on - just exclude the signature of this particular document from the signature database and run its classification - you will get a correct assessment of the quality of the classifier;

The mutual arrangement of tokens (phrases) is not taken into account in any way. It is clear that phrases are the strongest classifying feature;

Large minimum requirements for RAM (35+ GB);

It does not work well (in any way) when a class has a small number of samples (units). Imagine a 256-dimensional surface of a sphere ... No, it’s better not. There is simply an area in which documents of a subject of interest to us should be located. If there are a lot of points in this area — signatures of documents from the training set — the chances of a new document being close to three of these points are higher (kNN), than if 1-2 points proudly burn throughout the area. Therefore, there are chances to work even with the only positive example for the class, but these chances, of course, are not realized as often as we would like.

How to calculate grade for binary classification? Very simple - we take the average distance to the 3 best signatures from each class (positive and negative) and evaluate it as:

Positive / (positive + negative).

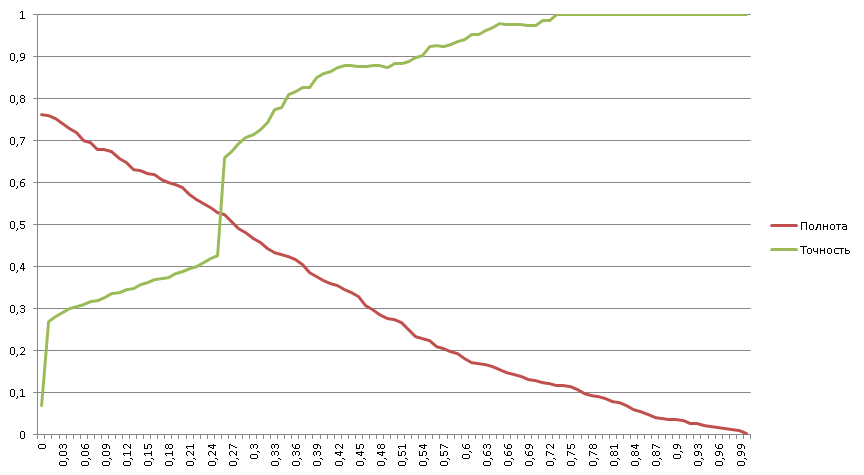

Therefore, most estimates are in a very narrow range, and people, as usual, want to see numbers interpreted as percentages. That 0.54 is very good, and 0.46 is very bad - to explain for a long time and not productively. Nevertheless, the graphics look good, a classic cross.

As can be seen from the graph “accuracy and completeness” the working area of the classifier is quite narrow. The solution is to mark up the original text training file that Word2Vec learns from. To do this, labels that define the document class are embedded in the text of the document:

As a result, the clusters are not randomly arranged in the vector space, but are arranged in such a way that the clusters of one class gravitate towards each other and are separated by the maximum distance from the clusters of the opposite class. Clusters characteristic of both classes are located in the middle.

Memory requirements are reduced by reducing the size of the signature database:

Training set signatures containing an excessive number of samples (over a million) are sequentially clustered into a large number of clusters, and one signature is used from each cluster. Thereby:

To correct the flaws of the signature classifier, the classifier on templates is called upon. On grams, simply put. If the signature classifier matches the text with the signature and stumbles when there are few such signatures, then the template classifier reads the contents of the text more thoughtfully and, if the text is large enough, even a single text is enough to highlight a large number of classification features.

Based on grams up to 16 elements in length:

Some of the target texts are designed in accordance with ISO standards. There are many typical elements:

Some of the target documents contain information on the design:

Almost all contain stable phrases, for example:

It is a simplified implementation of the Naive Baess classifier (without normalization);

Generates up to 60 million classification grams;

It works quickly (state machine).

Grams stand out from the texts;

The relative frequency of grams per class is calculated;

If for different classes the relative frequency is very different (at times), the gram is a classification sign;

Rare grams found 1 time are discarded;

The training sample is classified, and according to those documents for which the classification was erroneous, additional grams are formed, and added to the general database of grams.

Grams stand out from the text;

The weights of all grams are summed for each class to which they belong;

The class with the highest total weights wins;

Wherein:

Benefits:

Disadvantages:

Why the estimates are left unnormalized:

No normalization is needed, which greatly simplifies the code;

Unnormalized estimates carry more information that is potentially useful for classification.

How to use them:

The classifier's estimates make good features for general-purpose classifiers (xgboost, SVM, ANN);

Moreover, general-purpose classifiers find the optimal normalization themselves.

The responses of the signature and template classifiers are combined into a single feature vector, which undergoes additional processing:

An xgboost model is built on the resulting feature vector; it improves accuracy by up to 3% over the original classifiers.

The essence of the regression:

The optimization criterion is the maximum area under the product of completeness (recall) and accuracy (precision) over the segment [0; 1]; in addition, a classifier output that falls outside the segment is counted as a false positive.
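The criterion above can be illustrated with a minimal sketch. It only estimates the area under the product of precision and recall over thresholds in [0; 1]; the function names, the midpoint rule, and the toy data are assumptions made for illustration, and the special handling of outputs outside the segment is not modeled here.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when scores >= threshold are predicted positive."""
    predicted = [y for s, y in zip(scores, labels) if s >= threshold]
    tp = sum(predicted)                 # labels are 1 (positive) or 0 (negative)
    positives = sum(labels)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / positives if positives else 0.0
    return precision, recall


def working_area(scores, labels, steps=100):
    """Midpoint-rule estimate of the area under precision * recall on [0, 1]."""
    area = 0.0
    for i in range(steps):
        threshold = (i + 0.5) / steps
        precision, recall = precision_recall_at(scores, labels, threshold)
        area += precision * recall / steps
    return area


# Toy data (hypothetical): the last positive example was pushed below zero,
# so recall can never reach 100% inside the working segment.
scores = [0.9, 0.8, 0.2, 0.1, -0.3]
labels = [1, 1, 0, 0, 1]
print(round(working_area(scores, labels), 3))
```

A regression that reshapes the scores would be judged by how much it increases this area.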

What it gives:

It maximizes the working area of the classifier. People see the familiar score as a percentage, from zero to one hundred, and the score is distributed so as to maximize both completeness and accuracy. The maximum of the product clearly lies around the cross, where neither completeness nor accuracy is too small; the regions on the right and left, where high completeness is multiplied by near-zero accuracy or high accuracy by near-zero completeness, are of no interest. Nobody pays money for them;

It pushes some of the examples below zero => a signal that the classifier cannot handle these examples => the classifier refuses to answer. From an engineering point of view this is an excellent property: the classifier itself honestly warns that yes, 1-2% of the good documents are in the discarded stream, but it does not know how to work with them, so use other methods. Or accept it.

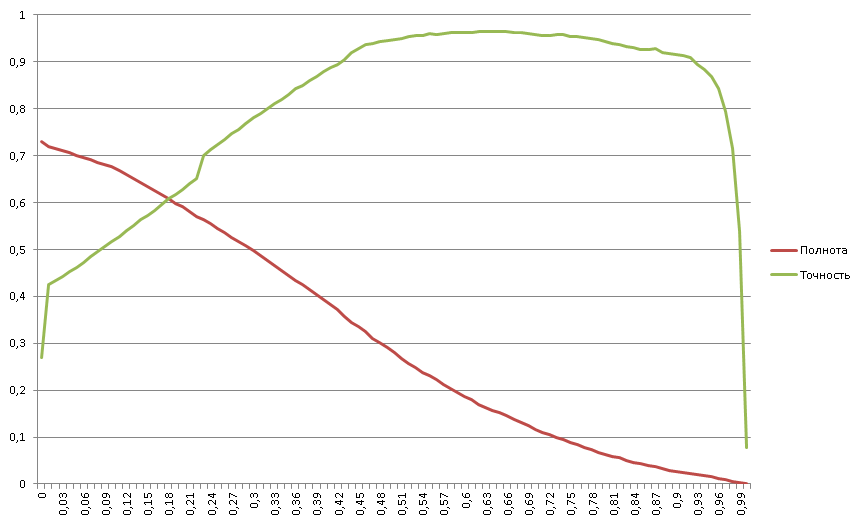

A gorgeous graph; the collapse on the right is especially pleasing. Recall that here positive examples are twenty times rarer in the stream than negative ones. Nevertheless, the completeness curve looks quite canonical: convex-concave with an inflection point in the middle, around 0.3. We will show below why this matters.

Completeness starts at 73% => the classifier cannot effectively handle 27% of all positive examples. These 27% ended up below zero precisely because of the regression optimization. For the business, it is better to report that we cannot work with these documents than to return false-negative answers for them. In return, the working area of the classifier starts at almost 30% accuracy, and those are numbers the business already likes;

Accuracy collapses from 96% to 0% => about 4% of the examples in the sample are labeled negative although they are actually positive (the markup completeness problem);

Five areas are clearly visible:

Splitting the range into areas makes it possible to develop additional classification tools for each area, especially for the 1st and the 5th.

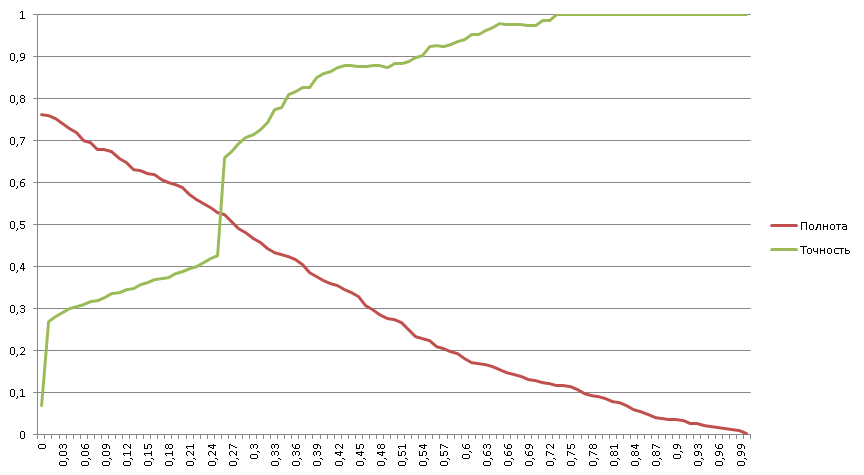

Here we solve the same binary classification problem, but now there are 182 negative examples for every positive one.

Completeness starts at 76% => the classifier cannot effectively handle 24% of all positive examples;

12% of all positive examples are recognized by the classifier unambiguously (100% accuracy);

Four areas are clearly visible:

The completeness curve is concave, with no inflection points => the classifier is highly selective (this corresponds to the right-hand part of the classical completeness curve with an inflection point in the middle).

In reality, there are quite a few classes for which not a single document has been labeled, and the only information available is the Customer's description of which documents should go into the class.

Building a training sample by hand is not feasible: among the 1.7 million documents in the database, only a few can be expected to fall under such a class, and possibly none at all.

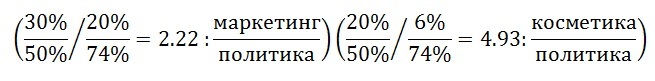

From the class name we see that the Customer is interested in documents related to “Marketing Research” and “Cosmetics”. To detect such documents, we need to:

Form a semantic core of texts on the given topics, in all languages of interest;

Find topics that are not related to the given class and use them as negative examples (for example, politics);

Find in the document database several sample documents that are partially or exactly related to the given class;

Have the Customer's assessors label the documents found;

Build a classifier.

We go to Wikipedia and find an article called, unsurprisingly, “marketing research”. At the bottom there is an inconspicuous link to its “category”. A category holds all Wikipedia articles on the topic plus nested categories with their own articles. All in all, a rich source of thematic texts.

But that is not all. The menu also has links to the corresponding articles and categories in other languages: texts on the same subject, but in the languages we are interested in. Or in all languages at once; the classifiers will sort it out themselves.

We have three topics:

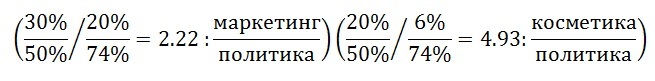

We apply the template classifier to these three classes. We process every document in the database and get:

These shares do not show the actual distribution of documents by topic; they show only the average weights of each class's features as picked out by the template classifier.

However, if a document has, say, a share distribution of 30%, 20%, 50%, we can argue that it contains more features of the target topics relative to the negative topic (politics), and so it is potentially interesting:

Processing the entire document database selected ~ 80 documents, of which:

Two fully matched the class:

~ 36% of all documents found are partially related to the class;

The rest are not related to the class at all.

It is important that the evaluation produced a labeled sample of ~ 80 documents containing both positive and negative examples.

A false-positive rate above 60% shows that politics alone is not enough as a negative example.

Solution:

Automatically find other topics that can serve as negative and, potentially, positive examples. To do this:

Automatically generate sets of texts on a large number of topics and select those that correlate (negatively or positively) with the labeled sample of ~ 80 documents.

In case anyone does not know yet: all of Wikipedia has already been kindly parsed and laid out in files in RDF format (DBPedia). Take it and use it. Of interest are:

Keep in mind that DBPedia does not replace Wikipedia as a source of texts for machine linguistics: an annotation is usually 1-2 paragraphs long and does not compare with most articles in volume. But nobody forbids downloading the articles you like, and their categories, from Wikipedia later on, right?

The search for correlated topics yields:

The correlated topics depend not only on the “Marketing research of cosmetics” class but also on the topics of the documents in the database (for negatively correlated topics). What the topics found allow you to do:

This post showed the technologies we use now and where we plan to move next (namely, ontologies). I take this opportunity to say that my group is hiring people who are starting or continuing to study machine linguistics; we are especially glad to see students and graduates of you-know-which universities.

Tasks to be Solved

There are two main tasks: binary classification and multiclass classification. Binary classification answers the question of whether a document is interesting at all, or is off-topic and not worth reading. The class imbalance here is about 1 to 20, that is, there are twenty bad documents for every good one. The training sample itself is also problematic: it is noisy in both completeness and accuracy. Noise in completeness means that not all good documents are labeled as good (FalseNegative); noise in accuracy means that not all documents labeled as good really are good (FalsePositive).

Multiclass classification asks us to determine the subject of a document and assign it to one of hundreds of thematic classes. The training sample for this task is very noisy in completeness but fairly clean in accuracy, since here the markup is done purely by hand rather than by heuristics, as in the first case. On the other hand, with so many classes we get to enjoy strong skews in the number of documents per class. The maximum recorded skew is more than 6 thousand: one class has over 6 thousand times more documents than another. Simply because the second class contains one document. Just one. And even that is not the largest skew we have, because there are classes with zero documents. The assessors found no suitable documents, so cope as best you can.

That is, our problems are as follows:

- Noisy training samples;

- Strong skews in class sizes;

- Classes represented by a small number of documents;

- Classes in which there are no documents at all (but you need to work with them).

We will solve these problems one by one: first we develop one classifier, the signature classifier; then another, the template classifier, which covers the weaknesses of the first; and then we polish everything with machine learning in the form of xgboost and regression. On top of this ensemble we roll out a method that covers the shortcomings of all of the above: working with no training samples at all.

Distributional Semantics (Word2Vec)

Google and Yandex know which post to show first when people ask about Word2Vec. So we give only a brief digest of that post and focus on what it does not cover. Of course, distributional semantics has other good methods: GloVe, AdaGram, Text2Vec, Seq2Vec and others. But I have not seen any strong advantage over W2V in them. If you learn to work with W2V vectors, you can get amazing results.

Word2Vec:

- Trains unsupervised on any texts;

- Associates each token with a vector of real numbers, such that:

  - For any two tokens, you can compute a distance metric * between them (the cosine measure);

  - For semantically related tokens, the measure is positive and tends to 1:

    - Interchangeable words (Skip-gram model);

    - Associated words (Bag-of-Words, CBOW, model).

* Not really
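A minimal sketch of the cosine measure follows, together with one reason the "metric" deserves its footnote: the derived distance 1 - cos can violate the triangle inequality. The vectors are toy values chosen for illustration, not real Word2Vec output.

```python
import math


def cosine(u, v):
    """Cosine measure between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_distance(u, v):
    """A distance-like value derived from the cosine measure."""
    return 1.0 - cosine(u, v)


u, v, w = [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]
direct = cosine_distance(u, w)
detour = cosine_distance(u, v) + cosine_distance(v, w)
# The detour through v is "shorter" than the direct path,
# which a true metric would never allow.
print(direct, detour)
```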

Interchangeable words

Enter word or sentence (EXIT to break): coffee

coffee 0.734483

tea 0.690234

tea 0.688656

cappuccino 0.666638

coffee 0.636362

cocoa 0.619801

espresso 0.599390

Associated words

Enter word or sentence (EXIT to break): coffee

beans 0.757635

instant 0.709936

tea 0.709579

coffe 0.704036

mellanrost 0.694822

sublemented 0.694553

ground 0.690066

coffee 0.680409

Multiple words (A + B)

Enter word or sentence (EXIT to break): mobile phone

cell 0.811114

phone 0.776416

smartphone 0.730191

phone 0.719766

mobile 0.717972

mobile 0.706131

Projection Relations (A-B + C)

Find the word that relates to Ukraine the way dollar relates to the USA (that is, what is the currency of Ukraine?):

Enter three words (EXIT to break): usa dollar Ukraine

hryvnia 0.622719

dollar 0.607078

hryvnia 0.597969

ruble 0.596636

Find the word that relates to germany the way France relates to france (that is, the translation of the word germany):

Enter three words (EXIT to break): france France germany

germany 0.748171

england 0.692712

netherlands 0.665715

united kingdom 0.651329
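The projection queries above can be sketched as follows. The three-dimensional vectors are made up for illustration (real Word2Vec vectors come from training), and the function name `analogy` is hypothetical. For the input triple `usa dollar ukraine` the sketch ranks the remaining words by their cosine measure to `dollar - usa + ukraine`.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c, vectors):
    """Rank words by closeness to vectors[b] - vectors[a] + vectors[c]."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    ranked = [(w, cosine(target, vectors[w])) for w in candidates]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Toy embedding (hypothetical values, 3 dimensions instead of 256).
vectors = {
    "usa":     [1.0, 0.0, 0.0],
    "dollar":  [1.0, 1.0, 0.0],
    "ukraine": [0.0, 0.0, 1.0],
    "hryvnia": [0.0, 1.0, 1.0],
    "ruble":   [0.5, 1.0, 0.5],
    "tea":     [-1.0, 0.1, 0.0],
}

for word, score in analogy("usa", "dollar", "ukraine", vectors):
    print(word, round(score, 6))
```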

Advantages and disadvantages:

- Learns quickly from unprepared texts:

  - 1.5 months per 100 GB training file, Bag-of-Words and Skip-gram models, on 24 cores;

- Reduces dimensionality:

  - A dictionary of 2.5 million tokens shrinks to a vector of 256 real numbers;

- Vector operations degrade quickly:

  - The sum of 3-5 words is already practically useless => not applicable to processing whole texts;

- The elements of a vector carry no meaning on their own, only the distances between vectors do => the measure between tokens is one-dimensional. This flaw is the most frustrating one. We seem to have as many as 256 real numbers, occupying a whole kilobyte of memory, yet the only operation available is to compare the vector with another one and get the cosine measure as an estimate of their proximity. Process two kilobytes of data, get 4 bytes of result. And nothing more.

Semantic vector

- The coffee example shows that tokens are grouped by meaning (drinks);

- A sufficient number of clusters has to be formed;

- The clusters do not have to be labeled.

We then get a set of clusters in which tokens are grouped by meaning and, if necessary, each cluster can be given a label => each cluster has an independent meaning.

The construction of the semantic vector is described in more detail in this post.

Text signature

The text consists of tokens, each of which belongs to its own cluster;

We can count the occurrences of each token in the text and convert them into the number of occurrences of each cluster (the sum over all tokens of the cluster);

Unlike the dictionary (2.5 million tokens), the number of clusters is far smaller (2048) => dimensionality reduction kicks in;

Let's move from absolute token counts in the text to their relative numbers (shares). Then:

- The share does not depend on the length of the text, only on how frequently the tokens occur in it => texts of different lengths become comparable;

- The share characterizes the subject of the text => the subject is determined by the words that are used;

We normalize the shares of a specific text by the expectation and variance computed over the entire database:

This raises the importance of rare clusters (not found in every text, for example names) relative to frequent clusters (for example pronouns, verbs and other connecting words);

The text is defined by its signature: a vector of 2048 real numbers, interpreted as the normalized shares of tokens from the thematic clusters that make up the text.
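The steps above can be sketched roughly as follows. The token-to-cluster mapping, the two-cluster toy data and the function names are hypothetical, and the normalization is assumed to be a plain z-score (share minus mean, divided by standard deviation), which the text suggests but does not spell out.

```python
import statistics
from collections import Counter


def cluster_shares(tokens, token_to_cluster, n_clusters):
    """Share of each cluster among the known tokens of one text."""
    counts = Counter(token_to_cluster[t] for t in tokens if t in token_to_cluster)
    total = sum(counts.values())
    if total == 0:
        return [0.0] * n_clusters
    return [counts.get(c, 0) / total for c in range(n_clusters)]


def corpus_stats(all_shares):
    """Per-cluster mean and standard deviation over the whole database."""
    columns = list(zip(*all_shares))
    mean = [statistics.mean(col) for col in columns]
    std = [statistics.pstdev(col) for col in columns]
    return mean, std


def signature(shares, mean, std):
    """Normalized shares; clusters with zero spread contribute nothing."""
    return [(s - m) / d if d > 0 else 0.0 for s, m, d in zip(shares, mean, std)]


# Toy setup: 2 clusters instead of 2048.
token_to_cluster = {"coffee": 0, "tea": 0, "phone": 1}
shares = cluster_shares(["coffee", "tea", "phone", "unknown"], token_to_cluster, 2)
mean, std = corpus_stats([[0.5, 0.5], [1.0, 0.0]])
print(shares, signature([1.0, 0.0], mean, std))
```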

Signature Classifier

Each text document is assigned a signature;

The labeled training sample of texts becomes a labeled database of signatures;

For the text under study, a signature is computed and compared in turn with the signature of every file in the training set;

The classification decision is made by kNN (k nearest neighbors), with k = 3.
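A minimal sketch of this lookup is shown below. It assumes the cosine measure for comparing signatures and a simple majority vote among the 3 nearest neighbors; the voting rule and the two-dimensional toy signatures are assumptions made for illustration.

```python
import math
from collections import Counter


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def knn_classify(query, database, k=3):
    """database is a list of (signature, label) pairs; returns label and neighbors."""
    ranked = sorted(database, key=lambda item: cosine(query, item[0]), reverse=True)
    neighbors = ranked[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0], neighbors


database = [
    ([1.0, 0.0], "pos"),
    ([0.9, 0.1], "pos"),
    ([0.8, 0.3], "pos"),
    ([0.0, 1.0], "neg"),
    ([0.1, 0.9], "neg"),
]
label, neighbors = knn_classify([1.0, 0.1], database)
print(label, neighbors)
```

Because the neighbors themselves are returned, the most similar training documents stay available for further analysis.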

Advantages and disadvantages

Benefits:

No information is lost to generalization (the comparison is made against every original file of the training set). The essence of machine learning is to find patterns, isolate them, and then work only with them, drastically shrinking the model compared to the training sample. Whether the patterns are true or false, artifacts of the learning process or of insufficient training data, does not matter. What matters is that the original training information is no longer available: you have to work with the model alone. With the signature classifier we can afford to keep the entire training set in memory (not quite; more on that later, when we get to tuning the classifier). Above all, we can determine which example from the training sample our document resembles most and, if necessary, bring in additional analysis of the situation. No generalization means no loss of information;

Acceptable speed: about 0.3 seconds to scan a signature database of 1.7 million documents in 24 threads. And that is without SIMD instructions, although cache hits are already maximized. And anyway, the old man knows his C;

The ability to detect fuzzy duplicates:

- For free (signatures are compared anyway at the classification stage);

- High selectivity (only potential duplicates are analyzed: texts composed mostly of the same tokens, that is, with a high signature proximity);

- Tunable (you can lower the threshold and get not just duplicates but thematically and stylistically close texts);

Normalized scores in (0; 1];

The training set is easy to extend with new documents (and documents are just as easy to exclude from it). New training samples are plugged in on the fly, so the quality of the classifier grows as it works. It learns. Removing documents from the database is handy for experiments, for building training samples and so on: just exclude the signature of a particular document from the signature database and classify that document, and you get a fair estimate of the classifier's quality;

Disadvantages:

The relative order of tokens (phrases) is not taken into account at all. And phrases are clearly the strongest classifying feature;

High minimum RAM requirements (35+ GB);

It works poorly (or not at all) when a class has only a handful of samples. Imagine the surface of a 256-dimensional sphere... No, better not. There is simply a region where documents on the subject of interest should lie. If that region contains many points (signatures of training documents), a new document is more likely to land close to three of them (kNN) than if 1-2 points sit there in proud isolation. So there is a chance of working even with a single positive example for a class, but that chance, of course, materializes less often than we would like.

Accuracy and completeness (binary classification)

How to calculate grade for binary classification? Very simple - we take the average distance to the 3 best signatures from each class (positive and negative) and evaluate it as:

Positive / (positive + negative).

Therefore, most estimates are in a very narrow range, and people, as usual, want to see numbers interpreted as percentages. That 0.54 is very good, and 0.46 is very bad - to explain for a long time and not productively. Nevertheless, the graphics look good, a classic cross.
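The score formula above can be sketched in a few lines. The sketch assumes that the inputs are positive cosine measures (larger means closer) between the document and the training signatures of each class; the function name and the sample values are hypothetical.

```python
def binary_score(pos_sims, neg_sims, k=3):
    """Positive / (positive + negative) over the k best matches per class."""
    best_pos = sorted(pos_sims, reverse=True)[:k]
    best_neg = sorted(neg_sims, reverse=True)[:k]
    positive = sum(best_pos) / len(best_pos)
    negative = sum(best_neg) / len(best_neg)
    return positive / (positive + negative)


# Similarities of one document to positive and negative training signatures.
score = binary_score([0.9, 0.8, 0.7, 0.1], [0.6, 0.5, 0.4])
print(round(score, 4))
```

When the best matches of both classes are about equally close, the score lands near 0.5, which is why the raw estimates crowd into a narrow range.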

Fine-tuning the signature classifier

As the “accuracy and completeness” graph shows, the working area of the classifier is quite narrow. The solution is to label the source training text that Word2Vec learns from. To do this, tags identifying the document's class are embedded in the text of the document:

As a result, the clusters are no longer scattered randomly in the vector space: clusters of one class gravitate toward each other and lie as far as possible from the clusters of the opposite class. Clusters typical of both classes sit in the middle.

Memory requirements are lowered by shrinking the signature database:

The signatures of training sets with an excessive number of samples (over a million) are sequentially clustered into a large number of clusters, and one signature is taken from each cluster. As a result:

- A relatively uniform coverage of the vector space by sample signatures is preserved;

- The signatures of samples excessively close to each other, and, accordingly, of little use for classification, are deleted.

Signature classifier: summary

- Fast;

- Easy to extend;

- Also detects duplicates;

- Ignores the relative position of tokens;

- Does not work when the training set has few examples.

Template Classifier

To correct the flaws of the signature classifier, the classifier on templates is called upon. On grams, simply put. If the signature classifier matches the text with the signature and stumbles when there are few such signatures, then the template classifier reads the contents of the text more thoughtfully and, if the text is large enough, even a single text is enough to highlight a large number of classification features.

Template Classifier:

Based on grams up to 16 elements in length:

Some of the target texts are designed in accordance with ISO standards. There are many typical elements:

- Headings

- Signatures

- Reconciliation sheets and archive tags;

Some of the target documents contain information on the design:

- Xml (including all modern office standards);

- Html

Almost all contain stable phrases, for example:

- “Strictly confidential, burn before reading”;

It is a simplified implementation of the naive Bayes classifier (without normalization);

Generates up to 60 million classification grams;

It works quickly (state machine).

Classifier construction

Grams stand out from the texts;

The relative frequency of grams per class is calculated;

If the relative frequency differs greatly between classes (by several times), the gram is a classification feature;

Rare grams found 1 time are discarded;

The training sample is classified, and according to those documents for which the classification was erroneous, additional grams are formed, and added to the general database of grams.
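The construction steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the actual implementation: the gram length limit, the `min_ratio` threshold, the use of relative frequency as the weight, and all function names are hypothetical, and the final step (adding grams from misclassified training documents) is omitted.

```python
from collections import Counter, defaultdict


def extract_grams(tokens, max_len=3):
    """All contiguous token grams of length 1..max_len."""
    return [tuple(tokens[i:i + n])
            for n in range(1, max_len + 1)
            for i in range(len(tokens) - n + 1)]


def build_gram_weights(docs, max_len=3, min_ratio=3.0, min_count=2):
    """docs: list of (class_name, tokens). Returns {gram: {class: weight}}."""
    counts = defaultdict(Counter)  # class -> gram counts
    totals = Counter()             # gram -> count over all classes
    for cls, tokens in docs:
        grams = extract_grams(tokens, max_len)
        counts[cls].update(grams)
        totals.update(grams)
    class_sizes = {cls: sum(c.values()) for cls, c in counts.items()}
    weights = {}
    for gram, total in totals.items():
        if total < min_count:      # discard grams found only once
            continue
        freqs = {cls: counts[cls][gram] / class_sizes[cls] for cls in counts}
        top = max(freqs, key=freqs.get)
        rest = max((f for c, f in freqs.items() if c != top), default=0.0)
        # Keep the gram only if its best class beats the others by a factor.
        if rest == 0.0 or freqs[top] / rest >= min_ratio:
            weights[gram] = {top: freqs[top]}
    return weights


docs = [
    ("cosmetics", "lipstick sales grew lipstick sales grew".split()),
    ("politics", "election results grew election results".split()),
]
weights = build_gram_weights(docs)
print(sorted(weights))
```

In this toy run the word "grew" occurs in both classes with similar relative frequency, so it is not kept as a classification feature, while class-specific grams are.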

Classifier Usage

Grams stand out from the text;

The weights of all grams are summed for each class to which they belong;

The class with the highest total weights wins;

Wherein:

- A class that had more training texts usually forms more classifying grams;

- A rare class (few training texts) generates few grams, but their average weight is higher (due to the predominance of long grams unique to this class);

- A text with no obvious predominance of rare long grams will be assigned to a class with a large number of training examples (the posterior distribution tends to the prior). This is how the original naive Bayes and, in general, all universal classifiers work;

- A text that has long unique grams of a rare class is more likely to be assigned to this rare class (a distortion deliberately introduced into the posterior distribution to increase selectivity on rare classes). We are paid for results, and the harder the result is to get, the more it pays. The hardest results are precisely the rare thematic classes. Therefore, it makes sense to skew the classifier so that it finds rare classes better, even at the cost of quality on large, popular classes. After all, the signature classifier does a great job with popular classes.
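The usage steps and the effect of long rare grams can be illustrated with a small sketch. The gram table below is made up by hand, and a plain dictionary lookup stands in for the state machine mentioned earlier; `GRAM_WEIGHTS`, `classify` and the weights themselves are hypothetical.

```python
from collections import defaultdict

# Hypothetical gram table: gram -> (class, weight). The long gram of the
# rare class carries a larger weight, as described in the text.
GRAM_WEIGHTS = {
    ("market",): ("politics", 0.2),
    ("research",): ("politics", 0.1),
    ("marketing", "research", "of", "cosmetics"): ("cosmetics", 1.5),
}


def classify(tokens, gram_weights, max_len=4):
    """Sum gram weights per class and return (winner, scores)."""
    scores = defaultdict(float)
    for n in range(1, max_len + 1):
        for i in range(len(tokens) - n + 1):
            hit = gram_weights.get(tuple(tokens[i:i + n]))
            if hit:
                cls, weight = hit
                scores[cls] += weight
    winner = max(scores, key=scores.get) if scores else None
    return winner, dict(scores)


common = "market research market".split()
rare = "market report marketing research of cosmetics".split()
print(classify(common, GRAM_WEIGHTS))
print(classify(rare, GRAM_WEIGHTS))
```

A text with only short common grams goes to the large class, while a single long gram of the rare class is enough to outweigh several short ones.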

Advantages and disadvantages

Benefits:

- Relatively fast and compact;

- It parallels well;

- Able to learn from a single document in the training sample per class;

Disadvantages:

- The scores are unnormalized and, in their original form, do not satisfy the pairwise criterion (there is no guarantee that a score with a larger weight is more probable);

- A huge number of grams are generated that have a near-zero probability of being encountered in real documents (other than duplicates). On the other hand, once such a rare gram is found in a document, a lot of interesting things can be said about that document right away. And the server has enough memory, so this is not a problem;

- Huge memory requirements at the training stage. Yes, a cluster is needed, but we have those (see the postscript to this post).

Unnormalized scores

Why:

There is no need to normalize, which greatly simplifies the code;

Unnormalized estimates contain more information that is potentially available for classification.

How to use:

The classifier's scores make good features for universal classifiers (xgboost, SVM, ANN);

Moreover, universal classifiers determine the optimal normalization themselves.

Final generalized classifier (multiclass)

The responses of the signature and template classifiers are combined into a single feature vector, which is subjected to additional processing:

- Normalization of estimates to unity, to obtain their relative share per class;

- Grouping rare classes to obtain a sufficient number of training examples per group;

- Taking the difference of group estimates to obtain a flatter surface for gradient descent.

Using the resulting feature vector, an xgboost model is built, which improves accuracy by up to 3% relative to the original classifiers.
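The feature processing listed above might look roughly like the sketch below. It assumes that "difference of group estimates" means pairwise differences between group shares within each classifier; that reading, the group names and all function names are assumptions. Training xgboost on the resulting vectors is not shown.

```python
from itertools import combinations


def normalize(scores):
    """Scale raw class scores so that they sum to one."""
    total = sum(scores.values())
    return {c: (s / total if total else 0.0) for c, s in scores.items()}


def group_scores(shares, groups):
    """Sum the shares of the classes merged into each group."""
    return {g: sum(shares.get(cls, 0.0) for cls in members)
            for g, members in groups.items()}


def features_for(scores, groups):
    """Group shares followed by pairwise differences between groups."""
    shares = group_scores(normalize(scores), groups)
    names = sorted(groups)
    diffs = [shares[a] - shares[b] for a, b in combinations(names, 2)]
    return [shares[g] for g in names] + diffs


def build_feature_vector(signature_scores, template_scores, groups):
    """Concatenate the features of both classifiers into one vector."""
    return (features_for(signature_scores, groups)
            + features_for(template_scores, groups))


# Hypothetical classes: "c" and "d" are rare and share one group.
groups = {"big_a": ["a"], "big_b": ["b"], "rare": ["c", "d"]}
signature_scores = {"a": 2.0, "b": 1.0, "c": 0.5, "d": 0.5}
template_scores = {"a": 10.0, "b": 30.0, "c": 60.0, "d": 0.0}
vector = build_feature_vector(signature_scores, template_scores, groups)
print(vector)
```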

Binary Generalized Classifier

In essence, it is a regression over the outputs of:

- the signature classifier;

- the template classifier;

- XgBoost on the outputs of the signature and template classifiers.

The optimization criterion is the maximum area under the product of completeness and accuracy on the segment [0; 1]; in addition, any classifier output that falls outside the segment is counted as a false positive.

What gives:

It maximizes the working area of the classifier. People see a familiar score in the form of a percentage, from zero to one hundred, and this score is distributed so as to maximize both completeness and accuracy. Clearly, the maximum of the product lies at the cross, where neither completeness nor accuracy is too small; the areas on the right and left, where high completeness is multiplied by almost no accuracy and high accuracy by almost no completeness, are uninteresting. Nobody pays for them;

It throws some of the examples into the area below zero => this is a signal that the classifier cannot handle these examples => the classifier refuses to answer. From an engineering point of view, this is an excellent property: the classifier itself honestly warns that yes, there are 1-2% of good documents in the discarded stream, but it does not know how to work with them, so use other methods. Or resign yourself.
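The optimization criterion described above can be approximated numerically as in the sketch below. It is one possible reading, not the actual implementation: it assumes that a score outside [0; 1] is counted as a false positive at every threshold, and it integrates with a simple midpoint rule; the function name `pr_product_area` and the toy data are hypothetical.

```python
def pr_product_area(scores, labels, steps=100):
    """Approximate the area under precision(t) * recall(t) for t in [0, 1].

    Assumption: any score outside [0, 1] is counted as a false positive
    at every threshold.
    """
    positives = sum(labels)
    outside = sum(1 for s in scores if s < 0.0 or s > 1.0)
    area = 0.0
    for k in range(steps):
        t = (k + 0.5) / steps  # midpoint of the k-th threshold interval
        tp = sum(1 for s, y in zip(scores, labels) if t <= s <= 1.0 and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if t <= s <= 1.0 and y == 0)
        fp += outside
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / positives if positives else 0.0
        area += precision * recall / steps
    return area


labels = [1, 1, 0, 0]
in_range = [0.9, 0.8, 0.1, 0.2]       # both positives inside the segment
pushed_out = [0.9, -0.3, 0.1, 0.2]    # one positive thrown below zero
area_in = pr_product_area(in_range, labels)
area_out = pr_product_area(pushed_out, labels)
print(area_in, area_out)
```

A regression fitted to maximize this quantity is rewarded for placing well-separated examples inside the segment and pays a penalty for every example it pushes outside.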

Binary generalized classifier, 1:20

A gorgeous graph; the drop-off on the right is especially pleasing. As a reminder, positive examples in this stream are twenty times rarer than negative ones. Nevertheless, the completeness graph looks quite canonical: convex-concave with an inflection point in the middle, around 0.3. Why this matters will be shown below.

Binary Generalized Classifier, 1:20, Analysis

Completeness starts at 73% => 27% of all positive examples cannot be handled effectively by the classifier. These 27% of positive examples ended up below zero precisely because of the regression optimization described above. For a business, it is better to report that we cannot work with these documents than to give false-negative responses on them. In return, the working area of the classifier starts at almost 30% accuracy, and these are numbers that business already likes;

Accuracy drops off from 96% to 0% => about 4% of the examples in the sample are marked as negative although in reality they are positive (the markup completeness problem);

Five areas are clearly visible:

- Classifier failure area (less than zero);

- two quasilinear regions;

- Saturation area (maximum efficiency);

- Drop-off area (markup completeness problem).

Dividing into areas makes it possible to develop additional classification tools for each of the areas, especially for the 1st and 5th.

Binary generalized classifier, 1:182

Here we solve the same problem of binary classification, but at the same time for one positive example there are already 182 negative ones.

Binary Generalized Classifier, 1:182, Analysis

Completeness starts at 76% => 24% of all positive examples cannot be handled effectively by the classifier;

12% of all positive examples are unambiguously recognized by the classifier (100% accuracy);

Four areas are clearly visible:

- Classifier failure area (less than zero);

- two quasilinear regions;

- Area of 100% accuracy.

The completeness graph is concave without inflection points => the classifier has high selectivity (corresponds to the right side of the classical completeness graph with an inflection point in the middle).

Building a classifier for classes that do not have training examples

The reality is that there is a fairly large number of classes to which not a single document has been assigned, and the only information available is the Customer's description of which documents he wants classified into this class.

Constructing a training sample manually is not feasible: among the 1.7 million documents in the database, only a few can be expected to fall under such a class, and possibly not even one.

Class “Marketing research of cosmetics”

From the analysis of the class name, we see that the Customer is interested in documents related to “Marketing Research” and “Cosmetics”. To detect such documents, it is necessary to:

Form a semantic core of texts on the given topics, in all languages of interest;

Find topics that are not related to the given class and use them as negative examples (for example, politics);

Find in the database several sample documents that are partially or fully related to the given class;

Have the found documents marked up by the Customer’s assessors;

Build a classifier.

Building the semantic core of texts

We go to Wikipedia and find an article that is called, oddly enough, “marketing research”. At the bottom there is an inconspicuous link to the “category”. The category contains all Wikipedia articles on this topic, plus nested categories with their articles. All in all, a rich source of thematic texts.

But that is not all. The menu also has links to similar articles and categories in other languages: texts on the same subject, but in the languages of interest to us. Or in all languages at once - the classifiers will sort it out themselves.

Using semantic cores

We have three topics:

- Marketing research;

- Cosmetics;

- Politics.

We use a template classifier for these three classes. We process all the documents in the database of documents and get:

- Marketing research 20%;

- Cosmetics 6%;

- Politics 74%.

These shares do not show the actual distribution of documents by topic; they only show the average weights of the features of each class extracted by the template classifier.

However, if a document has a distribution of shares of, say, 30%, 20%, 50%, it can be argued that it contains more features of the target topics relative to the negative topic (politics), so this text is potentially interesting:
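The comparison against the database-wide averages can be sketched as below. The exact selection rule is not given in the post; here a document is assumed to be a candidate when every target topic is above its average share and every negative topic is below it. The function name and the rule itself are hypothetical.

```python
# Average shares over the whole database, as quoted in the text.
BASELINE = {"marketing research": 0.20, "cosmetics": 0.06, "politics": 0.74}


def is_potentially_interesting(doc_shares, baseline, negative_topics):
    """True if target topics are above and negative topics below the baseline."""
    for topic, share in doc_shares.items():
        if topic in negative_topics:
            if share >= baseline[topic]:
                return False
        elif share <= baseline[topic]:
            return False
    return True


doc = {"marketing research": 0.30, "cosmetics": 0.20, "politics": 0.50}
interesting = is_potentially_interesting(doc, BASELINE, {"politics"})
print(interesting)
```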

Document Search Results

As a result of processing the entire database of documents, ~80 documents were selected, of which:

Two turned out to be fully consistent with the class. Both:

- Were not found earlier when processing documents manually;

- Are in languages other than the language of the entry point into the semantic core (Russian);

~ 36% of all documents found are partially related to the class;

The rest are not related to the class at all.

It is important that as a result of the evaluation, a marked-up sample of ~ 80 documents containing both positive and negative examples was obtained.

Classifier construction

More than 60% false positives indicates that politics alone is not enough as a negative example.

Solution:

Automatically find other text topics that can be used as negative and, potentially, positive examples. To do this:

Automatically generate sets of texts on a large number of topics and select those that correlate (negatively or positively) with the marked-up sample of ~ 80 documents.
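One way to select correlated topics is sketched below, assuming that each candidate topic yields a score for every document of the marked-up sample and that plain Pearson correlation with the assessors' labels is used. The correlation measure, the threshold, the topic names and the numbers are all hypothetical illustrations.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def select_topics(topic_scores, labels, threshold=0.5):
    """Keep topics whose scores correlate with labels, positively or negatively."""
    selected = {}
    for topic, scores in topic_scores.items():
        r = pearson(scores, labels)
        if abs(r) >= threshold:
            selected[topic] = r
    return selected


# Assessor labels for five marked-up documents (1 = related to the class).
labels = [1, 1, 0, 0, 0]
topic_scores = {
    "perfumery": [0.8, 0.7, 0.1, 0.2, 0.1],
    "elections": [0.0, 0.1, 0.7, 0.6, 0.9],
    "weather": [0.3, 0.2, 0.3, 0.2, 0.3],
}
selected = select_topics(topic_scores, labels)
print(selected)
```

Topics with a strong positive correlation are candidates for extra positive examples, and topics with a strong negative correlation are candidates for extra negative ones.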

Ontology DBPedia

In case anyone does not know yet, the whole of Wikipedia has already been kindly parsed and laid out in files in RDF format. Take it and use it. Of interest:

- ShortAbstracts - a brief abstract of the article;

- LongAbstracts - long abstract of the article;

- Homepages - links to external resources, home pages;

- Article Categories - page category;

- Categorylabels - readable category names;

- Externallinks - links to external resources on the topic;

- Interlanguagelinks - links to the same article in other languages.

It is worth remembering that DBPedia does not replace Wikipedia as a source of texts for machine linguistics, since an abstract is usually 1-2 paragraphs long and cannot compare in volume with most articles. But nothing prevents us from downloading the articles we like, and their categories, from Wikipedia later, right?

[Expected] DBPedia correlated subject search results

The search results for correlated topics will be:

- Text sets in all languages on given topics;

- Assessment of the degree of correlation of texts in relation to the class;

- Human-readable (verbal) description of topics.

At the same time, the correlated topics depend not only on the “Marketing research of cosmetics” class, but also on the topics of the documents contained in the database (for topics with negative correlation). This makes it possible to use the found topics:

- for manual construction of classifiers on other topics;

- as additional features for texts classified by the final generalized classifier;

- To create additional dimensions of text similarity metrics in the vector space of the signature classifier: here I refer to my article at Dialogue 2015, “Learning by analogy in mixed ontological networks”, and to the corresponding post.

P.S.

This post has shown the technologies that we use at the moment and where we plan to move in the future (namely, ontologies). I take this opportunity to say that my group is recruiting people who are beginning or continuing to study machine linguistics; students and graduates of you-know-which universities are especially welcome.