Dual WAN and Netwatch in MikroTik: implementation specifics

“If a MikroTik doesn’t work in a simple configuration, then you don’t know how to cook it... or you have clearly missed something.”

How failover and Netwatch work together: a look inside.

Almost every company that has grown to a certain size starts to demand reliable communications. Among other things, the customer often wants a fault-tolerant “Dual WAN” setup and VoIP telephony, fault-tolerant as well, of course. Plenty of guides and articles have been written on each topic separately, but it suddenly turned out that not everyone can combine the two.

Habr already has an article, “Mikrotik. Failover. Load Balancing” by vdemchuk. As it turned out, many people have used it as a source of copy-paste router configs.

It is a good, working solution, but SIP clients on the LAN that connect to an external IP PBX through NAT lost their connection when the uplink switched. The problem is well known. It is caused by the connection tracker, which remembers existing outbound connections and keeps their state regardless of other conditions.

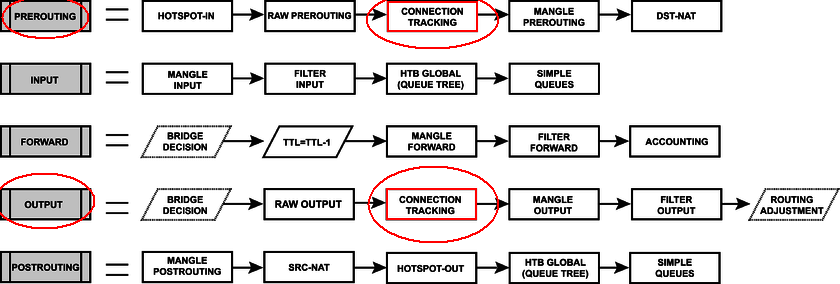

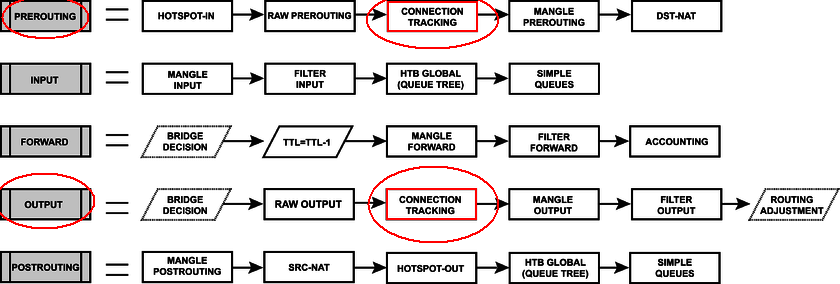

You can understand why this happens by looking at the packet flow diagram:

For transit traffic, connection tracking is performed in only one chain, prerouting, that is, before the route and the outgoing interface have been selected. At this stage it is not yet known through which interface the packet will leave for the Internet, so with several WAN interfaces the source IP cannot be tracked. The mechanism records established connections after the fact: it stores an entry and keeps it while packets continue to flow through the connection, until the configured timeout expires.
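To check how long the router keeps such entries, the connection-tracking settings can be printed from the terminal. This is a sketch for RouterOS v6; the timeout property names it shows (for example the UDP timeouts) may differ between versions. As long as a phone keeps sending keepalives or re-registrations, its entry is refreshed and typically never reaches the timeout on its own.

```routeros
# Print global connection-tracking settings, including the UDP timeouts
# that govern how long an idle SIP-over-UDP entry stays in the table
/ip firewall connection tracking print
```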

This behavior is typical not only of MikroTik routers but of most Linux-based systems that perform NAT.

As a result, when the link through WAN1 goes down, the traffic is obediently routed through WAN2, but the source IP of packets passing through NAT stays the same, the address of the WAN1 interface, because the connection tracker already has an entry for this connection. Naturally, replies to these packets are sent to the WAN1 address, which has already lost contact with the outside world. In the end the connection appears to exist, but in fact it does not. Meanwhile, all new connections are established correctly.

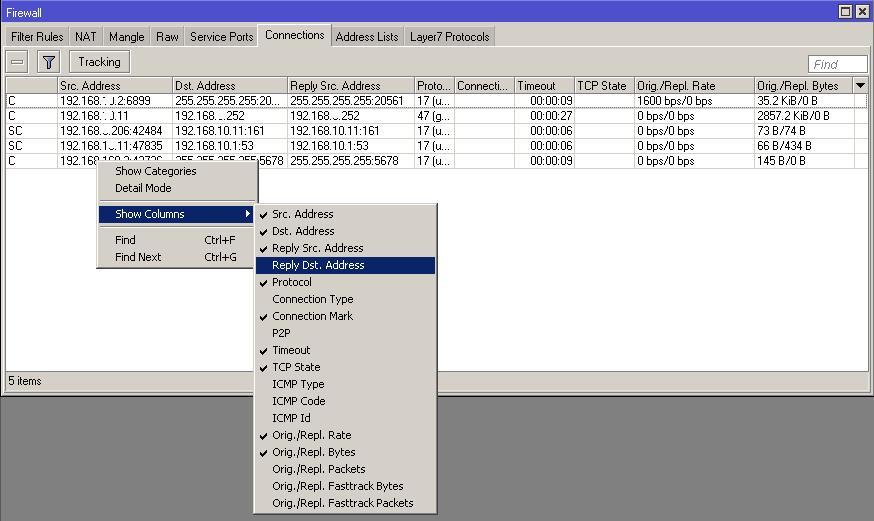

Hint: you can see which addresses NAT translates from and to in the “Reply Src. Address” and “Reply Dst. Address” columns. These columns can be enabled in the Connections table via the right-click menu.
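The same information is available from the terminal. A minimal sketch: it lists the SIP entries together with their reply addresses. After a failover, a stale entry would still show the WAN1 address (10.100.1.1 in this article's example config) in `reply-dst-address`.

```routeros
# Show SIP connections with their original and reply (post-NAT) addresses
/ip firewall connection print detail where dst-address~":5060"
```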

At first glance, the solution looks simple: when the link switches, reset the previously established SIP connections so that they are re-established with the new source IP. Fortunately, a simple script for this is circulating on the Internet.


Script

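```routeros
# Remove every tracked connection whose destination contains ":5060" (the SIP port)
:foreach i in=[/ip firewall connection find dst-address~":5060"] do={ /ip firewall connection remove $i }
```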
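A slightly more defensive variant, offered as a sketch rather than the author's script: it logs each entry before removing it and wraps the removal in `:do { } on-error={ }`, so an entry that expires between `find` and `remove` does not abort the whole loop. It assumes a RouterOS release recent enough to support `on-error`.

```routeros
# Sketch: log and remove SIP entries, tolerating entries that vanish mid-loop
:foreach i in=[/ip firewall connection find dst-address~":5060"] do={
    :do {
        :log warning ("clear-SIP-connections: " . [/ip firewall connection get $i src-address] . " -> " . [/ip firewall connection get $i dst-address])
        /ip firewall connection remove $i
    } on-error={
        :log info "clear-SIP-connections: entry already gone, skipping"
    }
}
```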

Three steps to failure

Step one. The copy-pasters faithfully transfer the recursive-routing failover config:

Routing setup from the article “Mikrotik. Failover. Load Balancing”

```routeros
# Set up the provider networks
/ip address add address=10.100.1.1/24 interface=ISP1
/ip address add address=10.200.1.1/24 interface=ISP2

# Set up the local interface
/ip address add address=10.1.1.1/24 interface=LAN

# Hide everything leaving the local network behind NAT
/ip firewall nat add src-address=10.1.1.0/24 action=masquerade chain=srcnat

### Failover with deeper channel analysis ###

# Use the scope parameter to define recursive paths to hosts 8.8.8.8 and 8.8.4.4
/ip route add dst-address=8.8.8.8 gateway=10.100.1.254 scope=10
/ip route add dst-address=8.8.4.4 gateway=10.200.1.254 scope=10

# Two default gateways via hosts whose paths are resolved recursively
/ip route add dst-address=0.0.0.0/0 gateway=8.8.8.8 distance=1 check-gateway=ping
/ip route add dst-address=0.0.0.0/0 gateway=8.8.4.4 distance=2 check-gateway=ping
```
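Before moving on, it is worth confirming that the recursion actually resolved. A sketch: `print detail` shows a `gateway-status` field for each default route, which for the primary route should read something like `8.8.8.8 recursive via 10.100.1.254 ISP1`; the exact wording may vary between RouterOS versions.

```routeros
# Show both default routes and how their gateways were resolved
/ip route print detail where dst-address=0.0.0.0/0
```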


Step two. Track the switchover event. With what? With “/tool netwatch”, naturally! An attempt to track the failure of the WAN1 gateway usually looks like this:

Netwatch config

```routeros
# Ping 8.8.8.8 and clear SIP connections whenever its state changes
/tool netwatch
add comment="Check Main Link via 8.8.8.8" host=8.8.8.8 timeout=500ms \
    down-script=":log warning \"WAN1 DOWN\"; :foreach i in=[/ip firewall connection find dst-address~\":5060\"] do={ :log warning \"clear-SIP-connections: clearing connection src-address: \$[/ip firewall connection get \$i src-address] dst-address: \$[/ip firewall connection get \$i dst-address]\"; /ip firewall connection remove \$i }" \
    up-script=":log warning \"WAN1 UP\"; :foreach i in=[/ip firewall connection find dst-address~\":5060\"] do={ :log warning \"clear-SIP-connections: clearing connection src-address: \$[/ip firewall connection get \$i src-address] dst-address: \$[/ip firewall connection get \$i dst-address]\"; /ip firewall connection remove \$i }"
```


Step three. Verification.

The administrator brings down the first uplink, WAN1, and runs the script manually. The SIP clients reconnect. Does it work? It works!

The admin brings WAN1 back up and runs the script manually. The SIP clients reconnect. Does it work? It works!

Fail

In a real environment, this config refuses to work. Repeating step three over and over drives the admin to despair, and we hear: “Your MikroTik doesn’t work!”

Debriefing

It all comes down to not understanding how the Netwatch utility works. With recursive routing specifically, the utility simply pings the given host according to the main routing table, using whatever routes are currently active.

Let’s do an experiment. Disable the main link, WAN1, and look at /tool netwatch. We will see that host 8.8.8.8 still has the UP status.
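The experiment can be reproduced from the terminal roughly as follows. A sketch, assuming the WAN1 uplink is the interface named ISP1 in the routing config and that the Netwatch entry for 8.8.8.8 already exists:

```routeros
# Simulate a WAN1 failure (assumes WAN1 is the interface named ISP1)
/interface disable ISP1
# The /32 route to 8.8.8.8 via 10.100.1.254 is now inactive...
/ip route print where dst-address=8.8.8.8/32
# ...so pings to 8.8.8.8 follow the remaining default route via WAN2 and succeed
/ping 8.8.8.8 count=3
# Netwatch therefore keeps reporting the host as up
/tool netwatch print
# Bring WAN1 back
/interface enable ISP1
```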

For comparison, the check-gateway=ping option works on each route individually, including recursive ones, and makes the route itself active or inactive.

Netwatch uses the currently active routes. When something happens on the link to the ISP1 (WAN1) gateway, the route to 8.8.8.8 through WAN1 becomes inactive, and Netwatch ignores it, sending its pings along the new default route via WAN2. Failover plays a trick on us: Netwatch believes everything is in order.

The second Netwatch quirk is double triggering. The mechanism is as follows: if Netwatch pings happen to fall within the check-gateway timeout, the host is declared DOWN for one check cycle. The switchover script runs, and the SIP connections correctly move to the new link. Does it work? Not really.

Soon the routing table is rebuilt, host 8.8.8.8 gets the UP status again, and the SIP connection reset script runs a second time. The connections are re-established once more, again through WAN2.
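Double triggering is easiest to spot in the log. A sketch that relies on the `WAN1 DOWN` and `WAN1 UP` messages written by the Netwatch scripts in this article: a DOWN followed shortly by an UP while ISP1 is still out points to the behavior described here.

```routeros
# Netwatch state changes logged by the up/down scripts
/log print where message~"WAN1"
```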

As a result, when ISP1 comes back into service and working traffic switches back to WAN1, the SIP connections remain stuck on ISP2 (WAN2): Netwatch has already seen the host return to UP, so it registers no state change when ISP1 actually recovers, and the reset script does not run. The danger is that if the backup link then has problems, the system will not notice, and telephony will go down.

Solution

To keep traffic to the monitoring host 8.8.8.8 from being diverted to ISP2, we need a backup route to 8.8.8.8 for the case when ISP1 fails. Create it with a large distance value, for example distance=10, and type=blackhole. It becomes active when the link to the WAN1 gateway disappears, so Netwatch pings to 8.8.8.8 are dropped instead of being answered via WAN2, and the host is correctly reported as DOWN:

```routeros
/ip route add distance=10 dst-address=8.8.8.8 type=blackhole
```
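The line above uses RouterOS v6 syntax. On RouterOS v7 the route `type` parameter was replaced by a separate `blackhole` property, so the equivalent would be roughly the following; treat it as an assumption and check it against the documentation for your release.

```routeros
# Assumed RouterOS v7 equivalent of the v6 blackhole route
/ip route add dst-address=8.8.8.8/32 blackhole distance=10
```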

In the end, the config grows by just one line:

Corrected Routing

```routeros
# Set up the provider networks
/ip address add address=10.100.1.1/24 interface=ISP1
/ip address add address=10.200.1.1/24 interface=ISP2

# Set up the local interface
/ip address add address=10.1.1.1/24 interface=LAN

# Hide everything leaving the local network behind NAT
/ip firewall nat add src-address=10.1.1.0/24 action=masquerade chain=srcnat

### Failover with deeper channel analysis ###

# Use the scope parameter to define recursive paths to hosts 8.8.8.8 and 8.8.4.4
/ip route add dst-address=8.8.8.8 gateway=10.100.1.254 scope=10
# Backup blackhole route: stops pings to 8.8.8.8 from leaking out via WAN2
/ip route add distance=10 dst-address=8.8.8.8 type=blackhole
/ip route add dst-address=8.8.4.4 gateway=10.200.1.254 scope=10

# Two default gateways via hosts whose paths are resolved recursively
/ip route add dst-address=0.0.0.0/0 gateway=8.8.8.8 distance=1 check-gateway=ping
/ip route add dst-address=0.0.0.0/0 gateway=8.8.4.4 distance=2 check-gateway=ping
```
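With the corrected routing, the experiment from the Debriefing section should now behave differently. A sketch under the same assumptions (WAN1 is the interface ISP1, a Netwatch entry for 8.8.8.8 exists); allow at least one Netwatch check interval before reading the status:

```routeros
# Simulate a WAN1 failure again (assumes WAN1 is the interface named ISP1)
/interface disable ISP1
# The distance=10 blackhole route should now be the active route to 8.8.8.8
/ip route print where dst-address=8.8.8.8/32
# Pings to 8.8.8.8 are dropped, so Netwatch should report the host as down
/tool netwatch print
# Restore WAN1; Netwatch should flip back to up and run the up-script
/interface enable ISP1
```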


This situation is typical precisely of last-mile failures, when the ISP1 gateway itself becomes unreachable, or of setups using tunnels, which are more prone to failure because they depend on a chain of other links.

I hope this article helps you avoid such mistakes. Choose up-to-date manuals, keep your knowledge current, and everything will work for you.