Comparison of the best APIs for filtering obscene content

- Translation

A full test of several APIs that filter images in categories such as nudity, pornography, and gore.

A person can tell at a glance that an image is inappropriate, that is, NSFW (Not Safe For Work). For artificial intelligence, things are not so clear. Many companies are now trying to build effective tools that filter such content automatically.

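I wanted to understand the current state of the market, so let's compare how well existing image-filtering APIs handle the following categories:

- Explicit nudity

- Suggestive nudity (that is, imagery suggestive of outright nudity; translator's note)

- Pornography / intercourse

- Simulated / animated porn

- Gore / violence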

TL;DR: If you just want to know the best API, skip straight to the comparison at the end of the article.

Experimental Conditions

The dataset. For evaluation, I collected my own NSFW dataset with an equal number of images in each NSFW subcategory. It consists of 120 images: 20 positive NSFW images for each of the five categories listed above, plus 20 SFW images. I decided not to use the publicly available YACVID 180 dataset, since it mainly uses nudity as the measure of NSFW content.

Collecting NSFW images is a long, tiring, and unpleasant task, which explains the small size of the dataset.

The dataset is available for download here. [Warning: contains explicit content]

Here is a table with raw results for each API and each image in the dataset.

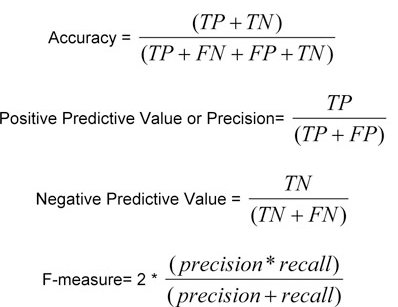

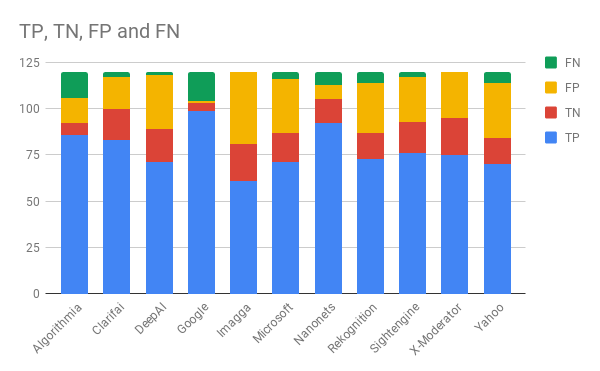

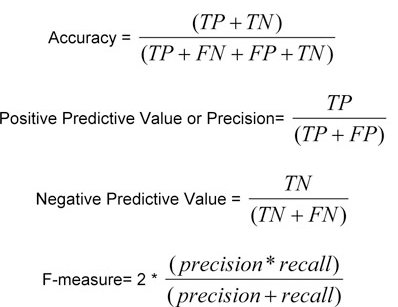

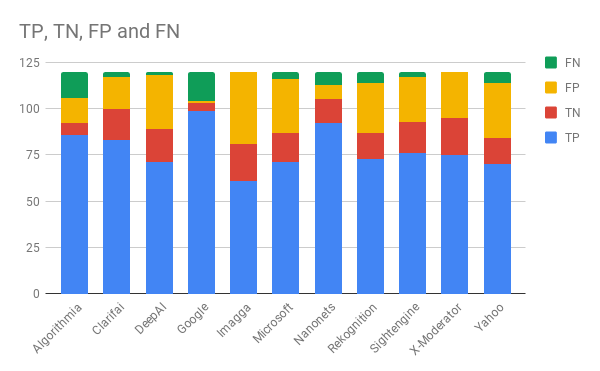

Metrics

Each classifier is evaluated with the standard metrics:

True positive: TP

The classifier calls an image NSFW, and it actually is NSFW.

True negative: TN

The classifier calls an image SFW, and it actually is SFW.

False positive: FP

The classifier calls an image NSFW, but it is actually SFW.

False negative: FN

The classifier calls an image SFW, but it is actually NSFW.

Accuracy

If the model makes a prediction, can you trust it?

Precision

If the model says an image is NSFW, how often is it right?

Recall

Of all the NSFW images, how many does the model identify?

F1 score

A combination of precision and recall (their harmonic mean); it is often close to accuracy.
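To make these definitions concrete, here is a minimal Python sketch that counts TP, TN, FP, and FN from ground-truth labels and binary predictions (NSFW treated as the positive class) and derives accuracy, precision, recall, and F1 from them. The `classification_metrics` function and the toy labels are illustrative assumptions, not the evaluation code used for this comparison.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    tp: int
    tn: int
    fp: int
    fn: int
    accuracy: float
    precision: float
    recall: float
    f1: float


def classification_metrics(truth: list[bool], predicted: list[bool]) -> Metrics:
    """Compare ground truth with predictions; True means NSFW (the positive class)."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    pairs = list(zip(truth, predicted))
    tp = sum(t and p for t, p in pairs)          # NSFW called NSFW
    tn = sum(not t and not p for t, p in pairs)  # SFW called SFW
    fp = sum(not t and p for t, p in pairs)      # SFW called NSFW
    fn = sum(t and not p for t, p in pairs)      # NSFW called SFW
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Metrics(tp, tn, fp, fn, accuracy, precision, recall, f1)


# Toy example: 4 NSFW images followed by 2 SFW images.
truth = [True, True, True, True, False, False]
predicted = [True, True, True, False, True, False]
m = classification_metrics(truth, predicted)
print(m.tp, m.tn, m.fp, m.fn)                           # 3 1 1 1
print(round(m.precision, 2), m.recall, round(m.f1, 2))  # 0.75 0.75 0.75
```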

The following content moderation APIs were evaluated:

Performance by category

First, I evaluated each API on every NSFW category.
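With 20 NSFW images per category, a category's detection rate is simply the share of those 20 images an API flags as NSFW, so a score of 90% means 18 out of 20. A minimal sketch, assuming the raw results for one API are available as (category, flagged) pairs; the record layout and the `detection_rates` helper are hypothetical.

```python
from collections import defaultdict


def detection_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of images in each NSFW category that an API flagged as NSFW."""
    flagged: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for category, is_flagged in results:
        total[category] += 1
        flagged[category] += int(is_flagged)
    return {category: flagged[category] / total[category] for category in total}


# Hypothetical results for one API: 18 of 20 porn images and 20 of 20 nudity images flagged.
results = [("porn", i < 18) for i in range(20)] + [("explicit_nudity", True)] * 20
print(detection_rates(results))  # {'porn': 0.9, 'explicit_nudity': 1.0}
```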

Pornography / intercourse

The Google and Sightengine APIs are really good here: they are the only ones that recognized every pornographic image. Nanonets and Algorithmia are slightly behind with a score of 90%. Microsoft and Imagga performed worst in this category.

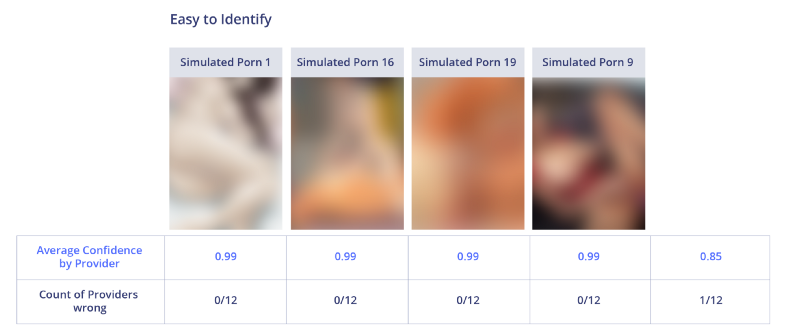

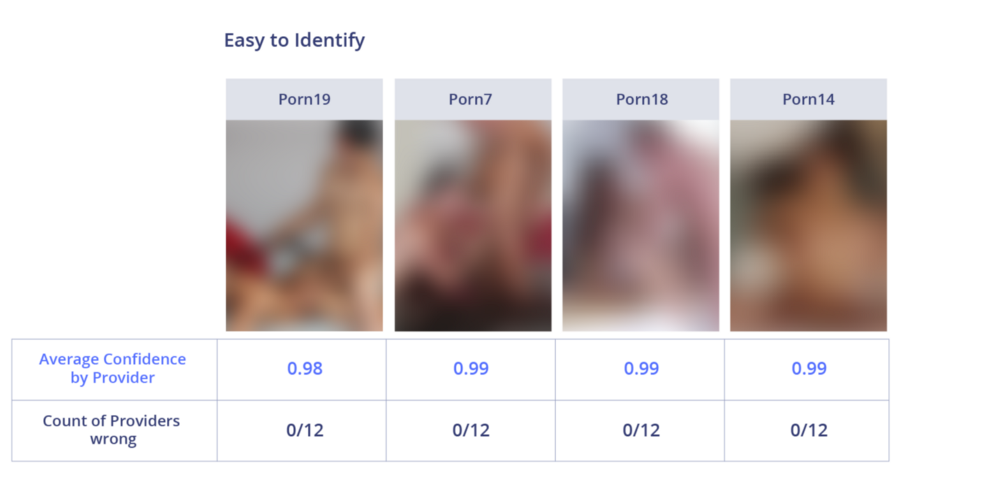

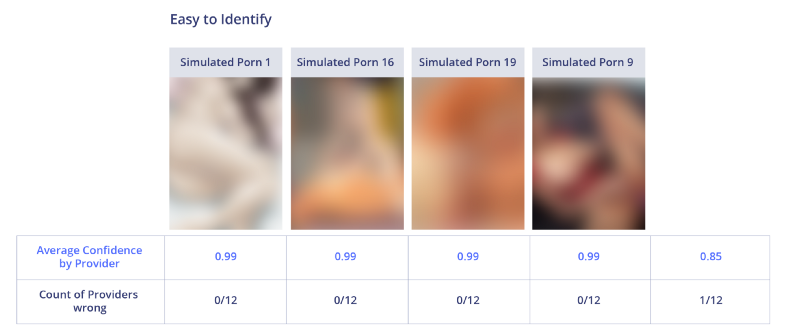

Easy images are clearly pornographic. All APIs recognized the images above correctly, and most of them predicted NSFW with very high confidence.

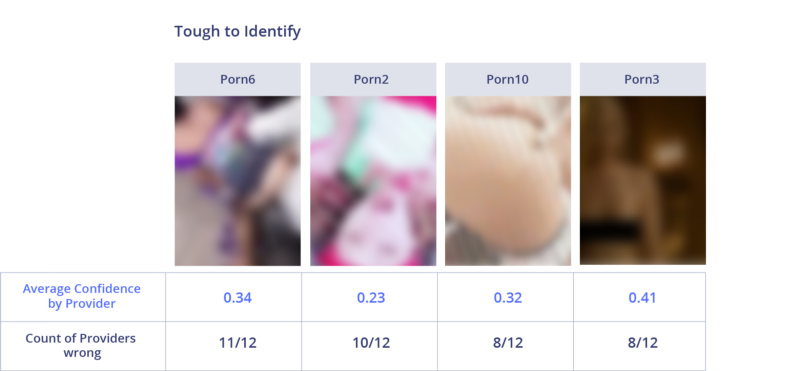

Difficult images contain partially covered or blurred objects, which makes the task harder. In the worst case, 11 of the 12 systems got an image wrong. Performance on pornography varies greatly depending on how explicit the content is and how clearly it is visible.

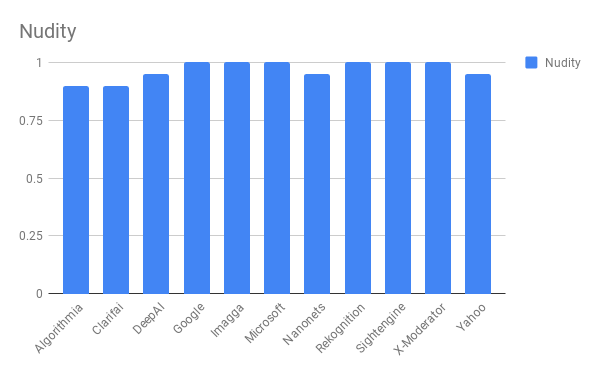

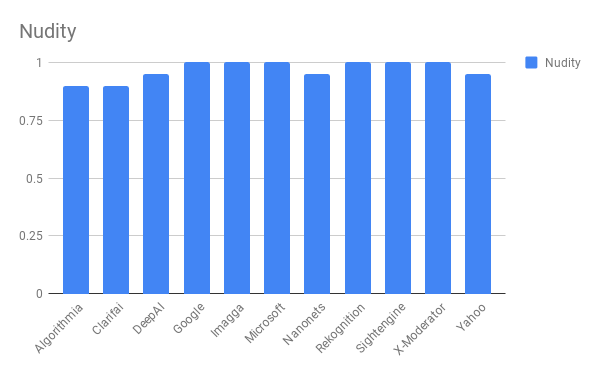

Explicit nudity

Most APIs did an excellent job in this category, and many of them reached a detection rate of 100%. Even the weakest APIs (Clarifai and Algorithmia) reached 90%. What counts as nudity has always been debatable, and the results show that the systems usually fail on ambiguous cases where the image could still be considered SFW.

Easy images show clearly visible nudity; anyone would call them NSFW without hesitation. No API made a mistake, and the average score was 0.99.

On ambiguous images, the APIs made mistakes. One possible reason is that each API applies its own sensitivity threshold.
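The APIs in this comparison report a confidence score (the average on easy images was 0.99), and the cutoff that turns a score into an NSFW verdict is where sensitivity comes in. The sketch below shows how the same scores produce different verdicts under a strict and a lenient threshold; the image names, scores, and the thresholds 0.3 and 0.8 are made up for illustration and do not come from any tested API.

```python
def classify(scores: dict[str, float], threshold: float) -> dict[str, str]:
    """Label each image NSFW if its confidence score reaches the threshold."""
    return {name: ("NSFW" if score >= threshold else "SFW") for name, score in scores.items()}


# Hypothetical NSFW confidence scores for three images, two of them borderline.
scores = {"beach_photo": 0.45, "lingerie_ad": 0.72, "explicit_photo": 0.99}

strict = classify(scores, threshold=0.3)   # flags anything remotely doubtful
lenient = classify(scores, threshold=0.8)  # flags only confident detections
print(strict)   # {'beach_photo': 'NSFW', 'lingerie_ad': 'NSFW', 'explicit_photo': 'NSFW'}
print(lenient)  # {'beach_photo': 'SFW', 'lingerie_ad': 'SFW', 'explicit_photo': 'NSFW'}
```

Both settings agree on the clear-cut image; they only diverge on the borderline ones, which mirrors how the APIs agree on easy images and disagree on controversial ones.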

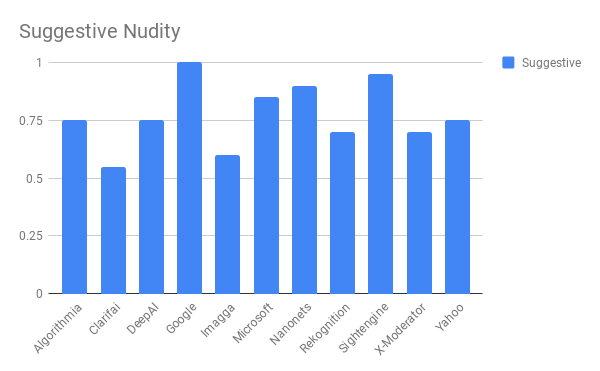

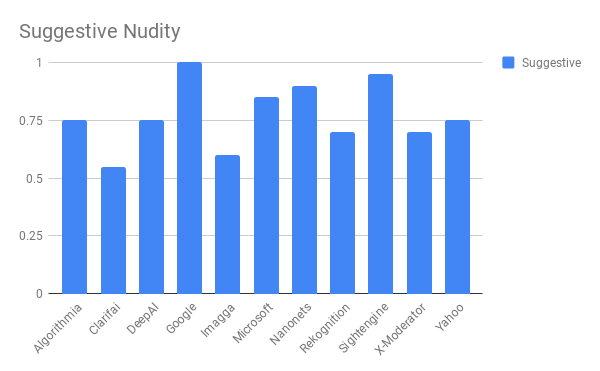

Suggestive nudity

Google won again with a detection rate of 100%. Sightengine and Nanonets outperformed the rest with 95% and 90% respectively. Automated systems recognize suggestive nudity almost as easily as explicit nudity. They make mistakes on images that look mostly SFW and show only some hints of nudity.

Again, no API made a mistake on the clearly NSFW images.

On the harder suggestive-nudity images, the APIs disagreed. As with explicit nudity, they have different tolerance thresholds. I am not sure myself whether these images should be considered SFW.

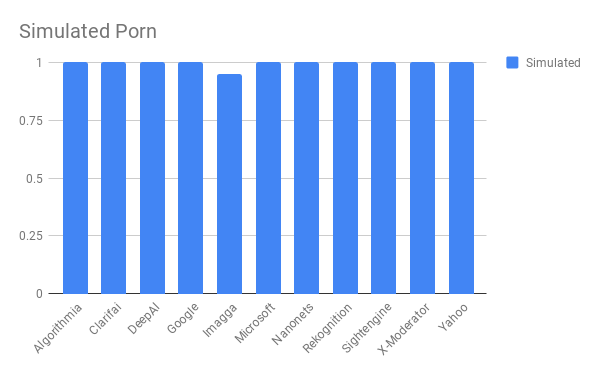

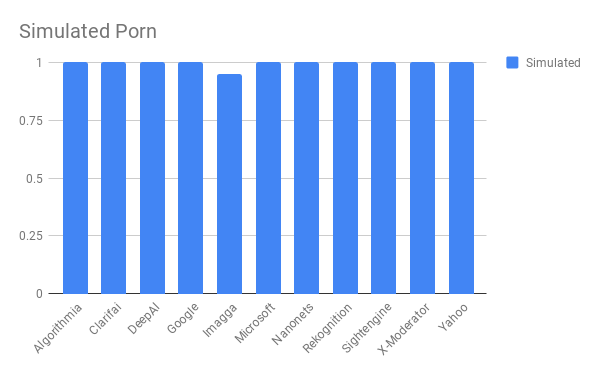

Simulated / animated porn

Almost all APIs did an extremely good job here and found 100% of the simulated porn examples; the only exception was Imagga, which missed one image. Why do the APIs work so well on this task? Apparently, artificially created images are easier for the algorithms to identify than natural ones.

All APIs showed excellent results with high confidence scores.

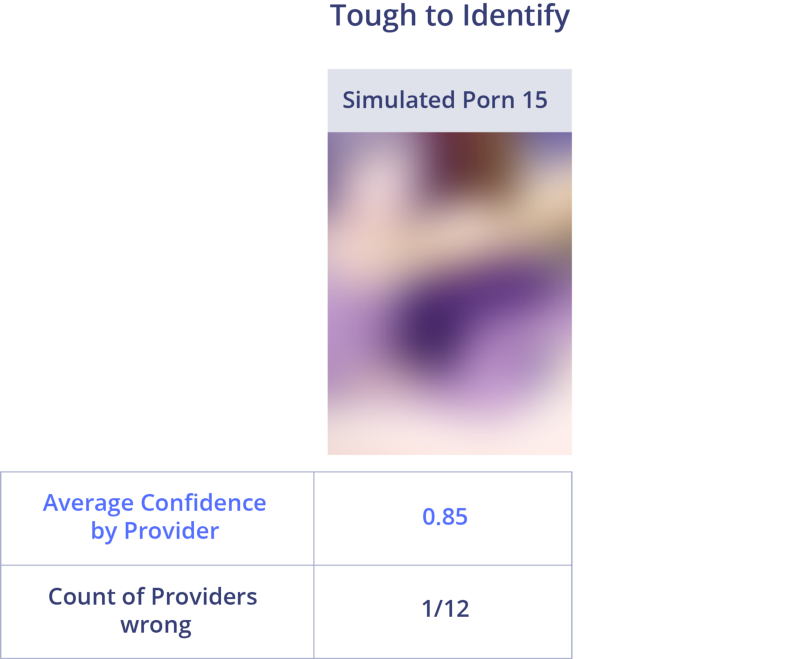

The only image Imagga got wrong could pass for non-pornographic content at a quick glance.

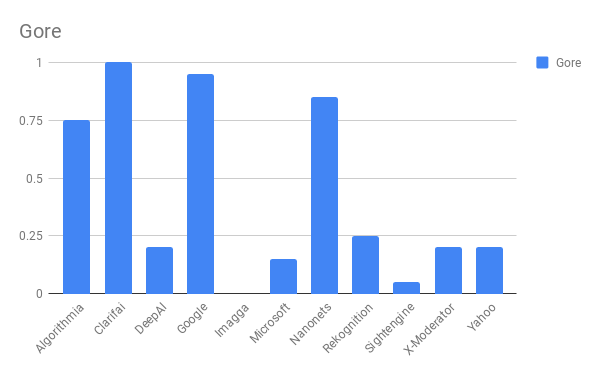

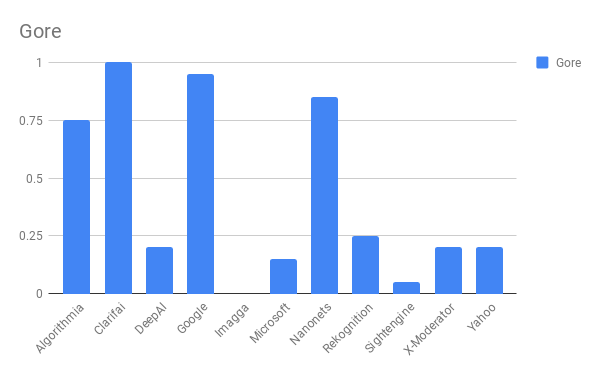

Gore / violence

This is one of the hardest categories: the average detection rate across the APIs was below 50%. Clarifai and Sightengine outperformed the competition by correctly identifying 100% of the images in this category.

The APIs coped best with medical images, but even on the easiest of them, 4 of the 12 systems made a mistake.

The difficult images have nothing obvious in common, yet people would readily call them gory. This suggests that the poor performance is probably due to a lack of available training data.

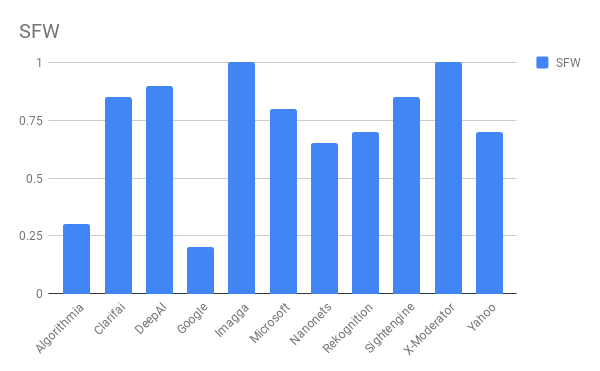

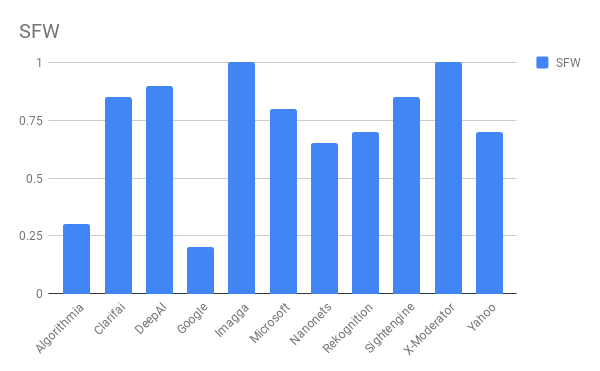

Safe (SFW) images

Images that are not NSFW are considered safe. Collecting this data is difficult in itself, because the images must be close to NSFW to give a meaningful evaluation of the APIs. One could argue about whether all of these images are really SFW. Here, Sightengine and Google showed the worst results, which also explains their excellent performance in the other categories: they simply call every doubtful image NSFW. Imagga, on the other hand, did well here because it is reluctant to call anything NSFW. X-Moderator also performed very well.

Links to the original images: SFW15, SFW12, SFW6, SFW4

The easy SFW images show only small patches of skin, and people readily identify them as SFW. Only one or two systems classified them correctly.

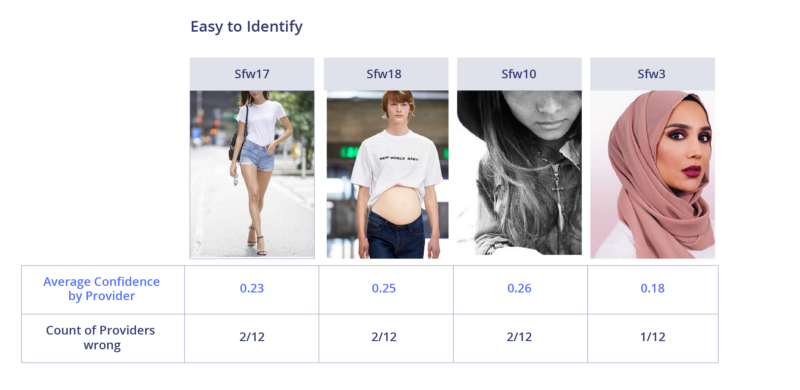

Links to the original images: SFW17, SFW18, SFW10, SFW3

The difficult SFW images all show larger areas of skin or are anime (the systems tend to treat anime as pornography). Most APIs considered images with large areas of bare skin as SFW. The question is: are they really SFW?

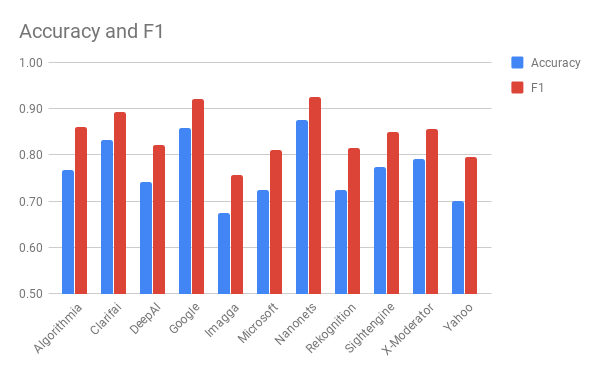

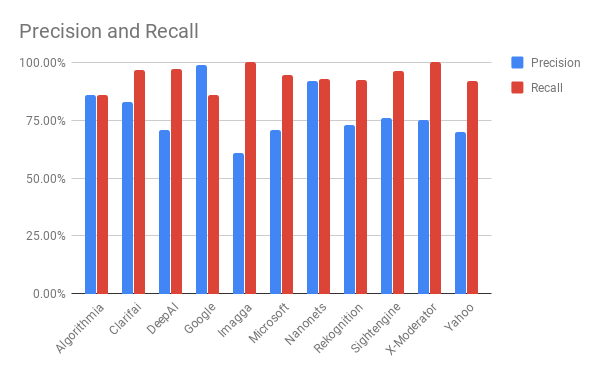

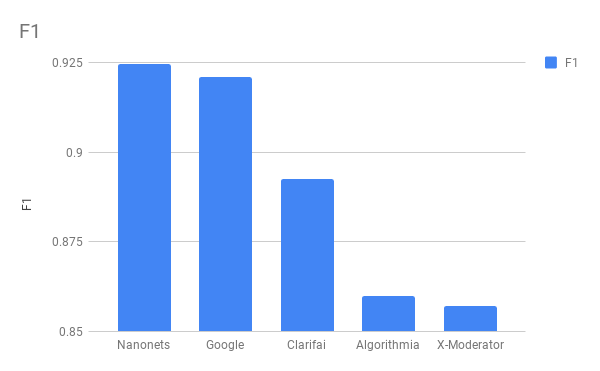

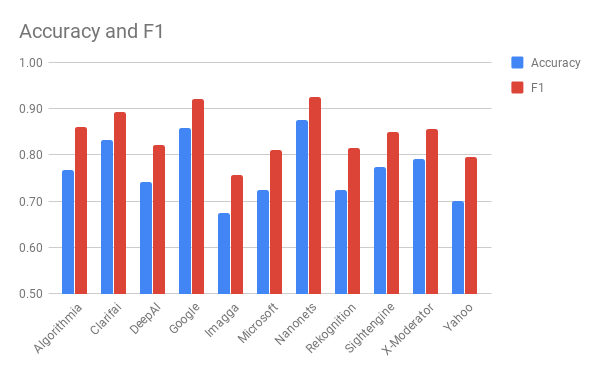

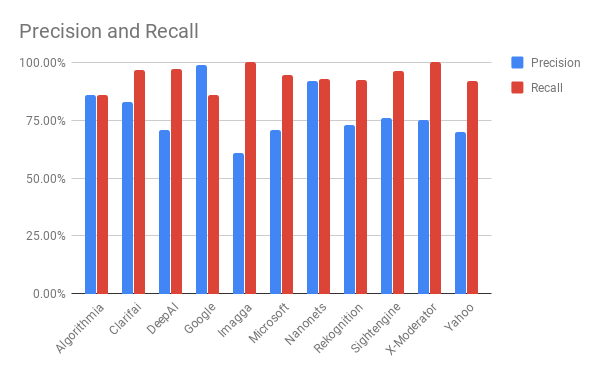

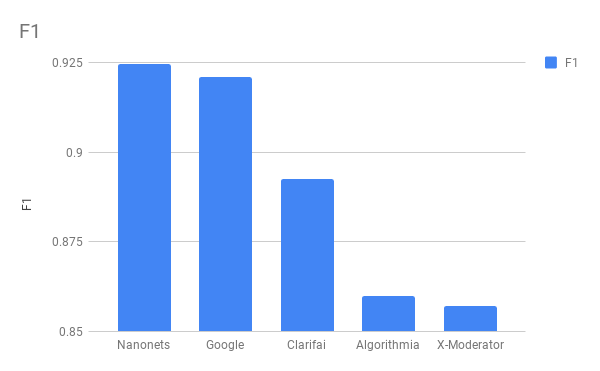

Overall comparison

Looking at the APIs' performance across all NSFW categories, as well as how well they recognize SFW images, we can conclude that Nanonets has the best F1 score and the best average accuracy: it performs consistently well in every category. Google shows exceptionally good results in the NSFW categories but marks safe images as NSFW too often, and is therefore penalized on the F1 metric.

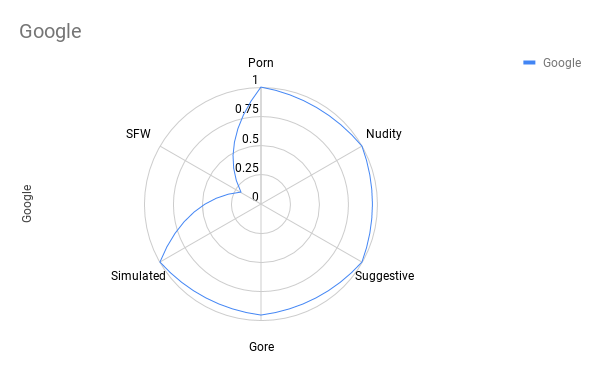
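Why can catching every NSFW image still cost a system on F1? A worked example with hypothetical counts on a set shaped like this one (100 NSFW and 20 SFW images): system A flags all 100 NSFW images but also 15 of the 20 safe ones, while system B flags 95 NSFW images and only 2 safe ones. These counts are invented for illustration and are not taken from the results tables; NSFW is treated as the positive class.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 is the harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical system A: perfect recall, many false positives on the 20 SFW images.
f1_a = f1_score(tp=100, fp=15, fn=0)
# Hypothetical system B: misses 5 NSFW images but rarely flags safe ones.
f1_b = f1_score(tp=95, fp=2, fn=5)
print(round(f1_a, 3), round(f1_b, 3))  # 0.93 0.964
```

System B ends up ahead despite its lower recall, because every false positive drags A's precision down.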

Comparison by provider

I compared the top 5 systems by accuracy and F1 score to evaluate the differences in their performance. The larger the area of the radar chart, the better.
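The "larger area is better" rule can be made quantitative. For a radar chart with n equally spaced axes, the polygon splits into n triangles, one per pair of neighboring axes, and the triangle between axes i and i+1 has area 0.5 · r_i · r_(i+1) · sin(2π/n). A minimal sketch, assuming one axis per NSFW category plus one for SFW; the scores are hypothetical.

```python
import math


def radar_area(scores: list[float]) -> float:
    """Area of a radar-chart polygon with len(scores) equally spaced axes."""
    n = len(scores)
    if n < 3:
        raise ValueError("a radar chart needs at least three axes")
    wedge = math.sin(2 * math.pi / n)  # sine of the angle between neighboring axes
    # Each pair of neighboring axes (wrapping around) forms one triangle.
    return 0.5 * wedge * sum(scores[i] * scores[(i + 1) % n] for i in range(n))


# Hypothetical scores (five NSFW categories plus SFW); both systems average 0.9.
balanced = [0.9, 0.9, 0.9, 0.9, 0.9, 0.9]
lopsided = [1.0, 1.0, 1.0, 1.0, 1.0, 0.4]
print(round(radar_area(balanced), 3), round(radar_area(lopsided), 3))  # 2.104 2.078
```

With equal average scores, the balanced profile covers more area, which is why the chart rewards systems like Nanonets. Note that the area also depends on the order of the axes, so it is a visual summary rather than a metric in its own right.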

1. Nanonets

Nanonets does not rank first in any single category. However, it is the most balanced solution. Its weakest point, where there is still room for improvement, is the accuracy of SFW recognition: it is too sensitive to any exposed body part.

2. Google

Google is the best in most NSFW categories but the worst at SFW detection. Note that I sourced the test images from Google, so it “should know” these images. This may explain its very strong performance in most categories.

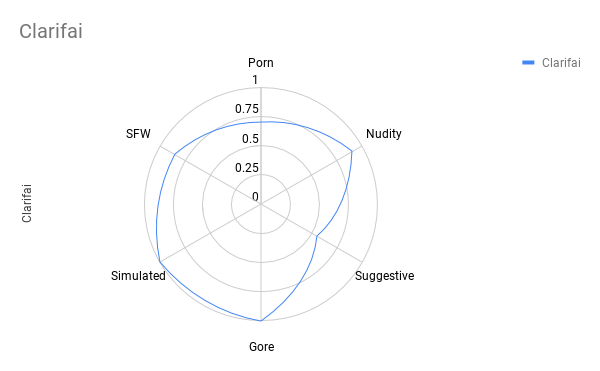

3. Clarifai

Clarifai really shines at detecting gore, ahead of most other APIs. It is also well balanced and performs well in most categories. However, it lacks accuracy on suggestive nudity and pornography.

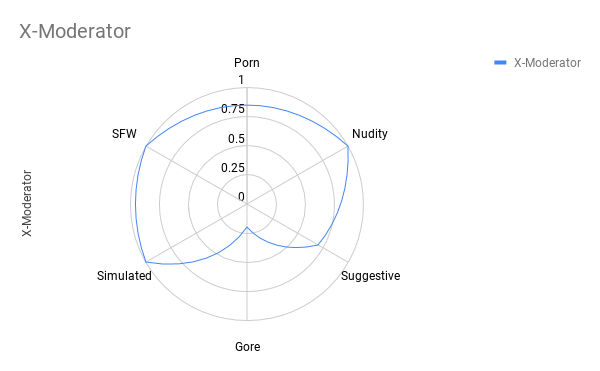

4. X-Moderator

X-Moderator is another well-balanced API. Apart from gore, it identifies most other types of NSFW content well. Its accuracy on SFW images is 100%, which sets it apart from the competition.

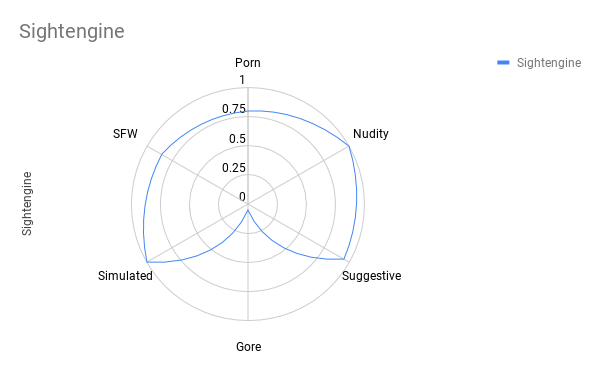

5. Sightengine

Like Google, Sightengine showed almost perfect results at identifying NSFW content. However, it did not recognize a single gore image.

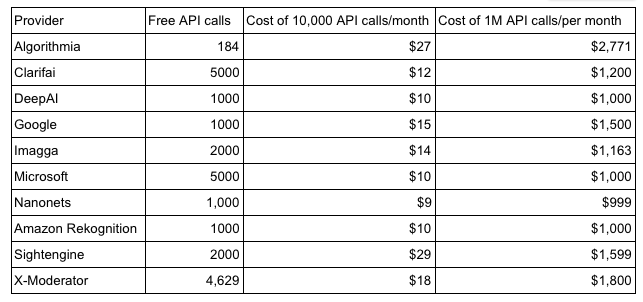

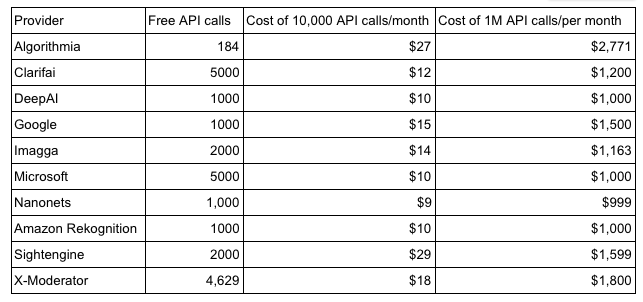

Pricing

Another criterion for choosing an API is price. The prices of all the providers are compared below. Most APIs offer a free trial with limited usage. The only completely free option is Yahoo's, but you have to host it yourself, so it is not included in the table.

Amazon, Microsoft, Nanonets, and DeepAI offer the lowest price: $1,000 per month for one million API calls.
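At that rate, one call costs $1,000 / 1,000,000 = $0.001. A back-of-the-envelope sketch for estimating a monthly bill, assuming a flat price that scales linearly with volume; real tiers, free quotas, and minimums differ per provider and are not modeled, and `monthly_cost` is a hypothetical helper.

```python
def monthly_cost(calls_per_month: int, price_per_million: float = 1000.0) -> float:
    """Estimated monthly bill in USD, assuming a flat price per million calls."""
    # Multiply before dividing to keep round numbers exact in floating point.
    return calls_per_month * price_per_million / 1_000_000


print(monthly_cost(1_000_000))  # 1000.0
print(monthly_cost(250_000))    # 250.0
print(monthly_cost(1))          # 0.001
```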

What is the best content moderation API?

The subjective nature of NSFW content makes it hard to pick a winner.

For a general-purpose social network that focuses on content distribution and needs a balanced classifier, I would choose the Nanonets API for its highest F1 score.

If the application is aimed at children, I would play it safe and choose the Google API for its exemplary performance across the NSFW categories, even at the cost of blocking some legitimate content.

What is NSFW really?

After spending a lot of time on this problem, I realized one key thing: the definition of NSFW is actually very vague. Everyone has their own definition. What is acceptable depends largely on what your service provides. Partial nudity may be acceptable in a dating app, but gore is not; for a medical journal, it is the other way around. The real gray area is suggestive nudity, where it is impossible to get the right answer.
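Because what is acceptable depends on the service, one practical pattern is to keep the per-category verdicts from a moderation API and apply a per-platform policy on top of them. A minimal sketch of that idea; the category keys, platform names, and policies below are hypothetical and not part of any API's response format.

```python
# Hypothetical category names, one per category used in this comparison.
CATEGORIES = {"explicit_nudity", "suggestive_nudity", "porn", "simulated_porn", "gore"}

# Categories each kind of platform tolerates; everything else is blocked.
POLICIES: dict[str, set[str]] = {
    "dating_app": {"suggestive_nudity"},  # partial nudity is fine, gore is not
    "medical_journal": {"gore"},          # the reverse of the dating app
    "kids_app": set(),                    # nothing borderline is allowed
}


def is_allowed(detected: set[str], platform: str) -> bool:
    """An image passes if every category detected in it is tolerated by the platform."""
    unknown = detected - CATEGORIES
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    return detected <= POLICIES[platform]


print(is_allowed({"suggestive_nudity"}, "dating_app"))  # True
print(is_allowed({"gore"}, "dating_app"))               # False
print(is_allowed({"gore"}, "medical_journal"))          # True
```

Keeping the policy separate from the classifier means the same API results can serve very different platforms.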

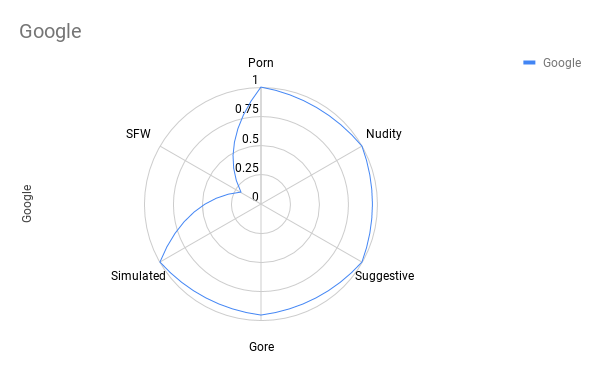

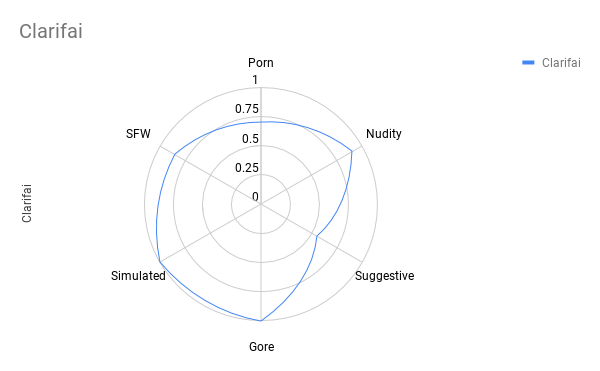

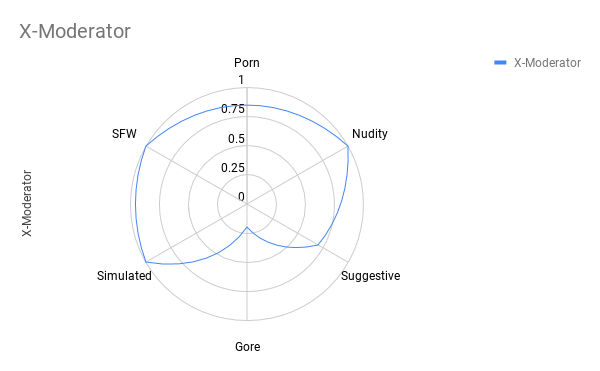

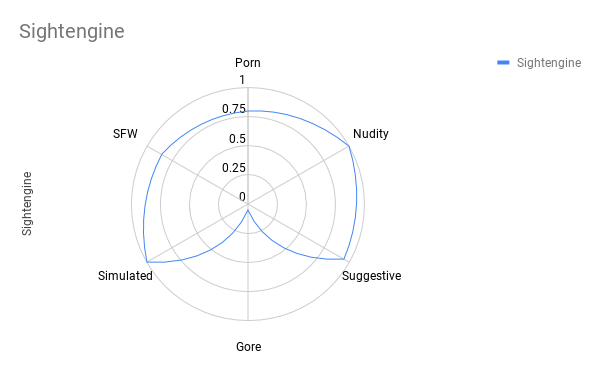
