J. Schmidhuber: “It is wonderful to be part of the future of artificial intelligence”

The GTC Europe 2016 graphics technology conference was held in Amsterdam in the last days of September. Professor Jürgen Schmidhuber gave a talk there as scientific director of IDSIA, a Swiss laboratory where he and his colleagues conduct research in artificial intelligence.

The main thesis of the talk: true artificial intelligence will change everything in the near future. This article is based largely on Professor Schmidhuber's presentation.

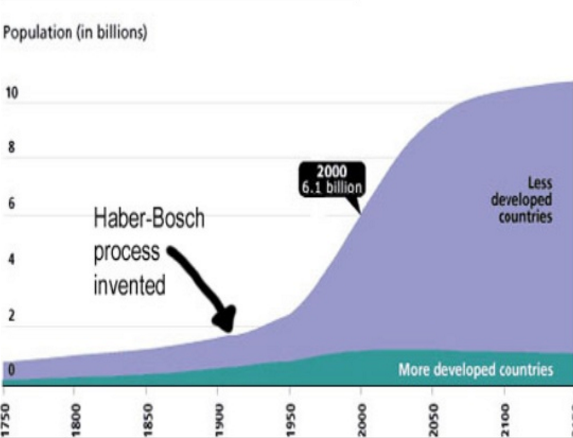

The Haber-Bosch process, the extraction of nitrogen from the air to make fertilizer, is considered the most influential discovery of the 20th century. It led to a population explosion in less developed countries. For comparison:

An impressive leap, and according to Jürgen Schmidhuber the explosion of artificial intelligence in the 21st century will be even more impressive.

It all started in 1941, when Konrad Zuse presented the first working program-controlled general-purpose computer. Computers then became faster and cheaper every year. Over 75 years, they have become 1,000,000,000,000,000 times cheaper.

The topic of Schmidhuber's 1987 thesis read roughly as follows: "the first concrete design of a recursively self-improving AI."

In Schmidhuber's laboratory, the history of deep learning began in 1991. Schmidhuber rightly regards Alexey Grigoryevich Ivakhnenko as the father of deep learning. Without his methods and control systems, there would have been no discoveries in the professor's Swiss laboratory.

In 1965, Ivakhnenko published the first learning algorithms for deep networks. Of course, what we call “neural networks” today did not look like that back then. Ivakhnenko studied deep multilayer perceptrons with polynomial activation functions, trained layer by layer using regression analysis. In 1971, an eight-layer deep learning network was described. Findings from that time are still in use today.

Given a training set of input vectors with corresponding target output vectors, layers of additive and multiplicative neuron nodes are grown incrementally and trained by regression analysis. Superfluous nodes are then pruned with the help of validation data, where regularization is used to weed out redundant nodes. The number of layers and the number of nodes per layer can be learned in a problem-dependent way.
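The procedure described above can be sketched in a few lines of Python. This is only a minimal illustration of the idea, not Ivakhnenko's original algorithm. It assumes that each node combines two inputs through the polynomial a + b·u + c·v + d·u·v, that coefficients are fitted by least squares with a tiny ridge term for numerical stability, and that the three nodes with the lowest validation error survive in each layer. All function names, the toy target, and the hyperparameters are hypothetical.

```python
import itertools
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def features(u, v):
    # Additive terms (u, v) and a multiplicative term (u * v).
    return [1.0, u, v, u * v]


def fit_node(u, v, y, ridge=1e-8):
    """Regression step: least-squares fit of one polynomial node."""
    rows = [features(a, b) for a, b in zip(u, v)]
    k = len(rows[0])
    A = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    rhs = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve(A, rhs)


def node_output(w, u, v):
    return [sum(wi * fi for wi, fi in zip(w, features(a, b)))
            for a, b in zip(u, v)]


def mse(pred, y):
    return sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y)


def grow_layer(train_cols, val_cols, y_train, y_val, keep=3):
    """Fit one node per input pair, then prune using validation error."""
    candidates = []
    for i, j in itertools.combinations(range(len(train_cols)), 2):
        w = fit_node(train_cols[i], train_cols[j], y_train)
        err = mse(node_output(w, val_cols[i], val_cols[j]), y_val)
        candidates.append((err, i, j, w))
    candidates.sort(key=lambda c: c[0])
    best = candidates[:keep]
    new_train = [node_output(w, train_cols[i], train_cols[j])
                 for _, i, j, w in best]
    new_val = [node_output(w, val_cols[i], val_cols[j])
               for _, i, j, w in best]
    return best[0][0], new_train, new_val


def make_data(n, rng):
    # Hypothetical toy target: y = x0 * x1 + x2.
    cols = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(3)]
    y = [a * b + c for a, b, c in zip(*cols)]
    return cols, y


rng = random.Random(0)
train_cols, y_train = make_data(40, rng)
val_cols, y_val = make_data(20, rng)

errors = []  # best validation error of each accepted layer
best_err = float("inf")
for depth in range(1, 5):
    err, new_train, new_val = grow_layer(train_cols, val_cols, y_train, y_val)
    if err >= best_err:  # stop growing once validation error stops improving
        break
    best_err = err
    errors.append(err)
    train_cols, val_cols = new_train, new_val

print("validation error per layer:", errors)
```

In this sketch the depth is not fixed in advance: layers are added only while the validation error keeps falling, which is one simple way to make the network size depend on the problem.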

Now for backpropagation. The work of the pioneers in the field:

- Continuous backpropagation in Euler-Lagrange calculus + dynamic programming: Bryson, 1960; Kelley, 1961;

- Backpropagation via the chain rule: Dreyfus, 1962;

- Modern backpropagation in sparse discrete graphs resembling neural networks (including Fortran code): Linnainmaa, 1970;

- Changing the weights of controllers: Dreyfus, 1973;

- Automatic differentiation that can derive backpropagation for any differentiable graph: Speelpenning, 1980;

- Backpropagation applied to neural networks: Werbos, 1982;

- RNN examples: Williams, Werbos, Robinson, 1980s.
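To make the chain-rule idea behind the list above concrete, here is a minimal sketch on a hypothetical two-weight network, y = w2 · tanh(w1 · x), with squared-error loss. The network, the variable names, and the numbers are illustrative assumptions and are not taken from the talk. The gradients are derived by hand with the chain rule and compared with finite differences.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden activation
    y = w2 * h             # network output
    return h, y


def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backward(w1, w2, x, target):
    """Propagate the error from the output back to each weight."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target                   # derivative of 0.5 * (y - t)^2
    dL_dw2 = dL_dy * h                   # y = w2 * h
    dL_dh = dL_dy * w2                   # error passed back to the hidden unit
    dL_dw1 = dL_dh * (1.0 - h * h) * x   # tanh'(z) = 1 - tanh(z)^2
    return dL_dw1, dL_dw2


def numeric_grad(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only as an independent check."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


w1, w2, x, target = 0.5, -1.5, 0.8, 1.0
analytic = backward(w1, w2, x, target)
numeric = numeric_grad(w1, w2, x, target)
print("chain rule:        ", analytic)
print("finite differences:", numeric)
```

The backward pass reuses the intermediate values of the forward pass, so the cost of computing all gradients is of the same order as one forward evaluation. That is what makes the method practical for large networks.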

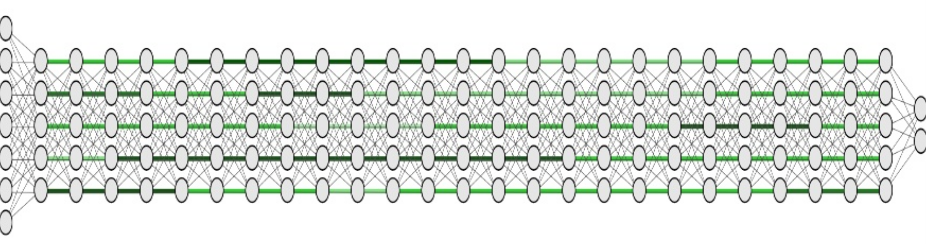

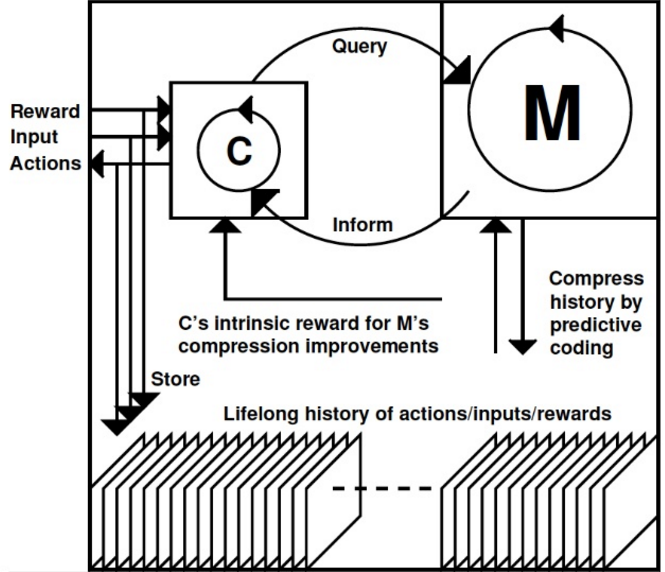

RNNs are recurrent neural networks used in deep learning. In 1991, Professor Schmidhuber created the first very deep learning network. Unsupervised pre-training for hierarchical temporal memory works like this: a stack of RNNs, history compression, and, as a result, faster supervised learning. LSTM (long short-term memory) is a type of neural network. LSTM RNNs are now widely used in industry and research to recognize sequential data such as images or video. Around 2010, history repeated itself: unsupervised FNNs were largely replaced by purely supervised FNNs.

Signals of different kinds arrive at the input of the AI network: images, sounds, possibly emotions. At the output, the system produces muscle movements (in a human or a robot) and other actions. The essence of the work is to improve the algorithm, which develops much as small children train their motor skills.

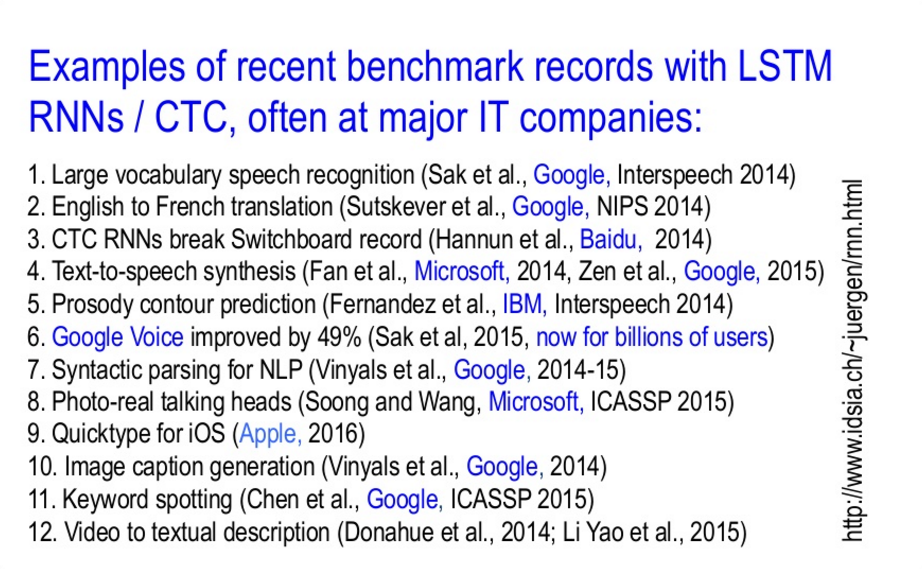

The LSTM method was developed in Switzerland. These networks are more advanced and versatile than their predecessors: they are suited to deep learning and to a variety of tasks. The network was born of its author's curiosity and is now widely used, for example in Google's speech-based voice input. Using LSTM improved that service by 50 percent. Training looked like this: many people spoke phrases to provide training examples. At the start of training the neural network knows nothing. After listening to many passages of, say, English, it learns to understand the language by picking up its rules. Later, the network can translate from one language to another. Examples of use are in the image below.

LSTM is used most heavily at Google, Microsoft, IBM, Samsung, Apple, and Baidu. These companies need neural networks for speech recognition, image recognition, machine translation, chatbots, and much more.

Let's go back to the past: twenty years ago, a machine became the best chess player. Non-human game champions had already appeared in 1994, with TD-Gammon for backgammon. That learning winner was created at IBM. For chess, also at IBM, the non-learning Deep Blue was designed. The same goes for machine vision.

In 1995, a robot car drove from Munich to Denmark and back on public highways at speeds of up to 180 km/h without GPS. The year 2014 also marked the 20th anniversary of self-driving cars in highway traffic.

In 2011, a traffic sign recognition competition was held in Silicon Valley. There, the system from Schmidhuber's laboratory achieved results twice as good as human performance, three times better than the closest artificial competitor, and six times better than the best non-neural method (visual pattern recognition). This is used in self-driving cars.

There were other victories.

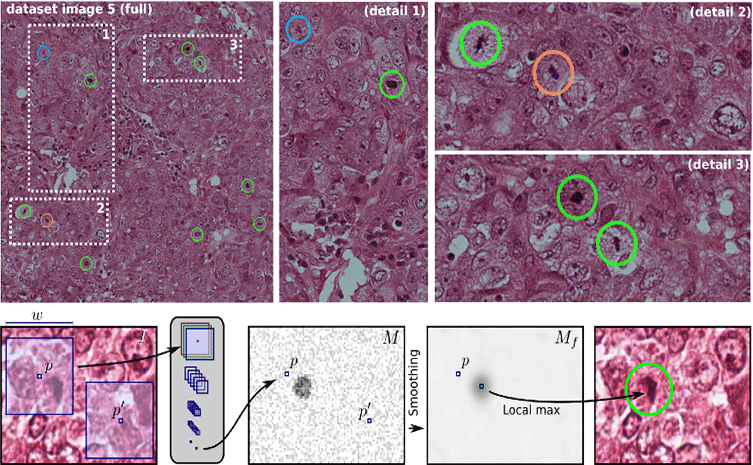

A similar technique applies in medicine, for example in recognizing dangerous precancerous cells at early stages of mitosis. This means that a single doctor will be able to treat more patients per unit of time.

Feedforward LSTM and Highway Networks: each layer computes a function of the form g(x)·x + t(x)·h(x), which makes it possible to train neural networks with hundreds of layers.
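The layer function mentioned above can be illustrated with the gated form used in Highway Networks, y = t(x)·h(x) + (1 − t(x))·x, where t is a sigmoid "transform gate" and h is an ordinary nonlinear layer. The sketch below is a toy, pure-Python illustration rather than the laboratory's implementation. The coupling g = 1 − t, the layer width, the weight ranges, and the gate bias of −10 are all assumptions chosen for the demonstration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, x):
    """Compute W x + b for a square weight matrix W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def highway_layer(x, Wh, bh, Wt, bt):
    h = [math.tanh(z) for z in affine(Wh, bh, x)]  # candidate transformation
    t = [sigmoid(z) for z in affine(Wt, bt, x)]    # transform gate in (0, 1)
    # Gated mix: carry the input where t is near 0, transform it where t is near 1.
    return [ti * hi + (1.0 - ti) * xi for ti, hi, xi in zip(t, h, x)]


def random_matrix(dim, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]


def random_layer(dim, gate_bias, rng):
    # A strongly negative gate bias makes a fresh layer behave almost like identity.
    return (random_matrix(dim, rng), [0.0] * dim,
            random_matrix(dim, rng), [gate_bias] * dim)


rng = random.Random(0)
dim, depth = 4, 100
layers = [random_layer(dim, -10.0, rng) for _ in range(depth)]

x0 = [0.5, -0.3, 0.8, -1.0]
x = x0
for Wh, bh, Wt, bt in layers:
    x = highway_layer(x, Wh, bh, Wt, bt)

drift = max(abs(a - b) for a, b in zip(x, x0))
print("largest change after", depth, "layers:", drift)
```

With the gates initialized nearly closed, the signal passes through all 100 layers almost unchanged. The same carry path lets gradients flow backward through a very deep stack, and training can then open individual gates where a transformation is useful.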

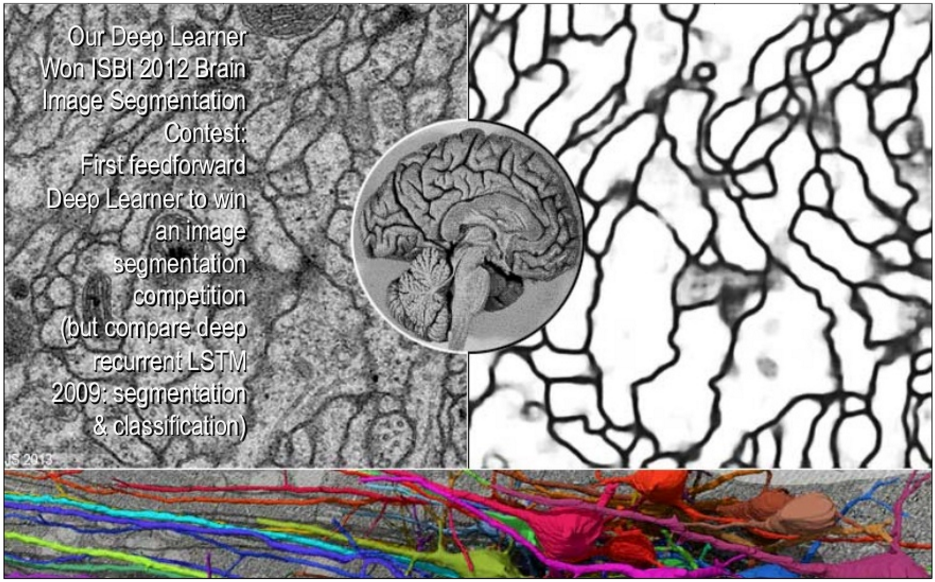

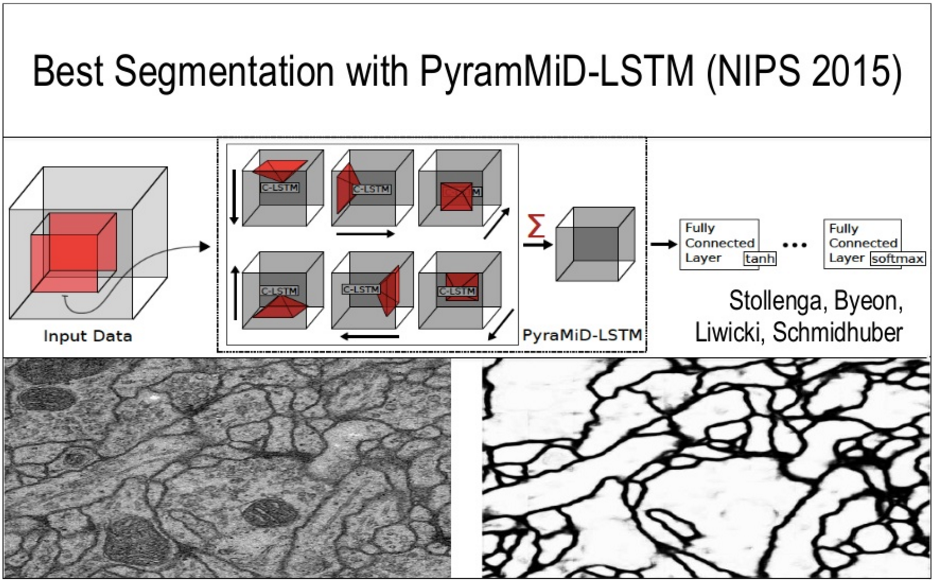

Best segmentation in LSTM networks:

The professor's students created Brainstorm, an open-source neural network library.

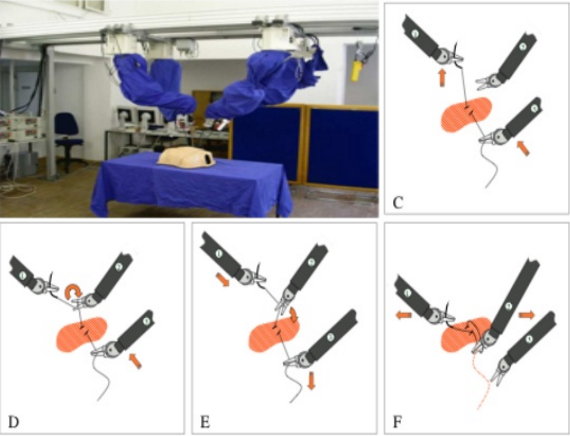

Meanwhile, robots were busy developing fine motor skills, and LSTM networks learned to tie knots.

In just over 20 years, deep learning has gone from tasks with at least 1,000 computational steps (1991-1993) to feedforward neural networks with more than 100 layers (2015).

Everyone remembers how Google bought DeepMind for $600 million. The company now has a whole division devoted to machine learning and AI. DeepMind's first employees, its founders, came from Professor Schmidhuber's laboratory. Later, two more PhDs from IDSIA joined DeepMind.

Another of Professor Schmidhuber's works, on finding complex neurocontrollers with a million weights, was presented at GECCO in 2013. It relies on search in compressed network space.

In 1990-1991, recurrent learning of visual attention on slow computers was aimed at obtaining additional input through fixations. In 2015, the same idea was back. In 2004, the fastest league of the RoboCup was played at speeds of 5 meters per second. The competition successfully used what Schmidhuber had proposed back in 1990: predicting expectations and planning with neural networks.

The “Renaissance” of 2014-2015, or RNNAIssance as it is called in English (Recurrent Neural Network based Artificial Intelligence), was already about learning to think: algorithmic information theory for novel combinations of reinforcement learning, recurrent neural world models, and RNN-based controllers.

The professor offers an interesting scientific theory to explain the phenomenon of "fun." Formally, fun is something new, surprising, eye-catching, creative, and curious that combines art, science, and humor. Curiosity, for example, is used to improve the skills of humanoid robots. This takes a lot of time. In one experiment, a robot learns to place a cup on a small stand. The influence of intrinsic motivation and external praise on the learning process is studied.

Have you heard of PowerPlay? It not only solves problems but also continually invents them at the border between the known and the unknown. In this way, a general problem solver is trained incrementally by continually searching for the simplest problem that is still unsolved.

What comes next, according to Schmidhuber: the creation of a small, animal-like AI (on the level of a monkey or a crow, for example) that learns to think and plan hierarchically. Evolution took billions of years to create such animals and much less time to get from them to human beings. Technological evolution is much faster than natural evolution.

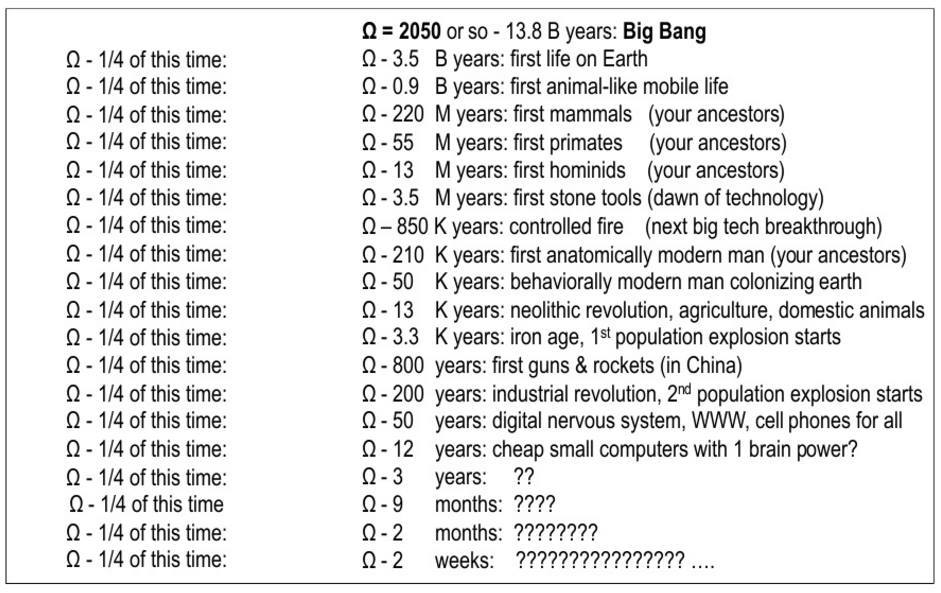

A few words about the beautiful, simple pattern discovered in 2014. It concerns the exponential acceleration of the historically most important events in the universe from a human point of view. The professor's commentary on science fiction:

I prefer to call the singularity Omega, because that is exactly what Teilhard de Chardin called it 100 years ago and because it sounds like “Oh my God.” In 2006, I described a historical pattern suggesting that 40,000 years of human dominance will lead to the Omega point within the next few decades. I show an incredibly precise exponential acceleration that describes the entire course of development back to the Big Bang. It contains the whole of history, arguably the most important events from a human point of view. The margin of error is less than 10%. I first talked about this in 2014.

Space is not human-friendly, unlike properly designed robots

After the talk in Amsterdam, the question-and-answer session turned to the emergence of so-called robot journalists and other models that can do the work of many people. The moderator asked whether people in the "text business" should worry about artificial intelligence and its development. The professor believes that robots cannot replace people; they are created to help them. Nor will they fight humans, because their goals differ from ours. Interestingly, popular science fiction films get this theme wrong. Real-life wars are waged between similar creatures: people fight other people, not bears, for example. Whether AI will be used for “bad military things” depends on human ethics, so it is not a question of AI development.

It is important to remember the origins

Last year Nature published an article stating that deep learning allows computational models composed of many processing layers to learn representations of data at many levels of abstraction. Such methods have greatly improved the state of the art in speech recognition, visual object recognition, and other areas such as drug discovery and genomics. Deep learning discovers intricate structure in huge datasets. To do so, it uses the backpropagation algorithm, which indicates how the machine should change its internal parameters, the ones used to compute the representation in each layer from the representation in the previous layer. Deep convolutional networks brought breakthroughs in image, video, speech, and audio processing, and recurrent networks shed light on sequential data such as text and speech.

Here is the critical comment that Jürgen Schmidhuber gave in response.

Machine learning is the science of credit assignment. The machine learning community benefits from assigning credit correctly to the right people. The inventor of an important method should get credit for inventing it. The person who popularizes the method should likewise get credit for that. These may be different people. As a relatively young field, machine learning should adopt the code of honor of more mature sciences such as mathematics. What does that mean? If, for example, you prove a new theorem using methods that are already known, you must make that clear. And if you reinvent something that was already known and learn of it only afterward, you must state that clearly too, even if later. That is why it is important to cite everything that was invented before.