Code Optimization: CPU

All programs must be correct, but some programs must be fast . If a program processes video frames or network packets in real time, performance is a key factor. Using efficient algorithms and data structures is not enough. You need to write code that the compiler easily optimizes and translates into fast executable code.

In this article, we will look at basic code optimization techniques that can increase the performance of your program many times over. We will also touch on the processor device. Understanding how the processor works is necessary for writing effective programs.

The fifth chapter in Computer Systems: A Programmer's Perspective inspired me to write this article . All programs are tested on a machine with a Pentium 2117U processor , the performance on your machine may be different. In another article in this series, “Code Optimization: Memory,” we look at optimization techniques for better cache utilization.

Compilers themselves try to optimize the code. When GCC is launched with the -Og parameter, it performs the basic optimization level, with the -O1 parameter the first optimization level. There are higher levels of optimization: -O2, -O3, etc. The higher the level of optimization, the more radical the compiler makes the program. Compilers can only use safe optimizations . This means that the compiler can only modify the program so that it does not change its behavior for all input data.

We, as programmers, need to understand that there are certain characteristics of the code that will not allow the compiler to perform optimization. We call them optimization blockers . Consider two types of optimization blockers. One of them arepointers . The compiler cannot know for sure whether two pointers will point to the same memory area, and therefore does not perform some optimizations. Consider an example:

The twiddle2 function looks more efficient, it performs only three memory requests (read * xp , read * yp , write * xp ), while twiddle1 performs six requests (four reads and two records). It can be expected that these two functions have the same behavior. However, imagine that xp and yp point to the same memory location. Then twiddle1 will increase * xp four times, and twiddle2 - three times. These functions have different behavior in some cases. For this reason, the compiler will not dare to transform a less efficient function into a more efficient one.

Another optimization blocker is function calls . In general, function calls entail overhead , and we need to try to avoid them. Consider an example:

The first function calls f four times, while the second - only once. We expect the compiler to guess and convert the first function to the second. But the function f can have side effects , it can change the global state, as in this example:

In this case, func1 and func2 will return different results. It is difficult for the compiler to determine whether the function call has side effects or not. When he cannot do this, he expects the worst and does not perform optimization.

Slow programs typically perform calculations on large data sets. It will be reasonable to evaluate the effectiveness of such programs in the average number of clock cycles that the processor spends on processing one element of the array. We introduce the metric CPE (cycles per element). If we say that the program has a CPE 2.5 performance, it means that on average it spends 2.5 processor cycles to process one element.

We will present a simple program, on the example of which we will demonstrate powerful optimization techniques. The vec structure is a vector of float elements . Combine0 functioncalculates the result of the multiplication of all elements of the vector. We will optimize this function. Let's make the size of the array 5000 and initialize it with random numbers.

We will compile all the programs in this article using GCC with the -Og parameter (basic level of optimization). After compiling the program and making the necessary measurements, I get the performance of CPE 11.05 .

To measure CPE, you can use the rdtsc instruction , this is a clock counter since the last processor reset. It is necessary to compare the counter values before and after the program execution. I warn you that this is an unreliable method. For greater reliability, you can simply measure the spent processor time. The next program should be a template that demonstrates both methods.

Usually the most intense place in a program is cycles, especially the innermost cycle. It is there that we must first of all look for opportunities for optimization. In the loop of our program, we constantly call the vec_len function , which returns the length of the vector. It makes no sense to do this in each iteration, because the length of the vector does not change throughout the cycle. It would be wiser to call this function once and save the result in a variable. Therefore, we make a call to this function outside the loop.

The performance of the new version has not changed - CPE 11.05 . Apparently, the compiler himself guessed to perform this optimization. Let's run GCC without the -Og option and compile both versions of the function without any optimization at all. Now the difference is noticeable: combine0 - CPE 12.7 , combine1 - CPE 11.1 .

In this case, the compiler guessed to insert the body of the function at the place the function was called. But often the compiler will not do this, because it will not be able to determine whether the function has side effects or not. If the function performs any additional actions, such a transformation can change the behavior of the program. As an extreme example, consider a loop that turns uppercase letters into lowercase:

The strlen function traverses the entire string until it encounters a null character, and does this in every iteration. This catastrophically slows down the program. For large lines, the loop will run thousands of times slower than the more efficient one. Ideally, the compiler should recognize that calling strlen always returns the same value and push it outside the loop. But for this you need to deeply analyze the body of the cycle and determine that although the program changes the characters in the string, it does not turn any character into zero. This is beyond the scope of modern compilers.

Many programs often access memory for reading or writing. It takes a lot of time. It is advisable to work with processor registers, and not with memory. For such programs, you need to look for the opportunity to enter a temporary local variable into which to write, and only after some time to write from this variable into memory.

Count how many times we access memory in each iteration. We read an element from an array, read dest , add these values, and write the result to dest . Only three memory accesses. We do not need to read and write dest every time after processing the next element. We will introduce a temporary variable-battery, in which we will store the result, and only at the very end we will write to dest . The compiler will place the accumulator variable itself in the register, so we will get rid of unnecessary memory accesses.

Now we perform only one memory access in each iteration. Performance improves from CPE 11.05 to CPE 5.02 . If you expect the compiler to guess and perform this optimization, then consider what happens if dest points to an element in the vector. In this case, versions with and without battery will calculate a different value. The compiler cannot know what the pointers will be equal to, so it prepares for the worst and does not perform optimization. Function calls and pointers seriously block the optimizing capabilities of the compiler.

We have reached CPE 5.02 . This is not a prime number; my processor spends 5 clock cycles performing multiplication of floating point numbers. We can say that we have reached a certain lower limit .

My processor spends one clock cycle doing the addition of int numbers . Interestingly, if instead of multiplying values of type float , I will add values of type int , will I achieve CPE 1.0 performance? I am making the necessary changes to my program. I launch and get just CPE 1.58 . Where does such inefficiency come from?

The thing is that during the execution of the cycle, the processor performs not only the calculation instructions in which we are interested, but also the instructions that serve the cycle: incrementing the counter, checking the condition, moving to the beginning of the cycle. That is, the maintenance of the cycle has its overhead, and the processor does a lot of unnecessary work. If the body of the loop is small, these "empty" calculations take up a large fraction of the processor time. Cycle Promotion

Techniquelies in the fact that not one but several elements are processed in one iteration. We kind of “unwind” the cycle, increasing the length of his body and reducing the number of turns. Thus, we perform fewer iterations, perform more useful work in one iteration, and overhead costs are no longer such a large share. Let's change the functions that add integers a bit:

Now the program adds two elements to the battery per iteration. Please note that the last element may not be processed in a cycle, so you need to add it to the battery later. CPE drops from 1.58 to 1.15 . Processing four elements per iteration, I get CPE 1.03 , which is close to the value I wanted.

CPE 5.02 , which we received, is equal to the number of clock cycles that my processor spends on multiplying floating-point numbers. To process one element, we perform one multiplication, and, it would seem, this is the limit below which we will not go down. But actually we can achieve CPE 1.04. To understand more advanced optimization techniques, you must first understand how the processor works.

Modern processors are very complex. The internal structure of the processor is called microarchitecture , and this is a separate world over which we have no control. When the processor reads the instructions of the program, it breaks them into primitives that are understandable to him, and processes them as he pleases. If you look at the assembler code, it seems that the processor executes the instructions sequentially, one by one, as they are presented in the code. In fact, the processor can execute instructions in parallel and even in the opposite order , if it is sure that this will not change the final result. Execution of instructions by the processor in parallel is called concurrency at the instruction level .

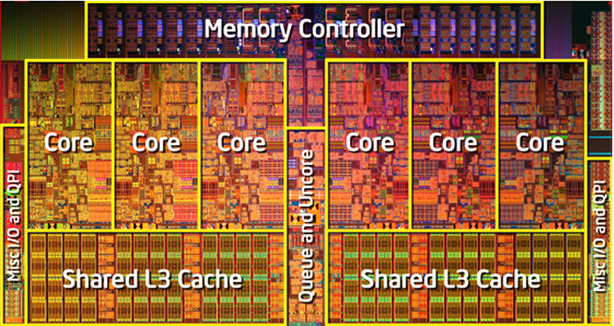

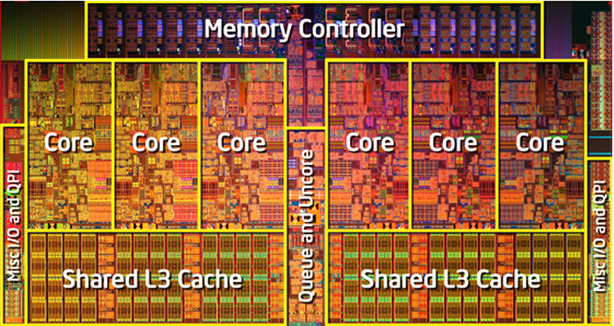

The processor is divided into parts that perform different types of tasks, we call them function blocks . Each block performs a certain series of tasks: reading from memory, writing to memory, adding integers, multiplying floating-point numbers, etc. My processor has two blocks that perform integer addition. This means that it can simultaneously perform two additions.

Imagine that the processor needs to get data from memory and it takes 100 clock cycles. But the processor will not be wise to wait for the completion of this operation to start the next one. After all, a separate block is engaged in reading data from memory, and the remaining blocks at this time can perform other calculations. Therefore, the processor reads the next few instructions in advance and tries to load all the functional blocks with them, even if you have to execute the instructions in a different order.

Reading instructions in advance is called prefetching . If, during prefetching, the processor encounters branching (for example, the if-else construct), he tries to guess which branch will be taken and reads instructions from there. If later he realizes that the wrong branch was taken, he resets the calculations, restores the previous state and goes along the other branch. Such an error costs the processor several lost cycles. For many processors, this is approximately 20 clock cycles.

My processor has one function block that performs multiplication of floating point numbers, and it spends five clock cycles per multiplication. And it would seem that if we need to perform N multiplications, we will have to spend 5 * N cycles. But this is not so, thanks to such a device as a conveyor .

In American films, you probably saw a client in the dining room walk with a tray of several chefs, and each ladle puts something different on him. This is the conveyor. Imagine a dining room with five chefs, and serving one of them takes one beat. They could serve customers like this: first, one customer goes through all the cooks, then the second, then the third and so on. In this case, a client with a full tray would leave them every five measures. But it is inefficient. It’s better if all the customers are lined up, and each bar this line will advance to the next chef. In this case, each step from the cooks will leave the client with a full tray. Note that servicing one client will still take five cycles, but serving N customers (if N is large) will take about N cycles.

Functional blocks in the processor are arranged on the basis of the conveyor principle. They divide all the work into several stages, and different instructions can be processed at different stages at the same time. Despite the fact that the execution of a single instruction may take several clock cycles, many blocks can receive a new instruction into the pipeline every clock cycle . Such blocks are called fully conveyor units . All operations, except division, are performed in a fully conveyor mode. Division is considered a complex operation, takes 3-30 clock cycles and generally does not have a conveyor.

But if the pipeline allows us to spend one clock cycle on an operation, why then did we get CPE 5.02 , not CPE 1.02 ? It's all about such a bad thing as data dependency . Let's go back to the dining room, for example. Let's say the first cook in the dining room puts either rice or buckwheat, and according to a strange rule, each client, having passed all the cooks, decides what the first cook should impose to the next client. Then we cannot start serving the next client until the current client passes all the cooks. We have to wait, because there is a certain dependence. Also in the processor. We say that there is a correlation between data if we need other data to start working on some data, and we must wait until the work on them is completed.

In our program, such a data dependency is created by the battery variable. The processor could calculate the loop instructions several iterations forward. But in each iteration, we calculate the new value of the battery, taking into account its value calculated in the previous iteration. Therefore, we cannot start multiplication in the next iteration until the multiplication in the previous one is complete. That is, each multiplication must go through the entire pipeline before we send the next to the pipeline. This prevents us from loading the conveyor.

Let's think about how to get rid of this addiction. If we need to multiply a sequence of numbers, we can multiply them one by one successively. And we can first multiply all the numbers with an even index, then all the numbers with an odd, and at the end multiply the two results. In this case, the multiplication of two sequences will be independent of each other, and the operation of multiplication from different sequences can be in the pipeline at the same time.

For greater clarity, we simplify the code and pass not a vector structure to the function, but an array of numbers at once. Such a change will not affect performance, but it will become easier to read the code. The next technique is called loop promotion with multiple batteries .

As you can see, we support two independent batteries, which are multiplied at the end to give the final result. CPE drops from 5.02 to 2.5 . Let's do a promotion with four batteries:

CPE drops to 1.28 . With eight batteries, I get CPE 1.04 , which is almost the same as what I can squeeze out of my processor. When writing code in this style, you must remember to process the remaining few elements.

My processor has only one function block that performs multiplication of floating point numbers. The Core i7 Haswell has two such blocks, on it our application can reach CPE 0.5 , but would have to use more batteries. In general, if an operation takes C clock cycles and N blocks execute it, the required number of batteries can be C * N.

As we have already said, compilers are very conservative, they are afraid of harm and never make changes to the program that may affect the final result in any case. Compilers are aware of the multi-battery loop promotion technique. GCC applies this technique when launched with a third level of optimization or higher. Compilers will apply this optimization for integers, but will never apply it for floating-point numbers. The thing is associativity , that is, whether the order in which we add or multiply the numbers affects the final result.

If we have a sequence of integers, it doesn’t matter in what order we add or multiply them, we will still get the same result, even if there is an overflow. We say that the addition and multiplication operations for integers are associative operations. Addition and multiplication for floating point numbers are not associative. Suppose a sequence of float numbers has very small numbers and very large ones. If we first multiply very small ones, we get zero. Multiplying all the others by zero, we end up with zero. If initially we are very small, we will multiply by very large, in the end we could get an adequate result.

For most real-world applications, there is no difference in which order to perform operations on numbers, so we can use this optimization technique.

We can still improve performance. The CPE 1.04 that we have reached is not the limit. Modern processors support special extensions called SSE or AVX , which allow you to work on data vectors. The processor has special vector registers called % ymm0 - % ymm15 , sized 16 or 32 bytes. Current AVX registers are 32 bytes in size and can contain four 64-bit numbers, or eight 32-bit numbers, no matter integers or floating point. You can read 32 bytes of data from memory into one such register at once, read 32 bytes of data into another register and perform arithmetic operations immediately on four or eight numbers in parallel.

This hardware is unused in the processor until you explicitly enable it. GCC supports the C language extension, which will allow you to do this. You can, of course, write code in assembler, but then the program will cease to be portable. Using these processor capabilities, you can further increase program performance by 4 or 8 times , depending on the size of the registers. In our case, we could lower the CPE to 0.25 or 0.12 .

We started with a program that had a CPE performance of 11.05 . Then we got rid of unnecessary function calls and memory queries and improved performance to CPE 5.02 . Then they used a loop promotion with several batteries. So we were able to get rid of the data dependency, were able to fully load the pipeline and got CPE 1.04 . That is, we increased the speed of the program by 11 times . Using vectorization, you can make the program run 44 to 88 times faster than the original version.

You may argue that we apply all these optimization techniques and compare performance with the original version, which was compiled only with a basic level of optimization. And, perhaps, we don’t need to know all these techniques, because the compiler will apply them for us if we order it to compile with a high level of optimization. Well, let's compile the initial version of the program with a high, third level of optimization and look at what the compiler is capable of. I get a performance of CPE 5.02 . It is far from CPE 1.04that we got by manually transforming the code. Knowing all these optimization techniques has allowed us to achieve a fivefold increase in productivity. We can use vectorization to further increase productivity by 4-8 times, the compiler will not do this for you.

The number of function blocks that read and write to memory can be a performance bottleneck. Note that these blocks are fully pipelined and can take a new instruction every cycle. My processor has one unit that reads data from memory to the processor. If for processing one element, I need to get two numbers from memory, I can’t do better than CPE 2.0, because getting two numbers will take two clock cycles. The Core i7 Haswell has four blocks that perform integer additions. But if you need to add the elements of an integer vector, you cannot achieve a CPE of 0.25. Because this processor has only two blocks that read from memory - this sets the lower limit to CPE 0.5.

The value of each battery is stored in a separate register. The x86-64 processor has 16 registers for integers and 16 YMM registers for floating point numbers. If we use too many batteries, there may not be enough registers for them. You will have to store the values of some of them in memory, which will degrade performance. If we increase the number of batteries in our program from 8 to 20, the performance drops from CPE 1.04 to CPE 1.35 .

Optimization complicates the code, making it difficult to understand. Therefore, critical pieces of code where maximum performance is required are typically optimized. Optimization increases the number of potential bugs. Optimized code needs to be thoroughly tested after each optimization step.

There is a technique called reassociation . This is when we put brackets differently in some expression, and this allows us to reduce data dependency and improve performance. Suppose in our program we want to multiply three elements in one iteration.

This cycle has a performance of CPE 5.02 . According to the rules of the C language, when there are no brackets, the operations of multiplication are performed from left to right. In this case, it is easy to see that all multiplication operations depend on the variable acc . The processor cannot start multiplying in the next iteration until it completes all the multiplications in the current one. We put the brackets differently:

The values of the variables x , y, and z in one iteration are independent of their values in any other. Therefore, while the current iteration is in progress, the processor can pre-calculate the last two multiplications in the following iterations. Performance improves to CPE 1.69 .

As already mentioned, the processor performs prefetching, that is, it reads instructions in advance. If it encounters branching (assembler commands je , jg , jl , etc.), it tries to guess which branch the calculation will go to. If he guesses wrong, then he loses several measures. This is called conditional transfer of control .

There is a cmov assembler command . By executing this command, the processor checks some condition. If this condition is true, it copies the value from one register to another. This is called conditional data transfer . In this case, no branching is created and the processor does not need to guess anything and risk losing cycles.

The idea is to reduce the number of branches in the program, making the execution flow more direct. For this, some control transfers are advantageously replaced by data transfer. Typically, the compiler translates if-else constructs using the assembler commands je , jg , jl , etc., but can sometimes use the cmov command . Consider this if-else construct :

If you translate this code using control transfer, then when you run the program, either one expression or the other will be calculated, but there is a chance that the processor will make an error in choosing a branch and lose cycles. The compiler can avoid branching if it converts this code as follows:

When a conditional instruction is very simple, it only assigns some value to one variable, the compiler translates this instruction into assembly language using the cmov command . Therefore, the last line in the previous example will be broadcast using data transfer without creating branching. The processor will have to calculate both expressions, which takes more clock cycles, but it does not fulfill branch prediction, which potentially saves clock cycles. If the expressions are fairly simple, such a conversion can be beneficial.

Sometimes the compiler does not perform this optimization because it believes that it will degrade performance. We can make it execute it if we rewrite the code in a more functional styleas presented in the previous example. Consider a real program.

Both functions do the same thing. They go around pairs of numbers from two arrays in parallel, setting the smallest of them in array a and the largest in array b . Only the second function uses a tricky technique: it calculates the minimum number, calculates the maximum number and writes each of the numbers in its place. The conditions in the calculation of the variables min and max are so simple that the compiler uses conditional data transfer for them. The performance difference is greatest if you compile with the third level of optimization: minmax1 - CPE 15.6 , minmax2 - CPE 1.18 (13.2 times faster).

A modern processor hides tremendous computing power. But to get access to it, you need to write programs in a certain style. To decide which transformations and to which part of the code to apply is the “black magic” of writing fast code. Typically, analysis is combined with experiment: they try different approaches, take performance measurements, and examine assembler code to detect bottlenecks.

You now better understand why it is preferable to use functions from standard libraries than to write your own solutions. Function code in standard libraries is already optimized.

We proposed a basic code optimization strategy:

In this article, we will look at basic code optimization techniques that can increase the performance of your program many times over. We will also touch on the processor device. Understanding how the processor works is necessary for writing effective programs.

The fifth chapter in Computer Systems: A Programmer's Perspective inspired me to write this article . All programs are tested on a machine with a Pentium 2117U processor , the performance on your machine may be different. In another article in this series, “Code Optimization: Memory,” we look at optimization techniques for better cache utilization.

Optimization blockers

Compilers themselves try to optimize the code. When GCC is launched with the -Og parameter, it performs the basic optimization level, with the -O1 parameter the first optimization level. There are higher levels of optimization: -O2, -O3, etc. The higher the level of optimization, the more radical the compiler makes the program. Compilers can only use safe optimizations . This means that the compiler can only modify the program so that it does not change its behavior for all input data.

We, as programmers, need to understand that there are certain characteristics of the code that will not allow the compiler to perform optimization. We call them optimization blockers . Consider two types of optimization blockers. One of them arepointers . The compiler cannot know for sure whether two pointers will point to the same memory area, and therefore does not perform some optimizations. Consider an example:

void twiddle1(long *xp, long *yp) {

*xp += *yp;

*xp += *yp;

}

void twiddle2(long *xp, long *yp) {

*xp += 2*(*yp);

}The twiddle2 function looks more efficient, it performs only three memory requests (read * xp , read * yp , write * xp ), while twiddle1 performs six requests (four reads and two records). It can be expected that these two functions have the same behavior. However, imagine that xp and yp point to the same memory location. Then twiddle1 will increase * xp four times, and twiddle2 - three times. These functions have different behavior in some cases. For this reason, the compiler will not dare to transform a less efficient function into a more efficient one.

Another optimization blocker is function calls . In general, function calls entail overhead , and we need to try to avoid them. Consider an example:

long f();

long func1() {

return f() + f() + f() + f();

}

long func2() {

return 4*f();

}The first function calls f four times, while the second - only once. We expect the compiler to guess and convert the first function to the second. But the function f can have side effects , it can change the global state, as in this example:

long counter = 0;

long f() {

return counter++;

}In this case, func1 and func2 will return different results. It is difficult for the compiler to determine whether the function call has side effects or not. When he cannot do this, he expects the worst and does not perform optimization.

Demo program

Slow programs typically perform calculations on large data sets. It will be reasonable to evaluate the effectiveness of such programs in the average number of clock cycles that the processor spends on processing one element of the array. We introduce the metric CPE (cycles per element). If we say that the program has a CPE 2.5 performance, it means that on average it spends 2.5 processor cycles to process one element.

We will present a simple program, on the example of which we will demonstrate powerful optimization techniques. The vec structure is a vector of float elements . Combine0 functioncalculates the result of the multiplication of all elements of the vector. We will optimize this function. Let's make the size of the array 5000 and initialize it with random numbers.

typedef struct {

long len;

float *data;

} vec;

long vec_len(vec *v) {

return v->len;

}

void combine0(vec *v, float *dest)

{

long i;

*dest = 1;

for (i = 0; i < vec_len(v); i++) {

*dest *= v->data[i];

}

}

#define SIZE 5000

float a[SIZE];

vec v = {SIZE, a};

int main() {

float res;

for (i = 0; i < SIZE; i++) // инициализация вектора случайными числами

a[i] = rand();

combine0(&v, &res);

}We will compile all the programs in this article using GCC with the -Og parameter (basic level of optimization). After compiling the program and making the necessary measurements, I get the performance of CPE 11.05 .

To measure CPE, you can use the rdtsc instruction , this is a clock counter since the last processor reset. It is necessary to compare the counter values before and after the program execution. I warn you that this is an unreliable method. For greater reliability, you can simply measure the spent processor time. The next program should be a template that demonstrates both methods.

Code Performance Measurement

#include

#include

// Получить количество тактов с момента последнего сброса процессора

static __inline__ unsigned long long rdtsc(void)

{

unsigned hi, lo;

__asm__ __volatile__ ("rdtsc" : "=a"(lo), "=d"(hi));

return ( (unsigned long long)lo)|( ((unsigned long long)hi)<<32 );

}

// Количество элементов, обрабатываемых в цикле

#define SIZE 100000000

int main(void)

{

double time1 = clock() / (double)CLOCKS_PER_SEC;

long cycles1 = rdtsc();

//---------- ИЗМЕРИТЬ ЭТОТ КОД ----------

for (int i = 0; i < SIZE; i++) {

i * i;

}

//----------------------

double time2 = clock() / (double)CLOCKS_PER_SEC;

long cycles2 = rdtsc();

long cycles = cycles2 - cycles1; // Столько тактов было потрачено

double cpe = cycles / (double)SIZE; // Столько тактов было потрачено на один элемент

double cpu_time = time2 - time1; // Потраченное процессорное время

printf("CPU TIME: %.2f sec\n", cpu_time);

printf("CPE: %.2f\n", cpe);

}

Getting rid of cycle inefficiencies

Usually the most intense place in a program is cycles, especially the innermost cycle. It is there that we must first of all look for opportunities for optimization. In the loop of our program, we constantly call the vec_len function , which returns the length of the vector. It makes no sense to do this in each iteration, because the length of the vector does not change throughout the cycle. It would be wiser to call this function once and save the result in a variable. Therefore, we make a call to this function outside the loop.

void combine1(vec *v, float *dest)

{

long i, len = vec_len(v);

*dest = 1;

for (i = 0; i < len; i++) {

*dest *= v->data[i];

}

}The performance of the new version has not changed - CPE 11.05 . Apparently, the compiler himself guessed to perform this optimization. Let's run GCC without the -Og option and compile both versions of the function without any optimization at all. Now the difference is noticeable: combine0 - CPE 12.7 , combine1 - CPE 11.1 .

In this case, the compiler guessed to insert the body of the function at the place the function was called. But often the compiler will not do this, because it will not be able to determine whether the function has side effects or not. If the function performs any additional actions, such a transformation can change the behavior of the program. As an extreme example, consider a loop that turns uppercase letters into lowercase:

void lower(char *s) {

for (long i = 0; i < strlen(s); i++)

if (s[i] >= 'A' && s[i] <= 'Z')

s[i] -= ('A' - 'a');

}The strlen function traverses the entire string until it encounters a null character, and does this in every iteration. This catastrophically slows down the program. For large lines, the loop will run thousands of times slower than the more efficient one. Ideally, the compiler should recognize that calling strlen always returns the same value and push it outside the loop. But for this you need to deeply analyze the body of the cycle and determine that although the program changes the characters in the string, it does not turn any character into zero. This is beyond the scope of modern compilers.

Get rid of unnecessary memory accesses

Many programs often access memory for reading or writing. It takes a lot of time. It is advisable to work with processor registers, and not with memory. For such programs, you need to look for the opportunity to enter a temporary local variable into which to write, and only after some time to write from this variable into memory.

Count how many times we access memory in each iteration. We read an element from an array, read dest , add these values, and write the result to dest . Only three memory accesses. We do not need to read and write dest every time after processing the next element. We will introduce a temporary variable-battery, in which we will store the result, and only at the very end we will write to dest . The compiler will place the accumulator variable itself in the register, so we will get rid of unnecessary memory accesses.

void combine2(vec *v, float *dest)

{

long i, len = vec_len(v);

float acc = 1;

for (i = 0; i < len; i++) {

acc *= v->data[i];

}

*dest = acc;

}

Now we perform only one memory access in each iteration. Performance improves from CPE 11.05 to CPE 5.02 . If you expect the compiler to guess and perform this optimization, then consider what happens if dest points to an element in the vector. In this case, versions with and without battery will calculate a different value. The compiler cannot know what the pointers will be equal to, so it prepares for the worst and does not perform optimization. Function calls and pointers seriously block the optimizing capabilities of the compiler.

Loop promotion

We have reached CPE 5.02 . This is not a prime number; my processor spends 5 clock cycles performing multiplication of floating point numbers. We can say that we have reached a certain lower limit .

My processor spends one clock cycle doing the addition of int numbers . Interestingly, if instead of multiplying values of type float , I will add values of type int , will I achieve CPE 1.0 performance? I am making the necessary changes to my program. I launch and get just CPE 1.58 . Where does such inefficiency come from?

The thing is that during the execution of the cycle, the processor performs not only the calculation instructions in which we are interested, but also the instructions that serve the cycle: incrementing the counter, checking the condition, moving to the beginning of the cycle. That is, the maintenance of the cycle has its overhead, and the processor does a lot of unnecessary work. If the body of the loop is small, these "empty" calculations take up a large fraction of the processor time. Cycle Promotion

Techniquelies in the fact that not one but several elements are processed in one iteration. We kind of “unwind” the cycle, increasing the length of his body and reducing the number of turns. Thus, we perform fewer iterations, perform more useful work in one iteration, and overhead costs are no longer such a large share. Let's change the functions that add integers a bit:

void combine_plus(vec *v, int *dest)

{

long i, limit = vec_len(v)-1;

int acc = 0;

for (i = 0; i < limit; i+=2) {

acc = acc + v->data[i] + v->data[i+1];

}

if (i < len) acc += v->data[i]; // добавить последний элемент, если он не обработан

*dest = acc;

}

Now the program adds two elements to the battery per iteration. Please note that the last element may not be processed in a cycle, so you need to add it to the battery later. CPE drops from 1.58 to 1.15 . Processing four elements per iteration, I get CPE 1.03 , which is close to the value I wanted.

CPE 5.02 , which we received, is equal to the number of clock cycles that my processor spends on multiplying floating-point numbers. To process one element, we perform one multiplication, and, it would seem, this is the limit below which we will not go down. But actually we can achieve CPE 1.04. To understand more advanced optimization techniques, you must first understand how the processor works.

CPU Introduction

Modern processors are very complex. The internal structure of the processor is called microarchitecture , and this is a separate world over which we have no control. When the processor reads the instructions of the program, it breaks them into primitives that are understandable to him, and processes them as he pleases. If you look at the assembler code, it seems that the processor executes the instructions sequentially, one by one, as they are presented in the code. In fact, the processor can execute instructions in parallel and even in the opposite order , if it is sure that this will not change the final result. Execution of instructions by the processor in parallel is called concurrency at the instruction level .

The processor is divided into parts that perform different types of tasks, we call them function blocks . Each block performs a certain series of tasks: reading from memory, writing to memory, adding integers, multiplying floating-point numbers, etc. My processor has two blocks that perform integer addition. This means that it can simultaneously perform two additions.

Imagine that the processor needs to get data from memory and it takes 100 clock cycles. But the processor will not be wise to wait for the completion of this operation to start the next one. After all, a separate block is engaged in reading data from memory, and the remaining blocks at this time can perform other calculations. Therefore, the processor reads the next few instructions in advance and tries to load all the functional blocks with them, even if you have to execute the instructions in a different order.

Reading instructions in advance is called prefetching . If, during prefetching, the processor encounters branching (for example, the if-else construct), he tries to guess which branch will be taken and reads instructions from there. If later he realizes that the wrong branch was taken, he resets the calculations, restores the previous state and goes along the other branch. Such an error costs the processor several lost cycles. For many processors, this is approximately 20 clock cycles.

Conveyor

My processor has one function block that performs multiplication of floating point numbers, and it spends five clock cycles per multiplication. And it would seem that if we need to perform N multiplications, we will have to spend 5 * N cycles. But this is not so, thanks to such a device as a conveyor .

In American films, you probably saw a client in the dining room walk with a tray of several chefs, and each ladle puts something different on him. This is the conveyor. Imagine a dining room with five chefs, and serving one of them takes one beat. They could serve customers like this: first, one customer goes through all the cooks, then the second, then the third and so on. In this case, a client with a full tray would leave them every five measures. But it is inefficient. It’s better if all the customers are lined up, and each bar this line will advance to the next chef. In this case, each step from the cooks will leave the client with a full tray. Note that servicing one client will still take five cycles, but serving N customers (if N is large) will take about N cycles.

Functional blocks in the processor are arranged on the basis of the conveyor principle. They divide all the work into several stages, and different instructions can be processed at different stages at the same time. Despite the fact that the execution of a single instruction may take several clock cycles, many blocks can receive a new instruction into the pipeline every clock cycle . Such blocks are called fully conveyor units . All operations, except division, are performed in a fully conveyor mode. Division is considered a complex operation, takes 3-30 clock cycles and generally does not have a conveyor.

Data Dependence and Multiple Batteries

But if the pipeline allows us to spend one clock cycle on an operation, why then did we get CPE 5.02 , not CPE 1.02 ? It's all about such a bad thing as data dependency . Let's go back to the dining room, for example. Let's say the first cook in the dining room puts either rice or buckwheat, and according to a strange rule, each client, having passed all the cooks, decides what the first cook should impose to the next client. Then we cannot start serving the next client until the current client passes all the cooks. We have to wait, because there is a certain dependence. Also in the processor. We say that there is a correlation between data if we need other data to start working on some data, and we must wait until the work on them is completed.

In our program, such a data dependency is created by the battery variable. The processor could calculate the loop instructions several iterations forward. But in each iteration, we calculate the new value of the battery, taking into account its value calculated in the previous iteration. Therefore, we cannot start multiplication in the next iteration until the multiplication in the previous one is complete. That is, each multiplication must go through the entire pipeline before we send the next to the pipeline. This prevents us from loading the conveyor.

Let's think about how to get rid of this addiction. If we need to multiply a sequence of numbers, we can multiply them one by one successively. And we can first multiply all the numbers with an even index, then all the numbers with an odd, and at the end multiply the two results. In this case, the multiplication of two sequences will be independent of each other, and the operation of multiplication from different sequences can be in the pipeline at the same time.

For greater clarity, we simplify the code and pass not a vector structure to the function, but an array of numbers at once. Such a change will not affect performance, but it will become easier to read the code. The next technique is called loop promotion with multiple batteries .

float combine3(float a[], long size)

{

long i, limit = size-1;

float acc0 = 1;

float acc1 = 1;

for (i = 0; i < limit; i+=2) {

acc0 *= a[i];

acc1 *= a[i+1];

}

while (i < size) acc0 *= a[i++];

return acc0 * acc1;

}

As you can see, we support two independent batteries, which are multiplied at the end to give the final result. CPE drops from 5.02 to 2.5 . Let's do a promotion with four batteries:

float combine4(float a[], long size)

{

long i, limit = size-3;;

float acc0 = 1;

float acc1 = 1;

float acc2 = 1;

float acc3 = 1;

for (i = 0; i < limit; i+=4) {

acc0 *= a[i];

acc1 *= a[i+1];

acc2 *= a[i+2];

acc3 *= a[i+3];

}

while (i < size) acc0 *= a[i++];

return acc0 * acc1 * acc2 * acc3;

}

CPE drops to 1.28 . With eight batteries, I get CPE 1.04 , which is almost the same as what I can squeeze out of my processor. When writing code in this style, you must remember to process the remaining few elements.

My processor has only one function block that performs multiplication of floating point numbers. The Core i7 Haswell has two such blocks, on it our application can reach CPE 0.5 , but would have to use more batteries. In general, if an operation takes C clock cycles and N blocks execute it, the required number of batteries can be C * N.

Associativity

As we have already said, compilers are very conservative, they are afraid of harm and never make changes to the program that may affect the final result in any case. Compilers are aware of the multi-battery loop promotion technique. GCC applies this technique when launched with a third level of optimization or higher. Compilers will apply this optimization for integers, but will never apply it for floating-point numbers. The thing is associativity , that is, whether the order in which we add or multiply the numbers affects the final result.

If we have a sequence of integers, it doesn’t matter in what order we add or multiply them, we will still get the same result, even if there is an overflow. We say that the addition and multiplication operations for integers are associative operations. Addition and multiplication for floating point numbers are not associative. Suppose a sequence of float numbers has very small numbers and very large ones. If we first multiply very small ones, we get zero. Multiplying all the others by zero, we end up with zero. If initially we are very small, we will multiply by very large, in the end we could get an adequate result.

For most real-world applications, there is no difference in which order to perform operations on numbers, so we can use this optimization technique.

Vectorization

We can still improve performance. The CPE 1.04 that we have reached is not the limit. Modern processors support special extensions called SSE or AVX , which allow you to work on data vectors. The processor has special vector registers called % ymm0 - % ymm15 , sized 16 or 32 bytes. Current AVX registers are 32 bytes in size and can contain four 64-bit numbers, or eight 32-bit numbers, no matter integers or floating point. You can read 32 bytes of data from memory into one such register at once, read 32 bytes of data into another register and perform arithmetic operations immediately on four or eight numbers in parallel.

This hardware is unused in the processor until you explicitly enable it. GCC supports the C language extension, which will allow you to do this. You can, of course, write code in assembler, but then the program will cease to be portable. Using these processor capabilities, you can further increase program performance by 4 or 8 times , depending on the size of the registers. In our case, we could lower the CPE to 0.25 or 0.12 .

Optimization Results

We started with a program that had a CPE performance of 11.05 . Then we got rid of unnecessary function calls and memory queries and improved performance to CPE 5.02 . Then they used a loop promotion with several batteries. So we were able to get rid of the data dependency, were able to fully load the pipeline and got CPE 1.04 . That is, we increased the speed of the program by 11 times . Using vectorization, you can make the program run 44 to 88 times faster than the original version.

You may argue that we apply all these optimization techniques and compare performance with the original version, which was compiled only with a basic level of optimization. And, perhaps, we don’t need to know all these techniques, because the compiler will apply them for us if we order it to compile with a high level of optimization. Well, let's compile the initial version of the program with a high, third level of optimization and look at what the compiler is capable of. I get a performance of CPE 5.02 . It is far from CPE 1.04that we got by manually transforming the code. Knowing all these optimization techniques has allowed us to achieve a fivefold increase in productivity. We can use vectorization to further increase productivity by 4-8 times, the compiler will not do this for you.

Problems and limitations

The number of function blocks that read and write to memory can be a performance bottleneck. Note that these blocks are fully pipelined and can take a new instruction every cycle. My processor has one unit that reads data from memory to the processor. If for processing one element, I need to get two numbers from memory, I can’t do better than CPE 2.0, because getting two numbers will take two clock cycles. The Core i7 Haswell has four blocks that perform integer additions. But if you need to add the elements of an integer vector, you cannot achieve a CPE of 0.25. Because this processor has only two blocks that read from memory - this sets the lower limit to CPE 0.5.

The value of each battery is stored in a separate register. The x86-64 processor has 16 registers for integers and 16 YMM registers for floating point numbers. If we use too many batteries, there may not be enough registers for them. You will have to store the values of some of them in memory, which will degrade performance. If we increase the number of batteries in our program from 8 to 20, the performance drops from CPE 1.04 to CPE 1.35 .

Optimization complicates the code, making it difficult to understand. Therefore, critical pieces of code where maximum performance is required are typically optimized. Optimization increases the number of potential bugs. Optimized code needs to be thoroughly tested after each optimization step.

Other optimization techniques: reassociation

There is a technique called reassociation . This is when we put brackets differently in some expression, and this allows us to reduce data dependency and improve performance. Suppose in our program we want to multiply three elements in one iteration.

for (long i = 0; i < limit; i+=3) {

float x = a[i], y = a[i+1], z = a[i+2];

acc = acc * x * y * z;

}

This cycle has a performance of CPE 5.02 . According to the rules of the C language, when there are no brackets, the operations of multiplication are performed from left to right. In this case, it is easy to see that all multiplication operations depend on the variable acc . The processor cannot start multiplying in the next iteration until it completes all the multiplications in the current one. We put the brackets differently:

acc = acc * ((x * y) * z);

The values of the variables x , y, and z in one iteration are independent of their values in any other. Therefore, while the current iteration is in progress, the processor can pre-calculate the last two multiplications in the following iterations. Performance improves to CPE 1.69 .

Other optimization techniques: conditional data transfer

As already mentioned, the processor performs prefetching, that is, it reads instructions in advance. If it encounters branching (assembler commands je , jg , jl , etc.), it tries to guess which branch the calculation will go to. If he guesses wrong, then he loses several measures. This is called conditional transfer of control .

There is a cmov assembler command . By executing this command, the processor checks some condition. If this condition is true, it copies the value from one register to another. This is called conditional data transfer . In this case, no branching is created and the processor does not need to guess anything and risk losing cycles.

The idea is to reduce the number of branches in the program, making the execution flow more direct. For this, some control transfers are advantageously replaced by data transfer. Typically, the compiler translates if-else constructs using the assembler commands je , jg , jl , etc., but can sometimes use the cmov command . Consider this if-else construct :

if (условие)

v = выражение1;

else

v = выражение2;

If you translate this code using control transfer, then when you run the program, either one expression or the other will be calculated, but there is a chance that the processor will make an error in choosing a branch and lose cycles. The compiler can avoid branching if it converts this code as follows:

v1 = выражение1;

v2 = выражение2;

v = (условие) ? v1 : v2;

When a conditional instruction is very simple, it only assigns some value to one variable, the compiler translates this instruction into assembly language using the cmov command . Therefore, the last line in the previous example will be broadcast using data transfer without creating branching. The processor will have to calculate both expressions, which takes more clock cycles, but it does not fulfill branch prediction, which potentially saves clock cycles. If the expressions are fairly simple, such a conversion can be beneficial.

Sometimes the compiler does not perform this optimization because it believes that it will degrade performance. We can make it execute it if we rewrite the code in a more functional styleas presented in the previous example. Consider a real program.

void minmax1(int a[], int b[], int n)

{

for (int i = 0; i < n; i++) {

if (a[i] > b[i]) {

int t = a[i];

a[i] = b[i];

b[i] = t;

}

}

}

void minmax2(int a[], int b[], int n)

{

for (int i = 0; i < n; i++) {

int min = a[i] < b[i] ? a[i] : b[i];

int max = a[i] < b[i] ? b[i] : a[i];

a[i] = min;

b[i] = max;

}

}

Both functions do the same thing. They go around pairs of numbers from two arrays in parallel, setting the smallest of them in array a and the largest in array b . Only the second function uses a tricky technique: it calculates the minimum number, calculates the maximum number and writes each of the numbers in its place. The conditions in the calculation of the variables min and max are so simple that the compiler uses conditional data transfer for them. The performance difference is greatest if you compile with the third level of optimization: minmax1 - CPE 15.6 , minmax2 - CPE 1.18 (13.2 times faster).

Conclusion

A modern processor hides tremendous computing power. But to get access to it, you need to write programs in a certain style. To decide which transformations and to which part of the code to apply is the “black magic” of writing fast code. Typically, analysis is combined with experiment: they try different approaches, take performance measurements, and examine assembler code to detect bottlenecks.

You now better understand why it is preferable to use functions from standard libraries than to write your own solutions. Function code in standard libraries is already optimized.

We proposed a basic code optimization strategy:

- High level design . Choose efficient algorithms and data structures. No compiler can replace bad algorithms or data structures with good ones.

- Basic coding principles . Avoid optimization blockers to help the compiler generate efficient code. Get rid of unnecessary function calls. If possible, take the calculation out of the loop. Get rid of unnecessary memory requests. Enter temporary variables to store intermediate results.

- Low level optimization . Use looping to reduce cycle overhead. Engage concurrency at the instruction level using multiple batteries and reassociation. Write if-else statements in a functional style so that the compiler can use conditional data transfer.