Top 6 optimizations for Netty

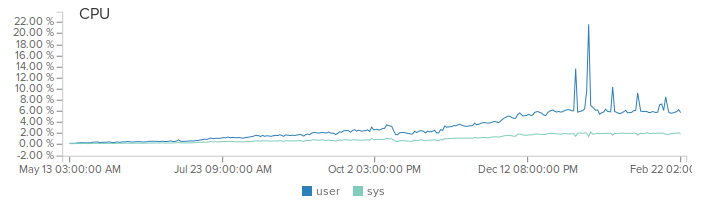

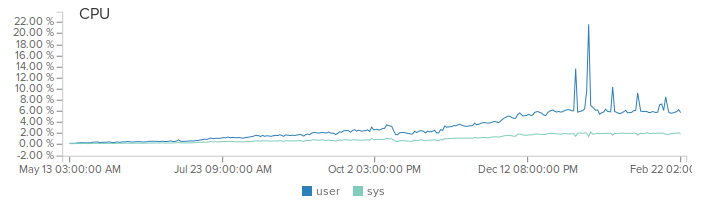

Hello. This article continues “10k per core” with specific examples of optimizations that were made to improve server performance. Five months have passed since the first part was written, and during this time the load on our production server has grown from 500 requests per second to 2000, with peaks of up to 5000 requests per second. Thanks to Netty, we barely noticed this increase (except that disk space now runs out faster).

(Ignore the spikes in the graphs; they are glitches caused by deployments.)

This article will be useful to anyone who works with Netty or is just getting started with it. Let's go.

Native Epoll Transport for Linux

One of the key optimizations everyone should apply is switching to the native epoll transport instead of the pure-Java (NIO) implementation. With Netty this means adding just one dependency:

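```xml
<dependency>
    <groupId>io.netty</groupId>
    <artifactId>netty-transport-native-epoll</artifactId>
    <version>${netty.version}</version>
    <classifier>linux-x86_64</classifier>
</dependency>
```

Then, with a find-and-replace across the code, swap the following classes: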

- NioEventLoopGroup → EpollEventLoopGroup

- NioEventLoop → EpollEventLoop

- NioServerSocketChannel → EpollServerSocketChannel

- NioSocketChannel → EpollSocketChannel

The Java implementation of non-blocking sockets is built on the Selector class, which handles many connections efficiently, but the JDK implementation is not optimal, for three reasons:

- The selectedKeys() method creates a new HashSet on every call

- Iterating over this set allocates an iterator

- On top of that, the selectedKeys() method contains a large number of synchronized blocks

In my particular case, I got a performance increase of about 30%. Of course, this optimization is only possible for Linux servers.
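If the same build also has to run on non-Linux machines (developer laptops, for example), the class swap can be decided at runtime instead of by find-and-replace. The sketch below is not from the project: the helper class `Transports` is hypothetical, and it assumes Netty 4.1.x, where `Epoll.isAvailable()` and the `Epoll*` classes exist (newer Netty releases may prefer a different event loop API).

```java
import io.netty.channel.EventLoopGroup;
import io.netty.channel.ServerChannel;
import io.netty.channel.epoll.Epoll;
import io.netty.channel.epoll.EpollEventLoopGroup;
import io.netty.channel.epoll.EpollServerSocketChannel;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;

/** Hypothetical helper: use native epoll where it loads, plain NIO everywhere else. */
public final class Transports {
    private Transports() {}

    /** True only when the native epoll library loaded, i.e. on Linux with the epoll jar and classifier present. */
    public static boolean useEpoll() {
        return Epoll.isAvailable();
    }

    /** Event loop group matching the chosen transport. */
    public static EventLoopGroup newGroup(int threads) {
        return useEpoll() ? new EpollEventLoopGroup(threads) : new NioEventLoopGroup(threads);
    }

    /** Server channel class matching the chosen transport (it must match the group type). */
    public static Class<? extends ServerChannel> serverChannelClass() {
        return useEpoll() ? EpollServerSocketChannel.class : NioServerSocketChannel.class;
    }
}
```

With this, `new ServerBootstrap().group(Transports.newGroup(1), Transports.newGroup(workerThreads)).channel(Transports.serverChannelClass())` runs on any OS and uses epoll wherever it is available. Both the group and the channel class come from the same `useEpoll()` check because mixing an epoll group with an NIO channel (or vice versa) fails at registration.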

Native OpenSSL

I don’t know how things are in the CIS, but abroad security is a key concern for any project. “What about security?” is an inevitable question you will be asked as soon as anyone takes an interest in your project, system, service or product.

In the outsourcing world I came from, a team usually had one or two DevOps engineers to whom I could hand off this issue. For example, instead of adding HTTPS (SSL/TLS) support at the application level, you could always ask the administrators to set up nginx to terminate TLS and forward plain HTTP to your server. Fast and efficient. Today, as a jack of all trades, I have to do everything myself: develop, deploy and monitor. So enabling HTTPS at the application level is much faster and easier than deploying nginx.

Making OpenSSL work with Netty is a bit more involved than enabling the native epoll transport. First, add a new dependency to the project:

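```xml
<dependency>
    <groupId>io.netty</groupId>
    <artifactId>netty-tcnative</artifactId>
    <version>${netty.tcnative.version}</version>
    <classifier>linux-x86_64</classifier>
</dependency>
```

Then specify OpenSSL as the SSL provider: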

```java
return SslContextBuilder.forServer(serverCert, serverKey, serverPass)
        .sslProvider(SslProvider.OPENSSL)
        .build();
```

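Add an SslHandler to the pipeline:

```java
// in initChannel(SocketChannel ch); sslCtx is the SslContext built above
SSLEngine engine = sslCtx.newEngine(ch.alloc());
ch.pipeline().addLast(new SslHandler(engine));
```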

Finally, build the native OpenSSL bindings on the server. The instructions are here. In practice, the whole process boils down to:

- Download the sources

- mvn clean install

For me, the performance gain was ~15%.

A complete example can be found here and here.
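A variant of the setup above that degrades gracefully when the native library is missing: `OpenSsl.isAvailable()` reports whether netty-tcnative loaded, and the JDK provider is used otherwise. This is a sketch assuming Netty 4.1.x; the class `TlsSetup` and its methods are hypothetical. It uses `SslContext.newHandler(ch.alloc())`, which creates the `SSLEngine` and wraps it in an `SslHandler` in one call.

```java
import io.netty.channel.ChannelInitializer;
import io.netty.channel.socket.SocketChannel;
import io.netty.handler.ssl.OpenSsl;
import io.netty.handler.ssl.SslContext;
import io.netty.handler.ssl.SslContextBuilder;
import io.netty.handler.ssl.SslProvider;
import java.io.File;
import javax.net.ssl.SSLException;

/** Hypothetical helper: OpenSSL when netty-tcnative loaded, JDK TLS otherwise. */
public final class TlsSetup {
    private TlsSetup() {}

    /** Prefer the native OpenSSL engine; fall back to the JDK one so the server still starts. */
    public static SslProvider provider() {
        return OpenSsl.isAvailable() ? SslProvider.OPENSSL : SslProvider.JDK;
    }

    /** Builds a server context from a PEM certificate chain and private key. */
    public static SslContext serverContext(File certChain, File key, String keyPassword) throws SSLException {
        return SslContextBuilder.forServer(certChain, key, keyPassword)
                .sslProvider(provider())
                .build();
    }

    /** Puts TLS first in the pipeline so every later handler sees decrypted data. */
    public static ChannelInitializer<SocketChannel> initializer(SslContext sslCtx) {
        return new ChannelInitializer<SocketChannel>() {
            @Override
            protected void initChannel(SocketChannel ch) {
                ch.pipeline().addLast(sslCtx.newHandler(ch.alloc()));
                // ... add the HTTP codec and application handlers after this
            }
        };
    }
}
```

Logging `provider()` at startup is a cheap way to confirm that the native build from the steps above is actually being picked up.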

Save on system calls

Very often you have to send multiple messages to the same socket. It might look like this:

```java
for (Message msg : messages) {
    ctx.writeAndFlush(msg);
}
```

This code can be optimized.

```java
for (Message msg : messages) {
    ctx.write(msg); // queued in the outbound buffer, not sent yet
}
ctx.flush(); // one flush sends the whole batch
```

In the second case, write() does not send each message over the network right away: Netty passes it through the pipeline and queues it in the channel's outbound buffer, and the single flush() at the end writes everything accumulated so far to the socket. This reduces the number of system calls needed to send the data.
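The pattern can be wrapped in a small helper that accepts either a `Channel` or a `ChannelHandlerContext`, since both implement Netty's `ChannelOutboundInvoker`. A sketch assuming Netty 4.1.x; the class name `BatchedWrites` is hypothetical. Netty's `EmbeddedChannel` makes the behavior easy to observe, because it only moves messages to its outbound queue when they are flushed.

```java
import io.netty.channel.ChannelOutboundInvoker;

/** Hypothetical helper for the write-many, flush-once pattern. */
public final class BatchedWrites {
    private BatchedWrites() {}

    /**
     * Queues every message with write() and then calls flush() once, so the
     * transport can push the whole batch to the socket with far fewer system
     * calls than one writeAndFlush() per message.
     */
    public static void writeAll(ChannelOutboundInvoker out, Iterable<?> messages) {
        for (Object msg : messages) {
            out.write(msg); // buffered in the channel's outbound buffer only
        }
        out.flush(); // a single flush for the whole batch
    }
}
```

In a handler this becomes `BatchedWrites.writeAll(ctx, messages);` in place of the loop above.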

The best synchronization is the lack of synchronization.

As I wrote in the previous article, Netty is an asynchronous framework with a small number of threads that run the handler logic (usually number of cores × 2). Therefore, each of these threads must finish its work as quickly as possible. Any kind of synchronization gets in the way of this, especially under loads of tens of thousands of requests per second.

To this end, Netty binds each new connection to a single event loop (thread) for its whole lifetime, which removes the need for synchronization in your code. For example, if a user connects to the server and performs actions that change the state of a model associated only with that user, no synchronization or volatile is needed: all messages from this user are processed by the same thread. This is great and works for some projects.

But what if the state can be changed from several connections, which are most likely bound to different threads? For example, what if we are building a game room, and a command from any player must change the shared world?

For this, Netty lets you deregister a channel from its current event loop and register it on another one, reassigning the connection to a different thread:

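```java
// Detach the channel from its current event loop...
ChannelFuture cf = ctx.deregister();
cf.addListener(new ChannelFutureListener() {
    @Override
    public void operationComplete(ChannelFuture channelFuture) throws Exception {
        // ...and attach it to the loop that owns the game room.
        // completeHandler is the application's own ChannelFutureListener.
        targetEventLoop.register(channelFuture.channel()).addListener(completeHandler);
    }
});
```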

This approach lets you process all events of one game room on one thread and drop synchronization and volatile fields for the room's state entirely.

Examples of re-binding on login in my code are here and here.
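One way to decide which loop a room lives on is to pick one from the worker group the first time the room is used and remember it. The sketch below is a hypothetical illustration, not the project's code: the `RoomLoops` class, `String` room ids and the `onMoved` callback are assumptions, and Netty 4.1.x is assumed. It relies on the same `deregister()`/`register()` pair shown above.

```java
import io.netty.channel.Channel;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.EventLoop;
import io.netty.channel.EventLoopGroup;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical helper: pins each game room to one EventLoop and moves player channels onto it. */
public final class RoomLoops {
    private final EventLoopGroup workers;
    // Only the room -> loop lookup is concurrent; the room state itself stays single-threaded.
    private final Map<String, EventLoop> loopByRoom = new ConcurrentHashMap<>();

    public RoomLoops(EventLoopGroup workers) {
        this.workers = workers;
    }

    /** The first lookup for a room takes the group's next loop; later lookups return the same one. */
    public EventLoop loopFor(String roomId) {
        return loopByRoom.computeIfAbsent(roomId, id -> workers.next());
    }

    /** Moves a registered channel onto its room's loop, then runs onMoved on that loop. */
    public void moveToRoom(Channel ch, String roomId, Runnable onMoved) {
        EventLoop target = loopFor(roomId);
        if (ch.eventLoop() == target) {
            target.execute(onMoved); // already on the right thread
            return;
        }
        ch.deregister().addListener((ChannelFutureListener) deregistered -> {
            if (!deregistered.isSuccess()) {
                ch.close();
                return;
            }
            // register(Channel) completes its future on the target loop,
            // so this listener runs on the room's thread
            target.register(ch).addListener((ChannelFutureListener) registered -> {
                if (registered.isSuccess()) {
                    onMoved.run();
                } else {
                    ch.close();
                }
            });
        });
    }
}
```

After the move, every handler for that player executes on the room's loop, so room state can live in plain fields, as described above.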

Reuse EventLoop

Netty is often chosen for server solutions that must support several protocols. For example, my modest IoT cloud supports HTTP/S, WebSockets, SSL/TCP sockets for various hardware, and its own binary protocol. This means that each of these protocols needs an IO thread (boss group) and logic handler threads (worker group). Typically, setting up several such servers looks like this:

```java
// http server: its own boss and worker groups
new ServerBootstrap().group(new EpollEventLoopGroup(1), new EpollEventLoopGroup(workerThreads))
        .channel(channelClass)
        .childHandler(getHTTPChannelInitializer())
        .bind(80);

// https server: yet another pair of groups
new ServerBootstrap().group(new EpollEventLoopGroup(1), new EpollEventLoopGroup(workerThreads))
        .channel(channelClass)
        .childHandler(getHTTPSChannelInitializer())
        .bind(443);
```

But with Netty, the fewer extra threads you create, the more likely you are to end up with a fast application. Fortunately, Netty lets you reuse event loop groups across servers:

```java
EventLoopGroup boss = new EpollEventLoopGroup(1);
EventLoopGroup workers = new EpollEventLoopGroup(workerThreads);

// http server
new ServerBootstrap().group(boss, workers)
        .channel(channelClass)
        .childHandler(getHTTPChannelInitializer())
        .bind(80);

// https server: same boss and worker groups
new ServerBootstrap().group(boss, workers)
        .channel(channelClass)
        .childHandler(getHTTPSChannelInitializer())
        .bind(443);
```
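To confirm that the listeners really share threads, the reuse can be wrapped in a small helper and inspected. This sketch is hypothetical (`SharedLoops` is not from the project) and uses the NIO classes so it runs on any OS; with epoll, swap in `EpollEventLoopGroup` and `EpollServerSocketChannel` as described earlier. Binding to port 0 lets the OS choose a free port.

```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelHandler;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;

/** Hypothetical helper: every listener is bound on the same boss and worker groups. */
public final class SharedLoops {
    private SharedLoops() {}

    /**
     * Binds one server channel. Adding another protocol adds a listening
     * socket on the existing groups, not another set of threads.
     */
    public static Channel bind(EventLoopGroup boss, EventLoopGroup workers, int port, ChannelHandler childHandler) {
        return new ServerBootstrap()
                .group(boss, workers)                 // shared groups, no new threads per server
                .channel(NioServerSocketChannel.class)
                .childHandler(childHandler)
                .bind(port)
                .syncUninterruptibly()                // wait for the bind; rethrows if it failed
                .channel();
    }
}
```

With a one-thread boss group, both server channels end up on the same accept thread, and every accepted connection is handed to one of the shared worker loops. Shutting down means calling `shutdownGracefully()` once per group instead of once per server.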

Off-heap Messages

It's no secret that in high-load applications the garbage collector is one of the bottlenecks. Netty is fast partly because it makes heavy use of memory outside the Java heap; it even has its own ecosystem around off-heap buffers, including a memory leak detection system. You can take advantage of this too. For instance, this code:

```java
ctx.writeAndFlush(new ResponseMessage(messageId, OK, 0));
```

can be changed to:

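```java
// 5 bytes: 1-byte message id + 2-byte status + 2-byte value
ByteBuf buf = ctx.alloc().directBuffer(5);
buf.writeByte(messageId);
buf.writeShort(OK);
buf.writeShort(0);
ctx.writeAndFlush(buf);
// no buf.release() here: Netty releases the buffer once it has been written to the socket
```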

In this case, however, you must make sure the buffer is released exactly once. When the buffer reaches the transport, Netty releases it after writing it to the socket, but any handler in the pipeline that consumes the buffer instead of passing it on must release it itself. This does not mean you should rush to change your code right away, but you should know about this optimization. The code becomes more complex and you risk memory leaks, yet for hot paths it may be the perfect solution.
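To keep the hand-written encoding in one place, it can be moved into a helper that takes the allocator and returns the filled buffer. This is a sketch, not the project's code: the class `ResponseBuffers` is hypothetical, it mirrors the 5-byte layout of the snippet above, and the meaning of the trailing 2-byte value is an assumption (the original just writes 0).

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.ByteBufAllocator;

/**
 * Hypothetical helper mirroring the 5-byte layout above:
 * 1-byte message id, 2-byte status, 2-byte trailing value.
 */
public final class ResponseBuffers {
    public static final int SIZE = 5;

    private ResponseBuffers() {}

    /**
     * Encodes the response straight into a direct buffer, with no intermediate
     * message object. The caller owns the result: passing it to writeAndFlush
     * hands ownership to Netty, which releases it after the write.
     */
    public static ByteBuf response(ByteBufAllocator alloc, int messageId, int status, int value) {
        ByteBuf buf = alloc.directBuffer(SIZE, SIZE); // exact, fixed capacity
        buf.writeByte(messageId);
        buf.writeShort(status);
        buf.writeShort(value);
        return buf;
    }
}
```

In a handler this reads `ctx.writeAndFlush(ResponseBuffers.response(ctx.alloc(), messageId, OK, 0));`. Using `ctx.alloc()` keeps the buffer in the channel's (by default pooled) allocator, so hot paths reuse memory instead of creating new garbage for every response.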

I hope these simple tips help you speed up your application.

As a reminder, my project is open source, so if you want to see how these optimizations look in real code, see here.